前言

有好几个月没搞神经网络代码了,期间也就是回顾了两边之前的文字。

不料,对nn,cnn的理解反而更深入了-_-!。

修改

《零基础学习人工智能---Python---Pytorch学习(四)》,关于Linear的理解是错误的,已修改。

RNN

RNN是Recurrent Neural Network的缩写。中文叫循环神经网络。

RNN是在NN的基础上的扩展。

我们知道在训练NN的时候,要传递一个待训练的tensor。RNN简单来说就是在传递tensor的同时,再传递一个hidden。

这个hidden是哪来的?它是上一轮训练时,返回y_predict的同时,返回的(我们之前写NN只会返回y_predict,现在要多返回一个hidden)。

如果没有上一轮,那hidden就等于空的tensor。

代码实现

定义RNN

class RNN(nn.Module):

# implement RNN from scratch rather than using nn.RNN

def __init__(self, input_size, hidden_size, output_size):

super(RNN, self).__init__()

self.hidden_size = hidden_size

self.i2h = nn.Linear(input_size + hidden_size, hidden_size)

self.i2o = nn.Linear(input_size + hidden_size, output_size)

self.softmax = nn.LogSoftmax(dim=1)

def forward(self, input_tensor, hidden_tensor):

combined = torch.cat((input_tensor, hidden_tensor), 1)

hidden = self.i2h(combined) #input to hidden=i2h

output = self.i2o(combined) #input to output=i2o

output = self.softmax(output)

return output, hidden

def init_hidden(self):

return torch.zeros(1, self.hidden_size)代码分析

init

init函数增加了一个参数hidden_size。我们知道init函数是接受x的列和y_predict的列的,x的列是input,y_predict的列是输出。

现在我们增加了hidden_size,他是hidden层的tensor的列。

我们在使用linear的时候,会把input_size和hidden_size相加,因为,我们后面再前向传播的时候,也会把x的tensor和hidden的tensor组合成一个向量。

forward

forward函数,我们先看return。

return多返回了一个对象------hidden,hidden是一个tensor,是执行linear(i2h)时的output,他也是根据x进行线性变换后的tensor,因为不是最后输出的tensor,所以他叫做hidden,而最后输出的output的tensor叫做y_predict。

我们在看forward的第一行,combined = torch.cat((input_tensor, hidden_tensor), 1)。

这是把tensor变量input_tensor和hidden_tensor给合并成了一个tensor,使用的是torch.cat函数。参数1是指按列的方向合并。

即input_tensor如果是(3,5),hidden_tensor是(3,2),我们将得到一个(3,7),相当与把hidden的每一行的值取出来,添加进相同行的input_tensor里。

所以我们上面的init里,初始化linear的第一个参数(x的列)是input_size + hidden_size。

定义工具函数

import io

import os

import unicodedata

import string

import glob

import torch

import random

# alphabet small + capital letters + " .,;'"

ALL_LETTERS = string.ascii_letters + " .,;'"

N_LETTERS = len(ALL_LETTERS)

# Turn a Unicode string to plain ASCII, thanks to https://stackoverflow.com/a/518232/2809427

def unicode_to_ascii(s):

return ''.join(

c for c in unicodedata.normalize('NFD', s)

if unicodedata.category(c) != 'Mn'

and c in ALL_LETTERS

)

def load_data():

# Build the category_lines dictionary, a list of names per language

category_lines = {}

all_categories = []

def find_files(path):

return glob.glob(path)

# Read a file and split into lines

def read_lines(filename):

lines = io.open(filename, encoding='utf-8').read().strip().split('\n')

return [unicode_to_ascii(line) for line in lines]

for filename in find_files('data/names/*.txt'):

category = os.path.splitext(os.path.basename(filename))[0]

all_categories.append(category)

lines = read_lines(filename)

category_lines[category] = lines

return category_lines, all_categories

"""

To represent a single letter, we use a "one-hot vector" of

size <1 x n_letters>. A one-hot vector is filled with 0s

except for a 1 at index of the current letter, e.g. "b" = <0 1 0 0 0 ...>.

To make a word we join a bunch of those into a

2D matrix <line_length x 1 x n_letters>.

That extra 1 dimension is because PyTorch assumes

everything is in batches - we're just using a batch size of 1 here.

"""

# Find letter index from all_letters, e.g. "a" = 0

def letter_to_index(letter):

return ALL_LETTERS.find(letter)

# Just for demonstration, turn a letter into a <1 x n_letters> Tensor

def letter_to_tensor(letter):

tensor = torch.zeros(1, N_LETTERS)

tensor[0][letter_to_index(letter)] = 1

return tensor

# Turn a line into a <line_length x 1 x n_letters>,

# or an array of one-hot letter vectors

def line_to_tensor(line):

tensor = torch.zeros(len(line), 1, N_LETTERS)

for i, letter in enumerate(line):

tensor[i][0][letter_to_index(letter)] = 1

return tensor

def random_training_example(category_lines, all_categories):

def random_choice(a):

random_idx = random.randint(0, len(a) - 1)

return a[random_idx]

category = random_choice(all_categories)

line = random_choice(category_lines[category])

category_tensor = torch.tensor([all_categories.index(category)], dtype=torch.long)

line_tensor = line_to_tensor(line)

return category, line, category_tensor, line_tensor

if __name__ == '__main__':

print(ALL_LETTERS)

print(unicode_to_ascii('Ślusàrski'))

category_lines, all_categories = load_data()

print(category_lines['Italian'][:5])

print(letter_to_tensor('J')) # [1, 57]

print(line_to_tensor('Jones').size()) # [5, 1, 57]代码分析

string.ascii_letters 是一个字符串常量,它包含了所有 ASCII 字母,即小写字母 'abcdefghijklmnopqrstuvwxyz' 和大写字母 'ABCDEFGHIJKLMNOPQRSTUVWXYZ' 的组合

letter_to_tensor函数:是把入参的字母转成tensor,tensor的shape是(1,57),后面我们使用该向量做神经网络的输入。

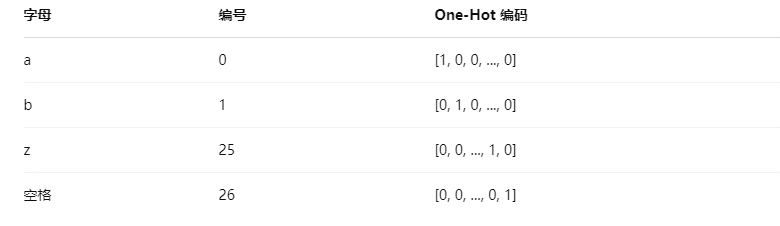

one-hot 向量

letter_to_tensor函数输出是tensor被称为one-hot向量,直译就是一个热点向量,即向量里就一个值是有意义的值。

【one-hot向量】就是一行N列的向量,列的值为1时,该列的位置,就是字母在字符串的位置。

如果我们有以下字母表,共27个字符(26个字母 + 空格):

ALL_LETTERS = "abcdefghijklmnopqrstuvwxyz "转成one-hot 向量,就会如下图模式分配。

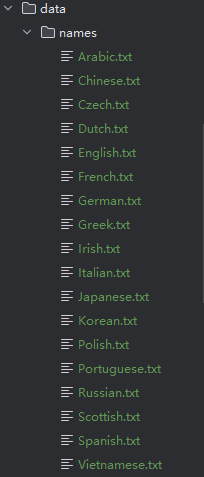

load_data函数:查询一个文件夹下的全部文件,然后把文件名全部取出来,组成集合,然后把文件的内容取出来,结合文件的名字,组成字典。

glob.glob:系统函数,查询指定目录下的指定文件。

data.names文件夹如下:

RNN前向传播

category_lines, all_categories = load_data()

n_categories = len(all_categories)

n_hidden = 128

print("N_LETTERS",N_LETTERS)

rnn = RNN(N_LETTERS, n_hidden, n_categories) # RNN(57,128,18) 18是18个国家即18个类别

input_tensor = letter_to_tensor('A') # 把A转成tensor(1,57)

hidden_tensor = rnn.init_hidden()

output, next_hidden = rnn(input_tensor, hidden_tensor)

input_tensor = line_to_tensor('Albert')

hidden_tensor = rnn.init_hidden()

output, next_hidden = rnn(input_tensor[0], hidden_tensor)

def category_from_output(output):

category_idx = torch.argmax(output).item()

return all_categories[category_idx]

print(category_from_output(output))代码分析

torch.argmax(output).item()是取出tensor里的最大值,其最大值的索引。

这里,我们通过整个RNN的前向传播,得到了一个output,即通过线性转换,我们得到y_predict,然后我们找到y_predict里最大的值的索引,我们认为,我们这个索引对应国家集合里对应索引的国家名,就是我们预测的我们输入的名字(实际输入的是字母)的所属国家。

完整代码:

import threading

import torch

import torch.nn as nn

from utils import ALL_LETTERS, N_LETTERS

from utils import load_data, letter_to_tensor, line_to_tensor, random_training_example

def category_from_output(output):

category_idx = torch.argmax(output).item()

return all_categories[category_idx]

class RNN(nn.Module):

# implement RNN from scratch rather than using nn.RNN

def __init__(self, input_size, hidden_size, output_size):

super(RNN, self).__init__()

self.hidden_size = hidden_size

self.i2h = nn.Linear(input_size + hidden_size, hidden_size)

self.i2o = nn.Linear(input_size + hidden_size, output_size)

self.softmax = nn.LogSoftmax(dim=1)

def forward(self, input_tensor, hidden_tensor):

combined = torch.cat((input_tensor, hidden_tensor), 1)

hidden = self.i2h(combined) #input to hidden=i2h

output = self.i2o(combined) #input to output=i2o

output = self.softmax(output)

return output, hidden

def init_hidden(self):

return torch.zeros(1, self.hidden_size)

category_lines, all_categories = load_data()

n_categories = len(all_categories)

n_hidden = 128

print("N_LETTERS",N_LETTERS)

rnn = RNN(N_LETTERS, n_hidden, n_categories) # RNN(57,128,18) 18是18个国家即18个类别

input_tensor = letter_to_tensor('A') # 把A转成tensor(1,57)

hidden_tensor = rnn.init_hidden()

output, next_hidden = rnn(input_tensor, hidden_tensor)

print(category_from_output(output))

input_tensor = line_to_tensor('Albert')

hidden_tensor = rnn.init_hidden()

output, next_hidden = rnn(input_tensor[0], hidden_tensor)

print(category_from_output(output))使用RNN训练及预测

定义训练函数

def train(line_tensor, category_tensor):

hidden = rnn.init_hidden()

for i in range(line_tensor.size()[0]):

output, hidden = rnn(line_tensor[i], hidden)

loss = criterion(output, category_tensor)

optimizer.zero_grad()

loss.backward()

optimizer.step()

return output, loss.item()代码分析

入参line_tensor是一个tesnor,shape是(n,1,57),即,是一个字符串转成n个one-hot向量。他是一个名字的字符串。

入参category_tensor是真实的y值,这里他的shape是tensor(1),即,这个名字的真实国家的索引。

然后我们通过循环执行output, hidden = rnn(line_tensor[i], hidden),把每个字母都在rnn里进行线性变换,这里每次都会把hidden返回,然后下次调用rnn,会把hideen传递过去,形成循环网络。

最后输出的output是个1行18列的tensor,1行是因为我们输出的x是1行,18列是因为我们创建rnn时,指定了18列的输出。

然后我们执行损失函数,loss = criterion(output, category_tensor),计算损失值,损失函数有很多种。(我们可以简单这样理解损失函数的运行逻辑,上面通过线性计算把X转成了tensor(1,18),这个tensor是y_predict,现在我们计算y_predicd与真y的差,在把结果平方,在求平均数,反正就是计算出一个数,这个数如果是2,3,那就代表我们的模型错的很离谱)

损失值求完,我们就可以使用损失值进行逆向传播了loss.backward()。这里loss是触发逆向传播的,也就是计算梯度,也就是求偏导数,之所以是loss触发,是因为loss本身是通过函数计算出来的,而我们求梯度,是对这个求loss的函数偏导,但是loss值,并不参与求偏导,所以loss是触发逆向传播的启点。

完整代码

import threading

import torch

import torch.nn as nn

import matplotlib.pyplot as plt

from utils import ALL_LETTERS, N_LETTERS

from utils import load_data, letter_to_tensor, line_to_tensor, random_training_example

def category_from_output(output):

category_idx = torch.argmax(output).item()

return all_categories[category_idx]

class RNN(nn.Module):

# implement RNN from scratch rather than using nn.RNN

def __init__(self, input_size, hidden_size, output_size):

super(RNN, self).__init__()

self.hidden_size = hidden_size

self.i2h = nn.Linear(input_size + hidden_size, hidden_size)

self.i2o = nn.Linear(input_size + hidden_size, output_size)

self.softmax = nn.LogSoftmax(dim=1)

def forward(self, input_tensor, hidden_tensor):

combined = torch.cat((input_tensor, hidden_tensor), 1)

hidden = self.i2h(combined) #input to hidden=i2h

output = self.i2o(combined) #input to output=i2o

output = self.softmax(output)

return output, hidden

def init_hidden(self):

return torch.zeros(1, self.hidden_size)

category_lines, all_categories = load_data()

# print("category_lines",category_lines)

# print("all_categories",all_categories)

n_categories = len(all_categories)

n_hidden = 128

rnn = RNN(N_LETTERS, n_hidden, n_categories)

criterion = nn.NLLLoss()

learning_rate = 0.005

optimizer = torch.optim.SGD(rnn.parameters(), lr=learning_rate)

def train(line_tensor, category_tensor):

hidden = rnn.init_hidden()

for i in range(line_tensor.size()[0]):

output, hidden = rnn(line_tensor[i], hidden)

loss = criterion(output, category_tensor)

optimizer.zero_grad()

loss.backward()

optimizer.step()

return output, loss.item()

current_loss = 0

all_losses = []

plot_steps, print_steps = 1000, 5000

n_iters = 100000

for i in range(n_iters):

category, line, category_tensor, line_tensor = random_training_example(category_lines, all_categories) # category=国家名 line=这个国家下的随机一个名字 category_tensor=国家名索引值转换成的tensor(1) line_tensor=名字转换成的tensor(n,1,57)

output, loss = train(line_tensor, category_tensor)

current_loss += loss

if (i+1) % plot_steps == 0:

all_losses.append(current_loss / plot_steps)

current_loss = 0

if (i+1) % print_steps == 0:

guess = category_from_output(output)

correct = "CORRECT" if guess == category else f"WRONG ({category})"

print(f"{i+1} {(i+1)/n_iters*100} {loss:.4f} {line} / {guess} {correct}")

def show():

plt.figure()

plt.plot(all_losses)

plt.show()

show()

def predict(input_line):

print(f"\n> {input_line}")

with torch.no_grad():

line_tensor = line_to_tensor(input_line)

hidden = rnn.init_hidden()

for i in range(line_tensor.size()[0]):

output, hidden = rnn(line_tensor[i], hidden)

guess = category_from_output(output)

print("guess:",guess)

while True:

sentence = input("Input:")

if sentence == "quit":

break

predict(sentence)到此,Python---Pytorch学习-RNN(一)就介绍完了。

参考网站:https://github.com/patrickloeber/pytorch-examples/tree/master/rnn-name-classification

注:此文章为原创,任何形式的转载都请联系作者获得授权并注明出处!

若您觉得这篇文章还不错,请点击下方的【推荐】,非常感谢!