The companion code for this post is published on GitHub: Semi-automated cookie updating (a Star ⭐ is always welcome).


Preface:

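Some websites base their anti-scraping checks on cookies. If we skip JS reverse engineering and approach the problem purely from the automation side, we need to build a cookie pool that can be refreshed again and again. When the crawler hits risk control, we launch browser automation and get past whatever slider or CAPTCHA is on the page by hand (or through a third-party CAPTCHA-solving service). That is what "semi-automated" means here. Once the check is passed, we grab the current cookies and write them back into the cookie pool so the crawler can use them again.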
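The loop described above can be sketched as follows. This is a minimal, library-agnostic sketch: `fetch_with_refresh`, `fetch`, `is_blocked` and `refresh_cookies` are hypothetical names standing in for the requests call, the anti-scraping check and the browser-automation refresh, and `max_refreshes` is an arbitrary cap so a human is never asked to solve sliders forever.

```python
from typing import Callable, Dict, Tuple

Cookies = Dict[str, str]


def fetch_with_refresh(
    fetch: Callable[[Cookies], str],
    is_blocked: Callable[[str], bool],
    refresh_cookies: Callable[[], Cookies],
    cookies: Cookies,
    max_refreshes: int = 2,
) -> Tuple[str, Cookies]:
    """Fetch with the pooled cookies; on risk control, refresh them and retry.

    Hypothetical helper: the three callables are injected so the control
    flow can be shown without a real site or a real browser.
    """
    for attempt in range(max_refreshes + 1):
        body = fetch(cookies)
        if not is_blocked(body):
            # Normal path: plain HTTP requests, no browser involved
            return body, cookies
        if attempt == max_refreshes:
            break
        # Risk control hit: open automation, pass the check, take fresh cookies
        cookies = refresh_cookies()
    raise RuntimeError('still blocked after refreshing cookies')
```

The point of the design is that the browser is only opened inside `refresh_cookies`, so the expensive, human-assisted step runs only when a response is actually blocked.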

1. Building the initial cookie pool

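Here we use Alibaba's judicial auction site (阿里法拍, sf.taobao.com) as the test target. In my testing, the site's main anti-scraping mechanism is the cookie: if one set of cookies makes too many requests, a slider challenge appears, and only the new cookies obtained after passing that slider allow further access. Let's get hands-on.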

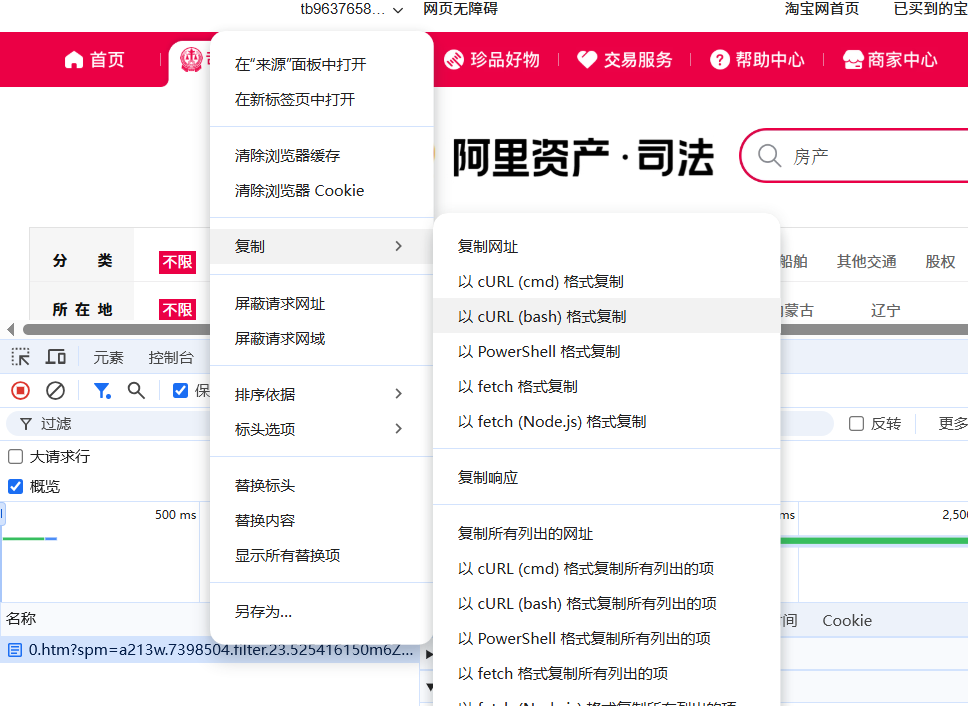

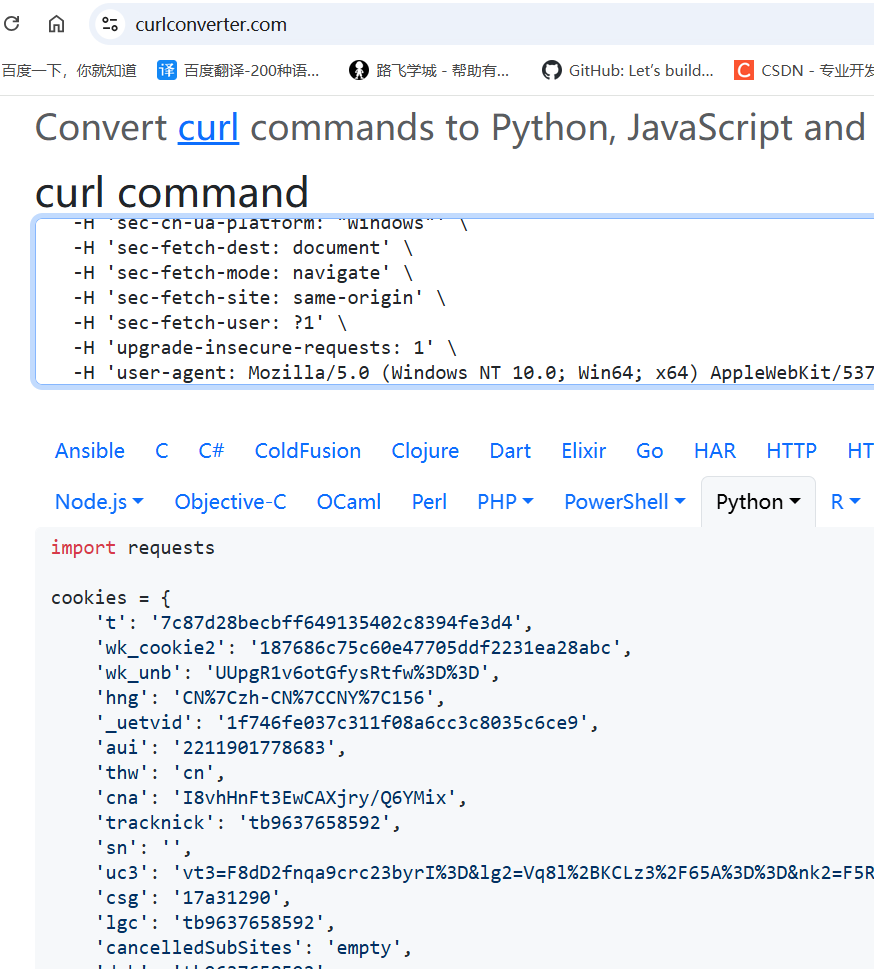

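As shown above, copy the current page's request as cURL (bash), paste it into the site Convert curl commands to code, and copy the `cookies` it generates. Then manually create a file named `cookie.json` and paste them in: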

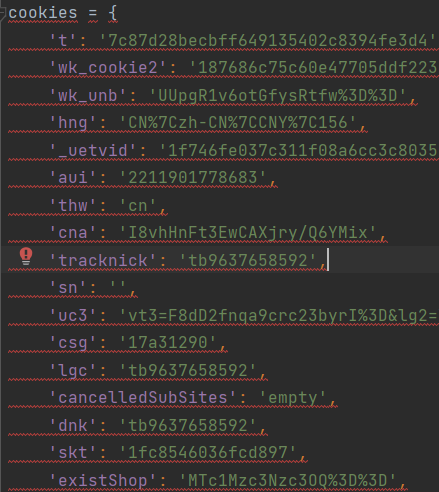

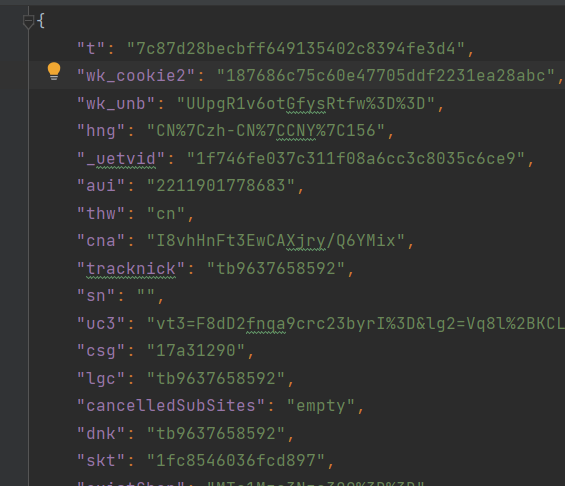

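Then change all the single quotes inside to double quotes (JSON only accepts double-quoted strings) and delete the leading `cookies =`.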
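This manual quote-fixing step can also be scripted. A minimal sketch, assuming the converter's output is available as a string; `snippet_to_json` is a hypothetical helper. `ast.literal_eval` parses the Python dict literal safely (it only evaluates literals, never calls code), and `json.dumps` then emits the double-quoted JSON that `json.load` expects.

```python
import ast
import json


def snippet_to_json(snippet: str) -> str:
    """Turn "cookies = {'k': 'v', ...}" (Python syntax) into a JSON string."""
    text = snippet.strip()
    if not text.startswith('{'):
        # Drop the leading "cookies =" assignment, keep only the dict literal
        _, sep, text = text.partition('=')
        if not sep:
            raise ValueError('expected "cookies = {...}" or a bare dict literal')
    cookies = ast.literal_eval(text.strip())
    if not isinstance(cookies, dict):
        raise ValueError('the pasted snippet is not a dict')
    # ensure_ascii=False keeps non-ASCII characters readable in the file
    return json.dumps(cookies, ensure_ascii=False, indent=2)
```

Writing the result with `open('cookie.json', 'w', encoding='utf-8').write(snippet_to_json(pasted_text))` produces the same file as the manual edit.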


That completes our initial cookie pool.
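If you would rather not rely on the online converter, the raw `cookie` header value from the cURL copy can be turned into the same dict directly. A minimal sketch; `parse_cookie_header` is a hypothetical helper. It splits on `;` and then on the first `=` only, because values such as base64 tokens can themselves contain `=`. When a name repeats (the header captured in this post contains `_m_h5_tk` twice), the later value wins, just as it would in a Python dict literal.

```python
from typing import Dict


def parse_cookie_header(header: str) -> Dict[str, str]:
    """Convert a raw Cookie header value 'a=1; b=2' into {'a': '1', 'b': '2'}."""
    cookies: Dict[str, str] = {}
    for part in header.split(';'):
        part = part.strip()
        if not part or '=' not in part:
            continue  # skip empty fragments and malformed pairs
        name, value = part.split('=', 1)  # split on the first '=' only
        cookies[name.strip()] = value.strip()  # a repeated name keeps its last value
    return cookies
```

The resulting dict can be written to `cookie.json` with `json.dump`.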

2. Adding automated cookie pool updates

Now copy over the Python code generated by the curl converter site, print the response, and replace `cookies` with the file we just wrote.

```python
# fapai-surf.py
import json

import requests

with open('cookie.json', 'r', encoding='utf8') as f:
    cookies = json.load(f)

headers = {
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
    'accept-language': 'zh-CN,zh;q=0.9',
    'cache-control': 'no-cache',
    'pragma': 'no-cache',
    'priority': 'u=0, i',
    'referer': 'https://sf.taobao.com/list/0_____%C9%EE%DB%DA.htm?spm=a213w.7398504.pagination.1.36c21615Iqzq5r&auction_source=0&st_param=-1&auction_start_seg=0&auction_start_from=2025-08-07&auction_start_to=2025-09-30&q=%B7%BF%B2%FA&page=2',
    'sec-ch-ua': '"Not)A;Brand";v="8", "Chromium";v="138", "Google Chrome";v="138"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36',
    # 'cookie': 't=7c87d28becbff649135402c8394fe3d4; wk_cookie2=187686c75c60e47705ddf2231ea28abc; wk_unb=UUpgR1v6otGfysRtfw%3D%3D; hng=CN%7Czh-CN%7CCNY%7C156; _uetvid=1f746fe037c311f08a6cc3c8035c6ce9; aui=2211901778683; thw=cn; cna=I8vhHnFt3EwCAXjry/Q6YMix; tracknick=tb9637658592; sn=; uc3=vt3=F8dD2fnqa9crc23byrI%3D&lg2=Vq8l%2BKCLz3%2F65A%3D%3D&nk2=F5RMHy8mIWUL74ML&id2=UUpgR1v6otGfysRtfw%3D%3D; csg=17a31290; lgc=tb9637658592; cancelledSubSites=empty; dnk=tb9637658592; skt=1fc8546036fcd897; existShop=MTc1Mzc3Nzc3OQ%3D%3D; uc4=nk4=0%40FY4HWrZETjmS5CNmnn3odzjwzx4sMTc%3D&id4=0%40U2gqyOZjcBXJtSK9LYN2Anu2eXYBJcOf; _cc_=W5iHLLyFfA%3D%3D; sgcookie=E100Ivtiu912QpYb8r3H%2BClZvPSXjjzy8MYqidkIR4%2FjKTPjuV%2FW2XlkKIIPg8QXjvKECCHjhUZxRv0fBTK5NHr%2FTx92k9PCyFBLXG5tNrXJRZY%3D; 3PcFlag=1754710767171; xlly_s=1; isg=BAIC-dH93kXTUMxboakkfnOPUwhk0wbtBpyU-kwbdnUgn6IZNGE4_Y_MSZvjz36F; mtop_partitioned_detect=1; _m_h5_tk=06c72fd4e126769ac097835f7c4ca1bd_1754979539453; _m_h5_tk_enc=fc02a0c9d635626a0006afdffd45beb1; _m_h5_tk=332b6afccb6258416a92b3d2ff4ccf63_1754981699539; _m_h5_tk_enc=b04012cfe670ef1583928d5234c9a9f7; x5sec=7b2274223a313735343937313434342c22733b32223a2230306333356564633862363932623835222c22617365727665723b33223a22307c434f583536735147454e53716b6676382f2f2f2f2f774561447a49794d5445354d4445334e7a67324f444d374d6a447a764e32582b2f2f2f2f2f3842227d; tfstk=gBzoF4Al-uo7R38Wq605ViumXMjxP4gILJLKp2HF0xkXykFEp223KJD-YTg8--2bEULKz2rDt5w0KD1Spvk3pWrRMNQTN7gI82XOWNUWXlHzxXuEY67q62crnRVMo7gI8tdv8GQgN8s8ldDETj5m9XLeUJozgIlK3blr8Jkq0fl9U2uULs0qsfTy8Y8ygIlK3vuE8JPViXHqa2uULS5mOj2P4YaUurWhStstlvo0obmoQSj6Je_IadHgaYYe8YcoqhFrne8UokjhLkkPfIHIyYNmZJ_6P4o3Yz34zt7E7lw0-cuGv_izto2Iyr5e4Ar8eqzzjB8Ui4DUIrnMEtDaPSzsumQNsSzbe7a09B7EMRH4Nzuh71gozYumGyBXzvq4YznSRLWndrV4zkjrxn-NAVYIg6U2AHirGjDtRzzHuSYebgCcih5I4jGSBsfDAHirGjDOisxNV0ljNAC..',
}

response = requests.get(
    'https://sf.taobao.com/item_list.htm?_input_charset=utf-8&q=&spm=a213w.7398504.search_index_input.1&keywordSource=5',
    cookies=cookies,
    headers=headers,
).text

print(response)
```

That works. Next, we add the automation logic.

```python
# new_cookie.py
import json

from DrissionPage import ChromiumPage, ChromiumOptions


def save_cookies(cookies, file='cookie.json'):
    with open(file, 'w', encoding='utf-8') as f:
        # json.dump writes straight into the file object; ensure_ascii=False keeps
        # Chinese characters as-is instead of \uXXXX escapes, and indent=2
        # indents the JSON file so it is easy to read
        json.dump(cookies, f, ensure_ascii=False, indent=2)


# url = 'https://sf.taobao.com/item_list.htm?_input_charset=utf-8&q=&spm=a213w.7398504.search_index_input.1&keywordSource=5'

def get_new_cookie(url):
    page = ChromiumPage()
    page.get(url)
    # Save the cookies once as soon as the page is entered
    page.wait.load_start()
    cookies = page.cookies()
    new_cookies = {c['name']: c['value'] for c in cookies}
    save_cookies(new_cookies)
    print(f'Saved {len(new_cookies)} cookies')
    return new_cookies
```

Then replace the main script's code with the following:

```python
import json

import requests

from new_cookie import save_cookies, get_new_cookie

url = 'https://sf.taobao.com/item_list.htm?_input_charset=utf-8&q=&spm=a213w.7398504.search_index_input.1&keywordSource=5'

flag = True  # stands in for "anti-scraping detected" (see the note below)
if flag:
    print('Blocked by anti-scraping... refreshing cookies...')
    get_new_cookie(url)
    print('New cookies obtained!')

# Read cookie.json only after a possible refresh, so the request uses the new cookies
with open('cookie.json', 'r', encoding='utf8') as f:
    cookies = json.load(f)

headers = {
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
    'accept-language': 'zh-CN,zh;q=0.9',
    'cache-control': 'no-cache',
    'pragma': 'no-cache',
    'priority': 'u=0, i',
    'referer': 'https://sf.taobao.com/list/0_____%C9%EE%DB%DA.htm?spm=a213w.7398504.pagination.1.36c21615Iqzq5r&auction_source=0&st_param=-1&auction_start_seg=0&auction_start_from=2025-08-07&auction_start_to=2025-09-30&q=%B7%BF%B2%FA&page=2',
    'sec-ch-ua': '"Not)A;Brand";v="8", "Chromium";v="138", "Google Chrome";v="138"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36',
    # 'cookie': 't=7c87d28becbff649135402c8394fe3d4; wk_cookie2=187686c75c60e47705ddf2231ea28abc; wk_unb=UUpgR1v6otGfysRtfw%3D%3D; hng=CN%7Czh-CN%7CCNY%7C156; _uetvid=1f746fe037c311f08a6cc3c8035c6ce9; aui=2211901778683; thw=cn; cna=I8vhHnFt3EwCAXjry/Q6YMix; tracknick=tb9637658592; sn=; uc3=vt3=F8dD2fnqa9crc23byrI%3D&lg2=Vq8l%2BKCLz3%2F65A%3D%3D&nk2=F5RMHy8mIWUL74ML&id2=UUpgR1v6otGfysRtfw%3D%3D; csg=17a31290; lgc=tb9637658592; cancelledSubSites=empty; dnk=tb9637658592; skt=1fc8546036fcd897; existShop=MTc1Mzc3Nzc3OQ%3D%3D; uc4=nk4=0%40FY4HWrZETjmS5CNmnn3odzjwzx4sMTc%3D&id4=0%40U2gqyOZjcBXJtSK9LYN2Anu2eXYBJcOf; _cc_=W5iHLLyFfA%3D%3D; sgcookie=E100Ivtiu912QpYb8r3H%2BClZvPSXjjzy8MYqidkIR4%2FjKTPjuV%2FW2XlkKIIPg8QXjvKECCHjhUZxRv0fBTK5NHr%2FTx92k9PCyFBLXG5tNrXJRZY%3D; 3PcFlag=1754710767171; xlly_s=1; isg=BAIC-dH93kXTUMxboakkfnOPUwhk0wbtBpyU-kwbdnUgn6IZNGE4_Y_MSZvjz36F; mtop_partitioned_detect=1; _m_h5_tk=06c72fd4e126769ac097835f7c4ca1bd_1754979539453; _m_h5_tk_enc=fc02a0c9d635626a0006afdffd45beb1; _m_h5_tk=332b6afccb6258416a92b3d2ff4ccf63_1754981699539; _m_h5_tk_enc=b04012cfe670ef1583928d5234c9a9f7; x5sec=7b2274223a313735343937313434342c22733b32223a2230306333356564633862363932623835222c22617365727665723b33223a22307c434f583536735147454e53716b6676382f2f2f2f2f774561447a49794d5445354d4445334e7a67324f444d374d6a447a764e32582b2f2f2f2f2f3842227d; tfstk=gBzoF4Al-uo7R38Wq605ViumXMjxP4gILJLKp2HF0xkXykFEp223KJD-YTg8--2bEULKz2rDt5w0KD1Spvk3pWrRMNQTN7gI82XOWNUWXlHzxXuEY67q62crnRVMo7gI8tdv8GQgN8s8ldDETj5m9XLeUJozgIlK3blr8Jkq0fl9U2uULs0qsfTy8Y8ygIlK3vuE8JPViXHqa2uULS5mOj2P4YaUurWhStstlvo0obmoQSj6Je_IadHgaYYe8YcoqhFrne8UokjhLkkPfIHIyYNmZJ_6P4o3Yz34zt7E7lw0-cuGv_izto2Iyr5e4Ar8eqzzjB8Ui4DUIrnMEtDaPSzsumQNsSzbe7a09B7EMRH4Nzuh71gozYumGyBXzvq4YznSRLWndrV4zkjrxn-NAVYIg6U2AHirGjDtRzzHuSYebgCcih5I4jGSBsfDAHirGjDOisxNV0ljNAC..',
}

response = requests.get(
    url,
    cookies=cookies,
    headers=headers,
).text

print(response)
```

Note: `flag = True` / `if flag:` here simulates running into a cookie-based anti-scraping event (such as a slider). The detection itself is omitted; in practice, fill in the logic that detects the block, for example by checking in the automated browser whether the opened page contains the slider element.

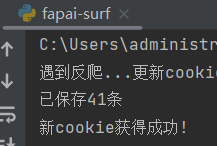
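The omitted detection step could look like the sketch below. It is an assumption-laden example, not a verified rule for this site: it treats a page as blocked when its HTML contains the slider element id `nc_1__scale_text` (the id the automation code later in this post looks for) or the marker `_____tmd_____`, which is only an assumed trace of Alibaba's risk-control page and should be checked against the blocked page you actually receive. `looks_blocked` and `BLOCK_MARKERS` are hypothetical names; the same function can be applied to `response.text` from requests or to `page.html` in DrissionPage.

```python
from typing import Iterable

# Only 'nc_1__scale_text' comes from this post (the slider element id);
# '_____tmd_____' is an ASSUMED marker of the risk-control page, verify it.
BLOCK_MARKERS = ('nc_1__scale_text', '_____tmd_____')


def looks_blocked(html: str, markers: Iterable[str] = BLOCK_MARKERS) -> bool:
    """Return True if the HTML looks like a slider / risk-control page."""
    if not html or not html.strip():
        # An empty body is treated as blocked as well (a cautious assumption)
        return True
    return any(marker in html for marker in markers)
```

In the main script, `flag = True` would then become something like `flag = looks_blocked(first_response_text)` after an initial request.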

Run the code:

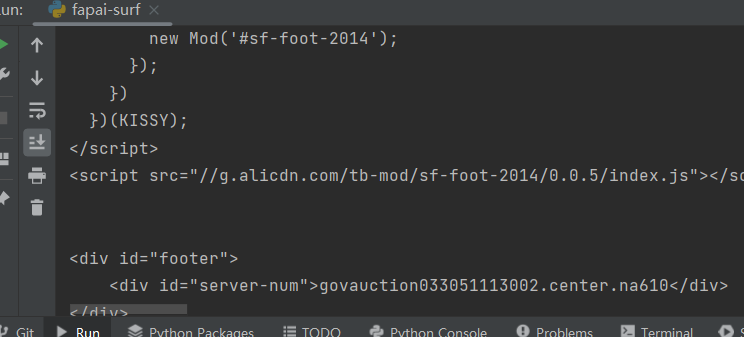

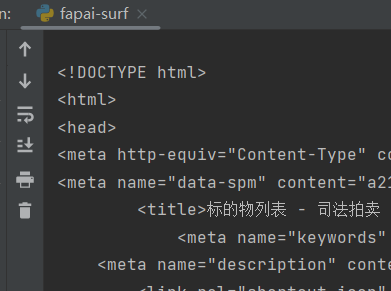

The response comes back successfully.


3. Digging deeper: cookies and automation in practice

The approach above is still a simplified version of the real-world logic.

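When I actually used this method, I found that cookies obtained through the automated browser and through a manually opened browser behave differently. Under anti-scraping, it can easily happen that the manually opened page is under risk control while the automated one is not, or the other way around, because the two hold different cookies. So the program's cookies must always follow the automated browser.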

The idea should be:

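When the program hits anti-scraping, open the automated browser and check whether its page is blocked as well. If it is, handle the challenge on that page; if it is not, record and return the cookies the automated browser currently holds (otherwise the program stays under risk control). This keeps the program reliably past the anti-scraping checks.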

Based on this idea, I structured the code as follows:

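```python
import time

from DrissionPage import ChromiumPage

# Assumes this function lives in new_cookie.py, where save_cookies() is defined


def get_more_cookie(url, first_try=True):
    page = ChromiumPage()
    page.get(url)
    # Save the cookies once right after entering the page
    page.wait.load_start()
    if first_try:
        cookies = page.cookies()
        new_cookies = {c['name']: c['value'] for c in cookies}
        save_cookies(new_cookies)
        return new_cookies

    # ---- Only on the second call is the slider handled by hand ----
    # (a human drags the slider in the browser window; the code just waits)
    slider = page.ele('#nc_1__scale_text', timeout=0.1)
    if slider:
        print('Handling anti-scraping...')
        # Wait for the page to reload after the slider is passed; 300 s is an
        # arbitrary, generous timeout so there is time to solve it manually
        page.wait.load_start(timeout=300)
        page.wait.doc_loaded()  # make sure the DOM has finished loading
        time.sleep(0.5)  # leave a little buffer for the cookies to sync
    else:
        # Poll quickly a couple more times (about 0.1 s in total)
        for _ in range(2):
            time.sleep(0.05)
            if page.ele('#nc_1__scale_text', timeout=0):
                print('Handling anti-scraping...')
                page.wait.load_start(timeout=300)  # wait for the post-slider reload
                page.wait.doc_loaded()  # make sure the DOM has finished loading
                time.sleep(0.5)  # leave a little buffer for the cookies to sync
                break
        else:
            # for/else: runs only if the loop finished without break
            print('No slider, continuing automatically...')

    # Whether or not a human stepped in, grab the latest cookies
    cookies = page.cookies()
    new_cookies = {c['name']: c['value'] for c in cookies}
    save_cookies(new_cookies)
    return new_cookies
```

`get_more_cookie` defaults `first_try` to `True`, i.e. the first attempt. It is meant to be called after the program has already been blocked, to look at the site through the automated browser. On the first attempt it grabs and returns the cookies straight away (if the automated browser is not blocked, there is no need to check it for a slider). If the program is still blocked after that, call it again with `first_try=False`: this tells it that the automated browser is under risk control too, so it concentrates on handling the slider.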
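The calling side of this two-stage design might look like the sketch below: after a blocked request, first call the refresher with `first_try=True` (just take the automated browser's cookies) and retry; only if the retry is still blocked, call it again with `first_try=False` so the slider gets handled. `crawl_once`, `fetch` and `is_blocked` are hypothetical names; `refresh` stands for `get_more_cookie`, passed in as a parameter so the flow can be exercised without a browser.

```python
from typing import Callable, Dict

Cookies = Dict[str, str]


def crawl_once(
    url: str,
    cookies: Cookies,
    fetch: Callable[[str, Cookies], str],
    is_blocked: Callable[[str], bool],
    refresh: Callable[..., Cookies],
) -> str:
    """Escalate step by step: pooled cookies, then browser cookies, then manual slider."""
    html = fetch(url, cookies)
    if not is_blocked(html):
        return html
    # Stage 1: the automated browser may not be under risk control; take its cookies
    cookies = refresh(url, first_try=True)
    html = fetch(url, cookies)
    if not is_blocked(html):
        return html
    # Stage 2: the browser is blocked too; let a human (or a solver) pass the slider
    cookies = refresh(url, first_try=False)
    html = fetch(url, cookies)
    if is_blocked(html):
        raise RuntimeError('still blocked after manual verification')
    return html
```

Keeping the escalation outside `get_more_cookie` means the browser function stays a single-purpose "open, optionally wait, save cookies" step, while the decision of when to escalate lives in the crawler.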

4. Summary

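In real-world scraping, when anti-scraping is very aggressive (Alibaba's slider, for example), the semi-automated approach is a very practical option: the program fetches data in code the whole time and only opens the automated browser when it hits risk control. This greatly reduces manual work while also raising the crawler's success rate.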