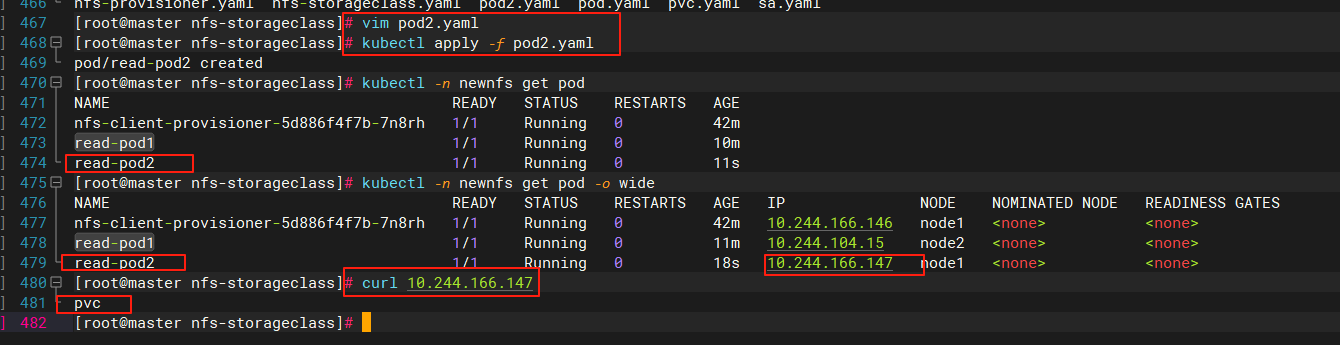

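I. Setting up the NFS host

1. Deploy an NFS storage host outside the k8s cluster

2. Install nfs-utils on every host in the k8s cluster

    yum install -y nfs-utils

3. Configure the shared directory on the NFS host

    vim /etc/exports

    /data 192.168.33.0/24(rw,sync,no_root_squash,no_subtree_check)

4. Start the NFS service on the NFS host

    systemctl enable --now nfs

5. List the shared directories

    showmount -e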
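Before moving on to the cluster side, it can help to confirm from one of the k8s nodes that the export is actually visible over the network. The sketch below is only an illustration: `nfs_export_visible` is a hypothetical helper name, and it assumes the NFS host is reachable at 192.168.33.200 (the address used in the provisioner Deployment later in this article).

```shell
#!/usr/bin/env bash
# Sketch: check from a k8s node that the NFS server exports a given path.
# Assumption: 192.168.33.200 is the NFS host (address taken from later steps).

nfs_export_visible() {
  local server="$1" path="$2"
  # `showmount -e <server>` prints a header line, then "<path> <allowed clients>".
  showmount -e "$server" |
    awk -v p="$path" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}

# Only run the check where nfs-utils (and therefore showmount) is installed.
if command -v showmount >/dev/null 2>&1; then
  if nfs_export_visible 192.168.33.200 /data; then
    echo "/data is exported"
  else
    echo "/data is NOT exported" >&2
  fi
fi
```

If the path is not listed, re-check `/etc/exports` on the NFS host and reload the exports (for example with `exportfs -r`) before continuing.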

II. Deployment on the k8s cluster master node

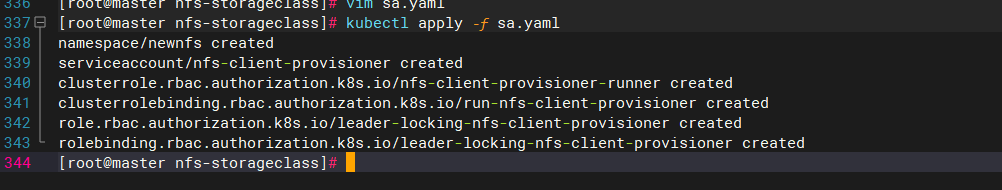

1. Create the ServiceAccount (and RBAC rules) that nfs-provisioner runs under

    apiVersion: v1
    kind: Namespace
    metadata:
      name: newnfs
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      namespace: newnfs
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: newnfs
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: newnfs
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: newnfs
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: newnfs
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

2. Install the nfs-provisioner storage provisioner

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs-client-provisioner
      namespace: newnfs
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nfs-client-provisioner
      strategy:
        type: Recreate  # upgrade by deleting and recreating (the default is a rolling update)
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner  # the ServiceAccount created in the previous step
          containers:
            - name: nfs-client-provisioner
              image: registry.cn-beijing.aliyuncs.com/mydlq/nfs-subdir-external-provisioner:v4.0.0
              imagePullPolicy: IfNotPresent
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME  # provisioner name; the StorageClass created later must use the same value
                  value: storage-nfs
                - name: NFS_SERVER  # NFS server address; must match the value under volumes below
                  value: 192.168.33.200
                - name: NFS_PATH  # NFS data directory; must match the value under volumes below
                  value: /data
                - name: ENABLE_LEADER_ELECTION
                  value: "true"
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.33.200  # NFS server address
                path: /data  # NFS shared directory

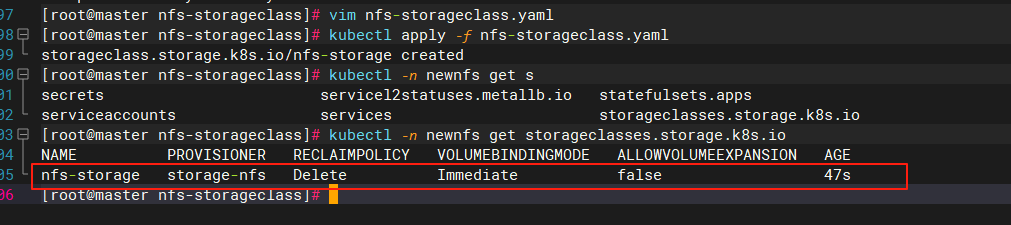
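The two manifests above still have to be applied to the cluster. A minimal sketch of that step follows; the file names `nfs-rbac.yaml` and `nfs-provisioner.yaml` and the helper `deploy_provisioner` are assumptions made for illustration, so substitute whatever names the manifests were saved under.

```shell
#!/usr/bin/env bash
# Sketch: apply the RBAC and Deployment manifests, then wait for the rollout.
# Hypothetical file names: the manifests from steps 1 and 2 saved locally.
RBAC_FILE="nfs-rbac.yaml"
DEPLOY_FILE="nfs-provisioner.yaml"

deploy_provisioner() {
  local rbac="$1" deploy="$2"
  kubectl apply -f "$rbac" || return 1
  kubectl apply -f "$deploy" || return 1
  # Block until the Deployment's replica is available, or give up after 120s.
  kubectl -n newnfs rollout status deployment/nfs-client-provisioner --timeout=120s
}

# Only run when kubectl and both manifest files are present.
if command -v kubectl >/dev/null 2>&1 && [ -f "$RBAC_FILE" ] && [ -f "$DEPLOY_FILE" ]; then
  deploy_provisioner "$RBAC_FILE" "$DEPLOY_FILE"
fi
```

If the rollout times out, `kubectl -n newnfs describe pod` on the provisioner pod usually shows whether the NFS mount or the image pull is the cause.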

3. Create the StorageClass

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      namespace: newnfs  # StorageClass is cluster-scoped, so this field is not needed
      name: nfs-storage
      annotations:
        storageclass.kubernetes.io/is-default-class: "false"  # whether this is the default StorageClass
    provisioner: storage-nfs  # must match the PROVISIONER_NAME environment variable in the Deployment above
    parameters:
      archiveOnDelete: "true"  # "false": data is not kept when the PVC is deleted; "true": data is kept
    mountOptions:
      - hard  # use a hard mount
      - nfsvers=4  # NFS version; set it according to the NFS server version (check with nfsstat)

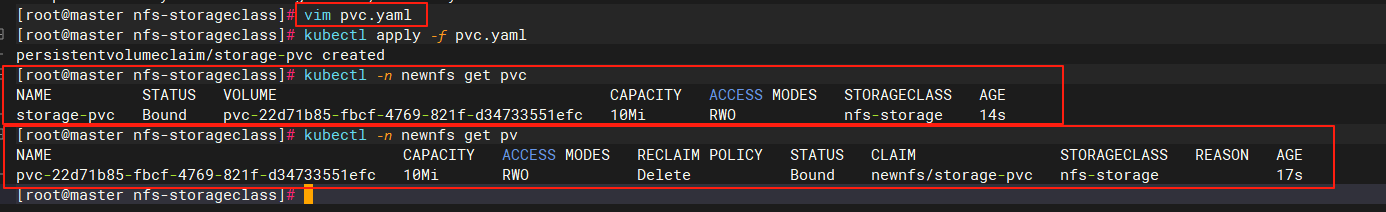

4. Create a PVC; the StorageClass dynamically provisions the PV

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: storage-pvc
      namespace: newnfs
    spec:
      storageClassName: nfs-storage  # must match the name of the StorageClass created above
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Mi

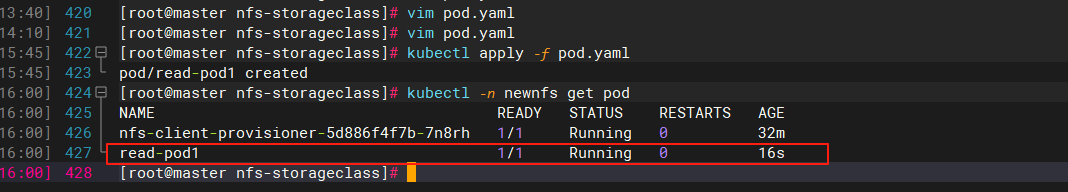
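Whether dynamic provisioning worked can be read from the claim itself: its phase should be `Bound` and it should reference a generated PV. The following is a small sketch of that check; `pvc_field` is a hypothetical helper, while the namespace and claim name come from the manifest above.

```shell
#!/usr/bin/env bash
# Sketch: read single fields of a PVC via kubectl's jsonpath output.

pvc_field() {
  local ns="$1" name="$2" path="$3"
  # e.g. path ".status.phase" becomes: -o jsonpath={.status.phase}
  kubectl -n "$ns" get pvc "$name" -o "jsonpath={${path}}"
}

# Only query the cluster when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  echo "phase:  $(pvc_field newnfs storage-pvc .status.phase)"
  echo "volume: $(pvc_field newnfs storage-pvc .spec.volumeName)"
fi
```

A claim that stays in `Pending` typically means the `provisioner` value of the StorageClass does not match `PROVISIONER_NAME`, or the provisioner pod is not running.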

5. Create a pod that mounts storage-pvc, the PVC provisioned through the StorageClass

    apiVersion: v1
    kind: Pod
    metadata:
      name: read-pod1
      namespace: newnfs
    spec:
      containers:
        - name: nginx
          image: nginx
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nginx-html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nginx-html
          persistentVolumeClaim:
            claimName: storage-pvc

III. Verifying that the mount works

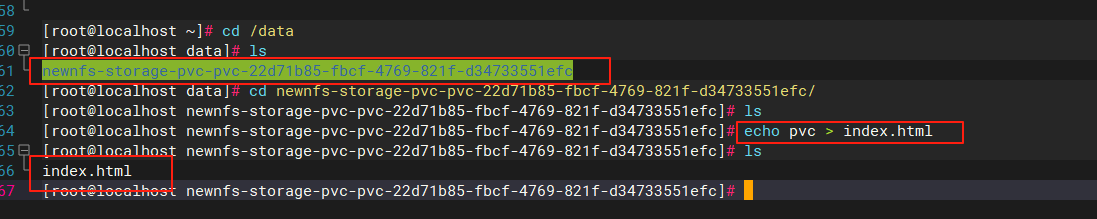

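1. On the NFS host, create an index.html file in the shared directory (inside the subdirectory the provisioner created for the PVC)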

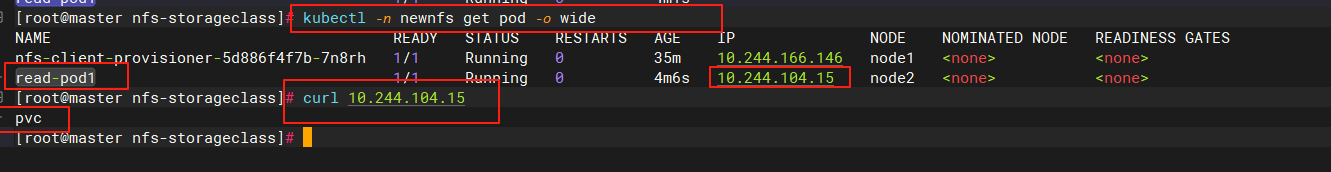
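As a rough sketch of this step, the helper below writes a test page into the PVC's directory on the NFS host. It assumes the provisioner's default directory naming, `<namespace>-<pvc name>-<pv name>` under the export root; `write_index` is a hypothetical name, and the PV name in the usage comment is a placeholder to be replaced with the real one from `kubectl get pv`.

```shell
#!/usr/bin/env bash
# Sketch (run on the NFS host): write index.html into the PVC's directory.
# Assumption: default naming <export root>/<namespace>-<pvc name>-<pv name>.

write_index() {
  local root="$1" ns="$2" pvc="$3" pv="$4"
  local dir="${root}/${ns}-${pvc}-${pv}"
  if [ ! -d "$dir" ]; then
    echo "directory not found: $dir" >&2
    return 1
  fi
  echo "hello from nfs" > "${dir}/index.html"
  # Print the path of the file that was written.
  echo "${dir}/index.html"
}

# Usage (the PV name is a placeholder):
#   write_index /data newnfs storage-pvc pvc-<uid>
```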

2. From inside the k8s cluster, access the IP of read-pod1
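One way to do this from a cluster node is to look up the pod IP with kubectl and fetch the page with curl. This is a sketch under the assumption that both tools are installed on the node; `pod_ip` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: look up a pod's IP and request the nginx page served from the NFS volume.

pod_ip() {
  local ns="$1" name="$2"
  kubectl -n "$ns" get pod "$name" -o 'jsonpath={.status.podIP}'
}

# Only run where both kubectl and curl are available.
if command -v kubectl >/dev/null 2>&1 && command -v curl >/dev/null 2>&1; then
  ip="$(pod_ip newnfs read-pod1)"
  if [ -n "$ip" ]; then
    # Should return the contents of index.html created on the NFS host.
    curl -s --max-time 5 "http://${ip}/"
  else
    echo "read-pod1 has no IP yet" >&2
  fi
fi
```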

3. Multiple pods can mount the same NFS shared directory, so its contents are shared between them

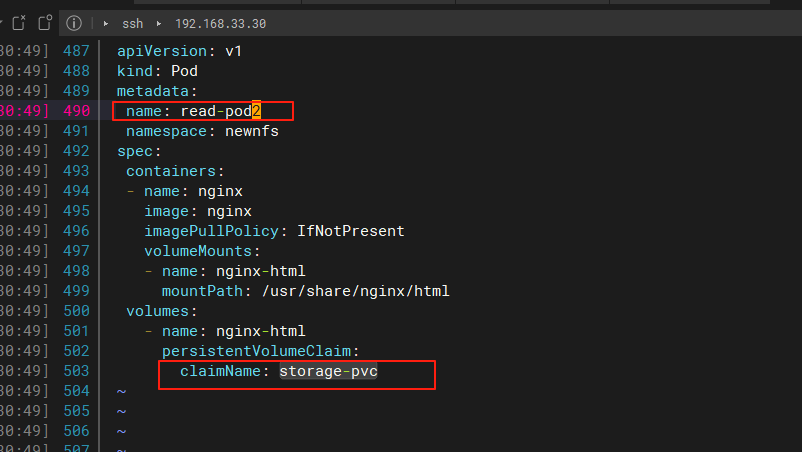

- Create a new pod that mounts the same PVC, "storage-pvc"

- Access the IP of pod2
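The second pod can reuse the manifest of read-pod1 with only the name changed. The sketch below generates such a manifest; the pod name `read-pod2` and the helper `pod_manifest` are assumptions for illustration, and everything else mirrors the read-pod1 definition.

```shell
#!/usr/bin/env bash
# Sketch: print a Pod manifest that mounts an existing PVC into nginx's web root.
# The pod name read-pod2 used below is a hypothetical example.

pod_manifest() {
  local name="$1" claim="$2"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
  namespace: newnfs
spec:
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: nginx-html
          mountPath: /usr/share/nginx/html
  volumes:
    - name: nginx-html
      persistentVolumeClaim:
        claimName: ${claim}
EOF
}

# Only apply to the cluster when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  pod_manifest read-pod2 storage-pvc | kubectl apply -f -
fi
```

Because both pods mount the same claim, requesting the new pod's IP should return the same index.html that read-pod1 serves.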