一:背景

1. 讲故事

分享了几篇崩溃,这一篇跟大家分享一例内存暴涨,训练营里有位学员朋友找到我,说他们的软件存在内存暴涨,让我帮忙看下怎么回事,dump也抓到了。

二:内存暴涨分析

1. 内存真的暴涨吗

一直都给训练营里的学员灌输一个思想,就是不要相信任何人说的话,而应该是以数据说话,由于是 linux dump,可以使用 !maddress -summary 命令观察。

C#

0:000> !maddress -summary

+-------------------------------------------------------------------------+

| Memory Type | Count | Size | Size (bytes) |

+-------------------------------------------------------------------------+

| GCHeap | 12 | 2.39gb | 2,565,107,712 |

| Stack | 32 | 237.33mb | 248,860,672 |

| Image | 1,380 | 200.21mb | 209,934,848 |

| PAGE_READWRITE | 83 | 121.45mb | 127,348,736 |

| LowFrequencyHeap | 208 | 13.89mb | 14,561,280 |

| HighFrequencyHeap | 146 | 9.10mb | 9,539,584 |

| LoaderCodeHeap | 9 | 9.00mb | 9,437,184 |

| HostCodeHeap | 6 | 360.00kb | 368,640 |

| ResolveHeap | 1 | 348.00kb | 356,352 |

| DispatchHeap | 1 | 196.00kb | 200,704 |

| PAGE_READONLY | 84 | 175.50kb | 179,712 |

| CacheEntryHeap | 2 | 100.00kb | 102,400 |

| IndirectionCellHeap | 2 | 88.00kb | 90,112 |

| LookupHeap | 2 | 80.00kb | 81,920 |

| StubHeap | 1 | 12.00kb | 12,288 |

| PAGE_EXECUTE_WRITECOPY | 2 | 8.00kb | 8,192 |

| PAGE_EXECUTE_READ | 1 | 4.00kb | 4,096 |

+-------------------------------------------------------------------------+

| [TOTAL] | 1,972 | 2.97gb | 3,186,194,432 |

+-------------------------------------------------------------------------+ 从卦象看,总计 2.97G 的总内存,托管堆就吃了 2.39G,也就表示当前存在托管内存泄露,接下来的关注点就是托管堆了。

2. 托管堆暴涨分析

要想观察托管堆中到底有什么,可以使用 !dumpheap -stat 观察详情,输出如下:

C#

0:000> !dumpheap -stat

Statistics:

MT Count TotalSize Class Name

...

7f5640cb1168 115,010 71,585,040 System.Collections.Generic.Dictionary<System.String, Newtonsoft.Json.Linq.JToken>+Entry[]

7f5640ae64f8 629,539 75,544,680 xxx.TraceContext+TraceScope

7f5640937348 2,100,061 134,403,904 Newtonsoft.Json.Linq.JValue

7f563b060f90 1,945,658 205,832,084 System.String

7f5640c8d660 2,118,456 220,319,424 Newtonsoft.Json.Linq.JProperty

7f563b0614c0 101,757 1,047,579,498 System.Byte[]

Total 18,591,667 objects, 2,539,831,376 bytes从卦中看托管堆中有 10w 个 System.Byte[] ,大概吃了一半的托管堆内存,从经验上来说,这里面肯定有不少大的 Byte[],接下来做个简单的过滤,发现有2.8w 的 size=0n32792 的 Byte[] 数组,简化后如下:

C#

0:000> !dumpheap -mt 7f563b0614c0 -min 0x8018 -max 0x8018

Address MT Size

7f552fc394a0 7f563b0614c0 32,792

7f552fc48858 7f563b0614c0 32,792

7f552fc52590 7f563b0614c0 32,792

7f552fc5e500 7f563b0614c0 32,792

7f552fc77fa0 7f563b0614c0 32,792

7f552fc834d0 7f563b0614c0 32,792

7f552fc8b718 7f563b0614c0 32,792

7f552fc93730 7f563b0614c0 32,792

...

7f5623f3f9a0 7f563b0614c0 32,792

7f5623f912e0 7f563b0614c0 32,792

7f5623f9b0a8 7f563b0614c0 32,792

7f5623fa5ee0 7f563b0614c0 32,792

Statistics:

MT Count TotalSize Class Name

7f563b0614c0 28,563 936,637,896 System.Byte[]

Total 28,563 objects, 936,637,896 bytes那为什么有 2.8w 的 byte[] 得不到GC回收呢?随机抽几个使用 !gcroot 命令观察,参考如下:

C#

0:000> !gcroot 7f55c7a6cf90

r15:

-> 7f5567484b08 System.Threading.ThreadPoolWorkQueueThreadLocals

-> 7f5567484b40 System.Threading.Thread

-> 7f55bb5d7d58 System.Threading.ExecutionContext

-> 7f55bb5d7ce8 System.Threading.AsyncLocalValueMap+MultiElementAsyncLocalValueMap

-> 7f55bb5d7d00 System.Collections.Generic.KeyValuePair<System.Threading.IAsyncLocal, System.Object>[]

-> 7f55bb5d7b98 xxxt+TraceScope

-> 7f555658d478 Microsoft.Extensions.DependencyInjection.ServiceLookup.ServiceProviderEngineScope

-> 7f56140ea038 Microsoft.Extensions.DependencyInjection.ServiceLookup.DynamicServiceProviderEngine

-> 7f56140ea0c8 Microsoft.Extensions.DependencyInjection.ServiceLookup.ServiceProviderEngineScope

-> 7f56140f7160 System.Collections.Generic.List<System.IDisposable>

-> 7f561412fd80 System.IDisposable[]

...

-> 7f561434b6f8 xxx.RequestTrace.AspNetTraceContext

...

-> 7f561406ef10 Microsoft.Extensions.Configuration.ConfigurationRoot

-> 7f561406ef30 Microsoft.Extensions.Configuration.ConfigurationReloadToken

-> 7f561406ef48 System.Threading.CancellationTokenSource

-> 7f56140f50a0 System.Threading.CancellationTokenSource+CallbackPartition[]

-> 7f56142b1d38 System.Threading.CancellationTokenSource+CallbackPartition

-> 7f55cfe26900 System.Threading.CancellationTokenSource+CallbackNode

-> 7f55a585a858 System.Threading.CancellationTokenSource+CallbackNode

...

-> 7f55a59b4848 System.Threading.CancellationTokenSource+CallbackNode

-> 7f55a59b4178 System.Threading.ExecutionContext

-> 7f55a59b4108 System.Threading.AsyncLocalValueMap+MultiElementAsyncLocalValueMap

-> 7f55a59b4120 System.Collections.Generic.KeyValuePair<System.Threading.IAsyncLocal, System.Object>[]

-> 7f55a59b3bb0 xxx.RequestTrace.AspNetTraceContext

-> 7f55a59b3708 Microsoft.AspNetCore.Http.DefaultHttpContext

-> 7f55a59b2308 Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http.Http1Connection

-> 7f55a59b4b68 Microsoft.AspNetCore.WebUtilities.FileBufferingReadStream

-> 7f55a5b219b0 System.Byte[] 通过卦中的输出,很快就发现有超多的 CallbackNode 节点,熟悉这个类的朋友应该知道,它是你通过 CancellationTokenSource.Token.Register 注册的回调函数,在底层的话可以观察首节点的 Id=17812 即可,参考如下:

C#

0:000> !do 7f55cfe26900

Name: System.Threading.CancellationTokenSource+CallbackNode

MethodTable: 00007f56405c1c18

EEClass: 00007f56405aa078

Size: 80(0x50) bytes

File: /usr/share/dotnet/shared/Microsoft.NETCore.App/3.1.32/System.Private.CoreLib.dll

Fields:

MT Field Offset Type VT Attr Value Name

00007f56405c2690 4000705 8 ...CallbackPartition 0 instance 00007f56142b1d38 Partition

00007f56405c1c18 4000706 10 ...urce+CallbackNode 0 instance 0000000000000000 Prev

00007f56405c1c18 4000707 18 ...urce+CallbackNode 0 instance 00007f55cc695010 Next

00007f563b05b468 4000708 40 System.Int64 1 instance 17812 Id

00007f56405b55b8 4000709 20 ...Private.CoreLib]] 0 instance 00007f55cfe268c0 Callback

00007f563a5dc7a8 400070a 28 System.Object 0 instance 00007f561406ef10 CallbackState

00007f563b0f8fe0 400070b 30 ....ExecutionContext 0 instance 00007f55cfe23e88 ExecutionContext

00007f563b0f91d0 400070c 38 ...ronizationContext 0 instance 0000000000000000 SynchronizationContext从卦中看到这个单链表已经有17812 个节点,正常情况下不会有w级别的注册函数,这是一种失控的状态。

3. 为什么Register失控了

要想找到这个答案,可以深挖 Callback 和 CallbackState 两个字段,参考如下:

C#

0:000> !dumpdelegate 00007f55cfe268c0

Target Method Name

00007f55cfe26788 00007f5640ae1040 xxx.ConfigurationExtensions+<>c__DisplayClass18_0.<OnChange>g__Callback|0(System.Object)

0:000> !DumpObj /d 00007f561406ef10

Name: Microsoft.Extensions.Configuration.ConfigurationRoot

MethodTable: 00007f56407bd910

EEClass: 00007f564079f808

Size: 32(0x20) bytes

File: /data/apps/xxx/Microsoft.Extensions.Configuration.dll

Fields:

MT Field Offset Type VT Attr Value Name

00007f56407bd3a0 400000a 8 ...on.Abstractions]] 0 instance 00007f561406ece0 _providers

00007f56407be120 400000b 10 ...rationReloadToken 0 instance 00007f561406ef30 _changeToken

从卦象来看是和 IConfiguration 相关的变更令牌有关,比如对配置文件的监视,由于这些都是底层的东西,看样子是高层使用者用的不对。

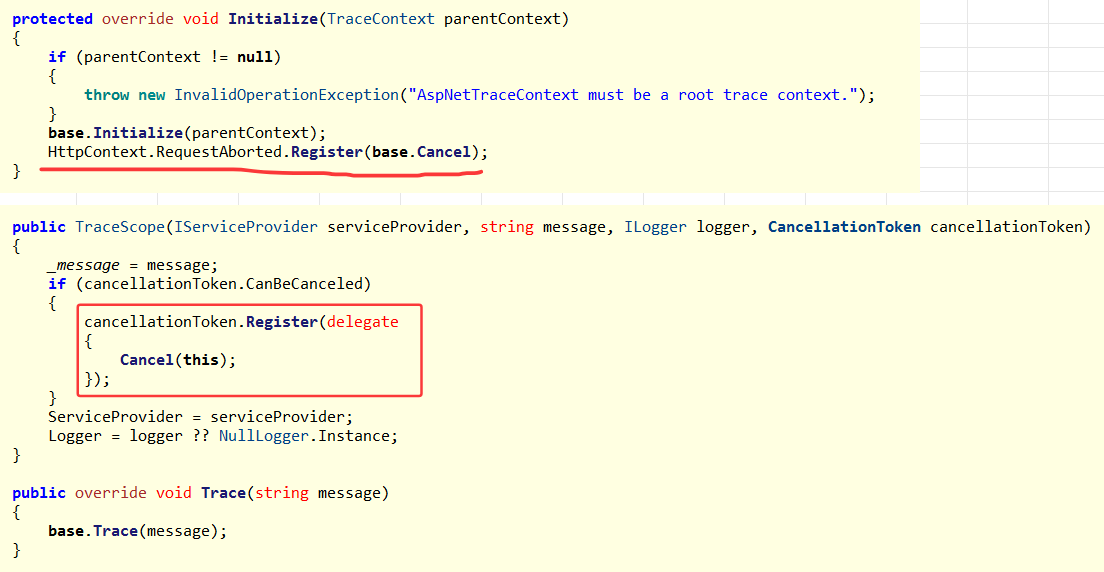

那到底是哪里的不对呢?一时也没搞清楚,接下来回头观察引用链,发现有用户代码 TraceScope 和 AspNetTraceContext,赶紧研究相关代码,发现有相关的 Register 操作。

并且在托管堆上也发现了3w多的 AspNetTraceContext 和 62w 的TraceScope,这也是一种失控状态,输出如下:

C#

...

7f5640af9a70 31,949 4,345,064 xxx.RequestTrace.AspNetTraceContext

...

7f5640ae64f8 629,539 75,544,680 xxx.TraceContext+TraceScope

...

7f563b0614c0 101,757 1,047,579,498 System.Byte[]最后发现它是一个 HttpTrace的中间件,公司的架构组做的,然后就是告诉朋友重点关注对 AspNetTraceContext 和 TraceScope 相关的注册代码,或者直接将这个中间件剔除,观察下效果。

三:总结

这篇文章需要你对 CancellationToken 和 IChangeToken 有一定的了解,调试工作者难呀,不管是开源的还是非开源的,若要解决问题,需要对这些东西的底层有一个较微观的认识。。。否则如何给出指导意见呢!