Ceph Mimic deployment

- I. Deploying Ceph Mimic
- 1. Environment planning
- 2. Base system preparation
- 2.1 Disable the firewall and SELinux
- 2.2 Synchronize time on all hosts
- 2.3 Set up passwordless SSH between all hosts
- 2.4 Add host name resolution on all hosts
- 3. Configure the Ceph package repositories
- 4. Install the ceph-deploy tool
- 5. Initialize the Ceph cluster
- 6. Install Ceph packages on all cluster nodes
- 7. Install ceph-common on the client
- 8. Create the Ceph monitor (MON) component
- 8.1 Add the public network setting to the configuration file
- 8.2 Create the MON service on node01
- 8.2 Sync the configuration to all cluster nodes
- 8.3 Add MON services on node02 and node03 to avoid a MON single point of failure
- 8.4 Check the cluster status
- 9. Create the mgr service
- 9.1 Create the mgr on node01
- 9.2 Add more mgr daemons
- 9.3 Check the cluster status again
- 10. Add OSDs
- 10.1 Initialize (zap) the disks
- 10.2 Add the OSD services
- 10.3 Check the cluster status again
- 11. Add the dashboard plugin
- 11.1 Find the node running the active mgr
- 11.2 Enable the dashboard plugin
- 11.3 Create the certificate required by the dashboard
- 11.4 Set the dashboard address
- 11.5 Restart the dashboard plugin
- 11.6 Set the user name and password

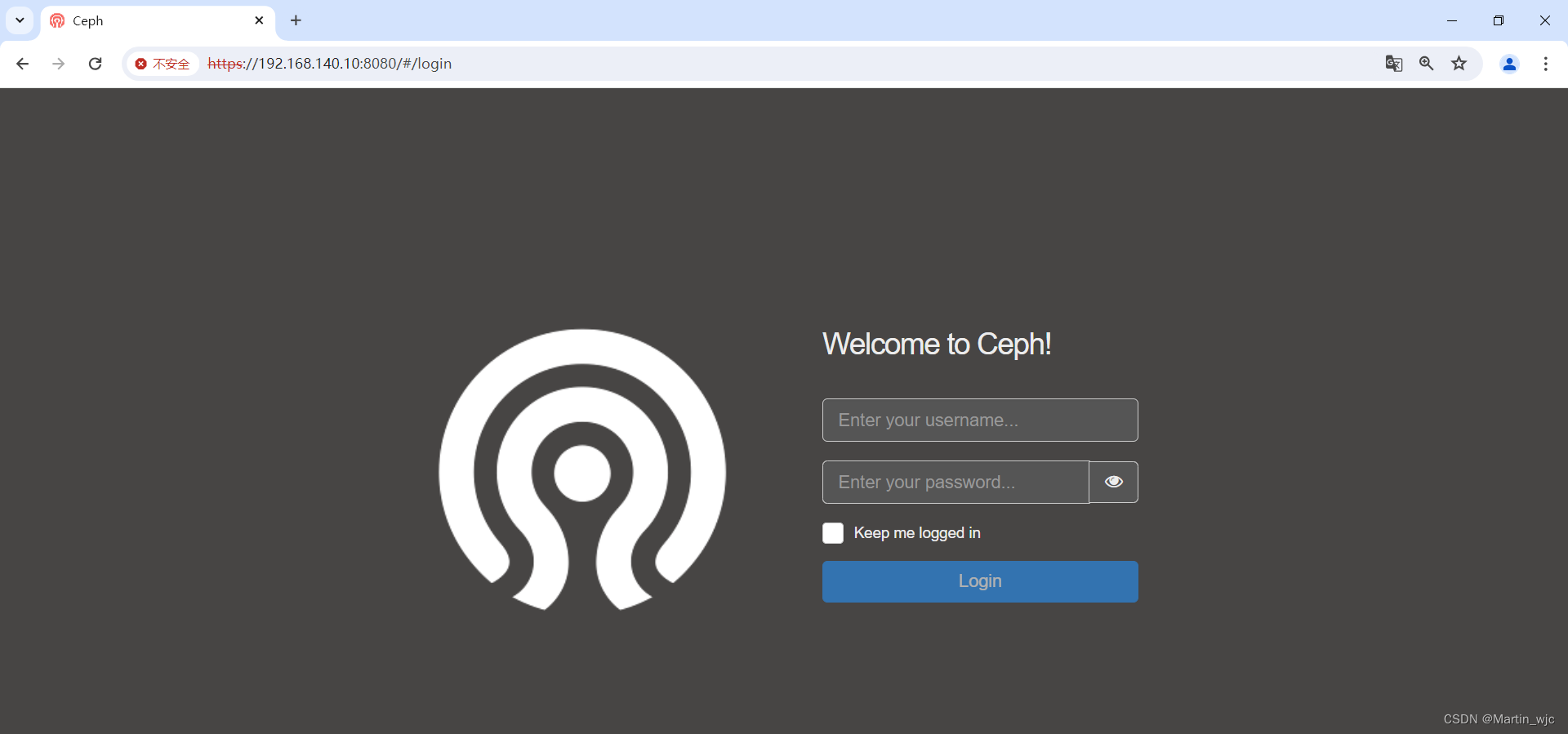

- 11.7 Access the web UI

I. Deploying Ceph Mimic

1. Environment planning

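| IP address | Hostname | Role | Data disk |
| --- | --- | --- | --- |
| 192.168.140.10 | node01 | Ceph cluster node, ceph-deploy admin node | /dev/sdb |
| 192.168.140.11 | node02 | Ceph cluster node | /dev/sdb |
| 192.168.140.12 | node03 | Ceph cluster node | /dev/sdb |
| 192.168.140.13 | app | Application server (Ceph client) | none |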

2. Base system preparation

2.1 Disable the firewall and SELinux
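The original gives no commands for this step. The sketch below targets CentOS 7 and assumes firewalld is the active firewall and SELinux is configured in /etc/selinux/config (the CentOS 7 defaults). It changes the live system only when called with --apply, so you can read it safely first. Run it on every host.

```shell
#!/usr/bin/env bash
# Sketch: disable firewalld and SELinux on a CentOS 7 host.
# Assumption: firewalld is the firewall in use and /etc/selinux/config
# holds the persistent SELinux mode (CentOS 7 defaults).
set -eu

# Rewrite the SELINUX= line of the given config file (default: the real one)
# so SELinux stays disabled after the next reboot. SELINUXTYPE= is untouched.
disable_selinux_config() {
    local conf="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Only touch the live system when explicitly asked to.
if [ "${1:-}" = "--apply" ]; then
    systemctl stop firewalld
    systemctl disable firewalld
    setenforce 0 || true   # permissive right now; fails harmlessly if SELinux is already disabled
    disable_selinux_config
fi
```

Usage: bash prep-node.sh --apply (the file name is arbitrary). setenforce 0 takes effect immediately. The config-file change makes SELinux fully disabled from the next reboot.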

2.2 Synchronize time on all hosts
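The commands in this section run ntpdate once and add a cron job on node01, and every host needs the same setup. The sketch below does both steps. It leaves the crontab unchanged if the job is already there, so it is safe to re-run. It assumes the ntpdate package is installed and reuses the NTP server 120.25.115.20 and the 30-minute interval from this guide.

```shell
#!/usr/bin/env bash
# Sketch: sync the clock once and install the ntpdate cron job idempotently.
# NTP server and interval are the ones used in this guide; replace as needed.
set -eu

NTP_JOB='*/30 * * * * /usr/sbin/ntpdate 120.25.115.20 &> /dev/null'

# Read a crontab on stdin and print it with NTP_JOB appended if it is missing.
ensure_ntp_job() {
    local current
    current="$(cat)"
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    if ! printf '%s\n' "$current" | grep -qxF "$NTP_JOB"; then
        printf '%s\n' "$NTP_JOB"
    fi
}

if [ "${1:-}" = "--apply" ]; then
    /usr/sbin/ntpdate 120.25.115.20
    # crontab -l exits non-zero when the user has no crontab yet
    { crontab -l 2>/dev/null || true; } | ensure_ntp_job | crontab -
fi
```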

bash

[root@node01 ~]# ntpdate 120.25.115.20

14 Jun 11:43:21 ntpdate[1300]: step time server 120.25.115.20 offset -86398.543174 sec

[root@node01 ~]# crontab -l

*/30 * * * * /usr/sbin/ntpdate 120.25.115.20 &> /dev/null

2.3 Set up passwordless SSH between all hosts

bash

[root@node01 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:I56kRZucE7YCQAOM4RGGOuYuiBj4tgo/1CI9IXqaDEw root@node01

The key's randomart image is:

+---[RSA 2048]----+

|XB. |

|=oo |

|... + |

|+E.. + * |

|B+ o. @ S |

|*o* .* + . |

|OO o. o |

|O++ |

|oooo |

+----[SHA256]-----+

[root@node01 ~]# mv /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys

[root@node01 ~]# scp -r /root/.ssh/ root@192.168.140.11:/root/

[root@node01 ~]# scp -r /root/.ssh/ root@192.168.140.12:/root/

[root@node01 ~]# scp -r /root/.ssh/ root@192.168.140.13:/root/
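The approach above copies all of /root/.ssh to every host, including the private key id_rsa. A more conventional alternative is ssh-copy-id, which appends only the public key to each host's authorized_keys. The sketch below assumes root can still log in with a password on the targets, so ssh-copy-id will ask for each host's password once. The host list is the environment plan from section 1. DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: distribute node01's public key with ssh-copy-id instead of copying
# the whole /root/.ssh directory. Assumes password login works for root.
set -eu

HOSTS="192.168.140.10 192.168.140.11 192.168.140.12 192.168.140.13"

# Print (DRY_RUN=1) or execute a command.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

push_keys() {
    local ip
    # Create a key pair with an empty passphrase only if none exists yet.
    [ -f /root/.ssh/id_rsa ] || run ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa
    for ip in $HOSTS; do
        run ssh-copy-id -i /root/.ssh/id_rsa.pub "root@$ip"
    done
}

if [ "${1:-}" = "--apply" ]; then
    push_keys
fi
```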

bash

[root@node01 ~]# for i in 10 11 12 13; do ssh root@192.168.140.$i hostname; ssh root@192.168.140.$i date; done

node01

Fri Jun 14 11:49:57 CST 2024

node02

Fri Jun 14 11:49:57 CST 2024

node03

Fri Jun 14 11:49:58 CST 2024

app

Fri Jun 14 11:49:58 CST 2024

2.4 Add host name resolution on all hosts

bash

[root@node01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.140.10 node01

192.168.140.11 node02

192.168.140.12 node03

192.168.140.13 app

[root@node01 ~]# for i in 10 11 12 13

> do

> scp /etc/hosts root@192.168.140.$i:/etc/hosts

> done

hosts 100% 244 411.0KB/s 00:00

hosts 100% 244 51.2KB/s 00:00

hosts 100% 244 58.0KB/s 00:00

hosts 100% 244 58.3KB/s 00:00
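Copying /etc/hosts with scp replaces the whole file on each target. If a host has its own entries that must survive, you can instead run the sketch below on each host. It appends only the missing lines. The IP/hostname pairs are the ones from section 1, and the --apply switch is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: add the cluster's name-resolution entries to a hosts file only
# when they are missing, so re-running it never duplicates lines.
set -eu

ENTRIES="192.168.140.10 node01
192.168.140.11 node02
192.168.140.12 node03
192.168.140.13 app"

ensure_hosts_entries() {
    local file="${1:-/etc/hosts}"
    printf '%s\n' "$ENTRIES" | while IFS= read -r line; do
        # Append the exact line unless it is already present.
        grep -qxF "$line" "$file" || printf '%s\n' "$line" >> "$file"
    done
}

if [ "${1:-}" = "--apply" ]; then
    ensure_hosts_entries /etc/hosts
fi
```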

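3. Configure the Ceph package repositories

The repositories are needed on every host: the Ceph packages are installed on all cluster nodes (section 6), and ceph-common is installed on the client (section 7).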

bash

[root@node01 ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@node01 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

bash

[root@node01 ~]# cat /etc/yum.repos.d/ceph.repo

[ceph]

name=ceph

baseurl=http://mirrors.aliyun.com/ceph/rpm-mimic/el7/x86_64/

enabled=1

gpgcheck=0

priority=1

[ceph-noarch]

name=cephnoarch

baseurl=http://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch/

enabled=1

gpgcheck=0

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-mimic/el7/SRPMS

enabled=1

gpgcheck=0

priority=1
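The sketch below writes the repo definition shown above with a heredoc, so you can create the same file on each node without an editor. It keeps the Aliyun Mimic mirror and gpgcheck=0 from the original; note that gpgcheck=0 skips package signature checks. The priority= lines take effect only when the yum-plugin-priorities package is installed.

```shell
#!/usr/bin/env bash
# Sketch: write /etc/yum.repos.d/ceph.repo (or another path) with the
# Aliyun mimic mirror used in this guide. gpgcheck=0 mirrors the original.
set -eu

write_ceph_repo() {
    local dest="${1:-/etc/yum.repos.d/ceph.repo}"
    local base="http://mirrors.aliyun.com/ceph/rpm-mimic/el7"
    cat > "$dest" <<EOF
[ceph]
name=ceph
baseurl=$base/x86_64/
enabled=1
gpgcheck=0
priority=1

[ceph-noarch]
name=cephnoarch
baseurl=$base/noarch/
enabled=1
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=$base/SRPMS
enabled=1
gpgcheck=0
priority=1
EOF
}

if [ "${1:-}" = "--apply" ]; then
    write_ceph_repo
    yum clean all && yum makecache
fi
```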

bash

[root@node01 ~]# yum update

[root@node01 ~]# reboot

4. Install the ceph-deploy tool

bash

[root@node01 ~]# yum install -y ceph-deploy

5. Initialize the Ceph cluster

bash

[root@node01 ~]# mkdir /etc/ceph

[root@node01 ~]# cd /etc/ceph

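If the initialization fails with "ImportError: No module named pkg_resources" (pkg_resources is part of setuptools), install python2-pip and run it again:

[root@node01 ceph]# yum install -y python2-pip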

bash

[root@node01 ceph]# ceph-deploy new node01

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy new node01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] func : <function new at 0x7f671c856ed8>

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f671bfd17a0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['node01']

[ceph_deploy.cli][INFO ] public_network : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[node01][DEBUG ] connected to host: node01

[node01][DEBUG ] detect platform information from remote host

[node01][DEBUG ] detect machine type

[node01][DEBUG ] find the location of an executable

[node01][INFO ] Running command: /usr/sbin/ip link show

[node01][INFO ] Running command: /usr/sbin/ip addr show

[node01][DEBUG ] IP addresses found: [u'192.168.140.10']

[ceph_deploy.new][DEBUG ] Resolving host node01

[ceph_deploy.new][DEBUG ] Monitor node01 at 192.168.140.10

[ceph_deploy.new][DEBUG ] Monitor initial members are ['node01']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['192.168.140.10']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

bash

[root@node01 ceph]# ls

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring

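ceph.conf: the cluster configuration file

ceph-deploy-ceph.log: the ceph-deploy log file

ceph.mon.keyring: the monitor keyring, the authentication key the Ceph MON daemons use when they communicate

6. Install Ceph packages on all cluster nodes

Run the installation on node01, node02 and node03 (only node01 is shown):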

bash

[root@node01 ceph]# yum install -y ceph ceph-radosgw

[root@node01 ceph]# ceph -v

ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)

7. Install ceph-common on the client

bash

[root@app ~]# yum install -y ceph-common

8. Create the Ceph monitor (MON) component

8.1 Add the public network setting to the configuration file
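ceph-deploy new writes only a [global] section, so the setting can simply be appended to the end of ceph.conf in the working directory (/etc/ceph here). The sketch below appends it only if it is missing. It relies on the fact that Ceph accepts "public network" and "public_network" as the same option. The resulting file is shown after it.

```shell
#!/usr/bin/env bash
# Sketch: append "public network = <cidr>" to ceph.conf unless present.
# Appending is only correct while [global] is the last section, which holds
# for the file generated by `ceph-deploy new`.
set -eu

ensure_public_network() {
    local conf="$1" cidr="$2"
    if ! grep -Eq '^public[ _]network[[:space:]]*=' "$conf"; then
        printf 'public network = %s\n' "$cidr" >> "$conf"
    fi
}

if [ "${1:-}" = "--apply" ]; then
    ensure_public_network /etc/ceph/ceph.conf 192.168.140.0/24
fi
```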

bash

[root@node01 ceph]# cat ceph.conf

[global]

fsid = e2010562-0bae-4999-9247-4017f875acc8

mon_initial_members = node01

mon_host = 192.168.140.10

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public network = 192.168.140.0/24

8.2 Create the MON service on node01

bash

[root@node01 ceph]# ceph-deploy mon create-initial

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mon create-initial

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create-initial

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f45d3cec368>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mon at 0x7f45d3d3c500>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts node01

[ceph_deploy.mon][DEBUG ] detecting platform for host node01 ...

[node01][DEBUG ] connected to host: node01

[node01][DEBUG ] detect platform information from remote host

[node01][DEBUG ] detect machine type

[node01][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.9.2009 Core

[node01][DEBUG ] determining if provided host has same hostname in remote

[node01][DEBUG ] get remote short hostname

[node01][DEBUG ] deploying mon to node01

[node01][DEBUG ] get remote short hostname

[node01][DEBUG ] remote hostname: node01

[node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node01][DEBUG ] create the mon path if it does not exist

[node01][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-node01/done

[node01][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-node01/done

[node01][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-node01.mon.keyring

[node01][DEBUG ] create the monitor keyring file

[node01][INFO ] Running command: ceph-mon --cluster ceph --mkfs -i node01 --keyring /var/lib/ceph/tmp/ceph-node01.mon.keyring --setuser 167 --setgroup 167

[node01][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-node01.mon.keyring

[node01][DEBUG ] create a done file to avoid re-doing the mon deployment

[node01][DEBUG ] create the init path if it does not exist

[node01][INFO ] Running command: systemctl enable ceph.target

[node01][INFO ] Running command: systemctl enable ceph-mon@node01

[node01][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@node01.service to /usr/lib/systemd/system/ceph-mon@.service.

[node01][INFO ] Running command: systemctl start ceph-mon@node01

[node01][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node01.asok mon_status

[node01][DEBUG ] ********************************************************************************

[node01][DEBUG ] status for monitor: mon.node01

[node01][DEBUG ] {

[node01][DEBUG ] "election_epoch": 3,

[node01][DEBUG ] "extra_probe_peers": [],

[node01][DEBUG ] "feature_map": {

[node01][DEBUG ] "mon": [

[node01][DEBUG ] {

[node01][DEBUG ] "features": "0x3ffddff8ffacfffb",

[node01][DEBUG ] "num": 1,

[node01][DEBUG ] "release": "luminous"

[node01][DEBUG ] }

[node01][DEBUG ] ]

[node01][DEBUG ] },

[node01][DEBUG ] "features": {

[node01][DEBUG ] "quorum_con": "4611087854031667195",

[node01][DEBUG ] "quorum_mon": [

[node01][DEBUG ] "kraken",

[node01][DEBUG ] "luminous",

[node01][DEBUG ] "mimic",

[node01][DEBUG ] "osdmap-prune"

[node01][DEBUG ] ],

[node01][DEBUG ] "required_con": "144115738102218752",

[node01][DEBUG ] "required_mon": [

[node01][DEBUG ] "kraken",

[node01][DEBUG ] "luminous",

[node01][DEBUG ] "mimic",

[node01][DEBUG ] "osdmap-prune"

[node01][DEBUG ] ]

[node01][DEBUG ] },

[node01][DEBUG ] "monmap": {

[node01][DEBUG ] "created": "2024-06-14 14:33:44.291070",

[node01][DEBUG ] "epoch": 1,

[node01][DEBUG ] "features": {

[node01][DEBUG ] "optional": [],

[node01][DEBUG ] "persistent": [

[node01][DEBUG ] "kraken",

[node01][DEBUG ] "luminous",

[node01][DEBUG ] "mimic",

[node01][DEBUG ] "osdmap-prune"

[node01][DEBUG ] ]

[node01][DEBUG ] },

[node01][DEBUG ] "fsid": "e2010562-0bae-4999-9247-4017f875acc8",

[node01][DEBUG ] "modified": "2024-06-14 14:33:44.291070",

[node01][DEBUG ] "mons": [

[node01][DEBUG ] {

[node01][DEBUG ] "addr": "192.168.140.10:6789/0",

[node01][DEBUG ] "name": "node01",

[node01][DEBUG ] "public_addr": "192.168.140.10:6789/0",

[node01][DEBUG ] "rank": 0

[node01][DEBUG ] }

[node01][DEBUG ] ]

[node01][DEBUG ] },

[node01][DEBUG ] "name": "node01",

[node01][DEBUG ] "outside_quorum": [],

[node01][DEBUG ] "quorum": [

[node01][DEBUG ] 0

[node01][DEBUG ] ],

[node01][DEBUG ] "rank": 0,

[node01][DEBUG ] "state": "leader",

[node01][DEBUG ] "sync_provider": []

[node01][DEBUG ] }

[node01][DEBUG ] ********************************************************************************

[node01][INFO ] monitor: mon.node01 is running

[node01][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node01.asok mon_status

[ceph_deploy.mon][INFO ] processing monitor mon.node01

[node01][DEBUG ] connected to host: node01

[node01][DEBUG ] detect platform information from remote host

[node01][DEBUG ] detect machine type

[node01][DEBUG ] find the location of an executable

[node01][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node01.asok mon_status

[ceph_deploy.mon][INFO ] mon.node01 monitor has reached quorum!

[ceph_deploy.mon][INFO ] all initial monitors are running and have formed quorum

[ceph_deploy.mon][INFO ] Running gatherkeys...

[ceph_deploy.gatherkeys][INFO ] Storing keys in temp directory /tmp/tmph2e00E

[node01][DEBUG ] connected to host: node01

[node01][DEBUG ] detect platform information from remote host

[node01][DEBUG ] detect machine type

[node01][DEBUG ] get remote short hostname

[node01][DEBUG ] fetch remote file

[node01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.node01.asok mon_status

[node01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node01/keyring auth get client.admin

[node01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node01/keyring auth get client.bootstrap-mds

[node01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node01/keyring auth get client.bootstrap-mgr

[node01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node01/keyring auth get client.bootstrap-osd

[node01][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node01/keyring auth get client.bootstrap-rgw

[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring

[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmph2e00E

[root@node01 ceph]#

bash

[root@node01 ceph]# netstat -tunlp | grep ceph-mon

tcp 0 0 192.168.140.10:6789 0.0.0.0:* LISTEN 8522/ceph-mon

bash

[root@node01 ceph]# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph-deploy-ceph.log rbdmap

ceph.bootstrap-mgr.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph.mon.keyring

bash

[root@node01 ceph]# ceph health

HEALTH_OK

8.2 Sync the configuration to all cluster nodes

bash

[root@node01 ceph]# ceph-deploy admin node01 node02 node03

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy admin node01 node02 node03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fbc339dd7e8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['node01', 'node02', 'node03']

[ceph_deploy.cli][INFO ] func : <function admin at 0x7fbc346f3320>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node01

[node01][DEBUG ] connected to host: node01

[node01][DEBUG ] detect platform information from remote host

[node01][DEBUG ] detect machine type

[node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node02

The authenticity of host 'node02 (192.168.140.11)' can't be established.

ECDSA key fingerprint is SHA256:zyaTdmF7ziBAGyqkd/uOp+BnislJYeB9cr5rg0Mh488.

ECDSA key fingerprint is MD5:3d:95:e4:7f:f0:ca:e6:0d:db:24:15:49:da:50:01:d4.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node02' (ECDSA) to the list of known hosts.

[node02][DEBUG ] connected to host: node02

[node02][DEBUG ] detect platform information from remote host

[node02][DEBUG ] detect machine type

[node02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node03

The authenticity of host 'node03 (192.168.140.12)' can't be established.

ECDSA key fingerprint is SHA256:cMuRX+kQHIlvZ74g4XfGisp4SpqQ14GmzD8fXk/7Rc0.

ECDSA key fingerprint is MD5:9b:1f:e0:95:ac:b8:ca:43:3c:49:9a:48:4f:e3:11:a5.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node03' (ECDSA) to the list of known hosts.

[node03][DEBUG ] connected to host: node03

[node03][DEBUG ] detect platform information from remote host

[node03][DEBUG ] detect machine type

[node03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

Check the result on one of the other nodes:

bash

[root@node02 ~]# ls /etc/ceph/

ceph.client.admin.keyring ceph.conf rbdmap tmp5ymlpm

8.3 Add MON services on node02 and node03 to avoid a MON single point of failure

bash

[root@node01 ceph]# ceph-deploy mon add node02

[root@node01 ceph]# ceph-deploy mon add node03

8.4 Check the cluster status

bash

[root@node01 ceph]# ceph -s

cluster:

id: e2010562-0bae-4999-9247-4017f875acc8

health: HEALTH_OK

services:

mon: 3 daemons, quorum node01,node02,node03

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

9. Create the mgr service

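Starting with the Luminous (L) release, Ceph includes the Ceph Manager Daemon, ceph-mgr for short.

It was introduced mainly to take load off ceph-mon. It handles part of the monitor's work, such as running modules (plugins) like the dashboard used in section 11, which makes the cluster easier to manage. Until an mgr is running, ceph -s reports "mgr: no daemons active", as in 8.4.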

9.1 Create the mgr on node01

bash

[root@node01 ceph]# ceph-deploy mgr create node01

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mgr create node01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('node01', 'node01')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f0a5c66ebd8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mgr at 0x7f0a5cf4f230>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts node01:node01

[node01][DEBUG ] connected to host: node01

[node01][DEBUG ] detect platform information from remote host

[node01][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to node01

[node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node01][WARNIN] mgr keyring does not exist yet, creating one

[node01][DEBUG ] create a keyring file

[node01][DEBUG ] create path recursively if it doesn't exist

[node01][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.node01 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-node01/keyring

[node01][INFO ] Running command: systemctl enable ceph-mgr@node01

[node01][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@node01.service to /usr/lib/systemd/system/ceph-mgr@.service.

[node01][INFO ] Running command: systemctl start ceph-mgr@node01

[node01][INFO ] Running command: systemctl enable ceph.target

[root@node01 ceph]#

[root@node01 ceph]# netstat -tunlp | grep ceph-mgr

tcp 0 0 192.168.140.10:6800 0.0.0.0:* LISTEN 9115/ceph-mgr

9.2 Add more mgr daemons

bash

[root@node01 ceph]# ceph-deploy mgr create node02

[root@node01 ceph]# ceph-deploy mgr create node03

9.3 Check the cluster status again

bash

[root@node01 ceph]# ceph -s

cluster:

id: e2010562-0bae-4999-9247-4017f875acc8

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 3 daemons, quorum node01,node02,node03

mgr: node01(active), standbys: node02, node03

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

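pgs:

The HEALTH_WARN is expected at this stage. The cluster has no OSDs yet, which is fewer than the default pool size (osd_pool_default_size = 3). The warning clears once the three OSDs are added in section 10.

10. Add OSDs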

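10.1 Initialize (zap) the disks

Zapping wipes the partition table and the start of the disk, so make sure /dev/sdb holds nothing you need.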

bash

[root@node01 ceph]# ceph-deploy disk zap node01 /dev/sdb

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk zap node01 /dev/sdb

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : zap

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fbbcf959878>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : node01

[ceph_deploy.cli][INFO ] func : <function disk at 0x7fbbcf994a28>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : ['/dev/sdb']

[ceph_deploy.osd][DEBUG ] zapping /dev/sdb on node01

[node01][DEBUG ] connected to host: node01

[node01][DEBUG ] detect platform information from remote host

[node01][DEBUG ] detect machine type

[node01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[node01][DEBUG ] zeroing last few blocks of device

[node01][DEBUG ] find the location of an executable

[node01][INFO ] Running command: /usr/sbin/ceph-volume lvm zap /dev/sdb

[node01][WARNIN] --> Zapping: /dev/sdb

[node01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[node01][WARNIN] Running command: /usr/bin/dd if=/dev/zero of=/dev/sdb bs=1M count=10 conv=fsync

[node01][WARNIN] stderr: 10+0 records in

[node01][WARNIN] 10+0 records out

[node01][WARNIN] 10485760 bytes (10 MB) copied

[node01][WARNIN] stderr: , 0.0304543 s, 344 MB/s

[node01][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdb>

bash

[root@node01 ceph]# ceph-deploy disk zap node02 /dev/sdb

[root@node01 ceph]# ceph-deploy disk zap node03 /dev/sdb

10.2 Add the OSD services

bash

[root@node01 ceph]# ceph-deploy osd create --data /dev/sdb node01

[root@node01 ceph]# ceph-deploy osd create --data /dev/sdb node02

[root@node01 ceph]# ceph-deploy osd create --data /dev/sdb node03
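The three zap commands in 10.1 and the three create commands above repeat one pattern per node, so they can be folded into a loop. The sketch below reuses exactly those ceph-deploy commands and is meant to be run from /etc/ceph on node01. The node names and /dev/sdb come from the environment plan. Zapping is destructive, so do a DRY_RUN=1 pass first to print the commands without running them.

```shell
#!/usr/bin/env bash
# Sketch: zap /dev/sdb and create one BlueStore OSD per node.
# Run from the ceph-deploy working directory (/etc/ceph on node01).
set -eu

NODES="node01 node02 node03"
DISK="/dev/sdb"

# Print (DRY_RUN=1) or execute a command.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_osds() {
    local node
    for node in $NODES; do
        run ceph-deploy disk zap "$node" "$DISK"        # wipes the disk
        run ceph-deploy osd create --data "$DISK" "$node"
    done
}

if [ "${1:-}" = "--apply" ]; then
    create_osds
fi
```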

Output of ceph-deploy osd create for node03:

bash

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create --data /dev/sdb node03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fbf998db998>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : node03

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0x7fbf999119b0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/sdb

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdb

[node03][DEBUG ] connected to host: node03

[node03][DEBUG ] detect platform information from remote host

[node03][DEBUG ] detect machine type

[node03][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.osd][DEBUG ] Deploying osd to node03

[node03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node03][WARNIN] osd keyring does not exist yet, creating one

[node03][DEBUG ] create a keyring file

[node03][DEBUG ] find the location of an executable

[node03][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdb

[node03][WARNIN] Running command: /bin/ceph-authtool --gen-print-key

[node03][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 33310a37-6e35-4f34-a8c6-543254bf0384

[node03][WARNIN] Running command: /usr/sbin/vgcreate --force --yes ceph-0cf70ab4-cab2-4492-9610-691efc7bc222 /dev/sdb

[node03][WARNIN] stdout: Physical volume "/dev/sdb" successfully created.

[node03][WARNIN] stdout: Volume group "ceph-0cf70ab4-cab2-4492-9610-691efc7bc222" successfully created

[node03][WARNIN] Running command: /usr/sbin/lvcreate --yes -l 100%FREE -n osd-block-33310a37-6e35-4f34-a8c6-543254bf0384 ceph-0cf70ab4-cab2-4492-9610-691efc7bc222

[node03][WARNIN] stdout: Logical volume "osd-block-33310a37-6e35-4f34-a8c6-543254bf0384" created.

[node03][WARNIN] Running command: /bin/ceph-authtool --gen-print-key

[node03][WARNIN] Running command: /bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-2

[node03][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/ceph-0cf70ab4-cab2-4492-9610-691efc7bc222/osd-block-33310a37-6e35-4f34-a8c6-543254bf0384

[node03][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-2

[node03][WARNIN] Running command: /bin/ln -s /dev/ceph-0cf70ab4-cab2-4492-9610-691efc7bc222/osd-block-33310a37-6e35-4f34-a8c6-543254bf0384 /var/lib/ceph/osd/ceph-2/block

[node03][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-2/activate.monmap

[node03][WARNIN] stderr: got monmap epoch 3

[node03][WARNIN] Running command: /bin/ceph-authtool /var/lib/ceph/osd/ceph-2/keyring --create-keyring --name osd.2 --add-key AQDc7Wtm/uzoEhAAJfd9GdY0QxFhzRgalw5UWg==

[node03][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-2/keyring

[node03][WARNIN] stdout: added entity osd.2 auth auth(auid = 18446744073709551615 key=AQDc7Wtm/uzoEhAAJfd9GdY0QxFhzRgalw5UWg== with 0 caps)

[node03][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/keyring

[node03][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/

[node03][WARNIN] Running command: /bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 2 --monmap /var/lib/ceph/osd/ceph-2/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-2/ --osd-uuid 33310a37-6e35-4f34-a8c6-543254bf0384 --setuser ceph --setgroup ceph

[node03][WARNIN] --> ceph-volume lvm prepare successful for: /dev/sdb

[node03][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2

[node03][WARNIN] Running command: /bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-0cf70ab4-cab2-4492-9610-691efc7bc222/osd-block-33310a37-6e35-4f34-a8c6-543254bf0384 --path /var/lib/ceph/osd/ceph-2 --no-mon-config

[node03][WARNIN] Running command: /bin/ln -snf /dev/ceph-0cf70ab4-cab2-4492-9610-691efc7bc222/osd-block-33310a37-6e35-4f34-a8c6-543254bf0384 /var/lib/ceph/osd/ceph-2/block

[node03][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-2/block

[node03][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-2

[node03][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2

[node03][WARNIN] Running command: /bin/systemctl enable ceph-volume@lvm-2-33310a37-6e35-4f34-a8c6-543254bf0384

[node03][WARNIN] stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-2-33310a37-6e35-4f34-a8c6-543254bf0384.service to /usr/lib/systemd/system/ceph-volume@.service.

[node03][WARNIN] Running command: /bin/systemctl enable --runtime ceph-osd@2

[node03][WARNIN] stderr: Created symlink from /run/systemd/system/ceph-osd.target.wants/ceph-osd@2.service to /usr/lib/systemd/system/ceph-osd@.service.

[node03][WARNIN] Running command: /bin/systemctl start ceph-osd@2

[node03][WARNIN] --> ceph-volume lvm activate successful for osd ID: 2

[node03][WARNIN] --> ceph-volume lvm create successful for: /dev/sdb

[node03][INFO ] checking OSD status...

[node03][DEBUG ] find the location of an executable

[node03][INFO ] Running command: /bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host node03 is now ready for osd use.
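After ceph-deploy returns, the OSDs can take a short while to start and report in. The sketch below polls ceph health until it reports HEALTH_OK or a timeout expires. Two details are illustrative choices, not part of the original procedure: the CEPH_CMD variable, which lets a stub stand in for testing, and the 300-second default timeout.

```shell
#!/usr/bin/env bash
# Sketch: wait until `ceph health` reports HEALTH_OK, or give up.
set -eu

CEPH_CMD="${CEPH_CMD:-ceph}"

# Args: timeout in seconds (default 300), poll interval (default 5).
wait_for_health_ok() {
    local timeout="${1:-300}" interval="${2:-5}" waited=0 status
    while :; do
        status="$($CEPH_CMD health 2>/dev/null || true)"
        case "$status" in
            HEALTH_OK*) echo "cluster healthy after ${waited}s"; return 0 ;;
        esac
        if [ "$waited" -ge "$timeout" ]; then
            echo "timed out; last status: $status" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
}

if [ "${1:-}" = "--apply" ]; then
    wait_for_health_ok 300 5
fi
```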

bash

[root@node01 ceph]# netstat -tunlp | grep osd

tcp 0 0 192.168.140.10:6801 0.0.0.0:* LISTEN 9705/ceph-osd

tcp 0 0 192.168.140.10:6802 0.0.0.0:* LISTEN 9705/ceph-osd

tcp 0 0 192.168.140.10:6803 0.0.0.0:* LISTEN 9705/ceph-osd

tcp 0 0 192.168.140.10:6804 0.0.0.0:* LISTEN 9705/ceph-osd

10.3 Check the cluster status again

bash

[root@node01 ceph]# ceph -s

cluster:

id: e2010562-0bae-4999-9247-4017f875acc8

health: HEALTH_OK

services:

mon: 3 daemons, quorum node01,node02,node03

mgr: node01(active), standbys: node02, node03

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 57 GiB / 60 GiB avail

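pgs:

Procedure for expanding the cluster (adding OSDs):

1. Prepare the base system environment on the new node (as in section 2)
2. Install the ceph and ceph-radosgw packages on the new node
3. Sync the configuration file to the new node
4. Zap the disk and add the OSD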
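Steps 2 to 4 map onto commands already used in this guide. The sketch below runs them for one new node from /etc/ceph on node01. "node04" in the usage line is a hypothetical host name. The sketch assumes step 1 is already done on the new node, including SSH keys, /etc/hosts and the ceph.repo from section 3. DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: add one new OSD node (steps 2-4). Assumes the node is already
# prepared (step 1) and has the ceph.repo from section 3.
set -eu

# Print (DRY_RUN=1) or execute a command.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

scale_out() {
    local node="$1" disk="$2"
    run ssh "root@$node" yum install -y ceph ceph-radosgw   # step 2
    run ceph-deploy admin "$node"                            # step 3
    run ceph-deploy disk zap "$node" "$disk"                 # step 4
    run ceph-deploy osd create --data "$disk" "$node"
}

if [ "${1:-}" = "--apply" ]; then
    scale_out "${2:?node name}" "${3:-/dev/sdb}"
fi
```

Usage: DRY_RUN=1 bash scale-out.sh --apply node04 /dev/sdb prints the four commands. Drop DRY_RUN=1 to run them.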

11. Add the dashboard plugin

11.1 Find the node running the active mgr
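To get the active mgr inside a script, you can read the "mgr:" line of the ceph -s output in this section. The sketch below pulls the name out of that line. Parsing human-readable output is fragile, so if jq is available, ceph mgr dump or ceph -s -f json is a sturdier source.

```shell
#!/usr/bin/env bash
# Sketch: print the active mgr name from `ceph -s` text read on stdin,
# based on the line format "mgr: node01(active), standbys: node02, node03".
set -eu

active_mgr() {
    sed -n 's/^[[:space:]]*mgr:[[:space:]]*\([^(,[:space:]]*\)(active).*/\1/p'
}

if [ "${1:-}" = "--apply" ]; then
    ceph -s | active_mgr
fi
```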

bash

[root@node01 ceph]# ceph -s

cluster:

id: e2010562-0bae-4999-9247-4017f875acc8

health: HEALTH_OK

services:

mon: 3 daemons, quorum node01,node02,node03

mgr: node01(active), standbys: node02, node03

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 57 GiB / 60 GiB avail

pgs:

The active mgr is node01, so the dashboard will be served from node01.

11.2 Enable the dashboard plugin

bash

[root@node01 ceph]# ceph mgr module enable dashboard

11.3 Create the certificate required by the dashboard

bash

[root@node01 ceph]# ceph dashboard create-self-signed-cert

Self-signed certificate created

[root@node01 ceph]# mkdir /etc/mgr-dashboard

[root@node01 ceph]# cd /etc/mgr-dashboard

[root@node01 mgr-dashboard]# openssl req -new -nodes -x509 -subj "/O=IT-ceph/CN=cn" -days 3650 -keyout dashboard.key -out dashboard.crt -extensions v3_ca

Generating a 2048 bit RSA private key

...............................................................................................+++

......+++

writing new private key to 'dashboard.key'

-----

[root@node01 mgr-dashboard]#

[root@node01 mgr-dashboard]# ls

dashboard.crt dashboard.key

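The openssl command above writes a certificate and key to /etc/mgr-dashboard, but no later step configures the dashboard to use them. The certificate created by ceph dashboard create-self-signed-cert therefore remains the one in use.

11.4 Set the dashboard address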

bash

[root@node01 mgr-dashboard]# ceph config set mgr mgr/dashboard/server_addr 192.168.140.10

[root@node01 mgr-dashboard]# ceph config set mgr mgr/dashboard/server_port 8080

11.5 Restart the dashboard plugin

Disable and re-enable the module so it picks up the new address and port:

bash

[root@node01 mgr-dashboard]# ceph mgr module disable dashboard

[root@node01 mgr-dashboard]# ceph mgr module enable dashboard

11.6 Set the user name and password

bash

[root@node01 mgr-dashboard]# ceph dashboard set-login-credentials martin redhat

Username and password updated

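11.7 Access the web UI

SSL is enabled (11.3) and the address and port were set in 11.4, so the dashboard should be reachable at https://192.168.140.10:8080. Log in as martin with the password set in 11.6.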
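To look up the URL from a script instead of assembling it by hand, use ceph mgr services, which lists the endpoints that mgr modules expose. The sketch below extracts the dashboard entry and probes it with curl. It assumes output of the form {"dashboard": "<url>"}, so check the real output on your cluster before relying on the parsing. curl -k skips certificate verification because the certificate is self-signed.

```shell
#!/usr/bin/env bash
# Sketch: find the dashboard URL advertised by the active mgr and probe it.
# Assumes `ceph mgr services` prints JSON like {"dashboard": "<url>"}.
set -eu

# Extract the dashboard URL from `ceph mgr services` JSON read on stdin.
dashboard_url() {
    tr -d '\n' | sed -n 's/.*"dashboard":[[:space:]]*"\([^"]*\)".*/\1/p'
}

if [ "${1:-}" = "--apply" ]; then
    url="$(ceph mgr services | dashboard_url)"
    echo "dashboard: $url"
    # Print only the HTTP status code of the login page.
    curl -k -s -o /dev/null -w '%{http_code}\n' "$url"
fi
```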