1、概述

kube-rbac-proxy 是 Kubernetes 生态中一个专注于"基于角色的访问控制(RBAC)"的轻量级代理组件 ,通常以 Sidecar 容器的形式部署在 Pod 中,为服务提供细粒度的请求鉴权能力。它尤其适用于保护 /metrics、/debug 等敏感端点,通过将 RBAC 策略直接应用到应用层,弥补了 Kubernetes 原生网络策略的不足,成为服务安全的关键防线。

在使用 Prometheus 监控 Kubernetes 集群时,一个常见的问题是 Prometheus 检索的指标可能包含敏感信息(例如,Prometheus 节点导出器暴露了主机的内核版本),这些信息可能被潜在入侵者利用。虽然 Prometheus 中验证和授权指标端点 的默认方法是使用 TLS 客户端证书,但由于发行、验证和轮换客户端证书的复杂性,Prometheus 请求在大多数情况下并未经过验证和授权。为了解决这一问题,kube-rbac-proxy 应运而生,它是一个针对单一上游的小型 HTTP 代理,能够使用 SubjectAccessReviews 对 Kubernetes API 执行 RBAC 鉴权,从而确保只有经过授权的请求(如来自 Prometheus 的请求)能够从 Pod 中运行的应用程序中检索指标。本文将详细解释 kube-rbac-proxy 如何利用 Kubernetes RBAC 实现这一功能。

项目地址:https://github.com/brancz/kube-rbac-proxy

2、RBAC是如何在幕后工作的

Kubernetes基于角色的访问控制(RBAC)本身只解决了一半的问题。顾名思义,它只涉及访问控制,意味着授权,而不是认证。在一个请求能够被授权之前,它需要被认证。简单地说:我们需要找出谁在执行这个请求。在Kubernetes中,服务自我认证的机制是ServiceAccount令牌。

Kubernetes API公开了验证ServiceAccount令牌的能力,使用所谓的TokenReview。TokenReview的响应仅仅是ServiceAccount令牌是否被成功验证,以及指定的令牌与哪个用户有关。kube-rbac-proxy期望ServiceAccount令牌在Authorization HTTP头中被指定,然后使用TokenReview对其进行验证。

在这一点上,一个请求已经被验证,但还没有被授权。与TokenReview平行,Kuberenetes有一个SubjectAccessReview,它是授权API的一部分。在SubjectAccessReview中,指定了一个预期的行动以及想要执行该行动的用户。在Prometheus请求度量的具体案例中,/metrics HTTP端点被请求。不幸的是,在Kubernetes中这不是一个完全指定的资源,然而,SubjectAccessReview资源也能够授权所谓的 "非资源请求"。

当用Prometheus监控Kubernetes时,那么Prometheus服务器可能已经拥有访问/metrics非资源url的权限,因为从Kubernetes apiserver检索指标需要同样的RBAC角色。

注意 1:TokenReview详解参见《Kubernetes身份认证资源 ------ TokenReview详解》这篇博文,SubjectAccessReview详解参见《Kubernetes鉴权资源 ------ SubjectAccessReview详解》这篇博文。

3、kube-rbac-proxy原理及示例

3.1 原理

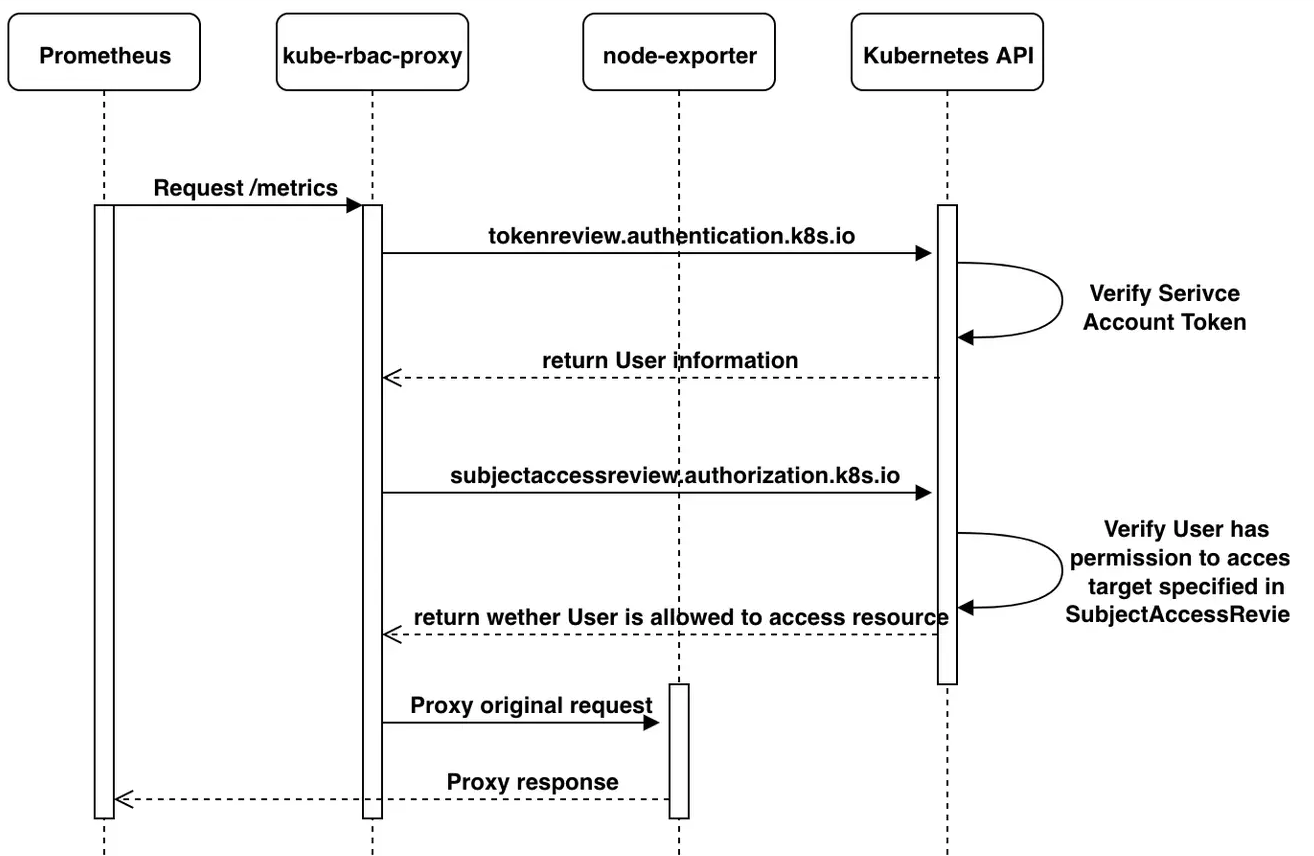

现在已经解释了所有必要的部分,让我们看看kube-rbac-proxy是如何具体地验证和授权一个请求的,案例在本博文的开头就已经说明了。普罗米修斯从节点输出器中请求度量。

当Prometheus对node-exporter执行请求时,kube-rbac-proxy在它前面,kube-rbac-proxy用提供的ServiceAccount令牌执行TokenReview,如果TokenReview成功,它继续使用SubjectAccessReview来验证,ServiceAccount被授权访问/metrics HTTP端点。

可见,从Prometheus验证和授权请求的整个流程是这样的:

3.2 示例

通过node-exporter的servicemonitors资源对象配置可知,prometheus访问node-expoter指标用的是/var/run/secrets/kubernetes.io/serviceaccount/token。

[root@xxx]# kubectl get servicemonitors.monitoring.coreos.com -n=xxxx node-exporter -o yaml

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

.....

spec:

endpoints:

- bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token

interval: 1m

metricRelabelings:

- action: keep

regex: node_(uname|network)_info|node_cpu_.+|node_memory_Mem.+_bytes|node_memory_SReclaimable_bytes|node_memory_Cached_bytes|node_memory_Buffers_bytes|node_network_(.+_bytes_total|up)|node_network_.+_errs_total|node_nf_conntrack_entries.*|node_disk_.+_completed_total|node_disk_.+_bytes_total|node_filesystem_files|node_filesystem_files_free|node_filesystem_avail_bytes|node_filesystem_size_bytes|node_filesystem_free_bytes|node_filesystem_readonly|node_load.+|node_timex_offset_seconds

sourceLabels:

- __name__

port: https

relabelings:

- action: labeldrop

regex: (service|endpoint)

- action: replace

regex: (.*)

replacement: $1

sourceLabels:

- __meta_kubernetes_pod_node_name

targetLabel: instance

scheme: https

tlsConfig:

insecureSkipVerify: true

jobLabel: app.kubernetes.io/name

selector:

matchLabels:

app.kubernetes.io/name: node-exporter进入prometheus容器内部获取并解析token。

[root@x]# kubectl exec -it -n=xxxx prometheus-k8s-0 /bin/sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "prometheus" out of: prometheus, config-reloader

/prometheus # cd /var/run/secrets/kubernetes.io/serviceaccount/

/var/run/secrets/kubernetes.io/serviceaccount # ls

ca.crt namespace token

/var/run/secrets/kubernetes.io/serviceaccount # cat token

eyJhbGciOiJSUzI1NiIsImtpZCI6ImpyaFRNMDRFR0h5a2JpSUY2Vk5jM2lZYnRYY2Fwcl9yTmhDV04tTkdzdnMifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzczMTA4NDgwLCJpYXQiOjE3NDE1NzI0ODAsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJjbG91ZGJhc2VzLW1vbml0b3Jpbmctc3lzdGVtIiwicG9kIjp7Im5hbWUiOiJwcm9tZXRoZXVzLWs4cy0wIiwidWlkIjoiZDhmMDJmZDgtNGVjNS00M2VlLWIyYzQtMzY3MWViMTEyOWViIn0sInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJwcm9tZXRoZXVzLWs4cyIsInVpZCI6IjE4NDRjNzcxLTkyMjctNDYzOS1iY2FlLTQ3NTQ0NmU5MDU0OCJ9LCJ3YXJuYWZ0ZXIiOjE3NDE1NzYwODd9LCJuYmYiOjE3NDE1NzI0ODAsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpjbG91ZGJhc2VzLW1vbml0b3Jpbmctc3lzdGVtOnByb21ldGhldXMtazhzIn0.lBPR2j1Ad_zzRH98nk35DFIKhBXPCLN4KcnqzZA1i8tOwVEkMY4nGUIXT8par1AWDKNm2S6wpJUH7WPh3VM4k-KOrD-gFC8lovPj67NEY__6cW0X6VHTSBC2euAr4uVYSoL4tCW0EzPoDajoXrqmcOAqwzNSZb7ecLaN5yv5VyEUK79zbJvZ2-n-T0y2nYBe3qP1wH2XIrhcWB2Vam4_9YICeJ6WmGwc16rH_phQu8Zu58EJ7CDt_oiQ7Iz/var/run/secrets/kubernetes.io/serviceaccount #

查看node-exporter服务配置:

apiVersion: v1

kind: Service

metadata:

......

spec:

clusterIP: None

clusterIPs:

- None

internalTrafficPolicy: Cluster

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- name: https

port: 9100

protocol: TCP

targetPort: https

selector:

app.kubernetes.io/name: node-exporter

sessionAffinity: None

type: ClusterIP查看node-exporter守护进程集配置,可以看到node-exporter应用容器本身是没有开放容器端口的,所有外部进入流量都走kube-rbac-proxy,进入流量kube-rbac-proxy先认证(TokenReview),认证并获取用户信息prometheus-k8s,然后再鉴权(SubjectAccessReview,查看是否有/metrics访问权限),鉴权通过后再把流量代理到node-exporter容器(http://127.0.0.1:9100/):

apiVersion: apps/v1

kind: DaemonSet

metadata:

......

spec:

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/name: node-exporter

template:

metadata:

creationTimestamp: null

labels:

app.kubernetes.io/name: node-exporter

app.kubernetes.io/version: v0.18.1

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: node-role.kubernetes.io/edge

operator: DoesNotExist

containers:

- args:

- --web.listen-address=127.0.0.1:9100

- --path.procfs=/host/proc

- --path.sysfs=/host/sys

- --path.rootfs=/host/root

- --no-collector.wifi

- --no-collector.hwmon

- --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+)($|/)

- --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$

image: 192.168.137.94:80/cscec_big_data-cloudbases/node-exporter:v0.18.1

imagePullPolicy: IfNotPresent

name: node-exporter

resources:

limits:

cpu: "1"

memory: 500Mi

requests:

cpu: 102m

memory: 180Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /host/proc

name: proc

readOnly: true

- mountPath: /host/sys

name: sys

readOnly: true

- mountPath: /host/root

mountPropagation: HostToContainer

name: root

readOnly: true

- args:

- --logtostderr

- --secure-listen-address=[$(IP)]:9100

- --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_RSA_WITH_AES_128_CBC_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256,TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256

- --upstream=http://127.0.0.1:9100/

env:

- name: IP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.podIP

image: 192.168.137.94:80/cscec_big_data-cloudbases/kube-rbac-proxy:v0.8.0

imagePullPolicy: IfNotPresent

name: kube-rbac-proxy

ports:

- containerPort: 9100

hostPort: 9100

name: https

protocol: TCP

resources:

limits:

cpu: "1"

memory: 100Mi

requests:

cpu: 10m

memory: 20Mi

securityContext:

runAsGroup: 65532

runAsNonRoot: true

runAsUser: 65532

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

hostNetwork: true

hostPID: true

nodeSelector:

kubernetes.io/os: linux

restartPolicy: Always

schedulerName: default-scheduler

securityContext:

runAsNonRoot: true

runAsUser: 65534

serviceAccount: node-exporter

serviceAccountName: node-exporter

terminationGracePeriodSeconds: 30

tolerations:

- operator: Exists

volumes:

- hostPath:

path: /proc

type: ""

name: proc

- hostPath:

path: /sys

type: ""

name: sys

- hostPath:

path: /

type: ""

name: root

updateStrategy:

rollingUpdate:

maxSurge: 0

maxUnavailable: 1

type: RollingUpdate

status:

currentNumberScheduled: 1

desiredNumberScheduled: 1

numberAvailable: 1

numberMisscheduled: 0

numberReady: 1

observedGeneration: 1

updatedNumberScheduled: 1注意 1: clusterrolebinding:

apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: ...... roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: xxxx-prometheus-k8s subjects: - kind: ServiceAccount name: prometheus-k8s namespace: xxxxx

clusterrole:

apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: ...... rules: - apiGroups: - "" resources: - nodes/metrics - nodes - services - endpoints - pods verbs: - get - list - watch - nonResourceURLs: - /metrics verbs: - get

5、总结

kube-rbac-proxy 作为 Kubernetes RBAC 的扩展组件,通过将权限控制能力延伸至应用层,为服务端点提供细粒度权限控制。特别适用于敏感接口防护、多租户资源隔离等场景(如 Prometheus 安全采集 node-exporter/kube-state-metrics 指标)。尽管会增加少量部署复杂度,但通过强化 API 访问审计、身份验证和权限校验,显著提升集群安全防护能力,已成为云原生环境中保障关键业务安全的核心组件。