概述

官方文档:https://kubernetes.io/zh-cn/docs/concepts/scheduling-eviction/taint-and-toleration/

污点是作用在k8s集群节点上的(包括worker和master),Node被设置上污点之后就和Pod之间存在了一种相斥的关系,进而拒绝Pod调度进来,甚至可以将已经存在的Pod驱逐出去。

污点类似于Label标签,但是污点是在节点上的。定义的语法结构也有点类似,但也存在一定区别

学习Label标签可以阅读这篇文章:K8s新手系列之Label标签和Label选择器

污点的组成结构

一个污点由以下三部分组成:

key=value:effect- key:污点的键(自定义,如 node-type)。

- value:污点的值(可选,如 special)。

- effect:污点的效果,决定 Pod 如何被影响,可选值:

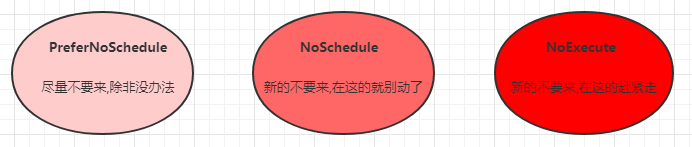

- PreferNoSchedule:尽量避免 Pod 调度到该节点(非强制,调度器会尝试寻找其他节点,但若没有合适节点仍会调度)。

- NoSchedule:禁止 Pod 调度到该节点(除非 Pod 有对应的容忍)。

- NoExecute:不仅禁止调度,还会驱逐已存在的不满足容忍的 Pod(适用于节点维护、故障处理等场景)。

污点的作用

污点(Taint) 是一种节点级别的属性,用于 阻止特定 Pod 调度到节点,或使节点对 Pod 具有 "排斥性"。它通常与 容忍度(Toleration) 配合使用,实现更精细的资源调度策略。以下是污点的核心作用、应用场景和工作机制

隔离节点

将节点标记为特定用途(如专用节点、性能节点),阻止普通 Pod 调度到该节点,确保关键业务独占资源。

例如:将 GPU 节点、高内存节点标记为污点,仅允许特定业务的 Pod(如机器学习任务、数据库)通过容忍度调度至此。

驱逐非预期 Pod

通过 NoExecute 类型的污点,可强制驱逐节点上 不匹配容忍度的现有 Pod,常用于节点维护、升级或故障处理。

例如:节点需要重启时,添加 NoExecute 污点,驱逐所有不兼容的 Pod 到其他节点。

实现分层调度策略

结合容忍度,实现 "节点分组 + Pod 定向调度" 的分层管理,避免资源混用导致的性能干扰或安全问题。

例如:区分开发、测试、生产环境节点,通过污点限制不同环境的 Pod 只能调度到对应节点。

K8s集群中默认存在的污点

在 Kubernetes(K8s)集群中,控制平面节点(如 Master 节点)通常会自动添加默认污点,以避免普通业务 Pod 调度到这些节点,确保控制平面组件(如 API Server、Scheduler、Controller Manager 等)的资源不受干扰。

-

node-role.kubernetes.io/control-plane:NoSchedule

-

该污点阻止普通 Pod 调度到控制平面节点,但允许 K8s 系统组件(如 kube-apiserver、etcd)的 Pod 运行。

-

因为控制平面节点需专注于处理集群管理任务,普通业务 Pod(如 Web 服务、数据库)不应占用其资源。

-

-

node.kubernetes.io/not-ready:NoExecute

- 该污点是一个动态污点,由 K8s 自动管理的临时污点,当节点处于 NotReady 状态(如网络故障、节点失联)时自动添加,用于驱逐该节点上的 Pod。当节点恢复正常后,该污点会自动移除。

-

node.kubernetes.io/unreachable:NoExecute

- 该污点是一个动态污点,当节点与控制平面(API Server)失联(如网络分区、节点故障),且超过 pod-eviction-timeout(默认 5 分钟)时,节点会被标记为 Unreachable,并自动添加此污点。

- 当节点恢复通信,污点会自动移除,已调度到其他节点的 Pod 不会回迁。

-

node.kubernetes.io/out-of-disk:NoExecute

-

该污点是一个动态污点,节点磁盘使用率超过阈值(如 kubelet 参数 --eviction-hard 配置的memory.available<100Mi、nodefs.available<10%)

-

主要作用是:驱逐 Pod 以释放磁盘空间,优先驱逐消耗磁盘资源较多的 Pod(如日志、临时文件)。

-

此污点可能导致关键系统 Pod(如 kube-proxy)被驱逐,需合理配置 tolerations。

-

-

node.kubernetes.io/memory-pressure:NoExecute

-

该污点是一个动态污点,节点内存压力过大(如可用内存低于阈值)会触发。

-

触发 Pod 驱逐,优先驱逐资源请求高、QoS 等级低的 Pod(如 BestEffort 类型)。

-

可通过 kubelet 参数 --eviction-hard 调整内存压力阈值(如 memory.available<5%)。

-

-

node.kubernetes.io/disk-pressure:NoExecute

-

该污点是一个动态污点,节点磁盘压力过大(如根分区或容器运行时分区空间不足)会触发

-

与 out-of-disk 类似,但在磁盘空间接近耗尽(尚未完全耗尽)时触发,用于预防磁盘溢出。

-

-

node.kubernetes.io/pid-pressure:NoExecute

-

该污点是一个动态污点,节点进程 ID(PID)资源不足(如系统创建新进程的能力受限)会触发

-

驱逐 Pod 以释放 PID 资源,避免系统因 PID 耗尽而崩溃。

-

污点的管理

查看污点

语法:

# 查看所有节点的污点,grep查看多行

kubectl describe node | grep -C <int-num> Taints

# 查看指定节点的污点,grep查看多行

kubectl describe node <node-name> | grep -C <int-num>Taints示例:

# 查看主节点的污点

[root@master ~]# kubectl describe node master | grep Taints

Taints: node-role.kubernetes.io/control-plane:NoSchedule添加污点

语法:

# 其中=value可以省略,相当于添加一个不带value的污点

kubectl taint node <node-name> key<=value>:effect示例:

# 给master节点添加一个带value的污点

[root@master ~]# kubectl taint node master name=huangsir:PreferNoSchedule

node/master tainted

# 给master节点添加一个不带value的污点

[root@master ~]# kubectl taint node master app:PreferNoSchedule

node/master tainted

# 查看污点

[root@master ~]# kubectl describe node master | grep -C 2 Taints

Taints: node-role.kubernetes.io/control-plane:NoSchedule #master节点自带的污点

app:PreferNoSchedule # 添加的不带value的污点

name=huangsir:PreferNoSchedule # 添加的带value的污点删除污点

语法:

kubectl taint nodes <node-name> <key><=value>:<effect>-示例:

# 删除app:PreferNoSchedule的污点

[root@master01 ~]# kubectl taint nodes master01 app:PreferNoSchedule-

node/master01 untainted

# 验证,发现已经删除

[root@master01 ~]# kubectl describe node master01 | grep -C 2 -i taint

CreationTimestamp: Sat, 26 Apr 2025 14:02:33 +0800

Taints: node-role.kubernetes.io/control-plane:NoSchedule

name=huangsir:PreferNoSchedule修改污点

修改污点实际上是先删除原有的污点,再添加新的污点。

示例:将name=huangsir:PreferNoSchedule修改为name=zhangsan:NoSchedule

#先删除name=huangsir:PreferNoSchedule

[root@master01 ~]# kubectl taint nodes master01 name=huangsir:PreferNoSchedule-

node/master01 untainted

# 再添加name=zhangsan:NoSchedule

[root@master01 ~]# kubectl taint nodes master01 name=zhangsan:NoSchedule

node/master01 tainted

# 查看

[root@master01 ~]# kubectl describe node master01 | grep -C 2 Taint

Taints: name=zhangsan:NoSchedule # 修改的污点

node-role.kubernetes.io/control-plane:NoSchedule验证三个污点类型的调度

PreferNoSchedule

PreferNoSchedule是限制Pod调度最弱的一个类型,会尽量避免 Pod 调度到该节点(非强制,调度器会尝试寻找其他节点,但若没有合适节点仍会调度)。

示例:给node01节点创建一个PreferNoSchedule类型的污点。

[root@master01 ~]# kubectl taint node node01 name=zhangsan:PreferNoSchedule

node/node01 tainted

[root@master01 ~]# kubectl describe node node01 | grep Taints

Taints: name=zhangsan:PreferNoSchedule现在node01节点上存在一个污点,是name=zhangsan:PreferNoSchedule,我们指定Pod调度到该节点上会发生什么呢?

我们使用deploy创建10个Pod看看会发生什么?

# 创建deploy,创建10个Pod

[root@master01 ~/pod]# cat deploy-pod.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

labels:

app: nginx

spec:

# 创建10个副本

replicas: 10

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:latest

# 创建deploy

[root@master01 ~/pod]# kubectl apply -f deploy-pod.yaml

deployment.apps/nginx-deployment created查看一下Pod的调度状态,发现全部调度到node02节点上了

[root@master01 ~/pod]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-654975c8cd-2kzzs 1/1 Running 0 108s 100.95.185.204 node02 <none> <none>

nginx-deployment-654975c8cd-5jbvk 1/1 Running 0 108s 100.95.185.203 node02 <none> <none>

nginx-deployment-654975c8cd-6g2jd 1/1 Running 0 108s 100.95.185.206 node02 <none> <none>

nginx-deployment-654975c8cd-8pfb7 1/1 Running 0 108s 100.95.185.209 node02 <none> <none>

nginx-deployment-654975c8cd-c7s6m 1/1 Running 0 108s 100.95.185.208 node02 <none> <none>

nginx-deployment-654975c8cd-dphzf 1/1 Running 0 108s 100.95.185.211 node02 <none> <none>

nginx-deployment-654975c8cd-kvllb 1/1 Running 0 108s 100.95.185.205 node02 <none> <none>

nginx-deployment-654975c8cd-mbdhc 1/1 Running 0 108s 100.95.185.210 node02 <none> <none>

nginx-deployment-654975c8cd-mnfkz 1/1 Running 0 108s 100.95.185.207 node02 <none> <none>

nginx-deployment-654975c8cd-psbtk 1/1 Running 0 108s 100.95.185.212 node02 <none> <none>我们接着给node02节点上添加一个NoSchedule类型的污点

[root@master01 ~]# kubectl taint node node02 app:NoSchedule

node/node01 tainted

[root@master01 ~]# kubectl describe node node02 | grep Taints

Taints: name=zhangsan:NoSchedule我们继续使用deploy创建10个Pod,看看会发生什么?

# 创建deploy,创建10个Pod

[root@master01 ~/pod]# cat deploy-noschedule.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-noschedule

labels:

app: nginx

spec:

# 创建10个副本

replicas: 10

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:latest

[root@master01 ~/pod]# kubectl apply -f deploy-noschedule.yaml

deployment.apps/nginx-noschedule created查看一下pod的调度状态,发现Pod又都调度到node01节点上了

[root@master01 ~/pod]# kubectl get po -o wide | grep nginx-noschedule

nginx-noschedule-654975c8cd-27529 1/1 Running 0 63s 100.117.144.143 node01 <none> <none>

nginx-noschedule-654975c8cd-472wh 1/1 Running 0 63s 100.117.144.144 node01 <none> <none>

nginx-noschedule-654975c8cd-56wbp 1/1 Running 0 63s 100.117.144.142 node01 <none> <none>

nginx-noschedule-654975c8cd-5vwvx 1/1 Running 0 63s 100.117.144.138 node01 <none> <none>

nginx-noschedule-654975c8cd-99ld7 1/1 Running 0 63s 100.117.144.146 node01 <none> <none>

nginx-noschedule-654975c8cd-brjlh 1/1 Running 0 63s 100.117.144.145 node01 <none> <none>

nginx-noschedule-654975c8cd-fkzwr 1/1 Running 0 63s 100.117.144.147 node01 <none> <none>

nginx-noschedule-654975c8cd-hmqkg 1/1 Running 0 63s 100.117.144.141 node01 <none> <none>

nginx-noschedule-654975c8cd-sxx2h 1/1 Running 0 63s 100.117.144.140 node01 <none> <none>

nginx-noschedule-654975c8cd-xbgkc 1/1 Running 0 63s 100.117.144.139 node01 <none> <none>NoSchedule

NoSchedule会禁止 Pod 调度到该节点(除非 Pod 有对应的容忍),但是不会影响当前节点已经存在Pod的状态

示例:我们给node节点上添加一个NoSchedule类型的污点:

[root@master01 ~]# kubectl taint node node01 app:NoSchedule

node/node01 tainted

[root@master01 ~]# kubectl taint node node02 app:NoSchedule

node/node01 tainted

# 查看污点,所有K8s节点上都存在了NoSchedule类型的污点

[root@master01 ~/pod]# kubectl describe node | grep Taint

Taints: node-role.kubernetes.io/control-plane:NoSchedule

Taints: app:NoSchedule

Taints: app:NoSchedule创建deploy

# 定义资源文件

[root@master01 ~/pod]# cat test-schedule.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: test-noschedule

labels:

app: nginx

spec:

# 创建10个副本

replicas: 10

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:latest

# 创建deploy

[root@master01 ~/pod]# kubectl apply -f test-schedule.yaml

deployment.apps/test-noschedule created查看pod的调度状态,发现pod全部处于pending状态

[root@master01 ~/pod]# kubectl get po -o wide | grep test

test-noschedule-654975c8cd-79hzm 0/1 Pending 0 79s <none> <none> <none> <none>

test-noschedule-654975c8cd-7hsr7 0/1 Pending 0 79s <none> <none> <none> <none>

test-noschedule-654975c8cd-8zf82 0/1 Pending 0 79s <none> <none> <none> <none>

test-noschedule-654975c8cd-bk6fh 0/1 Pending 0 79s <none> <none> <none> <none>

test-noschedule-654975c8cd-fq7hk 0/1 Pending 0 79s <none> <none> <none> <none>

test-noschedule-654975c8cd-htf66 0/1 Pending 0 79s <none> <none> <none> <none>

test-noschedule-654975c8cd-n7bsk 0/1 Pending 0 79s <none> <none> <none> <none>

test-noschedule-654975c8cd-nv5vh 0/1 Pending 0 79s <none> <none> <none> <none>

test-noschedule-654975c8cd-rq9th 0/1 Pending 0 79s <none> <none> <none> <none>

test-noschedule-654975c8cd-wkg7d 0/1 Pending 0 79s <none> <none> <none> <none>查看一下详细信息,发现是因为污点的原因

[root@master01 ~/pod]# kubectl describe po test-noschedule-654975c8cd-79hzm

Name: test-noschedule-654975c8cd-79hzm

##...省略万字内容

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 2m7s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 2 node(s) had untolerated taint {app: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling..NoExecute

NoExecute类型的污点,不仅禁止调度,还会驱逐已存在的不满足容忍的 Pod(适用于节点维护、故障处理等场景)。

准备一下测试环境

将上面案例中的关于node01节点中的污点及Pod进行删除,

# 删除污点

[root@master01 ~/pod]# kubectl taint node node01 app:NoSchedule-

node/node01 untainted

[root@master01 ~/pod]# kubectl taint node node01 name=zhangsan:PreferNoSchedule-

node/node01 untainted

[root@master01 ~/pod]# kubectl describe node node01 | grep Taint

Taints: <none>

# 删除deploy

[root@master01 ~/pod]# kubectl delete deploy nginx-noschedule

deployment.apps "nginx-noschedule" deleted

[root@master01 ~/pod]# kubectl delete deploy test-noschedule

deployment.apps "test-noschedule" deleted

# 查看node02节点上的pod

[root@master01 ~/pod]# kubectl get po -o wide | grep node02

nginx-deployment-654975c8cd-5j2kn 1/1 Running 0 28m 100.95.185.220 node02 <none> <none>

nginx-deployment-654975c8cd-b44mb 1/1 Running 0 28m 100.95.185.218 node02 <none> <none>

nginx-deployment-654975c8cd-b9pg7 1/1 Running 0 28m 100.95.185.214 node02 <none> <none>

nginx-deployment-654975c8cd-dlwqc 1/1 Running 0 28m 100.95.185.213 node02 <none> <none>

nginx-deployment-654975c8cd-dvkhh 1/1 Running 0 28m 100.95.185.219 node02 <none> <none>

nginx-deployment-654975c8cd-kxlpc 1/1 Running 0 28m 100.95.185.215 node02 <none> <none>

nginx-deployment-654975c8cd-nv99z 1/1 Running 0 28m 100.95.185.221 node02 <none> <none>

nginx-deployment-654975c8cd-p79bz 1/1 Running 0 28m 100.95.185.216 node02 <none> <none>

nginx-deployment-654975c8cd-p84cj 1/1 Running 0 28m 100.95.185.217 node02 <none> <none>

nginx-deployment-654975c8cd-q4ll4 1/1 Running 0 28m 100.95.185.222 node02 <none> <none>验证禁止调度(该步骤省略)

该步骤和NoSchedule类型一致,在这里省略了

验证驱逐Pod

给node02节点上添加一个NoExecute类型的污点

# 添加污点

[root@master01 ~]# kubectl taint node node02 app:NoExecute

node/node02 tainted

# 查看

[root@master01 ~]# kubectl describe node node02 | grep -C 2 Taint

Taints: app:NoExecute

app:NoSchedule查看一下pod,发现pod全部调度到node01节点上了

[root@master01 ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-654975c8cd-29kbl 1/1 Running 0 73s 100.117.144.160 node01 <none> <none>

nginx-deployment-654975c8cd-55fmq 1/1 Running 0 72s 100.117.144.163 node01 <none> <none>

nginx-deployment-654975c8cd-7rq6t 1/1 Running 0 72s 100.117.144.166 node01 <none> <none>

nginx-deployment-654975c8cd-cb8hl 1/1 Running 0 73s 100.117.144.161 node01 <none> <none>

nginx-deployment-654975c8cd-fzblg 1/1 Running 0 73s 100.117.144.159 node01 <none> <none>

nginx-deployment-654975c8cd-m6mxw 1/1 Running 0 71s 100.117.144.167 node01 <none> <none>

nginx-deployment-654975c8cd-mw8st 1/1 Running 0 71s 100.117.144.169 node01 <none> <none>

nginx-deployment-654975c8cd-p2kcc 1/1 Running 0 71s 100.117.144.168 node01 <none> <none>

nginx-deployment-654975c8cd-x7k8p 1/1 Running 0 73s 100.117.144.158 node01 <none> <none>

nginx-deployment-654975c8cd-xx7t7 1/1 Running 0 73s 100.117.144.162 node01 <none> <none>污点容忍

我们想让Pod调度到存在污点的节点上,我们可以使用 spec.tolerations 字段配置污点容忍

tolerations解析:

tolerations:

- key: "env" # 匹配污点的key(必须存在)

operator: "Equal" # 匹配方式(Equal表示值需相等,Exists表示无需值)

value: "prod" # 匹配污点的value(仅Equal时需要)

effect: "NoSchedule" # 匹配污点的effect(可选,不指定则匹配所有effect)

tolerationSeconds # 容忍时间, 当effect为NoExecute时生效,表示pod在Node上的停留时间污点容忍规则

污点容忍需要匹配节点的所有污点,节点的污点与 Pod 的容忍度是 多对多匹配关系:

- 若节点有 多个污点(如 taint1、taint2),Pod 必须配置 所有对应污点的容忍度,才能调度到该节点。

- 若 Pod 仅容忍其中部分污点,则无法调度(除非节点的某些污点未设置 effect 或 effect 为 NoExecute 且 Pod 满足特殊条件)。

污点容忍匹配规则:

-

key+operator+value+effect 全匹配:完全匹配污点。

tolerations:

- key: "maintenance"

operator: "Equal"

value: "true"

effect: "NoExecute"

- key: "maintenance"

-

key+operator=Exists:匹配所有带有该 key 的污点(无论 value 和 effect)。

tolerations:

- key: "maintenance"

operator: "Exists"

- key: "maintenance"

-

不指定 key 和 operator:匹配所有污点(慎用,相当于绕过所有污点限制)。

tolerations:

- effect: "NoSchedule"

operator: "Exists" # 容忍所有 NoSchedule 类型的污点

- effect: "NoSchedule"

示例:仅容忍节点上存在 app=web:NoSchedule的污点

apiVersion: v1

kind: Pod

metadata:

name: test-pod

spec:

containers:

- name: nginx

image: nginx

tolerations:

- key: "app"

operator: "Equal"

value: "web"

effect: "NoSchedule"示例:显示匹配多个污点

apiVersion: v1

kind: Pod

metadata:

name: test-pod

spec:

containers:

- name: nginx

image: nginx

tolerations:

- key: "app"

operator: "Equal"

value: "web"

effect: "NoSchedule"

- key: "env"

operator: "Equal"

value: "prod"

effect: "NoSchedule"