
Create the first Pod

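```bash
kubectl create deployment nginx-deploy --image=nginx              # create a Deployment running one nginx Pod
kubectl get pod -o wide                                           # list Pods (with node and Pod IP)
kubectl expose deployment nginx-deploy --port=80 --type=NodePort  # expose the Deployment as a NodePort Service
kubectl get service nginx-deploy                                  # show the Service and its assigned NodePort

# Inspect a single Pod (replace nginx-deploy-8cc8b9c49-xshrr with a name from `kubectl get pod`)
kubectl get pod nginx-deploy-8cc8b9c49-xshrr -o yaml
kubectl get pod nginx-deploy-8cc8b9c49-xshrr -o wide
kubectl describe pods nginx-deploy-8cc8b9c49-xshrr
kubectl exec -it nginx-deploy-8cc8b9c49-xshrr -- sh               # open a shell inside the container
kubectl logs nginx-deploy-8cc8b9c49-xshrr                         # print the container logs

# Clean up
kubectl delete deployment nginx-deploy                            # delete the Deployment (and its Pods)
kubectl delete service nginx-deploy                               # delete the Service
```

Create a Deployment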

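```bash
vi deployment.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy            # Deployment name
spec:
  replicas: 2                   # desired number of Pod replicas
  selector:
    matchLabels:
      app: nginx                # manage all Pods labeled app=nginx
  minReadySeconds: 2            # a new Pod must stay Ready for at least 2 s before it counts as available
  strategy:
    type: RollingUpdate         # update strategy: rolling update
    rollingUpdate:
      maxUnavailable: 1         # at most 1 Pod may be unavailable during a rolling update
      maxSurge: 1               # at most 1 Pod may be created above the desired count during a rolling update
  template:
    metadata:
      labels:
        app: nginx              # Pod label; must match spec.selector.matchLabels
    spec:
      containers:
        - name: nginx-pod       # container name
          image: nginx:1.13.0   # image to run
          imagePullPolicy: IfNotPresent  # use the local image if present; pull only when it is missing
          ports:
            # Port the container listens on. The official nginx image listens on 80 by default, not 8080.
            # containerPort is informational: writing 8080 here would not change nginx, which still serves on 80.
            - containerPort: 80
          resources:            # resource settings, used later by the HPA
            limits:
              cpu: "100m"       # CPU limit: 100 millicores
            requests:
              cpu: "50m"        # CPU request: 50 millicores (HPA utilization is measured against this)
```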
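The CPU values in this manifest are Kubernetes quantities: `100m` means 100 millicores, i.e. 0.1 of a CPU core. The HPA configured later measures CPU utilization against the container's request (`50m`), not its limit, so a 50% target corresponds to roughly 25m of average usage per Pod. The minimal sketch below converts such quantity strings and computes that percentage; `cpu_to_millicores` and `utilization_percent` are hypothetical helpers, and only the `n`, `u`, `m` and plain-number forms are handled.

```python
from fractions import Fraction

# Scale factors (relative to one core) for the CPU suffixes handled by this sketch
_CPU_SUFFIXES = {"n": Fraction(1, 10**9), "u": Fraction(1, 10**6), "m": Fraction(1, 1000)}


def cpu_to_millicores(quantity: str) -> Fraction:
    """Convert a CPU quantity such as "50m", "0.5" or "2" to millicores."""
    text = quantity.strip()
    factor = Fraction(1)  # no suffix: the value is in whole cores
    if text and text[-1] in _CPU_SUFFIXES:
        factor = _CPU_SUFFIXES[text[-1]]
        text = text[:-1]
    return Fraction(text) * factor * 1000


def utilization_percent(usage: str, request: str) -> float:
    """CPU utilization as the HPA reports it: usage divided by the request, in percent."""
    return float(cpu_to_millicores(usage) / cpu_to_millicores(request) * 100)


print(cpu_to_millicores("100m"), cpu_to_millicores("50m"))  # limit and request from deployment.yaml
print(utilization_percent("25m", "50m"))                    # 25m of usage against a 50m request
```

Exact fractions are used so that values like `0.1` or nanocore readings do not pick up floating-point rounding before the final percentage.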

```bash
kubectl apply -f deployment.yaml
kubectl get deployment nginx-deploy -owide
NAME           READY   UP-TO-DATE   AVAILABLE   AGE    CONTAINERS   IMAGES         SELECTOR
nginx-deploy   2/2     2            2           178m   nginx-pod    nginx:1.13.0   app=nginx

kubectl get rs -owide
NAME                      DESIRED   CURRENT   READY   AGE    CONTAINERS   IMAGES         SELECTOR
nginx-deploy-68ff6f9d47   2         2         2       151m   nginx-pod    nginx:1.13.0   app=nginx,pod-template-hash=68ff6f9d47
```

Rolling update and rollback

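```bash
vi deployment.yaml
# change the image version:  image: nginx:1.13.0  ->  image: nginx:latest

# --record stores this command as the revision's CHANGE-CAUSE in `kubectl rollout history`.
# Revisions are kept for rollback even without it; the flag is deprecated in recent kubectl releases.
kubectl apply -f deployment.yaml --record=true
kubectl rollout status deployment nginx-deploy   # watch the rolling update progress

# Roll back to the previous revision (no revision number needed)
kubectl rollout undo deployment nginx-deploy

# Or roll back to a specific revision (list existing revisions with: kubectl rollout history deployment nginx-deploy)
kubectl rollout undo deployment nginx-deploy --to-revision=1

kubectl rollout status deployment nginx-deploy   # check the rollback status
```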
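With `replicas: 2`, `maxSurge: 1` and `maxUnavailable: 1`, the rolling update above never runs more than 3 Pods at once and always keeps at least 1 Pod available. A small sketch of that arithmetic, assuming integer or percentage values; percentages follow Kubernetes' documented rounding (maxSurge rounds up, maxUnavailable rounds down). `rolling_update_bounds` is a hypothetical helper and skips the corner case where both values resolve to 0.

```python
def rolling_update_bounds(replicas, max_surge, max_unavailable):
    """Return (max_pods, min_available) for a RollingUpdate Deployment.

    max_surge / max_unavailable may be ints or percentage strings such as "25%".
    Percentages: maxSurge rounds up, maxUnavailable rounds down.
    """

    def resolve(value, round_up):
        if isinstance(value, str) and value.endswith("%"):
            scaled = replicas * int(value[:-1])  # replicas * percent, still multiplied by 100
            # integer ceil / floor division avoids float rounding surprises
            return -(-scaled // 100) if round_up else scaled // 100
        return int(value)

    surge = resolve(max_surge, round_up=True)
    unavailable = resolve(max_unavailable, round_up=False)
    return replicas + surge, replicas - unavailable


# Values from deployment.yaml: replicas: 2, maxSurge: 1, maxUnavailable: 1
print(rolling_update_bounds(2, 1, 1))
```

For comparison, the Kubernetes defaults of 25%/25% applied to 10 replicas give `rolling_update_bounds(10, "25%", "25%")`, i.e. at most 13 Pods with at least 8 available.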

Create a Service

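```bash
vi service.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc        # Service name; must be unique within the namespace
  labels:
    app: nginx
spec:
  type: NodePort
  ports:
    - port: 80           # Service port inside the cluster (other Pods reach the Service on this port)
      # targetPort is omitted, so it defaults to the value of port (80)
      nodePort: 30080    # port opened on every node; external clients use <NodeIP>:30080 (default range 30000-32767)
      protocol: TCP
  selector:
    app: nginx           # must match the Pod labels
```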

```bash
kubectl apply -f service.yaml
```

Note: the following two fields must stay consistent, otherwise the Service selects no Pods:

service.yaml --> spec.selector

deployment.yaml --> spec.template.metadata.labels
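The consistency rule above is an equality-based label match: every key/value pair in the Service selector must appear among the Pod's labels, while extra Pod labels are ignored. A minimal sketch of that check, with `selector_matches` as a hypothetical helper and the values copied from the two manifests (it does not model a Service that has no selector at all):

```python
def selector_matches(selector, labels):
    """Equality-based label selector: every key/value pair in the selector must be present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


service_selector = {"app": "nginx"}      # service.yaml -> spec.selector
pod_template_labels = {"app": "nginx"}   # deployment.yaml -> spec.template.metadata.labels

print(selector_matches(service_selector, pod_template_labels))
# Extra labels on the Pod (such as pod-template-hash, added by the Deployment) do not prevent a match
print(selector_matches(service_selector, {"app": "nginx", "pod-template-hash": "68ff6f9d47"}))
```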

```bash
kubectl describe svc nginx-svc
Name:                     nginx-svc
Namespace:                default
Labels:                   app=nginx
Annotations:              <none>
Selector:                 app=nginx
Type:                     NodePort
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.100.160.27
IPs:                      10.100.160.27
Port:                     <unset>  80/TCP
TargetPort:               80/TCP
NodePort:                 <unset>  30080/TCP
Endpoints:                10.240.214.207:80,10.240.214.208:80
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
```

Endpoints are the bridge between a Service and its Pods: they list the addresses (Pod IP:port) of the Ready Pods that the Service forwards traffic to.
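As a rough model of how the `Endpoints` line above is produced: take the Pods whose labels satisfy the Service selector, keep the Ready ones, and pair each Pod IP with the target port. The sketch below is a heavily simplified illustration, not the real controller; the `Pod` class, `ready_endpoints`, the Pod names and the `.209`/`.210` addresses are hypothetical (only the two `.207`/`.208` IPs come from the output above). In a real cluster, not-ready Pods are recorded separately as not-ready addresses rather than simply dropped.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical, heavily simplified stand-in for a Pod object."""
    name: str
    ip: str
    ready: bool
    labels: dict = field(default_factory=dict)


def ready_endpoints(selector, target_port, pods):
    """Return "IP:port" for every Ready Pod whose labels satisfy the Service selector."""
    return [
        f"{pod.ip}:{target_port}"
        for pod in pods
        if pod.ready and all(pod.labels.get(k) == v for k, v in selector.items())
    ]


pods = [
    Pod("nginx-a", "10.240.214.207", True, {"app": "nginx"}),
    Pod("nginx-b", "10.240.214.208", True, {"app": "nginx"}),
    Pod("nginx-c", "10.240.214.209", False, {"app": "nginx"}),  # not Ready yet: left out
    Pod("redis-a", "10.240.214.210", True, {"app": "redis"}),   # label does not match
]
print(",".join(ready_endpoints({"app": "nginx"}, 80, pods)))
```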

Accessing the Service

A Service's network identity: its ClusterIP and its DNS name.

```bash
kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        43h
nginx-svc    NodePort    10.100.160.27   <none>        80:30080/TCP   18h
```

For nginx-svc (in PORT(S), 80:30080/TCP means Service port 80 mapped to NodePort 30080):

NAME: nginx-svc

CLUSTER-IP: 10.100.160.27

PORT: 80

NodePort: 30080

从集群内部,可以通过前3个值(Name、ClusterIP、port)来直接访问

从集群外部,可以通过NodeIP:NodePort来访问

curl 10.100.160.27:80 # 集群内部访问Service

curl nginx-svc:80 # Pod内部通过DNS访问Service

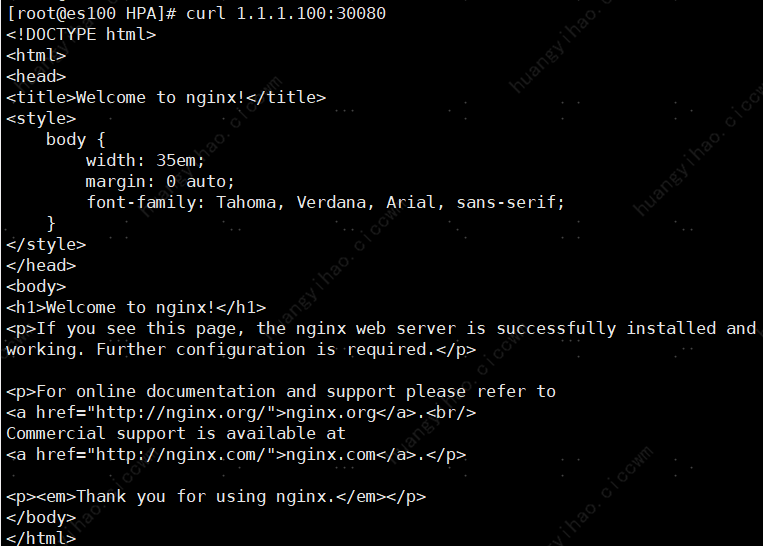

curl 1.1.1.100:30080 # 集群外部访问Service es100:30800
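The access paths above can be summarized in one place. This sketch only assembles address strings; `service_addresses` is a hypothetical helper, and the cluster DNS domain `cluster.local` is an assumption (it is the common default, but clusters can be configured differently). The full DNS form `<service>.<namespace>.svc.<cluster-domain>` is what a Pod in another namespace would use.

```python
def service_addresses(name, namespace, cluster_ip, port, node_ip, node_port,
                      cluster_domain="cluster.local"):
    """List the ways a NodePort Service can be reached (cluster_domain is an assumed default)."""
    return {
        "ClusterIP (nodes and Pods)": f"{cluster_ip}:{port}",
        "DNS short name (Pods in the same namespace)": f"{name}:{port}",
        "DNS full name (Pods in any namespace)": f"{name}.{namespace}.svc.{cluster_domain}:{port}",
        "NodePort (outside the cluster)": f"{node_ip}:{node_port}",
    }


# Values from `kubectl get svc` and the node address used in this article
for how, address in service_addresses("nginx-svc", "default", "10.100.160.27", 80,
                                      "1.1.1.100", 30080).items():
    print(f"{how:45} curl {address}")
```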

HPA

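Prerequisite: nginx is already deployed (see the Deployment and Service sections above). The HPA also needs CPU metrics, which metrics-server provides: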

```bash
vi metric-server.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/registry.k8s.io/metrics-server/metrics-server:v0.6.4
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```
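Once this manifest is applied, the APIService object registers the group `metrics.k8s.io/v1beta1` with the API server, which proxies those requests to the `metrics-server` Service. Before creating the HPA it can be worth checking that metrics actually flow, for example with `kubectl top pod` or by passing one of the raw API paths to `kubectl get --raw`. The sketch below only builds those paths; `node_metrics_path` and `pod_metrics_path` are hypothetical helper names.

```python
METRICS_API = "/apis/metrics.k8s.io/v1beta1"  # group/version registered by the APIService object


def node_metrics_path(node=None):
    """Path for the metrics of all nodes, or of a single node."""
    return f"{METRICS_API}/nodes" + (f"/{node}" if node else "")


def pod_metrics_path(namespace, pod=None):
    """Path for the metrics of all Pods in a namespace, or of a single Pod."""
    return f"{METRICS_API}/namespaces/{namespace}/pods" + (f"/{pod}" if pod else "")


print(node_metrics_path())
print(pod_metrics_path("default"))
```

For example, `kubectl get --raw` with the second printed path should return CPU and memory usage for the nginx Pods once metrics-server is Ready.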

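```bash
kubectl apply -f metric-server.yaml
# Create an HPA: keep average CPU utilization (relative to requests) around 50%, with 2 to 10 Pods
kubectl autoscale deployment nginx-deploy --cpu-percent=50 --min=2 --max=10
horizontalpodautoscaler.autoscaling/nginx-deploy autoscaled
kubectl get hpa nginx-deploy   # check the HPA status
```

Start the test: use the following Python code to simulate client connections.

It was originally written to simulate requests over different HTTP versions, but it does the job here.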

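```python
import time

import httpx  # third-party HTTP client: pip install httpx

# NodePort address of each node (values from this lab environment)
TARGETS = ["http://1.1.1.100:30080", "http://1.1.1.80:30080"]

while True:
    # http2=True switches to HTTP/2 (needs `pip install httpx[http2]`); http2=False uses HTTP/1.1
    with httpx.Client(http2=False, verify=False) as client:
        for target in TARGETS:
            try:
                client.get(target)
            except httpx.HTTPError as exc:  # keep generating load even if one node is unreachable
                print(f"{target}: {exc}")
    time.sleep(1)  # adjust freely: the shorter the interval, the higher the CPU usage
```

Observed results: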

bash

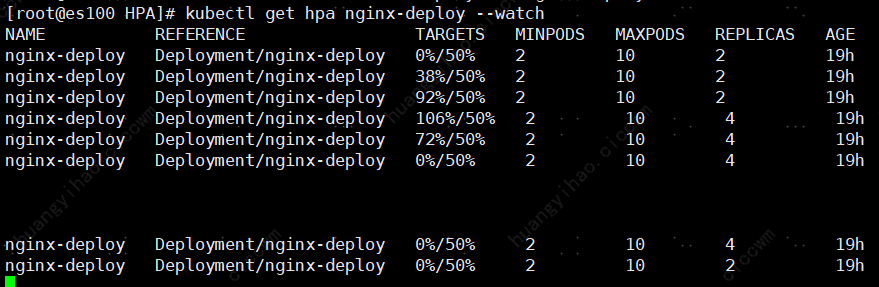

kubectl get hpa nginx-deploy --watch # 观察HPA自动扩容

kubectl get pod

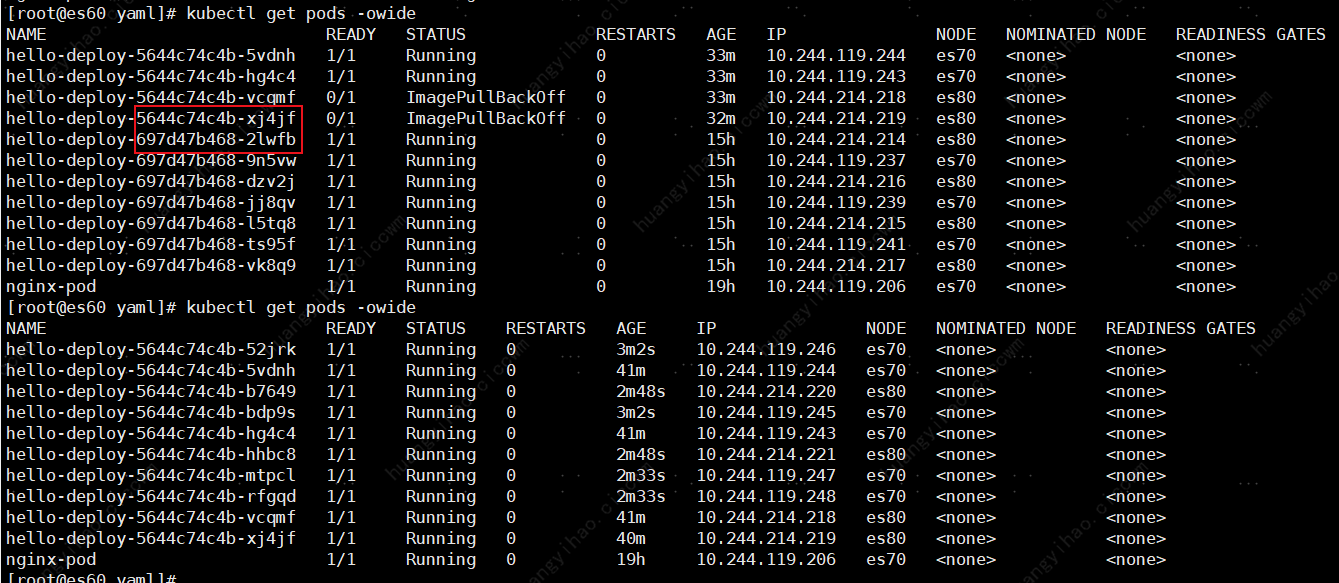

NAME READY STATUS RESTARTS AGE

nginx-deploy-74db4c6d6f-65lzx 1/1 Running 0 16h

nginx-deploy-74db4c6d6f-kzwvn 1/1 Running 0 5m11s

nginx-deploy-74db4c6d6f-xf99c 1/1 Running 0 16h

nginx-deploy-74db4c6d6f-xv9hc 1/1 Running 0 5m11s

kubectl get hpa nginx-deploy --watch

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

nginx-deploy Deployment/nginx-deploy 0%/50% 2 10 2 19h

nginx-deploy Deployment/nginx-deploy 38%/50% 2 10 2 19h

nginx-deploy Deployment/nginx-deploy 92%/50% 2 10 2 19h

nginx-deploy Deployment/nginx-deploy 106%/50% 2 10 4 19h

nginx-deploy Deployment/nginx-deploy 72%/50% 2 10 4 19h

nginx-deploy Deployment/nginx-deploy 0%/50% 2 10 4 19h

nginx-deploy Deployment/nginx-deploy 0%/50% 2 10 4 19h

nginx-deploy Deployment/nginx-deploy 0%/50% 2 10 2 19h
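The Kubernetes HPA documentation gives the core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default) and clamped to the min/max replica counts. A minimal sketch of that rule, with `desired_replicas` as a hypothetical helper: at 92% on 2 replicas it gives ceil(2 × 92 / 50) = ceil(3.68) = 4, consistent with the jump from 2 to 4 above. The sketch deliberately omits the scale-down stabilization window, scaling rate limits and the special handling of unready or newly started Pods, so not every line of the watch output (for example, 4 replicas staying at 4 while utilization reads 0%) follows from this formula alone.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas, max_replicas, tolerance=0.1):
    """Core HPA rule: ceil(current * currentMetric / targetMetric), clamped to [min, max].

    Simplified: ignores the stabilization window, scaling rate limits,
    unready Pods and missing metrics.
    """
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Readings from the watch output above: 2 replicas, target 50%, min 2, max 10
for utilization in (0, 38, 92):
    print(f"{utilization}% -> {desired_replicas(2, utilization, 50, 2, 10)} replicas")
```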