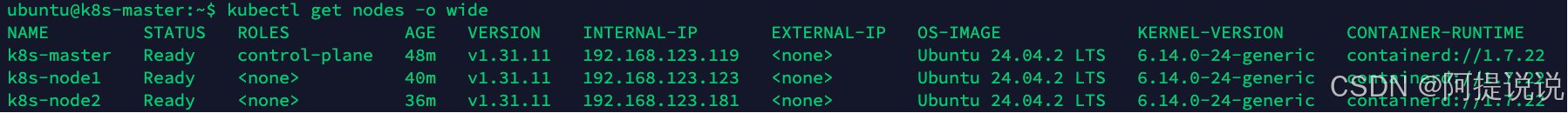

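Prepare three virtual machines

I used VirtualBox on my own computer to create three Ubuntu virtual machines with 1 core and 2 GB of RAM each. Install one VM first; temporarily raising it to 2 cores and 4 GB during installation makes the install much faster. Note that kubeadm's preflight checks expect at least 2 CPUs on the control-plane node, so the master may need to keep 2 cores. Once installation finishes, disable the desktop with the commands below to save memory; from then on we connect over SSH.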

- Install and configure the first VM

shell

sudo systemctl set-default multi-user.target

sudo systemctl reboot

After the reboot, install SSH:

shell

sudo apt install openssh-server

# Check the service status; it should show "Active: active (running)", anything else means it is not running

sudo systemctl status ssh

# If it is not running, start it with:

sudo /etc/init.d/ssh start

Record this machine's IP address:

shell

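ip addr

- Disable swap

By default the kubelet fails to start when swap is enabled on the node, unless swap support is configured explicitly. Here we simply disable swap.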
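Before editing the real `/etc/fstab`, the `sed` expression used in the block below can be rehearsed on a scratch file. This is only a sketch: the sample entries and the `scratch_fstab` variable are invented for illustration.

```shell
# Rehearse the swap edit on a throwaway file instead of /etc/fstab.
scratch_fstab="$(mktemp)"

# Illustrative entries only; a real fstab will look different.
cat > "$scratch_fstab" <<'EOF'
UUID=1111-aaaa / ext4 defaults 0 1
/swap.img none swap sw 0 0
EOF

# Same expression as in this guide: prefix every line mentioning "swap" with '#'.
sed -i '/swap/s/^\(.*\)$/#\1/g' "$scratch_fstab"

# Show the result; only the swap entry should now be commented out.
cat "$scratch_fstab"
```

Because the fstab change only applies after a reboot, `sudo swapoff -a` can be used to turn swap off for the running session as well. Running the expression a second time just adds another `#` to the already commented line.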

shell

# Permanently disable swap by commenting out the swap entries in /etc/fstab; a reboot is needed for this to take effect

sudo sed -i '/swap/s/^\(.*\)$/#\1/g' /etc/fstab

- Clone the remaining VMs

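Use VirtualBox's clone feature to create the other two VMs, choosing to generate new MAC addresses for the network adapters. When cloning finishes, record each VM's IP address.

- Test the SSH connection

Configure the three hosts in your SSH client (I use Termius) and check that each connection works.

- Set the hostnames

Give the three VMs distinct hostnames: one becomes k8s-master, and the other two become k8s-node1 and k8s-node2.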
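Optionally, the three names can also be made resolvable on every machine through `/etc/hosts`. The sketch below only builds the entries in a scratch file. `192.168.123.119` is the master address used later in this guide, while the two node addresses are placeholders to be replaced with the IPs you recorded.

```shell
# Build /etc/hosts-style entries for the three machines in a scratch file.
scratch_hosts="$(mktemp)"

# Each input line is "<ip> <hostname>"; the node IPs are placeholders.
while read -r ip name; do
  printf '%s\t%s\n' "$ip" "$name" >> "$scratch_hosts"
done <<'EOF'
192.168.123.119 k8s-master
192.168.123.120 k8s-node1
192.168.123.121 k8s-node2
EOF

# Review the entries before appending them to the real /etc/hosts.
cat "$scratch_hosts"
```

On a real machine the reviewed entries could then be appended with `sudo tee -a /etc/hosts < "$scratch_hosts"`.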

shell

sudo hostnamectl set-hostname yourhostname

Install the container runtime

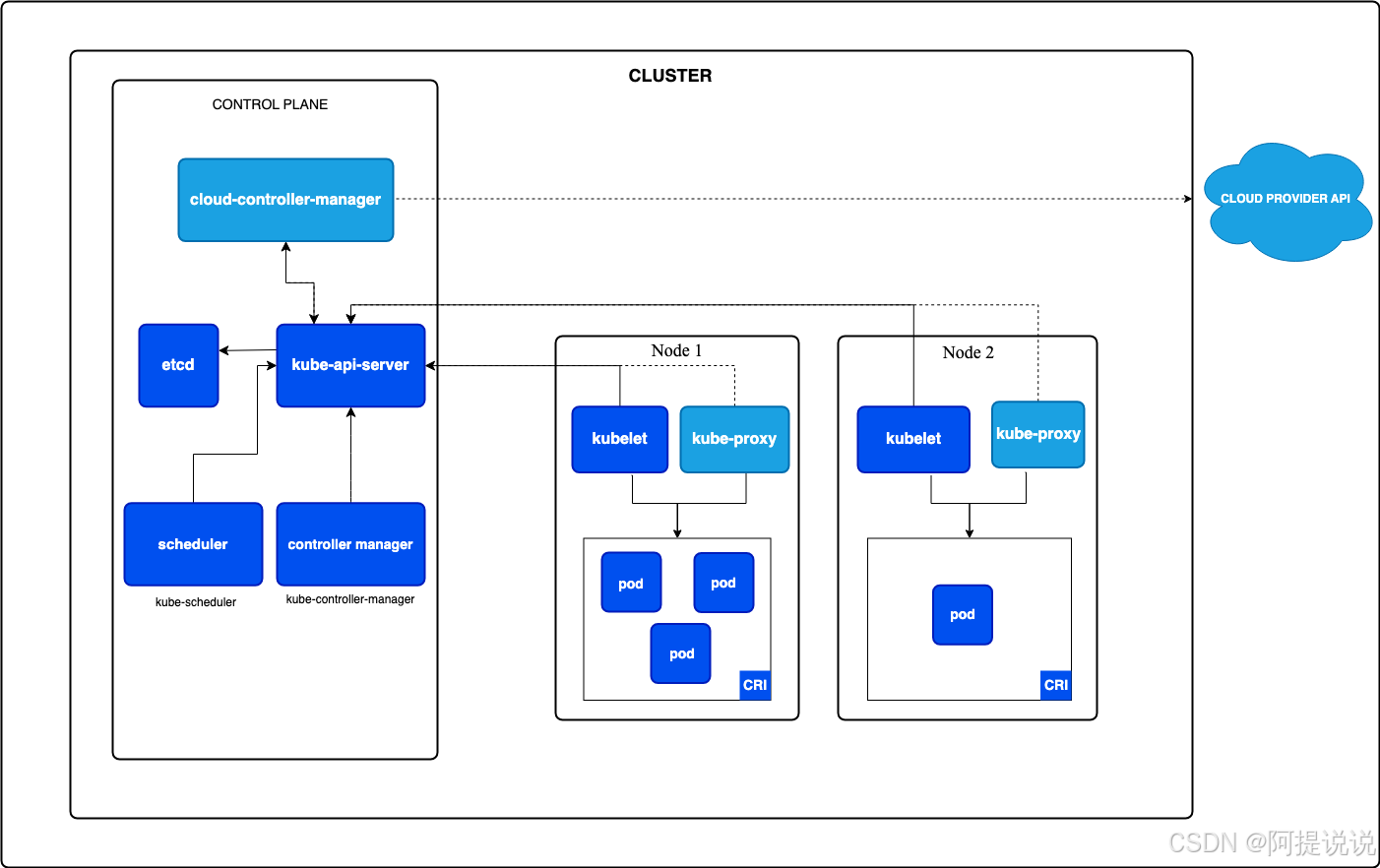

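Kubernetes 1.24 removed the built-in dockershim, so continuing to use Docker requires installing a separate CRI adapter, https://github.com/Mirantis/cri-dockerd

Here we use containerd instead. It was originally developed by Docker (and is now a CNCF project); it is the lower-level runtime that Docker itself builds on.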
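Release archives fetched with `wget` can be checked before they are unpacked. The sketch below rehearses `sha256sum -c` with stand-in files in a scratch directory. It assumes the release page offers a `.sha256sum` file next to the tarball, and every file name in it is a placeholder.

```shell
# Rehearse checksum verification with stand-in files in a scratch directory.
scratch_dir="$(mktemp -d)"

# Stand-in for the downloaded tarball (contents are arbitrary).
printf 'placeholder archive contents\n' > "$scratch_dir/demo.tar.gz"

# Stand-in for the published checksum file ("HASH  FILENAME" format).
(cd "$scratch_dir" && sha256sum demo.tar.gz > demo.tar.gz.sha256sum)

# Verify from the directory holding both files; exit status 0 means the hash matches.
(cd "$scratch_dir" && sha256sum -c demo.tar.gz.sha256sum)
verify_status=$?
echo "verification exit status: $verify_status"
```

With a real download, only the last step is needed: place the tarball and its checksum file in the same directory and run `sha256sum -c` on the checksum file.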

shell

## 1. containerd

# Download the release archive

wget https://github.com/containerd/containerd/releases/download/v1.7.22/containerd-1.7.22-linux-amd64.tar.gz

# Extract the archive into /usr/local

tar Cxzvf /usr/local containerd-1.7.22-linux-amd64.tar.gz

# Download the systemd unit file

wget -O /etc/systemd/system/containerd.service https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

# The file contents are shown below; if the download fails, copy them in by hand

cat /etc/systemd/system/containerd.service

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target dbus.service

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/local/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNPROC=infinity

LimitCORE=infinity

# Comment TasksMax if your systemd version does not supports it.

# Only systemd 226 and above support this version.

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

# Start containerd

systemctl daemon-reload

systemctl enable --now containerd

## 2. Installing runc

wget https://github.com/opencontainers/runc/releases/download/v1.2.0-rc.3/runc.amd64

install -m 755 runc.amd64 /usr/local/sbin/runc

Switch to a mirror registry in China
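The block below changes two things in `config.toml`: the image registry and the `SystemdCgroup` setting. As a sketch, both edits can be tried non-interactively on a scratch file first. The excerpt here is a trimmed, illustrative stand-in for the output of `containerd config default`, and `scratch_cfg` is a made-up variable name.

```shell
# Rehearse both config.toml edits on a throwaway file.
scratch_cfg="$(mktemp)"

# Trimmed, illustrative excerpt; the real default config is much longer.
cat > "$scratch_cfg" <<'EOF'
[plugins."io.containerd.grpc.v1.cri"]
  sandbox_image = "registry.k8s.io/pause:3.8"
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    SystemdCgroup = false
EOF

# Point the sandbox image at the Aliyun mirror (same substitution as in this guide).
sed -i 's/registry.k8s.io/registry.aliyuncs.com\/google_containers/' "$scratch_cfg"

# Flip the cgroup driver setting without opening an editor.
sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$scratch_cfg"

cat "$scratch_cfg"
```

Once the result looks right, the same two `sed` commands can be pointed at `/etc/containerd/config.toml`, followed by a restart of containerd.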

shell

# Create the containerd configuration directory

mkdir -p /etc/containerd

# Generate the default configuration file

containerd config default | sudo tee /etc/containerd/config.toml

# Switch to the Aliyun mirror

sed -i 's/registry.k8s.io/registry.aliyuncs.com\/google_containers/' /etc/containerd/config.toml

# In /etc/containerd/config.toml, set SystemdCgroup to true

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

Registry mirrors (image acceleration)
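The `hosts.toml` files shown below are edited with `vim`. As an alternative sketch, the same kind of file can be written with a here-document. `certs_root` is a scratch directory standing in for `/etc/containerd/certs.d`, and only one of the mirrors from this guide is included to keep the example short.

```shell
# Write a docker.io mirror configuration into a scratch tree.
# certs_root stands in for /etc/containerd/certs.d on a real node.
certs_root="$(mktemp -d)"
mkdir -p "$certs_root/docker.io"

cat > "$certs_root/docker.io/hosts.toml" <<'EOF'
server = "https://docker.io"

[host."https://docker.m.daocloud.io"]
  capabilities = ["pull", "resolve"]
EOF

# Review the generated file.
cat "$certs_root/docker.io/hosts.toml"
```

On a real node the file would go under `/etc/containerd/certs.d/docker.io/`, written with root privileges, and containerd would need a restart afterwards.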

shell

[root@master ~]# vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d" # change this line

[root@master ~]# mkdir -p /etc/containerd/certs.d/docker.io

# Docker Hub mirror

[root@master ~]# vim /etc/containerd/certs.d/docker.io/hosts.toml

[root@master ~]# cat /etc/containerd/certs.d/docker.io/hosts.toml

server ="https://docker.io"

[host."https://docker.m.daocloud.io"]

capabilities =["pull","resolve"]

[host."https://reg-mirror.giniu.com"]

capabilities =["pull","resolve"]

# registry.k8s.io mirror

[root@master ~]# mkdir -p /etc/containerd/certs.d/registry.k8s.io

[root@master ~]# vim /etc/containerd/certs.d/registry.k8s.io/hosts.toml

[root@master ~]# cat /etc/containerd/certs.d/registry.k8s.io/hosts.toml

server ="https://registry.k8s.io"

[host."https://k8s.m.daocloud.io"]

capabilities =["pull","resolve","push"]

# Restart the service (see the documentation referenced above for more mirrors)

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart containerd.service

Install kubeadm, kubelet, and kubectl

Install kubeadm, kubelet, and kubectl on all three machines. I installed version 1.31.

shell

# Set up the package signing key

# If the `/etc/apt/keyrings` directory does not exist, create it before running the curl command (see the commented command below).

# sudo mkdir -p -m 755 /etc/apt/keyrings

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.31/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

# Add the repository

# This overwrites any existing configuration in /etc/apt/sources.list.d/kubernetes.list.

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.31/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Update the package index, install kubelet, kubeadm, and kubectl, and pin their versions

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

# Enable and start the kubelet

sudo systemctl enable --now kubelet

Install kube-apiserver, kube-proxy, kube-controller-manager, and the other control-plane components

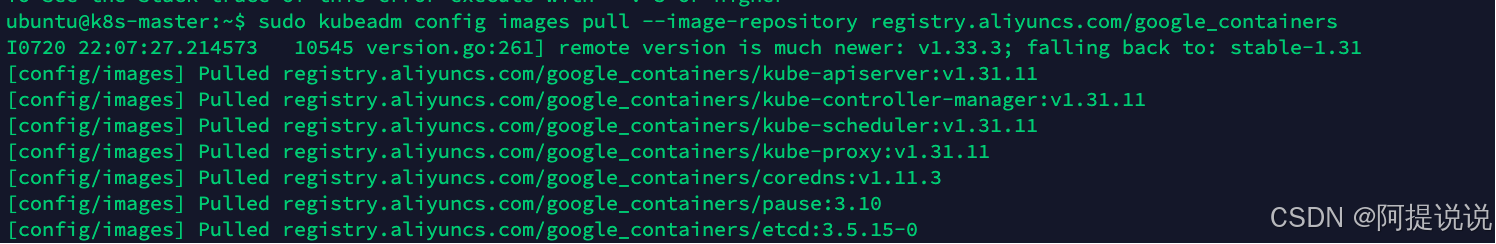

Pull the images. Running this one command pulls every image kubeadm needs:

shell

kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers

Initialize the control plane
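The configuration edits in this section (mirror registry, API server address, and the extra `podSubnet` line) can be rehearsed on a scratch file before touching `/etc/kubernetes/init-default.yaml`. The excerpt below is a trimmed, illustrative stand-in for the output of `kubeadm config print init-defaults`. Using `awk` to append `podSubnet` is my own choice here; the guide adds that line by hand.

```shell
# Rehearse the init configuration edits on a throwaway file.
scratch_yaml="$(mktemp)"

# Trimmed, illustrative excerpt of the default init configuration.
cat > "$scratch_yaml" <<'EOF'
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
  bindPort: 6443
---
imageRepository: registry.k8s.io
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
EOF

# Same two substitutions as in this guide.
sed -i 's/registry.k8s.io/registry.aliyuncs.com\/google_containers/' "$scratch_yaml"
sed -i 's/1.2.3.4/192.168.123.119/' "$scratch_yaml"

# Append podSubnet right after serviceSubnet, keeping the two-space indent.
awk '{ print } /^  serviceSubnet:/ { print "  podSubnet: 192.168.0.0/16" }' \
  "$scratch_yaml" > "$scratch_yaml.new" && mv "$scratch_yaml.new" "$scratch_yaml"

cat "$scratch_yaml"
```

Indentation matters in this file: `podSubnet` has to sit at the same level as `serviceSubnet` under `networking`.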

shell

# Create the initial configuration file

kubeadm config print init-defaults | sudo tee /etc/kubernetes/init-default.yaml

# Switch to the Aliyun mirror

sed -i 's/registry.k8s.io/registry.aliyuncs.com\/google_containers/' /etc/kubernetes/init-default.yaml

# Set the API server address; replace 192.168.123.119 with the IP of your own master node

sed -i 's/1.2.3.4/192.168.123.119/' /etc/kubernetes/init-default.yaml

# File contents

[root@master ~]# cat /etc/kubernetes/init-default.yaml

apiVersion: kubeadm.k8s.io/v1beta4
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.123.119
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  imagePullSerial: true
  name: node
  taints: null
timeouts:
  controlPlaneComponentHealthCheck: 4m0s
  discovery: 5m0s
  etcdAPICall: 2m0s
  kubeletHealthCheck: 4m0s
  kubernetesAPICall: 1m0s
  tlsBootstrap: 5m0s
  upgradeManifests: 5m0s
---
apiServer: {}
apiVersion: kubeadm.k8s.io/v1beta4
caCertificateValidityPeriod: 87600h0m0s
certificateValidityPeriod: 8760h0m0s
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
encryptionAlgorithm: RSA-2048
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.31.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 192.168.0.0/16 # add this line
proxy: {}
scheduler: {}

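# Initialize the master node

# Note: when run with flags only, kubeadm does not read the file above, so podSubnet is not applied;

# to use the file, run `kubeadm init --config /etc/kubernetes/init-default.yaml` instead of the flag form.

kubeadm init --image-repository registry.aliyuncs.com/google_containers

When initialization completes, kubeadm prints: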

text

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.123.119:6443 --token ruyc2h.0e2tbzjopd6jte33 \

--discovery-token-ca-cert-hash sha256:d320cc377ffbf516d017d7ed0ccc9f416013808827d3f8ffe545a57ca5271f4f

Follow these instructions.

As a regular user, run:

shell

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config如果您是root用户,也可以运行

shell

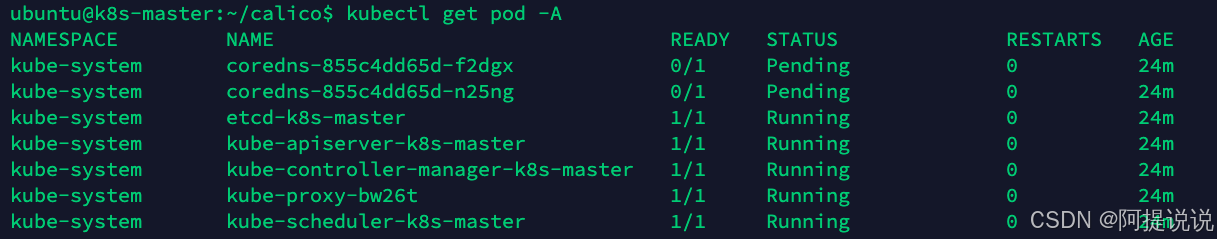

export KUBECONFIG=/etc/kubernetes/admin.conf目前为止容器运行状态:

coredns 一直是Pending状态,需要安装网络一个网络插件

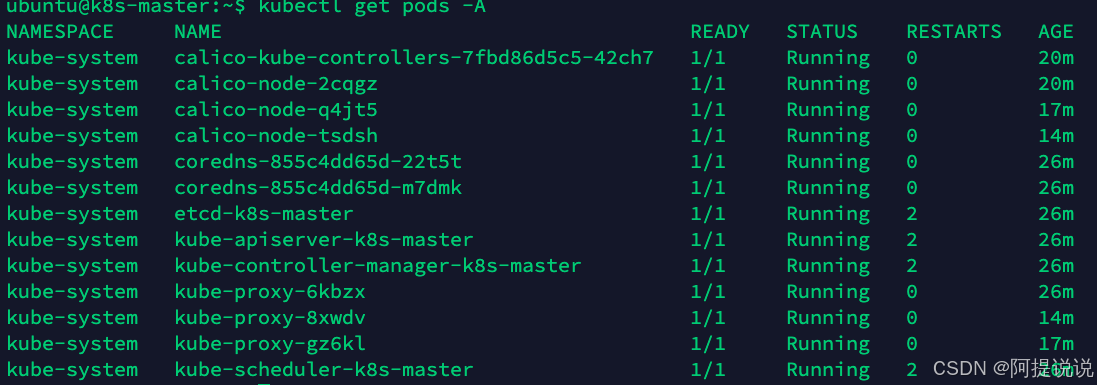

Install a network plugin

I chose Calico.

shell

wget https://calico-v3-25.netlify.app/archive/v3.25/manifests/calico.yaml

kubectl apply -f calico.yaml

On the other VMs, run the following command to join them to the cluster. The command is the one printed by kubeadm init:

shell

kubeadm join 192.168.123.119:6443 --token u0zv3l.pprli0wxqm8zvx5y \
  --discovery-token-ca-cert-hash sha256:7f16be323774a4e2dd41639e3188ce458614bb570899c39d245bc93b9cac13d2

# If the token has expired, generate a new join command on the master

kubeadm token create --print-join-command

If coredns is still Pending and the nodes are still NotReady after installation, restart these services on each node:

sudo systemctl restart kubelet

sudo systemctl restart containerd
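Whether the restart helped can be checked from the master. As a rough sketch, the hypothetical helper `all_nodes_ready` below reads the output of `kubectl get nodes --no-headers` on stdin and succeeds only when every node reports `Ready`. The sample text is invented so that the snippet runs without a cluster.

```shell
# Succeeds only if every input line has STATUS (second column) exactly "Ready".
# Empty input counts as "not ready".
all_nodes_ready() {
  awk '$2 != "Ready" { bad = 1 } END { if (NR == 0 || bad) exit 1 }'
}

# Illustrative sample; on a live cluster pipe in the real command instead:
#   kubectl get nodes --no-headers | all_nodes_ready
sample_nodes='k8s-master   Ready      control-plane   10m   v1.31.0
k8s-node1    NotReady   <none>          2m    v1.31.0'

if printf '%s\n' "$sample_nodes" | all_nodes_ready; then
  echo "all nodes Ready"
else
  echo "some nodes are not Ready yet"
fi
```

With the sample above the check reports that some nodes are not ready, because `k8s-node1` is `NotReady`.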

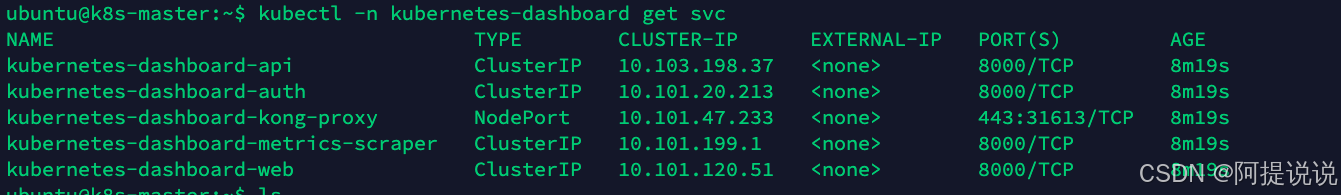

Deploy kubernetes-dashboard

The project currently recommends deploying it with Helm.

shell

# Download Helm

wget https://get.helm.sh/helm-v3.16.1-linux-amd64.tar.gz

tar zxf helm-v3.16.1-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/helm && rm -rf linux-amd64

# Add the kubernetes-dashboard repository

helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

# Deploy a Helm release named `kubernetes-dashboard` using the kubernetes-dashboard chart

helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard

# Change the service type from type: ClusterIP to type: NodePort

kubectl edit svc kubernetes-dashboard-kong-proxy -n kubernetes-dashboard

# List the services

kubectl -n kubernetes-dashboard get svc

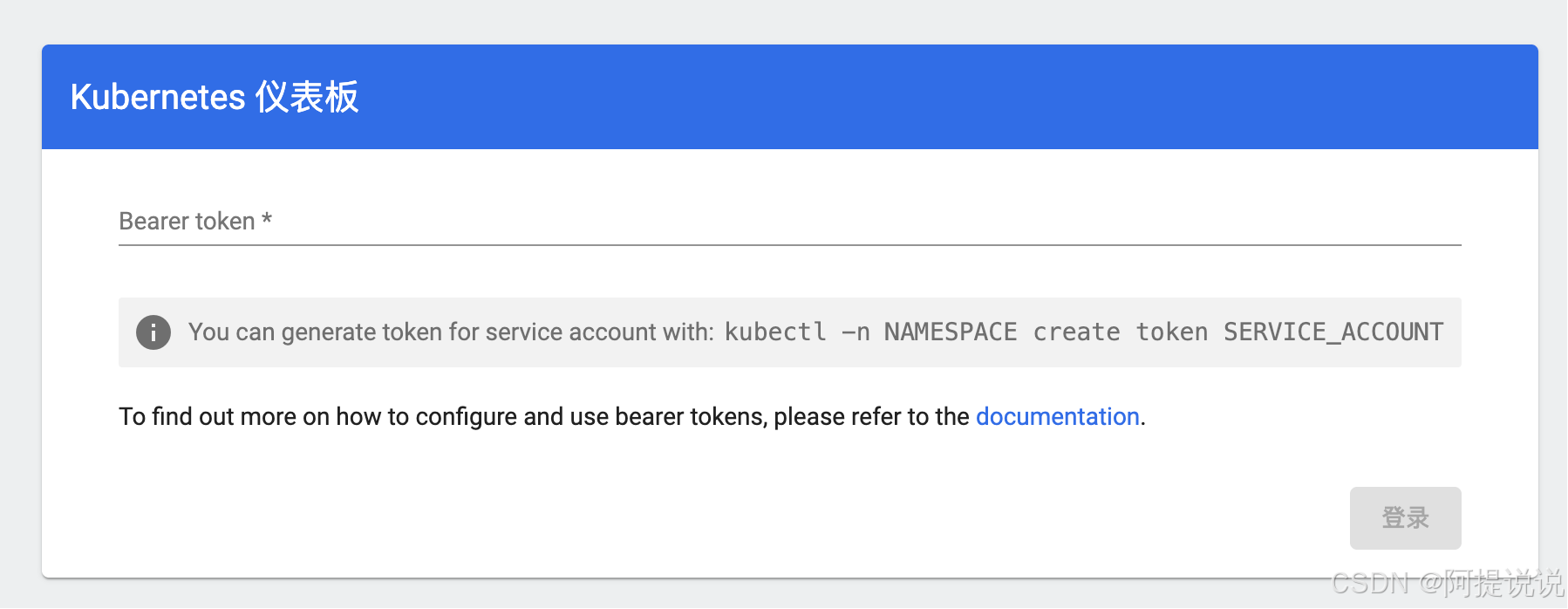

Kubernetes assigned port 31613 automatically. Open https://<any node IP>:<port>, for example https://192.168.123.119:31613
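The port can also be extracted by a script rather than read off the screen. As a small sketch, the hypothetical helper `node_port` below pulls the node port out of a PORT(S) value as printed by `kubectl get svc`. The sample value mirrors the 31613 port from this walkthrough.

```shell
# Extract the node port from a PORT(S) value such as "443:31613/TCP".
node_port() {
  printf '%s\n' "$1" | sed -n 's/^[0-9]*:\([0-9]*\)\/.*$/\1/p'
}

# 192.168.123.119 and 31613 are the values from this walkthrough.
port="$(node_port '443:31613/TCP')"
echo "https://192.168.123.119:${port}"
```

On a live cluster, `kubectl -n kubernetes-dashboard get svc kubernetes-dashboard-kong-proxy -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number directly, and `kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard-kong-proxy -p '{"spec":{"type":"NodePort"}}'` is a non-interactive alternative to `kubectl edit`.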

Create a long-lived token

shell

# Create the ServiceAccount

kubectl -n kubernetes-dashboard create serviceaccount admin-user-permanent

# Bind it to the cluster-admin ClusterRole

kubectl create clusterrolebinding admin-user-permanent \
  --clusterrole=cluster-admin \
  --serviceaccount=kubernetes-dashboard:admin-user-permanent

# Create a long-lived token

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: admin-user-permanent-token
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: admin-user-permanent
type: kubernetes.io/service-account-token
EOF

# Retrieve the token

kubectl -n kubernetes-dashboard get secret admin-user-permanent-token -o jsonpath="{.data.token}" | base64 --decode

# Revoke the token

kubectl -n kubernetes-dashboard delete secret admin-user-permanent-token

Common commands:

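-- List pods

kubectl get pods -A

-- Delete a deployment (here coredns, which removes its pods)

kubectl delete deployment -n kube-system coredns

-- Show pod events

kubectl describe pod -n kube-system coredns-6b59c98dd4-r5fmt

-- Show the last 50 log lines of the matching pods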

kubectl logs -n kube-system -l k8s-app=calico-node --tail=50