Write to MySQL directly through ClickHouse's officially supported MySQL table engine!

1. First, create the MySQL engine table:

```sql
CREATE TABLE saas_analysis.t_page_view_new_for_write
(
    `id` Int64,
    `shop_id` Nullable(Int64),
    `session_id` Nullable(String),
    `client_id` Nullable(String),
    `one_id` Nullable(String),
    `browser_ip` Nullable(String),
    `ip_country` Nullable(String),
    `ua` Nullable(String),
    `referer` Nullable(String),
    `current_url` Nullable(String),
    `page_type` Nullable(String),
    `extra_params` Nullable(String),
    `cloak_strategy` Nullable(String),
    `cloak_risk_type` Nullable(Int32),
    `flow_channel` Nullable(String),
    `first_paint_time` Nullable(Int32),
    `ready_time` Nullable(Int32),
    `create_time` DateTime
)
ENGINE = MySQL('127.0.0.1:3306', 'saas_analysis', 't_page_view_new', 'mysql_rw', 'password', 0, '1')
SETTINGS
    connect_timeout = 30,         -- connection timeout (seconds)
    read_write_timeout = 1800,    -- read/write timeout (seconds)
    connection_pool_size = 16,    -- connection pool size
    connection_max_tries = 3;     -- number of connection retries
```

The two ENGINE arguments after the password mean:

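`replace_query`: a flag (Int) that turns `INSERT INTO` queries into `REPLACE INTO`. If `replace_query = 1`, the query is rewritten this way.

`on_duplicate_clause`: the `ON DUPLICATE KEY on_duplicate_clause` expression appended to the `INSERT` query (String). It cannot be combined with `replace_query = 1`. According to the ClickHouse documentation, this argument is the clause text itself (for example `UPDATE c2 = c2 + 1`, which produces `... ON DUPLICATE KEY UPDATE c2 = c2 + 1`) and is appended verbatim rather than read as an on/off switch, so make sure the value you pass, such as the `'1'` above, forms a valid MySQL clause.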
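To make the two trailing arguments concrete, here is a minimal Python sketch that assembles the statement a MySQL-engine `INSERT` would be forwarded as, following the behaviour described in the ClickHouse documentation: `REPLACE` instead of `INSERT` when `replace_query = 1`, the `on_duplicate_clause` text appended after `ON DUPLICATE KEY`, and an error when both are set. The helpers `build_mysql_insert` and `_sql_literal` are hypothetical, and the naive literal quoting is a simplification; ClickHouse's real implementation differs.

```python
def _sql_literal(value):
    """Render a Python value as a simple MySQL literal (illustration only)."""
    if value is None:
        return "NULL"
    if isinstance(value, (int, float)):
        return str(value)
    # Naive string quoting: double any single quotes. Not a full escaping routine.
    return "'" + str(value).replace("'", "''") + "'"


def build_mysql_insert(table, columns, rows, replace_query=0, on_duplicate_clause=""):
    """Hypothetical helper mirroring the documented replace_query /
    on_duplicate_clause semantics of the ClickHouse MySQL engine."""
    if replace_query and on_duplicate_clause:
        # Documented as an error: the two options are mutually exclusive.
        raise ValueError("replace_query = 1 cannot be combined with on_duplicate_clause")
    verb = "REPLACE" if replace_query else "INSERT"
    cols = ", ".join(f"`{c}`" for c in columns)
    values = ", ".join(
        "(" + ", ".join(_sql_literal(v) for v in row) + ")" for row in rows
    )
    sql = f"{verb} INTO `{table}` ({cols}) VALUES {values}"
    if on_duplicate_clause:
        # The clause text is appended as-is after ON DUPLICATE KEY.
        sql += f" ON DUPLICATE KEY {on_duplicate_clause}"
    return sql + ";"


if __name__ == "__main__":
    print(build_mysql_insert("t", ["c1", "c2"], [("a", 2)],
                             on_duplicate_clause="UPDATE c2 = c2 + 1"))
    # INSERT INTO `t` (`c1`, `c2`) VALUES ('a', 2) ON DUPLICATE KEY UPDATE c2 = c2 + 1;
```

Under this sketch's assumptions, passing `on_duplicate_clause="1"` yields `... ON DUPLICATE KEY 1;`, which is not valid MySQL syntax.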

2. Run the write query in ClickHouse (`INSERT INTO ... SELECT`):

```sql
-- Session-level setting (applies to the current session only)
SET max_execution_time = 1200; -- 1200 seconds (20 minutes)

INSERT INTO saas_analysis.t_page_view_new_for_write
(
    id,
    shop_id,
    session_id,
    client_id,
    one_id,
    browser_ip,
    ip_country,
    ua,
    referer,
    current_url,
    page_type,
    extra_params,
    flow_channel,
    first_paint_time,
    ready_time,
    create_time
)
SELECT
    id,
    shop_id,
    session_id,
    client_id,
    one_id,
    browser_ip,
    ip_country,
    ua,
    referer,
    current_url,
    page_type,
    extra_params,
    flow_channel,
    first_paint_time,
    ready_time,
    create_time
FROM saas_analysis.t_page_view
WHERE create_time >= '2025-06-02 00:00:00' AND create_time < '2025-06-03 00:00:00';
```

Note: because of the data volume, ClickHouse's maximum query execution time (`max_execution_time`) has to be raised.

Otherwise the query fails with: `Estimated query execution time (633.9467681108954 seconds) is too long. Maximum: 600.`

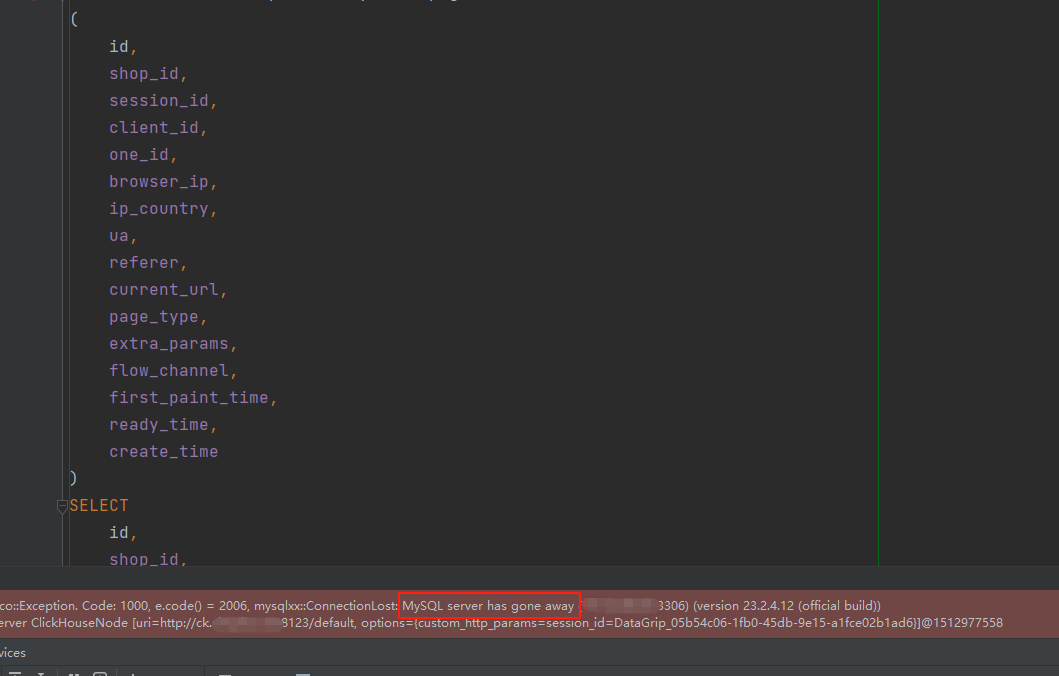
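As far as I understand ClickHouse's execution-speed check, the estimate in this error is a linear extrapolation: the time elapsed so far multiplied by (total rows to read / rows read so far), compared against `max_execution_time` once the query has run past `timeout_before_checking_execution_speed`. The Python sketch below reproduces only that arithmetic under this assumption; the function names and the row counts in the usage example are hypothetical.

```python
def estimated_execution_time(elapsed_seconds, rows_read, total_rows_to_read):
    """Extrapolate total runtime linearly; None if nothing has been read yet."""
    if rows_read <= 0 or total_rows_to_read <= 0:
        return None
    return elapsed_seconds * total_rows_to_read / rows_read


def check_execution_time(elapsed_seconds, rows_read, total_rows_to_read, max_execution_time):
    """Raise if the extrapolated runtime exceeds max_execution_time (0 means no limit)."""
    estimate = estimated_execution_time(elapsed_seconds, rows_read, total_rows_to_read)
    if estimate is not None and max_execution_time and estimate > max_execution_time:
        raise TimeoutError(
            f"Estimated query execution time ({estimate} seconds) is too long. "
            f"Maximum: {max_execution_time}."
        )
    return estimate


if __name__ == "__main__":
    # Hypothetical progress: 1M of 21M rows read after 30 s, i.e. about 630 s in total.
    for limit in (600, 1200):
        try:
            print(limit, check_execution_time(30, 1_000_000, 21_000_000, limit))
        except TimeoutError as err:
            print(limit, err)
```

With a 600-second limit the hypothetical query is rejected up front, while 1200 seconds leaves enough headroom, which is why raising the session setting is enough here.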

3. Other errors encountered during execution:

`MySQL server has gone away`

① Increase the client's socket_timeout to 3000000.

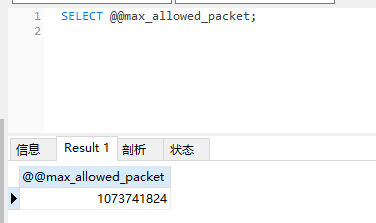

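② Increase the maximum packet size the MySQL server accepts (`max_allowed_packet`):

`SET GLOBAL max_allowed_packet = 1073741824; -- 1 GB, the maximum MySQL allows`

MySQL caps `max_allowed_packet` at 1073741824 bytes (1 GB), so a larger request such as 10737418240 (10 GB) is clamped to that value anyway.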
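To check which value MySQL will actually apply, the following Python sketch encodes the limits the MySQL reference manual lists for `max_allowed_packet`: a minimum of 1024 bytes, a maximum of 1073741824 bytes, and rounding down to a multiple of 1024. The helper name `effective_max_allowed_packet` is hypothetical; MySQL performs this adjustment itself on `SET GLOBAL`.

```python
MIN_MAX_ALLOWED_PACKET = 1024          # documented minimum, in bytes
MAX_MAX_ALLOWED_PACKET = 1073741824    # documented maximum: 1 GB
BLOCK_SIZE = 1024                      # values are rounded down to a multiple of this


def effective_max_allowed_packet(requested_bytes: int) -> int:
    """Return the max_allowed_packet value MySQL would actually apply (sketch)."""
    clamped = max(MIN_MAX_ALLOWED_PACKET, min(requested_bytes, MAX_MAX_ALLOWED_PACKET))
    return clamped - clamped % BLOCK_SIZE


if __name__ == "__main__":
    print(effective_max_allowed_packet(10737418240))  # a 10 GB request
    # 1073741824
```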

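4. Results:

With large data volumes you need a script that runs the import in batches. I used one day of data per batch; a day holds about 20 million rows and took roughly 10 minutes.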
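A minimal Python sketch of such a batching script, assuming one-day batches as described: it only generates one `INSERT INTO ... SELECT` per day over a half-open time window, reusing the table and column names from step 2. The function names and the date range are hypothetical, and sending the statements to ClickHouse (for example with `clickhouse-client --query` or a client library, in a session where `max_execution_time = 1200` is set) is left out.

```python
from datetime import date, timedelta

# Column list copied from the INSERT ... SELECT in step 2.
COLUMNS = [
    "id", "shop_id", "session_id", "client_id", "one_id", "browser_ip",
    "ip_country", "ua", "referer", "current_url", "page_type",
    "extra_params", "flow_channel", "first_paint_time", "ready_time",
    "create_time",
]


def daily_batches(start: date, end: date):
    """Yield (day_start, next_day) pairs that cover [start, end) one day at a time."""
    day = start
    while day < end:
        yield day, day + timedelta(days=1)
        day += timedelta(days=1)


def build_batch_sql(day_start: date, day_end: date) -> str:
    """Build the INSERT ... SELECT for one half-open [day_start, day_end) window."""
    cols = ",\n    ".join(COLUMNS)
    return (
        "INSERT INTO saas_analysis.t_page_view_new_for_write\n"
        f"(\n    {cols}\n)\n"
        f"SELECT\n    {cols}\n"
        "FROM saas_analysis.t_page_view\n"
        f"WHERE create_time >= '{day_start} 00:00:00' "
        f"AND create_time < '{day_end} 00:00:00'"
    )


if __name__ == "__main__":
    # Hypothetical range: two daily batches, 2025-06-02 and 2025-06-03.
    for start, end in daily_batches(date(2025, 6, 2), date(2025, 6, 4)):
        print(build_batch_sql(start, end) + ";\n")
```

Half-open windows (`>=` the day's start, `<` the next day's start) match the `WHERE` clause in step 2, so consecutive batches neither overlap nor skip rows.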