GitHub地址:

https://github.com/gao7025/pytorch_cnn_mnist.git

1.定义模型、损失函数、优化器

- 定义一个卷积神经网络的网络结构,并设置一个优化器(optimizer)和一个损失准则(losscriterion)。创建一个随机梯度下降(stochasticgradientdescent)优化器

python

# 仅定义模型

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(1, 16, kernel_size=4)

self.conv2 = nn.Conv2d(16, 32, kernel_size=4)

self.fc1 = nn.Linear(32 * 4 * 4, 32)

self.fc2 = nn.Linear(32, 10)

def forward(self, x):

x = f.relu(self.conv1(x))

x = f.max_pool2d(x, 2)

x = f.relu(self.conv2(x))

x = f.max_pool2d(x, 2)

x = x.view(-1, 32 * 4 * 4)

x = f.relu(self.fc1(x))

x = self.fc2(x)

return x

python

# 定义模型、损失函数、优化器

from model_class import Net

model = Net()

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model.parameters(), lr=0.01)2.加载已有或下载mnist数据集

python

# 数据加载与预处理

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,)) # MNIST数据集的均值和标准差

])

train_dataset = datasets.MNIST(root='mnist_data', train=True, download=False, transform=transform)

test_dataset = datasets.MNIST(root='mnist_data', train=False, download=False, transform=transform)

train_loader = DataLoader(train_dataset, batch_size=512, shuffle=True)

test_loader = DataLoader(test_dataset, batch_size=512)3.模型训练

python

# 模型训练

def train(model, train_loader, criterion, optimizer, epoch, train_losses):

model.train()

train_loss = 0

for batch_idx, (data, target) in enumerate(train_loader):

optimizer.zero_grad()

output = model(data)

loss = criterion(output, target)

loss.backward()

optimizer.step()

train_loss += loss.item()

if batch_idx % 100 == 0:

print(f'Epoch: {epoch + 1}, Batch: {batch_idx}, Loss: {loss.item():.4f}')

avg_train_loss = train_loss / len(train_loader)

train_losses.append(avg_train_loss)

return avg_train_loss4.模型的验证与测试

python

# 模型验证

def evaluate(model, test_loader, criterion, test_losses, accuracies):

model.eval()

test_loss = 0

correct = 0

# 禁用梯度计算区域

with torch.no_grad():

for data, target in test_loader:

output = model(data)

test_loss += criterion(output, target).item()

pred = output.argmax(dim=1, keepdim=True)

correct += pred.eq(target.view_as(pred)).sum().item()

avg_test_loss = test_loss / len(test_loader)

accuracy = 100. * correct / len(test_loader.dataset)

test_losses.append(avg_test_loss)

accuracies.append(accuracy)

print(

f'\nTest set: Average loss: {avg_test_loss:.4f}, '

f'Accuracy: {correct}/{len(test_loader.dataset)} ({accuracy:.2f}%)\n')

return avg_test_loss, accuracy5.绘制训练与测试的loss曲线和acc曲线并调优

python

def plot_loss_acc(train_losses, test_losses, accuracies):

"""

针对训练过程绘制loss曲线和acc曲线

Parameters

----------

train_losses : list_like

train loss list。

test_losses : list_like

test loss list。

accuracies : list_like

accuracies list。

"""

plt.figure(figsize=(10, 4))

plt.subplot(1, 2, 1)

plt.plot(train_losses, 'o-', label='Training Loss')

plt.plot(test_losses, 's-', label='Test Loss')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.title('Training and Test Loss')

plt.legend()

# plt.grid(True)

plt.subplot(1, 2, 2)

plt.plot(accuracies, 'd-', color='green')

plt.xlabel('Epoch')

plt.ylabel('Accuracy (%)')

plt.title('Test Accuracy')

# plt.grid(True)

plt.tight_layout()

timestamp = str(dt.now().strftime("%y%m%d%H%M%S"))

plt.savefig('./results/training_metrics_{time}.png'.format(time=timestamp))

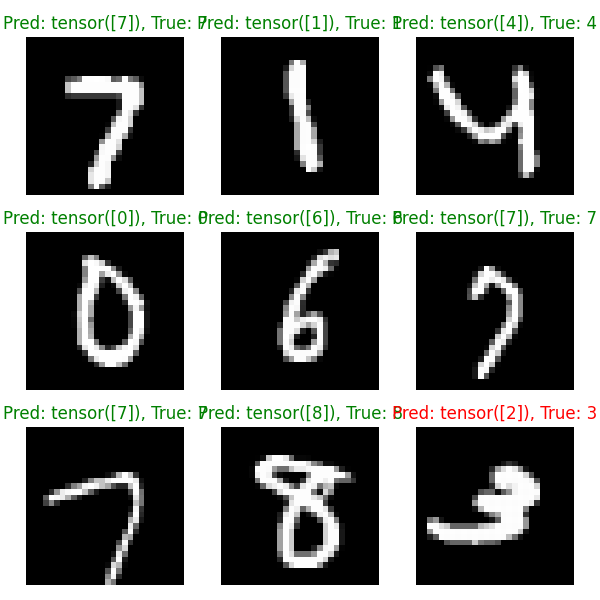

plt.show()6.模型预测与结果可视化

- 加载训练好的模型和参数,并将模型设置为评估模式

python

from model_class import Net

model = Net()

model.load_state_dict(torch.load('mnist_cnn_model_params.pth'))

model.eval()- 加载测试集数据

python

# 测试集数据加载

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,)) # MNIST数据集的均值和标准差

])

test_dataset = datasets.MNIST(root='mnist_data', train=False, download=False, transform=transform)

test_loader = DataLoader(test_dataset, batch_size=512)- 预测结果,并将部分结果可视化展示

python

# 预测函数

def predict_random_samples(model, test_dataset, num_samples=9, max_show_num=16):

"""随机选择样本进行预测并可视化结果"""

model.eval()

indices = np.random.choice(len(test_dataset), num_samples, replace=False)

# 计算子图网格的行数和列数

cols = int(np.ceil(np.sqrt(min(num_samples, max_show_num)))) # 向上取整

rows = int(np.ceil(min(num_samples, max_show_num) / cols))

# 创建相应大小的子图网格

fig, axes = plt.subplots(rows, cols, figsize=(cols * 2, rows * 2))

# 如果只有一个子图,axes会是一个单独的对象,需要将其转为数组

if num_samples == 1:

axes = np.array([axes])

else:

# 将多维数组展平为一维数组

axes = axes.flatten()

# fig, axes = plt.subplots(3, 3, figsize=(10, 6))

# axes = axes.flatten()

correct = 0

with torch.no_grad():

for i, idx in enumerate(indices):

image, true_label = test_dataset[idx]

output = model(image.unsqueeze(0))

pred = output.argmax(dim=1)

correct += pred.eq(true_label).sum().item()

# 反标准化以便正确显示图像

img = image.squeeze().numpy() * 0.3081 + 0.1307

# 只处理有效的子图索引

if i < len(axes) and i <= max_show_num:

axes[i].imshow(img, cmap='gray')

axes[i].set_title(f'Pred: {pred}, True: {true_label}',

color=('green' if pred == true_label else 'red'))

axes[i].axis('off')

# 隐藏多余的子图

for i in range(num_samples, len(axes)):

axes[i].axis('off')

accuracy = 100. * correct / num_samples

print(

f'Accuracy: {correct}/{num_samples} ({accuracy:.2f}%)\n')

plt.tight_layout()

timestamp = str(dt.now().strftime("%y%m%d%H%M%S"))

plt.savefig('./results/prediction_samples_{time}.png'.format(time=timestamp))

plt.show()

print(f"预测样本已保存为 'prediction_samples_{timestamp}.png'")可视化结果