1. Install Docker

# Install Docker

https://docs.docker.com/get-docker/

# Install Docker Compose

https://docs.docker.com/compose/install/

# Install Docker on CentOS

https://mp.weixin.qq.com/s/nHNPbCmdQs3E5x1QBP-ueA

2. Install Coze Studio

See: https://github.com/coze-dev/coze-studio/blob/main/README.zh_CN.md

Installation requirements: see the README linked above.
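Docker and Docker Compose from step 1 are prerequisites, and the commands in this section also call wget and tar. The following is a minimal sketch for checking that these tools are on PATH before continuing. The tool list is an assumption drawn from the commands used in this guide, and `check_tools` is a hypothetical helper name, not part of Coze Studio.

```shell
# check_tools TOOL...: report whether each tool is on PATH.
# Returns 0 only when every tool was found.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Tool list assumed from the commands used later in this guide.
check_tools docker docker-compose wget tar || echo "install the missing tools before continuing"
```

On installations that ship Compose as a Docker plugin, `docker-compose` may be reported as missing even though `docker compose` works.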

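Create a directory:

mkdir coze-studio

Change into it:

cd coze-studio

Download the release archive:

wget https://github.com/coze-dev/coze-studio/archive/refs/tags/v0.2.4.tar.gz

Extract it:

tar -xf v0.2.4.tar.gz

Change into the extracted directory:

cd coze-studio-0.2.4/

Model template directory: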

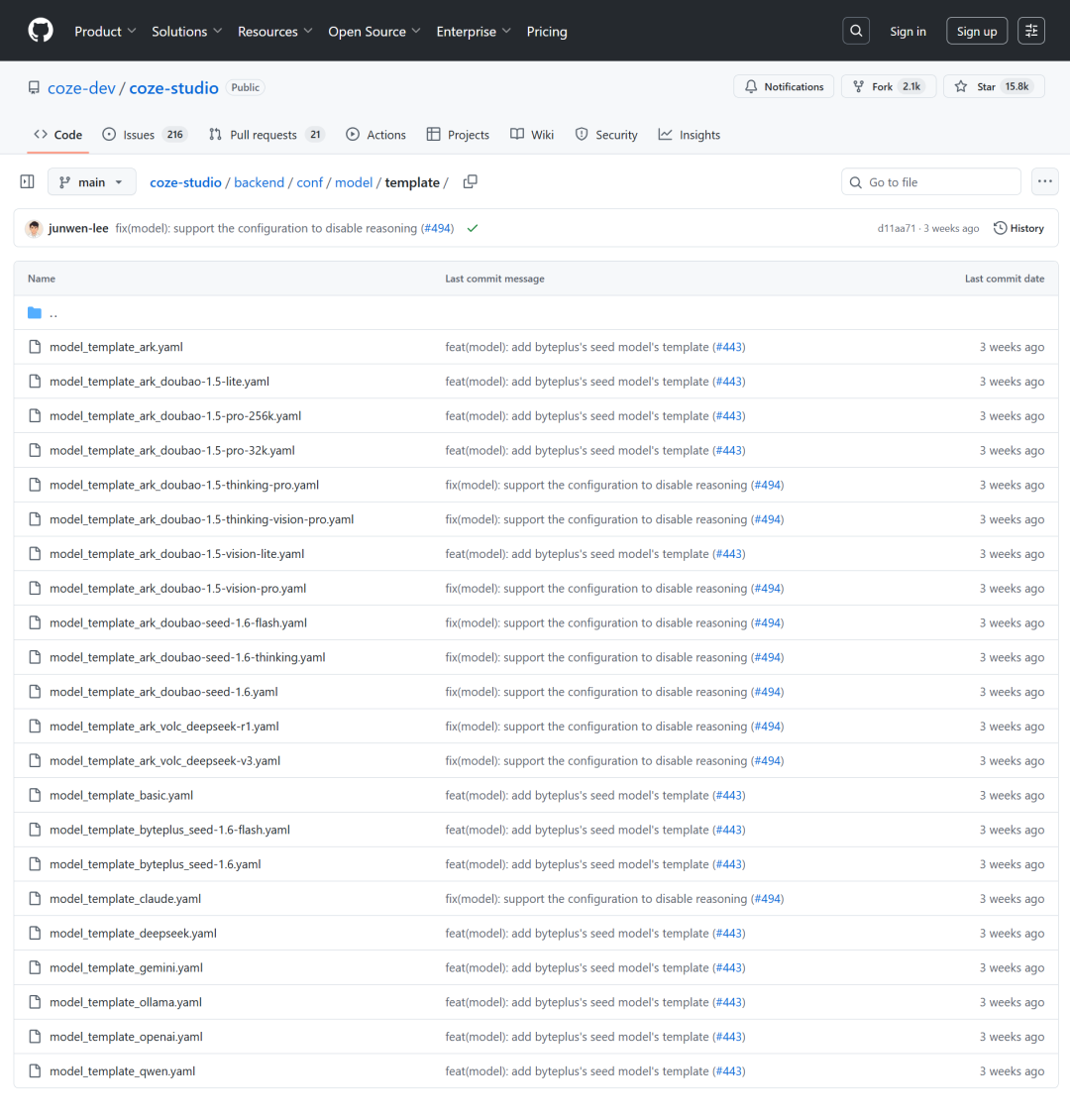

See: https://github.com/coze-dev/coze-studio/tree/main/backend/conf/model/template
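Plain `cp` overwrites an existing target, so re-running the copy step that follows would discard an API key already written to a file under `backend/conf/model/`. The following is a minimal sketch of a guarded copy. `copy_template` is a hypothetical helper, and the paths are the ones used in this guide.

```shell
# copy_template ROOT NAME: copy model_template_NAME.yaml to NAME.yaml under
# ROOT/backend/conf/model, but never overwrite a file that already exists.
copy_template() {
  root=$1
  name=$2
  src="$root/backend/conf/model/template/model_template_${name}.yaml"
  dst="$root/backend/conf/model/${name}.yaml"
  if [ ! -f "$src" ]; then
    echo "no such template: $src" >&2
    return 1
  fi
  if [ -f "$dst" ]; then
    echo "kept existing: $dst"
    return 0
  fi
  cp "$src" "$dst" && echo "created: $dst"
}

# Usage from the extracted source directory:
#   copy_template . gemini
#   copy_template . deepseek
```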

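Copy the model configuration templates:

# Choose the template that matches your model provider; this one is for gemini

cp backend/conf/model/template/model_template_gemini.yaml \
  backend/conf/model/gemini.yaml

# Choose the template that matches your model provider; this one is for deepseek

cp backend/conf/model/template/model_template_deepseek.yaml \
  backend/conf/model/deepseek.yaml

View the model configuration templates: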

id: 67010

name: Gemini-2.5-Flash

icon_uri: default_icon/gemini_v2.png

icon_url: ""

description:

zh: gemini 模型简介

en: gemini model description

default_parameters:

- name: temperature

label:

zh: 生成随机性

en: Temperature

desc:

zh: '- **temperature**: 调高温度会使得模型的输出更多样性和创新性,反之,降低温度会使输出内容更加遵循指令要求但减少多样性。建议不要与"Top p"同时调整。'

en: '**Temperature**:\n\n- When you increase this value, the model outputs more diverse and innovative content; when you decrease it, the model outputs less diverse content that strictly follows the given instructions.\n- It is recommended not to adjust this value with \"Top p\" at the same time.'

type: float

min: "0"

max: "1"

default_val:

balance: "0.8"

creative: "1"

default_val: "1.0"

precise: "0.3"

precision: 1

options: []

style:

widget: slider

label:

zh: 生成多样性

en: Generation diversity

- name: max_tokens

label:

zh: 最大回复长度

en: Response max length

desc:

zh: 控制模型输出的Tokens 长度上限。通常 100 Tokens 约等于 150 个中文汉字。

en: You can specify the maximum length of the tokens output through this value. Typically, 100 tokens are approximately equal to 150 Chinese characters.

type: int

min: "1"

max: "4096"

default_val:

default_val: "4096"

options: []

style:

widget: slider

label:

zh: 输入及输出设置

en: Input and output settings

- name: top_p

label:

zh: Top P

en: Top P

desc:

zh: '- **Top p 为累计概率**: 模型在生成输出时会从概率最高的词汇开始选择,直到这些词汇的总概率累积达到Top p 值。这样可以限制模型只选择这些高概率的词汇,从而控制输出内容的多样性。建议不要与"生成随机性"同时调整。'

en: '**Top P**:\n\n- An alternative to sampling with temperature, where only tokens within the top p probability mass are considered. For example, 0.1 means only the top 10% probability mass tokens are considered.\n- We recommend altering this or temperature, but not both.'

type: float

min: "0"

max: "1"

default_val:

default_val: "0.7"

precision: 2

options: []

style:

widget: slider

label:

zh: 生成多样性

en: Generation diversity

- name: response_format

label:

zh: 输出格式

en: Response format

desc:

zh: '- **文本**: 使用普通文本格式回复\n- **JSON**: 将引导模型使用JSON格式输出'

en: '**Response Format**:\n\n- **JSON**: Uses JSON format for replies'

type: int

min: ""

max: ""

default_val:

default_val: "0"

options:

- label: Text

value: "0"

- label: JSON

value: "2"

style:

widget: radio_buttons

label:

zh: 输入及输出设置

en: Input and output settings

meta:

protocol: gemini

capability:

function_call: true

input_modal:

- text

- image

- audio

- video

input_tokens: 1048576

json_mode: true

max_tokens: 1114112

output_modal:

- text

output_tokens: 65536

prefix_caching: true

reasoning: true

prefill_response: true

conn_config:

base_url: "https://generativelanguage.googleapis.com/"

api_key: ""

timeout: 0s

model: gemini-2.5-flash

temperature: 0.7

frequency_penalty: 0

presence_penalty: 0

max_tokens: 4096

top_p: 1

top_k: 0

stop: []

gemini:

backend: 0

project: ""

location: ""

api_version: ""

headers:

key_1:

- val_1

- val_2

timeout_ms: 0

include_thoughts: true

thinking_budget: null

custom: {}

status: 0

id: 66010

name: DeepSeek-V3

icon_uri: default_icon/deepseek_v2.png

icon_url: ""

description:

zh: deepseek 模型简介

en: deepseek model description

default_parameters:

- name: temperature

label:

zh: 生成随机性

en: Temperature

desc:

zh: '- **temperature**: 调高温度会使得模型的输出更多样性和创新性,反之,降低温度会使输出内容更加遵循指令要求但减少多样性。建议不要与"Top p"同时调整。'

en: '**Temperature**:\n\n- When you increase this value, the model outputs more diverse and innovative content; when you decrease it, the model outputs less diverse content that strictly follows the given instructions.\n- It is recommended not to adjust this value with \"Top p\" at the same time.'

type: float

min: "0"

max: "1"

default_val:

balance: "0.8"

creative: "1"

default_val: "1.0"

precise: "0.3"

precision: 1

options: []

style:

widget: slider

label:

zh: 生成随机性

en: Generation diversity

- name: max_tokens

label:

zh: 最大回复长度

en: Response max length

desc:

zh: 控制模型输出的Tokens 长度上限。通常 100 Tokens 约等于 150 个中文汉字。

en: You can specify the maximum length of the tokens output through this value. Typically, 100 tokens are approximately equal to 150 Chinese characters.

type: int

min: "1"

max: "4096"

default_val:

default_val: "4096"

options: []

style:

widget: slider

label:

zh: 输入及输出设置

en: Input and output settings

- name: response_format

label:

zh: 输出格式

en: Response format

desc:

zh: '- **文本**: 使用普通文本格式回复\n- **JSON**: 将引导模型使用JSON格式输出'

en: '**Response Format**:\n\n- **Text**: Replies in plain text format\n- **Markdown**: Uses Markdown format for replies\n- **JSON**: Uses JSON format for replies'

type: int

min: ""

max: ""

default_val:

default_val: "0"

options:

- label: Text

value: "0"

- label: JSON Object

value: "1"

style:

widget: radio_buttons

label:

zh: 输入及输出设置

en: Input and output settings

meta:

protocol: deepseek

capability:

function_call: false

input_modal:

- text

input_tokens: 128000

json_mode: false

max_tokens: 128000

output_modal:

- text

output_tokens: 16384

prefix_caching: false

reasoning: false

prefill_response: false

conn_config:

base_url: "https://api.deepseek.com"

api_key: "YOUR_DEEPSEEK_API_KEY"

timeout: 0s

model: "DeepSeek-R1-0528"

temperature: 0.7

frequency_penalty: 0

presence_penalty: 0

max_tokens: 4096

top_p: 1

top_k: 0

stop: []

deepseek:

response_format_type: text

custom: {}

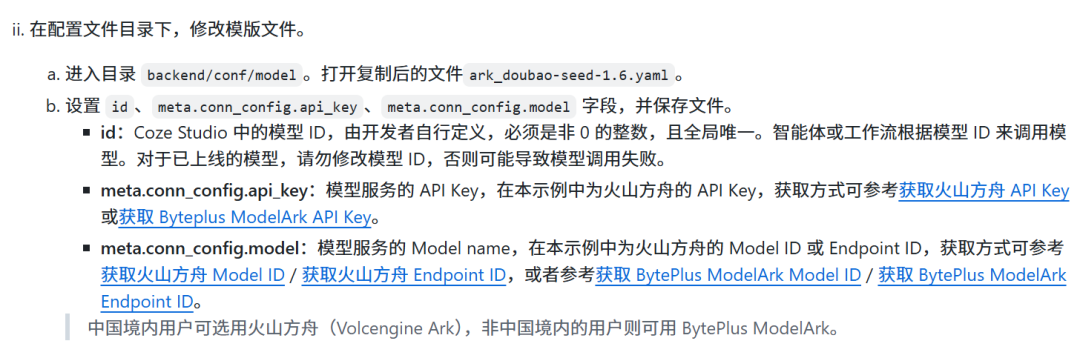

status: 0修改模型配置模版:

Note:

Set the id, meta.conn_config.api_key, and meta.conn_config.model fields.
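The three fields can be edited by hand. For repeatable setups, the following minimal sketch rewrites them with sed instead. `set_field` is a hypothetical helper, the sample file is a cut-down stand-in for a copied template, and the id `100001` and the key `YOUR_API_KEY` are placeholder values, not recommendations.

```shell
# set_field FILE KEY VALUE: replace the value on every line of the form
# "<indent>KEY: ..." while keeping the indentation. VALUE must not contain
# "|" or "&", which are special in the sed replacement used here.
set_field() {
  file=$1
  key=$2
  value=$3
  tmp="${file}.tmp"
  sed -E "s|^([[:space:]]*${key}:).*|\\1 ${value}|" "$file" > "$tmp" && mv "$tmp" "$file"
}

# Stand-in for backend/conf/model/deepseek.yaml (only the relevant keys).
demo=$(mktemp)
cat > "$demo" <<'EOF'
id: 66010
meta:
  conn_config:
    api_key: ""
    model: ""
EOF

set_field "$demo" id 100001
set_field "$demo" api_key '"YOUR_API_KEY"'
set_field "$demo" model '"DeepSeek-R1-0528"'
cat "$demo"
```

Because the match is line-based rather than YAML-aware, a key name that appears at several nesting levels (for example `max_tokens`) would be rewritten everywhere. The three keys above each appear once in the listings shown in this guide.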

# Where to get a gemini API key:

https://aistudio.google.com/apikey

# Where to get a deepseek API key:

https://platform.deepseek.com/api_keys

https://api-docs.deepseek.com/zh-cn/

Change into the docker directory:

cd docker

Copy the .env.example file:

cp .env.example .env

View the .env file:

See: https://github.com/coze-dev/coze-studio/blob/main/docker/.env.example
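The listing that follows ends with three `PLUGIN_AES_*` secrets whose sample values are published in the repository, and its comments require each secret to be 16, 24 or 32 bytes long. On a fresh install it may be worth replacing them. The following is a minimal sketch that prints replacement lines. `random_secret` is a hypothetical helper, and the choice of 32 hexadecimal characters is an assumption that fits the stated size rule.

```shell
# random_secret N: print N random hexadecimal characters (N ASCII bytes).
random_secret() {
  n=$1
  # N random bytes give 2*N hex digits; keep only the first N.
  head -c "$n" /dev/urandom | od -An -tx1 | tr -d ' \n' | cut -c "1-$n"
}

# Print export lines that can be pasted over the sample values in .env.
for name in PLUGIN_AES_AUTH_SECRET PLUGIN_AES_STATE_SECRET PLUGIN_AES_OAUTH_TOKEN_SECRET; do
  echo "export ${name}='$(random_secret 32)'"
done
```

Changing these values after plugins have stored encrypted data would presumably make that data unreadable, so this is best done before the first start.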

# Server

export LISTEN_ADDR=":8888"

export LOG_LEVEL="debug"

export MAX_REQUEST_BODY_SIZE=1073741824

export SERVER_HOST="http://localhost${LISTEN_ADDR}"

export MINIO_PROXY_ENDPOINT=""

export USE_SSL="0"

export SSL_CERT_FILE=""

export SSL_KEY_FILE=""

# MySQL

export MYSQL_ROOT_PASSWORD=root

export MYSQL_DATABASE=opencoze

export MYSQL_USER=coze

export MYSQL_PASSWORD=coze123

export MYSQL_HOST=mysql

export MYSQL_PORT=3306

export MYSQL_DSN="${MYSQL_USER}:${MYSQL_PASSWORD}@tcp(${MYSQL_HOST}:${MYSQL_PORT})/${MYSQL_DATABASE}?charset=utf8mb4&parseTime=True"

export ATLAS_URL="mysql://${MYSQL_USER}:${MYSQL_PASSWORD}@${MYSQL_HOST}:${MYSQL_PORT}/${MYSQL_DATABASE}?charset=utf8mb4&parseTime=True"

# Redis

export REDIS_AOF_ENABLED=no

export REDIS_IO_THREADS=4

export ALLOW_EMPTY_PASSWORD=yes

export REDIS_ADDR="redis:6379"

export REDIS_PASSWORD=""

# This Upload component used in Agent / workflow File/Image With LLM , support the component of imagex / storage

# default: storage, use the settings of storage component

# if imagex, you must finish the configuration of <VolcEngine ImageX>

export FILE_UPLOAD_COMPONENT_TYPE="storage"

# VolcEngine ImageX

export VE_IMAGEX_AK=""

export VE_IMAGEX_SK=""

export VE_IMAGEX_SERVER_ID=""

export VE_IMAGEX_DOMAIN=""

export VE_IMAGEX_TEMPLATE=""

export VE_IMAGEX_UPLOAD_HOST="https://imagex.volcengineapi.com"

# Storage component

export STORAGE_TYPE="minio" # minio / tos / s3

export STORAGE_UPLOAD_HTTP_SCHEME="http" # http / https. If coze studio website is https, you must set it to https

export STORAGE_BUCKET="opencoze"

# MinIO

export MINIO_ROOT_USER=minioadmin

export MINIO_ROOT_PASSWORD=minioadmin123

export MINIO_DEFAULT_BUCKETS=milvus

export MINIO_AK=$MINIO_ROOT_USER

export MINIO_SK=$MINIO_ROOT_PASSWORD

export MINIO_ENDPOINT="minio:9000"

export MINIO_API_HOST="http://${MINIO_ENDPOINT}"

# TOS

export TOS_ACCESS_KEY=

export TOS_SECRET_KEY=

export TOS_ENDPOINT=https://tos-cn-beijing.volces.com

export TOS_BUCKET_ENDPOINT=https://opencoze.tos-cn-beijing.volces.com

export TOS_REGION=cn-beijing

# S3

export S3_ACCESS_KEY=

export S3_SECRET_KEY=

export S3_ENDPOINT=

export S3_BUCKET_ENDPOINT=

export S3_REGION=

# Elasticsearch

export ES_ADDR="http://elasticsearch:9200"

export ES_VERSION="v8"

export ES_USERNAME=""

export ES_PASSWORD=""

export COZE_MQ_TYPE="nsq" # nsq / kafka / rmq

export MQ_NAME_SERVER="nsqd:4150"

# RocketMQ

export RMQ_ACCESS_KEY=""

export RMQ_SECRET_KEY=""

# Settings for VectorStore

# VectorStore type: milvus / vikingdb

# If you want to use vikingdb, you need to set up the vikingdb configuration.

export VECTOR_STORE_TYPE="milvus"

# milvus vector store

export MILVUS_ADDR="milvus:19530"

# vikingdb vector store for Volcengine

export VIKING_DB_HOST=""

export VIKING_DB_REGION=""

export VIKING_DB_AK=""

export VIKING_DB_SK=""

export VIKING_DB_SCHEME=""

export VIKING_DB_MODEL_NAME="" # if vikingdb model name is not set, you need to set Embedding settings

# Settings for Embedding

# The Embedding model relied on by knowledge base vectorization does not need to be configured

# if the vector database comes with built-in Embedding functionality (such as VikingDB). Currently,

# Coze Studio supports four access methods: openai, ark, ollama, and custom http. Users can simply choose one of them when using

# embedding type: openai / ark / ollama / http

export EMBEDDING_TYPE="ark"

export EMBEDDING_MAX_BATCH_SIZE=100

# openai embedding

export OPENAI_EMBEDDING_BASE_URL="" # (string, required) OpenAI embedding base_url

export OPENAI_EMBEDDING_MODEL="" # (string, required) OpenAI embedding model

export OPENAI_EMBEDDING_API_KEY="" # (string, required) OpenAI embedding api_key

export OPENAI_EMBEDDING_BY_AZURE=false # (bool, optional) OpenAI embedding by_azure

export OPENAI_EMBEDDING_API_VERSION="" # (string, optional) OpenAI embedding azure api version

export OPENAI_EMBEDDING_DIMS=1024 # (int, required) OpenAI embedding dimensions

export OPENAI_EMBEDDING_REQUEST_DIMS=1024 # (int, optional) OpenAI embedding dimensions in requests, need to be empty if api doesn't support specifying dimensions.

# ark embedding by volcengine / byteplus

export ARK_EMBEDDING_MODEL="" # (string, required) Ark embedding model

export ARK_EMBEDDING_API_KEY="" # (string, required) Ark embedding api_key

export ARK_EMBEDDING_DIMS="2048" # (int, required) Ark embedding dimensions

export ARK_EMBEDDING_BASE_URL="" # (string, required) Ark embedding base_url

export ARK_EMBEDDING_API_TYPE="" # (string, optional) Ark embedding api type, should be "text_api" / "multi_modal_api". Default "text_api".

# ollama embedding

export OLLAMA_EMBEDDING_BASE_URL="" # (string, required) Ollama embedding base_url

export OLLAMA_EMBEDDING_MODEL="" # (string, required) Ollama embedding model

export OLLAMA_EMBEDDING_DIMS="" # (int, required) Ollama embedding dimensions

# http embedding

export HTTP_EMBEDDING_ADDR="" # (string, required) http embedding address

export HTTP_EMBEDDING_DIMS=1024 # (string, required) http embedding dimensions

# Settings for OCR

# If you want to use the OCR-related functions in the knowledge base feature, you need to set up the OCR configuration.

# Currently, Coze Studio has built-in Volcano OCR.

# Supported OCR types: `ve`, `paddleocr`

export OCR_TYPE="ve"

# ve ocr

export VE_OCR_AK=""

export VE_OCR_SK=""

# paddleocr ocr

export PADDLEOCR_OCR_API_URL=""

# Settings for Model

# Model for agent & workflow

# add suffix number to add different models

export MODEL_PROTOCOL_0="ark" # protocol

export MODEL_OPENCOZE_ID_0="100001" # id for record

export MODEL_NAME_0="" # model name for show

export MODEL_ID_0="" # model name for connection

export MODEL_API_KEY_0="" # model api key

export MODEL_BASE_URL_0="" # model base url

# Model for knowledge nl2sql, messages2query (rewrite), image annotation, workflow knowledge recall

# add prefix to assign specific model, downgrade to default config when prefix is not configured:

# 1. nl2sql: NL2SQL_ (e.g. NL2SQL_BUILTIN_CM_TYPE)

# 2. messages2query: M2Q_ (e.g. M2Q_BUILTIN_CM_TYPE)

# 3. image annotation: IA_ (e.g. IA_BUILTIN_CM_TYPE)

# 4. workflow knowledge recall: WKR_ (e.g. WKR_BUILTIN_CM_TYPE)

# supported chat model type: openai / ark / deepseek / ollama / qwen / gemini

export BUILTIN_CM_TYPE="ark"

# type openai

export BUILTIN_CM_OPENAI_BASE_URL=""

export BUILTIN_CM_OPENAI_API_KEY=""

export BUILTIN_CM_OPENAI_BY_AZURE=false

export BUILTIN_CM_OPENAI_MODEL=""

# type ark

export BUILTIN_CM_ARK_API_KEY=""

export BUILTIN_CM_ARK_MODEL=""

export BUILTIN_CM_ARK_BASE_URL=""

# type deepseek

export BUILTIN_CM_DEEPSEEK_BASE_URL=""

export BUILTIN_CM_DEEPSEEK_API_KEY=""

export BUILTIN_CM_DEEPSEEK_MODEL=""

# type ollama

export BUILTIN_CM_OLLAMA_BASE_URL=""

export BUILTIN_CM_OLLAMA_MODEL=""

# type qwen

export BUILTIN_CM_QWEN_BASE_URL=""

export BUILTIN_CM_QWEN_API_KEY=""

export BUILTIN_CM_QWEN_MODEL=""

# type gemini

export BUILTIN_CM_GEMINI_BACKEND=""

export BUILTIN_CM_GEMINI_API_KEY=""

export BUILTIN_CM_GEMINI_PROJECT=""

export BUILTIN_CM_GEMINI_LOCATION=""

export BUILTIN_CM_GEMINI_BASE_URL=""

export BUILTIN_CM_GEMINI_MODEL=""

# Workflow Code Runner Configuration

# Supported code runner types: sandbox / local

# Default using local

# - sandbox: execute python code in a sandboxed env with deno + pyodide

# - local: using venv, no env isolation

export CODE_RUNNER_TYPE="local"

# Sandbox sub configuration

# Access restricted to specific environment variables, split with comma, e.g. "PATH,USERNAME"

export CODE_RUNNER_ALLOW_ENV=""

# Read access restricted to specific paths, split with comma, e.g. "/tmp,./data"

export CODE_RUNNER_ALLOW_READ=""

# Write access restricted to specific paths, split with comma, e.g. "/tmp,./data"

export CODE_RUNNER_ALLOW_WRITE=""

# Subprocess execution restricted to specific commands, split with comma, e.g. "python,git"

export CODE_RUNNER_ALLOW_RUN=""

# Network access restricted to specific domains/IPs, split with comma, e.g. "api.test.com,api.test.org:8080"

# The following CDN supports downloading the packages required for pyodide to run Python code. Sandbox may not work properly if removed.

export CODE_RUNNER_ALLOW_NET="cdn.jsdelivr.net"

# Foreign Function Interface access to specific libraries, split with comma, e.g. "/usr/lib/libm.so"

export CODE_RUNNER_ALLOW_FFI=""

# Directory for deno modules, default using pwd. e.g. "/tmp/path/node_modules"

export CODE_RUNNER_NODE_MODULES_DIR=""

# Code execution timeout, default 60 seconds. e.g. "2.56"

export CODE_RUNNER_TIMEOUT_SECONDS=""

# Code execution memory limit, default 100MB. e.g. "256"

export CODE_RUNNER_MEMORY_LIMIT_MB=""

# The function of registration controller

# If you want to disable the registration feature, set DISABLE_USER_REGISTRATION to true. You can then control allowed registrations via a whitelist with ALLOW_REGISTRATION_EMAIL.

export DISABLE_USER_REGISTRATION="" # default "", if you want to disable, set to true

export ALLOW_REGISTRATION_EMAIL="" # is a list of email addresses, separated by ",". Example: "11@example.com,22@example.com"

# Plugin AES secret.

# PLUGIN_AES_AUTH_SECRET is the secret of used to encrypt plugin authorization payload.

# The size of secret must be 16, 24 or 32 bytes.

export PLUGIN_AES_AUTH_SECRET='^*6x3hdu2nc%-p38'

# PLUGIN_AES_STATE_SECRET is the secret of used to encrypt oauth state.

# The size of secret must be 16, 24 or 32 bytes.

export PLUGIN_AES_STATE_SECRET='osj^kfhsd*(z!sno'

# PLUGIN_AES_OAUTH_TOKEN_SECRET is the secret of used to encrypt oauth refresh token and access token.

# The size of secret must be 16, 24 or 32 bytes.

export PLUGIN_AES_OAUTH_TOKEN_SECRET='cn+$PJ(HhJ[5d*z9'

View the docker-compose.yml file:

See: https://github.com/coze-dev/coze-studio/blob/main/docker/docker-compose.yml

name: coze-studio

x-env-file: &env_file

- .env

services:

mysql:

image: mysql:8.4.5

container_name: coze-mysql

restart: always

environment:

MYSQL_ROOT_PASSWORD: ${MYSQL_ROOT_PASSWORD:-root}

MYSQL_DATABASE: ${MYSQL_DATABASE:-opencoze}

MYSQL_USER: ${MYSQL_USER:-coze}

MYSQL_PASSWORD: ${MYSQL_PASSWORD:-coze123}

env_file: *env_file

ports:

- '3306'

volumes:

- ./data/mysql:/var/lib/mysql

- ./volumes/mysql/schema.sql:/docker-entrypoint-initdb.d/init.sql

command:

- --character-set-server=utf8mb4

- --collation-server=utf8mb4_unicode_ci

healthcheck:

test:

[

'CMD',

'mysqladmin',

'ping',

'-h',

'localhost',

'-u$${MYSQL_USER}',

'-p$${MYSQL_PASSWORD}',

]

interval: 10s

timeout: 5s

retries: 5

start_period: 30s

networks:

- coze-network

redis:

image: bitnami/redis:8.0

container_name: coze-redis

restart: always

user: root

privileged: true

env_file: *env_file

environment:

- REDIS_AOF_ENABLED=${REDIS_AOF_ENABLED:-no}

- REDIS_PORT_NUMBER=${REDIS_PORT_NUMBER:-6379}

- REDIS_IO_THREADS=${REDIS_IO_THREADS:-4}

- ALLOW_EMPTY_PASSWORD=${ALLOW_EMPTY_PASSWORD:-yes}

ports:

- '6379'

volumes:

- ./data/bitnami/redis:/bitnami/redis/data:rw,Z

command: >

bash -c "

/opt/bitnami/scripts/redis/setup.sh

# Set proper permissions for data directories

chown -R redis:redis /bitnami/redis/data

chmod g+s /bitnami/redis/data

exec /opt/bitnami/scripts/redis/entrypoint.sh /opt/bitnami/scripts/redis/run.sh

"

healthcheck:

test: ['CMD', 'redis-cli', 'ping']

interval: 5s

timeout: 10s

retries: 10

start_period: 10s

networks:

- coze-network

elasticsearch:

image: bitnami/elasticsearch:8.18.0

container_name: coze-elasticsearch

restart: always

user: root

privileged: true

env_file: *env_file

environment:

- TEST=1

# Add Java certificate trust configuration

# - ES_JAVA_OPTS=-Djdk.tls.client.protocols=TLSv1.2 -Dhttps.protocols=TLSv1.2 -Djavax.net.ssl.trustAll=true -Xms4096m -Xmx4096m

ports:

- '9200'

volumes:

- ./data/bitnami/elasticsearch:/bitnami/elasticsearch/data

- ./volumes/elasticsearch/elasticsearch.yml:/opt/bitnami/elasticsearch/config/my_elasticsearch.yml

- ./volumes/elasticsearch/analysis-smartcn.zip:/opt/bitnami/elasticsearch/analysis-smartcn.zip:rw,Z

- ./volumes/elasticsearch/setup_es.sh:/setup_es.sh

- ./volumes/elasticsearch/es_index_schema:/es_index_schemas

healthcheck:

test:

[

'CMD-SHELL',

'curl -f http://localhost:9200 && [ -f /tmp/es_plugins_ready ] && [ -f /tmp/es_init_complete ]',

]

interval: 5s

timeout: 10s

retries: 10

start_period: 10s

networks:

- coze-network

# Install smartcn analyzer plugin and initialize ES

command: >

bash -c "

/opt/bitnami/scripts/elasticsearch/setup.sh

# Set proper permissions for data directories

chown -R elasticsearch:elasticsearch /bitnami/elasticsearch/data

chmod g+s /bitnami/elasticsearch/data

# Create plugin directory

mkdir -p /bitnami/elasticsearch/plugins;

# Unzip plugin to plugin directory and set correct permissions

echo 'Installing smartcn plugin...';

if [ ! -d /opt/bitnami/elasticsearch/plugins/analysis-smartcn ]; then

# Download plugin package locally

echo 'Copying smartcn plugin...';

cp /opt/bitnami/elasticsearch/analysis-smartcn.zip /tmp/analysis-smartcn.zip

elasticsearch-plugin install file:///tmp/analysis-smartcn.zip

if [[ "$$?" != "0" ]]; then

echo 'Plugin installation failed, exiting operation';

rm -rf /opt/bitnami/elasticsearch/plugins/analysis-smartcn

exit 1;

fi;

rm -f /tmp/analysis-smartcn.zip;

fi;

# Create marker file indicating plugin installation success

touch /tmp/es_plugins_ready;

echo 'Plugin installation successful, marker file created';

# Start initialization script in background

(

echo 'Waiting for Elasticsearch to be ready...'

until curl -s -f http://localhost:9200/_cat/health >/dev/null 2>&1; do

echo 'Elasticsearch not ready, waiting...'

sleep 2

done

echo 'Elasticsearch is ready!'

# Run ES initialization script

echo 'Running Elasticsearch initialization...'

sed 's/\r$$//' /setup_es.sh > /setup_es_fixed.sh

chmod +x /setup_es_fixed.sh

/setup_es_fixed.sh --index-dir /es_index_schemas

# Create marker file indicating initialization completion

touch /tmp/es_init_complete

echo 'Elasticsearch initialization completed successfully!'

) &

# Start Elasticsearch

exec /opt/bitnami/scripts/elasticsearch/entrypoint.sh /opt/bitnami/scripts/elasticsearch/run.sh

echo -e "⏳ Adjusting Elasticsearch disk watermark settings..."

"

minio:

image: minio/minio:RELEASE.2025-06-13T11-33-47Z-cpuv1

container_name: coze-minio

user: root

privileged: true

restart: always

env_file: *env_file

ports:

- '9000'

- '9001'

volumes:

- ./data/minio:/data

- ./volumes/minio/default_icon/:/default_icon

- ./volumes/minio/official_plugin_icon/:/official_plugin_icon

environment:

MINIO_ROOT_USER: ${MINIO_ROOT_USER:-minioadmin}

MINIO_ROOT_PASSWORD: ${MINIO_ROOT_PASSWORD:-minioadmin123}

MINIO_DEFAULT_BUCKETS: ${MINIO_BUCKET:-opencoze},${MINIO_DEFAULT_BUCKETS:-milvus}

entrypoint:

- /bin/sh

- -c

- |

# Run initialization in background

(

# Wait for MinIO to be ready

until (/usr/bin/mc alias set localminio http://localhost:9000 $${MINIO_ROOT_USER} $${MINIO_ROOT_PASSWORD}) do

echo "Waiting for MinIO to be ready..."

sleep 1

done

# Create bucket and copy files

/usr/bin/mc mb --ignore-existing localminio/$${STORAGE_BUCKET}

/usr/bin/mc cp --recursive /default_icon/ localminio/$${STORAGE_BUCKET}/default_icon/

/usr/bin/mc cp --recursive /official_plugin_icon/ localminio/$${STORAGE_BUCKET}/official_plugin_icon/

echo "MinIO initialization complete."

) &

# Start minio server in foreground

exec minio server /data --console-address ":9001"

healthcheck:

test:

[

'CMD-SHELL',

'/usr/bin/mc alias set health_check http://localhost:9000 ${MINIO_ROOT_USER} ${MINIO_ROOT_PASSWORD} && /usr/bin/mc ready health_check',

]

interval: 30s

timeout: 10s

retries: 3

start_period: 30s

networks:

- coze-network

etcd:

image: bitnami/etcd:3.5

container_name: coze-etcd

user: root

restart: always

privileged: true

env_file: *env_file

environment:

- ETCD_AUTO_COMPACTION_MODE=revision

- ETCD_AUTO_COMPACTION_RETENTION=1000

- ETCD_QUOTA_BACKEND_BYTES=4294967296

- ALLOW_NONE_AUTHENTICATION=yes

ports:

- 2379:2379

- 2380:2380

volumes:

- ./data/bitnami/etcd:/bitnami/etcd:rw,Z

- ./volumes/etcd/etcd.conf.yml:/opt/bitnami/etcd/conf/etcd.conf.yml:ro,Z

command: >

bash -c "

/opt/bitnami/scripts/etcd/setup.sh

# Set proper permissions for data and config directories

chown -R etcd:etcd /bitnami/etcd

chmod g+s /bitnami/etcd

exec /opt/bitnami/scripts/etcd/entrypoint.sh /opt/bitnami/scripts/etcd/run.sh

"

healthcheck:

test: ['CMD', 'etcdctl', 'endpoint', 'health']

interval: 5s

timeout: 10s

retries: 10

start_period: 10s

networks:

- coze-network

milvus:

container_name: coze-milvus

image: milvusdb/milvus:v2.5.10

user: root

privileged: true

restart: always

env_file: *env_file

command: >

bash -c "

# Set proper permissions for data directories

chown -R root:root /var/lib/milvus

chmod g+s /var/lib/milvus

exec milvus run standalone

"

security_opt:

- seccomp:unconfined

environment:

ETCD_ENDPOINTS: etcd:2379

MINIO_ADDRESS: minio:9000

MINIO_BUCKET_NAME: ${MINIO_BUCKET:-milvus}

MINIO_ACCESS_KEY_ID: ${MINIO_ROOT_USER:-minioadmin}

MINIO_SECRET_ACCESS_KEY: ${MINIO_ROOT_PASSWORD:-minioadmin123}

MINIO_USE_SSL: false

LOG_LEVEL: debug

volumes:

- ./data/milvus:/var/lib/milvus:rw,Z

healthcheck:

test: ['CMD', 'curl', '-f', 'http://localhost:9091/healthz']

interval: 5s

timeout: 10s

retries: 10

start_period: 10s

ports:

- '19530'

- '9091'

depends_on:

etcd:

condition: service_healthy

minio:

condition: service_healthy

networks:

- coze-network

nsqlookupd:

image: nsqio/nsq:v1.2.1

container_name: coze-nsqlookupd

command: /nsqlookupd

restart: always

ports:

- '4160'

- '4161'

networks:

- coze-network

healthcheck:

test: ['CMD-SHELL', 'nsqlookupd --version']

interval: 5s

timeout: 10s

retries: 10

start_period: 10s

nsqd:

image: nsqio/nsq:v1.2.1

container_name: coze-nsqd

command: /nsqd --lookupd-tcp-address=nsqlookupd:4160 --broadcast-address=nsqd

restart: always

ports:

- '4150'

- '4151'

depends_on:

nsqlookupd:

condition: service_healthy

networks:

- coze-network

healthcheck:

test: ['CMD-SHELL', '/nsqd --version']

interval: 5s

timeout: 10s

retries: 10

start_period: 10s

nsqadmin:

image: nsqio/nsq:v1.2.1

container_name: coze-nsqadmin

command: /nsqadmin --lookupd-http-address=nsqlookupd:4161

restart: always

ports:

- '4171'

depends_on:

nsqlookupd:

condition: service_healthy

networks:

- coze-network

coze-server:

# build:

# context: ../

# dockerfile: backend/Dockerfile

image: opencoze/opencoze:latest

restart: always

container_name: coze-server

env_file: *env_file

# environment:

# LISTEN_ADDR: 0.0.0.0:8888

networks:

- coze-network

ports:

- '8888'

- '8889:8889'

volumes:

- .env:/app/.env

- ../backend/conf:/app/resources/conf

# - ../backend/static:/app/resources/static

depends_on:

mysql:

condition: service_healthy

redis:

condition: service_healthy

elasticsearch:

condition: service_healthy

minio:

condition: service_healthy

milvus:

condition: service_healthy

command: ['/app/opencoze']

coze-web:

# build:

# context: ..

# dockerfile: frontend/Dockerfile

image: opencoze/web:latest

container_name: coze-web

restart: always

ports:

- "8888:80"

# - "443:443" # SSL port (uncomment if using SSL)

volumes:

- ./nginx/nginx.conf:/etc/nginx/nginx.conf:ro # Main nginx config

- ./nginx/conf.d/default.conf:/etc/nginx/conf.d/default.conf:ro # Proxy config

# - ./nginx/ssl:/etc/nginx/ssl:ro # SSL certificates (uncomment if using SSL)

depends_on:

- coze-server

networks:

- coze-network

networks:

coze-network:

driver: bridge

Create and start the containers:

docker-compose up -d

List the containers:

docker ps

Stop and remove the containers:

docker-compose down

Remove the images:

docker rmi \
  mysql:8.4.5 \
  bitnami/redis:8.0 \
  bitnami/elasticsearch:8.18.0 \
  minio/minio:RELEASE.2025-06-13T11-33-47Z-cpuv1 \
  bitnami/etcd:3.5 \
  milvusdb/milvus:v2.5.10 \
  nsqio/nsq:v1.2.1 \
  opencoze/opencoze:latest \
  opencoze/web:latest

Remove the data directory:

rm -rf ./data

3. Access in the browser

Assume the host IP is 192.168.186.128.
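The containers can take a while to become healthy after `docker-compose up -d`, so it can help to confirm that the web port answers before opening the browser. The following is a minimal sketch that polls a URL with curl. `wait_for_http` is a hypothetical helper, and the default of 30 attempts two seconds apart is an arbitrary assumption.

```shell
# wait_for_http URL [ATTEMPTS] [DELAY]: poll URL until curl receives a
# successful HTTP response, or give up after ATTEMPTS tries.
wait_for_http() {
  url=$1
  attempts=${2:-30}
  delay=${3:-2}
  i=1
  while [ "$i" -le "$attempts" ]; do
    if curl -fsS -o /dev/null --max-time 5 "$url" 2>/dev/null; then
      echo "up: $url"
      return 0
    fi
    # Sleep between attempts, but not after the last one.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "not reachable: $url"
  return 1
}

# Usage with the example address from this guide:
#   wait_for_http http://192.168.186.128:8888 30 2
```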

Open in a browser: http://192.168.186.128:8888

Enter an email address and password, then choose Log in or Register:

Admin console:

4. See also

https://www.coze.cn/

https://www.coze.cn/open/docs

https://www.coze.cn/opensource

https://github.com/coze-dev/coze-studio

https://github.com/coze-dev/coze-studio/blob/main/README.zh_CN.md

https://mp.weixin.qq.com/s/amNVehNZib1gwnJt37utxg