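In this article I show how to quickly build a lightweight chatbot UI with Streamlit and call an LLM through LangChain / LangGraph to get a simple conversational app. Because the frontend and backend are separated, the model calls and the UI rendering can each be tested on their own.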

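Why Streamlit?

Streamlit is a frontend framework designed for Python data applications. Its main features:

- Minimal frontend work: a web app can be built with Python code alone.
- Compatible with the Python ecosystem, so machine learning and LLM tooling is easy to integrate.
- A rich set of interactive widgets, such as forms, sliders, and select boxes.

With Streamlit we can concentrate on the business logic instead of writing complex HTML/CSS/JS.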

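System architecture

We split the system into two parts:

- Backend (backend.py)
  - Manages the conversation state (a state graph)
  - Calls the LLM (for example ChatTongyi)
  - Handles conversation memory and storage (SQLite)
- Frontend (app_ui.py)
  - Renders the chat interface with Streamlit
  - Collects user input
  - Calls the backend to generate the model's reply

This architecture has several benefits:

- The UI is decoupled from the backend, so each can be tested separately
- The backend logic can be reused by other applications or interfaces
- Data storage is managed in one place, which makes it easier to extend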
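To make the "tested separately" point concrete, here is a minimal sketch. It assumes the only contract between the UI and the backend is a `chat(user_input, thread_id)` method that returns a string, which matches the `ChatBackend` class in backend.py. The names `Backend`, `FakeBackend`, and `handle_submit` are hypothetical and introduced only for illustration; they are not part of the article's code.

```python
from typing import List, Protocol, Tuple


class Backend(Protocol):
    """The only thing the UI needs from a backend (assumed contract)."""

    def chat(self, user_input: str, thread_id: str) -> str: ...


class FakeBackend:
    """Hypothetical stand-in that needs no API key and no network."""

    def chat(self, user_input: str, thread_id: str) -> str:
        return f"echo[{thread_id}]: {user_input}"


def handle_submit(
    backend: Backend,
    history: List[Tuple[str, str]],
    user_input: str,
    thread_id: str,
) -> List[Tuple[str, str]]:
    """UI-side logic with no Streamlit dependency: add the user turn and the reply."""
    text = user_input.strip()
    if not text:
        return history  # ignore empty submissions
    reply = backend.chat(text, thread_id)
    return history + [("user", text), ("assistant", reply)]


history = handle_submit(FakeBackend(), [], "  hello  ", "t1")
print(history)
```

Because `handle_submit` only depends on the `chat` method, the same function could be exercised with the real backend or with a fake one in a unit test.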

Backend implementation (backend.py)

```python
import sqlite3
from typing import Annotated, List

from langchain_community.chat_models import ChatTongyi
from langchain_core.messages import AnyMessage, HumanMessage
from langgraph.checkpoint.sqlite import SqliteSaver
from langgraph.graph import END, StateGraph
from langgraph.graph.message import add_messages
from typing_extensions import TypedDict


# ============ State definition ============
class ChatState(TypedDict):
    # add_messages merges new messages into the existing list instead of replacing it
    messages: Annotated[List[AnyMessage], add_messages]


# ============ Node function ============
def make_call_model(llm: ChatTongyi):
    """Return a node function: read the state -> call the LLM -> update state.messages."""

    def call_model(state: ChatState) -> ChatState:
        response = llm.invoke(state["messages"])
        return {"messages": [response]}

    return call_model


# ============ Backend core class ============
class ChatBackend:
    def __init__(
        self,
        api_key: str,
        model_name: str = "qwen-plus",
        temperature: float = 0.7,
        db_path: str = "memory.sqlite",
    ):
        self.llm = ChatTongyi(model=model_name, temperature=temperature, api_key=api_key)

        # A single-node graph: entry -> "model" -> END
        self.builder = StateGraph(ChatState)
        self.builder.add_node("model", make_call_model(self.llm))
        self.builder.set_entry_point("model")
        self.builder.add_edge("model", END)

        # SQLite checkpointer
        conn = sqlite3.connect(db_path, check_same_thread=False)
        self.checkpointer = SqliteSaver(conn)
        self.app = self.builder.compile(checkpointer=self.checkpointer)

    def chat(self, user_input: str, thread_id: str) -> str:
        # thread_id selects which saved conversation the checkpointer loads and updates
        config = {"configurable": {"thread_id": thread_id}}
        final_state = self.app.invoke({"messages": [HumanMessage(content=user_input)]}, config)
        return final_state["messages"][-1].content


if __name__ == "__main__":
    # Quick manual test of the backend without the UI
    backend = ChatBackend(api_key="YOUR_KEY")
    print(backend.chat("Hello", "thread1"))
```

Frontend implementation (app_ui.py)

python

import os

import uuid

import streamlit as st

from backend import ChatBackend

st.set_page_config(page_title="Chatbot", page_icon="💬", layout="wide")

# ======== Streamlit CSS =========

st.markdown(

"""

<style>

.main {padding: 2rem 2rem;}

.chat-card {background: rgba(255,255,255,0.65); backdrop-filter: blur(8px); border-radius: 20px; padding: 1.25rem; box-shadow: 0 10px 30px rgba(0,0,0,0.08);}

.msg {border-radius: 16px; padding: 0.8rem 1rem; margin: 0.35rem 0; line-height: 1.5;}

.human {background: #eef2ff;}

.ai {background: #ecfeff;}

.small {font-size: 0.86rem; color: #6b7280;}

.tag {display:inline-block; padding: .25rem .6rem; border-radius: 9999px; border: 1px solid #e5e7eb; margin-right:.4rem; cursor:pointer}

.tag:hover {background:#f3f4f6}

.footer-note {color:#9ca3af; font-size:.85rem}

[data-testid="stSidebarHeader"] {

margin-bottom: 0px

}

/* 让主标题上移,和侧边栏对齐 */

.block-container {

padding-top: 1.5rem !important;

}

</style>

""",

unsafe_allow_html=True,

)

# ======== 侧边栏配置 =========

with st.sidebar:

st.markdown("## ⚙️ 配置")

if "thread_id" not in st.session_state:

st.session_state.thread_id = str(uuid.uuid4())

if "chat_display" not in st.session_state:

st.session_state.chat_display = []

api_key = st.text_input("DashScope API Key", type="password", value=os.getenv("DASHSCOPE_API_KEY", ""))

model_name = st.selectbox("选择模型", ["qwen-plus", "qwen-turbo", "qwen-max"], index=0)

temperature = st.slider("Temperature", 0.0, 1.0, 0.7, 0.05)

if st.button("➕ 新建会话"):

st.session_state.thread_id = str(uuid.uuid4())

st.session_state.chat_display = []

st.rerun()

if st.button("🗑️ 清空当前会话"):

st.session_state.chat_display = []

st.rerun()

# ======== 主区域 =========

st.markdown("# 💬 Chatbot")

if not api_key:

st.warning("请先在左侧输入 DashScope API Key 才能开始对话。")

else:

backend = ChatBackend(api_key=api_key, model_name=model_name, temperature=temperature)

st.markdown('<div class="chat-card">', unsafe_allow_html=True)

if not st.session_state.chat_display:

st.info("开始对话吧!")

else:

for role, content in st.session_state.chat_display:

css_class = "human" if role == "user" else "ai"

avatar = "🧑💻" if role == "user" else "🤖"

st.markdown(f"<div class='msg {css_class}'><span class='small'>{avatar} {role}</span><br/>{content}</div>", unsafe_allow_html=True)

with st.form("chat-form", clear_on_submit=True):

user_input = st.text_area("输入你的问题/指令:", height=100, placeholder="比如:帮我写一个二分查找函数。")

submitted = st.form_submit_button("发送 ➤")

if submitted and user_input.strip():

st.session_state.chat_display.append(("user", user_input))

with st.spinner("思考中..."):

ai_text = backend.chat(user_input, st.session_state.thread_id)

st.session_state.chat_display.append(("assistant", ai_text))

st.rerun()

st.markdown('</div>', unsafe_allow_html=True)

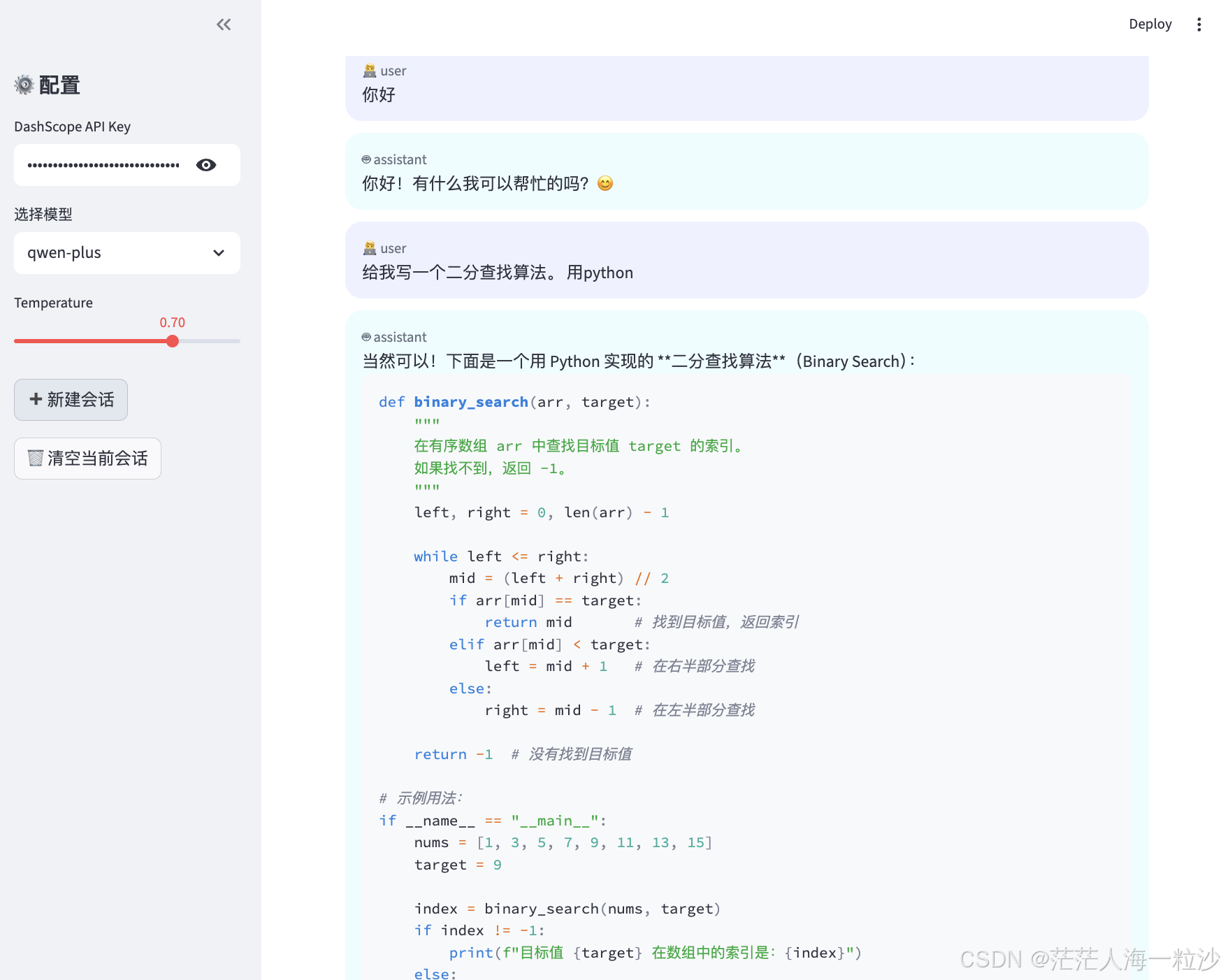

st.markdown(f"<div class='footer-note'>Session: <code>{st.session_state.thread_id}</code></div>", unsafe_allow_html=True)运行效果

-

运行后端服务:无需额外启动,

backend.py被app.py调用即可。 -

在浏览器中访问 Streamlit 页面:

python

streamlit run app_ui.py-

左侧输入 API Key,选择模型和温度。

-

在主区域输入问题或指令,点击"发送",AI 回复将显示在聊天窗口。

效果示例:
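One caveat about the rendering loop in app_ui.py: it interpolates the message text straight into an HTML string passed to `st.markdown(..., unsafe_allow_html=True)`, so a message containing `<` or `&` can break the bubble markup or inject HTML. A minimal sketch of escaping the text first, using only the standard library, might look like this. `render_bubble` is a hypothetical helper name, not part of the original code; the CSS class names are the ones from the stylesheet above.

```python
import html


def render_bubble(role: str, content: str) -> str:
    """Build one chat bubble as an HTML string with the message text escaped."""
    css_class = "human" if role == "user" else "ai"
    avatar = "🧑‍💻" if role == "user" else "🤖"
    # Escape &, <, > and quotes so the text is displayed literally,
    # then turn newlines into <br/> so multi-line replies keep their line breaks.
    safe = html.escape(content).replace("\n", "<br/>")
    return (
        f"<div class='msg {css_class}'>"
        f"<span class='small'>{avatar} {html.escape(role)}</span><br/>{safe}</div>"
    )


print(render_bubble("user", "if a < b:\n    print('<b>hi</b>')"))
```

The returned string could be passed to `st.markdown(..., unsafe_allow_html=True)` in place of the inline f-string. The trade-off is that Markdown or HTML in the model's reply is then shown as plain text.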

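Summary and extensions

With this architecture we have achieved:

- Frontend/backend decoupling: the UI and the LLM calls are separated and can be tested independently
- Conversation memory: session state is saved in SQLite
- Extensibility: the backend can swap in a different LLM or add more elaborate multi-turn conversation logic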
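To illustrate what "session state saved in SQLite and keyed by thread_id" means, the following is a deliberately simplified sketch using only the standard library. It is not how LangGraph's `SqliteSaver` stores its checkpoints internally; the table layout and the `ThreadMemory` class are assumptions made up for this example.

```python
import sqlite3
from typing import List, Tuple


class ThreadMemory:
    """Toy per-thread message store (hypothetical; not the SqliteSaver schema)."""

    def __init__(self, db_path: str = ":memory:"):
        self.conn = sqlite3.connect(db_path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "thread_id TEXT NOT NULL, role TEXT NOT NULL, content TEXT NOT NULL)"
        )

    def append(self, thread_id: str, role: str, content: str) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO messages (thread_id, role, content) VALUES (?, ?, ?)",
                (thread_id, role, content),
            )

    def history(self, thread_id: str) -> List[Tuple[str, str]]:
        rows = self.conn.execute(
            "SELECT role, content FROM messages WHERE thread_id = ? ORDER BY id",
            (thread_id,),
        )
        return [(role, content) for role, content in rows]


memory = ThreadMemory()
memory.append("thread1", "user", "Hello")
memory.append("thread1", "assistant", "Hi, how can I help?")
memory.append("thread2", "user", "A different conversation")
print(memory.history("thread1"))
```

Each thread_id sees only its own history, which is why starting a new chat in the UI only needs to generate a fresh UUID.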

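Other notes

If your model supports streaming output, the reply can be shown incrementally using the following two files.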
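The contract between a streaming backend and its UI can be sketched without any LLM: the backend is a generator that yields text fragments, and the caller concatenates them and redraws after each one. In this sketch `fake_token_stream` and `consume` are hypothetical names, and the fixed fragment size is an arbitrary assumption; a real model decides its own chunk boundaries.

```python
from typing import Callable, Iterator


def fake_token_stream(text: str, size: int = 4) -> Iterator[str]:
    """Yield the text in fixed-size fragments, standing in for a model's token stream."""
    for start in range(0, len(text), size):
        yield text[start:start + size]


def consume(stream: Iterator[str], on_update: Callable[[str], None]) -> str:
    """Accumulate fragments and report the text so far after each one."""
    full_text = ""
    for token in stream:
        full_text += token
        on_update(full_text)  # a UI would redraw the assistant bubble here
    return full_text


snapshots = []
result = consume(fake_token_stream("streaming reply"), snapshots.append)
print(snapshots)
```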

backend_stream.py

```python
import sqlite3
import time
from typing import Annotated, List

from langchain_community.chat_models import ChatTongyi
from langchain_core.callbacks import BaseCallbackHandler
from langchain_core.messages import AnyMessage, HumanMessage
from langgraph.checkpoint.sqlite import SqliteSaver
from langgraph.graph import END, StateGraph
from langgraph.graph.message import add_messages
from typing_extensions import TypedDict


# ===== State definition =====
class ChatState(TypedDict):
    messages: Annotated[List[AnyMessage], add_messages]


# ===== Node function (streaming call) =====
def make_call_model(llm: ChatTongyi):
    def call_model(state: ChatState) -> ChatState:
        # Stream the reply, then merge the chunks into a single message so the
        # state gains one AI message rather than one message per chunk.
        merged = None
        for chunk in llm.stream(state["messages"]):
            merged = chunk if merged is None else merged + chunk
        return {"messages": [merged] if merged is not None else []}

    return call_model


# ===== Streaming callback (not used by chat_stream below) =====
class StreamCallbackHandler(BaseCallbackHandler):
    def __init__(self):
        self.chunks = []

    def on_llm_new_token(self, token: str, **kwargs):
        self.chunks.append(token)


# ===== Backend core =====
class ChatBackend:
    def __init__(
        self,
        api_key: str,
        model_name: str = "qwen-plus",
        temperature: float = 0.7,
        db_path: str = "memory.sqlite",
    ):
        self.llm = ChatTongyi(
            model=model_name,
            temperature=temperature,
            api_key=api_key,
            streaming=True,  # enable streaming
        )

        self.builder = StateGraph(ChatState)
        self.builder.add_node("model", make_call_model(self.llm))
        self.builder.set_entry_point("model")
        self.builder.add_edge("model", END)

        conn = sqlite3.connect(db_path, check_same_thread=False)
        self.checkpointer = SqliteSaver(conn)
        self.app = self.builder.compile(checkpointer=self.checkpointer)

    def chat_stream_simulated(self, user_input: str):
        """Simulated streaming output (for debugging the UI without calling the model)."""
        response = f"AI is answering your question: {user_input}"
        for ch in response:
            yield ch
            time.sleep(0.05)

    def chat_stream(self, user_input: str, thread_id: str):
        """Real streaming output."""
        # Note: this calls the LLM directly rather than through the graph, so the
        # history saved under thread_id is neither sent to the model nor updated.
        for chunk in self.llm.stream([HumanMessage(content=user_input)]):
            token = chunk.text()
            if token:
                yield token
```

app_stream_ui.py

```python
# app_stream_ui.py
import os
import time
import uuid

import streamlit as st

from backend_stream import ChatBackend  # chat_stream must yield the reply as string fragments

st.set_page_config(page_title="Chatbot", page_icon="💬", layout="wide")

# ======== Same base CSS as app_ui.py =========
st.markdown(
    """
<style>
.main {padding: 2rem 2rem;}
.chat-card {background: rgba(255,255,255,0.65); backdrop-filter: blur(8px); border-radius: 20px; padding: 1.25rem; box-shadow: 0 10px 30px rgba(0,0,0,0.08);}
.msg {border-radius: 16px; padding: 0.8rem 1rem; margin: 0.35rem 0; line-height: 1.5;}
.human {background: #eef2ff;}
.ai {background: #ecfeff;}
.small {font-size: 0.86rem; color: #6b7280;}
.footer-note {color:#9ca3af; font-size:.85rem}
[data-testid="stSidebarHeader"] { margin-bottom: 0px }
.block-container { padding-top: 1.5rem !important; }
</style>
""",
    unsafe_allow_html=True,
)

# ======== Sidebar configuration =========
with st.sidebar:
    st.markdown("## ⚙️ Settings")

    if "thread_id" not in st.session_state:
        st.session_state.thread_id = str(uuid.uuid4())
    if "chat_display" not in st.session_state:
        st.session_state.chat_display = []

    api_key = st.text_input("DashScope API Key", type="password", value=os.getenv("DASHSCOPE_API_KEY", ""))
    model_name = st.selectbox("Model", ["qwen-plus", "qwen-turbo", "qwen-max"], index=0)
    temperature = st.slider("Temperature", 0.0, 1.0, 0.7, 0.05)

    if st.button("➕ New chat"):
        st.session_state.thread_id = str(uuid.uuid4())
        st.session_state.chat_display = []
        st.rerun()

    if st.button("🗑️ Clear current chat"):
        st.session_state.chat_display = []
        st.rerun()

# ======== Main area =========
st.markdown("# 💬 Chatbot")

if not api_key:
    st.warning("Enter your DashScope API Key in the sidebar to start chatting.")
else:
    # For simplicity the backend is instantiated on every rerun;
    # it could instead be cached in st.session_state.
    backend = ChatBackend(api_key=api_key, model_name=model_name, temperature=temperature)

    # Placeholder for the chat history (sits above the input form)
    chat_area = st.container()
    history_ph = chat_area.empty()

    def render_history(ph):
        """Render st.session_state.chat_display with the same HTML + CSS as app_ui.py."""
        html = '<div class="chat-card">'
        for role, content in st.session_state.chat_display:
            css_class = "human" if role == "user" else "ai"
            avatar = "🧑‍💻" if role == "user" else "🤖"
            html += f"<div class='msg {css_class}'><span class='small'>{avatar} {role}</span><br/>{content}</div>"
        html += "</div>"
        ph.markdown(html, unsafe_allow_html=True)

    # Initial render of the history
    render_history(history_ph)

    # Input form (placed below the history, so output always appears above it)
    with st.form("chat-form", clear_on_submit=True):
        user_input = st.text_area(
            "Enter your question or instruction:",
            height=100,
            placeholder="For example: write a commented binary search function for me.",
        )
        submitted = st.form_submit_button("Send ➤")

    if submitted and user_input.strip():
        # 1) Add the user message to the history (visible immediately)
        st.session_state.chat_display.append(("user", user_input))

        # 2) Add an empty assistant entry that the streamed output will fill in
        st.session_state.chat_display.append(("assistant", ""))
        ai_index = len(st.session_state.chat_display) - 1

        # Render once so the user sees their message and the empty assistant bubble
        render_history(history_ph)

        # 3) Stream the reply and write it back into the history as it arrives
        full_text = ""
        try:
            for token in backend.chat_stream(user_input, st.session_state.thread_id):
                # token: each yielded string (a single character or a longer fragment)
                full_text += token
                # Update the assistant entry (no "Thinking..." placeholder is shown)
                st.session_state.chat_display[ai_index] = ("assistant", full_text)
                # Re-render the history so the output stays above the input form
                render_history(history_ph)
                # Short pause to help Streamlit refresh (adjust or remove as needed)
                time.sleep(0.01)
        except Exception as e:
            # On failure, show the error message in the assistant bubble
            st.session_state.chat_display[ai_index] = ("assistant", f"[Generation failed] {e}")
            render_history(history_ph)

# Footer (session id)
st.markdown(
    f"<div class='footer-note'>Session: <code>{st.session_state.thread_id}</code></div>",
    unsafe_allow_html=True,
)
```
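Both UI scripts build a new `ChatBackend` on every Streamlit rerun, which also opens a new SQLite connection each time. One way to avoid that is to reuse a single backend per configuration. The sketch below shows the idea with the standard library's `functools.lru_cache`; `DummyBackend` and `get_backend` are hypothetical names, and `DummyBackend` only stands in for `ChatBackend` so the example runs without an API key.

```python
from functools import lru_cache


class DummyBackend:
    """Hypothetical stand-in for ChatBackend; counts how many instances get built."""

    instances = 0

    def __init__(self, api_key: str, model_name: str, temperature: float):
        DummyBackend.instances += 1
        self.api_key = api_key
        self.model_name = model_name
        self.temperature = temperature


@lru_cache(maxsize=8)
def get_backend(api_key: str, model_name: str, temperature: float) -> DummyBackend:
    """Return one shared backend per (api_key, model_name, temperature) combination."""
    return DummyBackend(api_key, model_name, temperature)


a = get_backend("key", "qwen-plus", 0.7)
b = get_backend("key", "qwen-plus", 0.7)   # same settings: the cached instance is reused
c = get_backend("key", "qwen-turbo", 0.7)  # different model: a new instance is built
print(a is b, a is c, DummyBackend.instances)
```

Since Streamlit re-executes the script on each interaction, a cache defined in the script itself would be recreated every time; a function like this would need to live in an imported module such as the backend file. Streamlit's own `st.cache_resource` decorator plays a similar role for shared objects.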