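Many companies still build their big data platforms on HDP. As Apache Kyuubi steadily gains popularity, many of them urgently want to integrate it into their existing HDP deployments. Integrating Apache Kyuubi into HDP mainly involves customizing Ambari. This article describes in detail how we did it.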

1. Version information

| Service | Version |
|------|-----------------|
| Kyuubi | 1.4.1-incubating |
| Ambari | 2.7.3 |
| HDP | 3.1.0 |
| OS | CentOS 7.4.1708 |

Background: starting from HDP 3.1.0, we replaced every component with its Apache release and then integrated Apache Kyuubi.

The versions of the main Apache components we integrated are:

| Service | Version |
|------------|-------|
| HDFS | 3.3.0 |
| YARN | 3.3.0 |
| MapReduce2 | 3.3.0 |
| Hive | 3.1.2 |
| Spark | 3.1.1 |

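2. Integration steps

Adding a custom component involves two parts: packaging the component's files as an RPM, and adding the component's configuration, start scripts, and so on to Ambari.

2.1 Building the RPM package

When Ambari installs or integrates a big data component, the component must be packaged as an RPM.

2.1.1 Download and unpack Apache Kyuubi

Download page: https://kyuubi.apache.org/releases.html

For this integration we chose version 1.4.1-incubating.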

Unpack the package:

    tar zxf apache-kyuubi-1.4.1-incubating-bin.tgz

The unpacked Kyuubi package is laid out as follows:

    apache-kyuubi-1.4.1-incubating-bin
    ├── DISCLAIMER
    ├── LICENSE
    ├── NOTICE
    ├── RELEASE
    ├── beeline-jars
    ├── bin
    ├── conf
    │   ├── kyuubi-defaults.conf.template
    │   ├── kyuubi-env.sh.template
    │   └── log4j2.properties.template
    ├── docker
    │   ├── Dockerfile
    │   ├── helm
    │   ├── kyuubi-configmap.yaml
    │   ├── kyuubi-deployment.yaml
    │   ├── kyuubi-pod.yaml
    │   └── kyuubi-service.yaml
    ├── externals
    │   └── engines
    ├── jars
    ├── licenses
    ├── logs
    ├── pid
    └── work

2.1.2 Create the RPM build environment

Install the rpm-build package:

    yum install rpm-build

Install rpmdevtools:

    yum install rpmdevtools

Create the workspace:

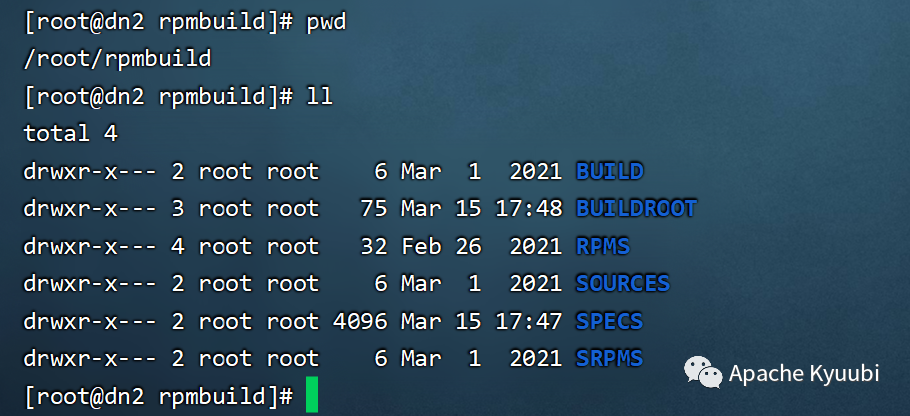

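    rpmdev-setuptree

This creates the rpmbuild workspace (BUILD, RPMS, SOURCES, SPECS, SRPMS) in the current user's home directory, here /root/rpmbuild.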

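2.1.3 Building the RPM package

2.1.3.1 Edit the spec file

Building an RPM requires a spec file. Based on the layout of the unpacked Kyuubi directory, write the spec file yourself so that it includes every required directory and file. An excerpt: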

    %description
    kyuubi

    %files
    %dir %attr(0755, root, root) "/usr/hdp/3.1.0.0-78/kyuubi"
    %attr(0644, root, root) "/usr/hdp/3.1.0.0-78/kyuubi/DISCLAIMER"
    %attr(0644, root, root) "/usr/hdp/3.1.0.0-78/kyuubi/LICENSE"
    %attr(0644, root, root) "/usr/hdp/3.1.0.0-78/kyuubi/NOTICE"
    %attr(0644, root, root) "/usr/hdp/3.1.0.0-78/kyuubi/RELEASE"
    %dir %attr(0777, root, root) "/usr/hdp/3.1.0.0-78/kyuubi/beeline-jars"
    %dir %attr(0777, root, root) "/usr/hdp/3.1.0.0-78/kyuubi/logs"
    %dir %attr(0777, root, root) "/usr/hdp/3.1.0.0-78/kyuubi/pid"
    %dir %attr(0777, root, root) "/usr/hdp/3.1.0.0-78/kyuubi/work"

    %changelog

2.1.3.2 Stage the files

Create the target directory:

    cd /root/rpmbuild/BUILDROOT
    mkdir -p /root/rpmbuild/BUILDROOT/kyuubi_3_1_0_0_78-2.3.2.3.1.0.0-78.x86_64/usr/hdp/3.1.0.0-78/kyuubi

Change to the directory where apache-kyuubi-1.4.1-incubating-bin.tgz was unpacked and copy its contents:

    cp -r * /root/rpmbuild/BUILDROOT/kyuubi_3_1_0_0_78-2.3.2.3.1.0.0-78.x86_64/usr/hdp/3.1.0.0-78/kyuubi

Put kyuubi.spec into the /root/rpmbuild/SPECS directory:

    cp kyuubi.spec /root/rpmbuild/SPECS/

2.1.3.3 Build the package

    cd /root/rpmbuild/SPECS
    rpmbuild -ba kyuubi.spec

Then check the generated RPM.

The built RPMs are placed under /root/rpmbuild/RPMS.
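rpmbuild stops with an error when a path listed in %files is missing from the build root and, by default, also when the build root contains files that %files does not list, so keeping the list in sync by hand is error-prone. The following is a minimal Python sketch, not part of the original procedure, that walks the staged build root and prints %files entries in the same form as the excerpt in 2.1.3.1. The function name and the default modes (0755 for directories, 0644 for files, root ownership) are assumptions; per-path overrides cover entries such as the 0777 log, pid and work directories.

```python
import os


def spec_files_section(buildroot, install_prefix, dir_mode="0755",
                       file_mode="0644", overrides=None):
    """Build a %files section for everything staged under install_prefix.

    buildroot      -- BUILDROOT/<name>-<version>-<release>.<arch> directory
    install_prefix -- absolute install path, e.g. /usr/hdp/3.1.0.0-78/kyuubi
    overrides      -- optional {absolute path: mode} map, e.g. for 0777 dirs
    """
    overrides = overrides or {}
    top = os.path.join(buildroot, install_prefix.lstrip("/"))
    lines = ["%files"]
    # os.walk is top-down, so each directory is listed before its contents.
    # Symlinks to directories are neither listed nor descended into.
    for root, dirs, files in os.walk(top):
        dirs.sort()  # sort in place so the walk order (and output) is stable
        target_dir = "/" + os.path.relpath(root, buildroot).replace(os.sep, "/")
        mode = overrides.get(target_dir, dir_mode)
        lines.append('%%dir %%attr(%s, root, root) "%s"' % (mode, target_dir))
        for name in sorted(files):
            target = target_dir + "/" + name
            mode = overrides.get(target, file_mode)
            lines.append('%%attr(%s, root, root) "%s"' % (mode, target))
    return "\n".join(lines)
```

For example, calling spec_files_section with the kyuubi_3_1_0_0_78-2.3.2.3.1.0.0-78.x86_64 build root and "/usr/hdp/3.1.0.0-78/kyuubi", plus overrides for the 0777 directories, and pasting the result into kyuubi.spec before running rpmbuild.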

2.1.4 Update the YUM repository

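Copy the RPM generated in the previous step into the corresponding directory of the target YUM repository, then update the repository metadata from that directory: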

    createrepo --update ./

2.2 Integrating Apache Kyuubi into Ambari

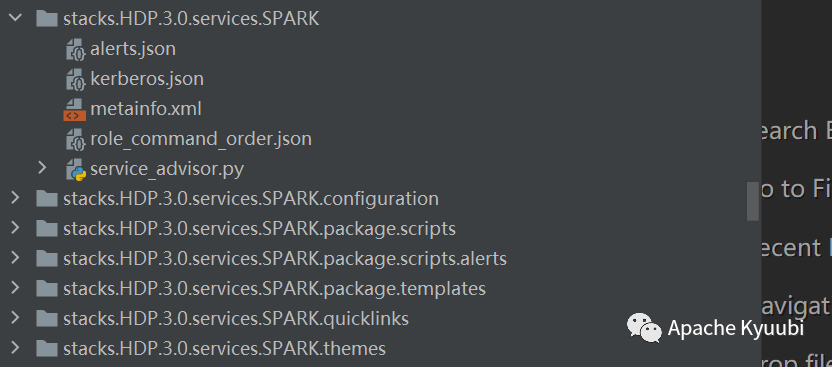

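2.2.1 Service directory structure

Adding a custom service to Ambari requires a set of definition files. Taking the Spark service as an example:

configuration

This directory holds Spark's property configuration files. They correspond to the property configuration pages in the Ambari UI and define each property's default value, type, description, and so on.

package/scripts

This directory holds the scripts for service operations such as starting, stopping, and checking the service.

package/templates

This directory holds templates for the component's configuration files, corresponding to the properties in the configuration directory. When a property is changed in the Ambari UI, the new value is filled into the files generated from these templates, so those files always carry the latest values and are what the service actually reads.

package/alerts

This directory holds the alert scripts, for example alerts raised when a process is down or unreachable, or runtime alerts.

quicklinks

This directory holds the quick-link configuration, which lets users jump from the Ambari UI to the pages they want.

metrics.json

Configures metrics.

kerberos.json

Configures Kerberos authentication.

metainfo.xml

This is the most important file. It configures the service name, service type, service operation scripts, service components, metrics, quick links, and so on.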
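When assembling a custom service directory it is easy to forget one of the entries listed above. The sketch below is a hypothetical helper, not Ambari tooling, that reports which of them are missing. Treating only metainfo.xml as mandatory is an assumption made here; the other entries are reported so that an omission is visible before the files are copied to the Ambari server.

```python
import os

# Entries described above for an Ambari service definition (Spark as the
# example). Only metainfo.xml is treated as mandatory; this is an assumption.
REQUIRED = ("metainfo.xml",)
OPTIONAL = ("configuration", "package/scripts", "package/templates",
            "package/alerts", "quicklinks", "metrics.json", "kerberos.json")


def check_service_dir(path):
    """Return (missing_required, missing_optional) for a service directory."""
    def missing(entries):
        return [entry for entry in entries
                if not os.path.exists(os.path.join(path, *entry.split("/")))]
    return missing(REQUIRED), missing(OPTIONAL)
```

An empty first list means the directory at least has a metainfo.xml; the second list is informational.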

2.2.2 Adding the Kyuubi component

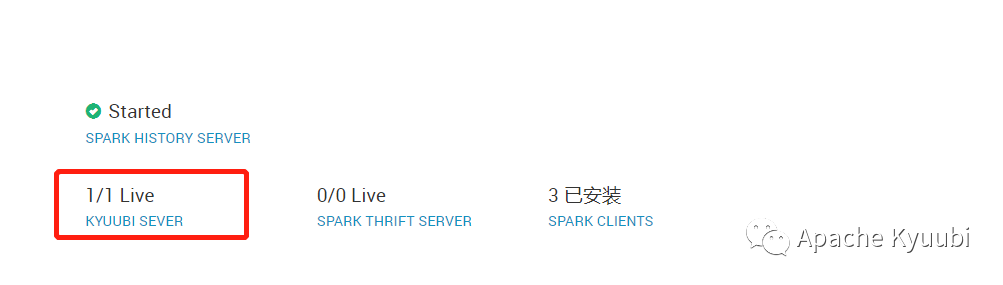

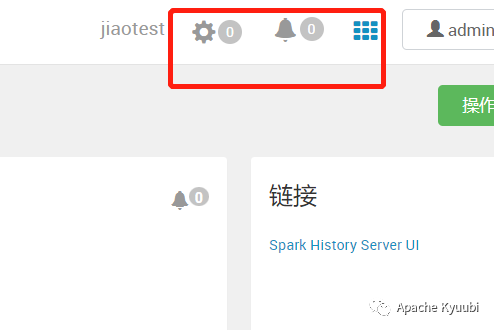

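In our company's use case, Kyuubi mainly replaces the Spark Thrift Server, so we integrated Kyuubi as a component of the existing Spark service. The result is shown in the figures.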

2.2.2.1 Edit metainfo.xml

In the ambari-server/src/main/resources/stacks/HDP/3.0/services/SPARK directory, first edit metainfo.xml to add the Kyuubi component to the Spark service:

    <component>
      <name>KYUUBI_SEVER</name>
      <displayName>KYUUBI SERVER</displayName>
      <category>SLAVE</category>
      <cardinality>0+</cardinality>
      <versionAdvertised>true</versionAdvertised>
      <dependencies>
        <dependency>
          <name>HDFS/HDFS_CLIENT</name>
          <scope>host</scope>
          <auto-deploy>
            <enabled>true</enabled>
          </auto-deploy>
        </dependency>
        <dependency>
          <name>MAPREDUCE2/MAPREDUCE2_CLIENT</name>
          <scope>host</scope>
          <auto-deploy>
            <enabled>true</enabled>
          </auto-deploy>
        </dependency>
        <dependency>
          <name>YARN/YARN_CLIENT</name>
          <scope>host</scope>
          <auto-deploy>
            <enabled>true</enabled>
          </auto-deploy>
        </dependency>
        <dependency>
          <name>SPARK/SPARK_CLIENT</name>
          <scope>host</scope>
          <auto-deploy>
            <enabled>true</enabled>
          </auto-deploy>
        </dependency>
        <dependency>
          <name>HIVE/HIVE_METASTORE</name>
          <scope>cluster</scope>
          <auto-deploy>
            <enabled>true</enabled>
          </auto-deploy>
        </dependency>
      </dependencies>
      <commandScript>
        <script>scripts/kyuubi_server.py</script>
        <scriptType>PYTHON</scriptType>
        <timeout>600</timeout>
      </commandScript>
      <logs>
        <log>
          <logId>kyuubi_server</logId>
          <primary>true</primary>
        </log>
      </logs>
    </component>

Here kyuubi_server.py implements install, configure, start, stop, and status for the component.

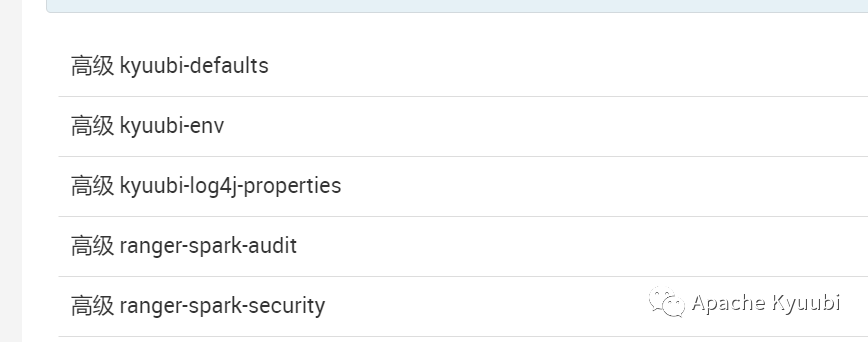

    <configuration-dependencies>
      <config-type>core-site</config-type>
      <config-type>spark-defaults</config-type>
      <config-type>spark-env</config-type>
      <config-type>spark-log4j-properties</config-type>
      <config-type>spark-metrics-properties</config-type>
      <config-type>spark-thrift-sparkconf</config-type>
      <config-type>spark-hive-site-override</config-type>
      <config-type>spark-thrift-fairscheduler</config-type>
      <config-type>kyuubi-defaults</config-type>
      <config-type>kyuubi-env</config-type>
      <config-type>kyuubi-log4j-properties</config-type>
      <config-type>ranger-spark-audit</config-type>
      <config-type>ranger-spark-security</config-type>
    </configuration-dependencies>

Inside <configuration-dependencies>, add the Kyuubi-related config types: kyuubi-defaults, kyuubi-env, kyuubi-log4j-properties, ranger-spark-audit, and ranger-spark-security.

    <osSpecific>
      <osFamily>redhat7,amazonlinux2,redhat6,suse11,suse12</osFamily>
      <packages>
        <package>
          <name>spark2_${stack_version}</name>
        </package>
        <package>
          <name>spark2_${stack_version}-python</name>
        </package>
        <package>
          <name>kyuubi_${stack_version}</name>
        </package>
      </packages>
    </osSpecific>

Inside <packages>, add a <package> entry with the name of the Kyuubi RPM. With stack version 3.1.0.0-78, kyuubi_${stack_version} corresponds to the kyuubi_3_1_0_0_78 package built in 2.1.3.
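Before copying the edited metainfo.xml to the Ambari server, a quick offline check can catch typos in component names, config types, or package names. The sketch below is hypothetical tooling, not part of Ambari. It parses the file with Python's standard xml.etree.ElementTree and summarizes what was added. The rule that ${stack_version} becomes the version with dots and dashes replaced by underscores is an assumption based on the HDP package naming used here (spark2_${stack_version}, and the RPM name kyuubi_3_1_0_0_78 from 2.1.3).

```python
import re
import xml.etree.ElementTree as ET


def summarize_metainfo(xml_text, stack_version):
    """Return (components, config_types, package_names) from metainfo.xml.

    Assumption: ${stack_version} expands to the stack version with dots and
    dashes turned into underscores (3.1.0.0-78 -> 3_1_0_0_78).
    """
    root = ET.fromstring(xml_text)
    components = {}
    for comp in root.iter("component"):
        components[comp.findtext("name")] = {
            "category": comp.findtext("category"),
            "script": comp.findtext("commandScript/script"),
            "dependencies": [(dep.findtext("name"), dep.findtext("scope"))
                             for dep in comp.iter("dependency")],
        }
    config_types = [ct.text for ct in root.iter("config-type")]
    version_token = re.sub(r"[.-]", "_", stack_version)
    packages = [pkg.findtext("name").replace("${stack_version}", version_token)
                for pkg in root.iter("package")]
    return components, config_types, packages
```

Comparing the returned package names with the output of rpm -qp on the built RPM is a simple way to confirm that the two match.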

2.2.2.2 Edit kyuubi_server.py

    #!/usr/bin/env python
    import os
    from resource_management import *


    class KyuubiServer(Script):
        def install(self, env):
            self.install_packages(env)

        def configure(self, env, upgrade_type=None, config_dir=None):
            import kyuubi_params
            env.set_params(kyuubi_params)

            Directory([kyuubi_params.kyuubi_log_dir, kyuubi_params.kyuubi_pid_dir,
                       kyuubi_params.kyuubi_metrics_dir, kyuubi_params.kyuubi_operation_log_dir],
                      owner=kyuubi_params.kyuubi_user,
                      group=kyuubi_params.kyuubi_group,
                      mode=0o775,
                      create_parents=True)

            kyuubi_defaults = dict(kyuubi_params.config['configurations']['kyuubi-defaults'])

            # kyuubi-defaults.conf uses "key value" lines, hence the space delimiter
            PropertiesFile(format("{kyuubi_conf_dir}/kyuubi-defaults.conf"),
                           properties=kyuubi_defaults,
                           key_value_delimiter=" ",
                           owner=kyuubi_params.kyuubi_user,
                           group=kyuubi_params.kyuubi_group,
                           mode=0o644)

            # create kyuubi-env.sh in the kyuubi conf dir
            File(os.path.join(kyuubi_params.kyuubi_conf_dir, 'kyuubi-env.sh'),
                 owner=kyuubi_params.kyuubi_user,
                 group=kyuubi_params.kyuubi_group,
                 content=InlineTemplate(kyuubi_params.kyuubi_env_sh),
                 mode=0o644)

            # create log4j.properties in the kyuubi conf dir
            File(os.path.join(kyuubi_params.kyuubi_conf_dir, 'log4j.properties'),
                 owner=kyuubi_params.kyuubi_user,
                 group=kyuubi_params.kyuubi_group,
                 content=kyuubi_params.kyuubi_log4j_properties,
                 mode=0o644)

        def start(self, env, upgrade_type=None):
            import kyuubi_params
            env.set_params(kyuubi_params)
            self.configure(env)
            Execute(kyuubi_params.kyuubi_start_cmd,
                    user=kyuubi_params.kyuubi_user,
                    environment={'JAVA_HOME': kyuubi_params.java_home})

        def stop(self, env, upgrade_type=None):
            import kyuubi_params
            env.set_params(kyuubi_params)
            self.configure(env)
            Execute(kyuubi_params.kyuubi_stop_cmd,
                    user=kyuubi_params.kyuubi_user,
                    environment={'JAVA_HOME': kyuubi_params.java_home})

        def status(self, env):
            import kyuubi_params
            env.set_params(kyuubi_params)
            # raises ComponentIsNotRunning if the pid file's process is gone
            check_process_status(kyuubi_params.kyuubi_pid_file)

        def get_user(self):
            import kyuubi_params
            return kyuubi_params.kyuubi_user

        def get_pid_files(self):
            import kyuubi_params
            return [kyuubi_params.kyuubi_pid_file]


    if __name__ == "__main__":
        KyuubiServer().execute()

kyuubi_server.py defines the logic for installing, configuring, starting, and stopping the Kyuubi service.

kyuubi_params defines the configuration variables and other parameters the script needs; for reasons of space it is not shown here.
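The Ambari resources used in kyuubi_server.py (PropertiesFile, check_process_status) only run inside an Ambari agent. To make their effect concrete, here is a plain-Python sketch, not Ambari's implementation, that writes kyuubi-defaults.conf as one "key value" pair per line (the layout that key_value_delimiter=" " asks for) and performs the kind of pid-file liveness test that status() relies on. The function names are hypothetical, and sorting the keys is a choice made here for stable output; Ambari's exact formatting may differ.

```python
import errno
import os


def render_kyuubi_defaults(properties):
    """One 'key value' pair per line, keys sorted for a stable file."""
    return "".join("%s %s\n" % (key, properties[key]) for key in sorted(properties))


def write_kyuubi_defaults(conf_dir, properties):
    """Write kyuubi-defaults.conf into conf_dir with mode 0644."""
    path = os.path.join(conf_dir, "kyuubi-defaults.conf")
    with open(path, "w") as handle:
        handle.write(render_kyuubi_defaults(properties))
    os.chmod(path, 0o644)
    return path


def is_running(pid_file):
    """POSIX pid-file check: True if the recorded process still exists."""
    try:
        with open(pid_file) as handle:
            pid = int(handle.read().strip())
    except (IOError, OSError, ValueError):
        return False  # no pid file, unreadable, or not a number
    try:
        os.kill(pid, 0)  # signal 0 only checks existence and permissions
    except OSError as exc:
        # EPERM: the process exists but belongs to another user
        return exc.errno == errno.EPERM
    return True
```

Writing a small dictionary with write_kyuubi_defaults and reading the file back is a quick way to see the expected layout; is_running returns False for a missing pid file rather than raising.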

2.2.2.3 Edit kyuubi-defaults.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration supports_final="true">
      <property>
        <name>kyuubi.ha.zookeeper.quorum</name>
        <value>{{cluster_zookeeper_quorum}}</value>
        <description>The connection string for the zookeeper ensemble</description>
        <on-ambari-upgrade add="true"/>
      </property>

      <property>
        <name>kyuubi.frontend.thrift.binary.bind.port</name>
        <value>10009</value>
        <description>Port of the machine on which to run the thrift frontend service via binary protocol.</description>
        <on-ambari-upgrade add="true"/>
      </property>

      <property>
        <name>kyuubi.ha.zookeeper.session.timeout</name>
        <value>600000</value>
        <description>The timeout(ms) of a connected session to be idled</description>
        <on-ambari-upgrade add="true"/>
      </property>
      <property>
        <name>kyuubi.session.engine.initialize.timeout</name>
        <value>300000</value>
        <description>Timeout for starting the background engine, e.g. SparkSQLEngine.</description>
        <on-ambari-upgrade add="true"/>
      </property>
      <property>
        <name>kyuubi.authentication</name>
        <value>{{kyuubi_authentication}}</value>
        <description>Client authentication types</description>
        <on-ambari-upgrade add="true"/>
      </property>
      <property>
        <name>spark.master</name>
        <value>yarn</value>
        <description>The deploying mode of spark application.</description>
        <on-ambari-upgrade add="true"/>
      </property>
      <property>
        <name>spark.submit.deployMode</name>
        <value>cluster</value>
        <description>spark submit deploy mode</description>
        <on-ambari-upgrade add="true"/>
      </property>
      <property>
        <name>spark.yarn.queue</name>
        <value>default</value>
        <description>The name of the YARN queue to which the application is submitted.</description>
        <depends-on>
          <property>
            <type>capacity-scheduler</type>
            <name>yarn.scheduler.capacity.root.queues</name>
          </property>
        </depends-on>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>spark.driver.memory</name>
        <value>4g</value>
        <description>spark driver memory</description>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>spark.executor.memory</name>
        <value>4g</value>
        <description>spark.executor.memory</description>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>spark.sql.extensions</name>
        <value>org.apache.submarine.spark.security.api.RangerSparkSQLExtension</value>
        <description>spark sql ranger extension</description>
        <on-ambari-upgrade add="false"/>
      </property>
    </configuration>

The value of kyuubi.ha.zookeeper.quorum is the placeholder {{cluster_zookeeper_quorum}}, which is replaced with the current cluster's ZooKeeper connection string when Kyuubi is installed. (The driver memory property is spark.driver.memory; Spark has no spark.yarn.driver.memory setting.)

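The value of kyuubi.authentication is the placeholder {{kyuubi_authentication}}. During installation, the script checks whether Kerberos is enabled on the cluster and sets the property accordingly. Because kyuubi.authentication takes an authentication type rather than a boolean, the value should be KERBEROS on a kerberized cluster and NONE otherwise.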
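kyuubi_params is not shown in the article, so the following is only a hypothetical sketch of how the two placeholder values could be computed. The helper names are made up, and the Ambari configuration keys in the trailing comment are assumptions based on common Ambari params files, not taken from the original.

```python
def build_zookeeper_quorum(hosts, client_port=2181):
    """Join hosts as 'host:port,host:port', sorted so the value is stable."""
    return ",".join("%s:%s" % (host, client_port) for host in sorted(hosts))


def pick_kyuubi_authentication(security_enabled):
    """Value for kyuubi.authentication: KERBEROS if kerberized, else NONE."""
    return "KERBEROS" if security_enabled else "NONE"


# Hypothetical wiring inside kyuubi_params.py (key names are assumptions):
#   zk_hosts = config['clusterHostInfo']['zookeeper_server_hosts']
#   zk_port = config['configurations']['zoo.cfg']['clientPort']
#   security_enabled = config['configurations']['cluster-env']['security_enabled']
#   cluster_zookeeper_quorum = build_zookeeper_quorum(zk_hosts, zk_port)
#   kyuubi_authentication = pick_kyuubi_authentication(security_enabled)
```

Sorting the hosts keeps the rendered quorum identical across runs, so Ambari does not see a spurious configuration change when the host list comes back in a different order.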

2.2.2.4 Edit kyuubi-env.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

    <configuration supports_adding_forbidden="true">
      <property>
        <name>kyuubi_user</name>
        <display-name>Kyuubi User</display-name>
        <value>spark</value>
        <property-type>USER</property-type>
        <value-attributes>
          <type>user</type>
          <overridable>false</overridable>
          <user-groups>
            <property>
              <type>cluster-env</type>
              <name>user_group</name>
            </property>
            <property>
              <type>kyuubi-env</type>
              <name>kyuubi_group</name>
            </property>
          </user-groups>
        </value-attributes>
        <on-ambari-upgrade add="true"/>
      </property>
      <property>
        <name>kyuubi_group</name>
        <display-name>Kyuubi Group</display-name>
        <value>spark</value>
        <property-type>GROUP</property-type>
        <description>kyuubi group</description>
        <value-attributes>
          <type>user</type>
        </value-attributes>
        <on-ambari-upgrade add="true"/>
      </property>
      <property>
        <name>kyuubi_log_dir</name>
        <display-name>Kyuubi Log directory</display-name>
        <value>/var/log/kyuubi</value>
        <description>Kyuubi Log Dir</description>
        <value-attributes>
          <type>directory</type>
        </value-attributes>
        <on-ambari-upgrade add="true"/>
      </property>
      <property>
        <name>kyuubi_pid_dir</name>
        <display-name>Kyuubi PID directory</display-name>
        <value>/var/run/kyuubi</value>
        <value-attributes>
          <type>directory</type>
        </value-attributes>
        <on-ambari-upgrade add="true"/>
      </property>

      <!-- kyuubi-env.sh -->
      <property>
        <name>content</name>
        <description>This is the jinja template for kyuubi-env.sh file</description>
        <value>#!/usr/bin/env bash

    export JAVA_HOME={{java_home}}
    export HADOOP_CONF_DIR=/etc/hadoop/conf
    export SPARK_HOME=/usr/hdp/current/spark-client
    export SPARK_CONF_DIR=/etc/spark/conf
    export KYUUBI_LOG_DIR={{kyuubi_log_dir}}
    export KYUUBI_PID_DIR={{kyuubi_pid_dir}}
        </value>
        <value-attributes>
          <type>content</type>
        </value-attributes>
        <on-ambari-upgrade add="true"/>
      </property>
    </configuration>

This file mainly sets paths such as JAVA_HOME, HADOOP_CONF_DIR, SPARK_HOME, SPARK_CONF_DIR, KYUUBI_LOG_DIR, and KYUUBI_PID_DIR.
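In kyuubi_server.py this content (presumably exposed as kyuubi_params.kyuubi_env_sh) is passed through InlineTemplate, which renders it with Jinja2 using the values set from kyuubi_params. The stand-in below handles only plain {{name}} placeholders, which is all this template uses, and is meant to show which values kyuubi_params has to supply: java_home, kyuubi_log_dir and kyuubi_pid_dir. It is an illustration with a hypothetical function name, not Ambari code.

```python
import re

# Matches {{ name }} placeholders only; Jinja2 supports far more than this.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_env_template(template, params):
    """Substitute {{name}} placeholders, failing loudly on unknown names."""
    def substitute(match):
        name = match.group(1)
        if name not in params:
            raise KeyError("undefined template variable: %s" % name)
        return str(params[name])
    return _PLACEHOLDER.sub(substitute, template)


KYUUBI_ENV_TEMPLATE = """#!/usr/bin/env bash

export JAVA_HOME={{java_home}}
export HADOOP_CONF_DIR=/etc/hadoop/conf
export SPARK_HOME=/usr/hdp/current/spark-client
export SPARK_CONF_DIR=/etc/spark/conf
export KYUUBI_LOG_DIR={{kyuubi_log_dir}}
export KYUUBI_PID_DIR={{kyuubi_pid_dir}}
"""
```

Raising KeyError on an unknown name is a deliberate choice: a missing parameter then surfaces immediately instead of producing an empty path in kyuubi-env.sh.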

2.2.2.5 Edit kyuubi-log4j-properties.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration supports_final="false" supports_adding_forbidden="true">
      <property>
        <name>content</name>
        <description>Kyuubi-log4j-Properties</description>
        <value># Set everything to be logged to the console
    log4j.rootCategory=INFO, console
    log4j.appender.console=org.apache.log4j.ConsoleAppender
    log4j.appender.console.target=System.err
    log4j.appender.console.layout=org.apache.log4j.PatternLayout
    log4j.appender.console.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss.SSS} %p %c{2}: %m%n

    # Set the default kyuubi-ctl log level to WARN. When running the kyuubi-ctl, the
    # log level for this class is used to overwrite the root logger's log level.
    log4j.logger.org.apache.kyuubi.ctl.ServiceControlCli=ERROR
        </value>
        <value-attributes>
          <type>content</type>
          <show-property-name>false</show-property-name>
        </value-attributes>
        <on-ambari-upgrade add="true"/>
      </property>
    </configuration>

2.2.2.6 Edit ranger-spark-security.xml

    <?xml version="1.0"?>
    <configuration>
      <property>
        <name>ranger.plugin.spark.service.name</name>
        <value>{{repo_name}}</value>
        <description>Name of the Ranger service containing policies for this SPARK instance</description>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>ranger.plugin.spark.policy.source.impl</name>
        <value>org.apache.ranger.admin.client.RangerAdminRESTClient</value>
        <description>Class to retrieve policies from the source</description>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>ranger.plugin.spark.policy.rest.url</name>
        <value>{{policymgr_mgr_url}}</value>
        <description>URL to Ranger Admin</description>
        <on-ambari-upgrade add="false"/>
        <depends-on>
          <property>
            <type>admin-properties</type>
            <name>policymgr_external_url</name>
          </property>
        </depends-on>
      </property>
      <property>
        <name>ranger.plugin.spark.policy.pollIntervalMs</name>
        <value>30000</value>
        <description>How often to poll for changes in policies?</description>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>ranger.plugin.spark.policy.cache.dir</name>
        <value>/etc/ranger/{{repo_name}}/policycache</value>
        <description>Directory where Ranger policies are cached after successful retrieval from the source</description>
        <on-ambari-upgrade add="false"/>
      </property>
    </configuration>

This file configures the Spark Ranger plugin parameters.

2.2.2.7 Edit ranger-spark-audit.xml

    <?xml version="1.0"?>
    <configuration>
      <property>
        <name>xasecure.audit.is.enabled</name>
        <value>true</value>
        <description>Is Audit enabled?</description>
        <value-attributes>
          <type>boolean</type>
        </value-attributes>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>xasecure.audit.destination.db</name>
        <value>false</value>
        <display-name>Audit to DB</display-name>
        <description>Is Audit to DB enabled?</description>
        <value-attributes>
          <type>boolean</type>
        </value-attributes>
        <depends-on>
          <property>
            <type>ranger-env</type>
            <name>xasecure.audit.destination.db</name>
          </property>
        </depends-on>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>xasecure.audit.destination.db.jdbc.driver</name>
        <value>{{jdbc_driver}}</value>
        <description>Audit DB JDBC Driver</description>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>xasecure.audit.destination.db.jdbc.url</name>
        <value>{{audit_jdbc_url}}</value>
        <description>Audit DB JDBC URL</description>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>xasecure.audit.destination.db.password</name>
        <value>{{xa_audit_db_password}}</value>
        <property-type>PASSWORD</property-type>
        <description>Audit DB JDBC Password</description>
        <value-attributes>
          <type>password</type>
        </value-attributes>
        <on-ambari-upgrade add="false"/>
      </property>
      <property>
        <name>xasecure.audit.destination.db.user</name>
        <value>{{xa_audit_db_user}}</value>
        <description>Audit DB JDBC User</description>
        <on-ambari-upgrade add="false"/>
      </property>
    </configuration>

This file configures the Spark Ranger audit parameters.

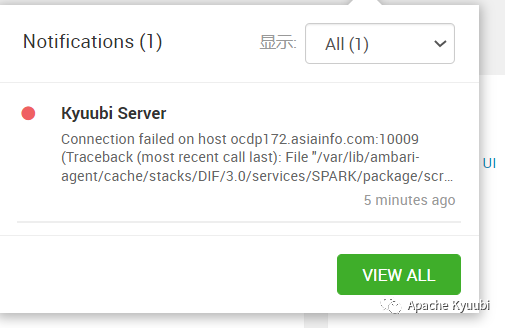

2.2.2.8 Edit alerts.json

    "KYUUBI_SEVER": [
      {
        "name": "kyuubi_server_status",
        "label": "Kyuubi Server",
        "description": "This host-level alert is triggered if the Kyuubi Server cannot be determined to be up.",
        "interval": 1,
        "scope": "ANY",
        "source": {
          "type": "SCRIPT",
          "path": "DIF/3.0/services/SPARK/package/scripts/alerts/alert_kyuubi_server_port.py",
          "parameters": [
            {
              "name": "check.command.timeout",
              "display_name": "Command Timeout",
              "value": 120.0,
              "type": "NUMERIC",
              "description": "The maximum time before check command will be killed by timeout",
              "units": "seconds",
              "threshold": "CRITICAL"
            }
          ]
        }
      }
    ]

In alerts.json, add an alert definition that checks whether the Kyuubi Server is up. Ambari alert intervals are given in minutes, so "interval": 1 runs the check every minute; the 120-second value is check.command.timeout, the maximum time a single check may run before it is killed. The check itself is implemented in alert_kyuubi_server_port.py.

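2.2.2.9 Edit alert_kyuubi_server_port.py

alert_kyuubi_server_port.py can be modeled on alert_spark_thrift_port.py, so its implementation is not shown here. The principle is to periodically open a connection with beeline and check whether the connection succeeds.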
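As a much simpler stand-in for the beeline-based check, the sketch below only tests whether the Thrift binary port (10009, as set in kyuubi-defaults above) accepts TCP connections. It follows the get_tokens()/execute() shape of Ambari script alerts, returning a result code and a list of labels, but the token string, the localhost fallback, and the messages are assumptions. A successful TCP connect does not prove that queries work, which is why the beeline approach in the original is the stronger check.

```python
import socket

RESULT_OK = "OK"
RESULT_CRITICAL = "CRITICAL"

# Token in the {{config-type/property}} form used by Ambari script alerts;
# this specific token is an assumption, not taken from the article.
PORT_KEY = "{{kyuubi-defaults/kyuubi.frontend.thrift.binary.bind.port}}"
DEFAULT_PORT = 10009  # value configured in kyuubi-defaults


def get_tokens():
    """Configuration values Ambari should pass into execute()."""
    return (PORT_KEY,)


def execute(configurations=None, parameters=None, host_name=None):
    """Return (result_code, [label]) after a plain TCP connect to the port."""
    configurations = configurations or {}
    parameters = parameters or {}
    port = int(configurations.get(PORT_KEY, DEFAULT_PORT))
    host = host_name or "localhost"
    timeout = float(parameters.get("check.command.timeout", 120.0))
    try:
        sock = socket.create_connection((host, port), timeout=timeout)
        sock.close()
    except socket.error as exc:  # refused connections, timeouts, DNS errors
        return (RESULT_CRITICAL,
                ["Kyuubi Server %s:%d is not reachable: %s" % (host, port, exc)])
    return (RESULT_OK, ["Kyuubi Server %s:%d accepted a connection" % (host, port)])
```

The code avoids Python 3-only syntax, since Ambari 2.7 agents run alert scripts under Python 2.7.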

2.2.2.10 Edit kerberos.json

    {
      "name": "kyuubi_service_keytab",
      "principal": {
        "value": "spark/_HOST@${realm}",
        "type": "service",
        "configuration": "kyuubi-defaults/kyuubi.kinit.principal",
        "local_username": "${spark-env/spark_user}"
      },
      "keytab": {
        "file": "${keytab_dir}/spark.service.keytab",
        "owner": {
          "name": "${spark-env/spark_user}",
          "access": "r"
        },
        "group": {
          "name": "${cluster-env/user_group}",
          "access": ""
        },
        "configuration": "kyuubi-defaults/kyuubi.kinit.keytab"
      }
    }

In kerberos.json, add an identity that generates the principal and keytab and writes them into kyuubi-defaults. When Kerberos is enabled on the cluster, kyuubi.kinit.principal and kyuubi.kinit.keytab are then added to kyuubi-defaults automatically.
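_HOST, ${realm} and ${keytab_dir} in the identity above are placeholders. On each host that runs the Kyuubi Server, the keytab holds a per-host principal of the form spark/<host FQDN>@<REALM>. The sketch below only performs that expansion, which helps when troubleshooting, for example when checking the keytab contents with klist -kt. The function is hypothetical; lower-casing the host name mirrors Hadoop's own _HOST substitution, and /etc/security/keytabs as keytab_dir is Ambari's usual default rather than something the article states.

```python
def resolve_service_identity(principal_template, keytab_template, host_fqdn,
                             realm, keytab_dir="/etc/security/keytabs"):
    """Expand the kerberos.json principal and keytab templates for one host.

    _HOST becomes the lower-cased FQDN; ${realm} and ${keytab_dir} become
    the given values. Other ${...} references are left untouched.
    """
    principal = (principal_template
                 .replace("_HOST", host_fqdn.lower())
                 .replace("${realm}", realm))
    keytab = keytab_template.replace("${keytab_dir}", keytab_dir)
    return principal, keytab
```

For a host node1.example.com in realm EXAMPLE.COM this yields spark/node1.example.com@EXAMPLE.COM and /etc/security/keytabs/spark.service.keytab.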

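2.2.2.11 Update the ambari-server and ambari-agent RPMs

Put the files modified and added above into the corresponding directories of the ambari-server and ambari-agent RPM packages.

For a cluster that is already installed, you can instead proceed as follows:

1. Uninstall the Spark service.
2. Put the files added above into the following directories:
   - /var/lib/ambari-server/resources/stacks/HDP/3.0/services/SPARK
   - /var/lib/ambari-agent/cache/stacks/DIF/3.0/services/SPARK
3. On the ambari-server host, run sudo ambari-server restart.
4. On every node running ambari-agent, run sudo ambari-agent restart.
5. Reinstall the Spark service.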
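Step 2 is easy to get partly wrong when several files were added (scripts, configuration XML, alert scripts, JSON). A minimal sketch, assuming the edited SPARK service directory has been assembled locally: it mirrors that directory into one of the target directories listed above, in dry-run mode by default. It is a convenience, not part of the article's procedure; the function name is hypothetical, it neither deletes stale files nor restarts anything, so the ambari-server and ambari-agent restarts are still needed.

```python
import os
import shutil

# Target directories from step 2 (stack names as used in this article).
AMBARI_SERVER_DIR = "/var/lib/ambari-server/resources/stacks/HDP/3.0/services/SPARK"
AMBARI_AGENT_DIR = "/var/lib/ambari-agent/cache/stacks/DIF/3.0/services/SPARK"


def sync_service_files(src_dir, target_dir, dry_run=True):
    """Copy every file under src_dir into target_dir, keeping relative paths.

    Returns the destination paths. With dry_run=True nothing is written.
    Files with the same name are overwritten; nothing is ever deleted.
    """
    written = []
    for root, _dirs, files in os.walk(src_dir):
        rel = os.path.relpath(root, src_dir)
        dest_root = os.path.normpath(os.path.join(target_dir, rel))
        for name in sorted(files):
            dest = os.path.join(dest_root, name)
            written.append(dest)
            if not dry_run:
                if not os.path.isdir(dest_root):
                    os.makedirs(dest_root)
                shutil.copy2(os.path.join(root, name), dest)
    return written
```

For example, sync_service_files("./SPARK", AMBARI_SERVER_DIR) first lists what would be written; repeating the call with dry_run=False performs the copy, and the same applies to AMBARI_AGENT_DIR on each agent host.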

3. Results

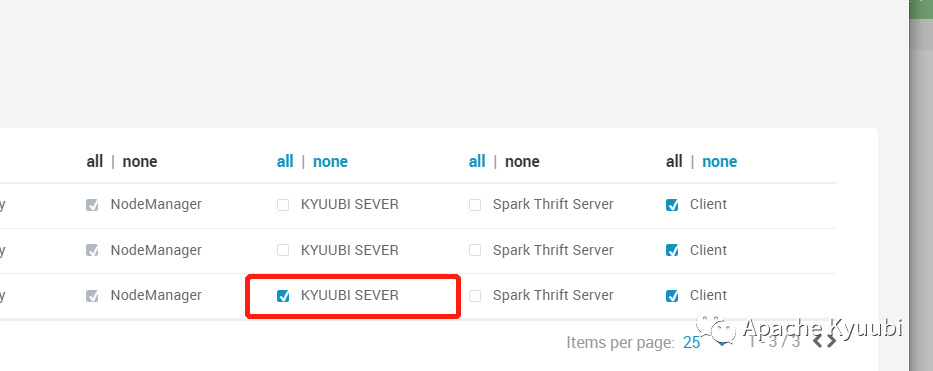

3.1 Installation

When installing Spark, the Kyuubi Server component can be selected.

Kyuubi parameters can be configured in the UI during installation.

3.2 After a successful installation

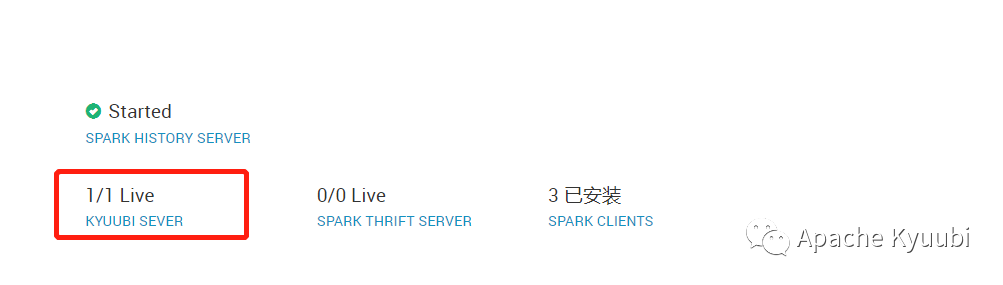

The installation succeeded.

The Kyuubi configuration pages are displayed correctly.

3.3 Stopping the Kyuubi Server

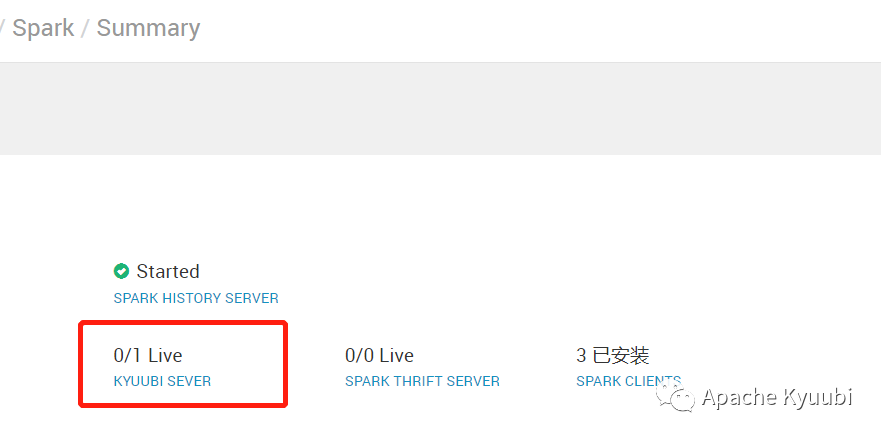

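Stop the Kyuubi Server from the UI.

The UI shows that it stopped successfully.

The UI raises an alert that the Kyuubi Server is stopped.

Start the Kyuubi Server again.

The alert disappears.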

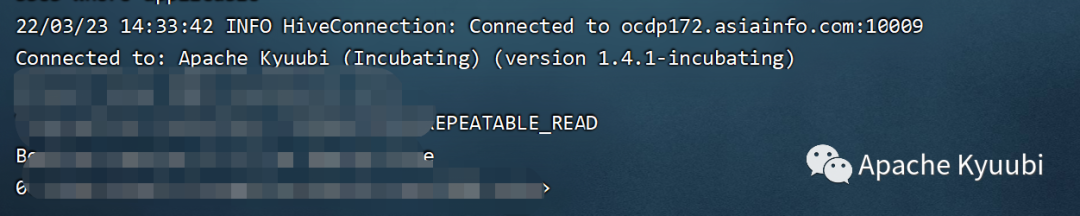

Connecting with beeline as the ambari-qa user from the command line succeeds.
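The article does not show the exact beeline command. For reference, beeline takes a Hive JDBC URL via -u, and the helper below builds the two usual forms for Kyuubi: a direct connection to the Thrift binary port (10009 in kyuubi-defaults above), or ZooKeeper-based discovery using kyuubi.ha.zookeeper.namespace, whose default in Kyuubi is kyuubi. The function and the host names in the usage note are illustrative.

```python
def kyuubi_jdbc_url(zk_quorum=None, host=None, port=10009,
                    namespace="kyuubi", principal=None):
    """Build a Hive JDBC URL for beeline pointing at Kyuubi.

    With zk_quorum the client discovers a Kyuubi Server through ZooKeeper;
    otherwise it connects straight to host:port.
    """
    if zk_quorum:
        url = ("jdbc:hive2://%s/;serviceDiscoveryMode=zooKeeper;"
               "zooKeeperNamespace=%s" % (zk_quorum, namespace))
    elif host:
        url = "jdbc:hive2://%s:%d/" % (host, port)
    else:
        raise ValueError("either zk_quorum or host is required")
    if principal:  # kerberized cluster: the Kyuubi server's service principal
        url += ";principal=%s" % principal
    return url
```

For example: beeline -u "jdbc:hive2://zk1:2181,zk2:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=kyuubi" -n ambari-qa. On a kerberized cluster, obtain a ticket with kinit first and append the principal.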

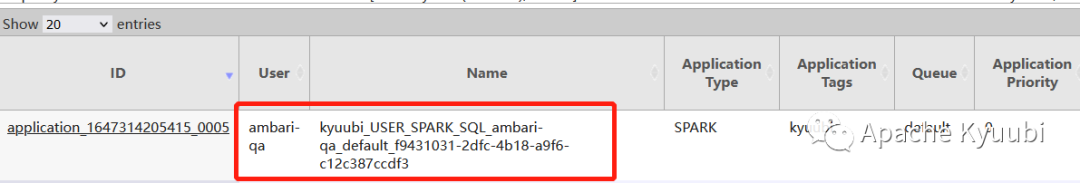

The YARN UI shows the ambari-qa user's application running successfully.
