A Simple Video Filter Example

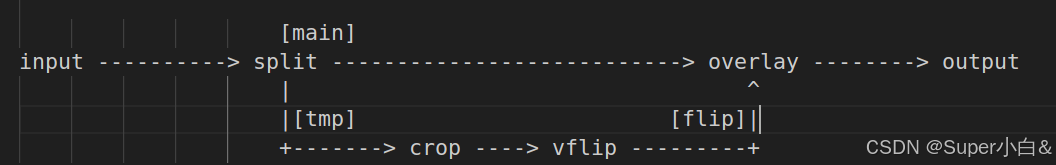

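The processing flow of this example is shown above. First, the split filter divides the input stream into two streams (main and tmp), and then each stream is processed separately.

The tmp stream first passes through the crop filter, which crops it, and then through the vflip filter, which flips it vertically. The result is named the flip stream.

Finally, the main stream and the flip stream are fed into the overlay filter, which composites them into one picture.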
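To make the data flow concrete, the sketch below applies the same four steps to a single 8-bit luma plane stored in a `std::vector`. It is a toy model under simplifying assumptions (one plane, even height, no chroma, no timestamps) and not how libavfilter implements these filters. The `Plane` type and all function names are hypothetical. Cropping the top half, flipping it and overlaying it at `y = H/2` should leave the top half unchanged and turn the bottom half into its mirror image.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Toy single-plane image: width x height bytes, row-major (hypothetical type).
struct Plane {
    int width;
    int height;
    std::vector<uint8_t> pixels;
    uint8_t at(int x, int y) const { return pixels[y * width + x]; }
};

// Like crop=iw:ih/2:0:0 -> keep the full width and the top half of the rows.
Plane CropTopHalf(const Plane &in) {
    Plane out{in.width, in.height / 2, {}};
    out.pixels.assign(in.pixels.begin(), in.pixels.begin() + out.width * out.height);
    return out;
}

// Like vflip -> reverse the order of the rows.
Plane VFlip(const Plane &in) {
    Plane out{in.width, in.height, std::vector<uint8_t>(in.pixels.size())};
    for (int y = 0; y < in.height; y++)
        for (int x = 0; x < in.width; x++)
            out.pixels[y * in.width + x] = in.at(x, in.height - 1 - y);
    return out;
}

// Like overlay=x=0:y=H/2 -> paste `top` onto `main` starting at row main.height / 2.
Plane OverlayBottom(const Plane &main, const Plane &top) {
    Plane out = main;
    int y0 = main.height / 2;
    for (int y = 0; y < top.height && y0 + y < main.height; y++)
        for (int x = 0; x < top.width && x < main.width; x++)
            out.pixels[(y0 + y) * main.width + x] = top.at(x, y);
    return out;
}

// Like split -> two copies of the frame; only the tmp copy is cropped and flipped.
Plane MirrorTopHalf(const Plane &input) {
    Plane main = input; // split output 0 ("main")
    Plane tmp = input;  // split output 1 ("tmp")
    Plane flip = VFlip(CropTopHalf(tmp));
    return OverlayBottom(main, flip);
}
```

For a 2x4 plane whose rows hold 0, 1, 2, 3, the output rows are 0, 1, 1, 0.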

In the diagram, input is the buffer source filter mentioned earlier, and output is the buffersink filter mentioned earlier.
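The buffer source has to be told what the incoming frames look like. Below is a minimal sketch of building its option string, assuming the values used in the code (1920x1080, time base 1/25, pixel aspect ratio 1:1). `MakeBufferSrcArgs` is a hypothetical helper, and `pix_fmt` is passed as the numeric `AVPixelFormat` value, where `AV_PIX_FMT_YUV420P` is 0. The buffersink takes no such string here; its accepted pixel formats are set through its `pix_fmts` option instead.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical helper: formats the description passed as `args` to the "buffer" filter.
std::string MakeBufferSrcArgs(int width, int height, int pixFmt,
                              int tbNum, int tbDen, int sarNum, int sarDen) {
    char args[512];
    std::snprintf(args, sizeof(args),
                  "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
                  width, height, pixFmt, tbNum, tbDen, sarNum, sarDen);
    return std::string(args);
}
```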

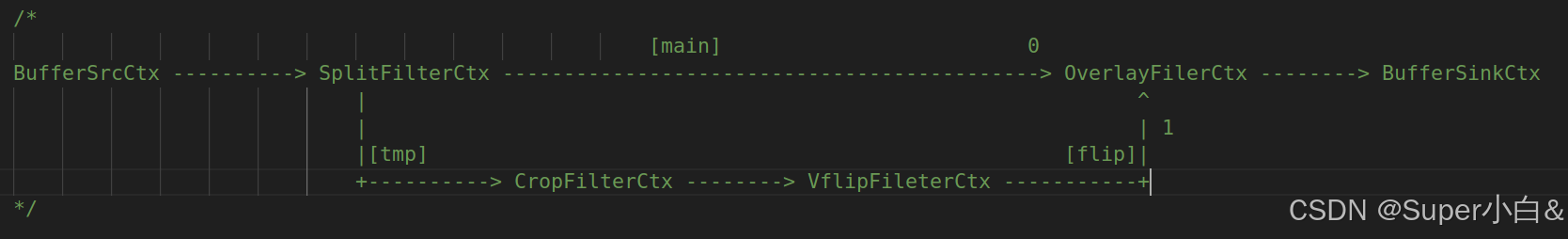

Each node in the diagram above is an AVFilterContext and each edge is an AVFilterLink. All of them are managed together by one AVFilterGraph.
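The graph in the diagram can be written down as a plain edge list. The sketch below is an illustrative model only: each `Link` entry mirrors one `avfilter_link(src, srcpad, dst, dstpad)` call in the code, and the node names match the instance names passed to `avfilter_graph_create_filter`. `Link`, `kLinks`, `UpstreamOf` and `InputCount` are hypothetical. In libavfilter each such edge becomes an `AVFilterLink` owned by the `AVFilterGraph`.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// One edge of the graph: output pad srcPad of src feeds input pad dstPad of dst.
struct Link {
    std::string src;
    unsigned srcPad;
    std::string dst;
    unsigned dstPad;
};

// The six avfilter_link() calls of the example, in the same order.
const std::vector<Link> kLinks = {
    {"in", 0, "split", 0},      // buffer -> split
    {"split", 0, "overlay", 0}, // split [main] -> overlay main input
    {"split", 1, "crop", 0},    // split [tmp] -> crop
    {"crop", 0, "vflip", 0},    // crop -> vflip
    {"vflip", 0, "overlay", 1}, // vflip [flip] -> overlay second input
    {"overlay", 0, "out", 0},   // overlay -> buffersink
};

// Name of the filter feeding input pad dstPad of dst, or "" if the pad is unconnected.
std::string UpstreamOf(const std::vector<Link> &links, const std::string &dst, unsigned dstPad) {
    for (const Link &l : links)
        if (l.dst == dst && l.dstPad == dstPad)
            return l.src;
    return "";
}

// Number of connected input pads of a filter.
std::size_t InputCount(const std::vector<Link> &links, const std::string &name) {
    std::size_t n = 0;
    for (const Link &l : links)
        if (l.dst == name)
            n++;
    return n;
}
```

The same topology can also be written as the filtergraph description `split [main][tmp]; [tmp] crop=iw:ih/2:0:0, vflip [flip]; [main][flip] overlay=0:H/2`, the introductory example of the FFmpeg filters documentation, which `avfilter_graph_parse_ptr` can parse instead of creating and linking every filter by hand.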

The structure and flow are as follows.

The code is as follows:

```cpp
#include "FilterDemo.h" // the author's own header (contents not shown)

#include <stdint.h>
#include <stdio.h>
#include <string.h>

#ifdef __cplusplus
extern "C" {
#endif
#include <libavfilter/avfilter.h>
#include <libavfilter/buffersink.h>
#include <libavfilter/buffersrc.h>
#include <libavutil/error.h>
#include <libavutil/frame.h>
#include <libavutil/imgutils.h>
#include <libavutil/mem.h>
#include <libavutil/opt.h>
#ifdef __cplusplus
}
#endif

// Reads raw 1920x1080 YUV420P pictures from inFileName, runs them through
// split -> crop -> vflip -> overlay and writes raw YUV420P pictures to outFileName.
// Returns 0 on success, a negative value on failure.
int Filter(const char *inFileName, const char *outFileName)
{
    // Everything is declared (and set to NULL) before the first goto, so the cleanup
    // code at _end never sees an uninitialized pointer; this also keeps the function
    // valid C++, where a goto may not jump over an initialization.
    int ret = -1;
    FILE *IN_FILE = NULL;
    FILE *OUT_FILE = NULL;
    AVFilterGraph *FilterGraph = NULL; // manages the whole filter system
    AVFilterContext *BufferSrcCtx = NULL, *BufferSinkCtx = NULL, *SplitFilterCtx = NULL;
    AVFilterContext *CropFilterCtx = NULL, *VflipFilterCtx = NULL, *OverlayFilterCtx = NULL;
    AVFrame *InFrame = NULL;
    AVFrame *OutFrame = NULL;
    uint8_t *InFrameBuffer = NULL;
    char *GraphString = NULL;
    char args[512]; // describes the source video for the buffer filter
    char ErrBuf[AV_ERROR_MAX_STRING_SIZE];
    enum AVPixelFormat PixFmt[] = {AV_PIX_FMT_YUV420P, AV_PIX_FMT_NONE};
    const int InWidth = 1920;
    const int InHeight = 1080;
    const size_t PictureSize = (size_t)InWidth * InHeight * 3 / 2; // bytes in one YUV420P picture
    size_t ReadSize = 0;
    int64_t Pts = 0;
    uint32_t FrameCount = 0;
    int InputEof = 0;

    IN_FILE = fopen(inFileName, "rb");
    OUT_FILE = fopen(outFileName, "wb");
    if (IN_FILE == NULL || OUT_FILE == NULL)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] open file error! -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    FilterGraph = avfilter_graph_alloc();
    if (FilterGraph == NULL)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] avfilter graph alloc error! -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // Source filter "buffer": the graph's input ([input] in the diagram).
    // args must match the frames pushed in later: size, pixel format, time base, aspect ratio.
    snprintf(args, sizeof(args), "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
             InWidth, InHeight, (int)AV_PIX_FMT_YUV420P, 1, 25, 1, 1);

    // avfilter_graph_create_filter() creates a filter instance (AVFilterContext) named "in",
    // initializes it with args and adds it to FilterGraph; on failure BufferSrcCtx is set to NULL.
    ret = avfilter_graph_create_filter(&BufferSrcCtx, avfilter_get_by_name("buffer"), "in", args, NULL, FilterGraph);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to create buffer source filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // Sink filter "buffersink": the graph's output ([output] in the diagram).
    ret = avfilter_graph_create_filter(&BufferSinkCtx, avfilter_get_by_name("buffersink"), "out", NULL, NULL, FilterGraph);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to create buffersink filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // Restrict the pixel format delivered by the sink to YUV420P.
    ret = av_opt_set_int_list(BufferSinkCtx->priv, "pix_fmts", PixFmt, AV_PIX_FMT_NONE, 0);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to set pixel formats for buffersink: %s -- line:%d\n", __FUNCTION__,
               av_make_error_string(ErrBuf, sizeof(ErrBuf), ret), __LINE__);
        goto _end;
    }

    // split filter: duplicates its input; "outputs=2" gives two outputs (main and tmp).
    ret = avfilter_graph_create_filter(&SplitFilterCtx, avfilter_get_by_name("split"), "split", "outputs=2", NULL, FilterGraph);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to create split filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // crop filter: keep the full width and half the height, starting at (0,0), i.e. the top half.
    ret = avfilter_graph_create_filter(&CropFilterCtx, avfilter_get_by_name("crop"), "crop", "out_w=iw:out_h=ih/2:x=0:y=0", NULL, FilterGraph);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to create crop filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // vflip filter: flips the picture vertically.
    ret = avfilter_graph_create_filter(&VflipFilterCtx, avfilter_get_by_name("vflip"), "vflip", NULL, NULL, FilterGraph);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to create vflip filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // overlay filter: draws its second input on top of its first at (x, y).
    // H is the height of the main input, so y=H/2 puts the flipped half on the bottom half.
    ret = avfilter_graph_create_filter(&OverlayFilterCtx, avfilter_get_by_name("overlay"), "overlay", "x=0:y=H/2", NULL, FilterGraph);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to create overlay filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // buffer -> split
    ret = avfilter_link(BufferSrcCtx, 0, SplitFilterCtx, 0);
    if (ret != 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to link src filter and split filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // split output 0 (main) -> overlay input 0 (main)
    ret = avfilter_link(SplitFilterCtx, 0, OverlayFilterCtx, 0);
    if (ret != 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to link split filter and overlay filter main pad -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // split output 1 (tmp) -> crop
    ret = avfilter_link(SplitFilterCtx, 1, CropFilterCtx, 0);
    if (ret != 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to link split filter's second pad and crop filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // crop -> vflip
    ret = avfilter_link(CropFilterCtx, 0, VflipFilterCtx, 0);
    if (ret != 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to link crop filter and vflip filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // vflip (flip) -> overlay input 1
    ret = avfilter_link(VflipFilterCtx, 0, OverlayFilterCtx, 1);
    if (ret != 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to link vflip filter and overlay filter's second pad -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // overlay -> buffersink
    ret = avfilter_link(OverlayFilterCtx, 0, BufferSinkCtx, 0);
    if (ret != 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to link overlay filter and sink filter -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // Check the graph and configure all links and formats.
    ret = avfilter_graph_config(FilterGraph, NULL);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to configure filter graph -- line:%d\n", __FUNCTION__, __LINE__);
        goto _end;
    }

    // Write a human-readable dump of the configured graph to GraphFile.txt.
    GraphString = avfilter_graph_dump(FilterGraph, NULL);
    if (GraphString != NULL)
    {
        FILE *GraphFile = fopen("GraphFile.txt", "w");
        if (GraphFile != NULL)
        {
            fwrite(GraphString, 1, strlen(GraphString), GraphFile);
            fclose(GraphFile);
        }
    }
    av_free(GraphString);

    // OutFrame needs no buffer of its own: av_buffersink_get_frame() hands it reference-counted data.
    InFrame = av_frame_alloc();
    OutFrame = av_frame_alloc();
    InFrameBuffer = (uint8_t *)av_malloc(PictureSize);
    if (InFrame == NULL || OutFrame == NULL || InFrameBuffer == NULL)
    {
        av_log(NULL, AV_LOG_ERROR, "[%s] Failed to allocate frames -- line:%d\n", __FUNCTION__, __LINE__);
        ret = AVERROR(ENOMEM);
        goto _end;
    }

    // Point InFrame's data[]/linesize[] into InFrameBuffer. YUV420P is planar:
    // Y (w*h bytes), then U at offset w*h, then V at offset w*h*5/4.
    av_image_fill_arrays(InFrame->data, InFrame->linesize, InFrameBuffer, AV_PIX_FMT_YUV420P, InWidth, InHeight, 1);
    InFrame->width = InWidth;
    InFrame->height = InHeight;
    InFrame->format = AV_PIX_FMT_YUV420P;

    while (!InputEof)
    {
        // Read one picture at a time; a short read means the input is exhausted.
        ReadSize = fread(InFrameBuffer, 1, PictureSize, IN_FILE);
        if (ReadSize < PictureSize)
        {
            // Pushing NULL signals EOF, so the graph flushes the frames it still holds.
            InputEof = 1;
            ret = av_buffersrc_add_frame(BufferSrcCtx, NULL);
        }
        else
        {
            // pts counts frames in the 1/25 time base declared for the buffer source;
            // overlay uses timestamps to pair frames from its two inputs.
            InFrame->pts = Pts++;
            // InFrame is not reference-counted, so buffersrc copies its data and
            // InFrameBuffer can be reused for the next picture.
            ret = av_buffersrc_add_frame(BufferSrcCtx, InFrame);
        }
        if (ret < 0)
        {
            av_log(NULL, AV_LOG_ERROR, "[%s] Error while feeding the filter graph -- line:%d\n", __FUNCTION__, __LINE__);
            goto _end;
        }

        // Pull every filtered picture that is ready now.
        while (1)
        {
            ret = av_buffersink_get_frame(BufferSinkCtx, OutFrame);
            if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
            {
                break; // the graph needs more input, or it has been fully drained
            }
            if (ret < 0)
            {
                av_log(NULL, AV_LOG_ERROR, "[%s] Error while getting a filtered frame -- line:%d\n", __FUNCTION__, __LINE__);
                goto _end;
            }

            // Write the Y, U and V planes row by row, since linesize may exceed the visible width.
            if (OutFrame->format == AV_PIX_FMT_YUV420P)
            {
                for (int i = 0; i < OutFrame->height; i++) // Y: width x height
                {
                    fwrite(OutFrame->data[0] + OutFrame->linesize[0] * i, 1, OutFrame->width, OUT_FILE);
                }
                for (int i = 0; i < OutFrame->height / 2; i++) // U: (width/2) x (height/2)
                {
                    fwrite(OutFrame->data[1] + OutFrame->linesize[1] * i, 1, OutFrame->width / 2, OUT_FILE);
                }
                for (int i = 0; i < OutFrame->height / 2; i++) // V: (width/2) x (height/2)
                {
                    fwrite(OutFrame->data[2] + OutFrame->linesize[2] * i, 1, OutFrame->width / 2, OUT_FILE);
                }
            }

            if (++FrameCount % 25 == 0)
            {
                av_log(NULL, AV_LOG_INFO, "[%s] %u frames have been processed -- line:%d\n", __FUNCTION__, (unsigned)FrameCount, __LINE__);
            }

            av_frame_unref(OutFrame);
        }
    }
    ret = 0;

_end:
    if (IN_FILE != NULL)
    {
        fclose(IN_FILE);
    }
    if (OUT_FILE != NULL)
    {
        fclose(OUT_FILE);
    }
    av_frame_free(&InFrame);  // av_frame_free() accepts a NULL frame
    av_frame_free(&OutFrame);
    av_freep(&InFrameBuffer); // InFrame never owned this buffer, so free it explicitly
    avfilter_graph_free(&FilterGraph); // also frees every AVFilterContext in the graph
    return ret;
}
```
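The `fread` size and the U/V plane offsets used in the code follow from the planar YUV420P (I420) layout: a full-resolution Y plane followed by U and V planes subsampled by 2 in both directions. Below is a small sketch of that arithmetic, assuming even width and height and no row padding. `Yuv420pLayout` and `ComputeYuv420pLayout` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>

// Byte layout of one tightly packed YUV420P picture:
// [ Y: w*h ][ U: (w/2)*(h/2) ][ V: (w/2)*(h/2) ]
struct Yuv420pLayout {
    std::size_t ySize;
    std::size_t uvSize;
    std::size_t uOffset;
    std::size_t vOffset;
    std::size_t frameSize;
};

// Assumes even width and height, as in the 1920x1080 example.
Yuv420pLayout ComputeYuv420pLayout(std::size_t width, std::size_t height) {
    Yuv420pLayout l{};
    l.ySize = width * height;
    l.uvSize = (width / 2) * (height / 2);
    l.uOffset = l.ySize;                  // equals w * h
    l.vOffset = l.ySize + l.uvSize;       // equals w * h * 5 / 4
    l.frameSize = l.ySize + 2 * l.uvSize; // equals w * h * 3 / 2
    return l;
}
```

For 1920x1080 this gives a 3,110,400-byte picture with U at offset 2,073,600 and V at offset 2,592,000, matching `InWidth * InHeight * 3 / 2`, `InWidth * InHeight` and `InWidth * InHeight * 5 / 4`. To inspect the raw output, a command along the lines of `ffplay -f rawvideo -pixel_format yuv420p -video_size 1920x1080 out.yuv` should work, with out.yuv standing in for whatever file was passed as `outFileName`.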