
Overview

This project uses Sdcb.FFmpeg, an open-source project by Zhou Jie.

Project repository: https://github.com/sdcb/Sdcb.FFmpeg/

Implementation based on: https://github.com/sdcb/ffmpeg-muxing-video-demo

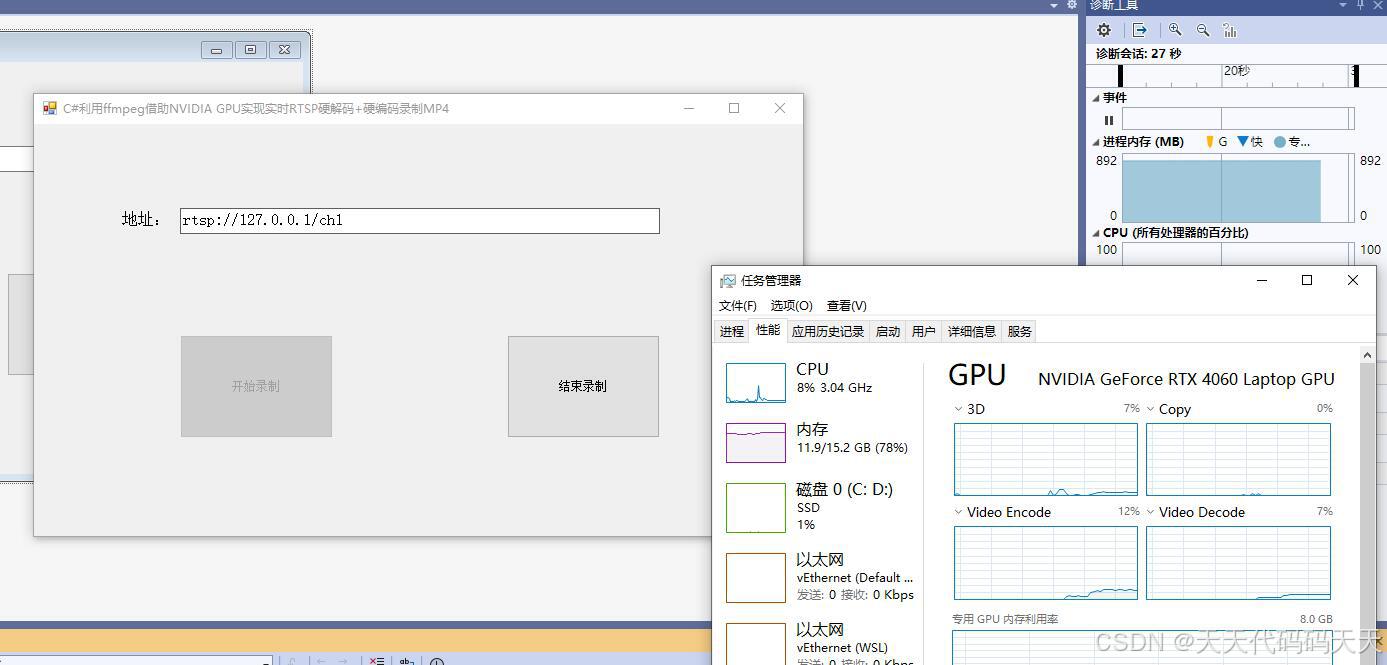

Result

Real-time RTSP recording to MP4 in C# with FFmpeg, using NVIDIA GPU hardware decoding and hardware encoding

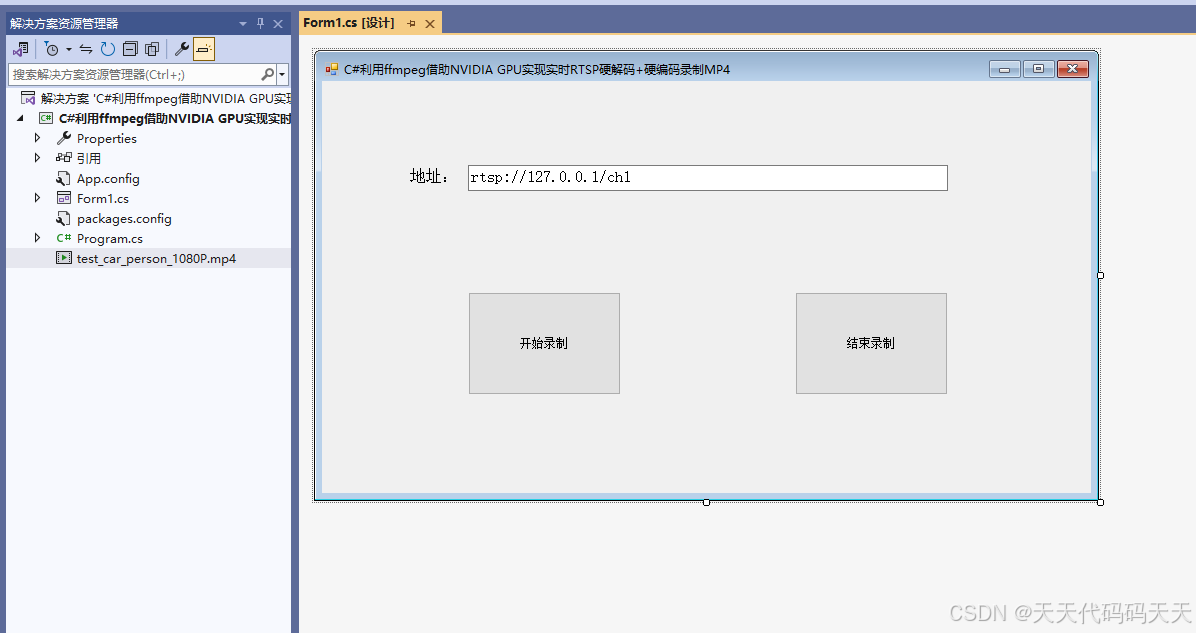

Project

Code

```csharp
using Sdcb.FFmpeg.Codecs;
using Sdcb.FFmpeg.Formats;
using Sdcb.FFmpeg.Raw;
using Sdcb.FFmpeg.Toolboxs.Extensions;
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;
using System.Windows.Forms;

namespace Sdcb.FFmpegDemo
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        CancellationTokenSource cts;

        /// <summary>
        /// Start recording
        /// </summary>
        /// <param name="sender"></param>
        /// <param name="e"></param>
        private void button1_Click(object sender, EventArgs e)
        {
            button1.Enabled = false;
            button2.Enabled = true;

            cts = new CancellationTokenSource();
            // Capture the token now, so a later click that replaces cts
            // does not change which token this recording observes.
            CancellationToken token = cts.Token;
            string rtsp_url = txtURL.Text;

            // Name of the output video file.
            string outputFile = "output.mp4";

            // Record on a background thread so the UI stays responsive.
            Task.Run(() => Recording(rtsp_url, outputFile, token));
        }

        void Recording(string url, string outputFile, CancellationToken cancellationToken)
        {
            // Output frame rate: 25 frames per second.
            AVRational frameRate = new AVRational(25, 1);

            // Output bit rate: 16 * 1024 * 1024 bit/s (about 16.8 Mbit/s).
            long bitRate = 16 * 1024 * 1024;

            // Alternative input: read an image sequence from a folder.
            // "%03d" means the images are numbered with three digits (001.jpg, 002.jpg, ...).
            //string sourceFolder = @".\src\%03d.jpg";
            //FormatContext srcFc = FormatContext.OpenInputUrl(sourceFolder, options: new MediaDictionary
            //{
            //    ["framerate"] = frameRate.ToString()
            //});

            // Open the RTSP input and locate its video stream.
            FormatContext srcFc = FormatContext.OpenInputUrl(url);
            srcFc.LoadStreamInfo();
            MediaStream srcVideo = srcFc.GetVideoStream();
            CodecParameters srcCodecParameters = srcVideo.Codecpar;

            // NVIDIA hardware H.264 decoder; the camera stream must be H.264
            // (an H.265 camera would need hevc_cuvid instead).
            CodecContext videoDecoder = new CodecContext(Codec.FindDecoderByName("h264_cuvid"));
            videoDecoder.FillParameters(srcCodecParameters);
            videoDecoder.Open();

            // Handy for checking which H.264 decoders/encoders this FFmpeg build provides:
            //var d = Codec.FindDecoders(AVCodecID.H264).Select(x => x.Name);
            //var e = Codec.FindEncoders(AVCodecID.H264).Select(x => x.Name);

            // MP4 output, encoded by the NVIDIA NVENC hardware H.264 encoder.
            FormatContext dstFc = FormatContext.AllocOutput(OutputFormat.Guess("mp4"));
            dstFc.VideoCodec = Codec.FindEncoderByName("h264_nvenc");
            MediaStream vstream = dstFc.NewStream(dstFc.VideoCodec);
            CodecContext vcodec = new CodecContext(dstFc.VideoCodec)
            {
                Width = srcCodecParameters.Width,
                Height = srcCodecParameters.Height,
                TimeBase = frameRate.Inverse(),
                PixelFormat = AVPixelFormat.Yuv420p,
                // MP4 expects SPS/PPS as codec extradata (global header), not in-band.
                Flags = AV_CODEC_FLAG.GlobalHeader,
                BitRate = bitRate,
            };
            vcodec.Open(dstFc.VideoCodec);
            vstream.Codecpar.CopyFrom(vcodec);
            vstream.TimeBase = vcodec.TimeBase;

            IOContext io = IOContext.OpenWrite(outputFile);
            dstFc.Pb = io;
            dstFc.WriteHeader();

            // Pipeline:
            // src        -- srcFc.ReadPackets()          -->
            // src Packet -- DecodePackets(videoDecoder)  -->
            // src Frame  -- ConvertFrames(vcodec)        -->
            // dst Frame  -- EncodeFrames(vcodec)         -->
            // dst Packet -- dstFc.InterleavedWritePacket -->
            // dst
            foreach (Packet packet in srcFc
                .ReadPackets().Where(x => x.StreamIndex == srcVideo.Index)
                .DecodePackets(videoDecoder)
                .ConvertFrames(vcodec)
                .EncodeFrames(vcodec))
            {
                try
                {
                    // WriteHeader may have changed vstream.TimeBase, so convert timestamps.
                    packet.RescaleTimestamp(vcodec.TimeBase, vstream.TimeBase);
                    packet.StreamIndex = vstream.Index;
                    dstFc.InterleavedWritePacket(packet);
                    // Only checked after a packet has arrived and been written.
                    if (cancellationToken.IsCancellationRequested) break;
                }
                finally
                {
                    packet.Unref();
                }
            }

            // Writes the MP4 index (moov box); a file without it is generally not playable.
            dstFc.WriteTrailer();

            io.Dispose();
            vcodec.Dispose();
            dstFc.Dispose();
            videoDecoder.Dispose();
            srcFc.Dispose();
        }

        /// <summary>
        /// Stop recording
        /// </summary>
        /// <param name="sender"></param>
        /// <param name="e"></param>
        private void button2_Click(object sender, EventArgs e)
        {
            button2.Enabled = false;
            button1.Enabled = true;
            cts.Cancel();
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            button2.Enabled = false;
            button1.Enabled = true;
            // Forward FFmpeg's log output to the console.
            Sdcb.FFmpeg.Utils.FFmpegLogger.LogWriter = (level, msg) => Console.WriteLine(msg);
        }
    }
}
```
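About the `ConvertFrames(vcodec)` step: when `h264_cuvid` is opened as a plain decoder, as in the listing (no CUDA hardware device or frames context supplied), FFmpeg normally returns 8-bit H.264 frames as NV12 in system memory, while the encoder is configured for `AVPixelFormat.Yuv420p`. Each frame therefore makes a round trip through the CPU for a pixel-format conversion, presumably done with libswscale. The sketch below shows what that conversion amounts to, using only the .NET base library. It assumes tightly packed buffers with even width and height (real frames carry per-row padding, which swscale handles), and `Nv12ToI420` is a hypothetical helper, not part of Sdcb.FFmpeg.

```csharp
using System;

// Hypothetical helper: converts a tightly packed NV12 frame (no row padding)
// into planar I420 (FFmpeg's yuv420p).
// NV12: full-resolution Y plane, then one half-resolution plane of interleaved U,V bytes.
// I420: full-resolution Y plane, then a half-resolution U plane, then a V plane.
static byte[] Nv12ToI420(byte[] nv12, int width, int height)
{
    if (width % 2 != 0 || height % 2 != 0)
        throw new ArgumentException("This sketch assumes even width and height.");
    int ySize = width * height;
    int chromaSize = ySize / 4;   // (width/2) * (height/2) samples per chroma plane
    if (nv12.Length != ySize + 2 * chromaSize)
        throw new ArgumentException("Buffer size does not match width and height.");

    var i420 = new byte[nv12.Length];
    // The luma plane has the same layout in both formats.
    Array.Copy(nv12, 0, i420, 0, ySize);

    // De-interleave UVUVUV... into a U plane followed by a V plane.
    for (int i = 0; i < chromaSize; i++)
    {
        i420[ySize + i] = nv12[ySize + 2 * i];                  // U
        i420[ySize + chromaSize + i] = nv12[ySize + 2 * i + 1]; // V
    }
    return i420;
}

// 4x2 test frame: 8 luma bytes, then two interleaved U,V pairs.
byte[] frame = { 1, 2, 3, 4, 5, 6, 7, 8, 10, 20, 11, 21 };
Console.WriteLine(string.Join(",", Nv12ToI420(frame, 4, 2)));
// prints 1,2,3,4,5,6,7,8,10,11,20,21
```

Since `h264_nvenc` also accepts NV12 input, configuring the encoder for NV12 instead of `Yuv420p` may make this CPU conversion unnecessary; that variant is not tested here.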
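About `packet.RescaleTimestamp(vcodec.TimeBase, vstream.TimeBase)`: assigning `vstream.TimeBase` before `WriteHeader()` is only a request, and the muxer may replace it. FFmpeg's MP4 (mov) muxer, for instance, typically doubles a small video timescale until it reaches at least 10000, so the requested 1/25 becomes 1/12800, while the encoder keeps counting in 1/25 s ticks. The sketch below re-implements, for illustration only, the arithmetic FFmpeg uses for this (`av_rescale_q`: multiply by the source time base, divide by the destination one, round to nearest with ties away from zero). `Rescale` is a hypothetical helper; the listing itself should keep calling `RescaleTimestamp`.

```csharp
using System;
using System.Numerics;

// Hypothetical helper mirroring av_rescale_q: converts ts from a time base of
// fromNum/fromDen seconds per tick to toNum/toDen seconds per tick.
// BigInteger avoids overflow in the intermediate product.
// Assumes both time bases are positive.
static long Rescale(long ts, int fromNum, int fromDen, int toNum, int toDen)
{
    BigInteger num = (BigInteger)ts * fromNum * toDen;
    BigInteger den = (BigInteger)fromDen * toNum;
    BigInteger q = BigInteger.DivRem(BigInteger.Abs(num), den, out BigInteger rem);
    if (rem * 2 >= den) q += 1;   // round half away from zero
    return (long)(num.Sign < 0 ? -q : q);
}

// Encoder time base 1/25 -> muxer time base 1/12800: one frame = 512 ticks.
foreach (long pts in new long[] { 0, 1, 2, 50 })
    Console.WriteLine($"{pts} -> {Rescale(pts, 1, 25, 1, 12800)}");
// prints:
// 0 -> 0
// 1 -> 512
// 2 -> 1024
// 50 -> 25600
```

Skipping this conversion would make every frame last 1/12800 s instead of 1/25 s in such a file, which is why the rescale call sits inside the write loop.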

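About stopping: in the listing, `button2_Click` re-enables the Start button immediately, before the background task has reached `WriteTrailer()`. Clicking Start again at that moment can open `output.mp4` while the previous recording is still being finalized. In addition, the `Task` returned by `Task.Run` is discarded, so an exception thrown inside `Recording` is never observed, and the `Dispose` calls after the loop are then skipped. A common remedy is to keep the task, put the finalization in a `finally` block, and await the task in the Stop handler before re-enabling Start. The sketch below shows that pattern with plain .NET types only; `FakePackets` and `Record` are hypothetical stand-ins for `ReadPackets()` and `Recording`, not Sdcb.FFmpeg code.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for srcFc.ReadPackets(): yields "packets" until cancelled.
static IEnumerable<int> FakePackets(CancellationToken token)
{
    for (int i = 0; !token.IsCancellationRequested; i++)
    {
        Thread.Sleep(10);   // simulates waiting for the next packet from the camera
        yield return i;
    }
}

// Hypothetical stand-in for Recording(): cleanup sits in finally, so it runs
// whether the loop ends by cancellation, end of stream, or an exception.
static List<string> Record(CancellationToken token)
{
    var log = new List<string> { "header" };
    try
    {
        foreach (int packet in FakePackets(token))
        {
            log.Add($"packet {packet}");
            if (token.IsCancellationRequested) break;
        }
    }
    finally
    {
        log.Add("trailer");   // WriteTrailer() and the Dispose() calls would go here
    }
    return log;
}

using var cts = new CancellationTokenSource();
// Keep the task (button1_Click discards it) so completion and errors can be observed.
Task<List<string>> recording = Task.Run(() => Record(cts.Token));

await Task.Delay(100);                  // let it "record" briefly
cts.Cancel();                           // Stop: request cancellation...
List<string> result = await recording;  // ...then wait until finalization has finished
Console.WriteLine($"{result[0]}, {result.Count - 2} packets, {result[result.Count - 1]}");
```

In the form, this would mean storing the task in a field (a hypothetical `Task recordingTask`) and making `button2_Click` an `async void` handler that calls `cts.Cancel()`, then `await recordingTask`, and only then sets `button1.Enabled = true`. Cancellation still cannot interrupt a `ReadPackets()` call that is blocked on a camera that has stopped sending data; FFmpeg's RTSP demuxer has a socket timeout option for that case, but its name differs between FFmpeg versions, so check the documentation of the build in use.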