import json

import concurrent.futures

import requests

from datasets import Dataset

from ragas.metrics import faithfulness, context_recall, context_precision, answer_relevancy

from ragas.run_config import RunConfig

from FlagEmbedding import FlagModel

from transformers import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

from ragas.embeddings import BaseRagasEmbeddings

import os

from typing import List, Optional, Any

from langchain.llms.base import LLM

from langchain.callbacks.manager import CallbackManagerForLLMRun

from ragas.llms import LangchainLLMWrapper

from ragas import evaluate

from langchain_openai import ChatOpenAI

# **********评估模型-LLM**********

class MyLLM(LLM):

# Support Qwen2

tokenizer: AutoTokenizer = None

model: AutoModelForCausalLM = None

def __init__(self, mode_name_or_path: str):

super().__init__()

os.environ['CUDA_VISIBLE_DEVICES'] = '6,7' # 使用第一个GPU

self.tokenizer = AutoTokenizer.from_pretrained(mode_name_or_path)

self.model = AutoModelForCausalLM.from_pretrained(mode_name_or_path, device_map="cuda").cuda()

self.model.generation_config = GenerationConfig.from_pretrained(mode_name_or_path)

def _call(

self,

prompt: str,

stop: Optional[List[str]] = None,

run_manager: Optional[CallbackManagerForLLMRun] = None,

**kwargs: Any,

) -> str:

messages = [{"role": "user", "content": prompt}]

input_ids = self.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

model_inputs = self.tokenizer([input_ids], return_tensors="pt").to(self.model.device)

generated_ids = self.model.generate(model_inputs.input_ids, max_new_tokens=2048)

generated_ids = [

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

]

response = self.tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

return response

@property

def _llm_type(self):

return "CompassJudger-1"

# **********评估模型-Embedding**********

class MyEmbedding(BaseRagasEmbeddings):

def __init__(self, path, run_config, max_length=512, batch_size=16):

self.model = FlagModel(path, query_instruction_for_retrieval="为这个句子生成表示以用于检索相关文章:")

self.max_length = max_length

self.batch_size = batch_size

self.run_config = run_config

def embed_documents(self, texts: List[str]) -> List[List[float]]:

return self.model.encode_corpus(texts, self.batch_size, self.max_length).tolist()

def embed_query(self, text: str) -> List[float]:

return self.model.encode_queries(text, self.batch_size, self.max_length).tolist()

llm_path = "/home/alg/qdl/model/Qwen2_5-7B-Instruct"

# llm_path = "/home/alg/qdl/model/CompassJudger-1-7B-Instruct"

emb_path = "/home/alg/qdl/model/BAAI/bge-large-zh-v1.5"

url = 'http://10.7.82.78:3000/v1/chat-messages'

data = {

"inputs": {},

"response_mode": "blocking",

"conversation_id": "",

"user": "abc-123",

"files": [

]

}

headers = {

'Content-Type': 'application/json',

'Authorization': 'Bearer app-k363atU8NLiwMmbCgdIcDQLB'

}

answers = []

questions = []

ground_truths = []

contexts = []

# 读取用例文件

def read_cases(case_path):

# 每行一个用例,json格式:question context answer weight

samples = []

with open(case_path, 'r') as file:

for line in file:

item = json.loads(line.rstrip())

samples.append(item)

return samples

def call_model(url, data, header):

# print(f"request:{data}")

# print(f'url:{url}')

# print(f'data:{data}')

# print(f'header:{header}')

response = requests.post(url, json=data, headers=header)

# 打印响应

# print("状态码:", response.status_code)

# print("响应内容:", response.json())

response = response.json()

# 将评估结果添加到样本中

return response

# 调用dify接口

def evaluate_task(sample, i):

similar = sample['similar']

goldanswer = sample['goldanswer']

# 调用dify接口

questions.append(similar)

ground_truths.append(goldanswer)

results = ''

try:

print(f'执行第{i + 1}个')

data['query'] = similar

response = call_model(url, data, headers)

answer = response['answer']

context = [item['content'] for item in response['metadata']['retriever_resources']]

contexts.append(context)

answers.append(answer)

# print(f'answer:{answer}')

# print(f'context:{context}')

except:

print(f"第{i}个用例出现异常,模型响应为:{response}")

answers.append('异常')

contexts.append(['异常'])

return results

# 1,读取用例文件

case_path = 'rag_cases.jsonl'

samples = read_cases(case_path)

# 2,提取相似问,调dify接口获取答案和上下文

with concurrent.futures.ThreadPoolExecutor(max_workers=1) as executor:

for i in range(len(samples)):

# executor.submit(get_eval_score,samples[i],i)

executor.submit(evaluate_task(samples[i], i))

# 3,执行评测

data = {

"question": questions,

"answer": answers,

"contexts": contexts,

"ground_truth": ground_truths

}

print(f'data:{data}')

# Convert dict to dataset

dataset = Dataset.from_dict(data)

print("dataset")

print(dataset)

run_config = RunConfig(timeout=800, max_wait=800)

#dify接口

qwen_service_url = "http://xx.xx.net.cn:50015/v1"

embedding_model = MyEmbedding(emb_path, run_config)

# my_llm = LangchainLLMWrapper(MyLLM(llm_path), run_config)

my_llm=LangchainLLMWrapper( ChatOpenAI(

model_name='Qwen2-72B-Instruct-AWQ-Preprod',

openai_api_base=qwen_service_url,

openai_api_key='EMPTY',

))

print("开始评测*************")

result = evaluate(

dataset,

metrics=[context_recall, context_precision, answer_relevancy, faithfulness],

llm=my_llm,

embeddings=embedding_model,

run_config=run_config

)

df = result.to_pandas()

print(result)

df.to_csv("result.csv", index=False)

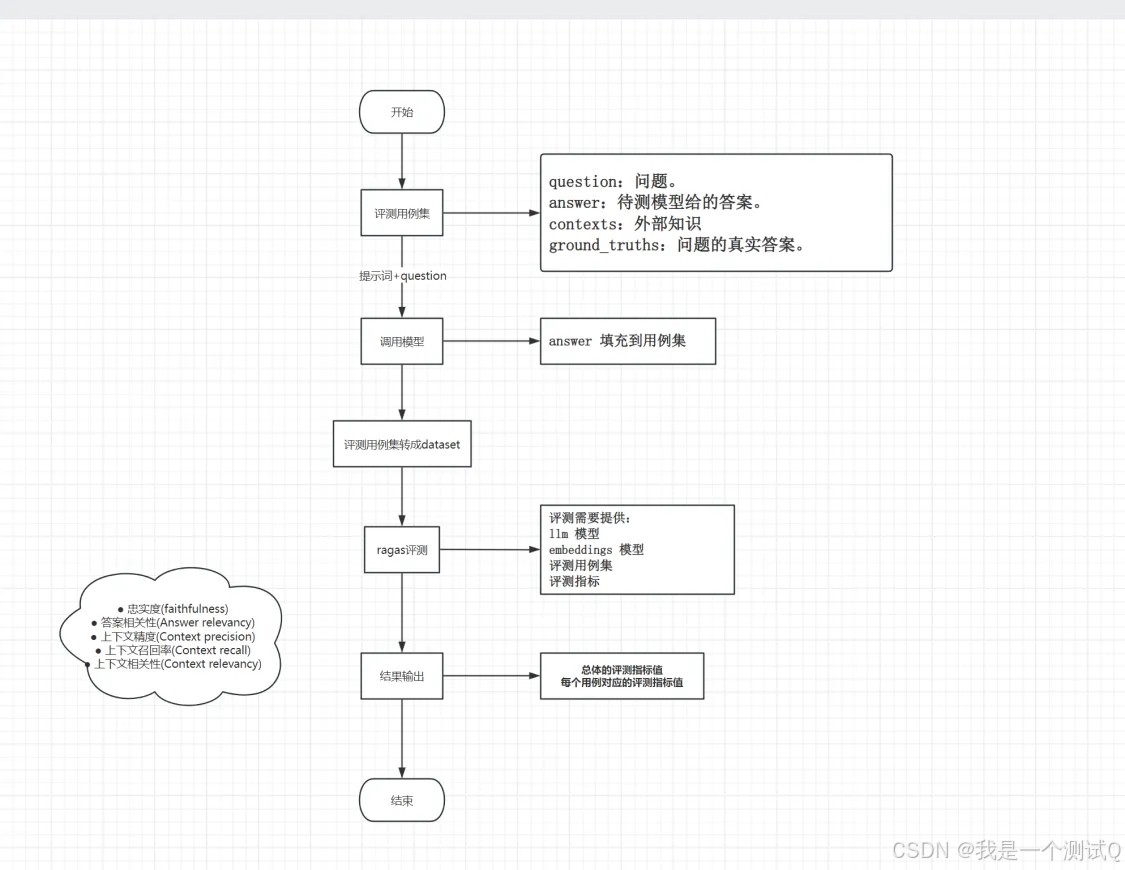

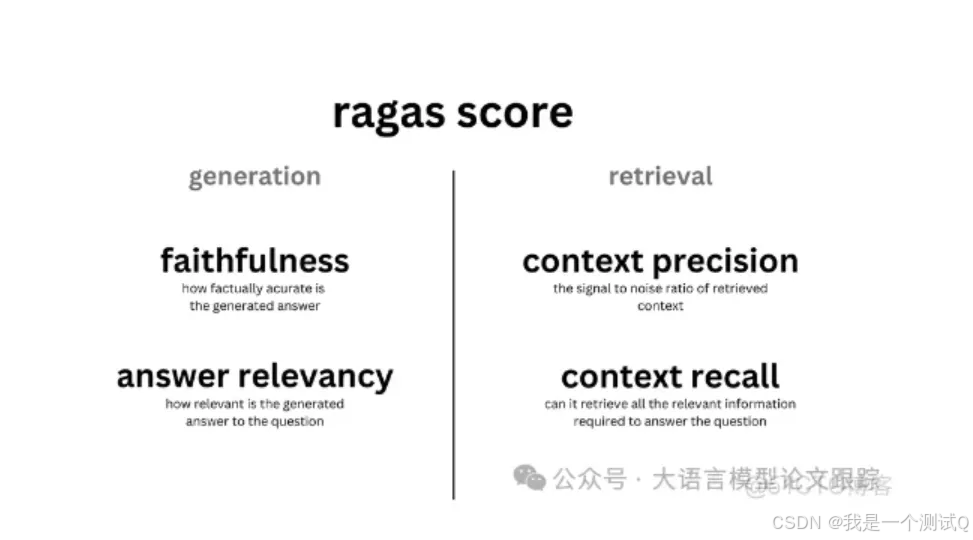

Ragas是一个框架,它可以帮助你从不同的方面评估你的问答(QA)流程。它为你提供了一些指标来评估你的问答系统的不同方面,具体包括:

- 评估检索(context) 的指标:提供了上下文相关性(context_relevancy)和上下文召回率(context_recall),这些可以衡量你的检索系统的性能。

- 评估生成(answer) 的指标:提供了忠实度(faithfulness),用以衡量生成的信息是否准确无误;以及答案相关性(answer_relevancy),用以衡量答案对问题的切题程度。

特别地,正如前面也提到的,为了使用 RAGAS,你所需要的只是一些问题。但是,如果你使用上下文召回率(context_recall),还需要一些参考答案。

数据格式

RAGAs 需要以下信息:

- question:用户输入的问题。

- answer:从 RAG 系统生成的答案(由LLM给出)。

- contexts:根据用户的问题从外部知识源检索的上下文即与问题相关的文档。

- ground_truths: 人类提供的基于问题的真实(正确)答案。 这是唯一的需要人类提供的信息。

RAG评测指标

Ragas提供了五种评估指标包括:

- 忠实度(faithfulness)

- 答案相关性(Answer relevancy)

- 上下文精度(Context precision)

- 上下文召回率(Context recall)

- 上下文相关性(Context relevancy)

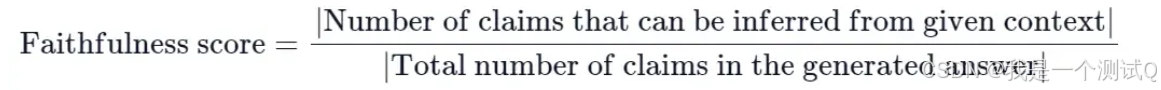

2.1忠实度

忠实度指标衡量生成答案与给定上下文的事实一致性。它是根据答案和检索到的上下文来计算的。答案被缩放到(0,1)范围内。数值越高越好。

如果生成答案中提出的所有主张都能从给定的上下文中推断出来,则认为该答案是忠实的。为了计算这个指标,首先需要识别生成答案中的一系列主张。然后,将这些主张与给定的上下文进行交叉检查,以确定它们是否可以从上下文中推断出来。忠实度得分由以下公式给出:

reagas评分

Given a question, an answer, and sentences from the answer analyze the complexity of each sentence given under 'sentences' and break down each sentence into one or more fully understandable statements while also ensuring no pronouns are used in each statement. Format the outputs in JSON.

给定一个问题、一个答案以及答案中的句子,分析"sentences"下每个句子的复杂度,并将每个句子分解成一个或多个完全可理解的陈述,同时确保每个陈述中不使用代词。将输出格式化为JSON。

输入:

{

"question": "阿尔伯特·爱因斯坦是谁,他最出名的是什么?",

"answer": "他是一位出生在德国的理论物理学家,被广泛认为是有史以来最伟大和最有影响力的物理学家之一。他最出名的是发展了相对论,同时也对量子力学理论的发展做出了重要贡献。",

"sentences": {

"0": "阿尔伯特·爱因斯坦是一位出生在德国的理论物理学家,被广泛认为是有史以来最伟大和最有影响力的物理学家之一。",

"1": "他最出名的是发展了相对论,同时也对量子力学理论的发展做出了重要贡献。"

}

}

输出:

{

"SentenceComponents": [

{

"sentence_index": 0,

"simpler_statements": [

"阿尔伯特·爱因斯坦是一位出生在德国的理论物理学家。",

"阿尔伯特·爱因斯坦被认为是有史以来最伟大和最有影响力的物理学家之一。"

]

},

{

"sentence_index": 1,

"simpler_statements": [

"阿尔伯特·爱因斯坦最出名的是发展了相对论。",

"阿尔伯特·爱因斯坦还对量子力学理论的发展做出了重要贡献。"

]

}

]

}

我们的任务是根据给定的上下文来判断一系列陈述的忠实度。对于每个陈述,如果该陈述可以根据上下文直接推断出来,则必须返回裁决结果为1;如果该陈述不能根据上下文直接推断出来,则返回裁决结果为0。

```json

{

"NLIStatementInput": {

"context": "约翰是XYZ大学的学生。他正在攻读计算机科学学位。这个学期他注册了几门课程,包括数据结构、算法和数据库管理。约翰是一个勤奋的学生,他花大量的时间学习和完成作业。他经常在图书馆待到很晚来完成他的项目。",

"statements": [

"约翰主修生物学。",

"约翰正在上人工智能课程。",

"约翰是一个专注的学生。",

"约翰有一份兼职工作。"

]

},

"NLIStatementOutput": {

"statements": [

{

"StatementFaithfulnessAnswer": {

"statement": "约翰主修生物学。",

"reason": "约翰的专业明确提到是计算机科学。没有信息表明他主修生物学。",

"verdict": 0

}

},

{

"StatementFaithfulnessAnswer": {

"statement": "约翰正在上人工智能课程。",

"reason": "上下文提到了约翰目前正在注册的课程,而人工智能并未被提及。因此,不能推断出约翰正在上人工智能课程。",

"verdict": 0

}

},

{

"StatementFaithfulnessAnswer": {

"statement": "约翰是一个专注的学生。",

"reason": "上下文说明他花大量的时间学习和完成作业。此外,提到他经常在图书馆待到很晚来完成他的项目,这暗示了他的专注。",

"verdict": 1

}

},

{

"StatementFaithfulnessAnswer": {

"statement": "约翰有一份兼职工作。",

"reason": "上下文中没有给出约翰有兼职工作的信息。",

"verdict": 0

}

}

]

}

}例子

from ragas.database_schema import SingleTurnSample

from ragas.metrics import Faithfulness

sample = SingleTurnSample(

user_input="When was the first super bowl?",

response="The first superbowl was held on Jan 15, 1967",

retrieved_contexts=[

"The First AFL--NFL World Championship Game was an American football game played on January 15, 1967, at the Los Angeles Memorial Coliseum in Los Angeles."

]

)

scorer = Faithfulness()

await scorer.single_turn_ascore(sample)2.2 答案相关性(Answer relevancy/ResponseRelevancy )

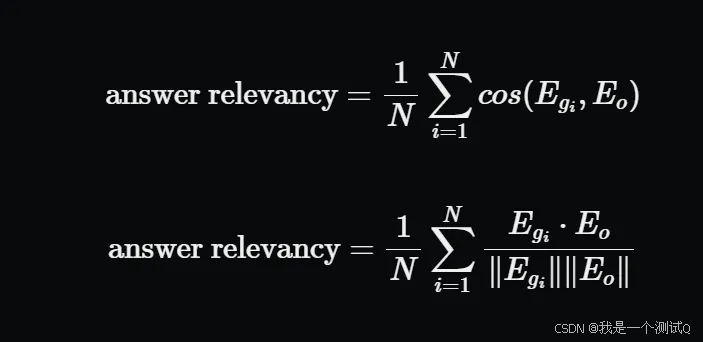

评估指标"答案相关性"重点评估生成的答案(answer)与用户问题(question)之间相关程度。不完整或包含冗余信息的答案会被赋予较低的分数,而相关性更好的答案则获得更高的分数。这个指标是使用用户输入、检索到的上下文和响应来计算的。

答案相关性被定义为原始用户输入与一系列人造问题之间的平均余弦相似度,这些问题是基于响应生成的(反向工程):

-

\( e_{g} \) 是生成问题的句子嵌入(embedding)。

-

\( e_{o} \) 是原始问题的句子嵌入(embedding)。

-

\( n \) 是生成问题的数量,默认为3。

为了计算这个分数,大型语言模型(LLM)被提示多次为生成的答案生成一个合适的问题,然后测量这些生成的问题与原始问题之间的平均余弦相似度。背后的理念是,如果生成的答案准确地回应了最初的问题,那么LLM应该能够从答案中生成与原始问题一致的问题。

ragas评分

为给定的答案生成一个问题,并识别答案是否含糊其辞。如果答案是含糊其辞的,则将含糊其辞标记为1;如果答案是明确的,则标记为0。含糊其辞的答案是指那些回避、模糊或不明确的答案。例如,"我不知道"或"我不确定"就是含糊其辞的答案。

{

"ResponseRelevanceInput": {

"response": "阿尔伯特·爱因斯坦出生于德国。"

},

"ResponseRelevanceOutput": {

"question": "阿尔伯特·爱因斯坦在哪里出生?",

"noncommittal": 0

}

},

{

"ResponseRelevanceInput": {

"response": "我不知道2023年发明的智能手机的突破性功能,因为我不了解2022年之后的信息。"

},

"ResponseRelevanceOutput": {

"question": "2023年发明的智能手机的突破性功能是什么?",

"noncommittal": 1

}

}

calculate_similarity(self, question: str, generated_questions: list[str]):例子

from ragas import SingleTurnSample

from ragas.metrics import ResponseRelevancy

sample = SingleTurnSample(

user_input="When was the first super bowl?",

response="The first superbowl was held on Jan 15, 1967",

retrieved_contexts=[

"The First AFL--NFL World Championship Game was an American football game played on January 15, 1967, at the Los Angeles Memorial Coliseum in Los Angeles."

]

)

scorer = ResponseRelevancy()

await scorer.single_turn_ascore(sample)2.3 噪声敏感性 Noise Sensitivity

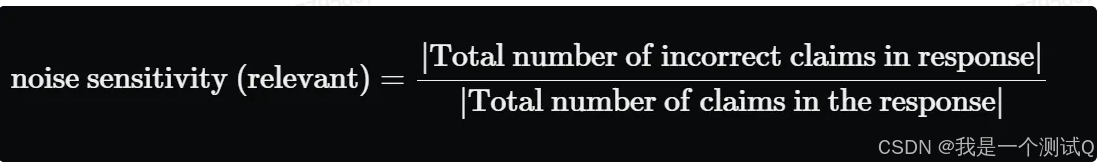

噪声敏感性衡量系统在使用相关或不相关的检索文档时提供错误回答的频率。分数范围从0到1,较低的值表示更好的性能。噪声敏感性是使用用户输入、参考标准、响应和检索到的上下文来计算的。

为了估计噪声敏感性,生成的响应中的每个主张都被检查,以确定它是否基于真实情况正确,以及是否可以归因于相关(或不相关)的检索上下文。理想情况下,答案中的所有主张都应得到相关检索上下文的支持。

给定一个问题、一个答案以及答案中的句子,分析"sentences"下每个句子的复杂度,并将每个句子分解成一个或多个完全可理解的陈述,同时确保每个陈述中不使用代词。将输出格式化为JSON。

您的任务是根据给定的上下文来判断一系列陈述的忠实度。对于每个陈述,如果该陈述可以根据上下文直接推断出来,则必须返回裁决结果为1;如果该陈述不能根据上下文直接推断出来,则返回裁决结果为0。

from ragas.dataset_schema import SingleTurnSample

from ragas.metrics import NoiseSensitivity

sample = SingleTurnSample(

user_input="What is the Life Insurance Corporation of India (LIC) known for?",

response="The Life Insurance Corporation of India (LIC) is the largest insurance company in India, known for its vast portfolio of investments. LIC contributes to the financial stability of the country.",

reference="The Life Insurance Corporation of India (LIC) is the largest insurance company in India, established in 1956 through the nationalization of the insurance industry. It is known for managing a large portfolio of investments.",

retrieved_contexts=[

"The Life Insurance Corporation of India (LIC) was established in 1956 following the nationalization of the insurance industry in India.",

"LIC is the largest insurance company in India, with a vast network of policyholders and huge investments.",

"As the largest institutional investor in India, LIC manages substantial funds, contributing to the financial stability of the country.",

"The Indian economy is one of the fastest-growing major economies in the world, thanks to sectors like finance, technology, manufacturing etc."

]

)

scorer = NoiseSensitivity()

await scorer.single_turn_ascore(sample)2.4 上下文精度(Context precision)

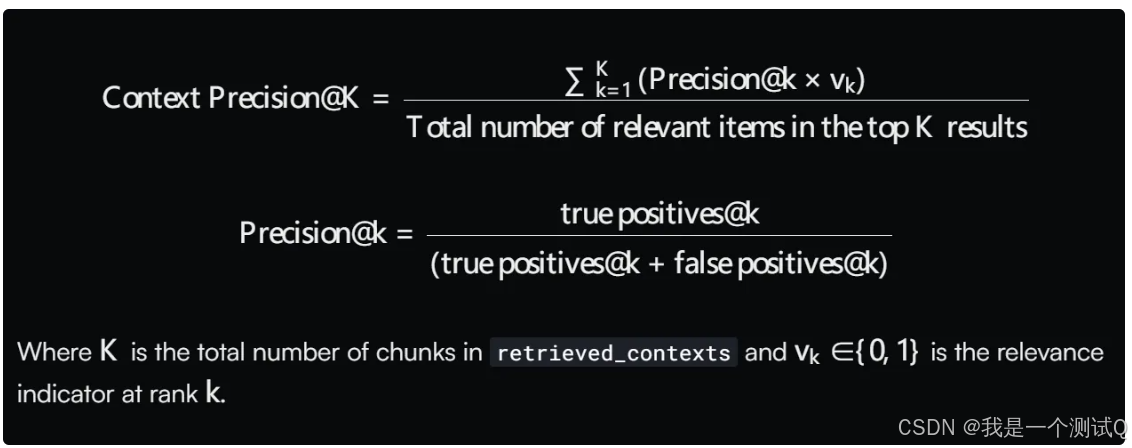

上下文精度是一个衡量检索到的上下文中相关块的比例的指标。它是通过计算上下文中每个块的精度@k的平均值来得出的。精度@k是排名第k的相关块的数量与排名第k的总块数的比率。

'Given question, answer and context verify if the context was useful in arriving at the given answer. Give verdict as "1" if useful and "0" if not with json output.

遍历检索到的上下文,给定问题、答案和上下文,验证上下文是否有助于得出给定答案。如果上下文有用,则给出的判断结果为"1";如果没用,则为"0",并以JSON格式输出。

例子:

{

"QAC": {

"question": "世界上最高的山峰是什么?",

"context": "安第斯山脉是世界上最长的大陆山脉,位于南美洲。它横跨七个国家,拥有西半球许多最高的山峰。这个山脉以其多样的生态系统而闻名,包括高海拔的安第斯高原和亚马逊雨林。",

"answer": "珠穆朗玛峰。"

},

"Verification": {

"reason": "提供的上下文讨论了安第斯山脉,虽然令人印象深刻,但并未包括珠穆朗玛峰,也没有直接关联到关于世界最高峰的问题。",

"verdict": 0

}

}

{

"QAC": {

"question": "谁赢得了2020年ICC板球世界杯?",

"context": "2022年ICC男子T20世界杯于2022年10月16日至11月13日在澳大利亚举行,这是该锦标赛的第八届。原定于2020年举行,但由于COVID-19大流行而推迟。英格兰队在决赛中以5个球的优势击败巴基斯坦队,赢得了他们的第二个ICC男子T20世界杯冠军。",

"answer": "英格兰"

},

"Verification": {

"reason": "上下文有助于澄清关于2020年ICC世界杯的情况,并指出英格兰是原定于2020年举行但实际在2022年举行的锦标赛的获胜者。",

"verdict": 1

}

}

from ragas import SingleTurnSample

from ragas.metrics import LLMContextPrecisionWithoutReference

context_precision = LLMContextPrecisionWithoutReference()

sample = SingleTurnSample(

user_input="Where is the Eiffel Tower located?",

response="The Eiffel Tower is located in Paris.",

retrieved_contexts=["The Eiffel Tower is located in Paris."],

)

await context_precision.single_turn_ascore(sample)2.5 上下文召回率(Context recall)

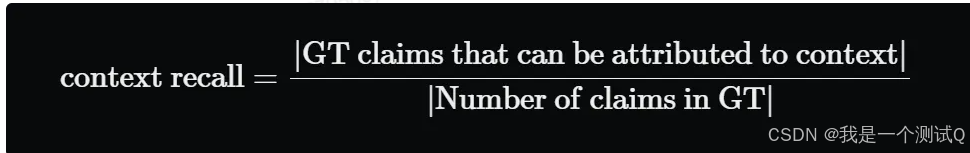

上下文召回率衡量成功检索到的相关文档(或信息片段)的数量。它关注的是不要错过重要的结果。召回率越高,意味着遗漏的相关文档越少。简而言之,召回率是关于不要错过任何重要内容。由于它涉及到不要错过任何内容,计算上下文召回率总是需要一个参考来比较。

上下文召回率(Context recall)衡量检索到的上下文(Context)与人类提供的真实答案(ground truth)的一致程度。它是根据ground truth和检索到的Context计算出来的,取值范围在 0 到 1 之间,值越高表示性能越好。

为了根据真实答案(ground truth)估算上下文召回率(Context recall),分析真实答案中的每个句子以确定它是否可以归因于检索到的Context。 在理想情况下,真实答案中的所有句子都应归因于检索到的Context

Given a context, and an answer, analyze each sentence in the answer and classify if the sentence can be attributed to the given context or not. Use only 'Yes' (1) or 'No' (0) as a binary classification. Output json with reason.

给定一个上下文和一个答案,分析答案中的每个句子,并分类判断该句子是否可以归因于给定的上下文。仅使用"是"(1)或"否"(0)作为二元分类。输出带有原因的JSON。

{

"QCA": {

"question": "你能告诉我关于阿尔伯特·爱因斯坦的什么?",

"context": "阿尔伯特·爱因斯坦(1879年3月14日 - 1955年4月18日)是一位出生在德国的理论物理学家,被广泛认为是有史以来最伟大和最有影响力的科学家之一。他以发展相对论而闻名,同时也对量子力学做出了重要贡献,因此成为现代物理学在20世纪前几十年对自然科学理解革命性重塑中的中心人物。他的质能等价公式E = mc^2,源于相对论理论,被称为"世界上最著名的方程"。他因"对理论物理学的贡献,特别是他对光电效应定律的发现"而获得1921年诺贝尔物理学奖,这是量子理论发展中的一个关键步骤。他的工作也因其对科学哲学的影响而闻名。在1999年英国《物理世界》杂志对全球130位顶尖物理学家的调查中,爱因斯坦被评为有史以来最伟大的物理学家。他的智力成就和独创性使爱因斯坦成为天才的代名词。",

"answer": "阿尔伯特·爱因斯坦,出生于1879年3月14日,是一位出生在德国的理论物理学家,被广泛认为是有史以来最伟大和最有影响力的科学家之一。他因对理论物理学的贡献而获得1921年诺贝尔物理学奖。他在1905年发表了4篇论文。爱因斯坦于1895年移居瑞士。"

},

"ContextRecallClassifications": {

"classifications": [

{

"statement": "阿尔伯特·爱因斯坦,出生于1879年3月14日,是一位出生在德国的理论物理学家,被广泛认为是有史以来最伟大和最有影响力的科学家之一。",

"reason": "上下文中清楚提到了爱因斯坦的出生日期。",

"attributed": 1

},

{

"statement": "他因对理论物理学的贡献而获得1921年诺贝尔物理学奖。",

"reason": "给定的上下文中有完全相同的句子。",

"attributed": 1

},

{

"statement": "他在1905年发表了4篇论文。",

"reason": "给定的上下文中没有提到他写的论文。",

"attributed": 0

},

{

"statement": "爱因斯坦于1895年移居瑞士。",

"reason": "给定的上下文中没有支持这一说法的证据。",

"attributed": 0

}

]

}

}

from ragas.dataset_schema import SingleTurnSample

from ragas.metrics import LLMContextRecall

sample = SingleTurnSample(

user_input="Where is the Eiffel Tower located?",

response="The Eiffel Tower is located in Paris.",

reference="The Eiffel Tower is located in Paris.",

retrieved_contexts=["Paris is the capital of France."],

)

context_recall = LLMContextRecall()

await context_recall.single_turn_ascore(sample)2.6 上下文实体召回率(ContextEntityRecall)

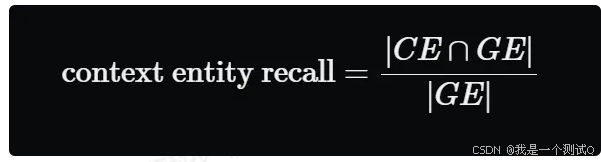

基于参考上下文和检索到的上下文中存在的实体数量相对于参考上下文中存在的实体数量。简单来说,它衡量的是从参考上下文中回忆起的实体的比例。这个指标在基于事实的应用案例中很有用,如旅游帮助台、历史问答等。这个指标可以帮助评估实体的检索机制,通过与参考上下文中的实体进行比较,因为在实体重要的案例中,我们需要检索到的上下文能够涵盖这些实体。

要计算这个指标,我们使用两个集合:

- GE,表示参考上下文中存在的实体集合;

- CE,表示检索到的上下文中存在的实体集合。

然后我们取这两个集合交集中的元素数量,并将其除以存在于 RR 中的元素数量,公式如下

"Given a text, extract unique entities without repetition. Ensure you consider different forms or mentions of the same entity as a single entity."

给定一段文本,提取不重复的独特实体。确保你将同一实体的不同形式或提及视为单一实体。

{

"StringIO": {

"text": "埃菲尔铁塔位于法国巴黎,是全球最具标志性的地标之一。每年有数百万游客因其令人叹为观止的城市景观而慕名而来。它于1889年完工,正是为了赶上1889年的世界博览会。"

},

"EntitiesList": {

"entities": ["埃菲尔铁塔", "巴黎", "法国", "1889", "世界博览会"]

}

}

num_entities_in_both = len(

set(context_entities).intersection(set(ground_truth_entities))

)

return num_entities_in_both / (len(ground_truth_entities) + 1e-8)

from ragas import SingleTurnSample

from ragas.metrics import ContextEntityRecall

sample = SingleTurnSample(

reference="The Eiffel Tower is located in Paris.",

retrieved_contexts=["The Eiffel Tower is located in Paris."],

)

scorer = ContextEntityRecall()

await scorer.single_turn_ascore(sample)检索+测评

评测集

{"questions":"对于哪些级别的拟录用人员需要进行背景调查","ground_truths":["对M7和P9及以上级别的拟录用人员,人资行政中心还应对其履历的真实性进行背景调查"],"answers":"","contexts":[]}

{"questions":"无故缺席公司总部组织的信息安全培训的人员,本人会受到什么处罚","ground_truths":["处以200-500元处罚"],"answers":"","contexts":[]}

{"questions":"哪些行为会被处以200-500元处罚","ground_truths":["抵触、无故缺席公司总部、省区组织的信息安全培训的人","未经授权,擅自扫的人员","盗用、擅自挪用的人员","违反办公电脑管理办法其他规定,第二次发现对责任员工处200至500元罚款"],"answers":"","contexts":[]}检索文档并测评检索的结果

import json

import os

from modelscope import AutoModelForCausalLM, AutoTokenizer

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

DASHSCOPE_API_KEY = 'sk-xx'

os.environ["DASHSCOPE_API_KEY"] = DASHSCOPE_API_KEY

os.environ["OPENAI_API_KEY"] = 'EMPTY'

model_name = "/home/alg/qdl/model/Qwen2_5-7B-Instruct"

os.environ["DASHSCOPE_API_KEY"] = DASHSCOPE_API_KEY

# **********需要评测的模型**********

model = AutoModelForCausalLM.from_pretrained(

model_name,

torch_dtype="auto",

device_map="auto"

)

tokenizer = AutoTokenizer.from_pretrained(model_name)

# **********需要评测的模型**********

# **********评估模型**********

llm_path = "/home/alg/qdl/model/CompassJudger-1-7B-Instruct"

emb_path = "/home/alg/qdl/model/BAAI/bge-large-zh-v1.5"

# qwen_service_url = "http://10.199.xx.xx:50015/v1/chat/"

# os.environ["OPENAI_API_BASE"] = qwen_service_url

from datasets import load_dataset

import pandas as pd

from datasets import Dataset

from typing import List, Optional, Any

from datasets import Dataset

from ragas.metrics import faithfulness, context_recall, context_precision, answer_relevancy

from ragas import evaluate

from ragas.llms import LangchainLLMWrapper

from ragas.embeddings import BaseRagasEmbeddings

from ragas.run_config import RunConfig

from FlagEmbedding import FlagModel

from transformers import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

from langchain.llms.base import LLM

from langchain.callbacks.manager import CallbackManagerForLLMRun

import requests

# **********评估模型-LLM**********

class MyLLM(LLM):

# Support Qwen2

tokenizer: AutoTokenizer = None

model: AutoModelForCausalLM = None

def __init__(self, mode_name_or_path: str):

super().__init__()

os.environ['CUDA_VISIBLE_DEVICES'] = '6,7' # 使用第一个GPU

self.tokenizer = AutoTokenizer.from_pretrained(mode_name_or_path)

self.model = AutoModelForCausalLM.from_pretrained(mode_name_or_path, device_map="cuda").cuda()

self.model.generation_config = GenerationConfig.from_pretrained(mode_name_or_path)

def _call(

self,

prompt: str,

stop: Optional[List[str]] = None,

run_manager: Optional[CallbackManagerForLLMRun] = None,

**kwargs: Any,

) -> str:

messages = [{"role": "user", "content": prompt}]

input_ids = self.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

model_inputs = self.tokenizer([input_ids], return_tensors="pt").to(self.model.device)

generated_ids = self.model.generate(model_inputs.input_ids, max_new_tokens=2048)

generated_ids = [

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

]

response = self.tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

return response

@property

def _llm_type(self):

return "CompassJudger-1"

# **********评估模型-Embedding**********

class MyEmbedding(BaseRagasEmbeddings):

def __init__(self, path, run_config, max_length=512, batch_size=16):

self.model = FlagModel(path, query_instruction_for_retrieval="为这个句子生成表示以用于检索相关文章:")

self.max_length = max_length

self.batch_size = batch_size

self.run_config = run_config

def embed_documents(self, texts: List[str]) -> List[List[float]]:

return self.model.encode_corpus(texts, self.batch_size, self.max_length).tolist()

def embed_query(self, text: str) -> List[float]:

return self.model.encode_queries(text, self.batch_size, self.max_length).tolist()

from langchain_community.embeddings import HuggingFaceBgeEmbeddings

from langchain_community.document_loaders import TextLoader

from langchain.chains import RetrievalQA

from langchain_community.llms import Tongyi

from langchain_openai import OpenAI

from langchain_community.vectorstores import Chroma

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.prompts import ChatPromptTemplate

from vllm import LLM

from langchain.schema.runnable import RunnableMap

from langchain.schema.output_parser import StrOutputParser

# ***加载文档

bge_embeddings = HuggingFaceBgeEmbeddings(model_name=emb_path)

# 1,创建loaders 加载文档

loaders = [

TextLoader("/home/alg/qdl/rags/docs/zhidu_1.txt", encoding='utf8'),

TextLoader("/home/alg/qdl/rags/docs/zhidu_2.txt", encoding='utf8'),

TextLoader("/home/alg/qdl/rags/docs/zhidu_3.txt", encoding='utf8'),

]

print('开始加载文档')

docs = []

for loader in loaders:

docs.extend(loader.load())

print(len(docs))

# 分割文本

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)

all_splits = text_splitter.split_documents(docs)

# 2,创建向量数据库

vectorstore = Chroma.from_documents(all_splits, bge_embeddings)

# 3. 设置检索器

retriever = vectorstore.as_retriever(search_type="similarity", search_kwargs={"k": 5}) # top-k = 5

# 4. 创建RAG链

# 创建prompt模板

prompt = """You are an assistant for question-answering tasks.

Use the following pieces of retrieved context to answer the question.

If you don't know the answer, just say that you don't know.

Use five sentences maximum and keep the answer concise.

Question: {question}

Context: {context}

Answer:

"""

prompt = ChatPromptTemplate.from_template(prompt)

# llm = Tongyi(base_url=qwen_service_url) # 替换为适当的Qwen初始化

# llm = LLM(model=model_name, trust_remote_code=True)

# llm = OpenAI()

pipe = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

max_new_tokens=200,

temperature=0.1

)

from langchain_huggingface import HuggingFacePipeline

llm = HuggingFacePipeline(pipeline=pipe)

# rag_chain = RetrievalQA.from_llm(

# llm,

# retriever=retriever,

# return_source_documents=True, # including source documents in output

# # chain_type_kwargs={'prompt': prompt} # customizing the prompt

# prompt= prompt

# )

rag_chain = RetrievalQA.from_chain_type(

llm=llm,

retriever=retriever, # here we are using the vectorstore as a retriever

# chain_type="stuff",

return_source_documents=True, # including source documents in output

chain_type_kwargs={'prompt': prompt}

)

import re

#评测数据

data_files = "/home/alg/qdl/rags/docs/rag.jsonl"

answers = []

questions = []

ground_truths = []

contexts = []

reference = []

with open(data_files, 'r') as file:

for line in file:

data = json.loads(line)

questions.append(data["questions"])

ground_truths.append(str(data["ground_truths"]))

print("questions")

print(questions)

for query in questions:

# 调用模型

result = rag_chain.invoke(query)

text = result['result']

# 正则提取答案

match = re.search(r'Answer:(.*?)Assistant:', text, re.DOTALL)

if (match):

answer = match.group(1).strip()

print(f'query-{query},answer-{answer}')

else:

match = re.search(r'Answer:(.*)', text.replace('\n', ''), re.DOTALL)

if (match):

answer = match.group(1).strip()

print(f'query-{query},answer-{answer}')

else:

answer = ''

print('没有提取到')

print('result')

print(result)

answers.append(answer)

contexts.append([docs.page_content for docs in result['source_documents']])

print("*****************************执行完成********************************************")

# To dict

data = {

"question": questions,

"answer": answers,

"contexts": contexts,

"ground_truth": ground_truths

}

# Convert dict to dataset

dataset = Dataset.from_dict(data)

print("dataset")

print(dataset)

run_config = RunConfig(timeout=800, max_wait=800)

embedding_model = MyEmbedding(emb_path, run_config)

my_llm = LangchainLLMWrapper(MyLLM(llm_path), run_config)

print("开始评测*************")

result = evaluate(

dataset,

metrics=[context_recall, context_precision, answer_relevancy, faithfulness],

llm=my_llm,

embeddings=embedding_model,

run_config=run_config

)

df = result.to_pandas()

print(result)

df.to_csv("result.csv", index=False)基于dify平台的rag评测

dify上添加大模型rag的流程,通过调用dify接口拿到问题答案和上下文相关内容

import json

import concurrent.futures

import requests

from datasets import Dataset

from ragas.metrics import faithfulness, context_recall, context_precision, answer_relevancy

from ragas.run_config import RunConfig

from FlagEmbedding import FlagModel

from transformers import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

from ragas.embeddings import BaseRagasEmbeddings

import os

from typing import List, Optional, Any

from langchain.llms.base import LLM

from langchain.callbacks.manager import CallbackManagerForLLMRun

from ragas.llms import LangchainLLMWrapper

from ragas import evaluate

from langchain_openai import ChatOpenAI

# **********评估模型-LLM**********

class MyLLM(LLM):

# Support Qwen2

tokenizer: AutoTokenizer = None

model: AutoModelForCausalLM = None

def __init__(self, mode_name_or_path: str):

super().__init__()

os.environ['CUDA_VISIBLE_DEVICES'] = '6,7' # 使用第一个GPU

self.tokenizer = AutoTokenizer.from_pretrained(mode_name_or_path)

self.model = AutoModelForCausalLM.from_pretrained(mode_name_or_path, device_map="cuda").cuda()

self.model.generation_config = GenerationConfig.from_pretrained(mode_name_or_path)

def _call(

self,

prompt: str,

stop: Optional[List[str]] = None,

run_manager: Optional[CallbackManagerForLLMRun] = None,

**kwargs: Any,

) -> str:

messages = [{"role": "user", "content": prompt}]

input_ids = self.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

model_inputs = self.tokenizer([input_ids], return_tensors="pt").to(self.model.device)

generated_ids = self.model.generate(model_inputs.input_ids, max_new_tokens=2048)

generated_ids = [

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

]

response = self.tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

return response

@property

def _llm_type(self):

return "CompassJudger-1"

# **********评估模型-Embedding**********

class MyEmbedding(BaseRagasEmbeddings):

def __init__(self, path, run_config, max_length=512, batch_size=16):

self.model = FlagModel(path, query_instruction_for_retrieval="为这个句子生成表示以用于检索相关文章:")

self.max_length = max_length

self.batch_size = batch_size

self.run_config = run_config

def embed_documents(self, texts: List[str]) -> List[List[float]]:

return self.model.encode_corpus(texts, self.batch_size, self.max_length).tolist()

def embed_query(self, text: str) -> List[float]:

return self.model.encode_queries(text, self.batch_size, self.max_length).tolist()

llm_path = "/home/alg/qdl/model/Qwen2_5-7B-Instruct"

# llm_path = "/home/alg/qdl/model/CompassJudger-1-7B-Instruct"

emb_path = "/home/alg/qdl/model/BAAI/bge-large-zh-v1.5"

url = 'http://10.7.82.78:3000/v1/chat-messages'

data = {

"inputs": {},

"response_mode": "blocking",

"conversation_id": "",

"user": "abc-123",

"files": [

]

}

headers = {

'Content-Type': 'application/json',

'Authorization': 'Bearer app-k363atU8NLiwMmbCgdIcDQLB'

}

answers = []

questions = []

ground_truths = []

contexts = []

# 读取用例文件

def read_cases(case_path):

# 每行一个用例,json格式:question context answer weight

samples = []

with open(case_path, 'r') as file:

for line in file:

item = json.loads(line.rstrip())

samples.append(item)

return samples

def call_model(url, data, header):

# print(f"request:{data}")

# print(f'url:{url}')

# print(f'data:{data}')

# print(f'header:{header}')

response = requests.post(url, json=data, headers=header)

# 打印响应

# print("状态码:", response.status_code)

# print("响应内容:", response.json())

response = response.json()

# 将评估结果添加到样本中

return response

# 调用dify接口

def evaluate_task(sample, i):

similar = sample['similar']

goldanswer = sample['goldanswer']

# 调用dify接口

questions.append(similar)

ground_truths.append(goldanswer)

results = ''

try:

print(f'执行第{i + 1}个')

data['query'] = similar

response = call_model(url, data, headers)

answer = response['answer']

context = [item['content'] for item in response['metadata']['retriever_resources']]

contexts.append(context)

answers.append(answer)

# print(f'answer:{answer}')

# print(f'context:{context}')

except:

print(f"第{i}个用例出现异常,模型响应为:{response}")

answers.append('异常')

contexts.append(['异常'])

return results

# 1,读取用例文件

case_path = 'rag_cases.jsonl'

samples = read_cases(case_path)

# 2,提取相似问,调dify接口获取答案和上下文

with concurrent.futures.ThreadPoolExecutor(max_workers=1) as executor:

for i in range(len(samples)):

# executor.submit(get_eval_score,samples[i],i)

executor.submit(evaluate_task(samples[i], i))

# 3,执行评测

data = {

"question": questions,

"answer": answers,

"contexts": contexts,

"ground_truth": ground_truths

}

print(f'data:{data}')

# Convert dict to dataset

dataset = Dataset.from_dict(data)

print("dataset")

print(dataset)

run_config = RunConfig(timeout=800, max_wait=800)

#dify接口

qwen_service_url = "http://xx.net.cn:50015/v1"

embedding_model = MyEmbedding(emb_path, run_config)

# my_llm = LangchainLLMWrapper(MyLLM(llm_path), run_config)

my_llm=LangchainLLMWrapper( ChatOpenAI(

model_name='Qwen2-72B-Instruct-AWQ-Preprod',

openai_api_base=qwen_service_url,

openai_api_key='EMPTY',

))

print("开始评测*************")

result = evaluate(

dataset,

metrics=[context_recall, context_precision, answer_relevancy, faithfulness],

llm=my_llm,

embeddings=embedding_model,

run_config=run_config

)

df = result.to_pandas()

print(result)

df.to_csv("result.csv", index=False)