1 Node Planning

| Node type | Hostname | IP plan: internal management | IP plan: instance communication |
|-----------------|------------|----------------|----------------|
| Controller node | controller | 192.168.100.10 | 192.168.200.10 |
| Compute node | compute01 | 192.168.100.20 | 192.168.200.20 |
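Kolla-ansible's bootstrap step later modifies the hosts file itself. If the nodes need to resolve each other by name before that, the plan above can be turned into hosts entries. This is a minimal sketch and not part of the original procedure. `add_hosts_entries` is a hypothetical helper, and it assumes the lowercase hostnames and internal management IPs from the table.

```shell
# add_hosts_entries FILE: append the planned management-network entries to
# FILE (e.g. /etc/hosts), skipping any line that is already present.
add_hosts_entries() {
    local hosts_file=$1 entry
    for entry in "192.168.100.10 controller" "192.168.100.20 compute01"; do
        # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
        if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
            printf '%s\n' "$entry" >> "$hosts_file"
        fi
    done
}
```

Run it as root on each node, for example `add_hosts_entries /etc/hosts`. Running it twice leaves the file unchanged, because lines that already exist are skipped.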

2 Environment Preparation

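On the physical host, create two virtual machines running openEuler 22.09 to serve as the OpenStack controller node and compute node. In the processor settings, enable "Virtualize Intel VT-x/EPT or AMD-V/RVI (V)".

- Controller node: 4 vCPUs, 8 GB of memory and a 120 GB system disk.
- Compute node: 2 vCPUs, 8 GB of memory, a 120 GB system disk and four additional 20 GB disks.

Give each VM two network interfaces:

- Network interface 2 is the internal network. Its NIC uses host-only mode and carries controller communication and management traffic.
- Network interface 1 is the external network. Its NIC uses NAT mode and mainly serves as the controller node's data network.

Cloud instances created after the cluster is deployed use the NIC of network interface 2.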

3 Basic System Configuration

- Set the hostnames

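Run the following on the controller node and on the compute node, respectively:

    # On the controller node
    [root@localhost ~]# hostnamectl set-hostname controller
    # Start a new shell so the prompt shows the new hostname
    [root@localhost ~]# bash
    # On the compute node
    [root@localhost ~]# hostnamectl set-hostname compute01
    [root@localhost ~]# bash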

- Update the system packages

Update the system packages on all nodes to get the latest features and bug fixes.

    [root@controller ~]# dnf -y update && dnf -y upgrade
    [root@compute01 ~]# dnf -y update && dnf -y upgrade

4 Install Ansible and Kolla-ansible

Run the following command to download and install pip3.

    [root@controller ~]# dnf -y install python3-pip

Use a PyPI mirror (here the Tsinghua University mirror) to speed up pip package downloads.

    [root@controller ~]# mkdir -p ~/.pip
    [root@controller ~]# cat << WXIC > ~/.pip/pip.conf
    > [global]
    > index-url = https://pypi.tuna.tsinghua.edu.cn/simple
    > [install]
    > trusted-host=pypi.tuna.tsinghua.edu.cn
    > WXIC

Upgrade pip for Python 3 to the latest version.

    [root@controller ~]# pip3 install --ignore-installed --upgrade pip

Install Ansible with the following commands and check the installed version.

    [root@controller ~]# pip3 install -U 'ansible>=4,<6'
    [root@controller ~]# ansible --version

Install Kolla-ansible and the dependencies that the Kolla-ansible environment requires.

    [root@controller ~]# dnf -y install git python3-devel libffi-devel gcc openssl-devel python3-libselinux
    [root@controller ~]# dnf -y install openstack-kolla-ansible
    [root@controller ~]# kolla-ansible --version

Create the kolla-ansible configuration directories.

    [root@controller ~]# mkdir -p /etc/kolla/{globals.d,config}
    [root@controller ~]# chown $USER:$USER /etc/kolla

Copy the inventory files to the /etc/ansible directory.

    [root@controller ~]# mkdir /etc/ansible
    [root@controller ~]# cp /usr/share/kolla-ansible/ansible/inventory/* /etc/ansible

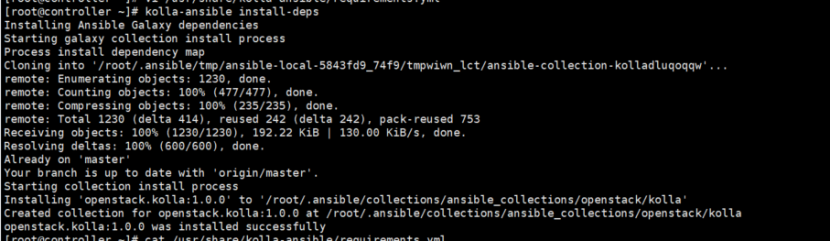

Run the following commands to install the Ansible Galaxy dependencies.

    [root@controller ~]# pip3 install cryptography==38.0.4
    # Change the branch (version) of the kolla collection to master
    [root@controller ~]# cat /usr/share/kolla-ansible/requirements.yml
    ---
    collections:
      - name: https://opendev.org/openstack/ansible-collection-kolla
        type: git
        version: master
    [root@controller kolla-ansible]# kolla-ansible install-deps

5 Ansible Runtime Configuration Tuning

Tune Ansible to speed up execution, as follows.

    [root@controller kolla-ansible]# cat << MXD > /etc/ansible/ansible.cfg
    > [defaults]
    > # Disable SSH host key checking
    > host_key_checking=False
    > # Recommended when sudo is not used
    > pipelining=True
    > # Number of parallel forks used to run tasks
    > forks=100
    > timeout=800
    > # Disable warnings
    > devel_warning = False
    > deprecation_warnings=False
    > # Show the time spent on each task
    > callback_whitelist = profile_tasks
    > # Log Ansible output to this file (relative path)
    > log_path= wxic_cloud.log
    > # Inventory file (relative path)
    > inventory = openstack_cluster
    > # Shell used to run commands; can also be changed to /bin/bash
    > executable = /bin/sh
    > remote_port = 22
    > remote_user = root
    > # Default output verbosity; valid values are 0, 1, 2, 3, 4, etc.
    > # Higher values produce more detailed output
    > verbosity = 0
    > show_custom_stats = True
    > interpreter_python = auto_legacy_silent
    > [colors]
    > # Successful tasks are shown in green
    > ok = green
    > # Skipped tasks are shown in bright gray
    > skip = bright gray
    > # Warnings are shown in bright purple
    > warn = bright purple
    > [privilege_escalation]
    > become_user = root
    > [galaxy]
    > display_progress = True
    > MXD

Use the ansible-config view command to view the modified configuration.

    [root@controller ~]# ansible-config view
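A small INI reader is enough to spot-check individual settings, without reading the whole `ansible-config view` output. This is a sketch under stated assumptions:

- `ini_get` is a hypothetical helper.
- It only understands `key = value` lines. INI files read by Python's configparser, as Ansible's are, may also use `key: value`, which this does not handle.
- It skips lines that start with `#` or `;`.

```shell
# ini_get FILE SECTION KEY: print the value of KEY inside [SECTION] of an
# INI-style file such as ansible.cfg; exits 1 if the key is not found.
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        # Section header: remember whether it is the one we want.
        /^[ \t]*\[/ {
            line = $0
            gsub(/^[ \t]+|[ \t]+$/, "", line)
            in_section = (line == section)
            next
        }
        # "key = value" lines inside the section; comments start with # or ;
        in_section && /^[ \t]*[^#; \t]/ {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { value = v; found = 1 }
        }
        END { if (found) print value; else exit 1 }
    ' "$1"
}
```

For the file written above, `ini_get /etc/ansible/ansible.cfg defaults forks` should print `100`.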

6 Initial Kolla-ansible Configuration

- Edit the inventory file

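Go to the /etc/ansible directory and edit the openstack_cluster inventory file to specify the cluster's hosts and the groups they belong to. The inventory can also specify the username, password and other details the controller node uses to connect to each node. (Note: ansible_password is the root user's password, and the root password on every node must not consist only of digits.)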

    [root@controller ~]# cd /etc/ansible/
    [root@controller ansible]# awk '!/^#/ && !/^$/' multinode > openstack_cluster
    [root@controller ansible]# cat -n openstack_cluster
         1  [all:vars]
         2  ansible_password=Shq.15541076909
         3  ansible_become=false
         4  [control]
         5  192.168.100.10
         6  [network]
         7  192.168.100.10
         8  [compute]
         9  192.168.100.20
        10  [monitoring]
        11  192.168.100.10
        12  [storage]
        13  192.168.100.20
    ......

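The inventory above defines five groups (control, network, compute, monitoring and storage) that specify the role each node takes, and defines global variables in the all:vars group. The variables in each group are mainly used to connect to the servers:

- ansible_password specifies the password used to log in to the servers.
- ansible_become specifies whether commands are run with sudo.

The contents of the other groups can stay at their defaults and need no changes.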
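Before running `ansible all -m ping`, it can help to confirm that each of the five groups above actually lists a host. This is a minimal sketch, assuming the group names used in this guide. `check_inventory_groups` is hypothetical. It counts only host lines written directly under each `[group]` header, so a group filled through a `[group:children]` section would be reported as empty.

```shell
# check_inventory_groups FILE: warn about groups from the list below that are
# missing from FILE or have no host lines; returns 1 if any group is affected.
check_inventory_groups() {
    local file=$1 group count status=0
    for group in control network compute monitoring storage; do
        # Count non-empty, non-comment lines between "[group]" and the next header.
        count=$(awk -v header="[$group]" '
            $0 == header { in_group = 1; next }
            /^\[/        { in_group = 0 }
            in_group && NF > 0 && $0 !~ /^#/ { n++ }
            END { print n + 0 }' "$file")
        if [ "$count" -eq 0 ]; then
            echo "group [$group] is missing or empty" >&2
            status=1
        fi
    done
    return "$status"
}
```

Usage: `check_inventory_groups /etc/ansible/openstack_cluster && echo ok`.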

- Verify the inventory file

Use the following commands to test connectivity to every host.

    [root@controller ~]# dnf -y install sshpass    # on both nodes
    [root@controller ~]# ansible all -m ping
    [WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details
    localhost | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python3.10"
        },
        "changed": false,
        "ping": "pong"
    }
    192.168.239.10 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python3.10"
        },
        "changed": false,
        "ping": "pong"
    }
    192.168.239.20 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python3.10"
        },
        "changed": false,
        "ping": "pong"
    }

- Configure passwords for the OpenStack service components

When deploying OpenStack Yoga with Kolla-ansible, it is recommended to use the random password generator to create the password for each service:

    [root@controller ~]# kolla-genpwd

Change the Horizon login password to wxic@2024:

    [root@controller ~]# sed -i 's/keystone_admin_password: .*/keystone_admin_password: wxic@2024/g' /etc/kolla/passwords.yml

Verify the change:

    [root@controller ~]# grep keystone_admin /etc/kolla/passwords.yml
    keystone_admin_password: wxic@2024
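The sed command above matches `keystone_admin_password: ` followed by anything, so it relies on kolla-genpwd having already filled in a value. A slightly more defensive variant anchors the key at the start of the line, escapes the new value for sed, and verifies the result. This is a sketch, not Kolla tooling:

- `set_kolla_password` is hypothetical.
- It assumes GNU sed, as shipped with openEuler.
- It does not add YAML quoting, so values that YAML would misread (for example ones starting with `*`, `&` or `!`) still need quotes.

```shell
# set_kolla_password FILE KEY VALUE: replace the whole "KEY: ..." line in a
# Kolla passwords.yml, then confirm that the new line is present.
set_kolla_password() {
    local file=$1 key=$2 value=$3 escaped
    # Escape characters that are special in a sed replacement (&, \ and the | delimiter).
    escaped=$(printf '%s' "$value" | sed 's/[&|\\]/\\&/g')
    # "^KEY:" also matches an empty "KEY:" line, but not keys that merely start with KEY.
    sed -i "s|^${key}:.*|${key}: ${escaped}|" "$file"
    grep -qxF -- "${key}: ${value}" "$file"
}
```

Usage: `set_kolla_password /etc/kolla/passwords.yml keystone_admin_password 'wxic@2024'`. A non-zero exit status means the line was not written as expected.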

- Edit the globals.yml file

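When deploying OpenStack Yoga with Kolla-ansible, the most important step is editing globals.yml. Read the service guides in the official OpenStack documentation and choose the components to install according to your needs.

This deployment installs a fairly large set of components; the full list is in the modified globals.yml file below. Pay particular attention to kolla_internal_vip_address:

- It must be an unused IP address on the same subnet as network_interface, here any unused address in 192.168.239.0/24. This deployment uses 192.168.239.100.
- After deployment, this is the address you use to log in to Horizon.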

    [root@controller ~]# cd /etc/kolla/
    [root@controller kolla]# cp globals.yml{,_bak}
    [root@controller kolla]# cat globals.yml
    ---
    kolla_base_distro: "ubuntu"
    kolla_install_type: "source"
    openstack_release: "yoga"
    kolla_internal_vip_address: "192.168.239.100"
    docker_registry: "quay.nju.edu.cn"
    network_interface: "ens36"
    neutron_external_interface: "ens33"
    neutron_plugin_agent: "openvswitch"
    openstack_region_name: "RegionWxic"
    enable_aodh: "yes"
    enable_barbican: "yes"
    enable_ceilometer: "yes"
    enable_ceilometer_ipmi: "yes"
    enable_cinder: "yes"
    enable_cinder_backup: "yes"
    enable_cinder_backend_lvm: "yes"
    enable_cloudkitty: "yes"
    enable_gnocchi: "yes"
    enable_gnocchi_statsd: "yes"
    enable_manila: "yes"
    enable_manila_backend_generic: "yes"
    enable_neutron_vpnaas: "yes"
    enable_neutron_qos: "yes"
    enable_neutron_bgp_dragent: "yes"
    enable_neutron_provider_networks: "yes"
    enable_redis: "yes"
    enable_swift: "yes"
    glance_backend_file: "yes"
    glance_file_datadir_volume: "/var/lib/glance/wxic/"
    barbican_crypto_plugin: "simple_crypto"
    barbican_library_path: "/usr/lib/libCryptoki2_64.so"
    cinder_volume_group: "cinder-wxic"
    cloudkitty_collector_backend: "gnocchi"
    cloudkitty_storage_backend: "influxdb"
    nova_compute_virt_type: "kvm"
    swift_devices_name: "KOLLA_SWIFT_DATA"
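With this many `enable_*` switches, a quick listing of what is actually turned on makes the file easier to review. This is a minimal sketch, assuming the quoted `"yes"` style used above. `list_enabled_services` is a hypothetical helper. It ignores commented-out lines and any other truthy spellings such as `true`.

```shell
# list_enabled_services FILE: print NAME for every uncommented
# 'enable_NAME: "yes"' (or unquoted yes) line in a Kolla globals.yml.
list_enabled_services() {
    grep -E '^enable_[a-z0-9_]+:[[:space:]]*"?yes"?[[:space:]]*$' "$1" \
        | sed -E 's/^enable_([a-z0-9_]+):.*/\1/'
}
```

Usage: `list_enabled_services /etc/kolla/globals.yml` prints `aodh`, `barbican`, `ceilometer` and so on, one per line.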

Customize some Neutron and Manila settings under /etc/kolla/config/. During deployment, these custom settings override the default configuration.

    [root@controller kolla]# cd /etc/kolla/config/
    [root@controller config]# mkdir neutron
    [root@controller config]# cat << MXD > neutron/dhcp_agent.ini
    > [DEFAULT]
    > dnsmasq_dns_servers = 8.8.8.8,8.8.4.4,223.6.6.6,119.29.29.29
    > MXD
    [root@controller config]# cat << MXD > neutron/ml2_conf.ini
    > [ml2]
    > tenant_network_types = flat,vxlan,vlan
    > [ml2_type_vlan]
    > network_vlan_ranges = provider:10:1000
    > [ml2_type_flat]
    > flat_networks = provider
    > MXD
    [root@controller config]# cat << MXD > neutron/openvswitch_agent.ini
    > [securitygroup]
    > firewall_driver = openvswitch
    > [ovs]
    > bridge_mappings = provider:br-ex
    > MXD
    [root@controller config]# cat << MXD > manila-share.conf
    > [generic]
    > service_instance_flavor_id = 100
    > MXD
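The physical network name has to agree across these files. `flat_networks` and `network_vlan_ranges` in ml2_conf.ini refer to `provider`, and `bridge_mappings` in openvswitch_agent.ini maps `provider` to br-ex. The sketch below checks this consistency, assuming one `key = value` line per setting. `physnets_mapped` is a hypothetical helper, and a wildcard `flat_networks = *` would be reported as unmapped.

```shell
# physnets_mapped ML2_INI OVS_INI: check that every physical network named in
# flat_networks or network_vlan_ranges also appears in bridge_mappings.
physnets_mapped() {
    local ml2=$1 ovs=$2 mapped net status=0
    # "provider:br-ex,other:br-x" -> one physnet name per line: provider, other
    mapped=$(sed -n -E 's/^bridge_mappings[[:space:]]*=[[:space:]]*//p' "$ovs" \
        | tr ',' '\n' | cut -d: -f1 | tr -d ' ')
    # "provider:10:1000" (VLAN range) and "provider" (flat) both yield "provider"
    while IFS= read -r net; do
        [ -n "$net" ] || continue
        if ! printf '%s\n' "$mapped" | grep -qxF "$net"; then
            echo "physnet '${net}' has no bridge_mappings entry" >&2
            status=1
        fi
    done < <(sed -n -E 's/^(flat_networks|network_vlan_ranges)[[:space:]]*=[[:space:]]*//p' "$ml2" \
        | tr ',' '\n' | cut -d: -f1 | tr -d ' ')
    return "$status"
}
```

Usage, from /etc/kolla/config: `physnets_mapped neutron/ml2_conf.ini neutron/openvswitch_agent.ini`.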

7 Storage Node Disk Initialization

- Initialize the Cinder service disk

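On compute01, use one 20 GB disk (/dev/sdc) to create the Cinder volume group. The volume group name must match the cinder_volume_group parameter in globals.yml (cinder-wxic in this deployment).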

    [root@compute01 ~]# pvcreate /dev/sdc
      Physical volume "/dev/sdc" successfully created.
    [root@compute01 ~]# vgcreate cinder-wxic /dev/sdc
      Volume group "cinder-wxic" successfully created
    [root@compute01 ~]# vgs cinder-wxic
      VG          #PV #LV #SN Attr   VSize   VFree
      cinder-wxic   1   0   0 wz--n- <20.00g <20.00g
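Because the volume group name must match `cinder_volume_group`, the value can be read from globals.yml instead of typed twice. This is a minimal sketch. `globals_value` is hypothetical and only handles simple top-level `key: value` or `key: "value"` lines.

```shell
# globals_value FILE KEY: print the value of a top-level "KEY: value" line in a
# Kolla globals.yml, without surrounding double quotes (last match wins).
globals_value() {
    local file=$1 key=$2
    sed -n -E "s/^${key}:[[:space:]]*\"?([^\"]*)\"?[[:space:]]*\$/\1/p" "$file" | tail -n 1
}
```

On the controller, `globals_value /etc/kolla/globals.yml cinder_volume_group` should print `cinder-wxic`, the same name passed to vgcreate on compute01. The same call works for `swift_devices_name`.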

- Initialize the Swift service disks

On compute01, use three 20 GB disks as Swift storage devices and give them a special partition name and file-system labels. Write the Swift_disk_init.sh script to initialize the disks. The partition name KOLLA_SWIFT_DATA must match the swift_devices_name parameter in globals.yml.

    [root@compute01 ~]# cat Swift_disk_init.sh
    #!/bin/bash
    index=0
    for d in sdd sde sdb; do
        # GPT label plus one partition named KOLLA_SWIFT_DATA spanning the disk
        parted /dev/${d} -s -- mklabel gpt mkpart KOLLA_SWIFT_DATA 1% 100%
        # XFS file system labelled d0, d1, d2 in order
        mkfs.xfs -f -L d${index} /dev/${d}1
        (( index++ ))
    done
    [root@compute01 ~]# bash Swift_disk_init.sh
    [root@compute01 ~]# lsblk
    NAME               MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
    sda                  8:0    0  100G  0 disk
    ├─sda1               8:1    0    1G  0 part /boot
    └─sda2               8:2    0   99G  0 part
      ├─openeuler-root 253:0    0 63.9G  0 lvm  /
      ├─openeuler-swap 253:1    0  3.9G  0 lvm  [SWAP]
      └─openeuler-home 253:2    0 31.2G  0 lvm  /home
    sdb                  8:16   0   20G  0 disk
    └─sdb1               8:17   0 19.8G  0 part
    sdc                  8:32   0   20G  0 disk
    sdd                  8:48   0   20G  0 disk
    └─sdd1               8:49   0 19.8G  0 part
    sde                  8:64   0   20G  0 disk
    └─sde1               8:65   0 19.8G  0 part
    [root@compute01 ~]# parted /dev/sdd print
    Model: VMware, VMware Virtual S (scsi)
    Disk /dev/sdd: 21.5GB
    Sector size (logical/physical): 512B/512B
    Partition Table: gpt
    Disk Flags:

    Number  Start  End     Size    File system  Name  Flags
     1      215MB  21.5GB  21.3GB  KOLLA_SWIFT_DATA
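The script formats disks without asking. A dry-run variant that only prints the commands lets you check the device names first. This is a sketch of the same loop, assuming SCSI-style device names where the first partition is `<disk>1` (NVMe devices would use `p1`). `swift_disk_cmds` is hypothetical.

```shell
# swift_disk_cmds DISK...: print, but do not run, the partition and format
# commands from Swift_disk_init.sh for the given disks (labels d0, d1, ...).
swift_disk_cmds() {
    local index=0 d
    for d in "$@"; do
        echo "parted /dev/${d} -s -- mklabel gpt mkpart KOLLA_SWIFT_DATA 1% 100%"
        echo "mkfs.xfs -f -L d${index} /dev/${d}1"
        index=$((index + 1))
    done
}
```

Review the output of `swift_disk_cmds sdd sde sdb` first, then apply it with `swift_disk_cmds sdd sde sdb | bash`.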

8 Deploy the Cluster

Install the OpenStack CLI client on the controller node.

    [root@controller ~]# dnf -y install python3-openstackclient

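For network routing on the deployed controller node to work correctly, IP forwarding must be enabled in Linux. Edit /etc/sysctl.conf on both controller and compute01, and configure the br_netfilter module to load automatically at boot.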

    # Controller node
    [root@controller ~]# cat << MXD >> /etc/sysctl.conf
    > net.ipv4.ip_forward=1
    > net.bridge.bridge-nf-call-ip6tables=1
    > net.bridge.bridge-nf-call-iptables=1
    > MXD
    # Load the module now (this does not persist across reboots)
    [root@controller ~]# modprobe br_netfilter
    # Reload the configuration
    [root@controller ~]# sysctl -p /etc/sysctl.conf
    kernel.sysrq = 0
    net.ipv4.ip_forward = 0
    net.ipv4.conf.all.send_redirects = 0
    net.ipv4.conf.default.send_redirects = 0
    net.ipv4.conf.all.accept_source_route = 0
    net.ipv4.conf.default.accept_source_route = 0
    net.ipv4.conf.all.accept_redirects = 0
    net.ipv4.conf.default.accept_redirects = 0
    net.ipv4.conf.all.secure_redirects = 0
    net.ipv4.conf.default.secure_redirects = 0
    net.ipv4.icmp_echo_ignore_broadcasts = 1
    net.ipv4.icmp_ignore_bogus_error_responses = 1
    net.ipv4.conf.all.rp_filter = 1
    net.ipv4.conf.default.rp_filter = 1
    net.ipv4.tcp_syncookies = 1
    kernel.dmesg_restrict = 1
    net.ipv6.conf.all.accept_redirects = 0
    net.ipv6.conf.default.accept_redirects = 0
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    # Create yoga.service so that br_netfilter is loaded automatically at boot
    [root@controller ~]# cat << MXD > /usr/lib/systemd/system/yoga.service
    > [Unit]
    > Description=Load br_netfilter and sysctl settings for OpenStack
    > [Service]
    > Type=oneshot
    > RemainAfterExit=yes
    > ExecStart=/sbin/modprobe br_netfilter
    > ExecStart=/usr/sbin/sysctl -p
    > [Install]
    > WantedBy=multi-user.target
    > MXD
    [root@controller ~]# systemctl enable --now yoga.service
    Created symlink /etc/systemd/system/multi-user.target.wants/yoga.service → /usr/lib/systemd/system/yoga.service.

    # compute01 node
    [root@compute01 ~]# cat << MXD >> /etc/sysctl.conf
    > net.ipv4.ip_forward=1
    > net.bridge.bridge-nf-call-ip6tables=1
    > net.bridge.bridge-nf-call-iptables=1
    > MXD
    [root@compute01 ~]# modprobe br_netfilter
    [root@compute01 ~]# sysctl -p /etc/sysctl.conf
    kernel.sysrq = 0
    net.ipv4.ip_forward = 0
    net.ipv4.conf.all.send_redirects = 0
    net.ipv4.conf.default.send_redirects = 0
    net.ipv4.conf.all.accept_source_route = 0
    net.ipv4.conf.default.accept_source_route = 0
    net.ipv4.conf.all.accept_redirects = 0
    net.ipv4.conf.default.accept_redirects = 0
    net.ipv4.conf.all.secure_redirects = 0
    net.ipv4.conf.default.secure_redirects = 0
    net.ipv4.icmp_echo_ignore_broadcasts = 1
    net.ipv4.icmp_ignore_bogus_error_responses = 1
    net.ipv4.conf.all.rp_filter = 1
    net.ipv4.conf.default.rp_filter = 1
    net.ipv4.tcp_syncookies = 1
    kernel.dmesg_restrict = 1
    net.ipv6.conf.all.accept_redirects = 0
    net.ipv6.conf.default.accept_redirects = 0
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    [root@compute01 ~]# cat << MXD > /usr/lib/systemd/system/yoga.service
    > [Unit]
    > Description=Load br_netfilter and sysctl settings for OpenStack
    > [Service]
    > Type=oneshot
    > RemainAfterExit=yes
    > ExecStart=/sbin/modprobe br_netfilter
    > ExecStart=/usr/sbin/sysctl -p
    > [Install]
    > WantedBy=multi-user.target
    > MXD
    [root@compute01 ~]# systemctl enable --now yoga.service
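The custom yoga.service above works. A common alternative on systemd hosts is a modules-load.d drop-in, which systemd-modules-load reads at boot. systemd-sysctl is ordered after systemd-modules-load, so the bridge keys can then be applied at boot from the sysctl configuration. This assumes /etc/sysctl.conf is read by systemd-sysctl on your distribution; RHEL-family systems do this through /etc/sysctl.d/99-sysctl.conf, and it is worth verifying on openEuler. This is a minimal sketch. `persist_module` is hypothetical, and the root-directory argument exists only so the function can be tried in a scratch directory.

```shell
# persist_module ROOT MODULE: write ROOT/etc/modules-load.d/MODULE.conf so that
# systemd-modules-load loads MODULE at every boot (ROOT is "/" on a real node).
persist_module() {
    local root=${1%/} module=$2
    mkdir -p "${root}/etc/modules-load.d"
    # The drop-in format is simply one module name per line.
    printf '%s\n' "$module" > "${root}/etc/modules-load.d/${module}.conf"
}
```

On each node, as root: `persist_module / br_netfilter`.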

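On the controller node, run a command that installs the base dependencies required by the OpenStack cluster and adjusts some configuration files (for example, installing Docker and editing the hosts file).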

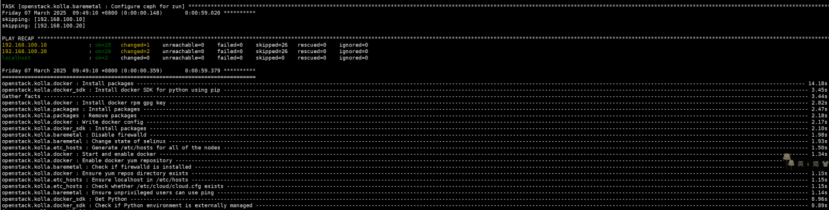

The execution result and elapsed time are shown in the figure above.

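Edit the following file so that Docker packages are downloaded from the Alibaba Cloud mirror instead of the official repository.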

    [root@controller ~]# vi $HOME/.ansible/collections/ansible_collections/openstack/kolla/roles/docker/tasks/repo-RedHat.yml
    ---
    - name: Ensure yum repos directory exists
      file:
        path: /etc/yum.repos.d/
        state: directory
        recurse: true
      become: true

    - name: Enable docker yum repository
      yum_repository:
        name: docker
        description: Docker main Repository
        baseurl: "https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable"
        gpgcheck: "true"
        gpgkey: "https://mirrors.aliyun.com/docker-ce/linux/centos/gpg"
        # NOTE(yoctozepto): required to install containerd.io due to runc being a
        # modular package in CentOS 8 see:
        # https://bugzilla.redhat.com/show_bug.cgi?id=1734081
        module_hotfixes: true
      become: true

    - name: Install docker rpm gpg key
      rpm_key:
        state: present
        key: "{{ docker_yum_gpgkey }}"
      become: true
      when: docker_yum_gpgcheck | bool

Also edit this file and replace the URLs with the Alibaba Cloud ones:

    [root@controller ~]# vi $HOME/.ansible/collections/ansible_collections/openstack/kolla/roles/docker/defaults/main.yml
    docker_yum_url: "https://mirrors.aliyun.com/docker-ce/linux/centos/"
    docker_yum_baseurl: "https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable"

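Then run bootstrap-servers on the controller node to install the base dependencies (Docker, hosts file changes and so on). The execution result and elapsed time are shown in the figure.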

    [root@controller ~]# kolla-ansible bootstrap-servers

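Configure registry mirrors in mainland China to speed up Docker image pulls. Edit /etc/docker/daemon.json on both the controller and compute nodes and add a registry-mirrors section. The controller node's /etc/docker/daemon.json is shown below; make the same change on the compute node.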

    [root@controller ~]# cat /etc/docker/daemon.json
    {
        "bridge": "none",
        "default-ulimits": {
            "nofile": {
                "hard": 1048576,
                "name": "nofile",
                "soft": 1048576
            }
        },
        "ip-forward": false,
        "iptables": false,
        "registry-mirrors": [
            "https://docker.1ms.run",
            "https://docker.1panel.live",
            "https://hub.rat.dev",
            "https://docker.m.daocloud.io",
            "https://do.nark.eu.org",
            "https://dockerpull.com",
            "https://dockerproxy.cn",
            "https://docker.awsl9527.cn"
        ],
        "exec-opts": ["native.cgroupdriver=systemd"]
    }
    [root@controller ~]# systemctl daemon-reload
    [root@controller ~]# systemctl restart docker
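A single missing bracket in daemon.json prevents Docker from starting, so it is worth validating the file before the restart. This is a minimal sketch using Python's standard json.tool module. It assumes python3 is installed on the node, which Ansible already relies on. `check_json` is hypothetical.

```shell
# check_json FILE: exit status 0 only if FILE is well-formed JSON.
# json.tool prints the parse error to stderr when the file is invalid.
check_json() {
    python3 -m json.tool "$1" > /dev/null
}
```

Usage: `check_json /etc/docker/daemon.json && systemctl restart docker`. After the restart, the `Registry Mirrors` section of `docker info` should list the configured mirrors.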

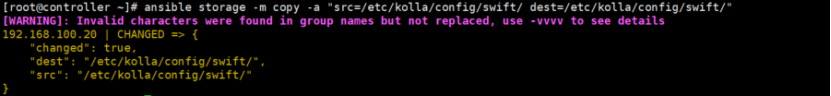

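On the controller node, generate the rings required by the Swift service with the swift-ring.sh script. Set STORAGE_NODES to the IP address(es) of the node(s) that hold the Swift disks.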

    [root@controller ~]# docker pull kolla/swift-base:master-ubuntu-jammy
    [root@controller ~]# cat swift-ring.sh
    #!/bin/bash
    STORAGE_NODES=(192.168.100.20)    # replace with your storage node IP(s)
    KOLLA_SWIFT_BASE_IMAGE="kolla/swift-base:master-ubuntu-jammy"
    CONFIG_DIR="/etc/kolla/config/swift"

    # Create the configuration directory
    mkdir -p ${CONFIG_DIR}

    # Function: create a ring and add devices to it
    build_ring() {
        local ring_type=$1
        local port=$2
        echo "===== Creating the ${ring_type} ring ====="
        docker run --rm \
            -v ${CONFIG_DIR}:/etc/kolla/config/swift \
            ${KOLLA_SWIFT_BASE_IMAGE} \
            swift-ring-builder /etc/kolla/config/swift/${ring_type}.builder create 10 3 1

        # Add the devices to the ring
        for node in ${STORAGE_NODES[@]}; do
            for i in {0..2}; do
                echo "Adding device d${i} to the ${ring_type} ring (node ${node}, port ${port})"
                docker run --rm \
                    -v ${CONFIG_DIR}:/etc/kolla/config/swift \
                    ${KOLLA_SWIFT_BASE_IMAGE} \
                    swift-ring-builder /etc/kolla/config/swift/${ring_type}.builder \
                    add "r1z1-${node}:${port}/d${i}" 1
            done
        done

        # Rebalance the ring
        docker run --rm \
            -v ${CONFIG_DIR}:/etc/kolla/config/swift \
            ${KOLLA_SWIFT_BASE_IMAGE} \
            swift-ring-builder /etc/kolla/config/swift/${ring_type}.builder rebalance
    }

    # Build each ring type
    build_ring object 6000
    build_ring account 6001
    build_ring container 6002

    # Verify the ring files
    echo "===== Generated ring files ====="
    ls -lh ${CONFIG_DIR}/*.ring.gz
    echo "===== Object ring device status ====="
    docker run --rm \
        -v ${CONFIG_DIR}:/etc/kolla/config/swift \
        ${KOLLA_SWIFT_BASE_IMAGE} \
        swift-ring-builder /etc/kolla/config/swift/object.builder
    [root@controller ~]# chmod +x swift-ring.sh
    [root@controller ~]# ./swift-ring.sh
    [root@controller ~]# ansible storage -m copy -a "src=/etc/kolla/config/swift/ dest=/etc/kolla/config/swift/"
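Kolla-ansible picks up the ring files from /etc/kolla/config/swift on the deployment host. A quick existence check before `kolla-ansible prechecks` can catch a failed builder run. This is a minimal sketch. `check_rings` is hypothetical and only checks that each file exists and is non-empty, not that the ring contents are valid.

```shell
# check_rings DIR: confirm that the object, account and container ring files
# exist in DIR and are non-empty; returns 1 if any is missing.
check_rings() {
    local dir=$1 ring status=0
    for ring in object account container; do
        if [ ! -s "${dir}/${ring}.ring.gz" ]; then
            echo "missing or empty: ${dir}/${ring}.ring.gz" >&2
            status=1
        fi
    done
    return "$status"
}
```

Usage: `check_rings /etc/kolla/config/swift`.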

Add openEuler 22.09 to the host OS versions accepted by the Kolla-ansible prechecks:

    [root@controller ~]# vi /usr/share/kolla-ansible/ansible/roles/prechecks/vars/main.yml
    openEuler:
      - "20.03"
      - "22.09"

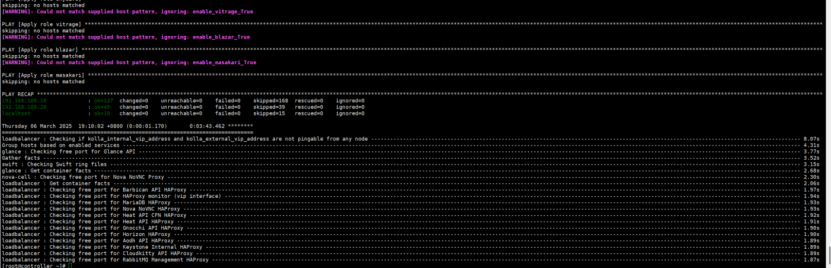

Run the pre-deployment checks on the nodes:

    [root@controller ~]# kolla-ansible prechecks

Deploy the services

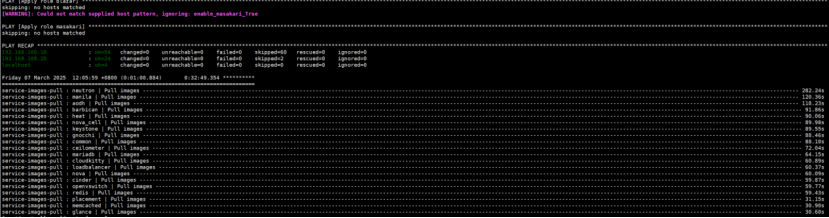

    [root@controller ~]# kolla-ansible pull

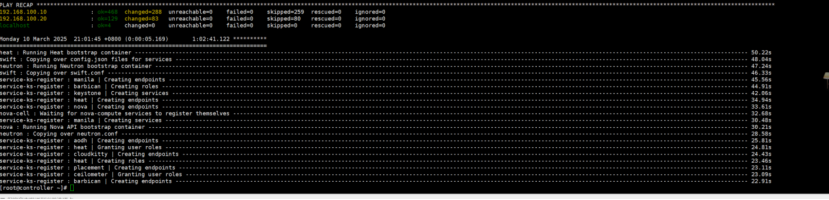

    [root@controller ~]# kolla-ansible deploy

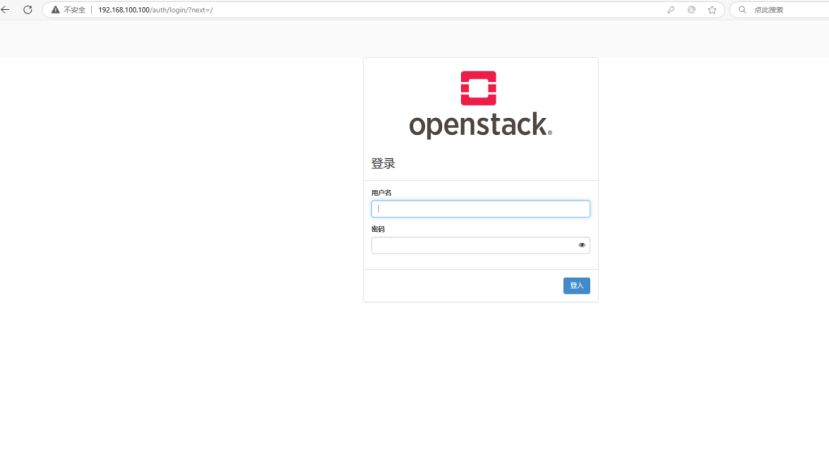

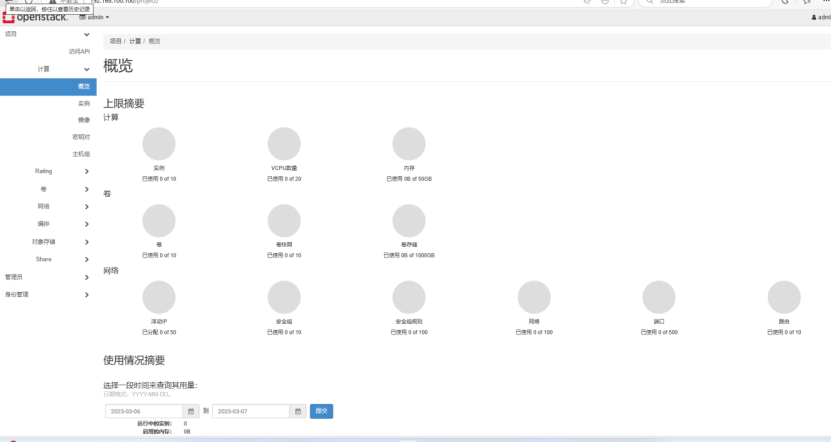

Open Horizon in a browser.

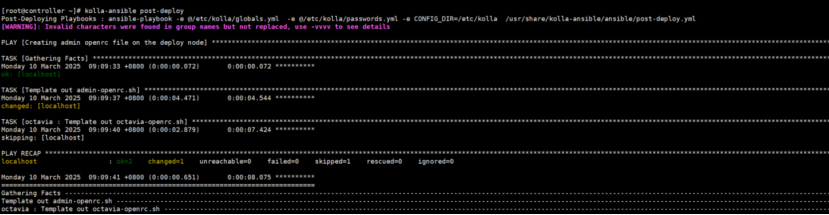

9 OpenStack CLI Client Setup

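After the OpenStack cluster is deployed, the client needs the clouds.yaml and admin-openrc.sh files before it can run commands; these files hold the admin user's credentials. Generate them with the following command; the result is shown in the figure.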

    [root@controller ~]# kolla-ansible post-deploy

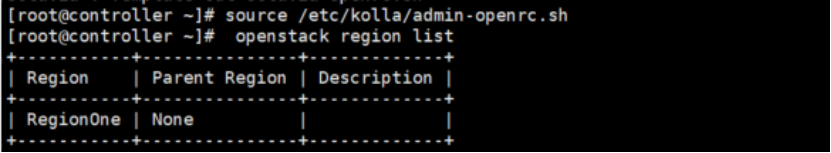

    [root@controller ~]# source /etc/kolla/admin-openrc.sh
    [root@controller ~]# openstack region list

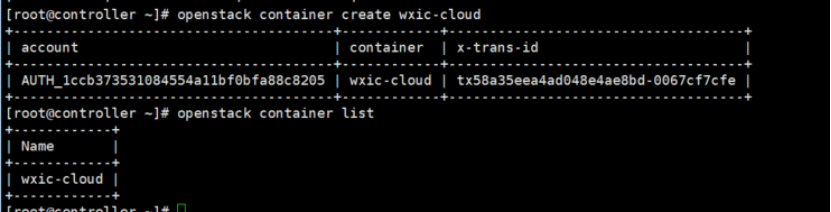

    [root@controller ~]# openstack container create wxic-cloud
    [root@controller ~]# openstack container list

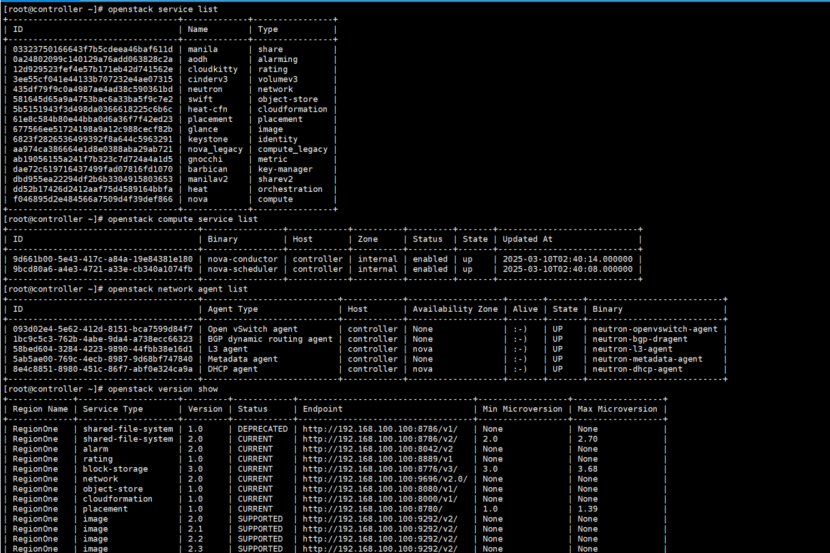

    [root@controller ~]# openstack service list
    [root@controller ~]# openstack compute service list
    [root@controller ~]# openstack network agent list
    [root@controller ~]# openstack version show

10 Install the Skyline Service

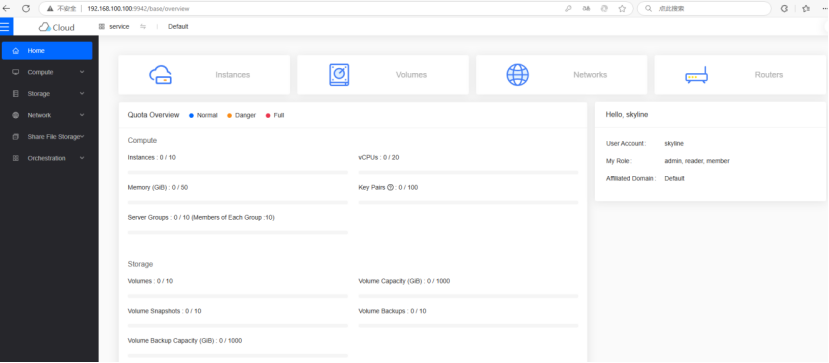

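Next, install the Skyline component; the installation can follow the steps in Task 3.1. This deployment includes more components than the single-node deployment, so the service list on the home page after login shows a richer set of features.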

- Create the Skyline service database

Create the Skyline database in the MariaDB container and grant remote access to it. The commands and their output are shown below.

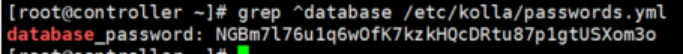

Look up the database login password:

    [root@controller ~]# grep ^database /etc/kolla/passwords.yml

Enter the database container, create the Skyline database and grant remote access:

    [root@controller ~]# docker exec -it mariadb sh
    (mariadb)[mysql@controller /]$ mysql -uroot -p
    Enter password:
    Welcome to the MariaDB monitor.  Commands end with ; or \g.
    Your MariaDB connection id is 4166
    Server version: 10.6.17-MariaDB-1:10.6.17+maria~ubu2004-log mariadb.org binary distribution

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MariaDB [(none)]> CREATE DATABASE skyline DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;
    Query OK, 1 row affected (0.020 sec)

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON skyline.* TO 'skyline'@'localhost' IDENTIFIED BY 'mariadb_yoga';
    Query OK, 0 rows affected (0.008 sec)

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON skyline.* TO 'skyline'@'%' IDENTIFIED BY 'mariadb_yoga';
    Query OK, 0 rows affected (0.008 sec)

    MariaDB [(none)]> flush privileges;
    Query OK, 0 rows affected (0.016 sec)
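The interactive session above can also be scripted, which makes it repeatable. This is a sketch that only prints the SQL:

- `skyline_db_sql` is hypothetical.
- It uses IF NOT EXISTS so a rerun does not fail on an existing database.
- It assumes the password contains no single quotes.

```shell
# skyline_db_sql PASSWORD: print the SQL that creates the skyline database and
# grants the skyline user access from localhost and from any host.
skyline_db_sql() {
    local pw=$1
    cat <<EOF
CREATE DATABASE IF NOT EXISTS skyline DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;
GRANT ALL PRIVILEGES ON skyline.* TO 'skyline'@'localhost' IDENTIFIED BY '${pw}';
GRANT ALL PRIVILEGES ON skyline.* TO 'skyline'@'%' IDENTIFIED BY '${pw}';
FLUSH PRIVILEGES;
EOF
}
```

One way to feed the output to the container is `skyline_db_sql mariadb_yoga | docker exec -i mariadb mysql -uroot -p"$DB_ROOT_PASSWORD"`. Here DB_ROOT_PASSWORD is a hypothetical variable holding the database_password value from passwords.yml.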

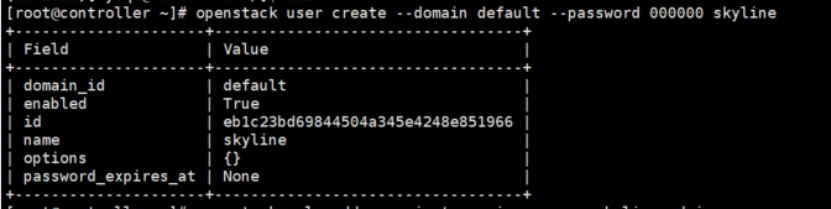

- Create the Skyline user and assign the admin role

Create a new user named skyline for the Skyline service in the default domain, with the password 000000, and assign it the admin role on the service project.

    [root@controller ~]# openstack user create --domain default --password 000000 skyline
    [root@controller ~]# openstack role add --project service --user skyline admin

- Edit the Skyline service configuration file

Create the configuration, log and data directories required by the Skyline service.

    [root@controller ~]# mkdir -p /etc/skyline /var/log/skyline /var/lib/skyline \
    > /var/log/nginx
    # Look up the internal Keystone endpoint that skyline.yaml points to
    [root@controller ~]# openstack endpoint list --interface internal --service keystone -f value -c URL
    http://192.168.100.100:5000

Create the configuration file skyline.yaml:

    [root@controller ~]# cat /etc/skyline/skyline.yaml
    default:
      access_token_expire: 3600
      access_token_renew: 1800
      cors_allow_origins: []
      # MySQL connection address and password
      database_url: mysql://skyline:mariadb_yoga@192.168.100.100:3306/skyline
      debug: false
      log_dir: /var/log/skyline
      log_file: skyline_wxic.log
      prometheus_basic_auth_password: '000000'
      prometheus_basic_auth_user: ''
      prometheus_enable_basic_auth: false
      prometheus_endpoint: http://192.168.100.100:9091
      secret_key: nVvPJIqQLsU4dab4C8dpipFVxJsax1JvzKVJmNxH
      session_name: session
      ssl_enabled: true
    openstack:
      base_domains:
      - heat_user_domain
      # Change the default region to the one set by openstack_region_name
      default_region: RegionWxic
      enforce_new_defaults: true
      extension_mapping:
        floating-ip-port-forwarding: neutron_port_forwarding
        fwaas_v2: neutron_firewall
        qos: neutron_qos
        vpnaas: neutron_vpn
      interface_type: public
      # Keystone authentication URL
      keystone_url: http://192.168.100.100:5000/v3/
      nginx_prefix: /api/openstack
      reclaim_instance_interval: 604800
      service_mapping:
        baremetal: ironic
        compute: nova
        container: zun
        container-infra: magnum
        database: trove
        identity: keystone
        image: glance
        key-manager: barbican
        load-balancer: octavia
        network: neutron
        object-store: swift
        orchestration: heat
        placement: placement
        sharev2: manilav2
        volumev3: cinder
      sso_enabled: false
      sso_protocols:
      - openid
      # Change the region name
      sso_region: RegionWxic
      system_admin_roles:
      - admin
      - system_admin
      system_project: service
      system_project_domain: Default
      system_reader_roles:
      - system_reader
      system_user_domain: Default
      system_user_name: skyline
      # Password of the skyline user
      system_user_password: '000000'
    setting:
      base_settings:
      - flavor_families
      - gpu_models
      - usb_models
      flavor_families:
      - architecture: x86_architecture
        categories:
        - name: general_purpose
          properties: []
        - name: compute_optimized
          properties: []
        - name: memory_optimized
          properties: []
        - name: high_clock_speed
          properties: []
      - architecture: heterogeneous_computing
        categories:
        - name: compute_optimized_type_with_gpu
          properties: []
        - name: visualization_compute_optimized_type_with_gpu
          properties: []
      gpu_models:
      - nvidia_t4
      usb_models:
      - usb_c
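Skyline's default_region has to name a region that exists in Keystone, and globals.yml above sets openstack_region_name to RegionWxic. The sketch below compares the two files:

- `region_matches` is hypothetical.
- Its parsing assumes the simple one-line layouts used in this guide.
- An unset openstack_region_name is treated as Kolla-ansible's default, RegionOne.

```shell
# region_matches GLOBALS SKYLINE_YAML: compare openstack_region_name from
# globals.yml with default_region from skyline.yaml; returns 1 on mismatch.
region_matches() {
    local want got
    want=$(sed -n -E 's/^openstack_region_name:[[:space:]]*"?([^"]*)"?[[:space:]]*$/\1/p' "$1" | tail -n 1)
    # Kolla-ansible falls back to RegionOne when openstack_region_name is unset.
    want=${want:-RegionOne}
    got=$(sed -n -E 's/^[[:space:]]*default_region:[[:space:]]*([^[:space:]]+).*$/\1/p' "$2" | tail -n 1)
    if [ "$want" != "$got" ]; then
        echo "region mismatch: globals.yml=${want} skyline.yaml=${got:-<unset>}" >&2
        return 1
    fi
}
```

Usage: `region_matches /etc/kolla/globals.yml /etc/skyline/skyline.yaml`.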

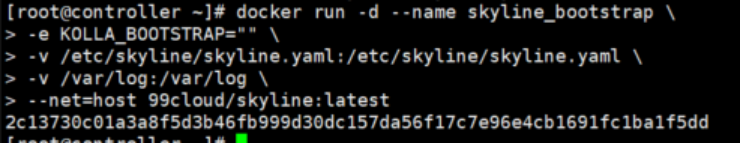

- Run the Skyline service

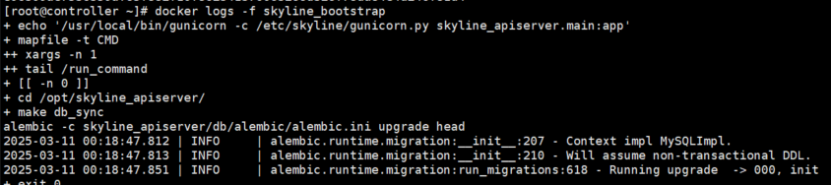

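Run the bootstrap container to create the table schema of the skyline database. Then check its logs to verify that the database connection works and the tables were created.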

    [root@controller ~]# docker run -d --name skyline_bootstrap \
    > -e KOLLA_BOOTSTRAP="" \
    > -v /etc/skyline/skyline.yaml:/etc/skyline/skyline.yaml \
    > -v /var/log:/var/log \
    > --net=host 99cloud/skyline:latest
    [root@controller ~]# docker logs -f skyline_bootstrap

Once the tables have been created, remove the bootstrap container skyline_bootstrap.

    [root@controller ~]# docker rm -f skyline_bootstrap
    skyline_bootstrap

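Run the skyline-apiserver container, named skyline. Set its restart policy to always, mount the configuration file and log directory as volumes, and attach the container to the host network.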

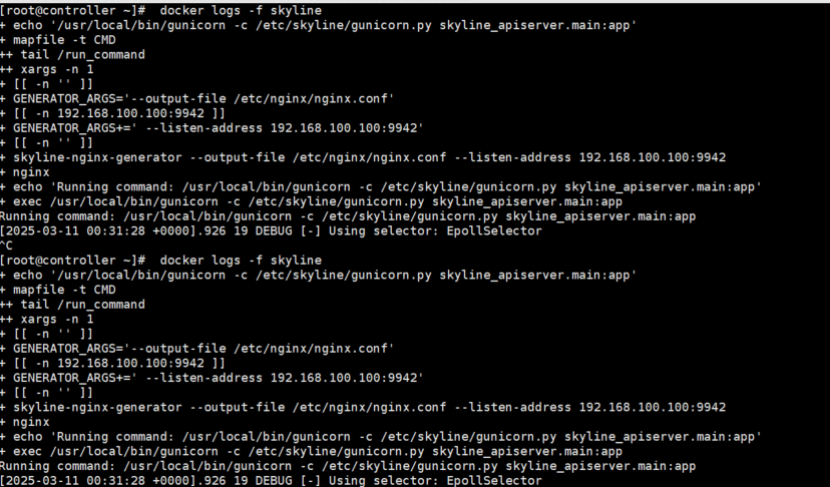

    [root@controller ~]# docker run -d --name skyline --restart=always -v /etc/skyline/skyline.yaml:/etc/skyline/skyline.yaml -v /var/log:/var/log -e LISTEN_ADDRESS=192.168.100.100:9942 --net=host 99cloud/skyline:latest
    5f97e75a1ad315dadfedadeae41a3e602c2a0f36dcedca118ecc897da2e568ba
    [root@controller ~]# docker logs -f skyline

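The Skyline installation is complete. Open http://192.168.100.100:9942 (the LISTEN_ADDRESS set above) in a browser to see the Skyline login page shown in the figure. After logging in with your username and password, you will see the Skyline home page shown in the figure.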

If you cannot log in, check whether the system time is synchronized.