Contents

[1. Accuracy](#1. 准确率(Accuracy))

[2. Loss](#2. 损失(Loss))

[3. Precision](#3. 精确率(Precision))

[4. Recall](#4. 召回率(Recall))

[1. A key point first: why not rely on a single metric?](#1. 先理解一个关键:为什么不能只用一个指标?)

[2. Why each metric is irreplaceable](#2. 每个指标的 “不可替代性”)

[(1) Accuracy: how often the model is right overall](#(1)准确率(Accuracy):看整体 “蒙对” 的概率)

[(2) Loss: how well-founded the model's confidence is](#(2)损失(Loss):看模型 “心里有数没数”)

[(3) Precision: when the model says "yes", how often it is right](#(3)精确率(Precision):看 “说有就真有” 的概率)

[(4) Recall: how rarely a real case is missed](#(4)召回率(Recall):看 “有病就不会漏” 的概率)

[3. Summary: how the four metrics divide the work](#3. 总结:4 个指标的 “分工合作”)

[ROC curve and model performance analysis](#ROC 曲线与模型性能分析)

[F1-score: a combined score for the model](#F1-score 模型综合分)

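Interpreting the Result Metrics

"Model Training Metrics Explained: Pneumonia Diagnosis"

Accuracy, loss, precision, and recall are the four key metrics used here to evaluate the model. This section covers the definition, purpose, and interpretation of each.

I. Why these metrics?

These are among the most widely used evaluation metrics in machine learning, and they matter especially in settings such as medical diagnosis:

- Accuracy: the most intuitive metric; it reflects how often the model is right overall

- Loss: measures the gap between the model's predictions and the true labels, and guides training

- Precision: the share of samples predicted positive that really are positive; it matters most when false alarms (misdiagnoses) must be avoided

- Recall: the share of actual positives that the model identifies; it matters most when missed cases must be avoided

In pneumonia diagnosis we need both few false alarms (precision) and few missed cases (recall), so the four metrics together give a rounded picture of model performance.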

II. Definition and interpretation of each metric

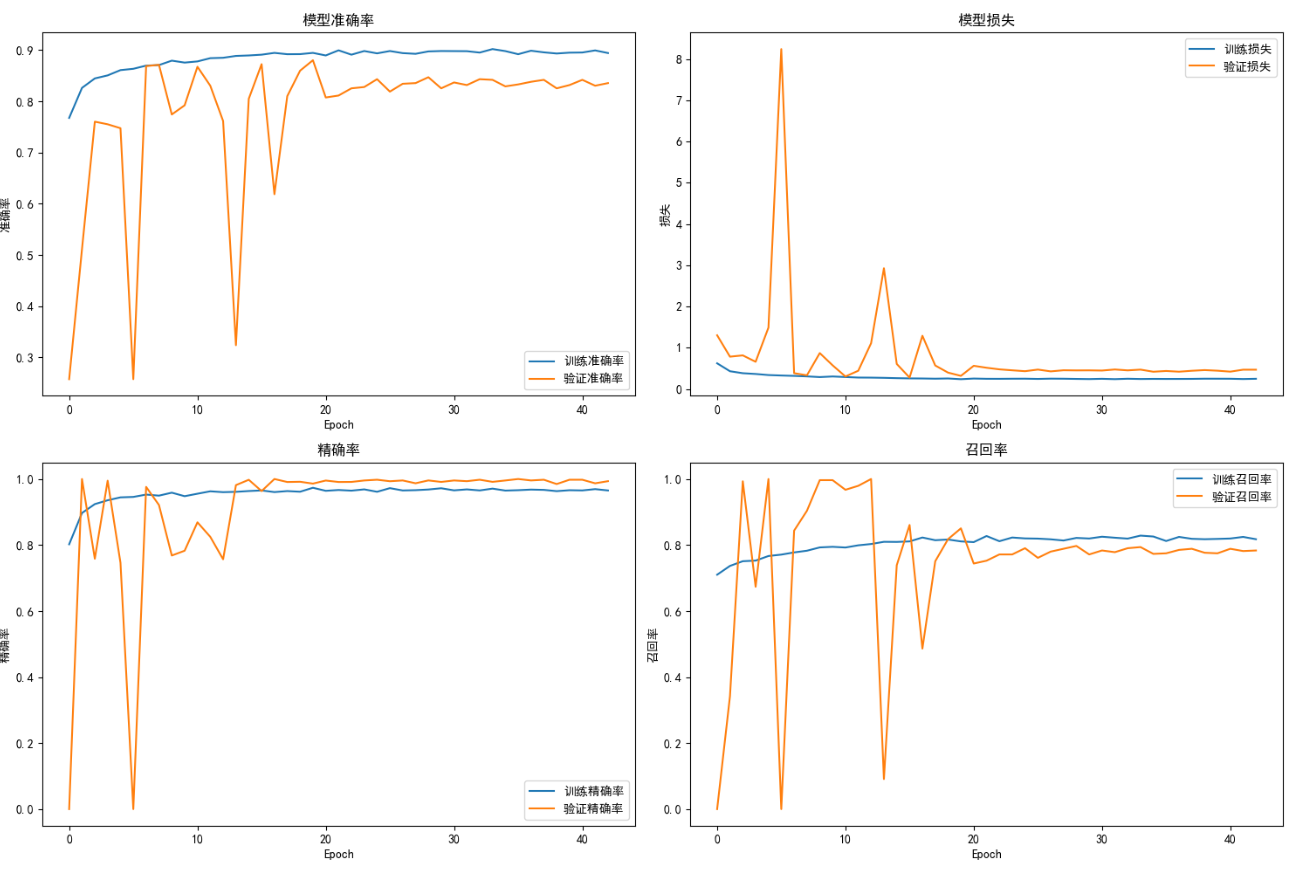

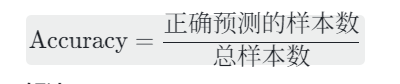

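1. Accuracy

Definition: the number of correctly predicted samples divided by the total number of samples

Formula:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

(TP, TN, FP and FN are the counts of true positives, true negatives, false positives and false negatives.)

Interpretation:

- In the top-left panel, the blue line (training accuracy) levels off at about 0.9 (0.89 to 0.90 in the training log), so the model classifies roughly 90% of the training set correctly

- The orange line (validation accuracy) swings widely in the early epochs and then settles somewhat lower, at about 0.83 to 0.85 in the later epochs of the log, which suggests reasonable generalization to new data

- Once training stabilizes the gap between the two lines is modest, so there is no obvious sign of overfitting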

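2. Loss

Definition: the error between the model's predictions and the true labels; the smaller the value, the closer the predictions are to the truth

(The exact calculation depends on the loss function, for example cross-entropy loss)

In plain terms:

Imagine you are a teacher who gives a student 10 math problems.

- On the first attempt the student gets 7 wrong → large loss (7/10)

- After revising, the student gets only 2 wrong → smaller loss (2/10)

- On the third attempt the student gets everything right → loss of 0

Example:

Suppose the model predicts "Zhang San has an 80% probability of pneumonia", but Zhang San does not actually have pneumonia:

- The loss is the gap between the prediction (80%) and the truth (0%)

- With squared error: (0.8 - 0)² = 0.64 → loss of 0.64

- With cross-entropy: -ln(1 - 0.8) = -ln(0.2) ≈ 1.61 → a larger loss, because the model was confidently wrong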
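The two loss values in this example can be reproduced with a few lines of Python. This is a minimal sketch for a single sample; the function names are illustrative and are not taken from the training script.

```python
import math

def squared_error(y_true: float, p: float) -> float:
    """Squared difference between predicted probability and true label."""
    return (p - y_true) ** 2

def binary_cross_entropy(y_true: float, p: float) -> float:
    """-[y*ln(p) + (1-y)*ln(1-p)] for one sample."""
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))

y_true, p = 0.0, 0.8  # healthy patient, model says 80% pneumonia
mse = squared_error(y_true, p)
bce = binary_cross_entropy(y_true, p)
print(f"squared error: {mse:.2f}")   # 0.64
print(f"cross-entropy: {bce:.2f}")   # 1.61
```

Cross-entropy grows without bound as a wrong prediction approaches full confidence, which is why it penalizes this mistake more heavily than squared error does.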

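Interpretation:

- In the top-right panel, the blue line (training loss) falls steadily and flattens out (to about 0.24 in the log), so the prediction error on the training set keeps shrinking

- The orange line (validation loss) fluctuates widely (the log shows a spike to 8.24 at epoch 6) but trends downward overall, so the error on the validation set is also decreasing

- Both losses end at a fairly low level (validation loss at about 0.42 to 0.47, higher than training loss), which indicates that the model has converged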

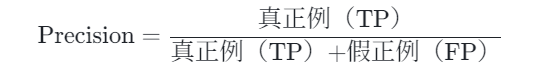

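3. Precision

Definition: of the samples predicted as pneumonia, the share that really are pneumonia

Formula:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Interpretation:

- In the bottom-left panel both lines are close to 1.0, so most samples the model predicts as pneumonia really are pneumonia

- This matters in medical diagnosis: when the model says "pneumonia", it is very likely right, which reduces unnecessary treatment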

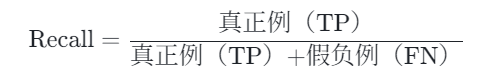

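4. Recall

Definition: of the samples that really are pneumonia, the share the model identifies correctly

Formula:

$$\text{Recall} = \frac{TP}{TP + FN}$$

Interpretation:

- In the bottom-right panel both lines sit at roughly 0.8 to 0.9, so the model identifies most pneumonia cases

- This is critical in medical diagnosis: missed cases (pneumonia classified as normal) should be kept to a minimum

- Despite some fluctuation, recall ends at a fairly high level, so the model recognizes pneumonia reasonably well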

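III. What do these metrics tell us?

- Overall performance is good: all four metrics reach a fairly high level, so the model neither misses many pneumonia cases nor flags many normal samples as pneumonia

- Generalization is acceptable: the gap between training and validation metrics is modest, so the model is not simply memorizing the training data

- There is still room to improve: the validation metrics fluctuate a lot, which may mean the model is unstable on certain samples or that training is noisy

IV. To optimize the model further, look at:

- Why the validation metrics fluctuate (are some samples especially hard to classify?)

- The trade-off between precision and recall (is it worth giving up a little precision for higher recall?)

- Whether the loss function is appropriate (should it be adjusted to pay more attention to certain samples?)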

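How the metrics divide the work in classification tasks

These four metrics (accuracy, loss, precision, recall) are chosen because they complement each other along different dimensions and together reflect the model's real performance. In classification tasks such as the pneumonia diagnosis above, a single metric is often misleading, and the combination guards against that.

1. A key point first: why not rely on a single metric?

Take an extreme example:

Suppose that of 100 patients in a hospital, only 1 has pneumonia and 99 are healthy.

If a lazy model predicts "healthy" no matter what, then:

- Accuracy reaches 99% (all 99 healthy people are classified correctly), yet the model is useless: it misses the only patient.

The lesson: a single metric (accuracy alone, for instance) can hide a model's critical flaw. The four metrics together question the model from different angles, so its real ability has nowhere to hide.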
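The extreme example above is easy to check numerically. The sketch below builds the 1-in-100 dataset and the "always healthy" predictor in plain Python; the variable names are illustrative.

```python
labels = [1] + [0] * 99      # 1 = pneumonia, 0 = healthy
predictions = [0] * 100      # the lazy model always predicts "healthy"

correct = sum(p == y for p, y in zip(predictions, labels))
accuracy = correct / len(labels)

true_positives = sum(p == 1 and y == 1 for p, y in zip(predictions, labels))
recall = true_positives / sum(labels)

print(f"accuracy={accuracy:.2f}, recall={recall:.2f}")
```

Accuracy comes out at 0.99 while recall is 0.00, which is exactly the gap a single metric would hide.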

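2. Why each metric is irreplaceable

(1) Accuracy: how often the model is right overall

- Purpose: a quick read on the model's overall reliability. An accuracy of 90% means that, on average, 90 of every 100 predictions are correct.

- Why it is needed: it is the most intuitive entry-level metric and tells you at a glance roughly where the model stands (on a balanced two-class task, about 50% is no better than guessing, while 90% may be quite good).

- Limitation: when the classes are heavily imbalanced (such as the 1 patient and 99 healthy people above), accuracy is misleading, so other metrics must supplement it.

(2) Loss: how well-founded the model's confidence is

- Purpose: reflects the gap between prediction and truth more finely than accuracy does. For a healthy patient, predicting "90% probability of disease" is a more serious error (larger loss) than predicting "51% probability of disease", although both count as the same wrong prediction.

- Why it is needed:

  - It steers training: a falling loss means the model is improving; a flat loss means it has stopped learning.

  - It reflects how well-founded the model's confidence is: of two models with 80% accuracy, the one with the lower loss (its wrong predictions stay close to the truth) is more reliable than the one with the higher loss (it is confidently wrong).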

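(3) Precision: when the model says "yes", how often it is right

- Purpose: when the model says "pneumonia", how likely is it really pneumonia? (A precision of 95% means that of 100 people predicted to have pneumonia, 95 really do.)

- Why it is needed: to avoid false alarms. In medicine, low precision means many healthy people are treated as patients, which leads to overtreatment (wasted resources and anxious patients).

(4) Recall: how rarely a real case is missed

- Purpose: of all real pneumonia patients, how many does the model catch? (A recall of 90% means that of 100 real patients, 90 are identified correctly.)

- Why it is needed: to avoid missed diagnoses. In medicine a missed diagnosis is usually more dangerous than a false alarm: with low recall, many patients are treated as healthy and their treatment is delayed.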

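3. Summary: how the four metrics divide the work

- Accuracy: gives the model an overall score, a rough sense of how reliable it is;

- Loss: tracks the learning process and the fine-grained quality of predictions, and guides training;

- Precision: watches for "don't accuse the innocent" (fewer false alarms);

- Recall: watches for "don't let the guilty go" (fewer missed cases).

Together the four metrics reflect overall performance and also expose the model's weak spots in critical scenarios (false alarms and missed diagnoses in medicine), which is why they form the classic evaluation set for classification tasks.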

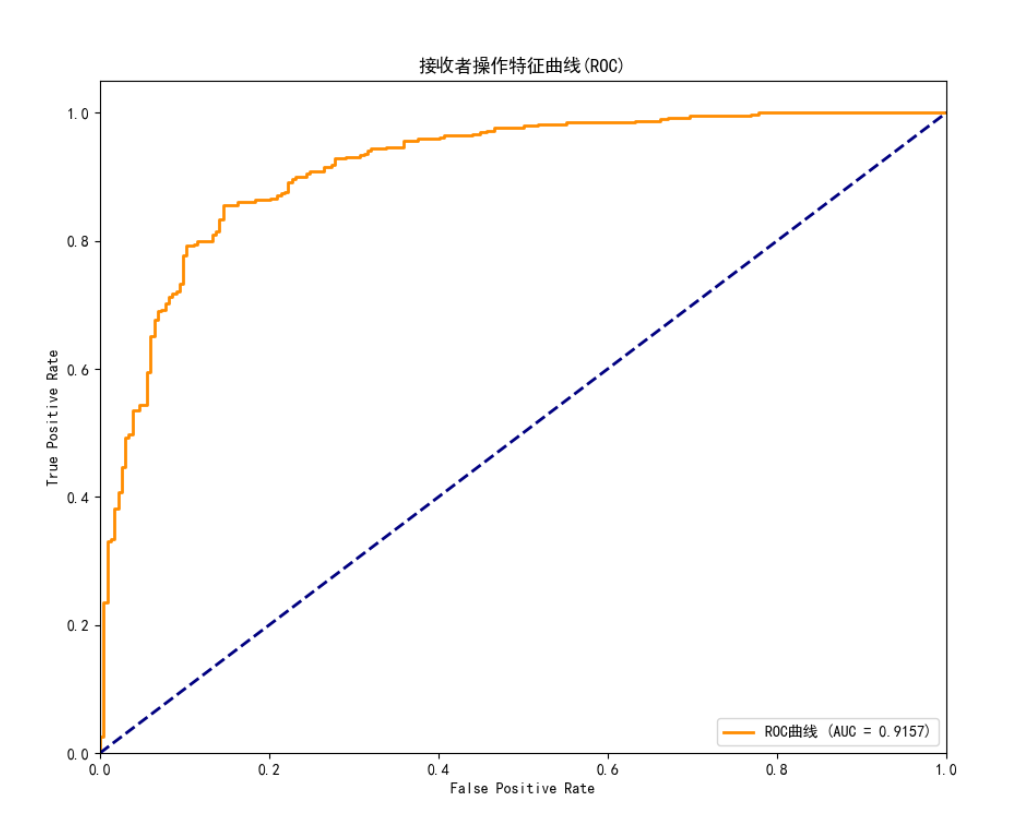

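ROC curve and model performance analysis

This plot is a ROC curve (receiver operating characteristic curve), an important tool for evaluating binary classifiers. In plain terms:

I. Core concepts

- Horizontal axis (False Positive Rate, FPR):

Of the samples that are actually negative ("healthy people"), the share the model wrongly predicts as positive ("pneumonia patients").

Formula:

$$FPR = \frac{FP}{FP + TN} = \frac{\text{healthy people misdiagnosed as pneumonia}}{\text{all healthy people}}$$

(The lower the FPR, the less the model "accuses the innocent")

- Vertical axis (True Positive Rate, TPR):

Of the samples that are actually positive ("pneumonia patients"), the share the model identifies correctly (this is the same quantity as recall).

Formula:

$$TPR = \frac{TP}{TP + FN} = \frac{\text{pneumonia patients correctly identified}}{\text{all pneumonia patients}}$$

(The higher the TPR, the fewer patients the model misses)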

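II. Reading the elements of the plot

- Orange curve (the ROC curve):

The FPR/TPR pairs the model produces at different decision thresholds.

  - The closer the curve hugs the top-left corner, the higher the TPR the model reaches at a given FPR (the better the model)

  - In this plot the curve climbs quickly toward the top, which indicates good performance

- Blue dashed line (the diagonal):

Represents a random-guess model (a coin flip).

  - A ROC curve below the dashed line means the model is worse than random guessing

  - Here the curve is clearly above the line, so the model is far better than random guessing

- AUC (area under the curve):

The plot reports AUC = 0.9157, the area under the ROC curve.

  - The closer the AUC is to 1, the better the model

  - 0.9157 is a strong result and indicates that the model separates the two classes well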
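To make the threshold sweep behind a ROC curve concrete, here is a minimal pure-Python sketch. The six labels and scores are hypothetical toy values, not the model's outputs, and the helper names `roc_points` and `auc` are illustrative; a real project would more likely use a library routine.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) pairs, from the strictest threshold to the loosest."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Hypothetical data: 1 = pneumonia, 0 = healthy; scores are predicted probabilities.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]

points = roc_points(labels, scores)
print(points)
print(round(auc(points), 3))  # 0.889
```

The result, 8/9, equals the fraction of (patient, healthy) pairs in which the patient receives the higher score, which is the usual reading of AUC as ranking quality.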

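III. What does this plot tell us?

- Strong separation:

AUC = 0.9157 > 0.9, so the model distinguishes "pneumonia patients" from "healthy people" well

- Low miss rate:

The curve rises quickly toward 1.0, so at a low FPR (below 0.2, say) the TPR is already high (above 0.8); the model identifies most pneumonia patients and misses few

- False-alarm rate under control:

At the default decision threshold, the confusion matrix later in this document gives TPR ≈ 0.79 at FPR ≈ 0.12: the model catches about 79% of pneumonia patients while flagging about 12% of healthy people. Because a ROC curve never decreases, reaching a TPR of 0.9 means lowering the threshold and accepting an FPR at least that high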

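IV. Relation to the earlier metrics

- Relation to recall:

TPR is recall, so the vertical axis of the ROC curve directly shows the model's recall

- Relation to precision:

The ROC curve does not show precision directly. A high AUC means the model ranks pneumonia cases above normal ones well, which usually makes a good precision/recall trade-off achievable, but precision also depends on the class balance and the chosen threshold and should be checked separately

- Relation to accuracy:

AUC and accuracy are both summary measures of performance, but AUC focuses on how well the model separates the classes across all thresholds, whereas accuracy measures the overall share of correct predictions at one threshold

This ROC curve and AUC value show that:

- The model separates pneumonia patients from healthy people well

- The model can keep the miss rate low while holding the false-alarm rate in check

- Overall performance is strong (AUC > 0.9)

To optimize further, look at:

- Whether a trade-off between "fewer missed cases" and "fewer false alarms" is needed

- Precision at different thresholds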

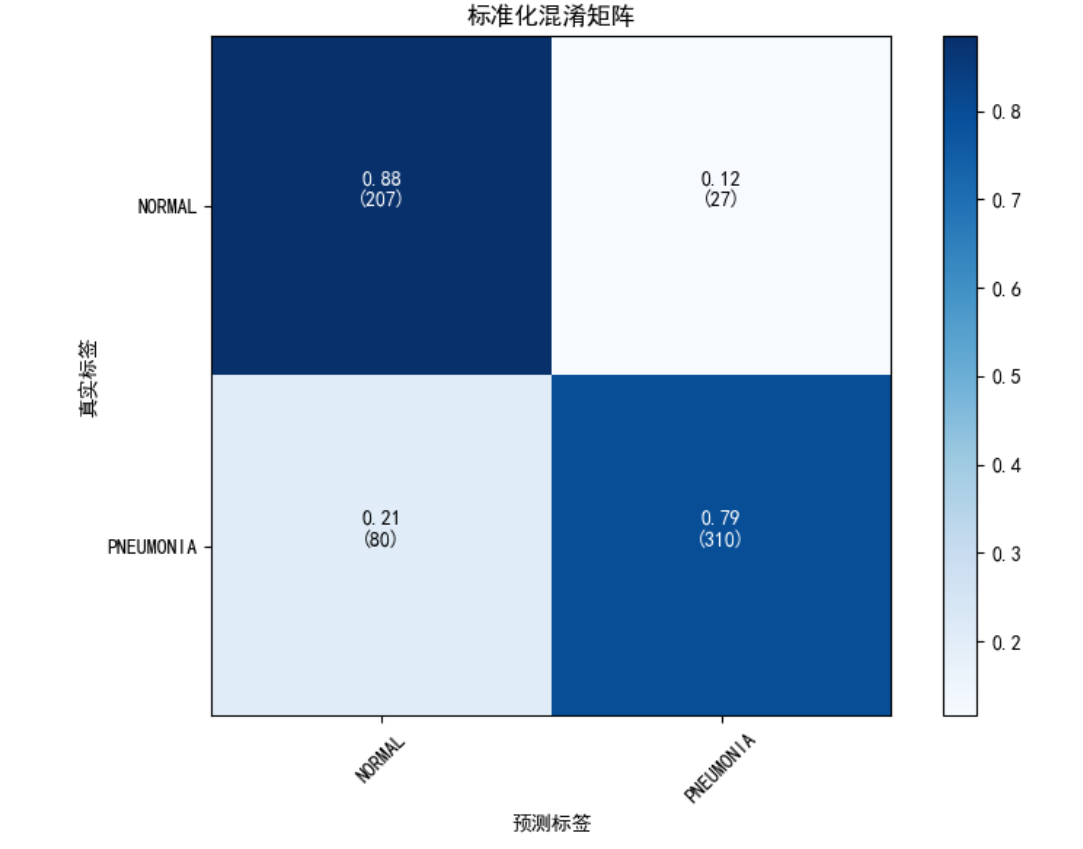

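Reading the normalized confusion matrix

This plot is a normalized confusion matrix, a core tool for evaluating classification models. In plain terms:

I. Core concepts

A confusion matrix is a table that shows how the model's predictions line up with the true labels. It has four key cells:

- True Positive (TP): actually pneumonia, predicted pneumonia

- False Positive (FP): actually normal, wrongly predicted pneumonia

- True Negative (TN): actually normal, predicted normal

- False Negative (FN): actually pneumonia, wrongly predicted normal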

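II. Reading the elements of the plot

- Rows (true labels):

  - NORMAL: samples that are actually normal

  - PNEUMONIA: samples that are actually pneumonia

- Columns (predicted labels):

  - NORMAL: samples the model predicts as normal

  - PNEUMONIA: samples the model predicts as pneumonia

- Color depth:

  - Dark blue: value close to 1

  - Light blue: value close to 0

  - A good model is dark on the diagonal (correct predictions) and light off it (errors); the color bar maps values to colors

- Meaning of the numbers:

  - Each cell shows two numbers: the normalized proportion (such as 0.88) and the raw count (such as 207)

  - Normalized proportion = count in the cell / total number of samples in that row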
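The row normalization described above can be sketched in a few lines. The counts are the ones printed in the console output later in this document; the variable names are illustrative.

```python
# Rows are true labels, columns are predicted labels.
confusion = [
    [207, 27],   # actual NORMAL:    predicted NORMAL, predicted PNEUMONIA
    [80, 310],   # actual PNEUMONIA: predicted NORMAL, predicted PNEUMONIA
]

# Divide every cell by its row total so that each row sums to 1.
normalized = [[count / sum(row) for count in row] for row in confusion]

for row in normalized:
    print([round(value, 2) for value in row])
# [0.88, 0.12]
# [0.21, 0.79]
```

Normalizing by row makes the diagonal read directly as per-class recall, independent of how many samples each class has.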

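III. Reading the actual values

- Samples that are truly NORMAL (first row):

  - Predicted NORMAL: 0.88 (207 samples) → the model correctly identifies 88% of normal samples

  - Predicted PNEUMONIA: 0.12 (27 samples) → the model wrongly predicts 12% of normal samples as pneumonia

- Samples that are truly PNEUMONIA (second row):

  - Predicted NORMAL: 0.21 (80 samples) → the model wrongly predicts 21% of pneumonia samples as normal

  - Predicted PNEUMONIA: 0.79 (310 samples) → the model correctly identifies 79% of pneumonia samples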

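IV. What does this plot tell us?

- Normal samples are recognized well:

  - A recall of 0.88 on the normal class (the specificity) shows that the model handles normal samples well

  - Only 12% of normal samples are misdiagnosed as pneumonia

- Pneumonia samples are recognized acceptably, with room to improve:

  - 79% of pneumonia samples are identified correctly, so the model does recognize pneumonia to a useful degree

  - But 21% of pneumonia samples are still missed (predicted as normal)

- Overall:

  - The model does better on normal samples than on pneumonia samples

  - The miss rate (21%) is higher than the false-alarm rate (12%)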

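V. Relation to the earlier metrics

- Relation to accuracy:

  - Accuracy = (TP+TN)/(TP+TN+FP+FN) = (310+207)/(310+207+27+80) = 517/624 ≈ 0.83

  - Consistent with the accuracy seen earlier (about 0.8 to 0.9) and with the test-set accuracy of 0.8285 in the console output

- Relation to precision:

  - Precision (pneumonia) = TP/(TP+FP) = 310/(310+27) ≈ 0.92

  - So 92% of the samples the model predicts as pneumonia really are pneumonia

- Relation to recall:

  - Recall (pneumonia) = TP/(TP+FN) = 310/(310+80) ≈ 0.79

  - This matches the 0.79 in the second row of the confusion matrix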
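As a quick check of the arithmetic, the three metrics can be computed directly from the four confusion-matrix counts reported in this document. The variable names are illustrative.

```python
# Counts from the confusion matrix, with pneumonia as the positive class.
tn, fp = 207, 27    # actual NORMAL:    predicted NORMAL / PNEUMONIA
fn, tp = 80, 310    # actual PNEUMONIA: predicted NORMAL / PNEUMONIA

accuracy = (tp + tn) / (tp + tn + fp + fn)
precision = tp / (tp + fp)
recall = tp / (tp + fn)

print(f"accuracy={accuracy:.4f}")    # 0.8285
print(f"precision={precision:.4f}")  # 0.9199
print(f"recall={recall:.4f}")        # 0.7949
```

These values agree with the test-set figures printed at the end of the training log.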

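This confusion matrix shows that:

- The model's recall on normal samples is high (88%)

- Its recall on pneumonia samples is acceptable (79%), but the miss rate is on the high side (21%)

- The false-alarm rate is relatively low (12%)

To optimize further, look at:

- How to reduce missed pneumonia cases (false negatives)

- Whether a trade-off between false alarms and missed cases is needed

- Adjusting the model's decision threshold to fit clinical needs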
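The effect of moving the decision threshold can be illustrated with a small sketch. The labels and probabilities below are hypothetical toy values chosen for illustration, not the model's real outputs, and `precision_recall` is an illustrative helper.

```python
def precision_recall(labels, probs, threshold):
    """Precision and recall for the positive class at a given threshold."""
    preds = [1 if p >= threshold else 0 for p in probs]
    tp = sum(1 for y, q in zip(labels, preds) if y == 1 and q == 1)
    fp = sum(1 for y, q in zip(labels, preds) if y == 0 and q == 1)
    fn = sum(1 for y, q in zip(labels, preds) if y == 1 and q == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical labels (1 = pneumonia) and predicted probabilities.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
probs = [0.95, 0.80, 0.45, 0.35, 0.40, 0.32, 0.20, 0.10]

for threshold in (0.5, 0.3):
    precision, recall = precision_recall(labels, probs, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
# threshold=0.5: precision=1.00, recall=0.50
# threshold=0.3: precision=0.67, recall=1.00
```

Lowering the threshold catches every patient in this toy set at the cost of two false alarms, which is the trade-off a clinical setting has to weigh.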

Console output:

D:\ProgramData\anaconda3\envs\tf_env\python.exe D:\workspace_py\deeplean\medical_image_classification_fixed2.py

Found 4448 images belonging to 2 classes.

Found 784 images belonging to 2 classes.

Found 624 images belonging to 2 classes.

原始样本分布: 正常=1147, 肺炎=3301

过采样后分布: 正常=3301, 肺炎=3301

类别权重: 正常=1.94, 肺炎=0.67

使用本地权重文件: models/resnet50_weights_tf_dim_ordering_tf_kernels_notop.h5

2025-07-25 14:47:12.955043: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.

To enable the following instructions: SSE SSE2 SSE3 SSE4.1 SSE4.2 AVX AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

resnet50 (Functional) (None, 7, 7, 2048) 23587712

global_average_pooling2d ( (None, 2048) 0

GlobalAveragePooling2D)

dense (Dense) (None, 512) 1049088

batch_normalization (Batch (None, 512) 2048

Normalization)

dropout (Dropout) (None, 512) 0

dense_1 (Dense) (None, 256) 131328

batch_normalization_1 (Bat (None, 256) 1024

chNormalization)

dropout_1 (Dropout) (None, 256) 0

dense_2 (Dense) (None, 1) 257

=================================================================

Total params: 24771457 (94.50 MB)

Trainable params: 1182209 (4.51 MB)

Non-trainable params: 23589248 (89.99 MB)

_________________________________________________________________

Epoch 1/50

207/207 [==============================] - ETA: 0s - loss: 0.6233 - accuracy: 0.7675 - precision: 0.8022 - recall: 0.7101 - auc: 0.8398

Epoch 1: val_auc improved from -inf to 0.87968, saving model to best_pneumonia_model.h5

207/207 [==============================] - 245s 1s/step - loss: 0.6233 - accuracy: 0.7675 - precision: 0.8022 - recall: 0.7101 - auc: 0.8398 - val_loss: 1.3033 - val_accuracy: 0.2577 - val_precision: 0.0000e+00 - val_recall: 0.0000e+00 - val_auc: 0.8797 - lr: 1.0000e-04

Epoch 2/50

207/207 [==============================] - ETA: 0s - loss: 0.4313 - accuracy: 0.8261 - precision: 0.8971 - recall: 0.7367 - auc: 0.9035

Epoch 2: val_auc improved from 0.87968 to 0.92927, saving model to best_pneumonia_model.h5

207/207 [==============================] - 257s 1s/step - loss: 0.4313 - accuracy: 0.8261 - precision: 0.8971 - recall: 0.7367 - auc: 0.9035 - val_loss: 0.7843 - val_accuracy: 0.5115 - val_precision: 1.0000 - val_recall: 0.3419 - val_auc: 0.9293 - lr: 1.0000e-04

Epoch 3/50

207/207 [==============================] - ETA: 0s - loss: 0.3833 - accuracy: 0.8446 - precision: 0.9240 - recall: 0.7510 - auc: 0.9174

Epoch 3: val_auc did not improve from 0.92927

207/207 [==============================] - 242s 1s/step - loss: 0.3833 - accuracy: 0.8446 - precision: 0.9240 - recall: 0.7510 - auc: 0.9174 - val_loss: 0.8157 - val_accuracy: 0.7602 - val_precision: 0.7585 - val_recall: 0.9931 - val_auc: 0.8778 - lr: 1.0000e-04

Epoch 4/50

207/207 [==============================] - ETA: 0s - loss: 0.3648 - accuracy: 0.8505 - precision: 0.9356 - recall: 0.7528 - auc: 0.9254

Epoch 4: val_auc improved from 0.92927 to 0.94531, saving model to best_pneumonia_model.h5

207/207 [==============================] - 242s 1s/step - loss: 0.3648 - accuracy: 0.8505 - precision: 0.9356 - recall: 0.7528 - auc: 0.9254 - val_loss: 0.6605 - val_accuracy: 0.7551 - val_precision: 0.9949 - val_recall: 0.6735 - val_auc: 0.9453 - lr: 1.0000e-04

Epoch 5/50

207/207 [==============================] - ETA: 0s - loss: 0.3387 - accuracy: 0.8608 - precision: 0.9444 - recall: 0.7667 - auc: 0.9325

Epoch 5: val_auc did not improve from 0.94531

207/207 [==============================] - 239s 1s/step - loss: 0.3387 - accuracy: 0.8608 - precision: 0.9444 - recall: 0.7667 - auc: 0.9325 - val_loss: 1.4892 - val_accuracy: 0.7474 - val_precision: 0.7462 - val_recall: 1.0000 - val_auc: 0.7024 - lr: 1.0000e-04

Epoch 6/50

207/207 [==============================] - ETA: 0s - loss: 0.3275 - accuracy: 0.8634 - precision: 0.9457 - recall: 0.7710 - auc: 0.9403

Epoch 6: val_auc did not improve from 0.94531

207/207 [==============================] - 240s 1s/step - loss: 0.3275 - accuracy: 0.8634 - precision: 0.9457 - recall: 0.7710 - auc: 0.9403 - val_loss: 8.2440 - val_accuracy: 0.2577 - val_precision: 0.0000e+00 - val_recall: 0.0000e+00 - val_auc: 0.5095 - lr: 1.0000e-04

Epoch 7/50

207/207 [==============================] - ETA: 0s - loss: 0.3184 - accuracy: 0.8696 - precision: 0.9529 - recall: 0.7776 - auc: 0.9406

Epoch 7: val_auc improved from 0.94531 to 0.94572, saving model to best_pneumonia_model.h5

207/207 [==============================] - 240s 1s/step - loss: 0.3184 - accuracy: 0.8696 - precision: 0.9529 - recall: 0.7776 - auc: 0.9406 - val_loss: 0.3840 - val_accuracy: 0.8686 - val_precision: 0.9761 - val_recall: 0.8436 - val_auc: 0.9457 - lr: 1.0000e-04

Epoch 8/50

207/207 [==============================] - ETA: 0s - loss: 0.3061 - accuracy: 0.8706 - precision: 0.9497 - recall: 0.7828 - auc: 0.9458

Epoch 8: val_auc did not improve from 0.94572

207/207 [==============================] - 238s 1s/step - loss: 0.3061 - accuracy: 0.8706 - precision: 0.9497 - recall: 0.7828 - auc: 0.9458 - val_loss: 0.3327 - val_accuracy: 0.8712 - val_precision: 0.9212 - val_recall: 0.9038 - val_auc: 0.9368 - lr: 1.0000e-04

Epoch 9/50

207/207 [==============================] - ETA: 0s - loss: 0.2896 - accuracy: 0.8793 - precision: 0.9586 - recall: 0.7928 - auc: 0.9498

Epoch 9: val_auc did not improve from 0.94572

207/207 [==============================] - 236s 1s/step - loss: 0.2896 - accuracy: 0.8793 - precision: 0.9586 - recall: 0.7928 - auc: 0.9498 - val_loss: 0.8718 - val_accuracy: 0.7742 - val_precision: 0.7682 - val_recall: 0.9966 - val_auc: 0.8534 - lr: 1.0000e-04

Epoch 10/50

207/207 [==============================] - ETA: 0s - loss: 0.3042 - accuracy: 0.8755 - precision: 0.9480 - recall: 0.7946 - auc: 0.9467

Epoch 10: val_auc did not improve from 0.94572

207/207 [==============================] - 237s 1s/step - loss: 0.3042 - accuracy: 0.8755 - precision: 0.9480 - recall: 0.7946 - auc: 0.9467 - val_loss: 0.5748 - val_accuracy: 0.7921 - val_precision: 0.7827 - val_recall: 0.9966 - val_auc: 0.9386 - lr: 1.0000e-04

Epoch 11/50

207/207 [==============================] - ETA: 0s - loss: 0.2909 - accuracy: 0.8778 - precision: 0.9554 - recall: 0.7925 - auc: 0.9501

Epoch 11: val_auc improved from 0.94572 to 0.95169, saving model to best_pneumonia_model.h5

207/207 [==============================] - 237s 1s/step - loss: 0.2909 - accuracy: 0.8778 - precision: 0.9554 - recall: 0.7925 - auc: 0.9501 - val_loss: 0.3041 - val_accuracy: 0.8673 - val_precision: 0.8688 - val_recall: 0.9674 - val_auc: 0.9517 - lr: 1.0000e-04

Epoch 12/50

207/207 [==============================] - ETA: 0s - loss: 0.2779 - accuracy: 0.8841 - precision: 0.9628 - recall: 0.7992 - auc: 0.9537

Epoch 12: val_auc did not improve from 0.95169

207/207 [==============================] - 236s 1s/step - loss: 0.2779 - accuracy: 0.8841 - precision: 0.9628 - recall: 0.7992 - auc: 0.9537 - val_loss: 0.4429 - val_accuracy: 0.8304 - val_precision: 0.8249 - val_recall: 0.9794 - val_auc: 0.9420 - lr: 1.0000e-04

Epoch 13/50

207/207 [==============================] - ETA: 0s - loss: 0.2761 - accuracy: 0.8849 - precision: 0.9602 - recall: 0.8031 - auc: 0.9530

Epoch 13: val_auc did not improve from 0.95169

207/207 [==============================] - 236s 1s/step - loss: 0.2761 - accuracy: 0.8849 - precision: 0.9602 - recall: 0.8031 - auc: 0.9530 - val_loss: 1.1105 - val_accuracy: 0.7615 - val_precision: 0.7568 - val_recall: 1.0000 - val_auc: 0.8080 - lr: 1.0000e-04

Epoch 14/50

207/207 [==============================] - ETA: 0s - loss: 0.2710 - accuracy: 0.8885 - precision: 0.9612 - recall: 0.8098 - auc: 0.9555

Epoch 14: ReduceLROnPlateau reducing learning rate to 1.9999999494757503e-05.

Epoch 14: val_auc did not improve from 0.95169

207/207 [==============================] - 236s 1s/step - loss: 0.2710 - accuracy: 0.8885 - precision: 0.9612 - recall: 0.8098 - auc: 0.9555 - val_loss: 2.9286 - val_accuracy: 0.3240 - val_precision: 0.9815 - val_recall: 0.0911 - val_auc: 0.8606 - lr: 1.0000e-04

Epoch 15/50

207/207 [==============================] - ETA: 0s - loss: 0.2634 - accuracy: 0.8894 - precision: 0.9636 - recall: 0.8095 - auc: 0.9567

Epoch 15: val_auc improved from 0.95169 to 0.95699, saving model to best_pneumonia_model.h5

207/207 [==============================] - 237s 1s/step - loss: 0.2634 - accuracy: 0.8894 - precision: 0.9636 - recall: 0.8095 - auc: 0.9567 - val_loss: 0.6077 - val_accuracy: 0.8048 - val_precision: 0.9977 - val_recall: 0.7388 - val_auc: 0.9570 - lr: 2.0000e-05

Epoch 16/50

207/207 [==============================] - ETA: 0s - loss: 0.2576 - accuracy: 0.8909 - precision: 0.9654 - recall: 0.8110 - auc: 0.9600

Epoch 16: val_auc did not improve from 0.95699

207/207 [==============================] - 236s 1s/step - loss: 0.2576 - accuracy: 0.8909 - precision: 0.9654 - recall: 0.8110 - auc: 0.9600 - val_loss: 0.2776 - val_accuracy: 0.8724 - val_precision: 0.9635 - val_recall: 0.8608 - val_auc: 0.9566 - lr: 2.0000e-05

Epoch 17/50

207/207 [==============================] - ETA: 0s - loss: 0.2553 - accuracy: 0.8944 - precision: 0.9604 - recall: 0.8228 - auc: 0.9601

Epoch 17: val_auc did not improve from 0.95699

207/207 [==============================] - 238s 1s/step - loss: 0.2553 - accuracy: 0.8944 - precision: 0.9604 - recall: 0.8228 - auc: 0.9601 - val_loss: 1.2915 - val_accuracy: 0.6186 - val_precision: 1.0000 - val_recall: 0.4863 - val_auc: 0.9440 - lr: 2.0000e-05

Epoch 18/50

207/207 [==============================] - ETA: 0s - loss: 0.2505 - accuracy: 0.8919 - precision: 0.9635 - recall: 0.8146 - auc: 0.9608

Epoch 18: val_auc did not improve from 0.95699

207/207 [==============================] - 236s 1s/step - loss: 0.2505 - accuracy: 0.8919 - precision: 0.9635 - recall: 0.8146 - auc: 0.9608 - val_loss: 0.5704 - val_accuracy: 0.8099 - val_precision: 0.9909 - val_recall: 0.7509 - val_auc: 0.9460 - lr: 2.0000e-05

Epoch 19/50

207/207 [==============================] - ETA: 0s - loss: 0.2557 - accuracy: 0.8920 - precision: 0.9615 - recall: 0.8167 - auc: 0.9593

Epoch 19: ReduceLROnPlateau reducing learning rate to 3.999999898951501e-06.

Epoch 19: val_auc did not improve from 0.95699

207/207 [==============================] - 236s 1s/step - loss: 0.2557 - accuracy: 0.8920 - precision: 0.9615 - recall: 0.8167 - auc: 0.9593 - val_loss: 0.3977 - val_accuracy: 0.8597 - val_precision: 0.9917 - val_recall: 0.8179 - val_auc: 0.9558 - lr: 2.0000e-05

Epoch 20/50

207/207 [==============================] - ETA: 0s - loss: 0.2383 - accuracy: 0.8944 - precision: 0.9735 - recall: 0.8110 - auc: 0.9643

Epoch 20: val_auc improved from 0.95699 to 0.96287, saving model to best_pneumonia_model.h5

207/207 [==============================] - 236s 1s/step - loss: 0.2383 - accuracy: 0.8944 - precision: 0.9735 - recall: 0.8110 - auc: 0.9643 - val_loss: 0.3187 - val_accuracy: 0.8801 - val_precision: 0.9861 - val_recall: 0.8505 - val_auc: 0.9629 - lr: 4.0000e-06

Epoch 21/50

207/207 [==============================] - ETA: 0s - loss: 0.2539 - accuracy: 0.8894 - precision: 0.9642 - recall: 0.8088 - auc: 0.9606

Epoch 21: val_auc did not improve from 0.96287

207/207 [==============================] - 236s 1s/step - loss: 0.2539 - accuracy: 0.8894 - precision: 0.9642 - recall: 0.8088 - auc: 0.9606 - val_loss: 0.5630 - val_accuracy: 0.8074 - val_precision: 0.9954 - val_recall: 0.7440 - val_auc: 0.9522 - lr: 4.0000e-06

Epoch 22/50

207/207 [==============================] - ETA: 0s - loss: 0.2480 - accuracy: 0.8994 - precision: 0.9667 - recall: 0.8273 - auc: 0.9616

Epoch 22: ReduceLROnPlateau reducing learning rate to 7.999999979801942e-07.

Epoch 22: val_auc did not improve from 0.96287

207/207 [==============================] - 236s 1s/step - loss: 0.2480 - accuracy: 0.8994 - precision: 0.9667 - recall: 0.8273 - auc: 0.9616 - val_loss: 0.5155 - val_accuracy: 0.8112 - val_precision: 0.9910 - val_recall: 0.7526 - val_auc: 0.9588 - lr: 4.0000e-06

Epoch 23/50

207/207 [==============================] - ETA: 0s - loss: 0.2474 - accuracy: 0.8909 - precision: 0.9647 - recall: 0.8116 - auc: 0.9634

Epoch 23: val_auc improved from 0.96287 to 0.96317, saving model to best_pneumonia_model.h5

207/207 [==============================] - 238s 1s/step - loss: 0.2474 - accuracy: 0.8909 - precision: 0.9647 - recall: 0.8116 - auc: 0.9634 - val_loss: 0.4768 - val_accuracy: 0.8253 - val_precision: 0.9912 - val_recall: 0.7715 - val_auc: 0.9632 - lr: 8.0000e-07

Epoch 24/50

207/207 [==============================] - ETA: 0s - loss: 0.2496 - accuracy: 0.8981 - precision: 0.9686 - recall: 0.8228 - auc: 0.9612

Epoch 24: val_auc improved from 0.96317 to 0.96399, saving model to best_pneumonia_model.h5

207/207 [==============================] - 236s 1s/step - loss: 0.2496 - accuracy: 0.8981 - precision: 0.9686 - recall: 0.8228 - auc: 0.9612 - val_loss: 0.4538 - val_accuracy: 0.8278 - val_precision: 0.9956 - val_recall: 0.7715 - val_auc: 0.9640 - lr: 8.0000e-07

Epoch 25/50

207/207 [==============================] - ETA: 0s - loss: 0.2504 - accuracy: 0.8935 - precision: 0.9613 - recall: 0.8201 - auc: 0.9618

Epoch 25: ReduceLROnPlateau reducing learning rate to 1.600000018697756e-07.

Epoch 25: val_auc did not improve from 0.96399

207/207 [==============================] - 237s 1s/step - loss: 0.2504 - accuracy: 0.8935 - precision: 0.9613 - recall: 0.8201 - auc: 0.9618 - val_loss: 0.4323 - val_accuracy: 0.8431 - val_precision: 0.9978 - val_recall: 0.7904 - val_auc: 0.9596 - lr: 8.0000e-07

Epoch 26/50

207/207 [==============================] - ETA: 0s - loss: 0.2448 - accuracy: 0.8981 - precision: 0.9723 - recall: 0.8194 - auc: 0.9617

Epoch 26: val_auc did not improve from 0.96399

207/207 [==============================] - 236s 1s/step - loss: 0.2448 - accuracy: 0.8981 - precision: 0.9723 - recall: 0.8194 - auc: 0.9617 - val_loss: 0.4714 - val_accuracy: 0.8189 - val_precision: 0.9933 - val_recall: 0.7612 - val_auc: 0.9618 - lr: 1.6000e-07

Epoch 27/50

207/207 [==============================] - ETA: 0s - loss: 0.2514 - accuracy: 0.8940 - precision: 0.9653 - recall: 0.8173 - auc: 0.9611

Epoch 27: val_auc improved from 0.96399 to 0.96583, saving model to best_pneumonia_model.h5

207/207 [==============================] - 236s 1s/step - loss: 0.2514 - accuracy: 0.8940 - precision: 0.9653 - recall: 0.8173 - auc: 0.9611 - val_loss: 0.4280 - val_accuracy: 0.8342 - val_precision: 0.9956 - val_recall: 0.7801 - val_auc: 0.9658 - lr: 1.6000e-07

Epoch 28/50

207/207 [==============================] - ETA: 0s - loss: 0.2496 - accuracy: 0.8926 - precision: 0.9662 - recall: 0.8137 - auc: 0.9627

Epoch 28: ReduceLROnPlateau reducing learning rate to 1e-07.

Epoch 28: val_auc did not improve from 0.96583

207/207 [==============================] - 235s 1s/step - loss: 0.2496 - accuracy: 0.8926 - precision: 0.9662 - recall: 0.8137 - auc: 0.9627 - val_loss: 0.4548 - val_accuracy: 0.8355 - val_precision: 0.9871 - val_recall: 0.7887 - val_auc: 0.9576 - lr: 1.6000e-07

Epoch 29/50

207/207 [==============================] - ETA: 0s - loss: 0.2448 - accuracy: 0.8973 - precision: 0.9682 - recall: 0.8216 - auc: 0.9635

Epoch 29: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2448 - accuracy: 0.8973 - precision: 0.9682 - recall: 0.8216 - auc: 0.9635 - val_loss: 0.4514 - val_accuracy: 0.8469 - val_precision: 0.9957 - val_recall: 0.7973 - val_auc: 0.9533 - lr: 1.0000e-07

Epoch 30/50

207/207 [==============================] - ETA: 0s - loss: 0.2409 - accuracy: 0.8981 - precision: 0.9720 - recall: 0.8198 - auc: 0.9636

Epoch 30: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2409 - accuracy: 0.8981 - precision: 0.9720 - recall: 0.8198 - auc: 0.9636 - val_loss: 0.4526 - val_accuracy: 0.8253 - val_precision: 0.9912 - val_recall: 0.7715 - val_auc: 0.9612 - lr: 1.0000e-07

Epoch 31/50

207/207 [==============================] - ETA: 0s - loss: 0.2476 - accuracy: 0.8979 - precision: 0.9656 - recall: 0.8252 - auc: 0.9615

Epoch 31: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2476 - accuracy: 0.8979 - precision: 0.9656 - recall: 0.8252 - auc: 0.9615 - val_loss: 0.4479 - val_accuracy: 0.8367 - val_precision: 0.9956 - val_recall: 0.7835 - val_auc: 0.9585 - lr: 1.0000e-07

Epoch 32/50

207/207 [==============================] - ETA: 0s - loss: 0.2393 - accuracy: 0.8978 - precision: 0.9686 - recall: 0.8222 - auc: 0.9645

Epoch 32: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2393 - accuracy: 0.8978 - precision: 0.9686 - recall: 0.8222 - auc: 0.9645 - val_loss: 0.4738 - val_accuracy: 0.8316 - val_precision: 0.9934 - val_recall: 0.7784 - val_auc: 0.9583 - lr: 1.0000e-07

Epoch 33/50

207/207 [==============================] - ETA: 0s - loss: 0.2492 - accuracy: 0.8950 - precision: 0.9654 - recall: 0.8194 - auc: 0.9614

Epoch 33: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2492 - accuracy: 0.8950 - precision: 0.9654 - recall: 0.8194 - auc: 0.9614 - val_loss: 0.4522 - val_accuracy: 0.8431 - val_precision: 0.9978 - val_recall: 0.7904 - val_auc: 0.9589 - lr: 1.0000e-07

Epoch 34/50

207/207 [==============================] - ETA: 0s - loss: 0.2429 - accuracy: 0.9018 - precision: 0.9709 - recall: 0.8285 - auc: 0.9618

Epoch 34: val_auc did not improve from 0.96583

207/207 [==============================] - 239s 1s/step - loss: 0.2429 - accuracy: 0.9018 - precision: 0.9709 - recall: 0.8285 - auc: 0.9618 - val_loss: 0.4706 - val_accuracy: 0.8418 - val_precision: 0.9914 - val_recall: 0.7938 - val_auc: 0.9541 - lr: 1.0000e-07

Epoch 35/50

207/207 [==============================] - ETA: 0s - loss: 0.2455 - accuracy: 0.8979 - precision: 0.9650 - recall: 0.8258 - auc: 0.9627

Epoch 35: val_auc improved from 0.96583 to 0.97260, saving model to best_pneumonia_model.h5

207/207 [==============================] - 237s 1s/step - loss: 0.2455 - accuracy: 0.8979 - precision: 0.9650 - recall: 0.8258 - auc: 0.9627 - val_loss: 0.4193 - val_accuracy: 0.8291 - val_precision: 0.9956 - val_recall: 0.7732 - val_auc: 0.9726 - lr: 1.0000e-07

Epoch 36/50

207/207 [==============================] - ETA: 0s - loss: 0.2442 - accuracy: 0.8919 - precision: 0.9661 - recall: 0.8122 - auc: 0.9631

Epoch 36: val_auc did not improve from 0.97260

207/207 [==============================] - 234s 1s/step - loss: 0.2442 - accuracy: 0.8919 - precision: 0.9661 - recall: 0.8122 - auc: 0.9631 - val_loss: 0.4375 - val_accuracy: 0.8329 - val_precision: 1.0000 - val_recall: 0.7749 - val_auc: 0.9634 - lr: 1.0000e-07

Epoch 37/50

207/207 [==============================] - ETA: 0s - loss: 0.2447 - accuracy: 0.8987 - precision: 0.9680 - recall: 0.8246 - auc: 0.9628

Epoch 37: val_auc did not improve from 0.97260

207/207 [==============================] - 235s 1s/step - loss: 0.2447 - accuracy: 0.8987 - precision: 0.9680 - recall: 0.8246 - auc: 0.9628 - val_loss: 0.4201 - val_accuracy: 0.8380 - val_precision: 0.9956 - val_recall: 0.7852 - val_auc: 0.9673 - lr: 1.0000e-07

Epoch 38/50

207/207 [==============================] - ETA: 0s - loss: 0.2460 - accuracy: 0.8955 - precision: 0.9671 - recall: 0.8188 - auc: 0.9621

Epoch 38: val_auc did not improve from 0.97260

207/207 [==============================] - 236s 1s/step - loss: 0.2460 - accuracy: 0.8955 - precision: 0.9671 - recall: 0.8188 - auc: 0.9621 - val_loss: 0.4425 - val_accuracy: 0.8418 - val_precision: 0.9978 - val_recall: 0.7887 - val_auc: 0.9652 - lr: 1.0000e-07

Epoch 39/50

207/207 [==============================] - ETA: 0s - loss: 0.2503 - accuracy: 0.8932 - precision: 0.9632 - recall: 0.8176 - auc: 0.9622

Epoch 39: val_auc did not improve from 0.97260

207/207 [==============================] - 234s 1s/step - loss: 0.2503 - accuracy: 0.8932 - precision: 0.9632 - recall: 0.8176 - auc: 0.9622 - val_loss: 0.4590 - val_accuracy: 0.8253 - val_precision: 0.9847 - val_recall: 0.7766 - val_auc: 0.9571 - lr: 1.0000e-07

Epoch 40/50

207/207 [==============================] - ETA: 0s - loss: 0.2499 - accuracy: 0.8949 - precision: 0.9660 - recall: 0.8185 - auc: 0.9611

Epoch 40: val_auc did not improve from 0.97260

207/207 [==============================] - 235s 1s/step - loss: 0.2499 - accuracy: 0.8949 - precision: 0.9660 - recall: 0.8185 - auc: 0.9611 - val_loss: 0.4443 - val_accuracy: 0.8316 - val_precision: 0.9978 - val_recall: 0.7749 - val_auc: 0.9639 - lr: 1.0000e-07

Epoch 41/50

207/207 [==============================] - ETA: 0s - loss: 0.2489 - accuracy: 0.8952 - precision: 0.9654 - recall: 0.8198 - auc: 0.9612

Epoch 41: val_auc did not improve from 0.97260

207/207 [==============================] - 240s 1s/step - loss: 0.2489 - accuracy: 0.8952 - precision: 0.9654 - recall: 0.8198 - auc: 0.9612 - val_loss: 0.4213 - val_accuracy: 0.8418 - val_precision: 0.9978 - val_recall: 0.7887 - val_auc: 0.9677 - lr: 1.0000e-07

Epoch 42/50

207/207 [==============================] - ETA: 0s - loss: 0.2420 - accuracy: 0.8993 - precision: 0.9694 - recall: 0.8246 - auc: 0.9637

Epoch 42: val_auc did not improve from 0.97260

207/207 [==============================] - 234s 1s/step - loss: 0.2420 - accuracy: 0.8993 - precision: 0.9694 - recall: 0.8246 - auc: 0.9637 - val_loss: 0.4687 - val_accuracy: 0.8304 - val_precision: 0.9870 - val_recall: 0.7818 - val_auc: 0.9554 - lr: 1.0000e-07

Epoch 43/50

207/207 [==============================] - ETA: 0s - loss: 0.2483 - accuracy: 0.8941 - precision: 0.9653 - recall: 0.8176 - auc: 0.9614

Restoring model weights from the end of the best epoch: 35.

Epoch 43: val_auc did not improve from 0.97260

207/207 [==============================] - 234s 1s/step - loss: 0.2483 - accuracy: 0.8941 - precision: 0.9653 - recall: 0.8176 - auc: 0.9614 - val_loss: 0.4686 - val_accuracy: 0.8355 - val_precision: 0.9935 - val_recall: 0.7835 - val_auc: 0.9582 - lr: 1.0000e-07

Epoch 43: early stopping

20/20 [==============================] - 20s 998ms/step - loss: 0.4608 - accuracy: 0.8285 - precision: 0.9199 - recall: 0.7949 - auc: 0.9151

测试集评估结果:

准确率: 0.8285

精确率: 0.9199

召回率: 0.7949

AUC: 0.9151

F1-score: 0.8528

AUC-ROC: 0.9157

分类报告:

precision recall f1-score support

NORMAL 0.72 0.88 0.79 234

PNEUMONIA 0.92 0.79 0.85 390

accuracy 0.83 624

macro avg 0.82 0.84 0.82 624

weighted avg 0.85 0.83 0.83 624

混淆矩阵:

[[207 27]

[ 80 310]]

Process finished with exit code 0

F1-score: a combined score for the model

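Put simply, the F1-score balances "what the model caught correctly" against "what it did not miss".

An analogy: suppose you build a model to detect spam email.

- There are 100 emails, of which 20 are spam and 80 are legitimate.

- The model flags 15 emails as spam, but 5 of them are actually legitimate (false alarms), and 10 real spam emails go undetected (misses).

In that case:

- "Caught correctly" (precision): of the emails the model calls spam, 10 really are spam (15 - 5), so precision is 10/15 ≈ 67%.

- "Not missed" (recall): of all real spam emails, the model caught 10, so recall is 10/20 = 50%.

The F1-score combines the two into one score as their harmonic mean (formula: 2 × precision × recall ÷ (precision + recall)), which here is 2 × 67% × 50% ÷ (67% + 50%) ≈ 57%.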
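The formula can be checked with a short sketch, first on the spam example and then on the precision and recall reported for the pneumonia model's test set. The function name `f1_score` is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Spam example: 10 true positives, 5 false positives, 10 false negatives.
spam_f1 = f1_score(10 / 15, 10 / 20)
print(round(spam_f1, 3))        # 0.571

# Test-set precision and recall from the console output.
pneumonia_f1 = f1_score(0.9199, 0.7949)
print(round(pneumonia_f1, 4))   # 0.8528
```

Because the harmonic mean is pulled toward the smaller value, a model cannot earn a high F1-score by excelling at only one of the two.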

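Its purpose: when the data are imbalanced (spam is only 20% here), accuracy alone is misleading: a model that labels every email as legitimate still scores 80% accuracy yet is useless. The F1-score reflects quality more faithfully, because the model can neither flag carelessly (low precision) nor miss spam (low recall); the higher the score, the better the balance.

Reading the pneumonia model's evaluation from the console output

I. Data preparation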

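| Console output |
|---|
| Found 4448 images belonging to 2 classes. |
| Found 784 images belonging to 2 classes. |
| Found 624 images belonging to 2 classes. |

- Meaning: the program found 4448 training images, 784 validation images and 624 test images, in two classes ("normal" and "pneumonia")

| Console output |
|---|
| 原始样本分布: 正常=1147, 肺炎=3301 |
| 过采样后分布: 正常=3301, 肺炎=3301 |

- Meaning: the two lines report the original class distribution and the distribution after oversampling (正常 = normal, 肺炎 = pneumonia). In the original training data, pneumonia samples far outnumber normal ones (3301 vs 1147)

- Remedy: oversampling adds normal samples (typically by repeating or augmenting existing images) until both classes have the same count (3301 vs 3301)

| Console output |
|---|
| 类别权重: 正常=1.94, 肺炎=0.67 |

- Meaning: the line reports class weights; the smaller "normal" class gets the higher weight (1.94) so that the model pays more attention to it

- Purpose: to counter class imbalance and keep the model from learning only the pneumonia class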
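The printed weights are consistent with the common "balanced" heuristic, weight = total / (number of classes × class count), applied to the original distribution. The log does not say which formula the script actually uses, so treat the sketch below as an assumption that happens to reproduce the numbers.

```python
# Original training distribution from the console output.
counts = {"NORMAL": 1147, "PNEUMONIA": 3301}

total = sum(counts.values())
n_classes = len(counts)

# Assumed "balanced" heuristic: rarer classes receive proportionally larger weights.
weights = {name: total / (n_classes * n) for name, n in counts.items()}

for name, weight in weights.items():
    print(f"{name}: {weight:.2f}")
# NORMAL: 1.94
# PNEUMONIA: 0.67
```

With these weights, each class contributes the same total weight to the loss (1147 × 1.94 ≈ 3301 × 0.67 ≈ 2224).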

II. Model construction

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| resnet50 (Functional) | (None, 7, 7, 2048) | 23587712 |
| global_average_pooling2d (GlobalAveragePooling2D) | (None, 2048) | 0 |
| dense (Dense) | (None, 512) | 1049088 |
| batch_normalization (BatchNormalization) | (None, 512) | 2048 |
| dropout (Dropout) | (None, 512) | 0 |
| dense_1 (Dense) | (None, 256) | 131328 |
| batch_normalization_1 (BatchNormalization) | (None, 256) | 1024 |
| dropout_1 (Dropout) | (None, 256) | 0 |
| dense_2 (Dense) | (None, 1) | 257 |

Total params: 24771457 (94.50 MB)

Trainable params: 1182209 (4.51 MB)

Non-trainable params: 23589248 (89.99 MB)

- Meaning: this is a ResNet50-based model made up of several layers:

  - ResNet50: a pretrained network that extracts image features

  - Global average pooling: turns the feature maps into a vector

  - Dense layers with batch normalization and dropout: process the features

  - Final layer: a single output unit, most likely a sigmoid probability between 0 and 1 that is thresholded to decide "pneumonia" or "normal"

- Parameters: about 24.8 million in total, of which about 1.18 million are trainable (the ResNet50 backbone is frozen)
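The parameter counts in the summary can be reproduced by hand. The sketch below assumes standard Keras conventions: a Dense layer has inputs × units weights plus one bias per unit, and BatchNormalization has four values per feature, of which the moving mean and moving variance are non-trainable. The backbone count is copied from the summary rather than derived.

```python
def dense_params(inputs: int, units: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units

def batch_norm_params(features: int) -> int:
    """gamma, beta, moving mean, moving variance: four values per feature."""
    return 4 * features

backbone = 23_587_712  # resnet50, taken from the summary (frozen)

head = (
    dense_params(2048, 512) + batch_norm_params(512)
    + dense_params(512, 256) + batch_norm_params(256)
    + dense_params(256, 1)
)

# Moving mean and variance of both batch-norm layers are not trained.
bn_non_trainable = 2 * 512 + 2 * 256

total = backbone + head
trainable = head - bn_non_trainable
non_trainable = backbone + bn_non_trainable

print(total, trainable, non_trainable)
# 24771457 1182209 23589248
```

The match with the summary supports the reading that the whole ResNet50 backbone is frozen and only the classification head is trained.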

III. Training

| Console output |
|---|
| Epoch 1/50 |
| 207/207 [==============================] - ETA: 0s - loss: 0.6233 - accuracy: 0.7675 - precision: 0.8022 - recall: 0.7101 - auc: 0.8398 |
| Epoch 1: val_auc improved from -inf to 0.87968, saving model to best_pneumonia_model.h5 |
| 207/207 [==============================] - 245s 1s/step - loss: 0.6233 - accuracy: 0.7675 - precision: 0.8022 - recall: 0.7101 - auc: 0.8398 - val_loss: 1.3033 - val_accuracy: 0.2577 - val_precision: 0.0000e+00 - val_recall: 0.0000e+00 - val_auc: 0.8797 - lr: 1.0000e-04 |

- Meaning: epoch 1 of training begins (out of at most 50)

- Results:

  - Training set: loss 0.62, accuracy 76.75%, precision 80.22%, recall 71.01%

  - Validation set: loss 1.30, accuracy 25.77%; precision and recall are both 0, so at this epoch the model identified no pneumonia cases in the validation set (a very poor result)

- Event: the validation AUC improved from its initial value of -inf to 0.8797, so the current model was saved to best_pneumonia_model.h5

| Console output |
|---|
| Epoch 14: ReduceLROnPlateau reducing learning rate to 1.9999999494757503e-05. |

- Meaning: at epoch 14 the monitored validation metric had stopped improving, so the learning rate was cut from 0.0001 to 0.00002

- Purpose: smaller steps help the model out of a plateau and let it settle on better parameters
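The sequence of learning rates in the log (1e-4, 2e-5, 4e-6, 8e-7, 1.6e-7, 1e-7) is consistent with multiplying by 0.2 at each reduction and clamping at a floor of 1e-7. Both values are inferred from the log rather than read from the script, so the sketch below is an assumption; it models only the reduction rule, not the plateau detection.

```python
def reduce_lr(lr: float, factor: float = 0.2, min_lr: float = 1e-7) -> float:
    """One reduction step: scale the rate, but never go below min_lr."""
    return max(lr * factor, min_lr)

lr = 1e-4
schedule = [lr]
for _ in range(5):          # the log shows five reductions
    lr = reduce_lr(lr)
    schedule.append(lr)

print([f"{value:.1e}" for value in schedule])
# ['1.0e-04', '2.0e-05', '4.0e-06', '8.0e-07', '1.6e-07', '1.0e-07']
```

The last step would give 3.2e-8 without the floor, which explains why the log reports exactly 1e-07 at epoch 28.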

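| Console output |
|---|
| Epoch 35: val_auc improved from 0.96583 to 0.97260, saving model to best_pneumonia_model.h5 |

- Meaning: at epoch 35 the validation AUC improved from 0.9658 to 0.9726, and the model was saved

| Console output |
|---|
| Epoch 43: early stopping |

- Meaning: by epoch 43 the validation AUC had not improved for 8 epochs in a row (the best epoch was 35), so training stopped early and, as the log shows, the weights from epoch 35 were restored

- Purpose: to prevent overfitting (memorizing the training data without being able to generalize)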
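The early-stopping rule can be sketched in plain Python. The validation scores below are hypothetical toy values and `early_stopping_epoch` is an illustrative helper; the gap between the best epoch (35) and the stop (43) in the log suggests a patience of 8, though the script's actual setting is not shown.

```python
def early_stopping_epoch(val_scores, patience):
    """Return (best_epoch, stop_epoch) for a higher-is-better metric."""
    best_score = float("-inf")
    best_epoch = 0
    for epoch, score in enumerate(val_scores, start=1):
        if score > best_score:
            best_score, best_epoch = score, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch   # no improvement for `patience` epochs
    return best_epoch, len(val_scores)

# Hypothetical validation AUC per epoch.
val_auc = [0.90, 0.93, 0.95, 0.94, 0.94, 0.93, 0.94]

best, stopped = early_stopping_epoch(val_auc, patience=3)
print(best, stopped)   # 3 6
```

Restoring the weights from the best epoch, as the log reports, means the final model is the one that scored highest on validation rather than the last one trained.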

IV. Test results

| Console output |
|---|
| 20/20 [==============================] - 20s 998ms/step - loss: 0.4608 - accuracy: 0.8285 - precision: 0.9199 - recall: 0.7949 - auc: 0.9151 |

- Meaning: the final results on the 624 test images

- Key metrics:

  - Accuracy: 82.85% (about 83% of images are classified correctly)

  - Precision: 91.99% (of the samples the model calls "pneumonia", 92% really are pneumonia)

  - Recall: 79.49% (of all pneumonia samples, 79% are identified correctly)

  - AUC: 0.9151 (area under the ROC curve; a value above 0.9 indicates strong performance)

| Console output |
|---|
| F1-score: 0.8528 |

- Meaning: the combined measure of precision and recall; 0.85 shows a reasonable balance between avoiding false alarms and avoiding missed cases

Classification report (分类报告):

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| NORMAL | 0.72 | 0.88 | 0.79 | 234 |
| PNEUMONIA | 0.92 | 0.79 | 0.85 | 390 |
| accuracy | | | 0.83 | 624 |
| macro avg | 0.82 | 0.84 | 0.82 | 624 |
| weighted avg | 0.85 | 0.83 | 0.83 | 624 |

- Meaning: the detailed per-class results

  - Normal samples: precision 72%, recall 88% (the model labels samples "normal" fairly readily: it finds most normal cases, but 28% of its "normal" predictions are actually pneumonia)

  - Pneumonia samples: precision 92%, recall 79% (the model is more conservative about calling pneumonia: its pneumonia calls are usually right, but it misses 21% of real cases)
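The per-class rows and the averaged rows of the report can be rebuilt from the confusion matrix alone. The sketch below is plain Python with illustrative names; it follows the usual definitions of macro average (unweighted mean over classes) and weighted average (mean weighted by support).

```python
classes = ["NORMAL", "PNEUMONIA"]
confusion = [[207, 27], [80, 310]]   # rows: actual, columns: predicted

report = {}
for i, name in enumerate(classes):
    tp = confusion[i][i]
    predicted = sum(row[i] for row in confusion)   # column total
    actual = sum(confusion[i])                     # row total (support)
    precision = tp / predicted
    recall = tp / actual
    f1 = 2 * precision * recall / (precision + recall)
    report[name] = (precision, recall, f1, actual)

support_total = sum(r[3] for r in report.values())
macro_precision = sum(r[0] for r in report.values()) / len(report)
weighted_precision = sum(r[0] * r[3] for r in report.values()) / support_total

for name, (p, r, f1, n) in report.items():
    print(f"{name}: precision={p:.2f} recall={r:.2f} f1={f1:.2f} support={n}")
print(f"macro precision={macro_precision:.2f}, weighted precision={weighted_precision:.2f}")
```

The printed values round to the same figures as the report (0.72 / 0.88 / 0.79 for NORMAL, 0.92 / 0.79 / 0.85 for PNEUMONIA, macro precision 0.82, weighted precision 0.85).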

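Confusion matrix (混淆矩阵):

| Actual \ Predicted | NORMAL | PNEUMONIA |
|---|---|---|
| NORMAL | 207 | 27 |
| PNEUMONIA | 80 | 310 |

- Meaning: the detailed breakdown of the model's predictions

  - First row (actually normal): 207 identified correctly, 27 misdiagnosed as pneumonia

  - Second row (actually pneumonia): 80 missed (predicted normal), 310 identified correctly

V. Summary

- Model performance:

  - Overall accuracy is 83% and AUC is above 0.9, which indicates strong performance

  - For pneumonia samples, precision is 92% (few false alarms) but recall is 79% (a miss rate of 21%)

  - For normal samples, recall is 88% (12% of healthy people are flagged as pneumonia) but precision is 72% (28% of "normal" predictions are in fact missed pneumonia cases)

- Room for improvement:

  - Try to lower the miss rate on pneumonia samples (for example by adjusting the decision threshold)

  - Try to raise precision on normal samples, which amounts to the same goal: fewer pneumonia cases predicted as normal

  - Consider a more complex model or data augmentation

- Advice for practical use:

  - In a medical setting, recall on pneumonia samples may need to come first (better to over-diagnose than to miss a case)

  - The model's decision criteria can be tuned together with clinicians' experience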