Chunked Upload for Large Files

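It has been a while since I last published an article. I recently had to implement chunked upload for large files at work, so I put together a simple demo to share.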

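First, why use chunked upload for large files?

- Timeouts: requests sent with axios usually have a timeout, and a sufficiently large file will inevitably exceed it (you could of course set a very long timeout).

- Retransmission after a network interruption: if the network drops or the upload fails on a timeout, the whole file has to be uploaded again from the start.

- High memory usage: both the browser reading the file and the server receiving it need a lot of memory when the file is handled in one piece.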

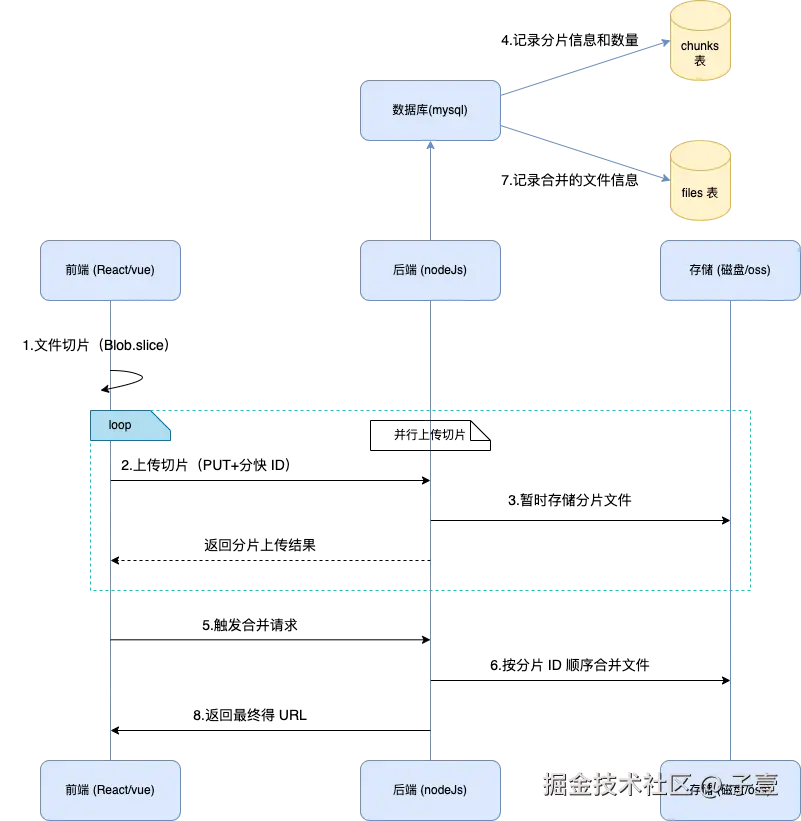

Overview of the approach:

Frontend

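Single-file chunked upload first. We need to define:

- A unique identifier for the file: its MD5 hash

- The array of file chunks, chunks

- The size of each chunk

- The upload status of each chunk

- The total number of chunks (used to decide whether it is time to merge)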
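The bookkeeping listed above can be sketched independently of any UI framework. The following is a minimal sketch, not part of the original demo: `computeChunkRanges`, `initialStatus`, and the `ChunkRange` type are hypothetical names, and the 1 MB chunk size simply mirrors the value used later in the article.

```typescript
// Hypothetical helper (not from the original demo): byte ranges for each chunk.
interface ChunkRange {
  index: number; // chunk sequence number, starting at 0
  start: number; // inclusive byte offset
  end: number;   // exclusive byte offset
}

function computeChunkRanges(fileSize: number, chunkSize: number): ChunkRange[] {
  if (chunkSize <= 0) {
    throw new Error("chunkSize must be positive");
  }
  const ranges: ChunkRange[] = [];
  for (let start = 0; start < fileSize; start += chunkSize) {
    ranges.push({
      index: ranges.length,
      start,
      end: Math.min(start + chunkSize, fileSize), // the last chunk may be shorter
    });
  }
  return ranges;
}

// Per-chunk upload status: 0 = not uploaded, 1 = uploaded.
function initialStatus(totalChunks: number): number[] {
  return new Array<number>(totalChunks).fill(0);
}

const MB = 1024 * 1024;
const ranges = computeChunkRanges(2.5 * MB, 1 * MB);
console.log(ranges.length); // 3
console.log(initialStatus(ranges.length)); // [ 0, 0, 0 ]
```

In the browser, each range would be passed to `file.slice(start, end)` to obtain the chunk's Blob.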

```vue
<!-- template -->
<el-upload
  ref="uploader"
  class="flex-center gap-20"
  action=""
  multiple
  :http-request="httpRequsetSubmit"
  :auto-upload="false"
  :show-file-list="false"
  :disabled="isUploading"
  :on-remove="uploaderOnRemove"
  :accept="acceptFileTypeString"
  :on-change="onChange"
  :limit="limit"
  :on-success="uploadFileOnSuccess"
  :on-error="uploadFileOnError"
  :on-exceed="uploadFileExceed">
  <template #trigger>
    <el-button class="select-files" :disabled="isUploading || enContinue">
      Select files
    </el-button>
  </template>
</el-upload>
```

```typescript
// script
import { ref } from 'vue';
import { ElMessage } from 'element-plus';
import SparkMD5 from 'spark-md5';
// api, httpRequest, mergeFile and uploadResultRef come from elsewhere in the project (not shown here)

const fileSparkMD5 = ref<{ md5Value: string; fileKey: string }[]>([]); // file MD5s (unique identifiers)
const fileChuncks = ref<{ fileChuncks: Blob; fileName: string }[]>([]); // list of file chunks
const chunckSize = ref(1 * 1024 * 1024); // chunk size (1 MB)
const promiseArr: Promise<unknown>[] = []; // promises of the chunk uploads
// Array such as [1,1,1,0,0,1] (the server must return values in ascending index order);
// marks the upload status at each index: 1 = uploaded, 0 = not uploaded
const isUploadChuncks = ref<number[]>([]);
const uploadProgress = ref(0); // upload progress
const uploadQuantity = ref(0); // total number of uploads

// httpRequsetSubmit (async, because it awaits the MD5 and the server check)
async function httpRequsetSubmit({ file, onProgress, onSuccess, onError }: {
  file: File
  onProgress: Function
  onSuccess: Function
  onError: Function
  onException?: Function
}) {
  const md5 = await getFileMD5(file); // compute the file MD5 with spark-md5
  fileSparkMD5.value.push({ md5Value: md5, fileKey: file.name });
  sliceFile(file);
  const isUploaded = await checkFile(md5); // has this file been uploaded before?
  if (isUploaded) {
    // look for chunks that have not been uploaded yet and continue from there
    const hasEmptyChunk = isUploadChuncks.value.findIndex(item => item === 0);
    if (hasEmptyChunk === -1) {
      ElMessage({ message: 'Upload succeeded', type: 'success' });
      return;
    } else {
      // upload the missing chunks; the index here is the chunk's sequence number
      for (let k = 0; k < isUploadChuncks.value.length; k++) {
        if (isUploadChuncks.value[k] !== 1) {
          // single-file case; with multiple files, iterate to find the matching file
          const { md5Value, fileKey } = fileSparkMD5.value[0];
          const data = new FormData();
          data.append('totalNumber', String(fileChuncks.value.length)); // total number of chunks
          data.append('chunkSize', String(chunckSize.value)); // chunk size
          data.append('chunckNumber', String(k)); // chunk sequence number
          data.append('md5', md5Value); // unique file identifier
          data.append('name', fileKey); // file name
          data.append('file', new File([fileChuncks.value[k].fileChuncks], fileKey)); // chunk content
          // collect the promise so that Promise.all below actually waits for it
          promiseArr.push(httpRequest(data, k, fileChuncks.value.length));
        }
      }
    }
  } else {
    // never uploaded: run the full upload logic
    fileChuncks.value.forEach((e, i) => {
      const { md5Value, fileKey } = fileSparkMD5.value.find(item => item.fileKey === e.fileName)!;
      const data = new FormData();
      data.append('totalNumber', String(fileChuncks.value.length));
      data.append('chunkSize', String(chunckSize.value));
      data.append('chunckNumber', String(i));
      data.append('md5', md5Value); // unique file identifier
      data.append('name', fileKey);
      data.append('file', new File([e.fileChuncks], fileKey));
      promiseArr.push(httpRequest(data, i, fileChuncks.value.length)); // httpRequest returns a promise
    });
  }
  const uploadResult = uploadResultRef.value;
  Promise.all(promiseArr).then(() => {
    uploadResult.innerHTML = 'Upload succeeded';
    // Promise.all resolves once every chunk is uploaded; then start merging the file
    mergeFile(fileSparkMD5.value, fileChuncks.value.length);
  }).catch(() => {
    ElMessage({ message: 'The file was not uploaded completely, please continue uploading', type: 'error' });
    uploadResult.innerHTML = 'Upload failed';
  });
}

// Compute the file MD5; some browsers limit the size of file that can be read at once
function getFileMD5(file: File): Promise<string> {
  return new Promise((resolve, reject) => {
    const fileReader = new FileReader();
    fileReader.onload = (e) => {
      const fileMd5 = SparkMD5.ArrayBuffer.hash(e.target!.result as ArrayBuffer);
      resolve(fileMd5);
    };
    fileReader.onerror = () => {
      // reject takes a single argument, so pass the reader's error
      reject(fileReader.error ?? new Error('Failed to read file'));
    };
    fileReader.readAsArrayBuffer(file);
  });
}

// Slice the file into chunks
const sliceFile = (file: File) => {
  const chuncks: { fileChuncks: Blob; fileName: string }[] = []; // chunks of the file
  let start = 0;
  let end: number;
  while (start < file.size) {
    end = Math.min(start + chunckSize.value, file.size);
    // slice extracts the bytes in [start, end)
    chuncks.push({ fileChuncks: file.slice(start, end), fileName: file.name });
    start = end;
  }
  fileChuncks.value = [...chuncks];
};

// Check whether the file has been uploaded before
const checkFile = async (md5: string) => {
  const data = await api.checkChuncks({ md5: md5 });
  if (data.length === 0) {
    return false;
  }
  // file info (hash, total chunk count) is identical on every chunk record
  const { file_hash: fileHash, chunck_total_number: chunckTotal } = data[0];
  if (fileHash === md5) {
    const allChunckStatusList = new Array(Number(chunckTotal)).fill(0);
    const chunckNumberArr = data.map(item => item.chunck_number); // chunks recorded as uploaded in the database
    chunckNumberArr.forEach((item) => {
      allChunckStatusList[item] = 1;
    });
    isUploadChuncks.value = [...allChunckStatusList];
    return true; // tells the caller whether to use instant upload or resume an interrupted upload
  } else {
    return false;
  }
};
```
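The decision between a fresh upload, resuming an interrupted upload, and an instant upload comes down to comparing the chunk numbers the server already has with the expected total. Here is a minimal sketch of that decision as a pure function; `planUpload` and `UploadPlan` are hypothetical names introduced for illustration, and the input is assumed to be the list of `chunck_number` values returned by the check endpoint.

```typescript
// Hypothetical helper: decide what to upload from the server's list of stored chunk numbers.
interface UploadPlan {
  kind: "fresh" | "resume" | "instant";
  missing: number[]; // chunk indices that still have to be uploaded
}

function planUpload(totalChunks: number, uploadedChunkNumbers: number[]): UploadPlan {
  // 0 = not uploaded, 1 = uploaded, same convention as the status array in the article
  const status = new Array<number>(totalChunks).fill(0);
  for (const n of uploadedChunkNumbers) {
    // ignore anything outside the expected range instead of growing the array
    if (Number.isInteger(n) && n >= 0 && n < totalChunks) {
      status[n] = 1;
    }
  }

  const missing: number[] = [];
  status.forEach((s, i) => {
    if (s === 0) missing.push(i);
  });

  if (uploadedChunkNumbers.length === 0) return { kind: "fresh", missing };
  if (missing.length === 0) return { kind: "instant", missing };
  return { kind: "resume", missing };
}

console.log(planUpload(6, [0, 1, 2, 5])); // { kind: 'resume', missing: [ 3, 4 ] }
```

Unlike the status array itself, this does not depend on the server returning the chunk numbers in ascending order.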

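Multi-file chunked upload: in real-world requirements, uploads almost always involve multiple files.

- The overall logic is the same as for a single file

- Each file should have its own state, chunk array, total chunk count, and file name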

```vue
<!-- template -->
<div class="file-upload-view">
  <div class="upload-status-container">
    <el-table :data="mergedItem" style="width: 100%" max-height="700px">
      <el-table-column prop="name" label="File name" width="300" />
      <el-table-column prop="size" label="File size" :formatter="sizeformatter" />
      <el-table-column prop="status" label="Upload status" :formatter="statusFormatter" />
      <el-table-column prop="percentage" label="Progress">
        <template #default="scope">
          <div v-if="fileUploadContexts[scope.row.uid]">
            <el-progress
              :percentage="fileUploadContexts[scope.row.uid].progress"
              :status="fileUploadContexts[scope.row.uid].isCompleted ? 'success' : ''" />
          </div>
          <span v-else class="text-primary text-15">Preparing...</span>
        </template>
      </el-table-column>
      <el-table-column label="Actions" align="center" width="100px">
        <template #default="scope">
          <div v-if="scope.row.status != 'success'" class="flex-center cursor-pointer" @click="onRemove(scope.row)">
            <i class="i-material-symbols:scan-delete text-20"></i>
          </div>
        </template>
      </el-table-column>
    </el-table>
  </div>
  <div class="mt-20">
    <el-upload
      ref="uploader"
      class="flex-center gap-20"
      action=""
      multiple
      :http-request="httpRequsetSubmit"
      :auto-upload="false"
      :show-file-list="false"
      :disabled="isUploading"
      :on-remove="uploaderOnRemove"
      :accept="acceptFileTypeString"
      :on-change="onChange"
      :limit="limit"
      :on-success="uploadFileOnSuccess"
      :on-error="uploadFileOnError"
      :on-exceed="uploadFileExceed">
      <template #trigger>
        <el-button class="select-files" :disabled="isUploading || enContinue">
          Add more
        </el-button>
      </template>
      <el-button v-if="isShowUploadButton" class="bg-primary text-white" :disabled="isUploading" @click="startUpload">
        Start upload
      </el-button>
      <el-button v-else class="bg-primary text-white" :disabled="isUploading" @click="onComplete">
        Done
      </el-button>
    </el-upload>
  </div>
</div>
```

```typescript
// script
// Upload context for each file
interface FileChuncks {
  fileChuncks: Blob // file.slice() returns a Blob
  fileName: string
  index: number
}

interface FileUploadContext {
  file: File;
  uid: string;
  md5: string;
  chunks: FileChuncks[];
  chunkStatus: number[]; // per-chunk status array
  uploadedChunks: number; // number of chunks already uploaded
  totalChunks: number;
  chunkSize: number;
  isCompleted: boolean;
  progress: number;
}

const fileUploadContexts = ref<Record<string, FileUploadContext>>({});

async function httpRequsetSubmit({ file, onProgress, onSuccess, onError }: {
  file: File & { uid: number } // Element Plus attaches a numeric uid to the raw file
  onProgress: Function
  onSuccess: Function
  onError: Function
  onException?: Function
}) {
  // get the file's uid
  const uid = String(file.uid);

  // initialize the file's context
  const context: FileUploadContext = {
    file,
    uid,
    md5: '',
    chunks: [],
    chunkStatus: [],
    uploadedChunks: 0,
    totalChunks: 0,
    chunkSize: 1 * 1024 * 1024, // 1MB
    isCompleted: false,
    progress: 0
  };

  // compute the file MD5
  try {
    context.md5 = await getFileMD5(file);
  } catch (err) {
    onError(err);
    return;
  }

  // slice the file
  sliceFile(file, context);

  // check the file's status on the server
  try {
    const hasUploaded = await checkFile(context);
    if (hasUploaded) {
      // count the chunks that are already uploaded
      context.uploadedChunks = context.chunkStatus.filter(status => status === 1).length;
      // if every chunk is already uploaded, finish right away
      if (context.uploadedChunks === context.totalChunks) {
        fileUploadContexts.value[uid] = context;
        context.progress = 100;
        return await mergeFile(context);
      }
    }
    // register the context
    fileUploadContexts.value[uid] = context;
    // upload the missing chunks
    await uploadMissingChunks(context, onProgress);
    // merge the file once all chunks are uploaded
    return await mergeFile(context);
  } catch (error) {
    onError(error);
  }
}

// =========================== slice the file
function sliceFile(file: File, context: FileUploadContext) {
  const chunks: FileChuncks[] = []; // chunks of the file
  let start = 0;
  let end: number;
  while (start < file.size) {
    end = Math.min(start + context.chunkSize, file.size);
    // slice extracts the bytes in [start, end)
    chunks.push({ fileChuncks: file.slice(start, end), fileName: file.name, index: chunks.length });
    start = end;
  }
  context.chunks = chunks;
  context.totalChunks = chunks.length;
  // every chunk starts as 0 (not uploaded); 32 chunks give [0, 0, 0, ...] with 32 entries
  context.chunkStatus = new Array(chunks.length).fill(0);
}

// ========================== check whether the file has been uploaded before
async function checkFile(context: FileUploadContext): Promise<boolean> {
  const response = await api.checkChuncks({ md5: context.md5 });
  if (response.data.length === 0) {
    return false;
  }
  // file info (hash, total chunk count) is identical on every chunk record
  const { file_hash: fileHash, chunck_total_number: chunckTotal } = response.data[0];
  if (fileHash === context.md5) {
    // status of every chunk, filled with 0 by default (0: not uploaded, 1: uploaded)
    const allChunckStatusList = new Array(context.totalChunks).fill(0);
    // indices of the uploaded chunks (chunck_number is the index of each chunk within the file)
    const chunckNumberArr: number[] = response.data.map((item: { chunck_number: number }) => item.chunck_number);
    chunckNumberArr.forEach((item) => {
      // mark each uploaded index with 1 (the server returns the values in ascending index order)
      if (item < context.totalChunks) {
        allChunckStatusList[item] = 1;
      }
    });
    context.chunkStatus = allChunckStatusList;
    return true; // tells the caller whether to use instant upload or resume an interrupted upload
  }
  return false;
}

// ========================== upload the missing chunks
async function uploadMissingChunks(context: FileUploadContext, onProgress: Function) {
  const uploadPromises: Promise<void>[] = [];
  for (let i = 0; i < context.totalChunks; i++) {
    if (context.chunkStatus[i] === 0) {
      uploadPromises.push(uploadChunk(context, i, onProgress));
    }
  }
  // upload all missing chunks in parallel
  await Promise.all(uploadPromises);
}

// =========================== upload a single chunk (returns a promise)
async function uploadChunk(context: FileUploadContext, index: number, onProgress: Function) {
  const chunk = context.chunks[index];
  const data = {
    totalNumber: context.totalChunks,
    chunkSize: context.chunkSize,
    chunckNumber: index,
    md5: context.md5,
    name: context.file.name,
  };
  try {
    await apiData.request(
      new File([chunk.fileChuncks], context.file.name),
      {
        ...apiData.data,
        ...data,
        onUploadProgress: ({ percent }: { percent: number }) => {
          // this formula treats percent as the current chunk's progress, a fraction between 0 and 1
          const completedChunks = context.uploadedChunks;
          const currentChunkProgress = percent;
          const totalProgress = (completedChunks + currentChunkProgress) / context.totalChunks * 100;
          context.progress = Math.min(100, Math.round(totalProgress));
          // call Element Plus's progress callback
          onProgress({ percent: context.progress });
        }
      }
    );
    // mark the chunk as uploaded
    context.chunkStatus[index] = 1;
    context.uploadedChunks++;
    // recompute the progress from the completed chunks so it is accurate after each chunk
    context.progress = Math.round(context.uploadedChunks / context.totalChunks * 100);
    onProgress({ percent: context.progress });
  } catch (err) {
    throw new Error(`Chunk ${index + 1}/${context.totalChunks} failed to upload: ${(err as Error).message}`);
  }
}

// ====================== merge the file
async function mergeFile(context: FileUploadContext) {
  const params = {
    totalNumber: context.totalChunks,
    md5: context.md5,
    name: context.file.name
  };
  try {
    const response = await api.merge(params);
    context.isCompleted = true;
    return response;
  } catch (err) {
    throw new Error(`File merge failed: ${(err as Error).message}`);
  }
}
```
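`uploadMissingChunks` above starts every missing chunk at once with `Promise.all`, which for a large file can mean hundreds of simultaneous requests. One common alternative is to cap the number of uploads in flight. The following is a minimal sketch under that assumption; `runWithLimit` is a hypothetical helper that is not part of the original demo, and each task is expected to be a function that starts one chunk upload and returns its promise.

```typescript
// Hypothetical helper: run async tasks with at most `limit` of them in flight at once.
// Results are returned in the same order as the tasks.
async function runWithLimit<T>(
  tasks: Array<() => Promise<T>>,
  limit: number,
): Promise<T[]> {
  const results: T[] = new Array(tasks.length);
  let next = 0; // index of the next task that has not been started yet

  // Each worker repeatedly claims the next unstarted task until none are left.
  async function worker(): Promise<void> {
    while (next < tasks.length) {
      const current = next++;
      results[current] = await tasks[current]();
    }
  }

  const workerCount = Math.max(1, Math.min(limit, tasks.length));
  // If any task rejects, this rejects too; tasks that are already running are not cancelled.
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}
```

With this helper, the loop in `uploadMissingChunks` would collect `() => uploadChunk(context, i, onProgress)` functions instead of already-started promises and pass them to `runWithLimit` with a small limit such as 3 to 6; the right value depends on the browser and the server.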

Backend (Node.js)

- Framework: Koa

- Libraries: koa-body, MySQL, koa-router

Table design:

- chunks table (named chunck_list in the code below, where the columns are spelled chunck_*): id, file_hash, file_name, chunk_total_number, chunk_size, chunk_number

- files table: id, file_name, file_hash, file_path, file_size

checkChunks endpoint:

```typescript
import type { Context } from 'koa';
// files is the project's database access module (not shown here)

async function checkChuncks(ctx: Context) {
  try {
    const { md5 } = ctx.request.body;
    // one row per stored chunk of this file, ordered by chunk number
    const queryStr = `
      SELECT
        (SELECT count(*) FROM chunck_list WHERE file_hash = ?) as all_count,
        id as chunck_id,
        file_hash,
        chunck_number,
        chunck_total_number
      FROM chunck_list
      WHERE file_hash = ?
      GROUP BY id
      ORDER BY chunck_number
    `;
    const res = await files.checkChuncks(queryStr, [md5, md5]);
    ctx.body = {
      result: 200,
      msg: 'Fetched successfully',
      data: res ?? []
    };
  } catch (err: any) {
    ctx.body = {
      result: 500,
      msg: 'Fetch failed',
      data: err.message
    };
  }
}
```

upload endpoint:

```typescript
import fs from 'fs';
import path from 'path';
// uploadPath and files are defined elsewhere in the project (not shown here)

// async, because the database insert below is awaited
async function handleFileUpload(ctx: Context) {
  try {
    const { totalNumber, chunckNumber, chunkSize, md5, name } = ctx.request.body;
    // directory for this file's chunks, named after its hash
    const chunckPath = path.join(uploadPath, 'chunks', md5, '/');
    if (!fs.existsSync(chunckPath)) {
      fs.mkdirSync(chunckPath, { recursive: true });
    }

    // take the uploaded file from ctx.request.files
    const fileField = ctx.request.files?.file;
    if (!fileField) {
      throw new Error('No file received');
    }
    const file = Array.isArray(fileField) ? fileField[0] : fileField;

    // move the file straight to its target location
    const targetPath = path.join(chunckPath, `${md5}-${chunckNumber}`);
    fs.renameSync(file.filepath, targetPath);

    // record the chunk in the database
    const sql = `
      INSERT INTO file_split.chunck_list
        (file_hash, file_name, chunck_total_number, chunck_size, chunck_number)
      VALUES (?, ?, ?, ?, ?)
    `;
    const result = await files.insertFileChunks(sql, [md5, name, totalNumber, chunkSize, chunckNumber]);
    console.log(result, 'chunk record inserted');

    ctx.body = {
      result: 200,
      msg: 'Upload succeeded',
      data: null
    };
  } catch (err: any) {
    console.error('File upload failed:', err);
    ctx.body = {
      result: 500,
      msg: 'Upload failed',
      data: err.message
    };
  }
}
```
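The upload endpoint builds a directory name and a file name directly from the `md5` and `chunckNumber` fields of the request, so it may be worth validating them before touching the file system. This is a minimal sketch, not something the original demo does: `isValidMd5` and `parseChunkNumber` are hypothetical helpers, and the regular expression assumes the client sends a 32-character lowercase hexadecimal digest.

```typescript
// Hypothetical validators for the fields used to build chunk paths.
const MD5_HEX = /^[a-f0-9]{32}$/;

// True only for a 32-character lowercase hex string, so values such as "../x" are rejected.
function isValidMd5(value: unknown): value is string {
  return typeof value === "string" && MD5_HEX.test(value);
}

// Multipart form fields typically arrive as strings; accept only plain non-negative
// integers smaller than totalNumber, and return null for everything else.
function parseChunkNumber(value: unknown, totalNumber: number): number | null {
  const text = typeof value === "number" ? String(value) : value;
  if (typeof text !== "string" || !/^\d+$/.test(text)) {
    return null;
  }
  const n = Number(text);
  return n < totalNumber ? n : null;
}

console.log(isValidMd5("d41d8cd98f00b204e9800998ecf8427e")); // true
console.log(isValidMd5("../../etc/passwd")); // false
console.log(parseChunkNumber("3", 5)); // 3
console.log(parseChunkNumber("5", 5)); // null
```

A handler could return a 4xx-style response when either check fails, before calling `path.join` or `fs.renameSync`.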

mergeFile endpoint (it has to check for files that have the same MD5 but a different name; in that case they share the same storage path):

```typescript
async function mergeFile(ctx: Context) {
  const { totalNumber, md5, name } = ctx.request.body;
  const ext = path.extname(name); // file extension
  try {
    // 1. Build the physical path from the MD5
    const filePath = `uploads/${md5}${ext}`;
    const fullPath = path.join(process.cwd(), filePath);

    // 2. Query all database records with the same MD5
    const md5Records = await checkMergeStatusInDB(md5) as Array<{
      file_name: string
      stored_name: string
      file_hash: string
      file_path: string
      file_size: number
    }>;

    // 3. Check whether a record with the same name already exists
    const sameNameRecord = md5Records.find(record => record.file_name === name);
    if (sameNameRecord) {
      ctx.body = {
        result: 200,
        msg: 'File already exists',
        data: {
          fileName: sameNameRecord.file_name,
          filePath: sameNameRecord.file_path,
          fileSize: sameNameRecord.file_size,
          md5: sameNameRecord.file_hash
        }
      };
      return;
    }

    // 4. Check whether the physical file exists
    const physicalFileExists = fs.existsSync(fullPath);
    if (physicalFileExists && md5Records.length > 0) {
      // Case 2: the physical file exists, but under a different name.
      // Use the file size of the first record (all records have the same size)
      const fileSize = md5Records[0].file_size;
      // create a new record pointing at the same path
      await createFileRecord(name, md5, filePath, fileSize);
      ctx.body = {
        result: 200,
        msg: 'File linked successfully',
        data: { fileName: name, filePath: filePath, fileSize: fileSize, md5 }
      };
      return;
    }

    // 5. The file does not exist yet, so merge the chunks
    const chunckPath = path.join(uploadPath, 'chunks', md5, '/');
    // read the names of all chunk files in the directory for this hash
    const chunckList = fs.existsSync(chunckPath) ? fs.readdirSync(chunckPath) : [];
    // check that all chunks are present; totalNumber may arrive as a string
    if (chunckList.length !== Number(totalNumber)) {
      // upload is incomplete: respond and return, the server process must keep running
      ctx.body = {
        result: 500,
        msg: 'Merge failed, missing file slices',
        data: null
      };
      return;
    }

    // create the writable stream for the merged file
    const writeStream = fs.createWriteStream(fullPath);
    for (let index = 0; index < Number(totalNumber); index++) {
      const chunkFilePath = path.join(chunckPath, `${md5}-${index}`);
      const readStream = fs.createReadStream(chunkFilePath);
      // wait for this chunk to finish before starting the next one
      await new Promise<void>((resolve, reject) => {
        readStream.pipe(writeStream, { end: false }); // do not end the writable stream automatically
        readStream.on('end', () => {
          // delete the chunk file
          fs.unlink(chunkFilePath, (err) => {
            if (err) {
              reject(err);
            } else {
              resolve();
            }
          });
        });
        readStream.on('error', reject);
      });
    }

    // close the writable stream and wait for it to finish
    await new Promise<void>((resolve, reject) => {
      writeStream.on('finish', resolve);
      writeStream.on('error', reject);
      writeStream.end();
    });

    // remove the now-empty chunk directory
    await fs.promises.rmdir(chunckPath);

    // 6. Get the file size and record the merged file
    const fileSize = fs.statSync(fullPath).size;
    await createFileRecord(name, md5, filePath, fileSize);
    ctx.body = {
      result: 200,
      msg: 'File merged successfully',
      data: { fileName: name, filePath: filePath, fileSize: fileSize, md5 }
    };
  } catch (error) {
    ctx.body = {
      result: 500,
      msg: 'Merge failed',
      data: null
    };
  }
}

// Look up existing file records for this MD5
async function checkMergeStatusInDB(md5: string): Promise<[]> {
  try {
    const sql = `SELECT * FROM files WHERE file_hash = ?`;
    const { results } = await files.checkMergeStatus(sql, [md5]);
    return results;
  } catch (error) {
    console.error(`Failed to check merge status: ${error}`);
    return [];
  }
}

// Helper: create a file record
async function createFileRecord(fileName: string, fileHash: string, filePath: string, fileSize: number) {
  const sql = `INSERT INTO files (file_name, file_hash, file_path, file_size) VALUES (?, ?, ?, ?)`;
  await files.insertFile(sql, [fileName, fileHash, filePath, fileSize]);
}
```
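The merge endpoint compares only the number of files in the chunk directory with `totalNumber`. A slightly stricter check, sketched below, looks for each expected file name, so a stray file or a duplicate cannot mask a missing chunk. `findMissingChunkFiles` is a hypothetical helper, and it assumes the `${md5}-${index}` naming used by the upload endpoint.

```typescript
// Hypothetical helper: which chunk indices have no file named `${md5}-${index}`?
function findMissingChunkFiles(
  fileNames: string[],
  md5: string,
  totalNumber: number,
): number[] {
  const present = new Set(fileNames);
  const missing: number[] = [];
  for (let index = 0; index < totalNumber; index++) {
    if (!present.has(`${md5}-${index}`)) {
      missing.push(index);
    }
  }
  return missing;
}

// Three files are present, so a count-only check would pass, yet chunk 1 is missing.
const names = ["abc-0", "abc-2", ".DS_Store"];
console.log(findMissingChunkFiles(names, "abc", 3)); // [ 1 ]
```

In the endpoint, the `fileNames` argument would be the result of `fs.readdirSync(chunckPath)`, and a non-empty result could be returned to the client so it knows which chunks to resend.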

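Summary:

There is still room for optimization. For example, the frontend could do the slicing in a Web Worker so that the work runs on a separate thread. I have only just started writing Node.js, so please point out anything I got wrong.

Source code: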

github: github.com/Yuhior/file...