1. Installing Ollama on the server

bash

$ curl -fsSL https://ollama.com/install.sh | sh

>>> Cleaning up old version at /usr/local/lib/ollama

>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

>>> The Ollama API is now available at 127.0.0.1:11434.

>>> Install complete. Run "ollama" from the command line.

2. Running Ollama

bash

$ ollama run deepseek-r1:1.5b

>>> /bye

Multi-line input

For multi-line input, wrap the text in """:

bash

>>> """Hello,

... world!

... """

Listing downloaded models

bash

$ ollama list

NAME                ID              SIZE      MODIFIED

deepseek-r1:1.5b    e0979632db5a    1.1 GB    2 hours ago

Creating a model from a Modelfile with ollama create

bash

$ ollama create mymodel -f ./Modelfile

Deleting a model

bash

$ ollama rm deepseek-r1:1.5b

Copying a model

bash

$ ollama cp deepseek-r1:1.5b my-model

Multimodal models

bash

$ ollama run llava "What is in this image? ~/people.png"

2. Connecting to the server from a client

On the server:

bash

$ curl http://localhost:11434/api/generate -d '{"model":"deepseek-r1:1.5b"}'

{"model":"deepseek-r1:1.5b","created_at":"2025-08-09T11:20:03.676711152Z","response":"","done":true,"done_reason":"load"}

On the client:

bash

$ curl http://49.51.197.197:11434/api/generate -d '{"model":"deepseek-r1:1.5b"}'

curl: (7) Failed to connect to 49.51.197.197 port 11434 after 277 ms: Couldn't connect to server

The request fails with a connection error.

2.1 Fixing the error

- First, check network connectivity

bash

$ ping -c 4 49.51.197.197

PING 49.51.197.197 (49.51.197.197) 56(84) bytes of data.

64 bytes from 49.51.197.197: icmp_seq=1 ttl=44 time=304 ms

64 bytes from 49.51.197.197: icmp_seq=2 ttl=44 time=301 ms

64 bytes from 49.51.197.197: icmp_seq=3 ttl=44 time=289 ms

64 bytes from 49.51.197.197: icmp_seq=4 ttl=44 time=280 ms

--- 49.51.197.197 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3004ms

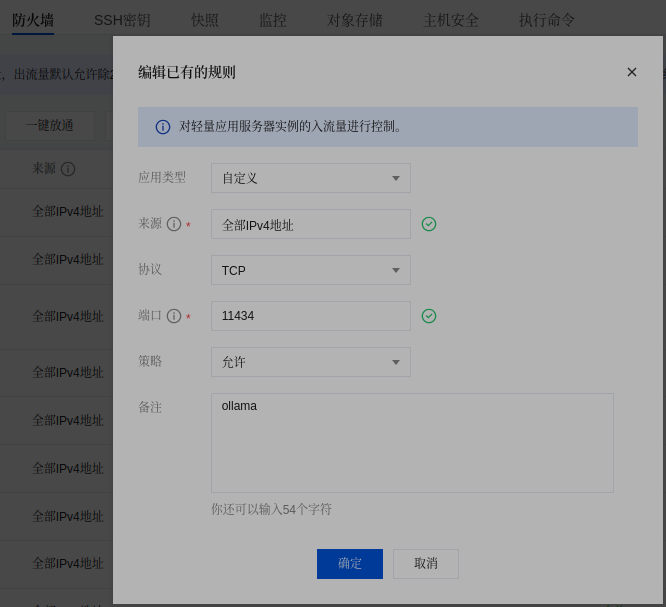

rtt min/avg/max/mdev = 280.205/293.788/304.213/9.659 ms- 防火墙放开 11434 端口
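Ping exercises ICMP only, so the replies above do not show whether TCP port 11434 accepts connections. A minimal sketch of a TCP-level check using Python's standard library; the helper name `port_is_open` and the 3-second default timeout are illustrative assumptions, not part of Ollama:

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Run from the client as `port_is_open("49.51.197.197", 11434)`, this would be expected to return `False` while the connection is refused and `True` once the port is reachable.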

- Check which address the port is listening on

bash

$ netstat -tuln | grep 11434

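tcp 0 0 127.0.0.1:11434 0.0.0.0:* LISTEN

The listener is bound to 127.0.0.1:11434, so the service accepts connections only from the server itself. The configuration has to be changed.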
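The local-address column of the netstat output is what distinguishes a loopback-only listener from one that accepts remote connections. That distinction can be sketched with Python's standard `ipaddress` module; the helper name `listen_scope` and its return labels are hypothetical, chosen only for illustration:

```python
import ipaddress


def listen_scope(local_address: str) -> str:
    """Classify a netstat local address such as '127.0.0.1:11434' or ':::11434'."""
    # The port follows the last colon; everything before it is the host part.
    host, _, _port = local_address.rpartition(":")
    addr = ipaddress.ip_address(host)
    if addr.is_loopback:
        return "loopback only"    # reachable from the same machine only
    if addr.is_unspecified:
        return "all interfaces"   # 0.0.0.0 or ::, reachable from other hosts
    return "single interface"     # bound to one specific address


print(listen_scope("127.0.0.1:11434"))  # loopback only
print(listen_scope("0.0.0.0:11434"))    # all interfaces
```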

- Inspect the current configuration:

bash

$ sudo systemctl cat ollama.service

# /etc/systemd/system/ollama.service

[Unit]

Description=Ollama Service

After=network-online.target

[Service]

ExecStart=/usr/local/bin/ollama serve

User=ollama

Group=ollama

Restart=always

RestartSec=3

Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin"

[Install]

WantedBy=default.target

- Edit the configuration

bash

$ sudo vi /etc/systemd/system/ollama.service

Add the following to the [Service] section:

bash

# New listen address

Environment="OLLAMA_HOST=0.0.0.0"

- Restart the service

bash

$ sudo systemctl daemon-reload

$ sudo systemctl restart ollama

- Verify the configuration

bash

$ sudo systemctl cat ollama.service

# /etc/systemd/system/ollama.service

[Unit]

Description=Ollama Service

After=network-online.target

[Service]

ExecStart=/usr/local/bin/ollama serve

User=ollama

Group=ollama

Restart=always

RestartSec=3

Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin"

Environment="OLLAMA_HOST=0.0.0.0"

[Install]

WantedBy=default.target

$ netstat -tuln | grep 11434

tcp6 0 0 :::11434 :::* LISTEN

- Check from the client

bash

$ curl http://49.51.197.197:11434/api/generate -d '{"model":"deepseek-r1:1.5b"}'

{"model":"deepseek-r1:1.5b","created_at":"2025-08-09T13:54:49.560955998Z","response":"","done":true,"done_reason":"load"}

The request now succeeds.
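The same request can also be issued from Python with only the standard library. This is a minimal sketch, assuming the server replies with one JSON object per line as in the output above; the function names `build_load_request` and `load_model` and the 30-second timeout are illustrative choices, not part of any Ollama client library:

```python
import json
import urllib.request


def build_load_request(base_url: str, model: str) -> urllib.request.Request:
    """Build the POST request equivalent to the curl command above."""
    body = json.dumps({"model": model}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def load_model(base_url: str, model: str, timeout: float = 30.0) -> dict:
    """Send the request and return the last JSON object of the reply."""
    request = build_load_request(base_url, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        raw = resp.read().decode("utf-8")
    # Keep only non-empty lines; the final one carries the "done" status.
    lines = [line for line in raw.splitlines() if line.strip()]
    return json.loads(lines[-1])
```

Calling `load_model("http://49.51.197.197:11434", "deepseek-r1:1.5b")` from the client would be expected to return a dictionary with the same fields as the curl output.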

- Verify from code

python

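from openai import OpenAI

# Initialize the OpenAI client against Ollama's OpenAI-compatible endpoint
client = OpenAI(
    api_key="ollama",  # placeholder value; the client requires one
    base_url="http://49.51.197.197:11434/v1",
)

response = client.chat.completions.create(
    model="deepseek-r1:1.5b",
    messages=[{"role": "user", "content": "Which is larger, 9.9 or 9.11?"}],
)

print("Final answer:")
print(response.choices[0].message.content)

Output:

text

Final answer:

<think>

</think>

First, we need to compare the two numbers **9.9** and **9.11**.

1. **Align the number of decimal places**

   - Write both numbers with two digits after the decimal point:

     - **9.9** can be written as **9.90**.

     - **9.11** already has two decimal places, so it stays unchanged.

2. **Compare digit by digit**

   - Compare the **units digit:** both are **9**, so they are equal.

   - Compare the **tenths digit:**

     - The tenths digit of 9.90 is **9**, and the tenths digit of 9.11 is also **1**.

     - **9 > 1**, so 9.90 is greater than 9.11.

3. **Conclusion**

   - Because 9.90 is larger than 9.11 in the tenths place, **9.9 is greater than 9.11**.

Summary: \boxed{9.9 \text{ is larger}}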
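The reply carries a `<think>` block ahead of the visible answer, so client code may want to separate the two. A minimal sketch, assuming the reply contains at most one such block; the helper name `split_reasoning` and the shortened sample reply are hypothetical, and the `Decimal` comparison is only an independent check of the arithmetic:

```python
import re
from decimal import Decimal

# Matches one <think>...</think> block, including newlines inside it.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a reply that may contain a <think> block."""
    match = THINK_BLOCK.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # The answer is everything outside the matched block.
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


# Shortened, illustrative reply in the same shape as the output above.
reply = "<think>\n\n</think>\n\n9.9 is larger."
reasoning, answer = split_reasoning(reply)
print(repr(reasoning))  # ''
print(answer)           # 9.9 is larger.

# Exact decimal comparison, avoiding binary floating-point rounding.
print(Decimal("9.9") > Decimal("9.11"))  # True
```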