- Summary of common load-balancing algorithms

Round Robin: requests are handed to the backend servers one after another in a fixed order. It suits backends with similar capacity.
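Below is a minimal bash sketch of round robin, for illustration only. The backend names `web1` to `web3` and the helper `rr_next` are hypothetical. The function stores its choice in the global `PICK` and does not print it. If it were called through `$(...)`, it would run in a subshell and the counter update would be lost.

```bash
#!/usr/bin/env bash
# Round robin sketch; backend names are hypothetical.
SERVERS=(web1 web2 web3)
RR_INDEX=0   # shared counter: number of requests assigned so far

# Sets PICK to the next server in order and advances the counter.
# Call it directly, not via $(...), so the counter update persists.
rr_next() {
  PICK=${SERVERS[RR_INDEX % ${#SERVERS[@]}]}
  RR_INDEX=$((RR_INDEX + 1))
}

for _ in 1 2 3 4; do
  rr_next
  echo "$PICK"    # prints web1, web2, web3, web1 on successive lines
done
```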

Weighted Round Robin: builds on round robin but hands out requests in proportion to each server's weight.
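One common way to implement weighted round robin is the "smooth" variant used by nginx's upstream module. The bash sketch below shows it, using hypothetical servers `web1` to `web3` with weights 5, 1 and 1. On every pick, each server's running score grows by its weight and the highest score wins. The winner's score is then reduced by the total weight. This spreads the heavy server's turns out, so it does not get five requests in a row.

```bash
#!/usr/bin/env bash
# Smooth weighted round robin sketch; servers and weights are hypothetical.
SERVERS=(web1 web2 web3)
WEIGHTS=(5 1 1)
CURRENT=(0 0 0)   # running score per server

# Sets PICK to the chosen server and updates CURRENT in place.
wrr_next() {
  local i best=0 total=0
  for i in "${!SERVERS[@]}"; do
    CURRENT[i]=$(( CURRENT[i] + WEIGHTS[i] ))   # every server gains its weight
    total=$(( total + WEIGHTS[i] ))
  done
  for i in "${!SERVERS[@]}"; do
    if (( CURRENT[i] > CURRENT[best] )); then   # first server wins a tie
      best=$i
    fi
  done
  CURRENT[best]=$(( CURRENT[best] - total ))    # the winner pays back the total
  PICK=${SERVERS[best]}
}

picks=()
for _ in 1 2 3 4 5 6 7; do
  wrr_next
  picks+=("$PICK")
done
echo "${picks[*]}"   # → web1 web1 web2 web1 web3 web1 web1
```

After one full cycle (7 picks, the sum of the weights) every score is back at 0, so the same interleaved pattern repeats.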

Random: picks a backend server at random for each request. It is simple, but it ignores current load.
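Here is a bash sketch of random selection using the same hypothetical backend list. It relies on bash's built-in `$RANDOM` (0 to 32767). The small bias introduced by the modulo is ignored in this sketch.

```bash
#!/usr/bin/env bash
# Random selection sketch; backend names are hypothetical.
SERVERS=(web1 web2 web3)

# Sets PICK to a randomly chosen server. $RANDOM is 0..32767, so the
# modulo adds a tiny bias that is acceptable for a demo.
random_pick() {
  PICK=${SERVERS[RANDOM % ${#SERVERS[@]}]}
}

random_pick
echo "$PICK"   # web1, web2 or web3
```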

Weighted Random: like random selection, but the probability of choosing a server is proportional to its weight.
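This sketch implements weighted random selection with cumulative weight intervals. It draws a number in [0, total weight) and walks the list until the number falls inside a server's slice. The server names and the weights 5, 1 and 1 are hypothetical, and `$RANDOM` again serves as a demo-quality random source.

```bash
#!/usr/bin/env bash
# Weighted random sketch; servers and weights are hypothetical.
SERVERS=(web1 web2 web3)
WEIGHTS=(5 1 1)

# Sets PICK so that each server is chosen with probability weight/total.
weighted_random_pick() {
  local i total=0 r
  for i in "${!WEIGHTS[@]}"; do
    total=$(( total + WEIGHTS[i] ))
  done
  (( total > 0 )) || return 1          # no server has a positive weight
  r=$(( RANDOM % total ))              # r is in [0, total)
  for i in "${!WEIGHTS[@]}"; do
    if (( r < WEIGHTS[i] )); then
      PICK=${SERVERS[i]}
      return 0
    fi
    r=$(( r - WEIGHTS[i] ))            # move past this server's slice
  done
}

weighted_random_pick && echo "$PICK"   # web1 roughly 5 times out of 7
```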

Source IP Hash: hashes the request's source IP address and maps the result to a server, so a given client keeps being sent to the same server.
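Below is a bash sketch of source IP hashing. It uses `cksum` (the POSIX CRC utility) as the hash function and a hypothetical server list. Real balancers use their own hashing; nginx's `ip_hash`, for example, hashes the first three octets of an IPv4 address. Because the result is taken modulo the number of servers, adding or removing a server remaps most clients. Consistent hashing is the usual remedy for that.

```bash
#!/usr/bin/env bash
# Source IP hash sketch; cksum stands in for the balancer's real hash.
SERVERS=(web1 web2 web3)

# Prints the server for a client IP. The same IP always maps to the
# same server as long as the SERVERS list does not change.
ip_hash_pick() {
  local h
  h=$(printf '%s' "$1" | cksum | cut -d' ' -f1)   # CRC of the IP string
  echo "${SERVERS[h % ${#SERVERS[@]}]}"
}

ip_hash_pick 172.25.254.1
ip_hash_pick 172.25.254.1   # same output as the line above
```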

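Least Connections: a dynamic load-balancing algorithm. The idea is to send each new request to the backend server that currently has the fewest active connections, so that load evens out. To do this, the balancer has to keep track of each server's connection count as connections open and close (or check it periodically) and adjust its choices dynamically.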
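This bash sketch of least connections assumes that the balancer counts the connections it opens and closes itself. The `ACTIVE` array, the helper names and the starting counts are hypothetical. Every chosen connection must later be released, or the counts drift.

```bash
#!/usr/bin/env bash
# Least connections sketch; names and starting counts are hypothetical.
SERVERS=(web1 web2 web3)
ACTIVE=(0 0 0)   # connections currently open to each server

# Picks the server with the fewest active connections (the first one wins
# a tie), records the new connection and sets PICK / PICK_INDEX.
lc_acquire() {
  local i best=0
  for i in "${!SERVERS[@]}"; do
    if (( ACTIVE[i] < ACTIVE[best] )); then
      best=$i
    fi
  done
  ACTIVE[best]=$(( ACTIVE[best] + 1 ))
  PICK_INDEX=$best
  PICK=${SERVERS[best]}
}

# Must be called when a connection to server index $1 is closed.
lc_release() {
  ACTIVE[$1]=$(( ACTIVE[$1] - 1 ))
}

ACTIVE=(3 1 2)
lc_acquire
echo "$PICK ${ACTIVE[*]}"   # → web2 3 2 2
lc_release "$PICK_INDEX"
```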

- Using keepalived to make nginx and HAProxy highly available

```bash
# Install
sudo apt-get update
sudo apt-get install keepalived
sudo apt-get install nginx

# Edit the configuration file on server 1 (MASTER)
sudo vim /etc/keepalived/keepalived.conf
```

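```
global_defs {
    router_id nginx
}

# nginx health-check script
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
    interval 2
    weight 50
}

vrrp_instance VI_1 {
    # Initial state of the instance (MASTER or BACKUP)
    state MASTER
    # Network interface VRRP runs on
    interface ens33
    # Virtual router ID (1-255); must match on both nodes, visible as "vrid" in `tcpdump vrrp` output
    virtual_router_id 62
    # Priority: the node with the higher priority is elected master
    priority 151
    # VRRP advertisement interval in seconds (decimals allowed)
    advert_int 1
    # Non-preemptive mode, only valid with state BACKUP: lets a lower-priority node remain master
    # (official) NOTE: For this to work, the initial state must not be MASTER.
    # With "state MASTER" above, nopreempt has no effect on this node.
    nopreempt
    # VRRP unicast: source IP
    unicast_src_ip 172.25.254.131
    # VRRP unicast: peer IP
    unicast_peer {
        172.25.254.132
    }
    # Authentication between the nodes; must be identical on master and backup
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # Virtual IP
    virtual_ipaddress {
        172.25.254.130
    }
    # Service check script
    track_script {
        check_nginx
    }
}
```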

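```bash
# On server 2 (BACKUP), edit the configuration file
sudo vim /etc/keepalived/keepalived.conf
```

```
global_defs {
    router_id nginx
}

# nginx health-check script
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
    interval 2
    weight 50
}

vrrp_instance VI_1 {
    # Initial state of the instance (MASTER or BACKUP)
    state BACKUP
    # Network interface VRRP runs on
    interface ens33
    # Virtual router ID (1-255); must match on both nodes, visible as "vrid" in `tcpdump vrrp` output
    virtual_router_id 62
    # Priority: the node with the higher priority is elected master
    priority 150
    # VRRP advertisement interval in seconds (decimals allowed)
    advert_int 1
    # Non-preemptive mode, only valid with state BACKUP: lets a lower-priority node remain master
    # NOTE: For this to work, the initial state must not be MASTER.
    nopreempt
    # VRRP unicast: source IP
    unicast_src_ip 172.25.254.132
    # VRRP unicast: peer IP
    unicast_peer {
        172.25.254.131
    }
    # Authentication between the nodes; must be identical on master and backup
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # Virtual IP
    virtual_ipaddress {
        172.25.254.130
    }
    # Service check script
    track_script {
        check_nginx
    }
}
```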
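The two `priority` values above combine with `weight 50` in `vrrp_script`. Under keepalived's documented rule, a positive weight is added to the configured priority while the check script succeeds. A negative weight is subtracted while the script fails. Weight 0 is not covered here; in that case a failing script puts the instance into FAULT state instead. The hypothetical bash helper below only illustrates that arithmetic with this setup's numbers: with nginx up, server 1 advertises 201 and server 2 advertises 200. Because server 2 has `nopreempt`, a drop in server 1's priority alone is not meant to make server 2 take over. The check script instead stops keepalived on server 1, so server 1's advertisements stop and server 2 becomes master.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring keepalived's vrrp_script weight rule:
#   weight > 0: priority + weight while the script exits 0
#   weight < 0: priority + weight (i.e. minus |weight|) while it fails
# Weight 0 (FAULT state on failure) is not modelled.
effective_priority() {
  local base=$1 weight=$2 script_rc=$3
  if (( weight > 0 && script_rc == 0 )) || (( weight < 0 && script_rc != 0 )); then
    echo $(( base + weight ))
  else
    echo "$base"
  fi
}

effective_priority 151 50 0   # server 1, nginx up → 201
effective_priority 150 50 0   # server 2, nginx up → 200
effective_priority 151 50 1   # server 1 if its check failed → 151
```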

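Create the following nginx check script on both servers:

```bash
sudo vim /etc/keepalived/check_nginx.sh
```

```sh
#!/bin/sh
# If no nginx process is running, stop keepalived so the VIP moves to the peer
if [ -z "$(/usr/bin/pidof nginx)" ]; then
    systemctl stop keepalived
    exit 1
fi
```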

```bash
# Make the script executable
sudo chmod +x /etc/keepalived/check_nginx.sh
```

Start nginx and check its status:

```bash
sudo systemctl start nginx
systemctl status nginx
```

```
● nginx.service - A high performance web server and a reverse proxy server
     Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)
     Active: active (running) since Tue 2024-05-07 07:22:43 UTC; 37s ago
       Docs: man:nginx(8)
    Process: 205677 ExecStartPre=/usr/sbin/nginx -t -q -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
    Process: 205678 ExecStart=/usr/sbin/nginx -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
   Main PID: 205679 (nginx)
      Tasks: 3 (limit: 4515)
     Memory: 3.3M
        CPU: 52ms
     CGroup: /system.slice/nginx.service
             ├─205679 "nginx: master process /usr/sbin/nginx -g daemon on; master_process on;"
             ├─205680 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
             └─205681 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""

May 07 07:22:43 svr1 systemd[1]: Starting A high performance web server and a reverse proxy server...
May 07 07:22:43 svr1 systemd[1]: Started A high performance web server and a reverse proxy server.
```

Start keepalived and check its status:

```bash
sudo systemctl start keepalived
systemctl status keepalived
```

```
● keepalived.service - Keepalive Daemon (LVS and VRRP)
     Loaded: loaded (/lib/systemd/system/keepalived.service; enabled; vendor preset: enabled)
     Active: active (running) since Tue 2024-05-07 07:25:20 UTC; 18s ago
   Main PID: 205688 (keepalived)
      Tasks: 2 (limit: 4515)
     Memory: 2.0M
        CPU: 265ms
     CGroup: /system.slice/keepalived.service
             ├─205688 /usr/sbin/keepalived --dont-fork
             └─205689 /usr/sbin/keepalived --dont-fork

May 07 07:25:20 svr1 Keepalived[205688]: Starting VRRP child process, pid=205689
May 07 07:25:20 svr1 systemd[1]: keepalived.service: Got notification message from PID 205689, but reception only permitted for main PID 205688
May 07 07:25:20 svr1 Keepalived_vrrp[205689]: WARNING - default user 'keepalived_script' for script execution does not exist - please create.
May 07 07:25:20 svr1 Keepalived_vrrp[205689]: SECURITY VIOLATION - scripts are being executed but script_security not enabled.
May 07 07:25:20 svr1 Keepalived[205688]: Startup complete
May 07 07:25:20 svr1 systemd[1]: Started Keepalive Daemon (LVS and VRRP).
May 07 07:25:20 svr1 Keepalived_vrrp[205689]: (VI_1) Entering BACKUP STATE (init)
May 07 07:25:20 svr1 Keepalived_vrrp[205689]: VRRP_Script(check_nginx) succeeded
May 07 07:25:20 svr1 Keepalived_vrrp[205689]: (VI_1) Changing effective priority from 101 to 151
May 07 07:25:24 svr1 Keepalived_vrrp[205689]: (VI_1) Entering MASTER STATE
```

After keepalived has started, check on the master node that the VIP from the configuration is bound:

```bash
ip addr
```

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:a1:d7:ea brd ff:ff:ff:ff:ff:ff
    altname enp2s1
    inet 172.25.254.131/24 brd 172.25.254.255 scope global ens33
       valid_lft forever preferred_lft forever
    inet 172.25.254.130/32 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fea1:d7ea/64 scope link
       valid_lft forever preferred_lft forever
```

```bash
# Server 2
ip addr
```

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:5c:c4:91 brd ff:ff:ff:ff:ff:ff
    altname enp2s1
    inet 172.25.254.132/24 brd 172.25.254.255 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe5c:c491/64 scope link
       valid_lft forever preferred_lft forever
```
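You can check for the VIP by eye, but a small helper makes the check scriptable. The bash sketch below uses a hypothetical helper, `holds_vip`, which reads `ip addr` output and reports whether the VIP is present. The interface name `ens33` and the VIP 172.25.254.130 come from the keepalived configuration. Run against the two outputs above, it would succeed on server 1 and fail on server 2.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `ip addr` output on stdin and exits 0 if the
# given address is configured on the interface, 1 otherwise.
holds_vip() {
  # The trailing "/" keeps 172.25.254.13 from matching 172.25.254.130.
  grep -Fq -- "inet $1/"
}

# Usage on either node (interface and VIP taken from keepalived.conf):
if ip -4 addr show dev ens33 | holds_vip 172.25.254.130; then
  echo "this node holds the VIP"
else
  echo "this node does not hold the VIP"
fi
```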

Stop nginx on server 1 (master) to test whether failover works:

```bash
sudo systemctl stop nginx
```

Server 1 (master):

```bash
ip addr
```

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:a1:d7:ea brd ff:ff:ff:ff:ff:ff
    altname enp2s1
    inet 172.25.254.131/24 brd 172.25.254.255 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fea1:d7ea/64 scope link
       valid_lft forever preferred_lft forever
```

Server 2 (backup):

```bash
ip addr
```

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:5c:c4:91 brd ff:ff:ff:ff:ff:ff
    altname enp2s1
    inet 172.25.254.132/24 brd 172.25.254.255 scope global ens33
       valid_lft forever preferred_lft forever
    inet 172.25.254.130/32 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe5c:c491/64 scope link
       valid_lft forever preferred_lft forever
```

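After nginx is stopped on the master node, keepalived performs the failover as expected: the VIP 172.25.254.130 has moved from server 1 to server 2. Because check_nginx.sh also stops keepalived on server 1, you must start nginx and then keepalived again on that node before it takes part in VRRP again.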

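Load balancing with Keepalived & HAProxy (Active-Active)

On top of the stage-1 architecture, we add HAProxy so that requests are load-balanced across both nodes (dual-active).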

Install HAProxy on both servers:

```bash
sudo apt-get install haproxy
```

Edit the HAProxy configuration file:

```bash
sudo vim /etc/haproxy/haproxy.cfg
```

```
global
    log /dev/log local0 info
    log /dev/log local1 warning
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    # Defaults to the value of ulimit -n and is limited by it.
    # Budget roughly 32 KB of memory per connection to choose a suitable value and provision memory accordingly.
    maxconn 60000

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate
    ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
    ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
    ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults
    log global
    mode http
    option httplog
    option dontlognull
    retries 3
    timeout http-request 10s
    timeout connect 3s
    timeout client 10s
    timeout server 10s
    timeout http-keep-alive 10s
    timeout check 2s
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

frontend http-in
    bind *:8000
    maxconn 20000
    default_backend servers

backend servers
    balance roundrobin
    server server1 172.25.254.131:80 check
    server server2 172.25.254.132:80 check
```
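The comment on `maxconn` in the `global` section suggests budgeting roughly 32 KB of memory per connection. With this file's numbers, 60000 × 32 KB = 1,920,000 KB, about 1875 MB. The frontend's limit of 20000 connections corresponds to about 625 MB. The hypothetical bash helper below just does that arithmetic. The 32 KB figure is the document's rule of thumb, not a measured value; actual per-connection memory depends on HAProxy's buffer sizes and on the traffic.

```bash
#!/usr/bin/env bash
# Rough memory estimate for an HAProxy maxconn value, using the
# 32 KB-per-connection rule of thumb from the config comment.
# maxconn_mem_mb is a hypothetical helper name.
maxconn_mem_mb() {
  local maxconn=$1 kb_per_conn=${2:-32}
  echo $(( maxconn * kb_per_conn / 1024 ))   # KB -> MB, integer division
}

maxconn_mem_mb 60000   # global maxconn   → 1875 (MB)
maxconn_mem_mb 20000   # frontend maxconn → 625 (MB)
```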

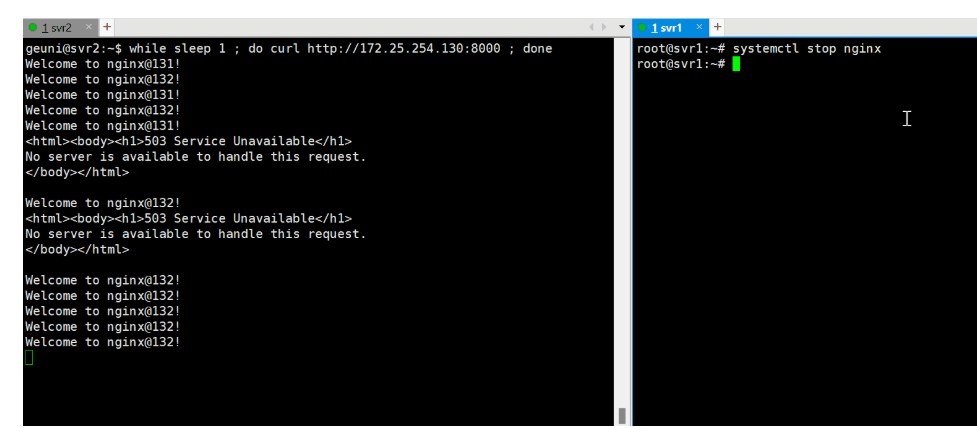

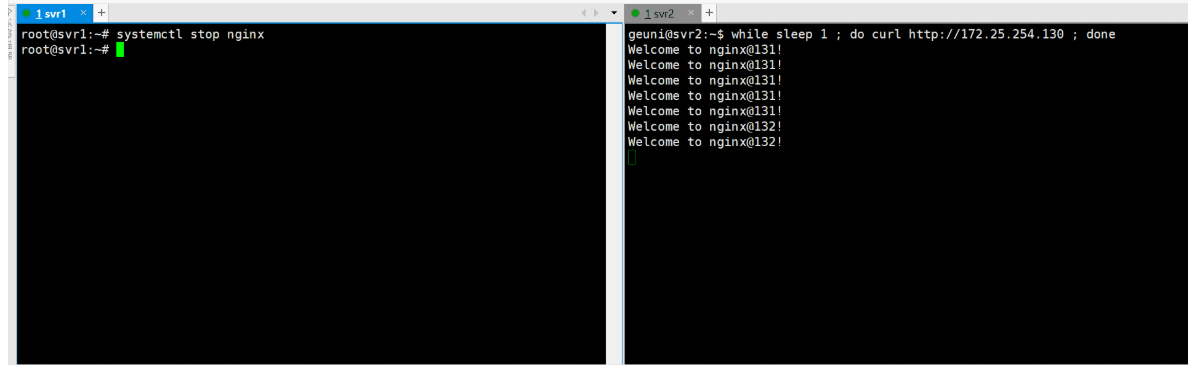

Load-balancing and high-availability test (one request through the VIP every second):

```bash
while sleep 1; do curl http://172.25.254.130:8000; done
```
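The loop above prints each response body. If both servers still serve nginx's default page, the responses look identical and the round-robin split is not visible. One way to see it is to make each nginx serve a one-line page naming its host. The `/var/www/html/index.html` path used for this is an assumption about Ubuntu's default nginx site. You can then pipe a fixed number of requests into a counter such as the hypothetical `tally_backends` below. With both backends healthy, the counts should come out roughly even. After nginx is stopped on one node, HAProxy's `check` should take that backend out of rotation and the counts should shift to the other.

```bash
#!/usr/bin/env bash
# Hypothetical helper: counts identical response bodies read from stdin
# (one per line, blank lines ignored) and prints "<body> <count>" sorted by body.
tally_backends() {
  awk 'NF { count[$0]++ } END { for (b in count) print b, count[b] }' | sort
}

# Usage sketch, assuming each nginx serves a one-line page naming its host,
# e.g. created with:  hostname | sudo tee /var/www/html/index.html
#   for _ in $(seq 10); do curl -s --max-time 2 http://172.25.254.130:8000; done | tally_backends
```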