
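1. Kubernetes persistent storage: emptyDir

Using a volume takes two steps:

1. Define the volume in the Pod spec, stating which storage it is backed by.

2. Mount that volume inside the container with volumeMounts.

The volume is usable only after both steps are done.

An emptyDir volume is created when the Pod is assigned to a Node. Kubernetes allocates a directory on the Node automatically, so you do not specify a host directory yourself. The directory starts out empty, and when the Pod is removed from the Node the data in the emptyDir is deleted permanently. emptyDir is mainly used for scratch space that an application does not need to keep, and for a directory shared by several containers in the same Pod.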
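The shared-directory use case can be sketched with a Pod whose two containers mount the same emptyDir. This is a minimal sketch and not part of the original walkthrough: the Pod name `pod-shared-empty`, the container names `writer` and `reader`, the `busybox` image, and the file `/cache/now.txt` are all assumptions chosen for illustration. The script only writes the manifest; the `kubectl` lines stay commented out because they need a running cluster.

```shell
# Write a two-container Pod manifest in which both containers share one emptyDir.
manifest="${TMPDIR:-/tmp}/pod-shared-empty.yaml"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: pod-shared-empty          # hypothetical name
spec:
  containers:
  - name: writer                  # writes a timestamp into the shared directory
    image: busybox
    command: ["sh", "-c", "while true; do date > /cache/now.txt; sleep 5; done"]
    volumeMounts:
    - name: cache-volume
      mountPath: /cache
  - name: reader                  # sees the same files under /cache
    image: busybox
    command: ["sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: cache-volume
      mountPath: /cache
  volumes:
  - name: cache-volume
    emptyDir: {}
EOF
echo "wrote $manifest"

# With a cluster available:
# kubectl apply -f "$manifest"
# kubectl exec pod-shared-empty -c reader -- cat /cache/now.txt
```

Both containers reference the volume by the same name, which is what ties the two mounts to one directory on the node.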

List the volume types Kubernetes supports:

    kubectl explain pods.spec.volumes

Commonly used types:

    emptyDir
    hostPath
    nfs
    persistentVolumeClaim
    glusterfs
    cephfs
    configMap
    secret

Example:

    [root@k8s-master ~]# ls
    [root@k8s-master ~]# mkdir pvc
    [root@k8s-master ~]# cd pvc
    [root@k8s-master pvc]# vim emptydir.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-empty
    spec:
      containers:
      - name: container-empty
        image: nginx
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - mountPath: /cache      # works even if this directory does not exist in the image
          name: cache-volume     # must match the name under volumes
      volumes:
      - emptyDir: {}
        name: cache-volume

Apply the manifest:

    [root@k8s-master pvc]# kubectl apply -f emptydir.yaml
    pod/pod-empty created

To find where the emptyDir lives on the node, proceed as follows.

Check which node the Pod was scheduled to:

    [root@k8s-master pvc]# kubectl get pod pod-empty -o wide
    NAME        READY   STATUS    RESTARTS   AGE     IP               NODE        NOMINATED NODE   READINESS GATES
    pod-empty   1/1     Running   0          2m35s   10.244.169.178   k8s-node2   <none>           <none>

Look up the Pod's uid:

    [root@k8s-master pvc]# kubectl get pod pod-empty -o yaml | grep uid
      uid: b848d29c-c146-45e1-87b9-4d55a784e28f

Log in to k8s-node2.

/var/lib/kubelet/pods is where the kubelet keeps per-Pod data, in one subdirectory per Pod uid:

    [root@k8s-node2 ~]# cd /var/lib/kubelet/pods/b848d29c-c146-45e1-87b9-4d55a784e28f/
    [root@k8s-node2 b848d29c-c146-45e1-87b9-4d55a784e28f]# ls
    containers  etc-hosts  plugins  volumes
    [root@k8s-node2 b848d29c-c146-45e1-87b9-4d55a784e28f]# cd volumes/
    [root@k8s-node2 volumes]# ls
    kubernetes.io~empty-dir  kubernetes.io~projected
    [root@k8s-node2 volumes]# cd kubernetes.io~empty-dir/
    [root@k8s-node2 kubernetes.io~empty-dir]# ls
    cache-volume
    [root@k8s-node2 kubernetes.io~empty-dir]# cd cache-volume/

Test

Create a directory named aaa inside cache-volume:

    [root@k8s-node2 cache-volume]# ls
    [root@k8s-node2 cache-volume]# mkdir aaa
    [root@k8s-node2 cache-volume]# ls
    aaa

Go back to the master node.

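List the mounted /cache directory from inside the Pod. The aaa directory is there, so the mount works and the directory is shared:

    [root@k8s-master pvc]# kubectl exec pod-empty -- ls /cache
    aaa
    [root@k8s-master pvc]# kubectl exec pod-empty -- ls -l /cache
    total 4
    drwxr-xr-x 2 root root 4096 Aug 21 13:19 aaa

2. Kubernetes persistent storage: hostPath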

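A hostPath volume mounts a file or directory from the host (the Node) into the Pod, so the container stores its data on the host's filesystem. It is node-level storage: the directory is not removed when the Pod is deleted, so if the Pod is later scheduled onto the same node again, the data is still there.

Capacity is limited to that node, and if the node goes down the data is lost.

Drawback of hostPath volumes

The data lives on a single node: after a Pod is deleted and recreated, it must be scheduled to the same node or it will not see the data.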

Look up how the hostPath volume is used:

    # kubectl explain pods.spec.volumes.hostPath
    KIND:       Pod
    VERSION:    v1

    FIELD: hostPath <HostPathVolumeSource>

    DESCRIPTION:
        hostPath represents a pre-existing file or directory on the host machine
        that is directly exposed to the container. This is generally used for system
        agents or other privileged things that are allowed to see the host machine.
        Most containers will NOT need this. More info:
        https://kubernetes.io/docs/concepts/storage/volumes#hostpath
        Represents a host path mapped into a pod. Host path volumes do not support
        ownership management or SELinux relabeling.

    FIELDS:
      path <string> -required-
        path of the directory on the host. If the path is a symlink, it will follow
        the link to the real path. More info:
        https://kubernetes.io/docs/concepts/storage/volumes#hostpath

      type <string>
        type for HostPath Volume Defaults to "" More info:
        https://kubernetes.io/docs/concepts/storage/volumes#hostpath

        Possible enum values:
         - `""` For backwards compatible, leave it empty if unset
         - `"BlockDevice"` A block device must exist at the given path
         - `"CharDevice"` A character device must exist at the given path
         - `"Directory"` A directory must exist at the given path
         - `"DirectoryOrCreate"` If nothing exists at the given path, an empty
           directory will be created there as needed with file mode 0755, having the
           same group and ownership with Kubelet.
         - `"File"` A file must exist at the given path
         - `"FileOrCreate"` If nothing exists at the given path, an empty file will
           be created there as needed with file mode 0644, having the same group and
           ownership with Kubelet.
         - `"Socket"` A UNIX socket must exist at the given path
    # Socket: a network socket

Create a Pod that mounts a hostPath volume:

    [root@k8s-master pvc]# vim hostpath.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: test-hostpath
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: test-nginx
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: test-volume
      volumes:
      - name: test-volume
        hostPath:
          path: /data
          type: DirectoryOrCreate

Annotated version:

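    apiVersion: v1                          # Kubernetes API version
    kind: Pod                               # resource kind: Pod
    metadata:
      name: test-hostpath                   # Pod name
    spec:
      containers:                           # container configuration
      - image: nginx:latest                 # use the nginx image
        imagePullPolicy: IfNotPresent       # do not pull if the image is already present locally
        name: test-nginx                    # container name
        volumeMounts:                       # mount points inside the container
        - mountPath: /usr/share/nginx/html  # path inside the container
          name: test-volume                 # name of the volume being mounted
      volumes:                              # list of volumes
      - name: test-volume                   # volume name
        hostPath:                           # volume type: path on the node
          path: /data                       # absolute path on the host
          type: DirectoryOrCreate           # create the directory if it does not exist

Note:

DirectoryOrCreate means that if /data already exists on the node it is used as is; if it does not, it is created automatically on whichever node the Pod is scheduled to.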
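The behaviour of DirectoryOrCreate can be imitated locally to make it concrete. The sketch below is only an illustration of the semantics described above, run against a scratch directory; it is not what the kubelet executes. The function name `directory_or_create` and the variable `demo_root` are hypothetical, and the failure branch for a non-directory path is an assumption about how the type check behaves.

```shell
# Imitate the hostPath type "DirectoryOrCreate":
# reuse the path if it is already a directory, otherwise create it with mode 0755.
directory_or_create() {
  dir_path="$1"
  if [ -d "$dir_path" ]; then
    echo "exists: $dir_path"
  elif [ -e "$dir_path" ]; then
    # Assumption: something that is not a directory makes the type check fail.
    echo "error: $dir_path exists but is not a directory" >&2
    return 1
  else
    mkdir -p "$dir_path" && chmod 0755 "$dir_path"
    echo "created: $dir_path"
  fi
}

demo_root="$(mktemp -d)"                 # scratch directory standing in for the node
directory_or_create "$demo_root/data"    # first call creates the directory
directory_or_create "$demo_root/data"    # second call finds it and reuses it
```

With type Directory instead, the first branch would be the only acceptable one: a missing path is an error rather than something to create.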

Apply the manifest and check which node the Pod was scheduled to:

    [root@k8s-master pvc]# kubectl apply -f hostpath.yaml
    pod/test-hostpath created
    # the Pod was scheduled to k8s-node2
    [root@k8s-master pvc]# kubectl get pod test-hostpath -o wide
    NAME            READY   STATUS    RESTARTS   AGE    IP               NODE        NOMINATED NODE   READINESS GATES
    test-hostpath   1/1     Running   0          105s   10.244.169.179   k8s-node2   <none>           <none>

Test

The request returns no index page, because /data on the node is still empty:

    [root@k8s-master pvc]# curl 10.244.169.179
    <html>
    <head><title>403 Forbidden</title></head>
    <body>
    <center><h1>403 Forbidden</h1></center>
    <hr><center>nginx/1.29.1</center>
    </body>
    </html>

Create an index page on k8s-node2:

    [root@k8s-node2 ~]# cd /data
    [root@k8s-node2 data]# ls
    [root@k8s-node2 data]# echo 12345 > index.html

Request the page again; the mount works:

    [root@k8s-master ~]# curl 10.244.169.179
    12345

As noted above, the drawback of hostPath is that the data lives on one node: a recreated Pod must land on the same node to see it.

How do we get the Pod onto the same node? By naming the node in the YAML manifest.
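This walkthrough pins the Pod with `nodeName`, which places it on the named node directly without going through the scheduler. A commonly used alternative is `nodeSelector`, sketched below under stated assumptions: the Pod name `test-hostpath-selector` is hypothetical, and the node is assumed to carry the standard `kubernetes.io/hostname` label with the value `k8s-node2` (worth confirming with `kubectl get nodes --show-labels`). The script only writes the manifest.

```shell
# Write a hostPath Pod manifest that is pinned to one node through nodeSelector.
manifest="${TMPDIR:-/tmp}/hostpath-nodeselector.yaml"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: test-hostpath-selector          # hypothetical name
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-node2   # assumes the node has this label value
  containers:
  - name: test-nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: test-volume
      mountPath: /usr/share/nginx/html
  volumes:
  - name: test-volume
    hostPath:
      path: /data
      type: DirectoryOrCreate
EOF
echo "wrote $manifest"

# With a cluster available:
# kubectl get nodes --show-labels
# kubectl apply -f "$manifest"
```

Either way the Pod ends up tied to one machine, so the single-node limitation of hostPath remains.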

Modify hostpath.yaml

    [root@k8s-master ~]# cd pvc/
    [root@k8s-master pvc]# vim hostpath.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: test-hostpath
    spec:
      nodeName: k8s-node2
      containers:
      - image: nginx:latest
        imagePullPolicy: IfNotPresent
        name: test-nginx
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: test-volume
      volumes:
      - name: test-volume
        hostPath:
          path: /data
          type: DirectoryOrCreate

Test

    # delete the Pod created earlier
    [root@k8s-master pvc]# kubectl delete pod test-hostpath
    pod "test-hostpath" deleted
    # apply the manifest
    [root@k8s-master pvc]# kubectl apply -f hostpath.yaml
    pod/test-hostpath created
    # look up the Pod IP of test-hostpath
    [root@k8s-master pvc]# kubectl get pod test-hostpath -o wide
    NAME            READY   STATUS    RESTARTS   AGE   IP               NODE        NOMINATED NODE   READINESS GATES
    test-hostpath   1/1     Running   0          26s   10.244.169.180   k8s-node2   <none>           <none>
    # the response is the same as from the deleted Pod
    [root@k8s-master pvc]# curl 10.244.169.180
    12345

3. Kubernetes persistent storage: nfs

The hostPath volume from the previous section is a single point of failure: a Pod keeps its data only if it is scheduled back onto the same node. NFS can be used as persistent storage to avoid that.

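Set up the NFS service

Use the Kubernetes control-plane node as the NFS server:

    [root@k8s-master ~]# yum install -y nfs-utils

Create the directory to be shared on the host:

    [root@k8s-master ~]# mkdir /data -pv
    mkdir: created directory '/data'

Export the /data directory from the NFS server: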

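    [root@k8s-master ~]# systemctl enable --now nfs
    [root@k8s-master ~]# vim /etc/exports
    /data 192.168.158.0/24(rw,sync,no_root_squash)

Export options:

    rw                 read-write access
    sync               write synchronously
    no_root_squash     do not map the client's root user to an unprivileged user
    no_subtree_check   disable subtree checking (not set in the line above, so exportfs falls back to its default)

Make the NFS configuration take effect: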

    [root@k8s-master pvc]# exportfs -avr
    exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "192.168.158.0/24:/data".
      Assuming default behaviour ('no_subtree_check').
      NOTE: this default has changed since nfs-utils version 1.0.x

    exporting 192.168.158.0/24:/data
    [root@k8s-master pvc]# showmount -e
    Export list for k8s-master:
    /data 192.168.158.0/24

Install nfs-utils on every worker node:

cpp

yum install nfs-utils -y

systemctl enable --now nfsnode节点测试nfs

cpp

[root@k8s-node1 ~]# showmount -e 192.168.158.33

Export list for 192.168.158.33:

/data 192.168.158.0/24

[root@k8s-node2 data]# showmount -e 192.168.158.33

Export list for 192.168.158.33:

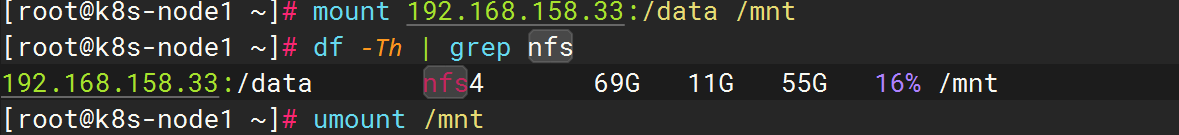

/data 192.168.158.0/24测试

    # try a manual mount on k8s-node1 and k8s-node2
    # note: use the NFS server's host IP here
    [root@k8s-node1 ~]# mount 192.168.158.33:/data /mnt
    [root@k8s-node1 ~]# df -Th | grep nfs
    192.168.158.33:/data nfs4 116G 6.7G 109G 6% /mnt
    # the NFS export mounts correctly
    # unmount it again
    [root@k8s-node1 ~]# umount /mnt
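The export check done by eye above can also be scripted, which helps when there are many worker nodes. The helper below is a small sketch: the function name `has_export` is hypothetical, and it assumes `showmount -e` prints one header line followed by one export per line, as in the output shown earlier. It reads that output from standard input, so it can be tried here with a pasted sample instead of a live server.

```shell
# Succeed if the given path appears as an export in `showmount -e` output on stdin.
has_export() {
  # Skip the "Export list for ..." header, then compare the first column.
  awk -v want="$1" 'NR > 1 && $1 == want { found = 1 } END { exit !found }'
}

# Sample copied from the showmount output above.
sample='Export list for 192.168.158.33:
/data 192.168.158.0/24'

if printf '%s\n' "$sample" | has_export /data; then
  echo "/data is exported"
else
  echo "/data is NOT exported"
fi

# On a real worker node:
# showmount -e 192.168.158.33 | has_export /data
```

A non-zero exit status from `has_export` means the path was not found, which makes it usable in a pre-flight script before any Pod is created.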

Create a Pod that mounts the directory exported over NFS:

    [root@k8s-master pvc]# cat test-nfs.yaml
    # API version: v1 (core API)
    apiVersion: v1
    # Resource kind: Pod
    kind: Pod
    # Metadata
    metadata:
      # Pod name: test-nfs
      name: test-nfs
    # Spec
    spec:
      # Container configuration
      containers:
      - name: test-nfs                      # container name
        image: nginx:latest                 # use the nginx image
        imagePullPolicy: IfNotPresent       # do not pull if the image is already present locally
        # Port configuration
        ports:
        - containerPort: 80                 # port exposed by the container
          protocol: TCP                     # protocol
        # Volume mounts
        volumeMounts:
        - name: nfs-volumes                 # name of the volume being mounted
          mountPath: /usr/share/nginx/html  # path inside the container: nginx's default site directory
      # Volumes
      volumes:
      - name: nfs-volumes                   # volume name
        nfs:                                # NFS volume type
          path: /data                       # exported directory on the NFS server
          server: 192.168.158.33            # IP address of the NFS server

Apply the manifest:

cpp

[root@k8s-master pvc]# kubectl apply -f test.nfs.yaml

pod/test-nfs created查看pod是否创建成功

cpp

[root@k8s-master pvc]# kubectl get pod test-nfs -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

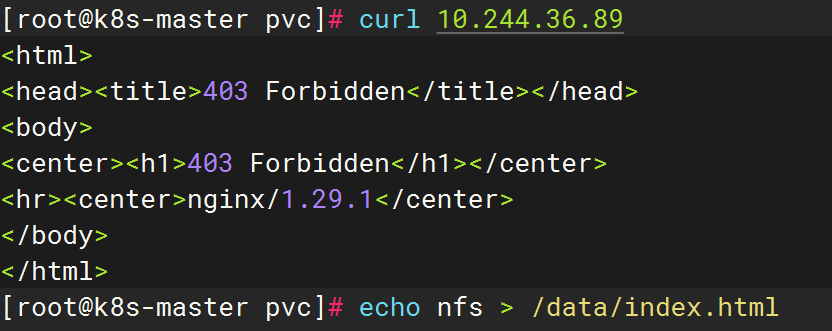

test-nfs 1/1 Running 0 38s 10.244.36.89 k8s-node1 <none> <none>测试

首页不显示

cpp

[root@k8s-master pvc]# curl 10.244.36.89

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx/1.29.1</center>

</body>

</html>

#登录到nfs服务器,在共享目录创建一个index.html

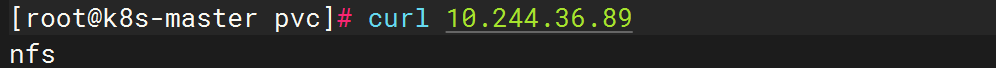

[root@k8s-master pvc]# echo nfs > /data/index.html

[root@k8s-master pvc]# curl 10.244.36.89

nfs

上面说明挂载nfs存储卷成功了,nfs支持多个客户端挂载,可以创建多个pod,挂载同一个nfs服务器共享出来的目录;但是nfs如果宕机了,数据也就丢失了,所以需要使用分布式存储,常见的分布式存储有glusterfs和cephfs
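The point about several Pods mounting one export can be sketched with a Deployment that runs two nginx replicas against the same NFS directory. This is an illustrative sketch, not part of the original walkthrough: the Deployment name and label `nfs-shared-web` and the container name `web` are hypothetical, while the server address and path are the ones used above. The script only writes the manifest.

```shell
# Write a Deployment manifest whose two replicas mount the same NFS export.
manifest="${TMPDIR:-/tmp}/nfs-shared-deploy.yaml"
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-shared-web              # hypothetical name
spec:
  replicas: 2                       # two Pods, possibly on different nodes
  selector:
    matchLabels:
      app: nfs-shared-web
  template:
    metadata:
      labels:
        app: nfs-shared-web
    spec:
      containers:
      - name: web
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: nfs-volumes
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nfs-volumes
        nfs:
          server: 192.168.158.33    # NFS server from this walkthrough
          path: /data
EOF
echo "wrote $manifest"

# With a cluster available:
# kubectl apply -f "$manifest"
# kubectl get pod -l app=nfs-shared-web -o wide
```

Because both replicas read the same /data/index.html, a request to either Pod IP should return the same page regardless of which node the Pod runs on.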