Contents

- Notes
- Preface
- Test platform configuration
- Part 1: Why drop OpenCV for stream pulling and decoding?
- Part 2: Core module design
  - 2.1 Module interface definition (VideoStreamDecoder)
  - 2.2 Single-stream pull and decode implementation (with keyframe strategy)
  - 2.3 Resource consumption comparison
  - 2.3 Code listings
    - 2.3.1 PyAV implementation
    - 2.3.2 OpenCV implementation
  - 2.4 Section summary
- Part 3: Management module design
- Part 4: Complete example: video stream display based on PyAV
  - 4.1 Program implementation
  - 4.2 Verification code
- Summary
- Next episode preview
- Appendix

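Notes

If you use the code in this chapter, please cite this CSDN link, out of respect for knowledge and for other people's work.

Preface

This section moves on to the actual code. Based on real business needs and the pull-and-decode workflow, we implement a flexible, lightweight, easy-to-use video stream pulling and decoding module. It supports multiple streams and lets its internal parameters be updated at run time.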

Test platform configuration

The hardware used for these tests:

- CPU: i5-12490F
- GPU: RTX 3080 10 GB
- RAM: 16 GB DDR4 3200 MHz
- Display resolution: 4K

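Part 1: Why drop OpenCV for stream pulling and decoding?

At the start of the design, our first thought was the familiar **OpenCV (cv2.VideoCapture)** for pulling and decoding. Its API is simple and direct: a few lines of code read frames from a network stream, which is very convenient for quick prototyping.

After deeper investigation and testing, however, we found that OpenCV has bottlenecks that cannot be ignored in production environments that handle multiple streams and demand low latency and high performance:

- Performance and resource consumption: OpenCV's VideoCapture does not handle network streams efficiently. When several streams are pulled at once, its threading model and buffer management can become a bottleneck and easily lead to buffer accumulation and growing latency, with relatively high CPU usage. This is hard to accept for applications that process dozens or even hundreds of streams simultaneously.

- Limited control: OpenCV offers a high-level, pre-packaged abstraction, which sacrifices low-level flexibility. It is hard to fine-tune network parameters (timeout, buffer size, reconnect logic), decoding parameters (such as the choice of hardware acceleration), or the handling of audio and video streams. For example, it manages the buffer automatically, so we cannot effectively flush stale, backlogged frames to get the newest live frame. In low-latency scenarios such as surveillance this is a fatal flaw.

- Support for complex stream formats and stability: OpenCV usually relies on FFmpeg underneath, but the bundled build may not be the latest, or may have been compiled without all the required protocols and formats. With certain streaming protocols (such as HLS or RTMP) or codecs, this can cause parse failures, stuttering, or crashes.

- No audio: cv2.VideoCapture focuses on video and ignores the audio in a media stream entirely. If the business later needs audio/video synchronization or audio analysis, OpenCV cannot deliver it, although at this stage we do not consider audio.

We therefore decided to drop OpenCV in favor of PyAV, a lower-level and more specialized multimedia library.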
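The stale-frame problem described in the list above is commonly handled with a "latest frame only" slot: the reader overwrites a single slot, and consumers always get the newest frame instead of working through a backlog. The following is a minimal sketch of that idea, assuming a hypothetical `LatestFrameSlot` class (it is not part of OpenCV or PyAV) and using plain integers in place of real frames.

```python
import threading
from typing import Any, Optional


class LatestFrameSlot:
    """Hypothetical helper: keeps only the newest item and counts overwritten ones."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._item: Optional[Any] = None
        self._fresh = False   # True while the stored item has not been read yet
        self.dropped = 0      # items overwritten before anyone read them

    def put(self, item: Any) -> None:
        """Store a new item, discarding any unread one."""
        with self._lock:
            if self._fresh:
                self.dropped += 1
            self._item = item
            self._fresh = True

    def get(self) -> Optional[Any]:
        """Return the newest item, or None if nothing new arrived since the last get()."""
        with self._lock:
            if not self._fresh:
                return None
            self._fresh = False
            return self._item


slot = LatestFrameSlot()
for frame_id in range(5):   # the producer runs ahead of the consumer
    slot.put(frame_id)
latest = slot.get()          # the consumer only sees the newest frame
print(latest, slot.dropped)
```

The trade-off is deliberate: frames are lost, but latency stays bounded, which suits live monitoring better than a growing queue.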

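PyAV is a Python binding for FFmpeg and gives nearly complete access to the FFmpeg libraries. This choice brings clear advantages:

- Efficient, precise control: PyAV lets us work directly with the underlying network and decoding modules. We can fine-tune timeouts, buffering, decoder thread count, hardware acceleration (such as CUDA, VAAPI, DXVA2), and other parameters to optimize resource usage and latency across streams.

- Strong multi-stream handling: with FFmpeg's ecosystem and PyAV's flexible API, we can build efficient multi-stream pipelines and manage the life cycle of each stream.

- Complete media support: PyAV can process video and audio streams together, which lays the groundwork for future features. It supports nearly every container format and codec that FFmpeg supports, which gives the best compatibility.

- Avoiding redundant buffering: PyAV exposes the more "raw" Packet and Frame objects, so data can be obtained with lower overhead and handed directly to NumPy arrays or deep learning frameworks for further processing.

In summary, to build a module that is flexible, lightweight, easy to use, and capable of low-latency, high-performance multi-stream decoding, PyAV is our choice. (GStreamer is also a good option, but for beginners the FFmpeg-based PyAV library is a good starting point and a solid engineering tool.)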

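Part 2: Core module design

The previous part explained why we chose PyAV. This part describes the core design of the pull-and-decode module, including single-stream decoding (with a keyframe-only strategy) and the multi-stream manager.

2.1 Module interface definition (VideoStreamDecoder)

We first define a core class, VideoStreamDecoder, which manages the life cycle of one video stream: connecting, decoding, updating parameters, and tearing down.

Core attributes:

- stream_url: input stream address (RTSP, HTTP, etc.)
- buffer_size: buffer size for network operations
- hw_accel: hardware acceleration type (for example "cuda" or "cpu"; this example still uses software decoding and does not provide a CUDA implementation)
- timeout: network operation timeout (in microseconds in the code below)
- reconnect_delay: delay before reconnecting after a failed connection (in seconds)
- max_retries: maximum number of retries
- keyframe_only: whether to decode keyframes only
- _container: the PyAV input container object
- _video_stream: the video stream object
- _current_iterator: the current demux/decode iterator
- _last_frame: the most recently decoded video frame (NumPy array)
- _running: running-state flag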
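The configurable attributes above can be grouped into one validated configuration object. The sketch below assumes a hypothetical `DecoderConfig` dataclass; the article's own class takes these values as constructor arguments instead. The defaults mirror those used in the code later in this section, and the timeout is stored in microseconds and converted to seconds on demand.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DecoderConfig:
    """Hypothetical container for the public decoder parameters."""
    stream_url: str
    buffer_size: int = 102400
    hw_accel: Optional[str] = None
    timeout: int = 5_000_000      # microseconds
    reconnect_delay: int = 5      # seconds
    max_retries: int = -1         # values <= 0 mean "retry forever"
    keyframe_only: bool = False

    def __post_init__(self) -> None:
        # Reject values that cannot work before any connection is attempted.
        if self.buffer_size <= 0:
            raise ValueError("buffer_size must be positive")
        if self.timeout <= 0:
            raise ValueError("timeout must be positive")

    @property
    def timeout_seconds(self) -> float:
        """Timeout converted from microseconds to seconds."""
        return self.timeout / 1_000_000


cfg = DecoderConfig("rtsp://localhost:5001/stream_1", timeout=3_000_000)
print(cfg.timeout_seconds)
```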

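Core methods:

- `__init__()`: initialize the decoder and configure its parameters.
- connect(): open the connection to the stream and locate the video stream.
- start(): start the decode loop (normally in a separate thread).
- decode_frame(): read and decode one video frame from the stream.
- get_frame(): return the latest video frame (for external callers).
- update_settings(): update internal parameters at run time (such as timeout or buffer size).
- stop(): stop pulling and decoding safely and release resources.
- _reconnect(): internal reconnect logic.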
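The reconnect policy behind the last method can be sketched on its own, without any network code. The helper below is an illustration rather than the article's implementation: `reconnect_with_retries` is a hypothetical name, the `connect` callable stands in for the real connection attempt, and the convention that `max_retries <= 0` means unlimited retries follows the `max_retries > 0` check in the article's code.

```python
import time
from typing import Callable


def reconnect_with_retries(connect: Callable[[], bool], max_retries: int,
                           delay: float,
                           sleep: Callable[[float], None] = time.sleep) -> int:
    """Call connect() until it succeeds.

    Returns the number of attempts used, or -1 once max_retries attempts
    have failed. max_retries <= 0 means retry without limit.
    """
    attempts = 0
    while True:
        if max_retries > 0 and attempts >= max_retries:
            return -1
        attempts += 1
        sleep(delay)          # wait before each attempt
        if connect():
            return attempts


# Simulated stream that comes back on the third attempt (no real sleeping).
outcomes = iter([False, False, True])
used = reconnect_with_retries(lambda: next(outcomes), max_retries=5,
                              delay=3, sleep=lambda s: None)

# Simulated stream that never comes back.
gave_up = reconnect_with_retries(lambda: False, max_retries=2,
                                 delay=3, sleep=lambda s: None)
print(used, gave_up)
```

Injecting `sleep` keeps the policy testable without waiting for real delays.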

2.2 单路拉流解码实现(支持关键帧策略)

在开始之前,我们需要准备网络视频流资源,我们在前面已经提供了使用liveNVR准备网络视频流的素材,详细参考。我们下面是支持关键帧解码策略的 VideoStreamDecoder 类的实现:

- 实现的逻辑示意图如下所示:

异常处理 解码循环 Connect流程 是 否 是 否 是 否 是 否 是 否 关闭当前连接 解码错误 尝试重连 重连成功? 达到最大重试次数? 终止解码循环 获取下一帧 进入_decode_loop循环 是否关键帧? 更新关键帧计数 非关键帧处理 转换为numpy数组 keyframe_only=True? 跳过此帧 更新_last_frame和_last_pts 等待下一帧 使用av.open打开流

设置超时和缓冲区参数 调用connect方法 查找视频流 keyframe_only=True? 设置skip_frame='NONKEY'

只解码关键帧 正常解码所有帧 创建解码器迭代器 连接成功 创建VideoStreamDecoder实例 初始化参数

stream_url, buffer_size, hw_accel, timeout,

reconnect_delay, max_retries, keyframe_only 调用start方法 启动解码线程

-

运行结果展示(注意pyav推荐11.0.0版本! )

-

单路展示

-

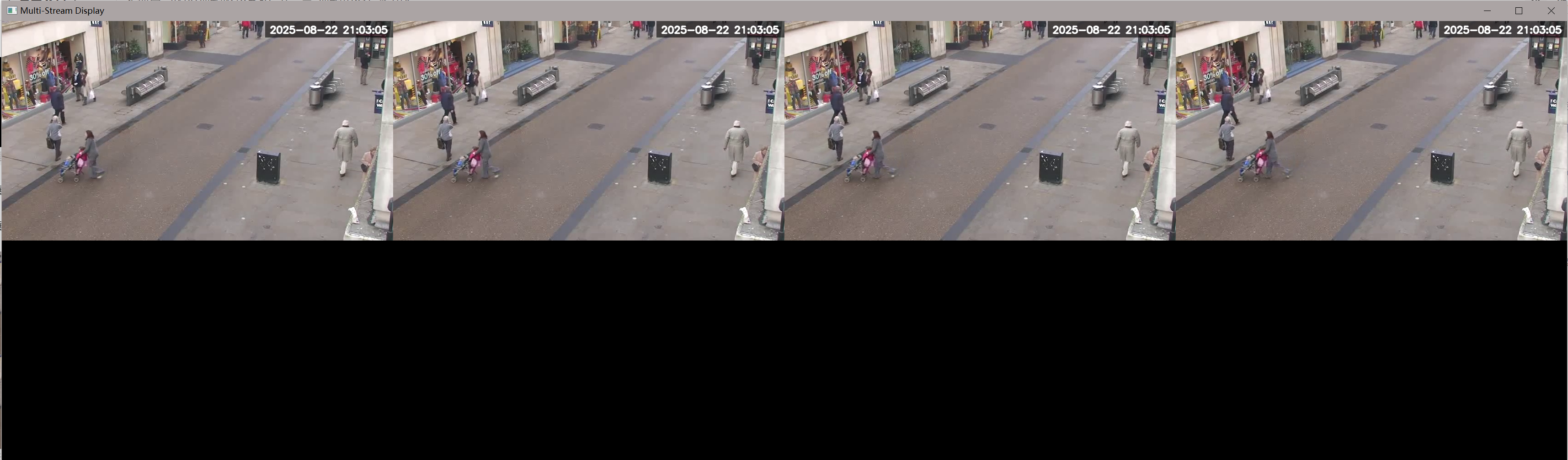

4路展示

-

8路展示

-

-

注意,随着分辨率的提高,软解码对cpu的依赖会越来越高

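2.3 Resource consumption comparison

- Resource consumption of pulling and decoding with PyAV

| Test case | Streams | Resource usage |
|---|---|---|
| Full-frame decoding | 1 | CPU: 3%-4%, RAM: 66 MB, GPU: 0% |
| Full-frame decoding | 4 | CPU: 6%-8%, RAM: 92 MB, GPU: 0% |
| Full-frame decoding | 8 | CPU: 12%-15%, RAM: 128 MB, GPU: 0% |

- In extreme cases PyAV can decode keyframes only!

| Test case | Streams | Resource usage |
|---|---|---|
| Keyframe-only decoding | 1 | CPU: 2.5%-4.5%, RAM: 67.5 MB, GPU: 0% |
| Keyframe-only decoding | 4 | CPU: 4%-6%, RAM: 91.6 MB, GPU: 0% |
| Keyframe-only decoding | 8 | CPU: 6%-8%, RAM: 128 MB, GPU: 0% |

- Resource consumption of pulling and decoding with OpenCV

| Test case | Streams | Resource usage |
|---|---|---|
| Full-frame decoding | 1 | CPU: 4%-6%, RAM: 85 MB, GPU: 0% |
| Full-frame decoding | 4 | CPU: 7%-10%, RAM: 170 MB, GPU: 0% |
| Full-frame decoding | 8 | CPU: 14%-16%, RAM: 287 MB, GPU: 0% |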
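To make the memory gap in the tables easier to read, the short script below recomputes two derived figures from the full-frame rows: the RAM ratio between the two libraries at each stream count, and the average RAM added per extra stream. The numbers are copied from the tables; the helper names are illustrative only, and the per-stream figure assumes roughly linear growth between 1 and 8 streams.

```python
# (cpu_low %, cpu_high %, ram MB) per stream count, copied from the full-frame tables
PYAV = {1: (3, 4, 66), 4: (6, 8, 92), 8: (12, 15, 128)}
OPENCV = {1: (4, 6, 85), 4: (7, 10, 170), 8: (14, 16, 287)}


def ram_ratio(streams: int) -> float:
    """How many times more RAM OpenCV used than PyAV at a given stream count."""
    return OPENCV[streams][2] / PYAV[streams][2]


def ram_per_extra_stream(table: dict) -> float:
    """Average RAM growth per added stream between 1 and 8 streams, in MB."""
    return (table[8][2] - table[1][2]) / (8 - 1)


for n in (1, 4, 8):
    print(f"{n} streams: OpenCV uses {ram_ratio(n):.2f}x the RAM of PyAV")
print(f"PyAV:   {ram_per_extra_stream(PYAV):.1f} MB per extra stream")
print(f"OpenCV: {ram_per_extra_stream(OPENCV):.1f} MB per extra stream")
```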

2.3 Code listings

2.3.1 PyAV implementation

```python
import av
import cv2
import time
import logging
import threading
import numpy as np
from typing import Optional, Any, Dict, List, Tuple
import datetime

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("StreamDecoder")


def add_timestamp(frame_array):
    # Get the current time
    current_time = datetime.datetime.now()
    # Format the time string
    time_str = current_time.strftime("%Y-%m-%d %H:%M:%S")

    # Text parameters
    font = cv2.FONT_HERSHEY_SIMPLEX
    font_scale = 0.7
    font_color = (255, 255, 255)  # white text
    font_thickness = 2
    background_color = (0, 0, 0)  # black background

    # Measure the text
    (text_width, text_height), baseline = cv2.getTextSize(time_str, font, font_scale, font_thickness)

    # Text position (top-right corner)
    margin = 10
    text_x = frame_array.shape[1] - text_width - margin
    text_y = text_height + margin

    # Semi-transparent background rectangle to improve text readability
    bg_rect = np.zeros((text_height + 2 * margin, text_width + 2 * margin, 3), dtype=np.uint8)
    bg_rect[:, :] = background_color

    # Where the background rectangle goes on the frame
    alpha = 0.6  # blend weight of the background
    y1, y2 = text_y - text_height - margin, text_y + margin
    x1, x2 = text_x - margin, text_x + text_width + margin

    # Clamp the coordinates to the frame
    y1, y2 = max(0, y1), min(frame_array.shape[0], y2)
    x1, x2 = max(0, x1), min(frame_array.shape[1], x2)

    # Blend the background rectangle into the frame
    if y2 > y1 and x2 > x1:
        roi = frame_array[y1:y2, x1:x2]
        bg_resized = cv2.resize(bg_rect, (x2 - x1, y2 - y1))
        blended = cv2.addWeighted(roi, 1 - alpha, bg_resized, alpha, 0)
        frame_array[y1:y2, x1:x2] = blended

    # Draw the time text
    cv2.putText(
        frame_array,
        time_str,
        (text_x, text_y),
        font,
        font_scale,
        font_color,
        font_thickness,
        cv2.LINE_AA
    )
    return frame_array


class VideoStreamDecoder:
    def __init__(self, stream_url: str, buffer_size: int = 102400,
                 hw_accel: Optional[str] = None, timeout: int = 5000000,
                 reconnect_delay: int = 5, max_retries: int = -1,
                 keyframe_only: bool = False):
        self.stream_url = stream_url
        self.buffer_size = buffer_size
        self.hw_accel = hw_accel
        self.timeout = timeout                  # microseconds
        self.reconnect_delay = reconnect_delay  # seconds
        self.max_retries = max_retries          # <= 0 means retry forever
        self.keyframe_only = keyframe_only

        self._retry_count = 0
        self._container: Optional[av.container.InputContainer] = None
        self._video_stream: Optional[av.video.stream.VideoStream] = None
        self._current_iterator: Optional[Any] = None
        self._last_frame: Optional[np.ndarray] = None
        self._last_pts: int = -1
        self._frame_count: int = 0
        self._keyframe_count: int = 0
        self._running = False
        self._decode_thread: Optional[threading.Thread] = None

        # Decoder options (collected here, but not passed to av.open:
        # this example uses software decoding only)
        self._options = self._get_codec_options()
        self.lock = threading.Lock()

    def _get_codec_options(self) -> Dict:
        """Build decoder options according to the hardware acceleration type."""
        options = {'threads': 'auto'}
        if self.hw_accel:
            # Hardware acceleration settings; adjust to your needs
            options.update({
                'hwaccel': self.hw_accel,
            })
        return options

    def connect(self) -> bool:
        """Try to open the stream and initialize the video stream."""
        try:
            # Open the stream with av.open; timeout and buffer size can be set here
            self._container = av.open(
                self.stream_url,
                options={
                    'rtsp_flags': 'prefer_tcp',  # TCP is recommended for RTSP: more stable
                    'buffer_size': str(self.buffer_size),
                    # Timeout in microseconds (on newer FFmpeg builds this RTSP
                    # option may be named 'timeout'; check your build)
                    'stimeout': str(self.timeout),
                },
                timeout=(self.timeout / 1000000)  # convert to seconds
            )

            # Find the video stream
            self._video_stream = next(s for s in self._container.streams if s.type == 'video')

            # Keyframe-only strategy
            if self.keyframe_only:
                # Skip non-keyframes
                self._video_stream.codec_context.skip_frame = 'NONKEY'

            # Create the decoder iterator
            self._current_iterator = self._container.decode(self._video_stream)

            logger.info(f"Successfully connected to {self.stream_url}")
            self._retry_count = 0  # reset the retry counter
            return True
        except Exception as e:
            logger.error(f"Failed to connect to {self.stream_url}: {e}")
            return False

    def _decode_loop(self):
        """Decode loop running in its own thread."""
        while self._running:
            try:
                # Get the next decoded frame from the iterator
                frame = next(self._current_iterator)

                # Frame statistics
                self._frame_count += 1
                if frame.key_frame:
                    self._keyframe_count += 1

                # Convert the frame to a BGR NumPy array
                frame_array = frame.to_ndarray(format='bgr24')
                frame_array = add_timestamp(frame_array)

                with self.lock:
                    self._last_frame = frame_array
                    self._last_pts = frame.pts

            except (av.AVError, StopIteration, ValueError) as e:
                if not self._running:
                    break  # stop() was called; do not reconnect
                logger.warning(f"Decoding error on {self.stream_url}: {e}. Attempting reconnect...")
                if self._container:
                    self._container.close()
                if not self._attempt_reconnect():
                    break  # retries exhausted (or stopped): leave the loop
            except Exception as e:
                logger.error(f"Unexpected error in decode loop for {self.stream_url}: {e}")
                with self.lock:
                    self._last_frame = None
                time.sleep(1)  # avoid a tight loop on unexpected errors

    def _attempt_reconnect(self) -> bool:
        """Reconnect according to the retry policy.

        Keeps trying until connect() succeeds, max_retries is reached,
        or the decoder is stopped.
        """
        while self._running:
            if self.max_retries > 0 and self._retry_count >= self.max_retries:
                logger.error(f"Reached max retries ({self.max_retries}) for {self.stream_url}. Giving up.")
                return False

            self._retry_count += 1
            logger.info(f"Attempting to reconnect ({self._retry_count}) in {self.reconnect_delay} seconds...")
            time.sleep(self.reconnect_delay)

            if self.connect():
                return True
        return False

    def start(self):
        """Start the decode thread."""
        if self._running:
            logger.warning(f"Decoder for {self.stream_url} is already running.")
            return

        if self._container is None:
            if not self.connect():
                logger.error(f"Failed to start because connection failed for {self.stream_url}.")
                return

        self._running = True
        self._decode_thread = threading.Thread(target=self._decode_loop, daemon=True)
        self._decode_thread.start()
        logger.info(f"Started decoder thread for {self.stream_url}.")

    def get_frame(self) -> Optional[np.ndarray]:
        """Return the latest video frame (NumPy array)."""
        with self.lock:
            return self._last_frame.copy() if self._last_frame is not None else None

    def get_stats(self) -> Dict[str, Any]:
        """Return decoding statistics."""
        return {
            "frame_count": self._frame_count,
            "keyframe_count": self._keyframe_count,
            "retry_count": self._retry_count
        }

    def update_settings(self, **kwargs):
        """Update parameters at run time."""
        allowed_params = ['buffer_size', 'timeout', 'reconnect_delay', 'max_retries', 'keyframe_only']
        need_restart = False

        for key, value in kwargs.items():
            if key in allowed_params and hasattr(self, key):
                old_value = getattr(self, key)
                setattr(self, key, value)
                logger.info(f"Updated {key} from {old_value} to {value} for {self.stream_url}.")

                # Keyframe, timeout, and buffer settings only take effect on reconnect
                if key in ['keyframe_only', 'timeout', 'buffer_size']:
                    need_restart = True

        # Restart if needed and currently running
        if need_restart and self._running:
            self.restart()

    def stop(self):
        """Stop decoding and release resources."""
        self._running = False
        if self._decode_thread and self._decode_thread.is_alive():
            self._decode_thread.join(timeout=2.0)
        if self._container:
            self._container.close()
            self._container = None  # force a fresh connect() on the next start()
        logger.info(f"Stopped decoder for {self.stream_url}.")

    def restart(self):
        """Restart the stream."""
        self.stop()
        time.sleep(1)
        self._container = None
        self._video_stream = None
        self.start()


class MultiStreamDisplay:
    def __init__(self, stream_urls):
        self.stream_urls = stream_urls
        self.streams = []
        self.stopped = False
        self.latest_frames = [None] * len(stream_urls)
        self.lock = threading.Lock()

    def start(self):
        # Create and start all video streams
        for url in self.stream_urls:
            stream = VideoStreamDecoder(url)
            stream.start()
            self.streams.append(stream)

        # Start the frame-fetching thread
        threading.Thread(target=self.update_frames, args=()).start()
        # Start the display thread
        threading.Thread(target=self.display, args=()).start()

    def update_frames(self):
        while not self.stopped:
            for i, stream in enumerate(self.streams):
                frame = stream.get_frame()
                if frame is not None:
                    with self.lock:
                        self.latest_frames[i] = frame
            time.sleep(0.01)  # short sleep to avoid hogging the CPU

    def display(self):
        # One large canvas holds all streams.
        # Layout: 4 on top, 4 below, each 856x480
        canvas_width = 856 * 4
        canvas_height = 480 * 2
        canvas = np.zeros((canvas_height, canvas_width, 3), dtype=np.uint8)

        while not self.stopped:
            with self.lock:
                # Copy the current frames so they are not modified while being drawn
                frames = [frame.copy() if frame is not None else None
                          for frame in self.latest_frames]

            # Clear the canvas
            canvas.fill(0)

            # Place every frame at its position on the canvas
            for i, frame in enumerate(frames):
                if frame is not None:
                    if i < 4:  # top row
                        y_offset = 0
                        x_offset = i * 856
                    else:  # bottom row
                        y_offset = 480
                        x_offset = (i - 4) * 856

                    # Streams that are not already 856x480 are resized to fit the tile
                    if frame.shape[0] != 480 or frame.shape[1] != 856:
                        frame = cv2.resize(frame, (856, 480))

                    # Make sure the tile stays inside the canvas
                    if y_offset + 480 <= canvas_height and x_offset + 856 <= canvas_width:
                        canvas[y_offset:y_offset + 480, x_offset:x_offset + 856] = frame

            # Show the canvas
            cv2.imshow("Multi-Stream Display", canvas)

            # Press 'q' to quit
            if cv2.waitKey(40) & 0xFF == ord('q'):
                self.stop()
                break

    def stop(self):
        self.stopped = True
        for stream in self.streams:
            stream.stop()
        cv2.destroyAllWindows()


if __name__ == "__main__":
    # Assume 8 video stream URLs
    stream_urls = [
        "rtsp://localhost:5001/stream_1",
        "rtsp://localhost:5001/stream_2",
        "rtsp://localhost:5001/stream_3",
        "rtsp://localhost:5001/stream_4",
        "rtsp://localhost:5001/stream_1",
        "rtsp://localhost:5001/stream_2",
        "rtsp://localhost:5001/stream_3",
        "rtsp://localhost:5001/stream_4"
    ]

    display = MultiStreamDisplay(stream_urls)
    display.start()
```

2.3.2 OpenCV implementation

```python
import cv2
import threading
import numpy as np
from queue import Queue
import time
import datetime


def add_timestamp(frame_array):
    # Get the current time
    current_time = datetime.datetime.now()
    # Format the time string
    time_str = current_time.strftime("%Y-%m-%d %H:%M:%S")

    # Text parameters
    font = cv2.FONT_HERSHEY_SIMPLEX
    font_scale = 0.7
    font_color = (255, 255, 255)  # white text
    font_thickness = 2
    background_color = (0, 0, 0)  # black background

    # Measure the text
    (text_width, text_height), baseline = cv2.getTextSize(time_str, font, font_scale, font_thickness)

    # Text position (top-right corner)
    margin = 10
    text_x = frame_array.shape[1] - text_width - margin
    text_y = text_height + margin

    # Semi-transparent background rectangle to improve text readability
    bg_rect = np.zeros((text_height + 2 * margin, text_width + 2 * margin, 3), dtype=np.uint8)
    bg_rect[:, :] = background_color

    # Where the background rectangle goes on the frame
    alpha = 0.6  # blend weight of the background
    y1, y2 = text_y - text_height - margin, text_y + margin
    x1, x2 = text_x - margin, text_x + text_width + margin

    # Clamp the coordinates to the frame
    y1, y2 = max(0, y1), min(frame_array.shape[0], y2)
    x1, x2 = max(0, x1), min(frame_array.shape[1], x2)

    # Blend the background rectangle into the frame
    if y2 > y1 and x2 > x1:
        roi = frame_array[y1:y2, x1:x2]
        bg_resized = cv2.resize(bg_rect, (x2 - x1, y2 - y1))
        blended = cv2.addWeighted(roi, 1 - alpha, bg_resized, alpha, 0)
        frame_array[y1:y2, x1:x2] = blended

    # Draw the time text
    cv2.putText(
        frame_array,
        time_str,
        (text_x, text_y),
        font,
        font_scale,
        font_color,
        font_thickness,
        cv2.LINE_AA
    )
    return frame_array


class VideoStreamDecoder:
    def __init__(self, stream_url, buffer_size=128):
        self.stream_url = stream_url
        self.cap = cv2.VideoCapture(stream_url)
        self.stopped = False
        self.frame_queue = Queue(maxsize=buffer_size)
        self.lock = threading.Lock()
        self._last_frame = None
        print("start video stream")

    def start(self):
        threading.Thread(target=self.update, args=()).start()
        return self

    def update(self):
        while not self.stopped:
            if not self.frame_queue.full():
                ret, frame = self.cap.read()
                if ret:
                    # Resize the frame to 856x480
                    # frame = cv2.resize(frame, (856, 480))
                    with self.lock:
                        self._last_frame = add_timestamp(frame)
                # else:
                #     # If the read fails, try to reconnect
                #     self.cap.release()
                #     time.sleep(2)  # wait 2 seconds before reconnecting
                #     self.cap = cv2.VideoCapture(self.stream_url)
            else:
                time.sleep(0.01)  # wait briefly while the queue is full

    def get_frame(self):
        """Return the latest video frame (NumPy array)."""
        with self.lock:
            return self._last_frame.copy() if self._last_frame is not None else None

    def stop(self):
        self.stopped = True
        self.cap.release()


class MultiStreamDisplay:
    def __init__(self, stream_urls):
        self.stream_urls = stream_urls
        self.streams = []
        self.stopped = False
        self.latest_frames = [None] * len(stream_urls)
        self.lock = threading.Lock()

    def start(self):
        # Create and start all video streams
        for url in self.stream_urls:
            stream = VideoStreamDecoder(url)
            stream.start()
            self.streams.append(stream)

        # Start the frame-fetching thread
        threading.Thread(target=self.update_frames, args=()).start()
        # Start the display thread
        threading.Thread(target=self.display, args=()).start()

    def update_frames(self):
        while not self.stopped:
            for i, stream in enumerate(self.streams):
                frame = stream.get_frame()
                if frame is not None:
                    with self.lock:
                        self.latest_frames[i] = frame
                        # print("get stream")
            time.sleep(0.01)  # short sleep to avoid hogging the CPU

    def display(self):
        # One large canvas holds all streams.
        # Layout: 4 on top, 4 below, each 856x480
        canvas_width = 856 * 4
        canvas_height = 480 * 2
        canvas = np.zeros((canvas_height, canvas_width, 3), dtype=np.uint8)

        while not self.stopped:
            with self.lock:
                # Copy the current frames so they are not modified while being drawn
                frames = [frame.copy() if frame is not None else None
                          for frame in self.latest_frames]

            # Clear the canvas
            canvas.fill(0)

            # Place every frame at its position on the canvas
            for i, frame in enumerate(frames):
                if frame is not None:
                    if i < 4:  # top row
                        y_offset = 0
                        x_offset = i * 856
                    else:  # bottom row
                        y_offset = 480
                        x_offset = (i - 4) * 856

                    # Streams that are not already 856x480 are resized to fit the tile
                    if frame.shape[0] != 480 or frame.shape[1] != 856:
                        frame = cv2.resize(frame, (856, 480))

                    # Make sure the tile stays inside the canvas
                    if y_offset + 480 <= canvas_height and x_offset + 856 <= canvas_width:
                        canvas[y_offset:y_offset + 480, x_offset:x_offset + 856] = frame

            # Show the canvas
            cv2.imshow("Multi-Stream Display", canvas)

            # Press 'q' to quit
            if cv2.waitKey(40) & 0xFF == ord('q'):
                self.stop()
                break

    def stop(self):
        self.stopped = True
        for stream in self.streams:
            stream.stop()
        cv2.destroyAllWindows()


if __name__ == "__main__":
    # Assume 8 video stream URLs
    stream_urls = [
        "rtsp://localhost:5001/stream_1",
        "rtsp://localhost:5001/stream_2",
        "rtsp://localhost:5001/stream_3",
        "rtsp://localhost:5001/stream_4",
        "rtsp://localhost:5001/stream_1",
        "rtsp://localhost:5001/stream_2",
        "rtsp://localhost:5001/stream_3",
        "rtsp://localhost:5001/stream_4"
    ]

    display = MultiStreamDisplay(stream_urls)
    display.start()
```

2.4 Section summary

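- Our comparison shows that:

  - Under the same conditions, the PyAV implementation uses far less memory than the OpenCV one (128 MB versus 287 MB at 8 streams), while CPU load is similar but slightly lower (both rely on FFmpeg software decoding underneath).

  - PyAV offers fairly fine-grained keyframe control. With an I-frame interval of 50, CPU usage at 8 streams is about half that of full-frame decoding (6%-8% versus 12%-15%). For monitoring scenarios that do not need continuous frames, keyframe control becomes especially important.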
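A quick calculation shows what keyframe-only decoding means for the number of frames delivered. The sketch below assumes one keyframe at the start of every group of 50 frames and a frame rate of 25 fps; the frame rate is an assumption for illustration, not a figure from the tests. Only 2% of frames are decoded, yet measured CPU usage drops by roughly half rather than by 98%, which suggests that work other than frame decoding (network reads, demuxing, display) accounts for much of the remaining load.

```python
def keyframes_decoded(total_frames: int, gop: int) -> int:
    """Frames decoded in keyframe-only mode, assuming one keyframe starts every GOP."""
    return sum(1 for i in range(total_frames) if i % gop == 0)


FPS = 25                      # assumed frame rate
GOP = 50                      # I-frame interval used in the comparison

total = FPS * 60              # frames received in one minute
kept = keyframes_decoded(total, GOP)
fraction = kept / total       # share of frames that are actually decoded
refresh_interval_s = GOP / FPS  # seconds between two displayed frames

print(f"{kept} of {total} frames decoded ({fraction:.0%}), "
      f"one new picture every {refresh_interval_s:.0f} s")
```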

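Part 3: Management module design

To manage multiple video streams effectively, we create a StreamManager class that is responsible for:

- Creating and managing multiple VideoStreamDecoder instances
- Providing a unified start/stop interface
- Providing an interface to fetch frames from all streams
- Monitoring the running state of each stream

Below is the implementation based on the StreamManager class and PyAV decoding, together with a usage example: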

```python
import av
import cv2
import time
import logging
import threading
import numpy as np
from typing import Optional, Any, Dict, List, Tuple
import datetime

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("StreamDecoder")


def add_timestamp(frame_array):
    # Get the current time
    current_time = datetime.datetime.now()
    # Format the time string
    time_str = current_time.strftime("%Y-%m-%d %H:%M:%S")

    # Text parameters
    font = cv2.FONT_HERSHEY_SIMPLEX
    font_scale = 0.7
    font_color = (255, 255, 255)  # white text
    font_thickness = 2
    background_color = (0, 0, 0)  # black background

    # Measure the text
    (text_width, text_height), baseline = cv2.getTextSize(time_str, font, font_scale, font_thickness)

    # Text position (top-right corner)
    margin = 10
    text_x = frame_array.shape[1] - text_width - margin
    text_y = text_height + margin

    # Semi-transparent background rectangle to improve text readability
    bg_rect = np.zeros((text_height + 2 * margin, text_width + 2 * margin, 3), dtype=np.uint8)
    bg_rect[:, :] = background_color

    # Where the background rectangle goes on the frame
    alpha = 0.6  # blend weight of the background
    y1, y2 = text_y - text_height - margin, text_y + margin
    x1, x2 = text_x - margin, text_x + text_width + margin

    # Clamp the coordinates to the frame
    y1, y2 = max(0, y1), min(frame_array.shape[0], y2)
    x1, x2 = max(0, x1), min(frame_array.shape[1], x2)

    # Blend the background rectangle into the frame
    if y2 > y1 and x2 > x1:
        roi = frame_array[y1:y2, x1:x2]
        bg_resized = cv2.resize(bg_rect, (x2 - x1, y2 - y1))
        blended = cv2.addWeighted(roi, 1 - alpha, bg_resized, alpha, 0)
        frame_array[y1:y2, x1:x2] = blended

    # Draw the time text
    cv2.putText(
        frame_array,
        time_str,
        (text_x, text_y),
        font,
        font_scale,
        font_color,
        font_thickness,
        cv2.LINE_AA
    )
    return frame_array


class VideoStreamDecoder:
    def __init__(self, stream_url: str, buffer_size: int = 102400,
                 hw_accel: Optional[str] = None, timeout: int = 5000000,
                 reconnect_delay: int = 5, max_retries: int = -1,
                 keyframe_only: bool = False):
        self.stream_url = stream_url
        self.buffer_size = buffer_size
        self.hw_accel = hw_accel
        self.timeout = timeout                  # microseconds
        self.reconnect_delay = reconnect_delay  # seconds
        self.max_retries = max_retries          # <= 0 means retry forever
        self.keyframe_only = keyframe_only

        self._retry_count = 0
        self._container: Optional[av.container.InputContainer] = None
        self._video_stream: Optional[av.video.stream.VideoStream] = None
        self._current_iterator: Optional[Any] = None
        self._last_frame: Optional[np.ndarray] = None
        # self._last_pts: int = -1
        self._frame_count: int = 0
        self._keyframe_count: int = 0
        self._running = False
        self._decode_thread: Optional[threading.Thread] = None

        # Decoder options (collected here, but not passed to av.open:
        # this example uses software decoding only)
        self._options = self._get_codec_options()
        self.lock = threading.Lock()

    def _get_codec_options(self) -> Dict:
        """Build decoder options according to the hardware acceleration type."""
        options = {'threads': 'auto'}
        if self.hw_accel:
            # Hardware acceleration settings; adjust to your needs
            options.update({
                'hwaccel': self.hw_accel,
            })
        return options

    def connect(self) -> bool:
        """Try to open the stream and initialize the video stream."""
        try:
            # Open the stream with av.open; timeout and buffer size can be set here
            self._container = av.open(
                self.stream_url,
                options={
                    'rtsp_flags': 'prefer_tcp',  # TCP is recommended for RTSP: more stable
                    'buffer_size': str(self.buffer_size),
                    # Timeout in microseconds (on newer FFmpeg builds this RTSP
                    # option may be named 'timeout'; check your build)
                    'stimeout': str(self.timeout),
                },
                timeout=(self.timeout / 1000000)  # convert to seconds
            )

            # Find the video stream
            self._video_stream = next(s for s in self._container.streams if s.type == 'video')

            # Keyframe-only strategy
            if self.keyframe_only:
                # Skip non-keyframes
                self._video_stream.codec_context.skip_frame = 'NONKEY'

            # Create the decoder iterator
            self._current_iterator = self._container.decode(self._video_stream)

            logger.info(f"Successfully connected to {self.stream_url}")
            self._retry_count = 0  # reset the retry counter
            return True
        except Exception as e:
            logger.error(f"Failed to connect to {self.stream_url}: {e}")
            return False

    def _decode_loop(self):
        """Decode loop running in its own thread."""
        while self._running:
            try:
                # Get the next decoded frame from the iterator
                frame = next(self._current_iterator)

                # Frame statistics
                self._frame_count += 1
                if frame.key_frame:
                    self._keyframe_count += 1

                # Convert the frame to a BGR NumPy array
                frame_array = frame.to_ndarray(format='bgr24')
                frame_array = add_timestamp(frame_array)

                with self.lock:
                    self._last_frame = frame_array
                    # self._last_pts = frame.pts

            except (av.AVError, StopIteration, ValueError) as e:
                if not self._running:
                    break  # stop() was called; do not reconnect
                logger.warning(f"Decoding error on {self.stream_url}: {e}. Attempting reconnect...")
                if self._container:
                    self._container.close()
                if not self._attempt_reconnect():
                    break  # retries exhausted (or stopped): leave the loop
            except Exception as e:
                logger.error(f"Unexpected error in decode loop for {self.stream_url}: {e}")
                with self.lock:
                    self._last_frame = None
                time.sleep(1)  # avoid a tight loop on unexpected errors

    def _attempt_reconnect(self) -> bool:
        """Reconnect according to the retry policy.

        Keeps trying until connect() succeeds, max_retries is reached,
        or the decoder is stopped.
        """
        while self._running:
            if self.max_retries > 0 and self._retry_count >= self.max_retries:
                logger.error(f"Reached max retries ({self.max_retries}) for {self.stream_url}. Giving up.")
                return False

            self._retry_count += 1
            logger.info(f"Attempting to reconnect ({self._retry_count}) in {self.reconnect_delay} seconds...")
            time.sleep(self.reconnect_delay)

            if self.connect():
                return True
        return False

    def start(self):
        """Start the decode thread."""
        if self._running:
            logger.warning(f"Decoder for {self.stream_url} is already running.")
            return

        if self._container is None:
            if not self.connect():
                logger.error(f"Failed to start because connection failed for {self.stream_url}.")
                return

        self._running = True
        self._decode_thread = threading.Thread(target=self._decode_loop, daemon=True)
        self._decode_thread.start()
        logger.info(f"Started decoder thread for {self.stream_url}.")

    def get_frame(self) -> Optional[np.ndarray]:
        """Return the latest video frame (NumPy array)."""
        with self.lock:
            return self._last_frame.copy() if self._last_frame is not None else None

    def get_stats(self) -> Dict[str, Any]:
        """Return decoding statistics."""
        return {
            "frame_count": self._frame_count,
            "keyframe_count": self._keyframe_count,
            "retry_count": self._retry_count
        }

    def update_settings(self, **kwargs):
        """Update parameters at run time."""
        allowed_params = ['buffer_size', 'timeout', 'reconnect_delay', 'max_retries', 'keyframe_only']
        need_restart = False

        for key, value in kwargs.items():
            if key in allowed_params and hasattr(self, key):
                old_value = getattr(self, key)
                setattr(self, key, value)
                logger.info(f"Updated {key} from {old_value} to {value} for {self.stream_url}.")

                # Keyframe, timeout, and buffer settings only take effect on reconnect
                if key in ['keyframe_only', 'timeout', 'buffer_size']:
                    need_restart = True

        # Restart if needed and currently running
        if need_restart and self._running:
            self.restart()

    def stop(self):
        """Stop decoding and release resources."""
        self._running = False
        if self._decode_thread and self._decode_thread.is_alive():
            self._decode_thread.join(timeout=2.0)
        if self._container:
            self._container.close()
            self._container = None  # force a fresh connect() on the next start()
        logger.info(f"Stopped decoder for {self.stream_url}.")

    def restart(self):
        """Restart the stream."""
        self.stop()
        time.sleep(1)
        self._container = None
        self._video_stream = None
        self.start()


class MultiStreamDisplay:
    def __init__(self, stream_urls):
        self.stream_urls = stream_urls
        self.streams = []
        self.stopped = False
        self.latest_frames = [None] * len(stream_urls)
        self.lock = threading.Lock()

    def start(self):
        # Create and start all video streams
        for url in self.stream_urls:
            stream = VideoStreamDecoder(url, keyframe_only=False)
            stream.start()
            self.streams.append(stream)

        # Start the frame-fetching thread
        threading.Thread(target=self.update_frames, args=()).start()
        # Start the display thread
        threading.Thread(target=self.display, args=()).start()

    def update_frames(self):
        while not self.stopped:
            for i, stream in enumerate(self.streams):
                frame = stream.get_frame()
                if frame is not None:
                    with self.lock:
                        self.latest_frames[i] = frame
            time.sleep(0.01)  # short sleep to avoid hogging the CPU

    def display(self):
        # One large canvas holds all streams.
        # Layout: 4 on top, 4 below, each 856x480
        canvas_width = 856 * 4
        canvas_height = 480 * 2
        canvas = np.zeros((canvas_height, canvas_width, 3), dtype=np.uint8)

        while not self.stopped:
            with self.lock:
                # Copy the current frames so they are not modified while being drawn
                frames = [frame.copy() if frame is not None else None
                          for frame in self.latest_frames]

            # Clear the canvas
            canvas.fill(0)

            # Place every frame at its position on the canvas
            for i, frame in enumerate(frames):
                if frame is not None:
                    if i < 4:  # top row
                        y_offset = 0
                        x_offset = i * 856
                    else:  # bottom row
                        y_offset = 480
                        x_offset = (i - 4) * 856

                    # Streams that are not already 856x480 are resized to fit the tile
                    if frame.shape[0] != 480 or frame.shape[1] != 856:
                        frame = cv2.resize(frame, (856, 480))

                    # Make sure the tile stays inside the canvas
                    if y_offset + 480 <= canvas_height and x_offset + 856 <= canvas_width:
                        canvas[y_offset:y_offset + 480, x_offset:x_offset + 856] = frame

            # Show the canvas
            cv2.imshow("Multi-Stream Display", canvas)

            # Press 'q' to quit
            if cv2.waitKey(40) & 0xFF == ord('q'):
                self.stop()
                break

    def stop(self):
        self.stopped = True
        for stream in self.streams:
            stream.stop()
        cv2.destroyAllWindows()


class StreamManager:
    def __init__(self):
        self.decoders: Dict[str, VideoStreamDecoder] = {}
        self.lock = threading.RLock()

    def add_stream(self, stream_id: str, stream_url: str, **kwargs) -> bool:
        """Add one video stream."""
        with self.lock:
            if stream_id in self.decoders:
                logger.warning(f"Stream ID {stream_id} already exists.")
                return False

            decoder = VideoStreamDecoder(stream_url, **kwargs)
            self.decoders[stream_id] = decoder
            logger.info(f"Added stream {stream_id} with URL {stream_url}")
            return True

    def remove_stream(self, stream_id: str) -> bool:
        """Remove one video stream."""
        with self.lock:
            if stream_id not in self.decoders:
                logger.warning(f"Stream ID {stream_id} does not exist.")
                return False

            decoder = self.decoders[stream_id]
            decoder.stop()
            del self.decoders[stream_id]
            logger.info(f"Removed stream {stream_id}")
            return True

    def start_all(self):
        """Start all video streams."""
        with self.lock:
            for stream_id, decoder in self.decoders.items():
                try:
                    decoder.start()
                except Exception as e:
                    logger.error(f"Failed to start stream {stream_id}: {e}")

    def stop_all(self):
        """Stop all video streams."""
        with self.lock:
            for stream_id, decoder in self.decoders.items():
                try:
                    decoder.stop()
                except Exception as e:
                    logger.error(f"Failed to stop stream {stream_id}: {e}")

    def get_frame(self, stream_id: str) -> Optional[np.ndarray]:
        """Return the current frame of the given stream."""
        with self.lock:
            if stream_id not in self.decoders:
                return None
            return self.decoders[stream_id].get_frame()

    def get_all_frames(self) -> Dict[str, Optional[np.ndarray]]:
        """Return the current frame of every stream."""
        frames = {}
        with self.lock:
            for stream_id, decoder in self.decoders.items():
                frames[stream_id] = decoder.get_frame()
        return frames

    def update_stream_settings(self, stream_id: str, **kwargs):
        """Update the settings of the given stream."""
        with self.lock:
            if stream_id not in self.decoders:
                logger.warning(f"Stream ID {stream_id} does not exist.")
                return
            self.decoders[stream_id].update_settings(**kwargs)

    def get_stream_stats(self, stream_id: str) -> Optional[Dict[str, Any]]:
        """Return the statistics of the given stream."""
        with self.lock:
            if stream_id not in self.decoders:
                return None
            return self.decoders[stream_id].get_stats()

    def get_all_stats(self) -> Dict[str, Dict[str, Any]]:
        """Return the statistics of every stream."""
        stats = {}
        with self.lock:
            for stream_id, decoder in self.decoders.items():
                stats[stream_id] = decoder.get_stats()
        return stats

    def get_active_streams(self) -> List[str]:
        """Return the list of currently registered stream IDs."""
        with self.lock:
            return list(self.decoders.keys())


# Usage example
if __name__ == "__main__":
    # Create the stream manager
    manager = StreamManager()

    # Add several streams
    streams = {
        "cam1": "rtsp://localhost:5001/stream_1",
        "cam2": "rtsp://localhost:5001/stream_2",
        "cam3": "rtsp://localhost:5001/stream_3",
        "cam4": "rtsp://localhost:5001/stream_4",
    }

    for stream_id, url in streams.items():
        manager.add_stream(
            stream_id,
            url,
            keyframe_only=True,  # decode keyframes only
            timeout=3000000,     # 3-second timeout
            reconnect_delay=3    # 3-second reconnect delay
        )

    # Start all streams
    manager.start_all()

    last_stats_time = time.time()
    try:
        while True:
            # Get the current frame of every stream
            frames = manager.get_all_frames()

            # Process each frame
            # for stream_id, frame in frames.items():
            #     if frame is not None:
            #         # Process the frame here, e.g. display or inference
            #         cv2.imshow(stream_id, frame)

            # Press 'q' to quit (key presses are only received while an
            # OpenCV window is open, i.e. once imshow above is enabled)
            if cv2.waitKey(1) & 0xFF == ord('q'):
                break

            # Print statistics every 10 seconds
            now = time.time()
            if now - last_stats_time >= 10:
                stats = manager.get_all_stats()
                print("Stream stats:", stats)
                last_stats_time = now

            time.sleep(0.01)  # avoid a busy loop when no window is open

    except KeyboardInterrupt:
        print("Interrupted by user")
    finally:
        # Stop all streams
        manager.stop_all()
        cv2.destroyAllWindows()
```

Part 4: Complete example: video stream display based on PyAV

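Now that the full pull, decode, and display workflow is familiar, a practical case helps consolidate it. We will design a real-time video display system with the following basic features:

- A basic display interface supporting up to 8 real-time video streams.

- Dynamic adding and closing of video streams.

- Per-channel parameter changes, such as turning the keyframe strategy on or off.

- A network service interface, so that the three features above can be driven over HTTP.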
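Supporting up to 8 channels that can be added and closed at run time requires a way to map each channel to a tile on the display canvas. The sketch below shows one possible approach, reusing the 856x480 tile size and the 4-per-row layout from the display code earlier in the article. The helper names `tile_offset` and `free_slot` are hypothetical and not part of the article's program.

```python
from typing import Optional, Set, Tuple

TILE_W, TILE_H = 856, 480   # tile size used by the display code in this article
COLS, MAX_STREAMS = 4, 8    # 4 tiles per row, 2 rows


def tile_offset(index: int) -> Tuple[int, int]:
    """Return the (x, y) pixel offset of a tile on the canvas."""
    if not 0 <= index < MAX_STREAMS:
        raise IndexError(f"tile index {index} outside 0..{MAX_STREAMS - 1}")
    row, col = divmod(index, COLS)
    return col * TILE_W, row * TILE_H


def free_slot(used: Set[int]) -> Optional[int]:
    """Return the lowest unused tile index, or None when all 8 tiles are taken."""
    for index in range(MAX_STREAMS):
        if index not in used:
            return index
    return None


used_slots = {0, 1, 3}            # tile 2 was freed when its stream was closed
new_slot = free_slot(used_slots)  # a newly added stream reuses the gap
print(new_slot, tile_offset(new_slot))
```

Reusing the lowest free tile keeps the remaining streams in place when one channel is closed and another is added.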

4.1 Program implementation

python

"""

fastapi 安装:

pip install fastapi

pip install "uvicorn[standard]"

"""

import av

import cv2

import time

import logging

import threading

import numpy as np

from typing import Optional, Any, Dict, List, Tuple

import datetime

from fastapi import FastAPI, HTTPException

from fastapi.responses import JSONResponse, StreamingResponse

from pydantic import BaseModel

import uvicorn

import json

import queue

import asyncio

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger("StreamDecoder")

# 全局变量

app = FastAPI(title="多路视频流管理系统")

stream_manager = None

display_thread = None

stopped = False

frame_queue = queue.Queue(maxsize=10)

active_streams = set()

# 数据模型

class StreamConfig(BaseModel):

stream_url: str

buffer_size: int = 102400

hw_accel: Optional[str] = None

timeout: int = 5000000

reconnect_delay: int = 5

max_retries: int = -1

keyframe_only: bool = False

class StreamUpdate(BaseModel):

buffer_size: Optional[int] = None

hw_accel: Optional[str] = None

timeout: Optional[int] = None

reconnect_delay: Optional[int] = None

max_retries: Optional[int] = None

keyframe_only: Optional[bool] = None

def add_timestamp(frame_array):

"""添加时间戳到视频帧"""

current_time = datetime.datetime.now()

time_str = current_time.strftime("%Y-%m-%d %H:%M:%S")

font = cv2.FONT_HERSHEY_SIMPLEX

font_scale = 0.7

font_color = (255, 255, 255)

font_thickness = 2

background_color = (0, 0, 0)

(text_width, text_height), baseline = cv2.getTextSize(time_str, font, font_scale, font_thickness)

margin = 10

text_x = frame_array.shape[1] - text_width - margin

text_y = text_height + margin

bg_rect = np.zeros((text_height + 2 * margin, text_width + 2 * margin, 3), dtype=np.uint8)

bg_rect[:, :] = background_color

alpha = 0.6

y1, y2 = text_y - text_height - margin, text_y + margin

x1, x2 = text_x - margin, text_x + text_width + margin

y1, y2 = max(0, y1), min(frame_array.shape[0], y2)

x1, x2 = max(0, x1), min(frame_array.shape[1], x2)

if y2 > y1 and x2 > x1:

roi = frame_array[y1:y2, x1:x2]

bg_resized = cv2.resize(bg_rect, (x2 - x1, y2 - y1))

blended = cv2.addWeighted(roi, 1 - alpha, bg_resized, alpha, 0)

frame_array[y1:y2, x1:x2] = blended

cv2.putText(

frame_array,

time_str,

(text_x, text_y),

font,

font_scale,

font_color,

font_thickness,

cv2.LINE_AA

)

return frame_array

class VideoStreamDecoder:
    def __init__(self, stream_url: str, buffer_size: int = 102400,
                 hw_accel: Optional[str] = None, timeout: int = 5000000,
                 reconnect_delay: int = 5, max_retries: int = -1,
                 keyframe_only: bool = False):
        self.stream_url = stream_url
        self.buffer_size = buffer_size
        self.hw_accel = hw_accel
        self.timeout = timeout
        self.reconnect_delay = reconnect_delay
        self.max_retries = max_retries
        self.keyframe_only = keyframe_only
        self._retry_count = 0
        self._connection_status = "disconnected"  # disconnected, connecting, connected, error
        self._container: Optional[av.container.InputContainer] = None
        self._video_stream: Optional[av.video.stream.VideoStream] = None
        self._current_iterator: Optional[Any] = None
        self._last_frame: Optional[np.ndarray] = None
        self._frame_count: int = 0
        self._keyframe_count: int = 0
        self._running = False
        self._decode_thread: Optional[threading.Thread] = None
        self._options = self._get_codec_options()
        self.lock = threading.Lock()

    def _get_codec_options(self) -> Dict:
        """Build decoder options based on the hardware acceleration type."""
        options = {'threads': 'auto'}
        if self.hw_accel:
            options.update({
                'hwaccel': self.hw_accel,
            })
        return options

    def connect(self) -> bool:
        """Try to open the stream and initialize the video stream."""
        try:
            self._connection_status = "connecting"
            self._container = av.open(
                self.stream_url,
                options={
                    'rtsp_flags': 'prefer_tcp',
                    'buffer_size': str(self.buffer_size),
                    # Socket timeout in microseconds. Depending on the FFmpeg
                    # build, this option may be named 'timeout' instead.
                    'stimeout': str(self.timeout),
                },
                # PyAV expects seconds here, so convert from microseconds
                timeout=(self.timeout / 1000000)
            )
            self._video_stream = next(s for s in self._container.streams if s.type == 'video')
            if self.keyframe_only:
                # Let the decoder skip every frame that is not a keyframe
                self._video_stream.codec_context.skip_frame = 'NONKEY'
            self._current_iterator = self._container.decode(self._video_stream)
            logger.info(f"Successfully connected to {self.stream_url}")
            self._retry_count = 0
            self._connection_status = "connected"
            return True
        except Exception as e:
            logger.error(f"Failed to connect to {self.stream_url}: {e}")
            self._connection_status = f"error: {str(e)}"
            return False

    def _decode_loop(self):
        """Decode loop; runs in its own thread."""
        while self._running:
            try:
                frame = next(self._current_iterator)
                self._frame_count += 1
                if frame.key_frame:
                    self._keyframe_count += 1
                frame_array = frame.to_ndarray(format='bgr24')
                frame_array = add_timestamp(frame_array)
                with self.lock:
                    # Overwrite the single "latest frame" slot; old frames never pile up
                    self._last_frame = frame_array
            except (av.AVError, StopIteration, ValueError) as e:
                logger.warning(f"Decoding error on {self.stream_url}: {e}. Attempting reconnect...")
                self._connection_status = f"reconnecting: {str(e)}"
                if self._container:
                    self._container.close()
                    self._container = None
                if not self._attempt_reconnect():
                    if self._connection_status == "disconnected":
                        # Retry limit reached (or stop() was called): leave the loop
                        # instead of spinning on a closed container.
                        self._running = False
                        break
                    # Otherwise keep retrying on the next iteration
                    continue
            except Exception as e:
                logger.error(f"Unexpected error in decode loop for {self.stream_url}: {e}")
                self._connection_status = f"error: {str(e)}"
                with self.lock:
                    self._last_frame = None
                time.sleep(1)

    def _attempt_reconnect(self) -> bool:
        """Try to reconnect according to the retry policy (max_retries <= 0 means unlimited)."""
        if self.max_retries > 0 and self._retry_count >= self.max_retries:
            logger.error(f"Reached max retries ({self.max_retries}) for {self.stream_url}. Giving up.")
            self._connection_status = "disconnected"
            return False
        self._retry_count += 1
        logger.info(f"Attempting to reconnect ({self._retry_count}) in {self.reconnect_delay} seconds...")
        time.sleep(self.reconnect_delay)
        if self.connect():
            return True
        return False

    def start(self):
        """Start the decoding thread."""
        if self._running:
            logger.warning(f"Decoder for {self.stream_url} is already running.")
            return
        if self._container is None:
            if not self.connect():
                logger.error(f"Failed to start because connection failed for {self.stream_url}.")
                return
        self._running = True
        self._decode_thread = threading.Thread(target=self._decode_loop, daemon=True)
        self._decode_thread.start()
        logger.info(f"Started decoder thread for {self.stream_url}.")

    def get_frame(self) -> Optional[np.ndarray]:
        """Return a copy of the most recent frame (numpy array), or None."""
        with self.lock:
            return self._last_frame.copy() if self._last_frame is not None else None

    def get_stats(self) -> Dict[str, Any]:
        """Return decoding statistics."""
        return {
            "frame_count": self._frame_count,
            "keyframe_count": self._keyframe_count,
            "retry_count": self._retry_count,
            "connection_status": self._connection_status
        }

    def update_settings(self, **kwargs):
        """Update parameters at runtime."""
        allowed_params = ['buffer_size', 'timeout', 'reconnect_delay', 'max_retries', 'keyframe_only']
        need_restart = False
        for key, value in kwargs.items():
            if key in allowed_params and hasattr(self, key):
                old_value = getattr(self, key)
                setattr(self, key, value)
                logger.info(f"Updated {key} from {old_value} to {value} for {self.stream_url}.")
                # These parameters only take effect when the stream is reopened
                if key in ['keyframe_only', 'timeout', 'buffer_size']:
                    need_restart = True
        if need_restart and self._running:
            self.restart()

    def stop(self):
        """Stop decoding and release resources."""
        self._running = False
        self._connection_status = "disconnected"
        if self._decode_thread and self._decode_thread.is_alive():
            self._decode_thread.join(timeout=2.0)
        if self._container:
            self._container.close()
        # Drop the closed container so that a later start() reconnects
        self._container = None
        self._video_stream = None
        logger.info(f"Stopped decoder for {self.stream_url}.")

    def restart(self):
        """Restart the stream."""
        self.stop()
        time.sleep(1)
        self._container = None
        self._video_stream = None
        self.start()

class StreamManager:
    def __init__(self):
        self.decoders: Dict[str, VideoStreamDecoder] = {}
        self.lock = threading.RLock()

    def add_stream(self, stream_id: str, stream_url: str, **kwargs) -> bool:
        """Register a video stream (does not start it)."""
        with self.lock:
            if stream_id in self.decoders:
                logger.warning(f"Stream ID {stream_id} already exists.")
                return False
            decoder = VideoStreamDecoder(stream_url, **kwargs)
            self.decoders[stream_id] = decoder
            logger.info(f"Added stream {stream_id} with URL {stream_url}")
            return True

    def remove_stream(self, stream_id: str) -> bool:
        """Stop and remove a video stream."""
        with self.lock:
            if stream_id not in self.decoders:
                logger.warning(f"Stream ID {stream_id} does not exist.")
                return False
            decoder = self.decoders[stream_id]
            decoder.stop()
            del self.decoders[stream_id]
            logger.info(f"Removed stream {stream_id}")
            return True

    def start_stream(self, stream_id: str) -> bool:
        """Start the given video stream."""
        with self.lock:
            if stream_id not in self.decoders:
                logger.warning(f"Stream ID {stream_id} does not exist.")
                return False
            try:
                self.decoders[stream_id].start()
                return True
            except Exception as e:
                logger.error(f"Failed to start stream {stream_id}: {e}")
                return False

    def stop_stream(self, stream_id: str) -> bool:
        """Stop the given video stream."""
        with self.lock:
            if stream_id not in self.decoders:
                logger.warning(f"Stream ID {stream_id} does not exist.")
                return False
            try:
                self.decoders[stream_id].stop()
                return True
            except Exception as e:
                logger.error(f"Failed to stop stream {stream_id}: {e}")
                return False

    def start_all(self):
        """Start all video streams."""
        with self.lock:
            for stream_id, decoder in self.decoders.items():
                try:
                    decoder.start()
                except Exception as e:
                    logger.error(f"Failed to start stream {stream_id}: {e}")

    def stop_all(self):
        """Stop all video streams."""
        with self.lock:
            for stream_id, decoder in self.decoders.items():
                try:
                    decoder.stop()
                except Exception as e:
                    logger.error(f"Failed to stop stream {stream_id}: {e}")

    def get_frame(self, stream_id: str) -> Optional[np.ndarray]:
        """Return the current frame of the given stream."""
        with self.lock:
            if stream_id not in self.decoders:
                return None
            return self.decoders[stream_id].get_frame()

    def get_all_frames(self) -> Dict[str, Optional[np.ndarray]]:
        """Return the current frame of every stream."""
        frames = {}
        with self.lock:
            for stream_id, decoder in self.decoders.items():
                frames[stream_id] = decoder.get_frame()
        return frames

    def update_stream_settings(self, stream_id: str, **kwargs):
        """Update the settings of the given stream."""
        with self.lock:
            if stream_id not in self.decoders:
                logger.warning(f"Stream ID {stream_id} does not exist.")
                return
            self.decoders[stream_id].update_settings(**kwargs)

    def get_stream_stats(self, stream_id: str) -> Optional[Dict[str, Any]]:
        """Return the statistics of the given stream."""
        with self.lock:
            if stream_id not in self.decoders:
                return None
            return self.decoders[stream_id].get_stats()

    def get_all_stats(self) -> Dict[str, Dict[str, Any]]:
        """Return the statistics of every stream."""
        stats = {}
        with self.lock:
            for stream_id, decoder in self.decoders.items():
                stats[stream_id] = decoder.get_stats()
        return stats

    def get_active_streams(self) -> List[str]:
        """Return the IDs of all registered streams."""
        with self.lock:
            return list(self.decoders.keys())

def display_loop():
    """Display loop; started as a daemon thread by startup_event."""
    global stopped, stream_manager, frame_queue, active_streams
    # Tile size and total canvas resolution
    SMALL_SCREEN_WIDTH = 856
    SMALL_SCREEN_HEIGHT = 480
    TOTAL_WIDTH = SMALL_SCREEN_WIDTH * 4  # 4 columns
    TOTAL_HEIGHT = SMALL_SCREEN_HEIGHT * 2  # 2 rows
    # Create the display window
    cv2.namedWindow("Multi-Stream Display", cv2.WINDOW_NORMAL)
    cv2.resizeWindow("Multi-Stream Display", TOTAL_WIDTH, TOTAL_HEIGHT)
    # Layout: 2x4 grid (2 rows, 4 tiles per row)
    layout = [
        ["cam1", "cam2", "cam3", "cam4"],
        ["cam5", "cam6", "cam7", "cam8"]
    ]

    # Build a placeholder frame that shows the connection status
    def create_status_frame(text, width, height, color=(0, 0, 0)):
        frame = np.zeros((height, width, 3), dtype=np.uint8)
        frame[:] = color
        font = cv2.FONT_HERSHEY_SIMPLEX
        font_scale = min(width, height) / 800  # scale the font with the tile size
        text_size = cv2.getTextSize(text, font, font_scale, 2)[0]
        text_x = (frame.shape[1] - text_size[0]) // 2
        text_y = (frame.shape[0] + text_size[1]) // 2
        cv2.putText(frame, text, (text_x, text_y), font, font_scale, (255, 255, 255), 2, cv2.LINE_AA)
        return frame

    while not stopped:
        try:
            # Create the canvas
            canvas = np.zeros((TOTAL_HEIGHT, TOTAL_WIDTH, 3), dtype=np.uint8)
            # Fetch frames and status for all streams
            frames = {}
            stats = {}
            if stream_manager:
                frames = stream_manager.get_all_frames()
                stats = stream_manager.get_all_stats()
            # Fill every grid position
            for row_idx, row in enumerate(layout):
                for col_idx, stream_id in enumerate(row):
                    # Top-left corner of this tile
                    x_start = col_idx * SMALL_SCREEN_WIDTH
                    y_start = row_idx * SMALL_SCREEN_HEIGHT
                    # Use the decoded frame, or fall back to a status frame
                    if stream_id in frames and frames[stream_id] is not None:
                        # Valid frame: resize it to the tile size
                        frame = cv2.resize(frames[stream_id], (SMALL_SCREEN_WIDTH, SMALL_SCREEN_HEIGHT))
                        # Overlay the stream ID and status
                        status_text = f"{stream_id}"
                        if stream_id in stats:
                            status = stats[stream_id].get("connection_status", "unknown")
                            # Truncate long status text
                            if len(status) > 20:
                                status = status[:20] + "..."
                            status_text += f" - {status}"
                        cv2.putText(frame, status_text, (10, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 255, 0), 2)
                    else:
                        # No valid frame: show the status instead
                        if stream_id in stats:
                            status = stats[stream_id].get("connection_status", "disconnected")
                            if "error" in status or "disconnected" in status:
                                frame = create_status_frame(
                                    f"{stream_id}: {status}",
                                    SMALL_SCREEN_WIDTH,
                                    SMALL_SCREEN_HEIGHT,
                                    (0, 0, 100)  # dark red (BGR): error or disconnected
                                )
                            elif "connecting" in status or "reconnecting" in status:
                                frame = create_status_frame(
                                    f"{stream_id}: {status}",
                                    SMALL_SCREEN_WIDTH,
                                    SMALL_SCREEN_HEIGHT,
                                    (0, 100, 100)  # dark yellow (BGR): connecting
                                )
                            else:
                                frame = create_status_frame(
                                    f"{stream_id}: {status}",
                                    SMALL_SCREEN_WIDTH,
                                    SMALL_SCREEN_HEIGHT,
                                    (0, 0, 0)  # black: any other status
                                )
                        else:
                            frame = create_status_frame(
                                f"{stream_id}: Not configured",
                                SMALL_SCREEN_WIDTH,
                                SMALL_SCREEN_HEIGHT,
                                (50, 50, 50)  # gray: stream not configured
                            )
                    # Place the tile on the canvas
                    canvas[y_start:y_start + SMALL_SCREEN_HEIGHT, x_start:x_start + SMALL_SCREEN_WIDTH] = frame
            # Show the canvas
            cv2.imshow("Multi-Stream Display", canvas)
            # Check for key presses; 'q' stops the display
            key = cv2.waitKey(30) & 0xFF
            if key == ord('q'):
                stopped = True
                break
        except Exception as e:
            logger.error(f"Error in display loop: {e}")
            time.sleep(1)
    cv2.destroyAllWindows()

# FastAPI routes
@app.on_event("startup")
async def startup_event():
    """Initialize the manager and the display thread on application startup."""
    global stream_manager, display_thread
    stream_manager = StreamManager()
    display_thread = threading.Thread(target=display_loop, daemon=True)
    display_thread.start()
    logger.info("Multi-stream display system started")


@app.on_event("shutdown")
async def shutdown_event():
    """Release resources on application shutdown."""
    global stopped, stream_manager
    stopped = True
    if stream_manager:
        stream_manager.stop_all()
    logger.info("Multi-stream display system stopped")


@app.get("/")
async def root():
    """Root route; returns basic system information."""
    return {"message": "Multi-Stream Video Management System", "status": "running"}


@app.get("/streams")
async def list_streams():
    """List all streams together with their statistics."""
    if not stream_manager:
        raise HTTPException(status_code=500, detail="Stream manager not initialized")
    streams = stream_manager.get_active_streams()
    stats = stream_manager.get_all_stats()
    return {
        "streams": streams,
        "stats": stats
    }


@app.post("/streams/{stream_id}")
async def add_stream(stream_id: str, config: StreamConfig):
    """Add a new stream."""
    if not stream_manager:
        raise HTTPException(status_code=500, detail="Stream manager not initialized")
    if stream_manager.add_stream(stream_id, config.stream_url,
                                 buffer_size=config.buffer_size,
                                 hw_accel=config.hw_accel,
                                 timeout=config.timeout,
                                 reconnect_delay=config.reconnect_delay,
                                 max_retries=config.max_retries,
                                 keyframe_only=config.keyframe_only):
        return {"message": f"Stream {stream_id} added successfully"}
    else:
        raise HTTPException(status_code=400, detail=f"Stream {stream_id} already exists")


@app.delete("/streams/{stream_id}")
async def remove_stream(stream_id: str):
    """Remove a stream."""
    if not stream_manager:
        raise HTTPException(status_code=500, detail="Stream manager not initialized")
    if stream_manager.remove_stream(stream_id):
        return {"message": f"Stream {stream_id} removed successfully"}
    else:
        raise HTTPException(status_code=404, detail=f"Stream {stream_id} not found")


@app.post("/streams/{stream_id}/start")
async def start_stream(stream_id: str):
    """Start the given stream."""
    if not stream_manager:
        raise HTTPException(status_code=500, detail="Stream manager not initialized")
    if stream_manager.start_stream(stream_id):
        return {"message": f"Stream {stream_id} started successfully"}
    else:
        raise HTTPException(status_code=404, detail=f"Stream {stream_id} not found")


@app.post("/streams/{stream_id}/stop")
async def stop_stream(stream_id: str):
    """Stop the given stream."""
    if not stream_manager:
        raise HTTPException(status_code=500, detail="Stream manager not initialized")
    if stream_manager.stop_stream(stream_id):
        return {"message": f"Stream {stream_id} stopped successfully"}
    else:
        raise HTTPException(status_code=404, detail=f"Stream {stream_id} not found")


@app.put("/streams/{stream_id}/settings")
async def update_stream_settings(stream_id: str, settings: StreamUpdate):
    """Update the settings of a stream."""
    if not stream_manager:
        raise HTTPException(status_code=500, detail="Stream manager not initialized")
    # Report unknown streams instead of silently returning success
    if stream_id not in stream_manager.get_active_streams():
        raise HTTPException(status_code=404, detail=f"Stream {stream_id} not found")
    # Drop fields that were not provided (None)
    update_params = {k: v for k, v in settings.dict().items() if v is not None}
    if not update_params:
        raise HTTPException(status_code=400, detail="No valid parameters provided for update")
    stream_manager.update_stream_settings(stream_id, **update_params)
    return {"message": f"Stream {stream_id} settings updated successfully"}


@app.get("/streams/{stream_id}/frame")
async def get_stream_frame(stream_id: str):
    """Return the current frame of the given stream as a JPEG image."""
    if not stream_manager:
        raise HTTPException(status_code=500, detail="Stream manager not initialized")
    frame = stream_manager.get_frame(stream_id)
    if frame is None:
        raise HTTPException(status_code=404, detail=f"No frame available for stream {stream_id}")
    # Encode the frame as JPEG
    ok, jpeg_frame = cv2.imencode('.jpg', frame)
    if not ok:
        raise HTTPException(status_code=500, detail=f"Failed to encode frame for stream {stream_id}")
    return StreamingResponse(
        iter([jpeg_frame.tobytes()]),
        media_type="image/jpeg"
    )


if __name__ == "__main__":
    # Start the FastAPI application
    uvicorn.run(app, host="0.0.0.0", port=8000)

4.2 Verification Code

- Below is a self-contained test client that exercises the API end to end, for reference.

python

import requests
import json
import time


class VideoStreamController:
    def __init__(self, base_url="http://localhost:8000"):
        self.base_url = base_url
        self.session = requests.Session()

    def _make_request(self, method, endpoint, data=None):
        """Send an HTTP request and return the parsed JSON, or None on failure."""
        url = f"{self.base_url}{endpoint}"
        try:
            if method == "GET":
                response = self.session.get(url)
            elif method == "POST":
                response = self.session.post(url, json=data)
            elif method == "PUT":
                response = self.session.put(url, json=data)
            elif method == "DELETE":
                response = self.session.delete(url)
            else:
                raise ValueError(f"Unsupported HTTP method: {method}")
            if 200 <= response.status_code < 300:
                return response.json()
            else:
                print(f"Request failed: {response.status_code} - {response.text}")
                return None
        except Exception as e:
            print(f"Request error: {e}")
            return None

    def add_stream(self, stream_id, stream_url, **kwargs):
        """Add a video stream."""
        data = {
            "stream_url": stream_url,
            "buffer_size": kwargs.get("buffer_size", 102400),
            "hw_accel": kwargs.get("hw_accel", None),
            "timeout": kwargs.get("timeout", 5000000),
            "reconnect_delay": kwargs.get("reconnect_delay", 5),
            "max_retries": kwargs.get("max_retries", -1),
            "keyframe_only": kwargs.get("keyframe_only", False)
        }
        return self._make_request("POST", f"/streams/{stream_id}", data)

    def start_stream(self, stream_id):
        """Start a video stream."""
        return self._make_request("POST", f"/streams/{stream_id}/start")

    def stop_stream(self, stream_id):
        """Stop a video stream."""
        return self._make_request("POST", f"/streams/{stream_id}/stop")

    def remove_stream(self, stream_id):
        """Remove a video stream."""
        return self._make_request("DELETE", f"/streams/{stream_id}")

    def list_streams(self):
        """List all streams."""
        return self._make_request("GET", "/streams")

    def update_stream_settings(self, stream_id, **kwargs):
        """Update stream settings."""
        data = {}
        allowed_params = ["buffer_size", "hw_accel", "timeout", "reconnect_delay", "max_retries", "keyframe_only"]
        for key, value in kwargs.items():
            if key in allowed_params:
                data[key] = value
        return self._make_request("PUT", f"/streams/{stream_id}/settings", data)

    def get_frame(self, stream_id):
        """Fetch the current frame as raw JPEG bytes."""
        url = f"{self.base_url}/streams/{stream_id}/frame"
        try:
            response = self.session.get(url)
            if response.status_code == 200:
                return response.content
            else:
                print(f"Failed to fetch frame: {response.status_code} - {response.text}")
                return None
        except Exception as e:
            print(f"Request error: {e}")
            return None


# Usage example
if __name__ == "__main__":
    controller = VideoStreamController()
    # Add the first five streams
    controller.add_stream("cam1", "rtsp://localhost:5001/stream_1")
    controller.add_stream("cam2", "rtsp://localhost:5001/stream_2")
    controller.add_stream("cam3", "rtsp://localhost:5001/stream_3")
    controller.add_stream("cam4", "rtsp://localhost:5001/stream_4")
    controller.add_stream("cam5", "rtsp://localhost:5001/stream_1")
    # Add a duplicate ID on purpose to check that the API rejects it
    controller.add_stream("cam5", "rtsp://localhost:5001/stream_1")
    # Start the streams
    controller.start_stream("cam1")
    controller.start_stream("cam2")
    controller.start_stream("cam3")
    controller.start_stream("cam4")
    # Start an already running stream on purpose to see how the API handles it
    controller.start_stream("cam4")
    # Let the streams run for a while
    time.sleep(10)
    streams = controller.list_streams()
    if streams:
        print("Current streams:")
        print(json.dumps(streams, indent=2, ensure_ascii=False))
    # Switch cam1 to keyframe-only decoding, then back
    controller.update_stream_settings("cam1", keyframe_only=True)
    streams = controller.list_streams()
    if streams:
        print("Current streams:")
        print(json.dumps(streams, indent=2, ensure_ascii=False))
    time.sleep(10)
    controller.update_stream_settings("cam1", keyframe_only=False)
    time.sleep(10)
    # Fetch the current frame and save it as an image
    frame_data = controller.get_frame("cam2")
    if frame_data:
        with open("frame.jpg", "wb") as f:
            f.write(frame_data)
        print("Saved the current frame as frame.jpg")
    # Stop the streams
    controller.stop_stream("cam1")
    controller.stop_stream("cam2")
    controller.stop_stream("cam3")
    controller.stop_stream("cam4")
    # Stop a stream that was never started on purpose to see how the API handles it
    controller.stop_stream("cam5")
    # Remove the streams
    controller.remove_stream("cam1")
    controller.remove_stream("cam2")
    controller.remove_stream("cam3")
    controller.remove_stream("cam4")
    controller.remove_stream("cam5")
    # List all streams again
    streams = controller.list_streams()
    if streams:
        print("Current streams:")
        print(json.dumps(streams, indent=2, ensure_ascii=False))

Summary

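This section is one of the key parts of the series. It walked through the design of the stream pulling and decoding module and of its manager, and then built a real-time video display system on top of them with FastAPI. If the code is unfamiliar, or you would like a more detailed walkthrough, I plan to record a video that explains it step by step.

Next Up

- A brief introduction to object detection algorithms

- Using the YOLO algorithm

Appendix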
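
The decoder design recapped here rests on one idea: each decoding thread overwrites a single "latest frame" slot under a lock, and readers take a copy, so stale frames never queue up. The block below is a minimal, stdlib-only sketch of that pattern. `LatestFrameSlot` and its methods are hypothetical names used for illustration, and a plain list stands in for a decoded frame; the article's own code keeps this logic inside `VideoStreamDecoder`.

```python
import copy
import threading
from typing import Any, Optional


class LatestFrameSlot:
    """Single-slot buffer: the writer overwrites, readers receive a copy."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._frame: Optional[Any] = None
        self._version = 0  # number of frames written so far

    def put(self, frame: Any) -> None:
        # Overwrite instead of queueing, so a slow reader never sees stale frames.
        with self._lock:
            self._frame = frame
            self._version += 1

    def get(self) -> Optional[Any]:
        # Return a copy so the caller can modify it without racing the writer.
        with self._lock:
            return copy.deepcopy(self._frame)

    @property
    def version(self) -> int:
        with self._lock:
            return self._version


if __name__ == "__main__":
    slot = LatestFrameSlot()

    def producer() -> None:
        for i in range(100):
            slot.put([i, i, i])  # stand-in for a decoded frame

    worker = threading.Thread(target=producer)
    worker.start()
    worker.join()
    print(slot.get(), slot.version)
```

The trade-off is deliberate: a reader that polls slower than the stream's frame rate simply skips frames, which suits live monitoring but not use cases that need every frame.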

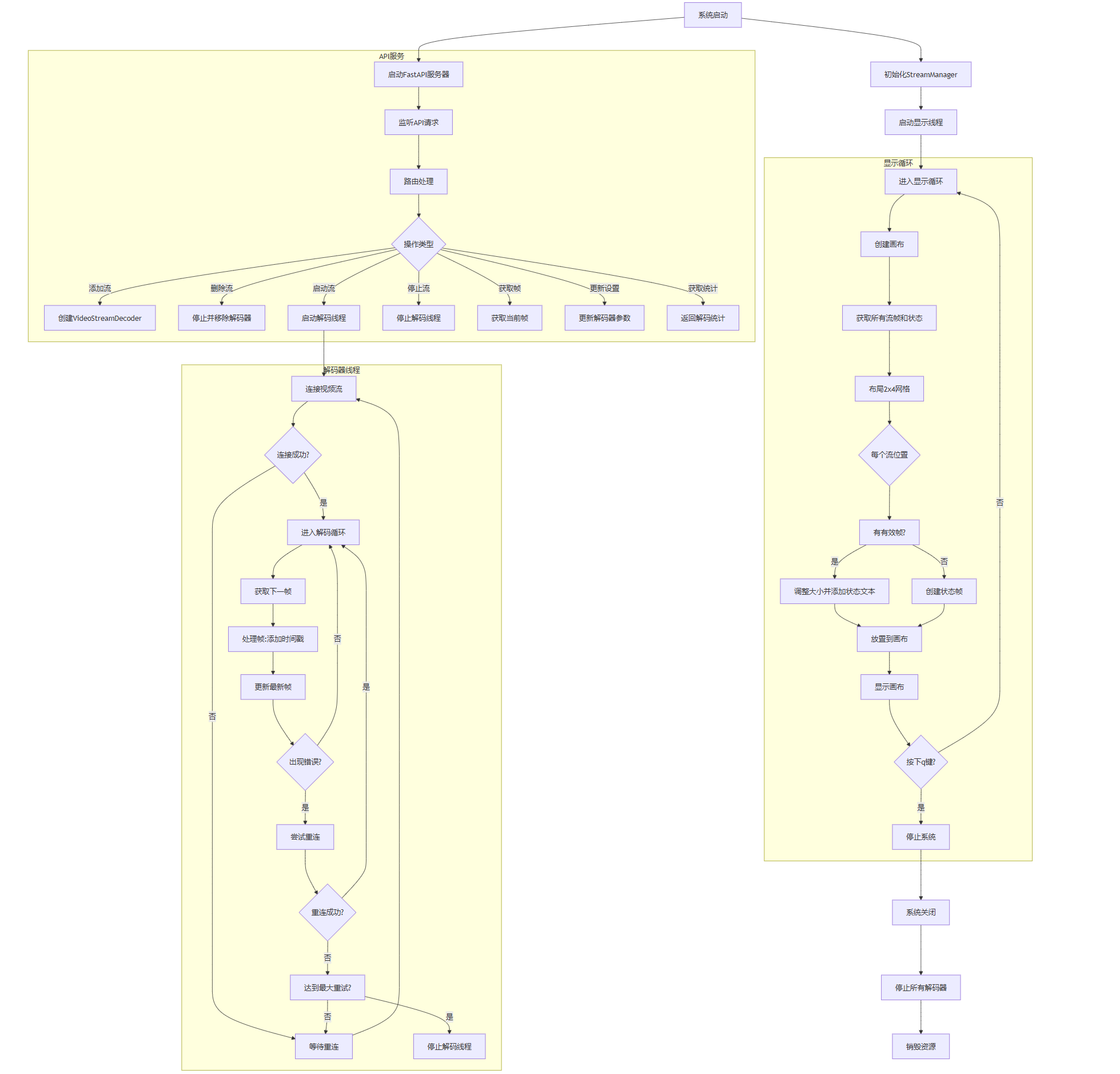

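- The diagram below shows the execution flow of the example code, for reference.

- Mermaid flowchart source:

mermaid

flowchart TD
    A[System start] --> B[Initialize StreamManager]
    B --> C[Start display thread]
    C --> D[Enter display loop]

    subgraph DisplayLoop [Display loop]
        D --> E[Create canvas]
        E --> F[Fetch frames and status for all streams]
        F --> G[Lay out 2x4 grid]
        G --> H{For each stream slot}
        H --> I{Valid frame?}
        I -->|Yes| J[Resize and add status text]
        I -->|No| K[Create status frame]
        J --> L[Place on canvas]
        K --> L
        L --> M[Show canvas]
        M --> N{q pressed?}
        N -->|Yes| O[Stop system]
        N -->|No| D
    end

    A --> P[Start FastAPI server]

    subgraph APIServer [API service]
        P --> Q[Listen for API requests]
        Q --> R[Route handling]
        R --> S{Operation type}
        S -->|Add stream| T[Create VideoStreamDecoder]
        S -->|Remove stream| U[Stop and remove decoder]
        S -->|Start stream| V[Start decoding thread]
        S -->|Stop stream| W[Stop decoding thread]
        S -->|Get frame| X[Return current frame]
        S -->|Update settings| Y[Update decoder parameters]
        S -->|Get stats| Z[Return decoding statistics]
    end

    subgraph DecoderThread [Decoder thread]
        V --> AA[Connect to video stream]
        AA --> AB{Connected?}
        AB -->|Yes| AC[Enter decode loop]
        AB -->|No| AD[Wait before reconnecting]
        AD --> AA
        AC --> AE[Get next frame]
        AE --> AF["Process frame: add timestamp"]
        AF --> AG[Update latest frame]
        AG --> AH{Error occurred?}
        AH -->|Yes| AI[Attempt reconnect]
        AH -->|No| AC
        AI --> AJ{Reconnected?}
        AJ -->|Yes| AC
        AJ -->|No| AK{Max retries reached?}
        AK -->|Yes| AL[Stop decoding thread]
        AK -->|No| AD
    end

    O --> AM[System shutdown]
    AM --> AN[Stop all decoders]
    AN --> AO[Release resources]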
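
The reconnect branch of the decoder thread in the flowchart (wait, retry, give up once the limit is reached) can be isolated into a small helper. The sketch below is illustrative only: `reconnect_with_limit` and its parameters are hypothetical names, and `connect` is any callable that returns `True` on success. It follows the article's convention that a `max_retries` value of 0 or less means "retry forever".

```python
import time
from typing import Callable


def reconnect_with_limit(
    connect: Callable[[], bool],
    max_retries: int = -1,
    delay_seconds: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call connect() until it succeeds or the retry budget is used up.

    max_retries <= 0 means "retry forever".
    Returns True once connected, False when the budget is exhausted.
    """
    attempts = 0
    while max_retries <= 0 or attempts < max_retries:
        attempts += 1
        sleep(delay_seconds)  # wait before each attempt
        if connect():
            return True
    return False


if __name__ == "__main__":
    results = iter([False, False, True])  # fails twice, then succeeds
    connected = reconnect_with_limit(
        lambda: next(results), max_retries=5, delay_seconds=0.1
    )
    print("connected:", connected)
```

Returning `False` lets the caller leave its decode loop cleanly when the budget runs out. With unlimited retries the helper never returns while the source stays down, so a real decoder would also need to check a stop flag inside the loop.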