-------------------------Many thanks to teacher Tudui (土堆老师) QAQ------------------------------

1. Environment Setup

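1.1 Two essential tools for learning Python

- dir(): "opens the box" and shows which tools it contains;

- help(): the instruction manual, which explains how to use each tool;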

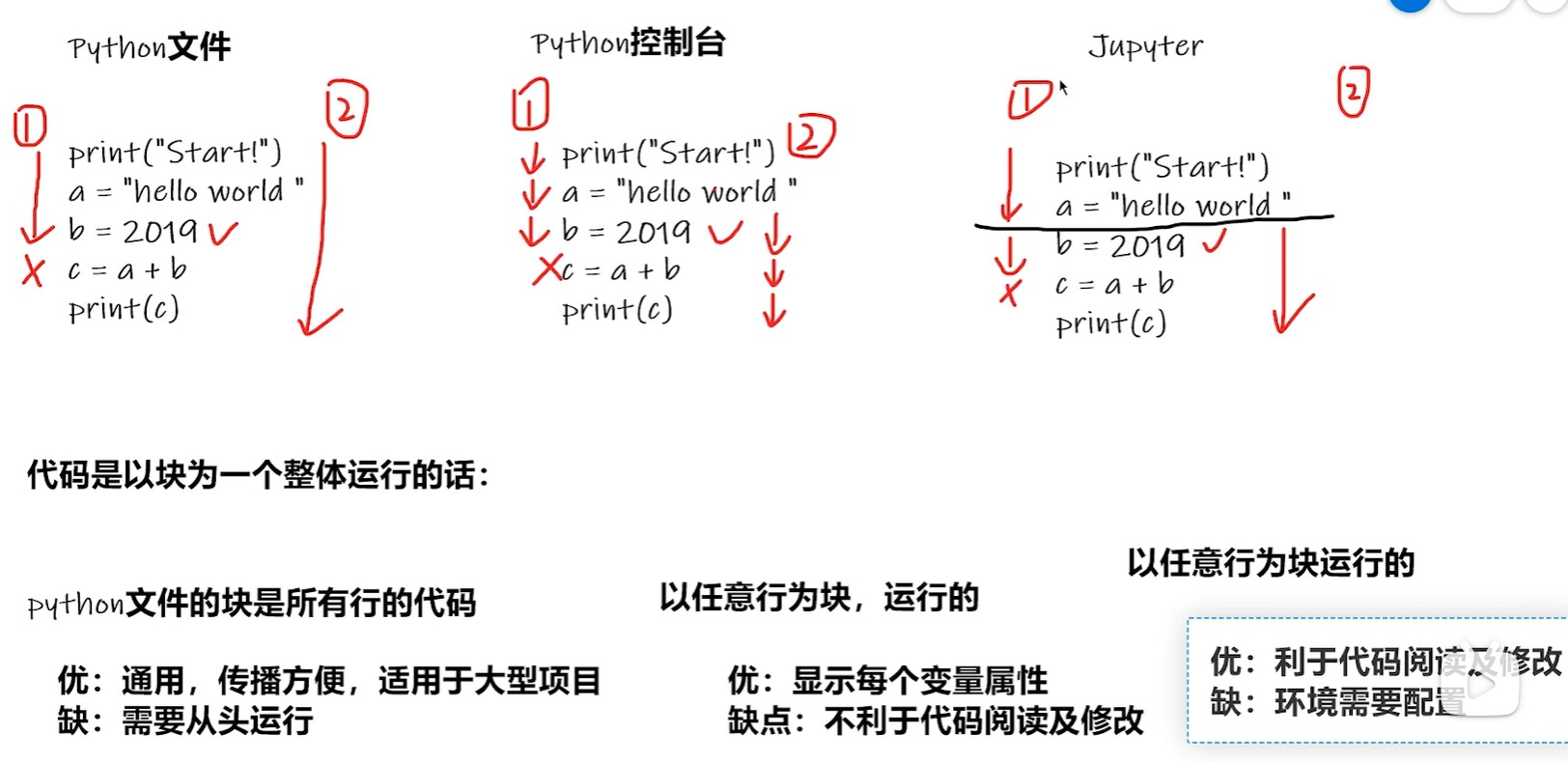
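The snippet below tries both tools on a standard-library module. `math` stands in for whatever package you are exploring, such as `torch`:

```python
import math

# dir(): list what the module contains, hiding private names that start with "_"
public_names = [name for name in dir(math) if not name.startswith("_")]
print(public_names[:5])

# help(): print the documentation of one specific tool
help(math.sqrt)
```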

1.2 PyCharm and Jupyter

2. Loading Data

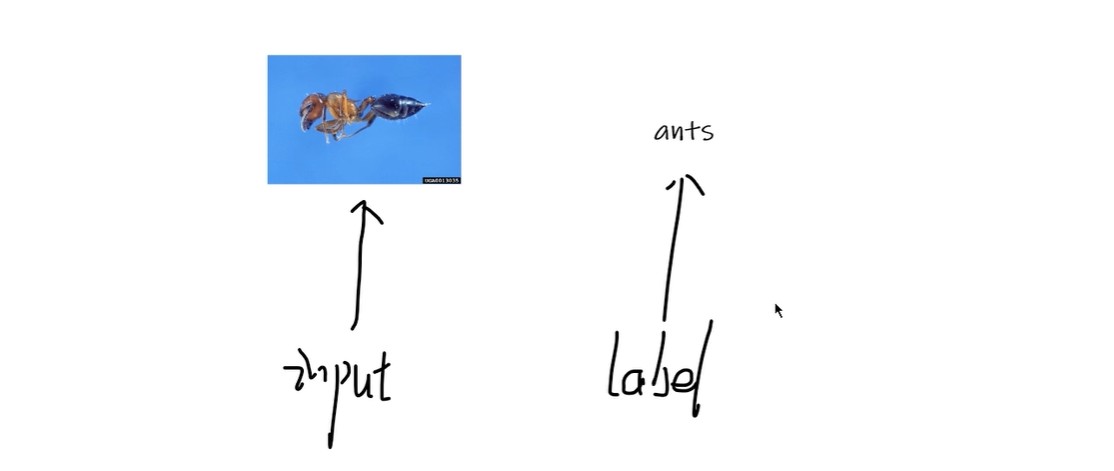

2.1 input and label

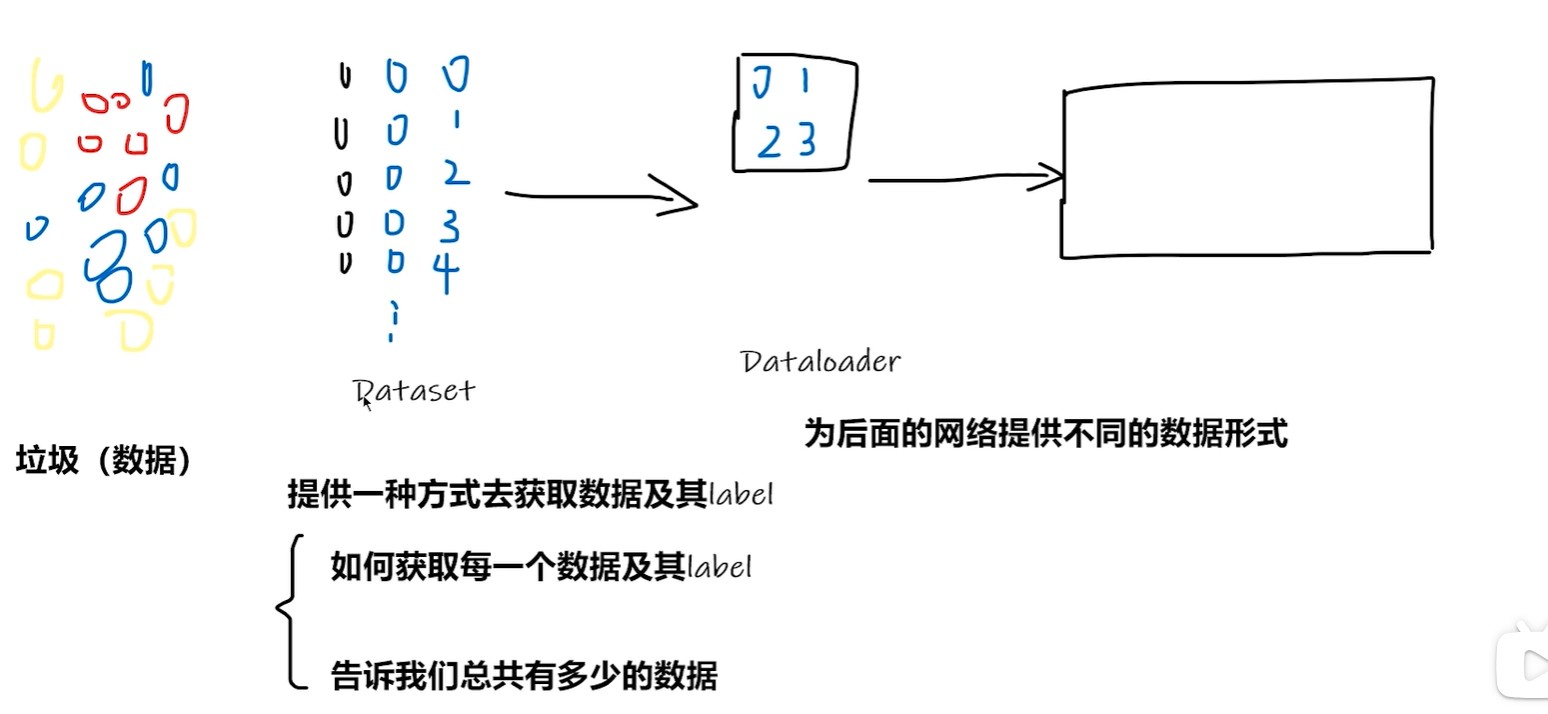

2.2 Dataset and Dataloader

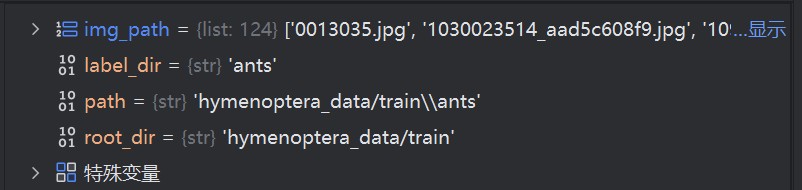

- Use Dataset to wrap the dataset, then instantiate it:

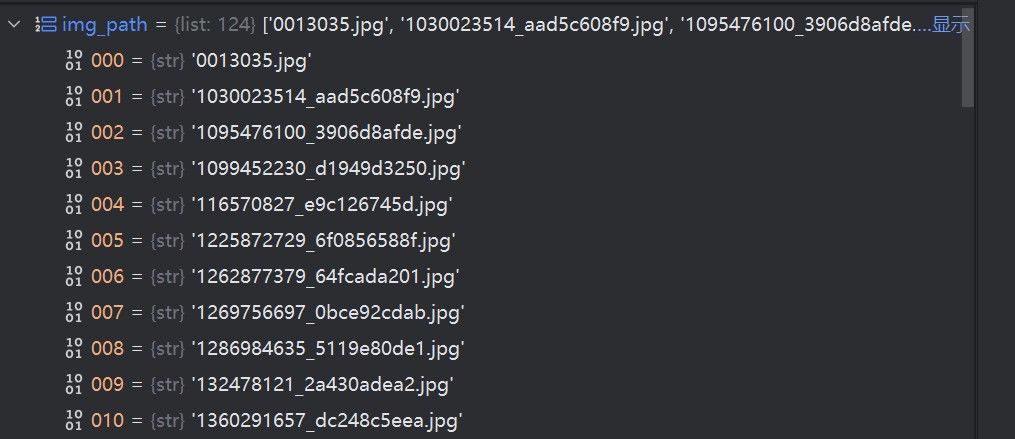

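- __init__ receives the root directory and the label directory. It builds the full path and collects the list of file names.
- __getitem__ receives an index idx. It builds the full path of the image at that index, opens the image and returns it together with its label.
- __len__ returns the length of the dataset, i.e. the number of images.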

```python
from torch.utils.data import Dataset
from PIL import Image  # Python Imaging Library (Pillow), an image processing library
import os


class MyData(Dataset):

    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        self.path = os.path.join(root_dir, label_dir)  # full path of the label directory
        self.img_path = os.listdir(self.path)  # all file names in the label directory

    def __getitem__(self, idx):
        img_name = self.img_path[idx]  # file name of the image at this index
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)  # full path of the image
        img = Image.open(img_item_path)
        label = self.label_dir  # the directory name serves as the label
        return img, label  # return the image and its label

    def __len__(self):
        return len(self.img_path)  # dataset length


root_dir = "hymenoptera_data/train"
ants_label_dir = "ants"
ants_dataset = MyData(root_dir, ants_label_dir)  # instantiate
bees_label_dir = "bees"
bees_dataset = MyData(root_dir, bees_label_dir)
train_dataset = ants_dataset + bees_dataset  # combine into a single dataset
```

- Some results of running it:
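The `+` on the last line works because torch's `Dataset` defines `__add__`, which returns a `ConcatDataset`. Below is a minimal sketch with in-memory stand-in data. The `ListData` class and the file names are hypothetical, so no image folder is needed:

```python
from torch.utils.data import ConcatDataset, Dataset


class ListData(Dataset):
    """Hypothetical stand-in for MyData: items come from a list instead of a folder."""

    def __init__(self, items, label):
        self.items = items
        self.label = label

    def __getitem__(self, idx):
        return self.items[idx], self.label

    def __len__(self):
        return len(self.items)


ants = ListData(["ant_1.jpg", "ant_2.jpg"], "ants")
bees = ListData(["bee_1.jpg", "bee_2.jpg", "bee_3.jpg"], "bees")

combined = ants + bees  # Dataset.__add__ -> ConcatDataset([ants, bees])
print(isinstance(combined, ConcatDataset), len(combined))  # True 5
print(combined[2])  # ('bee_1.jpg', 'bees'): index 2 is the first item of the second dataset
```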

3. TensorBoard

3.1 add_scalar

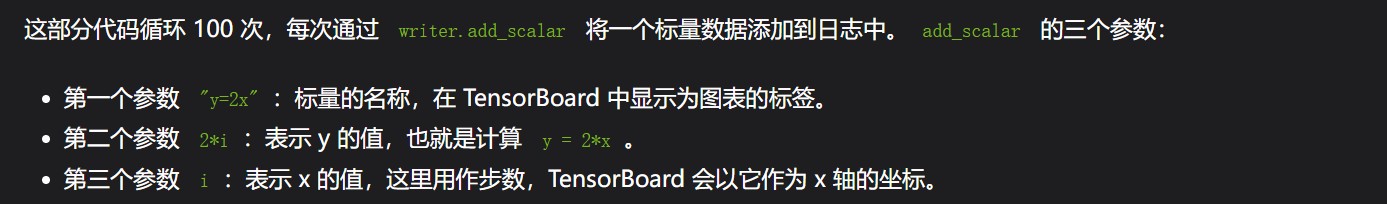

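- This code uses the SummaryWriter class from torch.utils.tensorboard. It is PyTorch's tool for logging training information (loss, accuracy, etc.) to TensorBoard.

- TensorBoard is a visualization tool for inspecting and analyzing training runs in PyTorch or TensorFlow.

- The code creates a SummaryWriter object, writer, with logs as its log directory. TensorBoard reads the log files in this directory and displays them in a web page. Every run of this code writes new data into the logs directory.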

python

from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")

# writer.add_image()

# y = 2*x

for i in range(100):

writer.add_scalar("y=2x", 2*i, i)

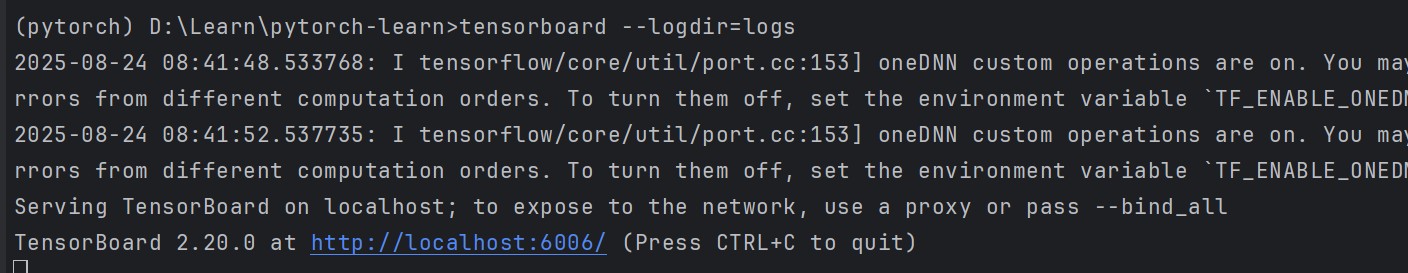

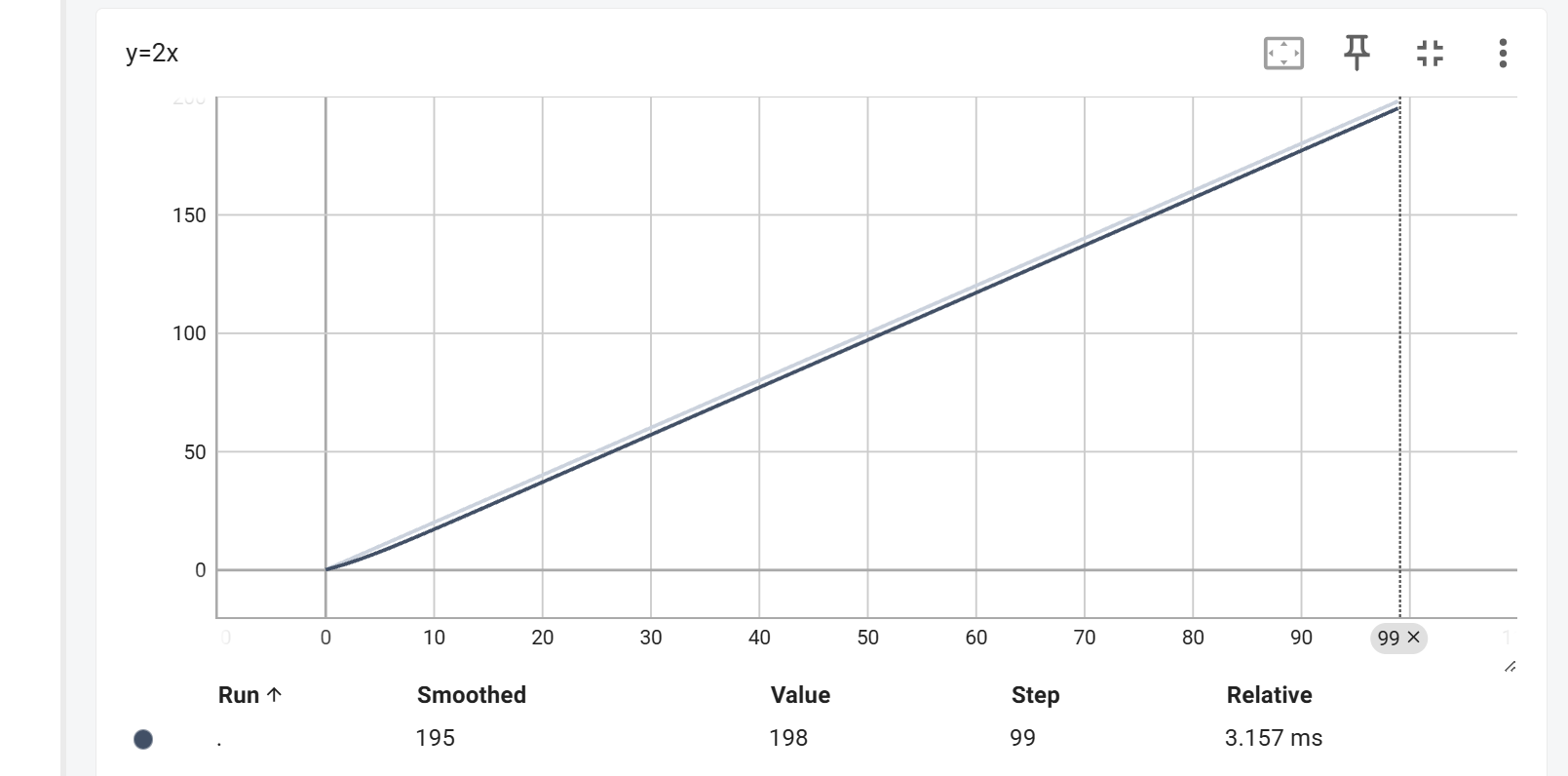

writer.close()- tensorboard --logdir=logs:启动 TensorBoard 来查看结果,访问

http://localhost:6006,可以看到名为y=2x的曲线图;(需要先运行代码) - 还可以通过 tensorboard --logdir=logs --port=6008 来切换别的端口进行访问;

- 在 Tensorboard 中显示如下:

3.2 add_image

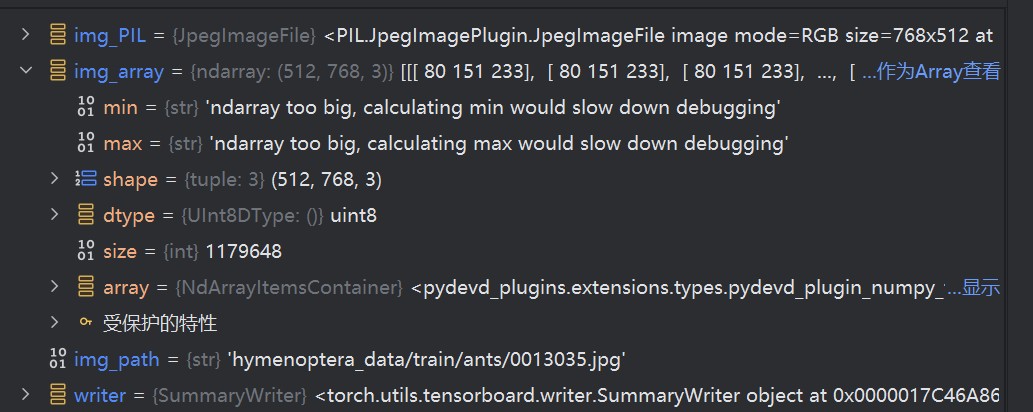

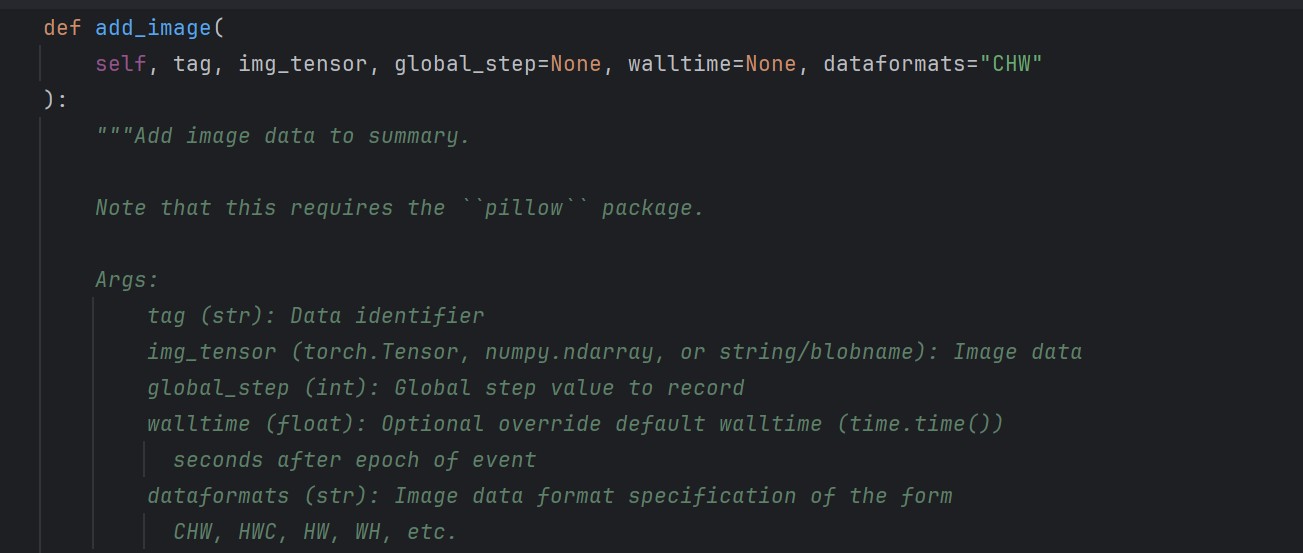

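- Input arguments:

1. tag: the tag (name) of the image;
2. img_tensor: must be a torch.Tensor (e.g. produced with transforms) or a numpy.ndarray (e.g. produced with numpy);
3. global_step: the training step, which records at which point in training the image was logged;
4. dataformats: the layout of the image data; the default is 'CHW'. Common layouts:
   - 'CHW': channels (C), height (H), width (W)
   - 'HWC': height (H), width (W), channels (C)

- Note: an image opened with PIL and converted with np.array is in HWC layout (check its shape). The default is CHW, so you must specify the layout in the call.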

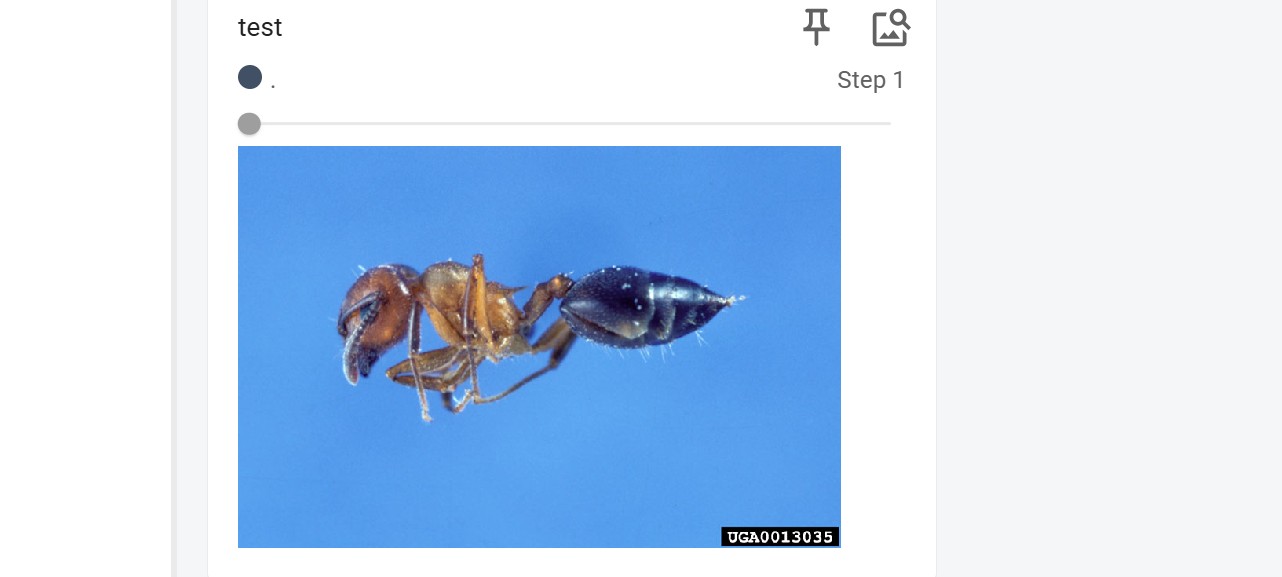
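Instead of passing `dataformats="HWC"`, you can move the channel axis to the front yourself so the array matches the default `'CHW'`. Here is a sketch on a dummy array. The 512×768 size is arbitrary and stands in for `np.array(img_PIL)`:

```python
import numpy as np

# Dummy RGB image in PIL/numpy layout: (H, W, C)
img_array = np.zeros((512, 768, 3), dtype=np.uint8)
img_array[..., 0] = 255  # turn the red channel fully on

# (H, W, C) -> (C, H, W): axis 2 (channels) moves to the front
img_chw = np.transpose(img_array, (2, 0, 1))
print(img_array.shape, img_chw.shape)  # (512, 768, 3) (3, 512, 768)

# writer.add_image("test", img_chw, 1)  # no dataformats argument needed now
```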

- Run the code:

```python
from torch.utils.tensorboard import SummaryWriter
import numpy as np
from PIL import Image

writer = SummaryWriter("logs")
img_path = "hymenoptera_data/train/ants/0013035.jpg"
img_PIL = Image.open(img_path)
img_array = np.array(img_PIL)  # shape (H, W, C)
writer.add_image("test", img_array, 1, dataformats="HWC")

# y = 2*x
for i in range(100):
    writer.add_scalar("y=2x", 2*i, i)

writer.close()
```

- View it in TensorBoard:

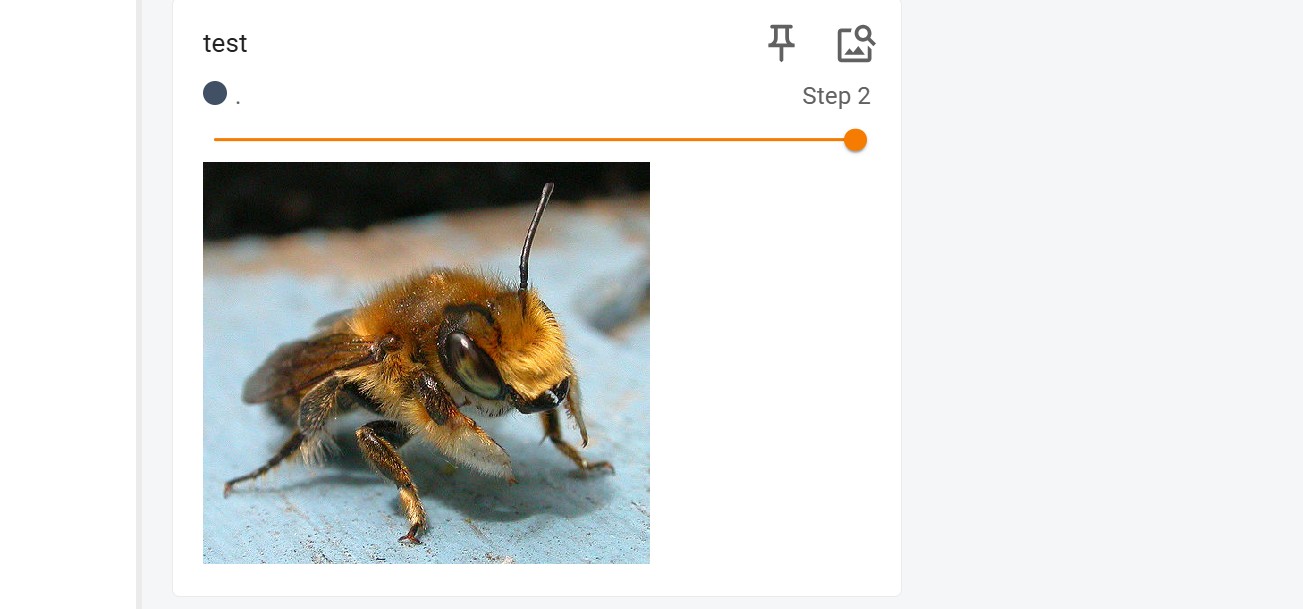

- Now switch to a different image, keep the same tag and change global_step to 2. You can then drag the slider above the image to switch between steps.

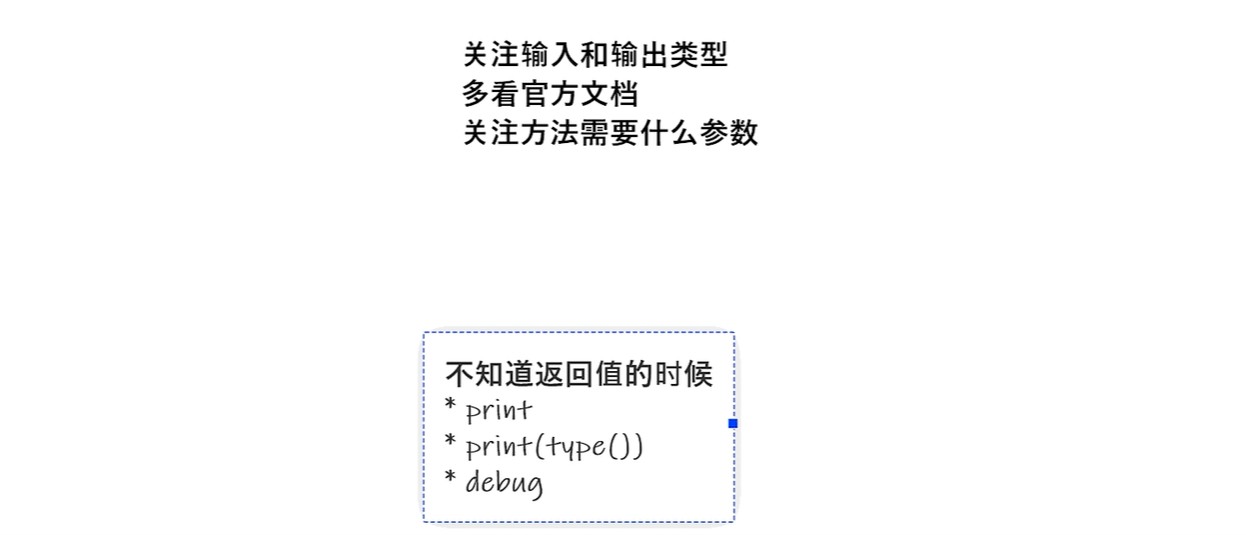

4. Transforms

4.1 Magic methods

python

class Person():

def __call__(self, name): # 魔法方法

print('__call__' + ' hello ' + name)

def hello(self,name):

print('hello ' + name)

person = Person()

person('zhangsan')

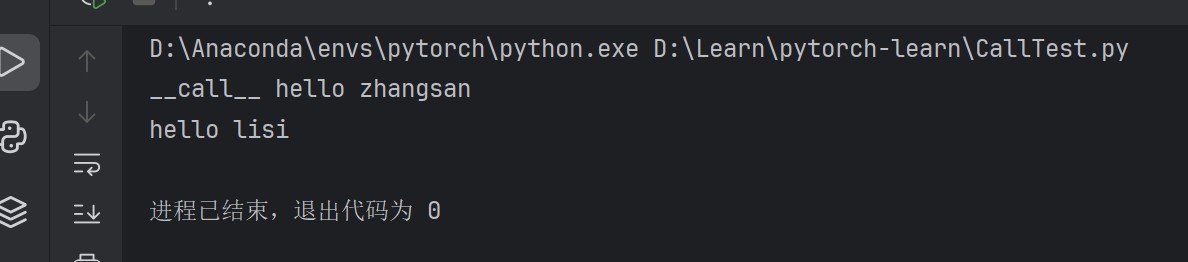

person.hello('lisi')- 结果如下:可以看到得到的结果是一样的,通过魔法方法(前后各加两条下划线),可以直接在实例化中输入,而不用 '. + 方法' 调用;

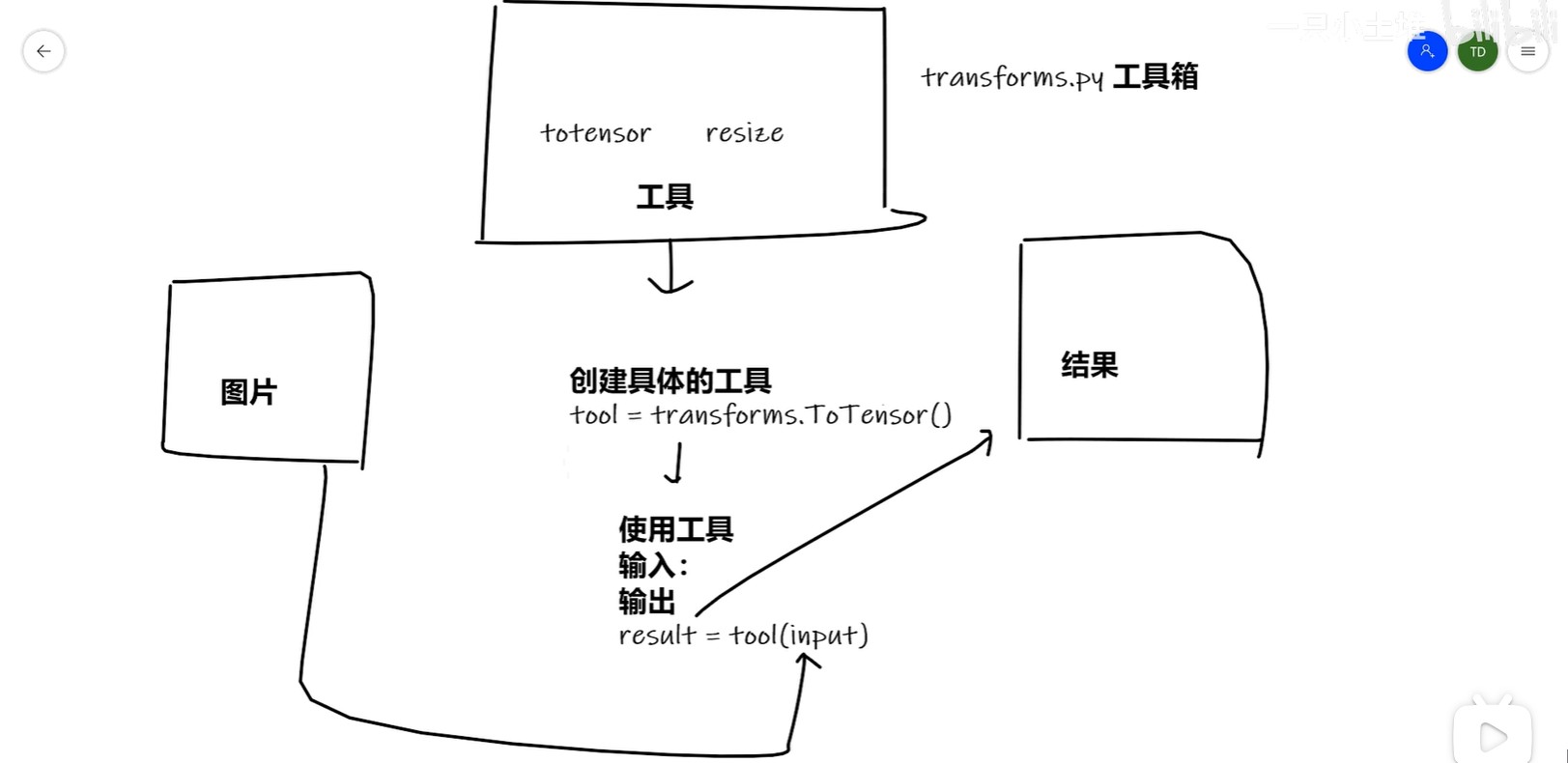

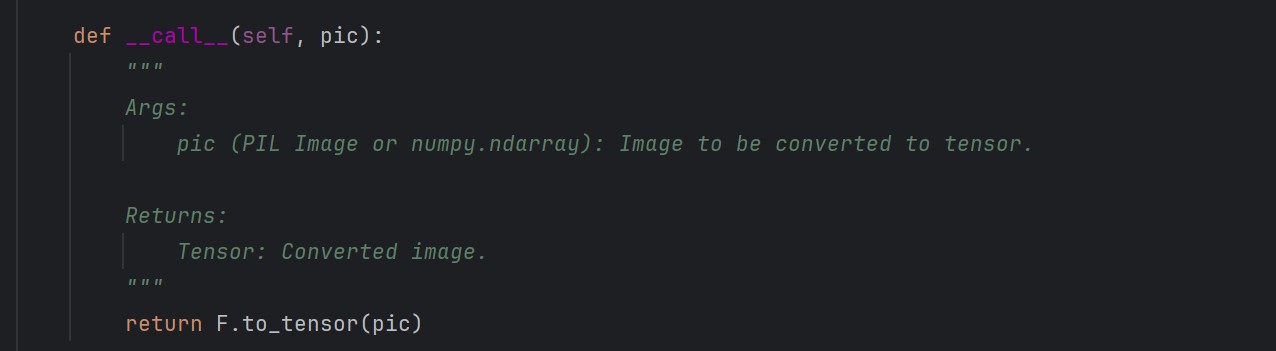

4.2 Tensor

- ToTensor takes an image as a PIL Image (read with PIL) or as a numpy.ndarray (read with cv2) and returns a tensor:

python

from torchvision import transforms

from PIL import Image

import cv2

from torch.utils.tensorboard import SummaryWriter

img_path = "hymenoptera_data/train/ants/0013035.jpg"

img_PIL = Image.open(img_path)

img_cv = cv2.imread(img_path)

tensor_trans = transforms.ToTensor() # 实例化

img_tensor = tensor_trans(img_PIL)

print(img_tensor)

writer = SummaryWriter("logs")

writer.add_image("img_Tensor", img_tensor)

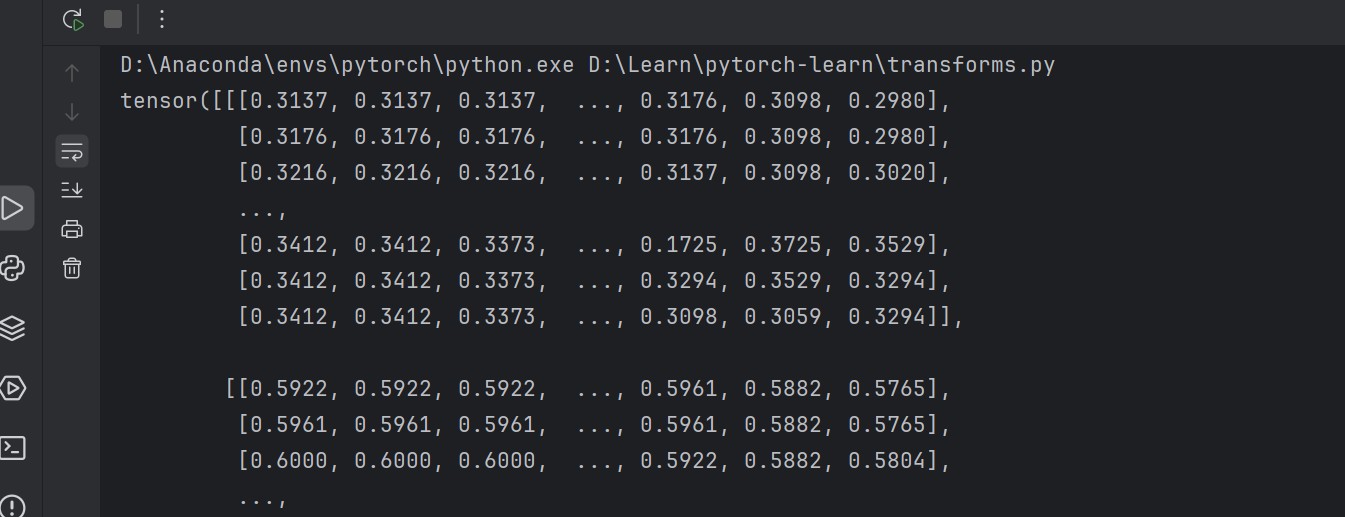

writer.close()- 运行结果:

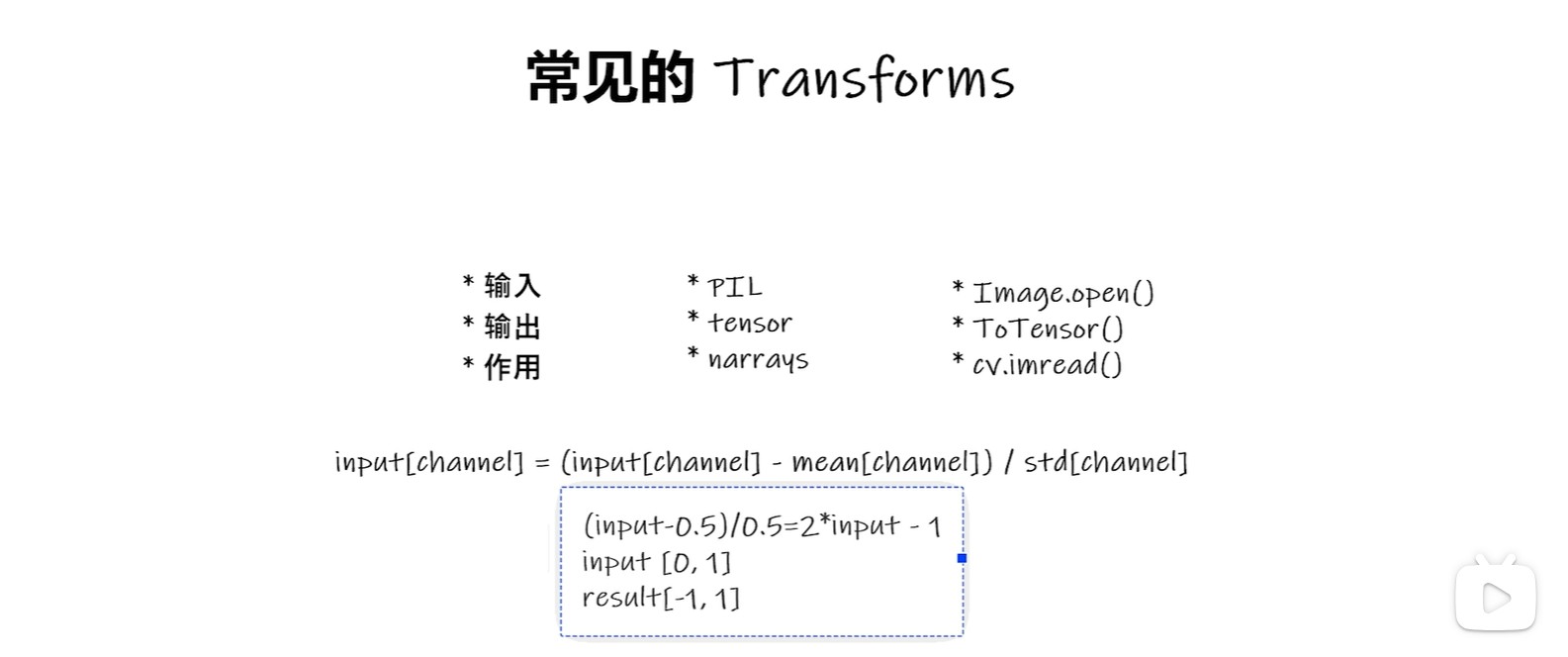

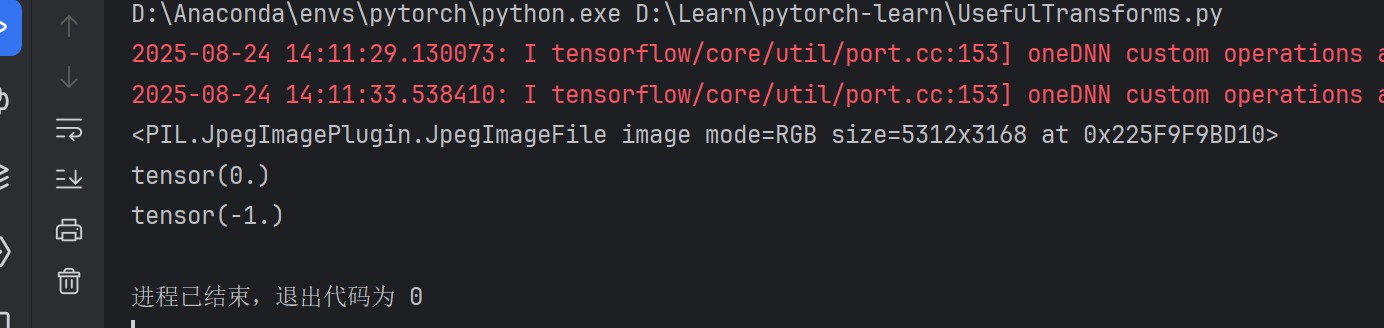
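For an 8-bit RGB image, ToTensor does roughly two things: it reorders HWC to CHW and it scales 0..255 to floats in [0, 1]. Below is a NumPy sketch of that idea. The helper `to_tensor_like` is hypothetical and is not the torchvision implementation, which also handles other image modes and dtypes:

```python
import numpy as np


def to_tensor_like(img_hwc_uint8):
    """Hypothetical helper: mimic ToTensor for an (H, W, C) uint8 array."""
    chw = np.transpose(img_hwc_uint8, (2, 0, 1))  # HWC -> CHW
    return chw.astype(np.float32) / 255.0         # 0..255 -> 0.0..1.0


pixels = np.array([[[0, 128, 255]]], dtype=np.uint8)  # one pixel, shape (1, 1, 3)
out = to_tensor_like(pixels)
print(out.shape)  # (3, 1, 1)
print(out[:, 0, 0])
```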

4.3 Normalize

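- Pixel value computation, per channel: output[channel] = (input[channel] - mean[channel]) / std[channel];

- Use ToTensor together with Normalize (Normalize requires a Tensor as input):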

```python
from PIL import Image
from torchvision import transforms
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")
# PNG files may carry an alpha channel; convert to 3-channel RGB so the 3-value mean/std below fit
img = Image.open("images/saber.png").convert("RGB")
print(img)

# ToTensor
trans_tensor = transforms.ToTensor()
img_tensor = trans_tensor(img)
writer.add_image("ToTensor", img_tensor)

# Normalize: (x - 0.5) / 0.5 maps [0, 1] to [-1, 1] in every channel
print(img_tensor[0][0][0])
trans_norm = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
img_norm = trans_norm(img_tensor)
print(img_norm[0][0][0])
writer.add_image("Normalize", img_norm)

writer.close()
```

- Computed result:

- Result shown in TensorBoard:
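Normalize applies output = (input − mean) / std separately to each channel. With mean = std = 0.5 as above, a ToTensor value in [0, 1] maps into [−1, 1]: for example, 0.0 → −1.0, 0.5 → 0.0 and 1.0 → 1.0. Below is a NumPy sketch of the same arithmetic. The `normalize` helper is hypothetical:

```python
import numpy as np


def normalize(chw, mean, std):
    """Hypothetical helper: per-channel (x - mean) / std on a (C, H, W) array."""
    mean = np.asarray(mean, dtype=np.float32).reshape(-1, 1, 1)  # broadcast over H and W
    std = np.asarray(std, dtype=np.float32).reshape(-1, 1, 1)
    return (chw - mean) / std


img = np.array([0.0, 0.5, 1.0], dtype=np.float32).reshape(3, 1, 1)  # one pixel, 3 channels
result = normalize(img, [0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
print(result.ravel())  # [-1.  0.  1.]
```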

4.4 Resize

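- The input image can be either a PIL Image or a Tensor;

- If Resize receives a single number, the shorter side is resized to that value and the longer side is scaled proportionally;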
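The single-number rule can be written out as a size calculation. The sketch below uses a hypothetical helper, `resized_size`, that follows the rule above. Rounding the longer side down with `int()` is an assumption about the exact rounding:

```python
def resized_size(width, height, size):
    """Hypothetical helper: output (width, height) of Resize(size) for a single int."""
    short_side, long_side = min(width, height), max(width, height)
    new_short, new_long = size, int(size * long_side / short_side)  # keep the aspect ratio
    if width <= height:
        return new_short, new_long
    return new_long, new_short


print(resized_size(1000, 800, 512))  # (640, 512): the shorter side (800) becomes 512
print(resized_size(800, 1000, 512))  # (512, 640)
```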

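```python
# Resize (continues the script above: img, img_tensor and writer are reused)
print(img.size)  # PIL size is (width, height)
trans_resize = transforms.Resize((512, 512))  # an (h, w) pair resizes to exactly 512x512
img_resize = trans_resize(img_tensor)
writer.add_image("Resize", img_resize)
print(img_resize)
```

4.5 Compose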

- Compose chains several transforms into a single pipeline;

- Here Resize first receives a PIL image, and the result is then converted to a Tensor:

```python
# Compose
trans_resize_2 = transforms.Resize(512)  # single number: shorter side -> 512, aspect ratio kept
trans_compose = transforms.Compose([trans_resize_2, trans_tensor])  # PIL -> resized PIL -> Tensor
img_resize_2 = trans_compose(img)
writer.add_image("Resize", img_resize_2, 1)
```

4.6 RandomCrop

- Random crop: a single number gives a square output, while a sequence gives an (H, W) output;
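Conceptually, a random crop picks a random top-left corner where the crop still fits inside the image, then slices. Below is a NumPy sketch of that idea. The `random_crop` helper is hypothetical; torchvision draws the offsets with its own RNG and also supports padding:

```python
import random

import numpy as np


def random_crop(img_hwc, crop_h, crop_w, rng=random):
    """Hypothetical helper: crop a random (crop_h, crop_w) window from an (H, W, C) array."""
    h, w = img_hwc.shape[:2]
    if crop_h > h or crop_w > w:
        raise ValueError("crop size is larger than the image")
    top = rng.randint(0, h - crop_h)  # randint includes both ends
    left = rng.randint(0, w - crop_w)
    return img_hwc[top:top + crop_h, left:left + crop_w]


img = np.arange(6 * 8 * 3).reshape(6, 8, 3)
crop = random_crop(img, 4, 4, rng=random.Random(0))
print(crop.shape)  # (4, 4, 3)
```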

```python
# RandomCrop
trans_crop = transforms.RandomCrop(512)  # the image must be at least 512x512
trans_compose_2 = transforms.Compose([trans_crop, trans_tensor])
for i in range(10):
    img_crop = trans_compose_2(img)  # a different random 512x512 region each time
    writer.add_image("RandomCrop", img_crop, i)

writer.close()
```

- Summary:

5. torchvision

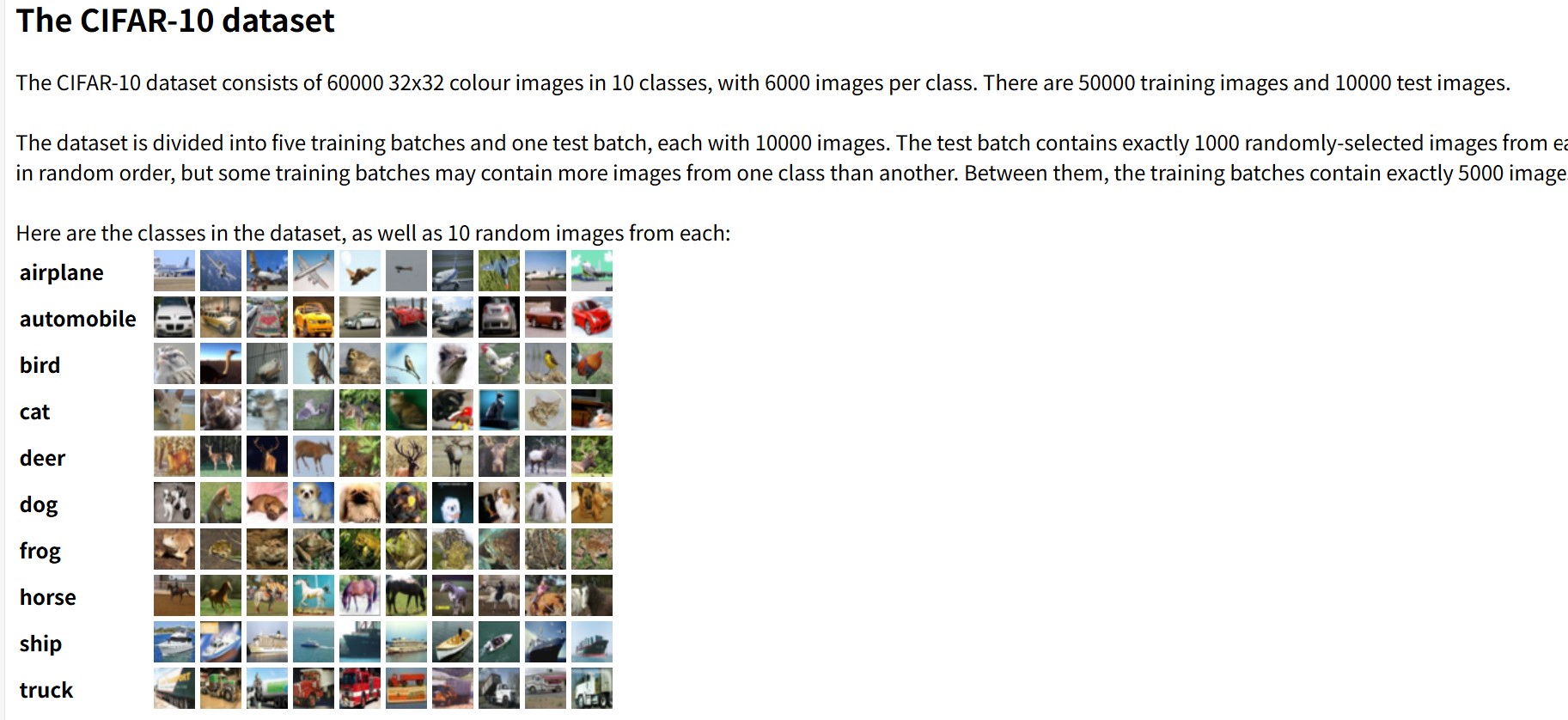

5.1 The CIFAR10 dataset

- The dataset looks like this:

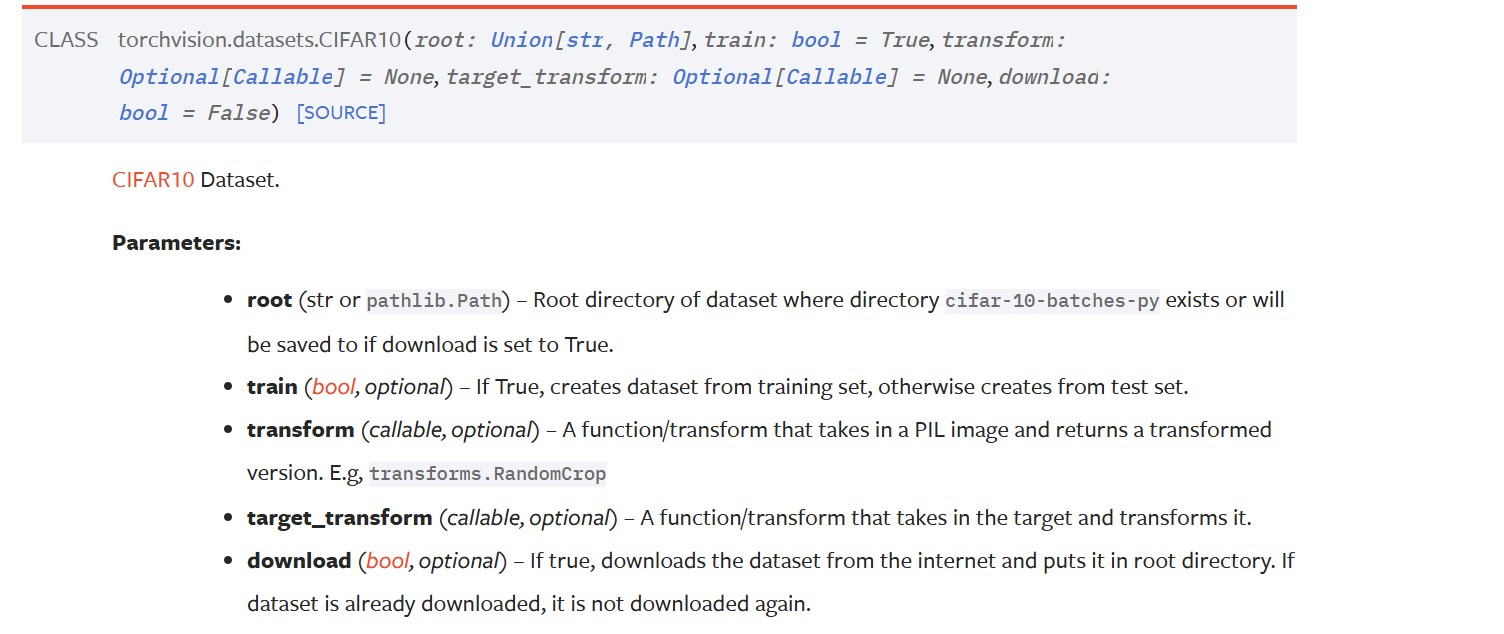

- Usage:

test_set[0] returns a tuple (image, label) holding one image and its label.

python

import torchvision

train_set = torchvision.datasets.CIFAR10("./dataset", train=True, download=True)

test_set = torchvision.datasets.CIFAR10("./dataset", train=False, download=True)

print(test_set[0])

print(train_set.classes)

img, target = train_set[0] # (图片, 标签)

print(img)

print(target)

print(test_set.classes[target])

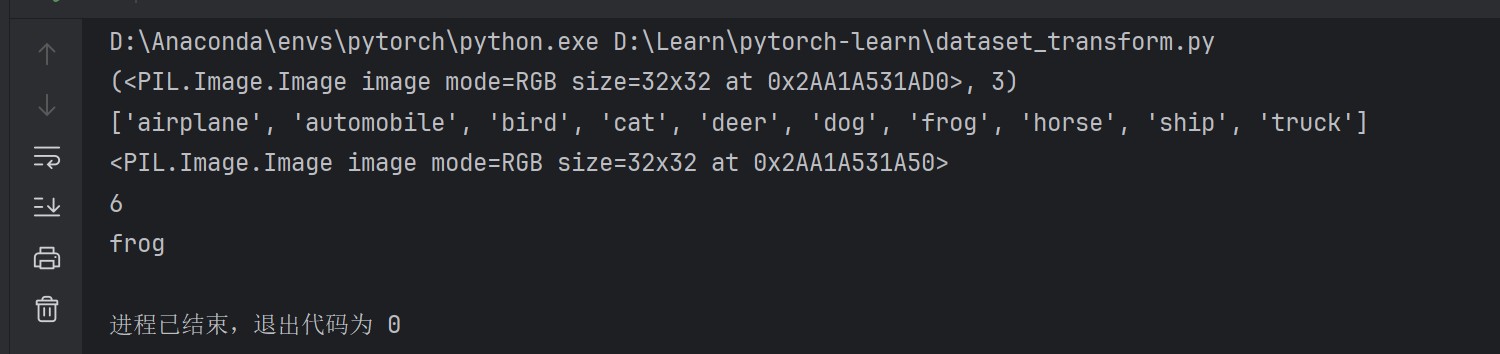

img.show()- 运行结果:
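The target is just an index into `classes`, so the lookup is plain list indexing. The list below assumes the class order printed by `train_set.classes` above, which is the standard CIFAR10 order:

```python
# CIFAR10 class names in index order (as returned by train_set.classes)
classes = ['airplane', 'automobile', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck']

target = 6
print(classes[target])       # frog
print(classes.index("dog"))  # 5
```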

5.2 Using transforms

- Add the transform argument so the images are converted to tensors:

python

import torchvision

from torchvision.transforms import transforms

from torch.utils.tensorboard import SummaryWriter

dataset_transform = transforms.Compose([

transforms.ToTensor()

])

train_set = torchvision.datasets.CIFAR10("./dataset", train=True, transform=dataset_transform, download=True)

test_set = torchvision.datasets.CIFAR10("./dataset", train=False, transform=dataset_transform, download=True)

# print(test_set[0])

# print(train_set.classes)

#

# img, target = train_set[0]

# print(img)

# print(target)

#

# print(test_set.classes[target])

# img.show()

writer = SummaryWriter("logs")

for i in range(10):

img, target = test_set[i]

writer.add_image("test_set", img, i)

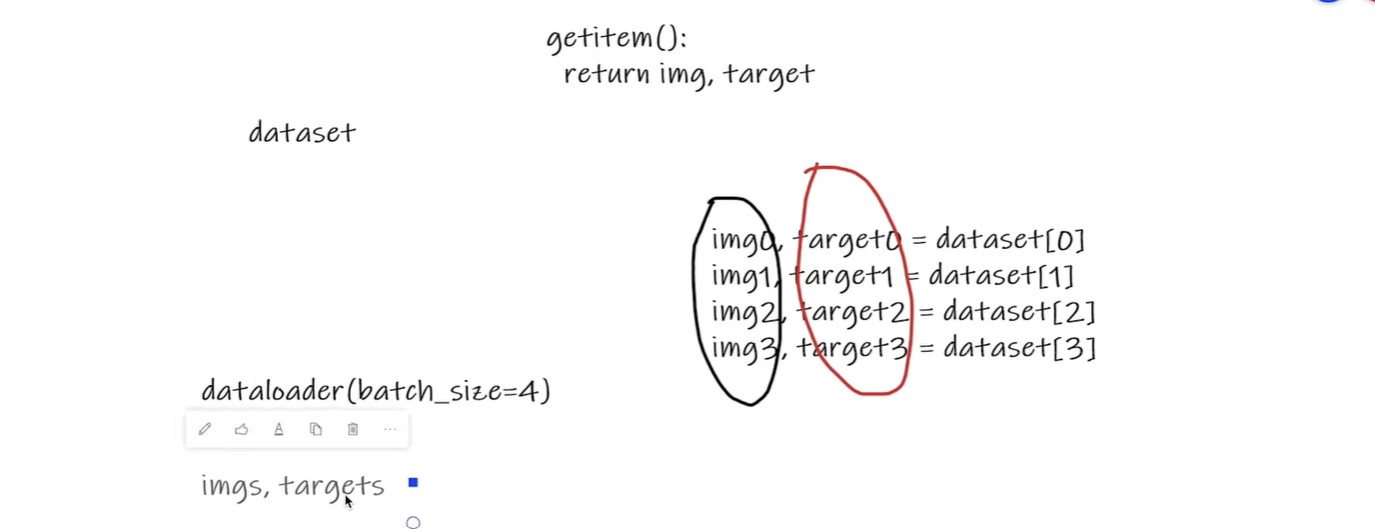

writer.close()6. DataLoader

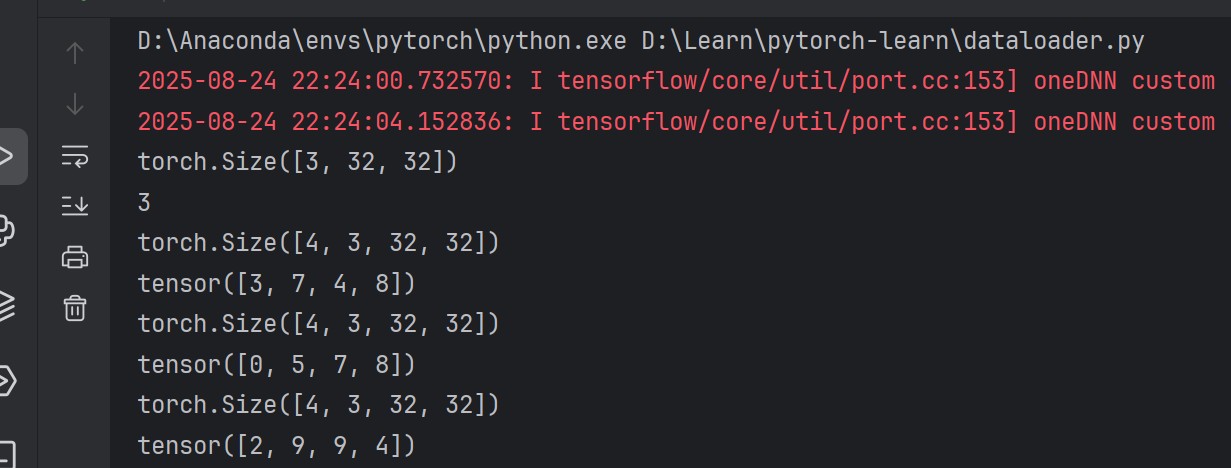

6.1 batch

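- batch_size = 4: the images and targets are each packed into groups of 4;

- Output: the 4 is the number of images in the batch, and the tensor holds the labels of those 4 images;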
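The sketch below shows how batching groups samples without downloading CIFAR10. It uses a tiny synthetic `TensorDataset` of 10 fake 3×32×32 images labelled 0..9; all the data is made up:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

images = torch.zeros(10, 3, 32, 32)  # 10 fake images
labels = torch.arange(10)            # fake labels 0..9
dataset = TensorDataset(images, labels)

loader = DataLoader(dataset, batch_size=4)  # shuffle=False keeps the original order
for imgs, targets in loader:
    print(imgs.shape, targets)
# torch.Size([4, 3, 32, 32]) tensor([0, 1, 2, 3])
# torch.Size([4, 3, 32, 32]) tensor([4, 5, 6, 7])
# torch.Size([2, 3, 32, 32]) tensor([8, 9])   <- last, incomplete batch

sizes = [len(t) for _, t in loader]
sizes_dropped = [len(t) for _, t in DataLoader(dataset, batch_size=4, drop_last=True)]
print(sizes, sizes_dropped)  # [4, 4, 2] [4, 4]
```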

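6.2 How to use DataLoader

- batch_size: how many samples to load per batch (default: 1);

- shuffle: set to True to reshuffle the data at every epoch (default: False);

- num_workers: how many subprocesses to use for data loading; 0 means the data is loaded in the main process;

- drop_last: set to True to drop the last incomplete batch when the dataset size is not divisible by batch_size;

- Note that this code uses add_images rather than add_image, because imgs contains multiple images;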

```python
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the test dataset
test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

# First image of the test set and its target
img, target = test_data[0]
print(img.shape)
print(target)

writer = SummaryWriter("dataloader")
for epoch in range(2):
    step = 0
    for data in test_loader:
        imgs, targets = data
        # print(imgs.shape)
        # print(targets)
        # One tag per epoch, so the two differently shuffled epochs can be compared side by side
        writer.add_images(f"Epoch: {epoch}", imgs, step)
        step += 1

writer.close()
```

7. Neural networks

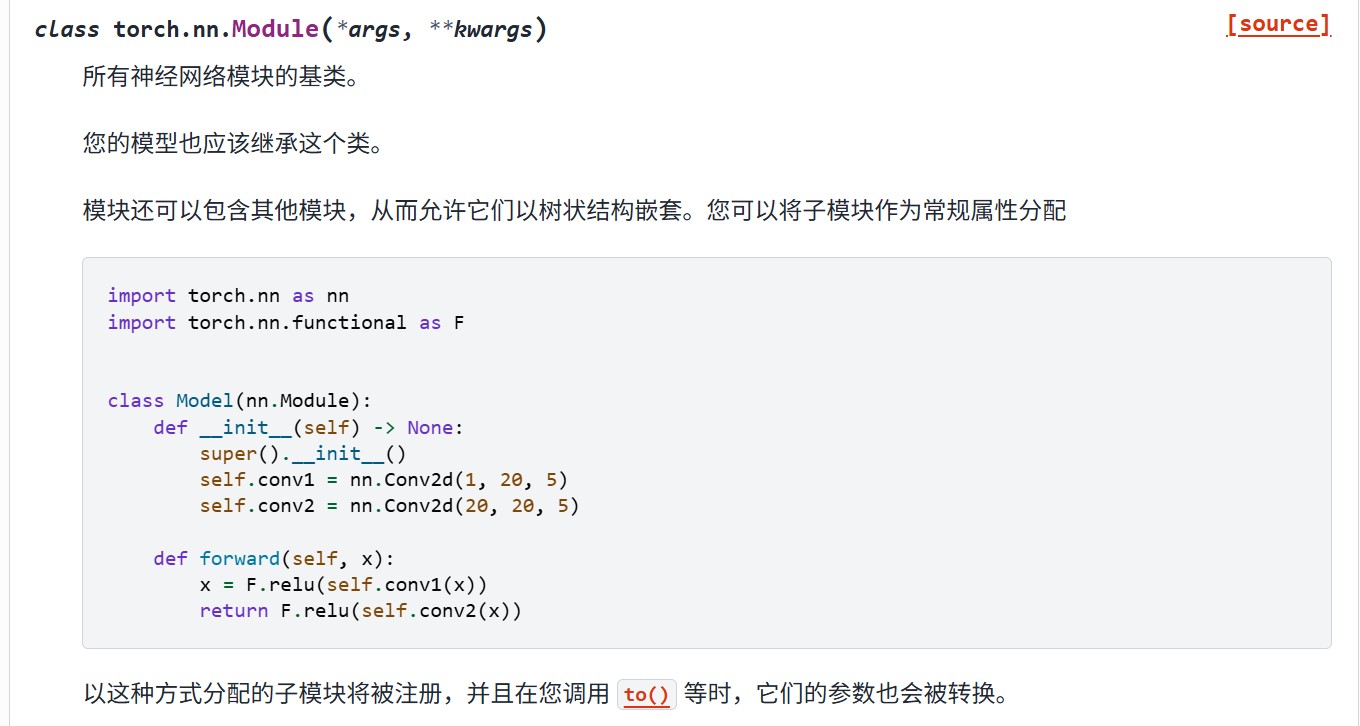

7.1 nn.Module

- Template:

- In Python 3, super().__init__() is the recommended form;

python

import torch

from torch import nn

class Model(nn.Module):

def __init__(self):

super().__init__()

def forward(self, input):

output = input + 1

return output

model = Model()

x = torch.tensor(1.0)

output = model(x)

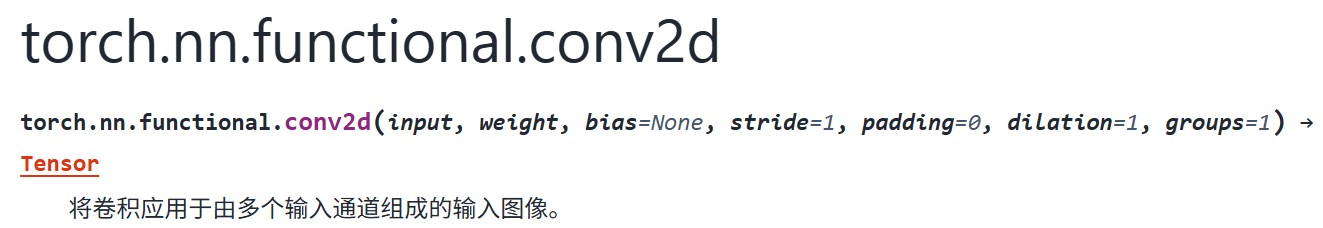

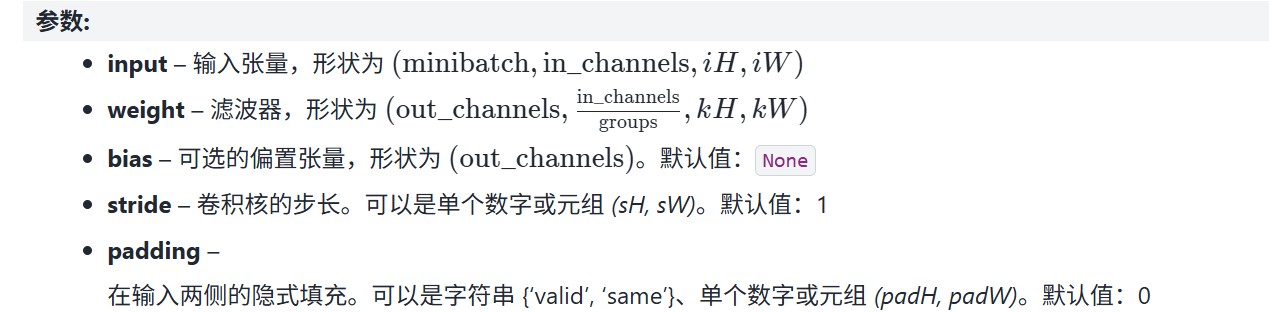

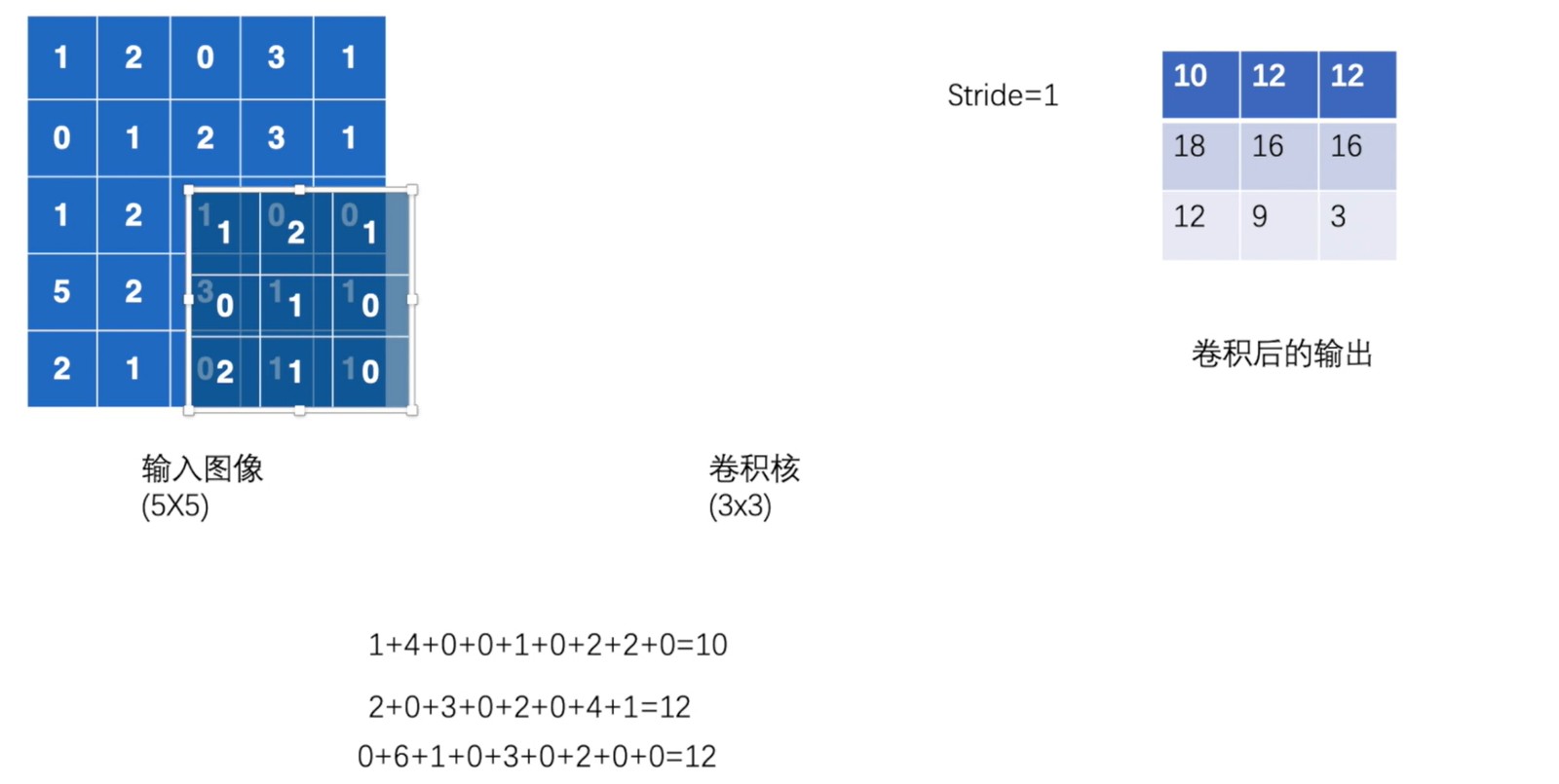

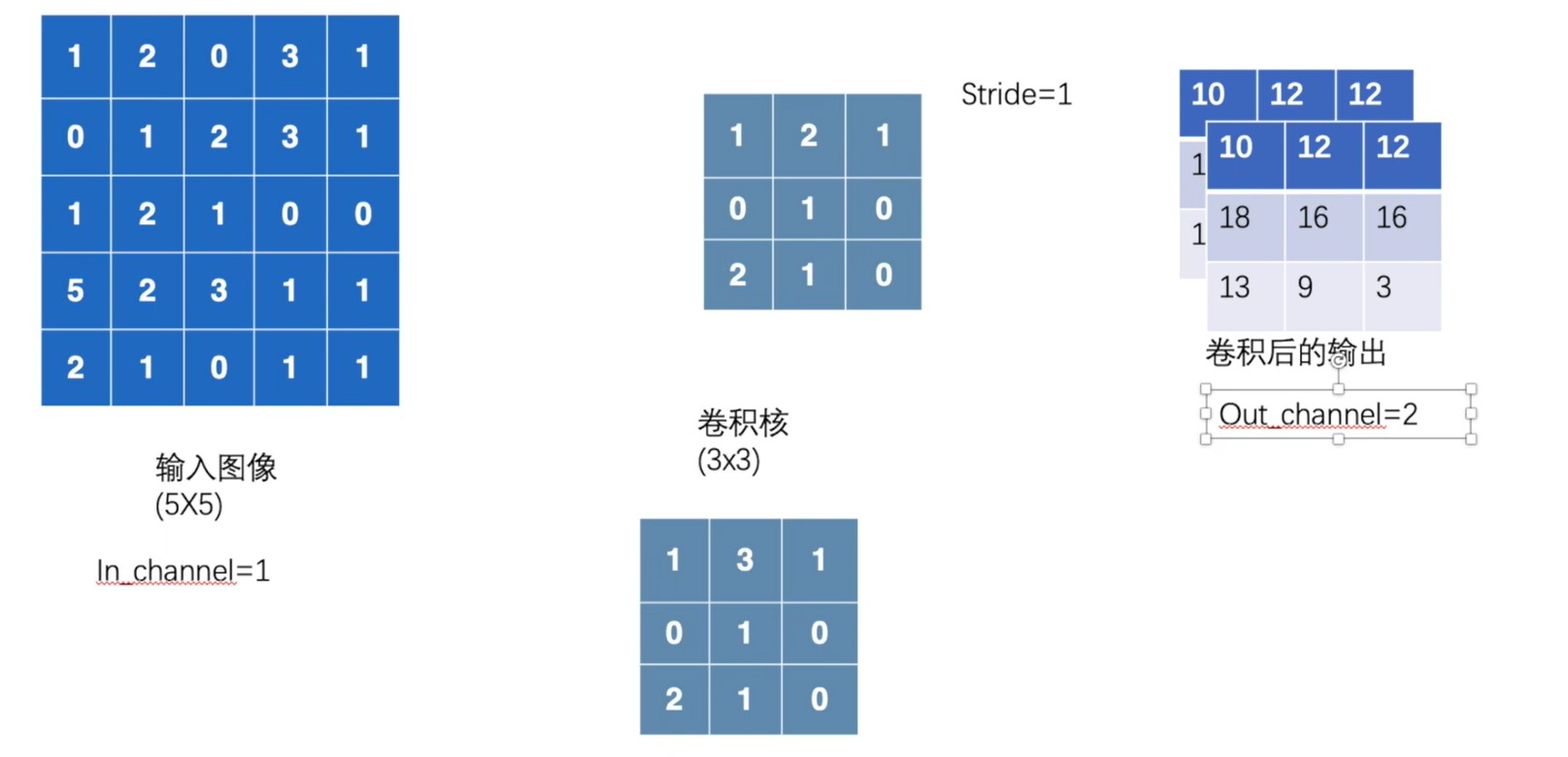

print(output)7.2 卷积(conv)计算

- 图像二维卷积:

- 计算方式:

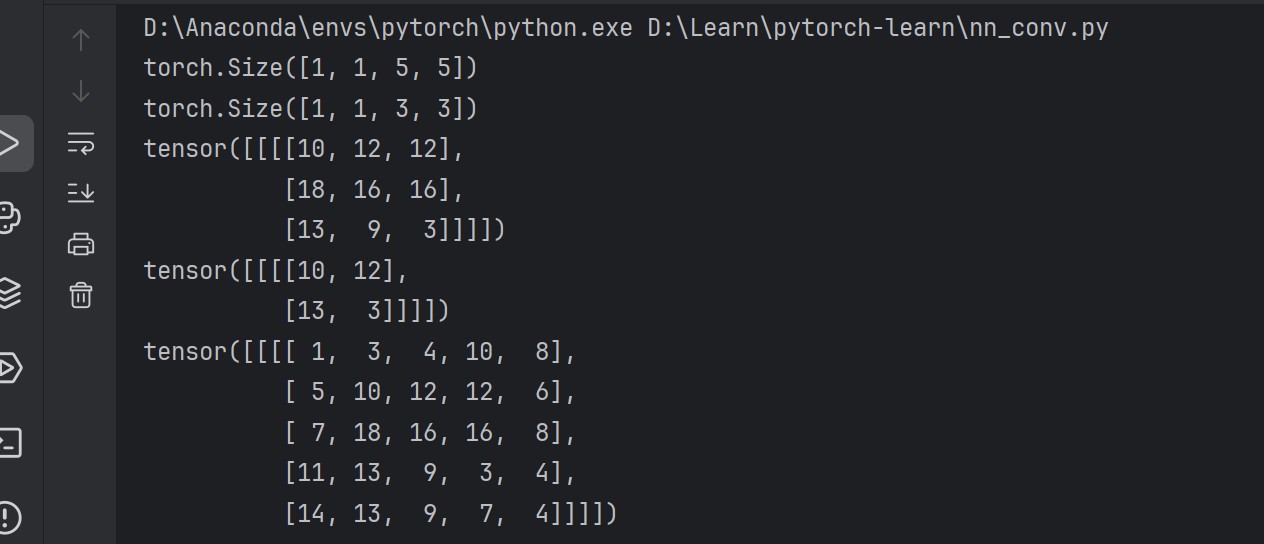

- 卷积实现:

```python
import torch
import torch.nn.functional as F

# float32 so the example does not depend on integer support in conv2d
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)

kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)

# (H, W) -> (batch_size, C, H, W)
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
print(input.shape)
print(kernel.shape)

output = F.conv2d(input, kernel, stride=1)
print(output)

# stride: how many pixels the kernel moves at each step
output2 = F.conv2d(input, kernel, stride=2)
print(output2)

# padding: add a border of `padding` zero pixels around the image
output3 = F.conv2d(input, kernel, stride=1, padding=1)
print(output3)
```

- Results:

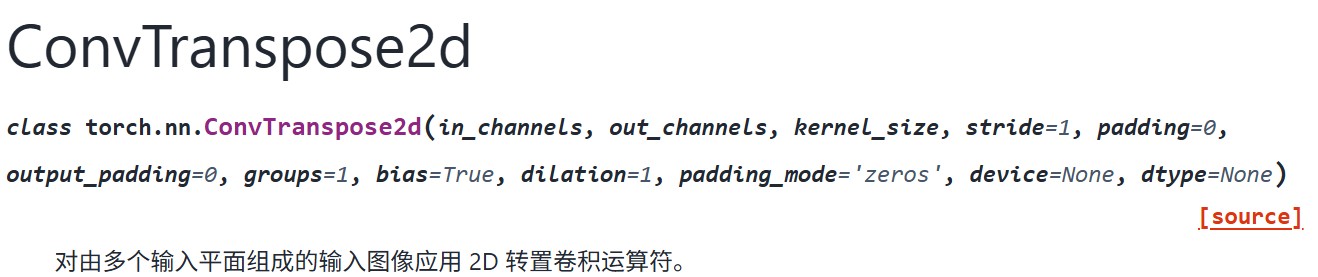

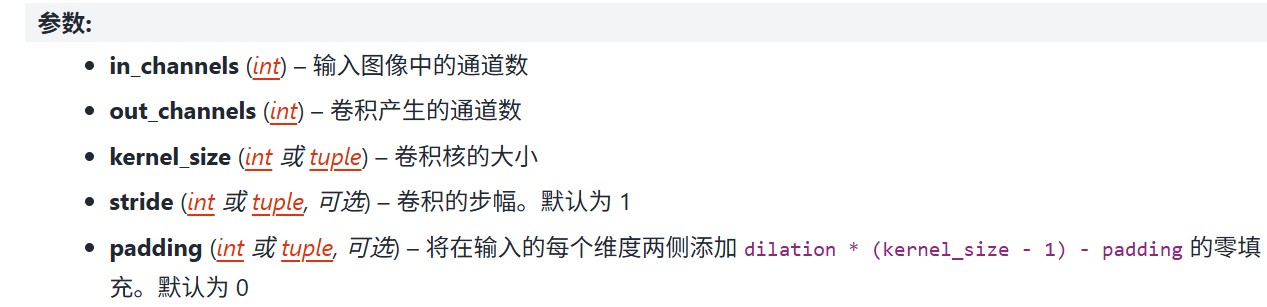

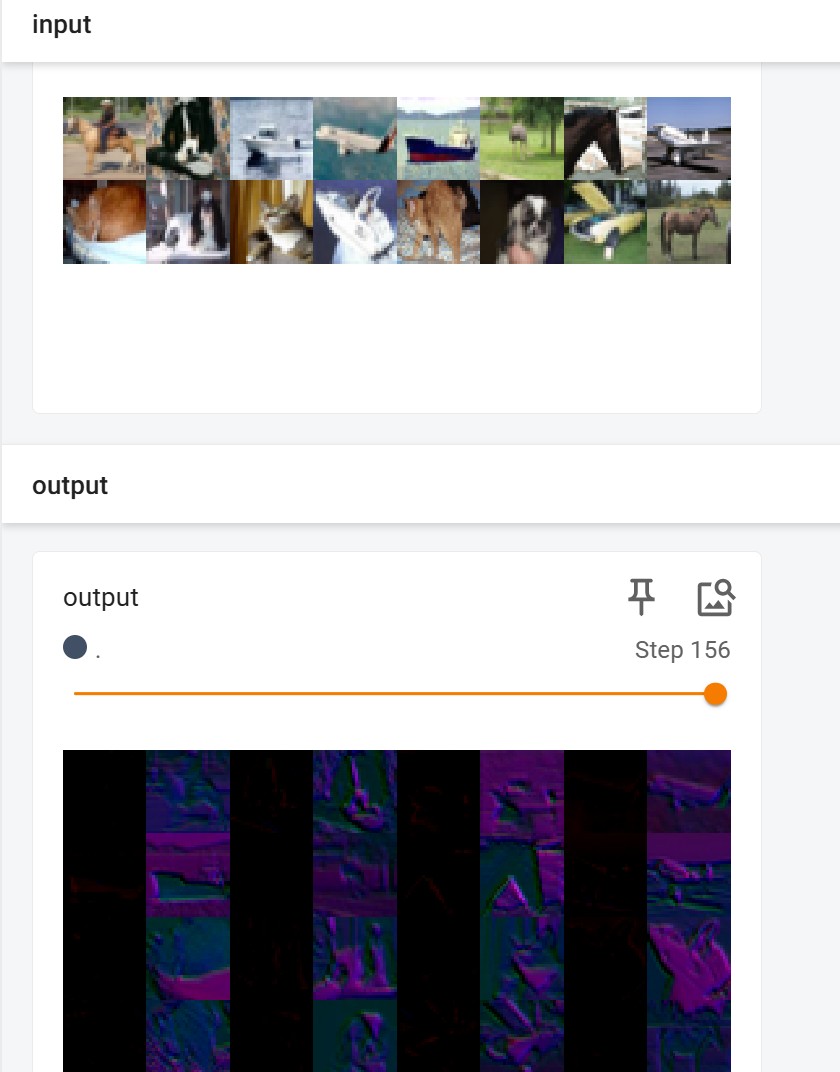

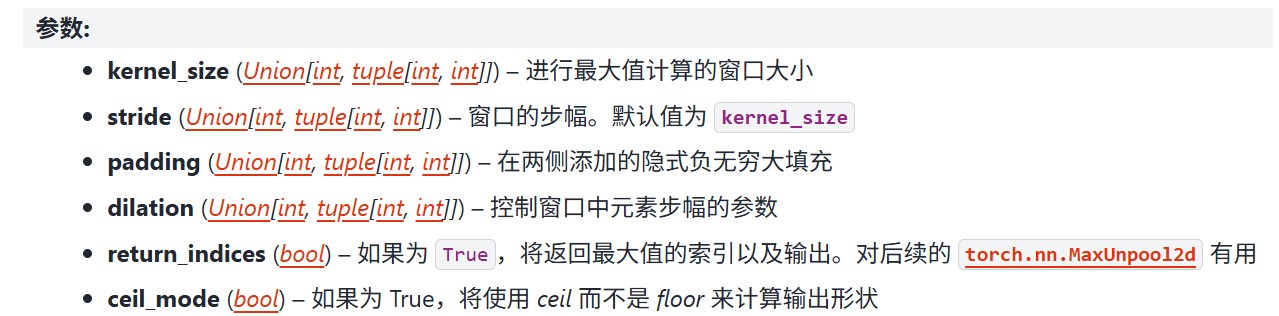
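You can reproduce the same numbers by hand. Slide the kernel over the input, multiply element-wise and sum. The pure-Python sketch below uses a hypothetical helper, `conv2d_manual`. Like `F.conv2d`, it computes a cross-correlation (the kernel is not flipped) and pads with zeros:

```python
def conv2d_manual(image, kernel, stride=1, padding=0):
    """Hypothetical helper: single-channel 2D cross-correlation on lists of lists."""
    if padding:
        width = len(image[0]) + 2 * padding
        zero_row = [0] * width
        image = ([zero_row] * padding
                 + [[0] * padding + row + [0] * padding for row in image]
                 + [zero_row] * padding)
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for u in range(kh):
                for v in range(kw):
                    total += image[i * stride + u][j * stride + v] * kernel[u][v]
            row.append(total)
        output.append(row)
    return output


input_ = [[1, 2, 0, 3, 1],
          [0, 1, 2, 3, 1],
          [1, 2, 1, 0, 0],
          [5, 2, 3, 1, 1],
          [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

print(conv2d_manual(input_, kernel, stride=1))  # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d_manual(input_, kernel, stride=2))  # [[10, 12], [13, 3]]
print(len(conv2d_manual(input_, kernel, padding=1)))  # 5: padding=1 keeps the 5x5 size
```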

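7.3 Convolutional layers

- Conv2d:

- in_channels and out_channels: using several kernels (out_channels of them) produces an output with that many channels;

- Convolution in practice: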

python

import torch.nn

import torchvision

from torch.utils.data import DataLoader

from torch import nn

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

dataloader = DataLoader(dataset=dataset, batch_size=64)

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

self.conv1 = torch.nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

def forward(self, x):

x = self.conv1(x)

return x

model = Model()

writer = SummaryWriter("logs")

step = 0

for data in dataloader:

imgs, targets = data

output = model(imgs)

print(imgs.shape) # torch.Size([64, 3, 32, 32])

print(output.shape) # torch.Size([64, 6, 30, 30]),通道为 6 无法显示

output = torch.reshape(output, (-1, 3, 30, 30)) # -1 表示自动确认数值

writer.add_images("input", imgs, step)

writer.add_images("output", output, step)

step += 1

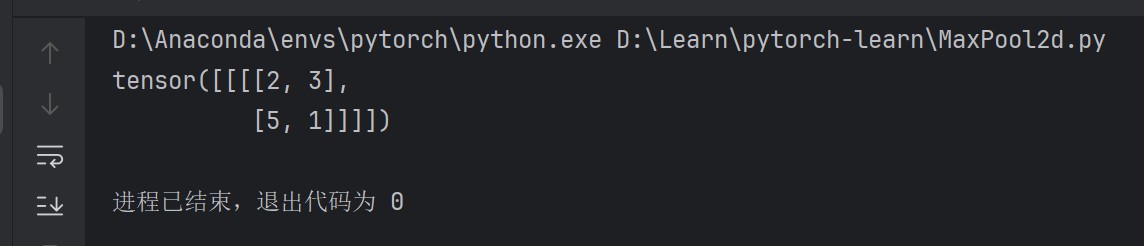

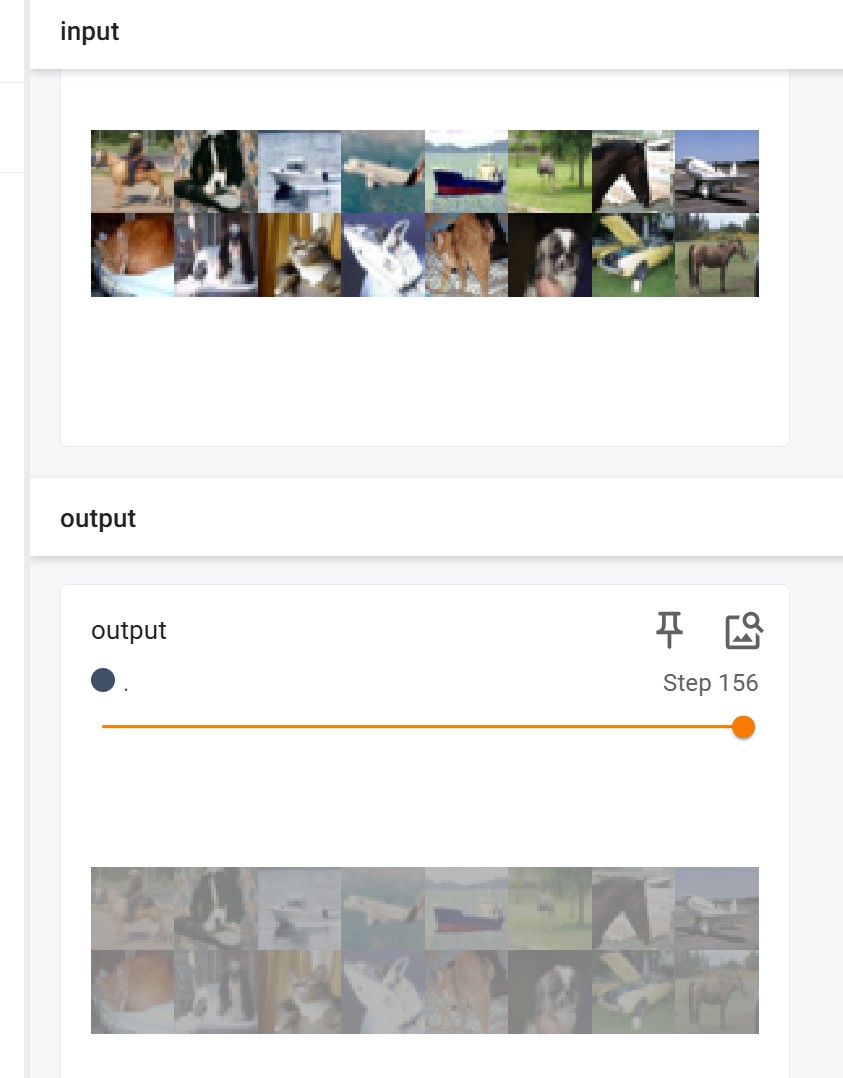

writer.close()- 可以看到卷积起到了"提取特征"的作用:

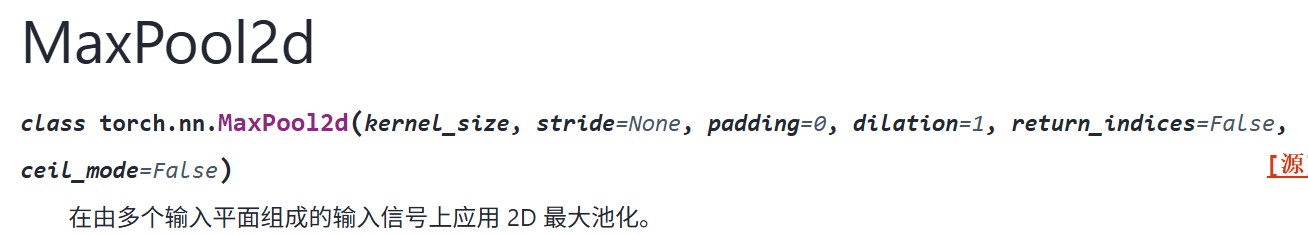

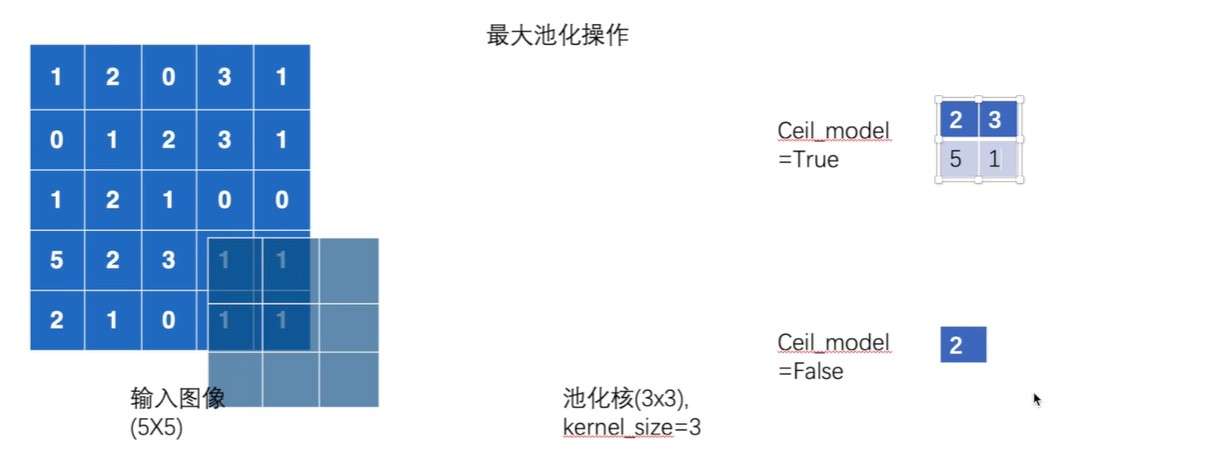
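The spatial size after a convolution follows the formula in the Conv2d documentation: H_out = ⌊(H_in + 2·padding − dilation·(kernel_size − 1) − 1) / stride⌋ + 1. A small helper with the hypothetical name `conv_out_size` reproduces the 32 → 30 seen above:

```python
def conv_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Hypothetical helper: output height/width of nn.Conv2d along one dimension."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


print(conv_out_size(32, kernel_size=3))             # 30, as in the example above
print(conv_out_size(32, kernel_size=5, padding=2))  # 32: padding=2 keeps the size for a 5x5 kernel
print(conv_out_size(5, kernel_size=3, stride=2))    # 2, as in the stride=2 example
```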

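7.4 Max pooling layers

- MaxPool2d:

- The pooling computation:

- ceil_mode=True: a window that only partly covers the input (it runs past the border) still outputs the maximum of the values it does cover. With ceil_mode=False (the default), such windows are dropped, and only windows lying fully inside the input produce an output.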

```python
import torch
from torch import nn

# float32: max pooling may not support integer (Long) tensors
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
input = torch.reshape(input, (-1, 1, 5, 5))


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.maxpool1 = nn.MaxPool2d(kernel_size=3, ceil_mode=True)  # stride defaults to kernel_size

    def forward(self, input):
        output = self.maxpool1(input)
        return output


model = Model()
output = model(input)
print(output)
```

- Pooling result:

```python
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset=dataset, batch_size=64)


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.maxpool1 = nn.MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


model = Model()
writer = SummaryWriter("logs")
step = 0
for data in dataloader:
    imgs, targets = data
    output = model(imgs)  # pooling does not change the number of channels
    writer.add_images("input", imgs, step)
    writer.add_images("output", output, step)
    step += 1

writer.close()
```

- You can see that pooling acts as "feature compression":

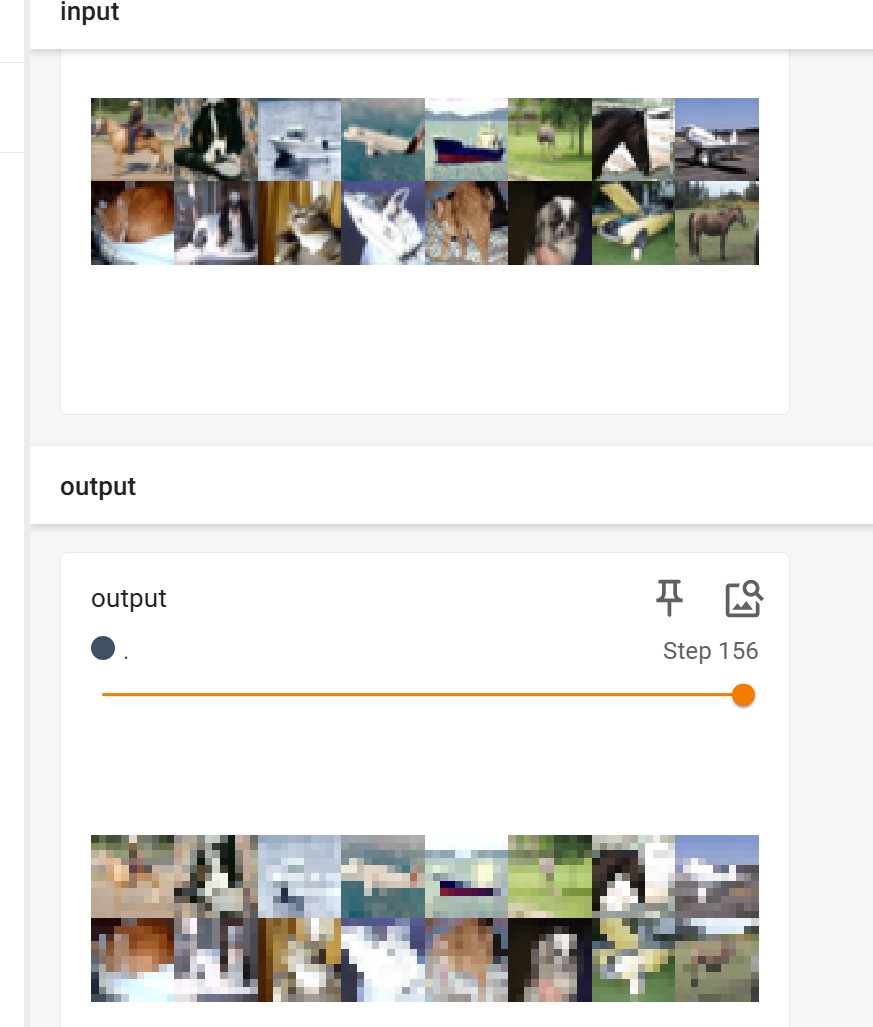

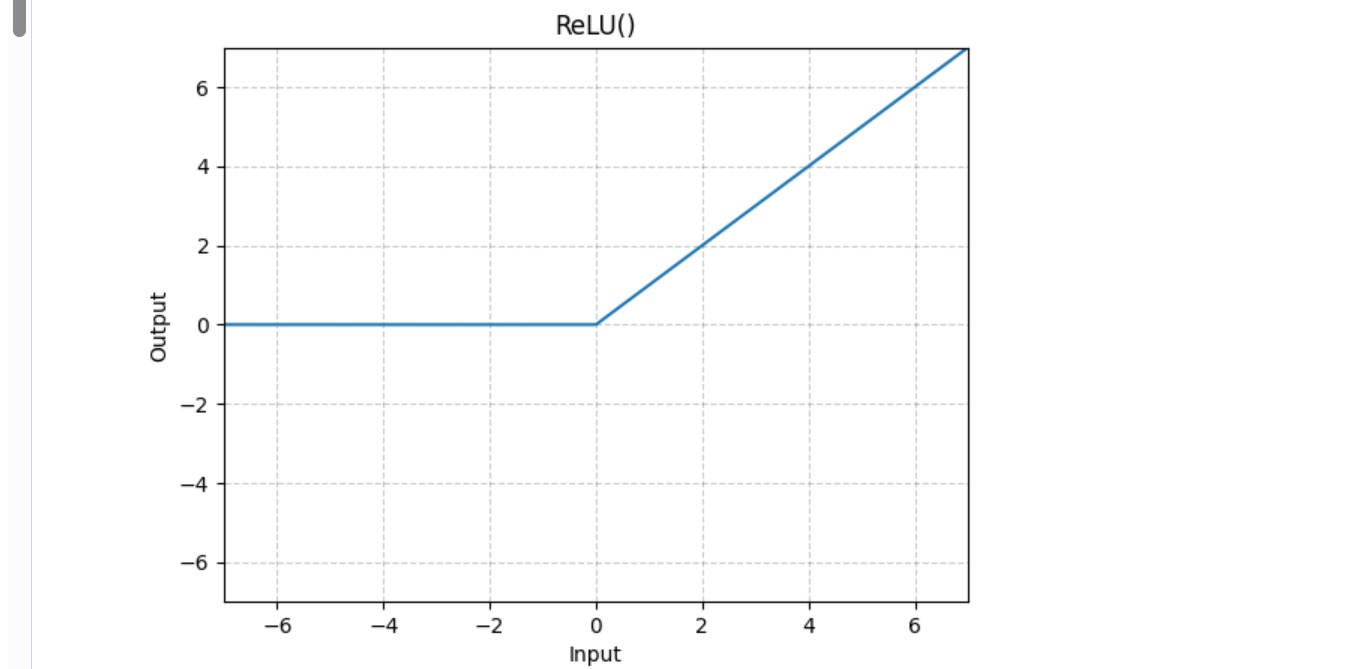
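You can check the effect of ceil_mode on the 5×5 example by hand. The pure-Python sketch below uses a hypothetical helper, `max_pool_manual`. As in MaxPool2d, the stride defaults to the kernel size:

```python
import math


def max_pool_manual(image, kernel_size, stride=None, ceil_mode=False):
    """Hypothetical helper: single-channel max pooling without padding."""
    stride = stride or kernel_size
    h, w = len(image), len(image[0])
    rounding = math.ceil if ceil_mode else math.floor
    out_h = rounding((h - kernel_size) / stride) + 1
    out_w = rounding((w - kernel_size) / stride) + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # With ceil_mode=True a window may run past the border; only existing pixels count
            window = [image[r][c]
                      for r in range(i * stride, min(i * stride + kernel_size, h))
                      for c in range(j * stride, min(j * stride + kernel_size, w))]
            row.append(max(window))
        output.append(row)
    return output


input_ = [[1, 2, 0, 3, 1],
          [0, 1, 2, 3, 1],
          [1, 2, 1, 0, 0],
          [5, 2, 3, 1, 1],
          [2, 1, 0, 1, 1]]
print(max_pool_manual(input_, 3, ceil_mode=True))   # [[2, 3], [5, 1]]
print(max_pool_manual(input_, 3, ceil_mode=False))  # [[2]]
```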

7.5 Non-linear activations

- ReLU:

python

import torch

from torch import nn

input = torch.tensor([[1, -0.5],

[-1, 3]])

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

self.relu1 = torch.nn.ReLU()

def forward(self, x):

x = self.relu1(x)

return x

model = Model()

output = model(input)

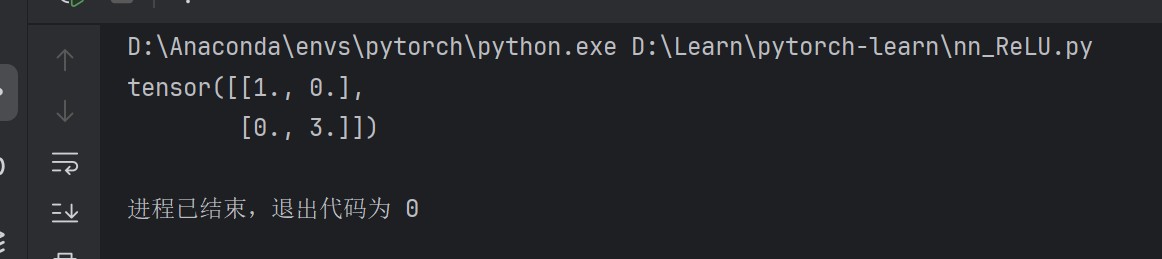

print(output)- 计算结果:

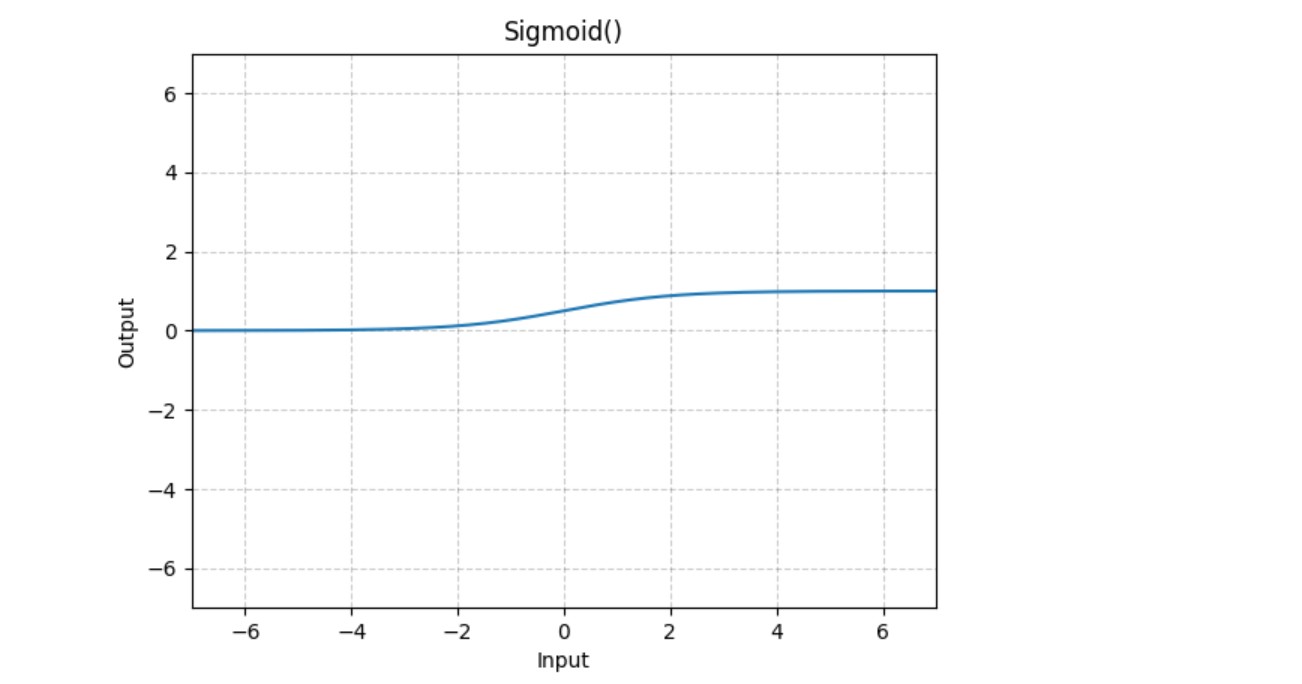

- Sigmoid:

```python
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.sigmoid1 = nn.Sigmoid()  # Sigmoid(x) = 1 / (1 + exp(-x))

    def forward(self, input):
        output = self.sigmoid1(input)
        return output


model = Model()
writer = SummaryWriter("logs")
step = 0
for data in dataloader:
    imgs, targets = data
    output = model(imgs)
    writer.add_images("input", imgs, step)
    writer.add_images("output", output, step)
    step += 1

writer.close()
```

- The activation result:

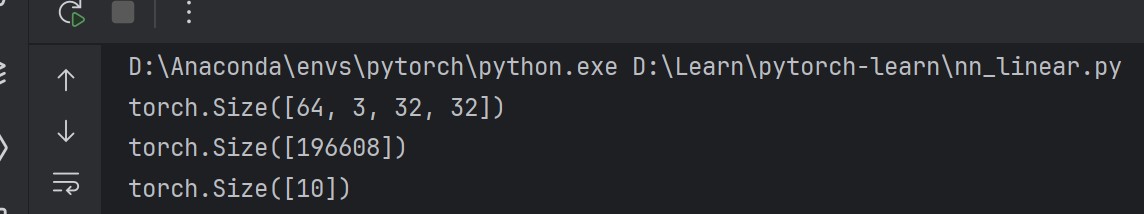
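Both activations are simple element-wise formulas: ReLU(x) = max(0, x) and Sigmoid(x) = 1 / (1 + e^(−x)). The pure-Python sketch below applies them to the 2×2 input from the ReLU example:

```python
import math


def relu(x):
    return max(0.0, float(x))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


data = [[1, -0.5],
        [-1, 3]]
print([[relu(v) for v in row] for row in data])  # [[1.0, 0.0], [0.0, 3.0]]
print([[round(sigmoid(v), 4) for v in row] for row in data])
```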

7.6 Linear layers

- Linear:

```python
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
# drop_last=True: 10000 is not divisible by 64, and the smaller last batch
# would not flatten to the 196608 values the Linear layer expects
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = nn.Linear(196608, 10)  # (196608,) -> (10,), 196608 = 64 * 3 * 32 * 32

    def forward(self, input):
        output = self.linear1(input)
        return output


model = Model()

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)    # torch.Size([64, 3, 32, 32])
    output = torch.flatten(imgs)  # flatten the whole batch into one 1-D vector
    print(output.shape)  # torch.Size([196608])
    output = model(output)
    print(output.shape)  # torch.Size([10])
```

- The output is as follows:
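Flattening the whole batch ties the Linear layer to a single batch size. The more usual pattern, sketched below with dummy data, keeps the batch dimension with `torch.flatten(imgs, start_dim=1)`. `nn.Flatten()` does the same, since its default is start_dim=1. Each image then becomes a vector of 3·32·32 = 3072 values:

```python
import torch
from torch import nn

imgs = torch.ones((64, 3, 32, 32))  # dummy batch shaped like one CIFAR10 batch

print(torch.flatten(imgs).shape)         # torch.Size([196608]): the batch is mixed in
flat = torch.flatten(imgs, start_dim=1)  # keep dim 0 (the batch)
print(flat.shape)                        # torch.Size([64, 3072])

linear = nn.Linear(3 * 32 * 32, 10)      # one 10-way output per image
out = linear(flat)
print(out.shape)                         # torch.Size([64, 10])

# The same layer also works for a smaller last batch, e.g. 16 images
last = linear(torch.flatten(torch.ones((16, 3, 32, 32)), start_dim=1))
print(last.shape)                        # torch.Size([16, 10])
```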

7.7 搭建网络和 Sequential

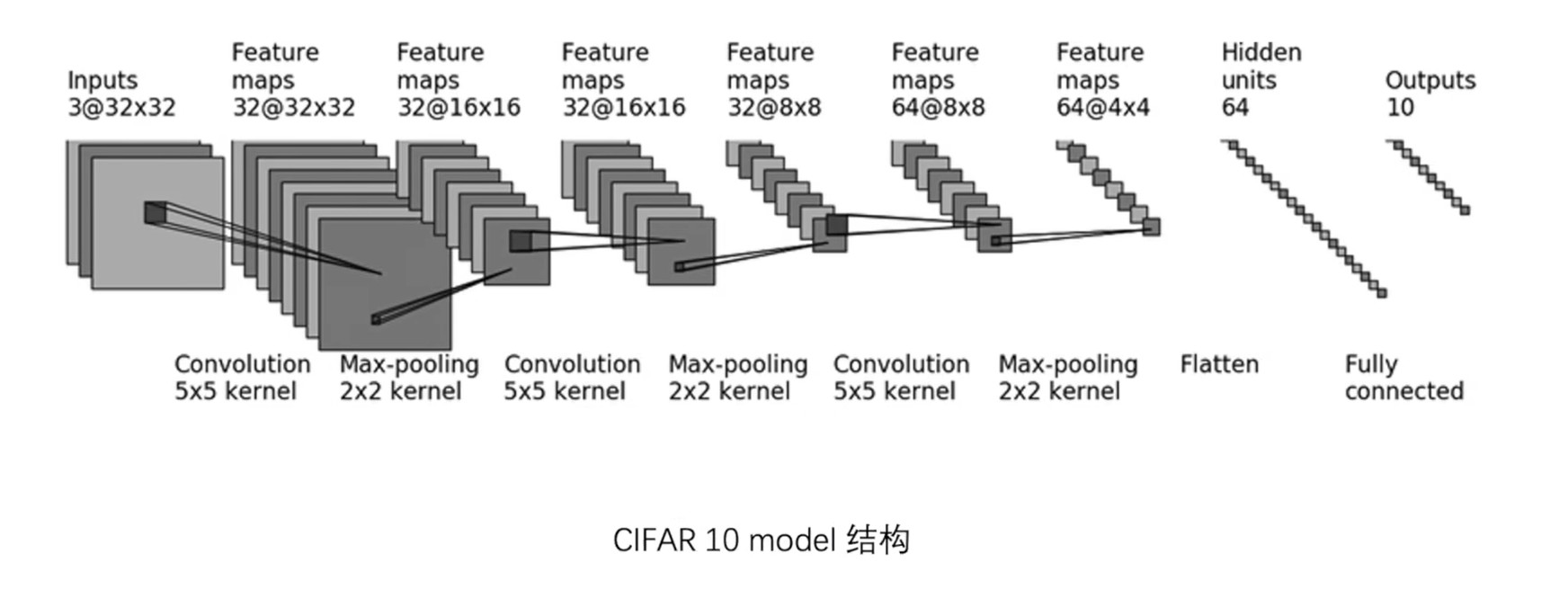

- CIFAR10 模型:

- 不用 Sequential 下:

```python
import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = Conv2d(3, 32, 5, padding=2)
        self.maxpool1 = MaxPool2d(2)
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.maxpool2 = MaxPool2d(2)
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.maxpool3 = MaxPool2d(2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)  # 64 channels * 4 * 4 = 1024
        self.linear2 = Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x


model = Model()
print(model)

# Sanity check with a dummy batch
input = torch.ones((64, 3, 32, 32))
output = model(input)
print(output.shape)  # torch.Size([64, 10])
```

- With Sequential:

python

import torch

from tensorflow.python.keras import Sequential

from torch import nn

from torch.nn import Conv2d, MaxPool2d, Flatten, Linear

class Model(nn.Module):

def __init__(self):

super().__init__()

self.model = Sequential(

Conv2d(3, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self, x):

x = self.model(x)

return x

model = Model()

print(model)

# 检验

input = torch.ones((64, 3, 32, 32))

output = model(input)

print(output.shape)

# 流程图

writer = SummaryWriter("logs")

writer.add_graph(model, input)

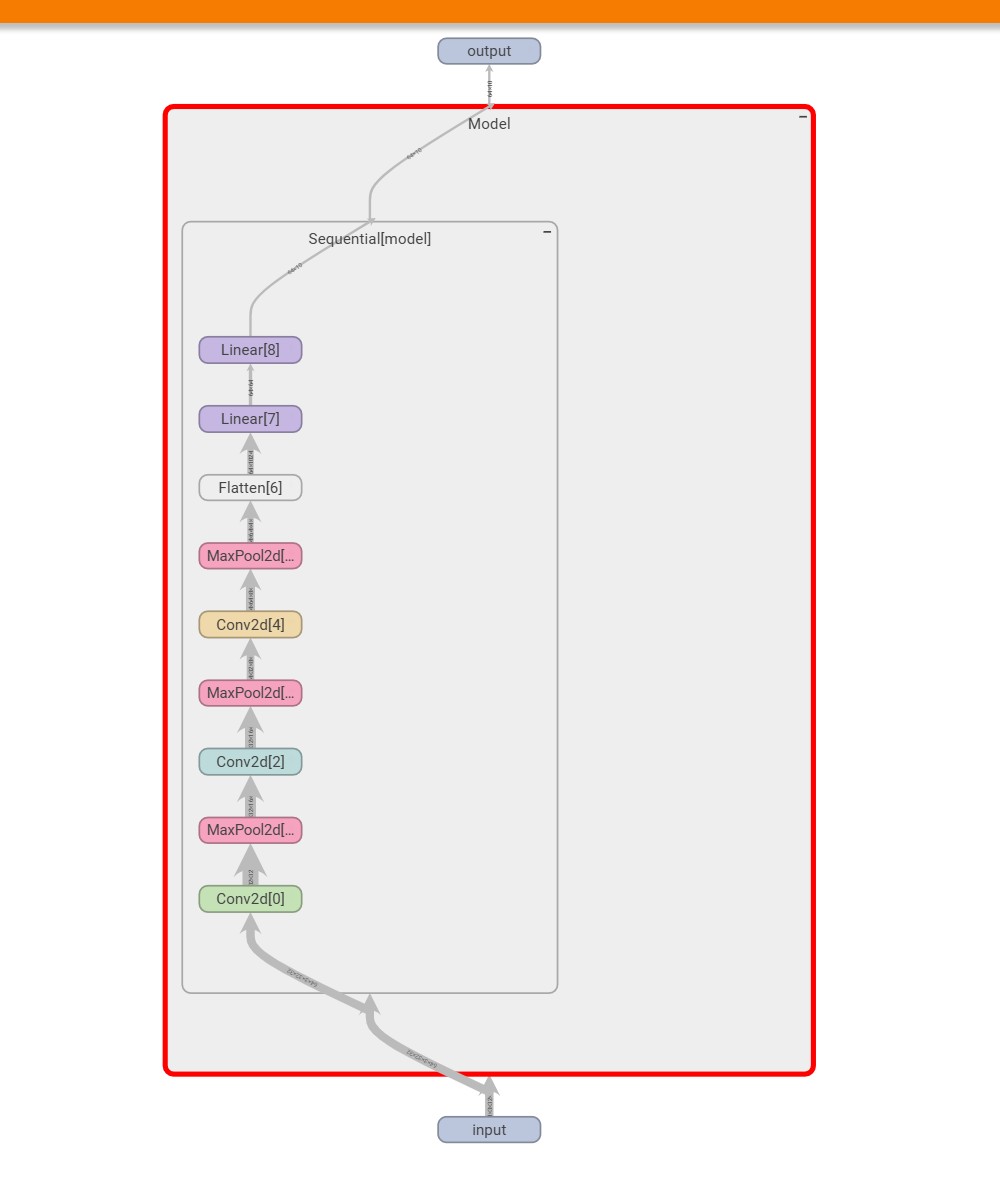

writer.close()- 流程图如下:
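Where does `Linear(1024, 64)` come from? The sketch below traces the spatial size through the layers with the Conv2d/MaxPool2d size formula, using a hypothetical helper named `out_size`. Each 5×5 convolution with padding=2 keeps the size and each MaxPool2d(2) halves it. So 32 → 16 → 8 → 4, and Flatten gives 64 × 4 × 4 = 1024:

```python
def out_size(size, kernel_size, stride, padding=0):
    """Hypothetical helper: output size along one dimension (dilation 1, floor rounding)."""
    return (size + 2 * padding - (kernel_size - 1) - 1) // stride + 1


size, channels = 32, 3
layers = [("conv", 32), ("pool", None), ("conv", 32), ("pool", None), ("conv", 64), ("pool", None)]
for kind, out_channels in layers:
    if kind == "conv":
        size = out_size(size, kernel_size=5, stride=1, padding=2)  # Conv2d(c_in, c_out, 5, padding=2)
        channels = out_channels
    else:
        size = out_size(size, kernel_size=2, stride=2)  # MaxPool2d(2): stride = kernel_size
    print(kind, channels, size, size)

print("flatten:", channels * size * size)  # flatten: 1024
```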

7.8 Loss functions and backpropagation

- Common loss functions: MSELoss (mean squared error, for regression) and CrossEntropyLoss (cross-entropy loss, for classification);
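The snippet below works out both losses by hand in pure Python, with made-up numbers. MSE averages the squared differences. For one sample with raw scores x and true class c, cross-entropy is −x[c] + log(Σ_j exp(x[j])). CrossEntropyLoss computes exactly this from unnormalized scores, because it applies log-softmax internally:

```python
import math

# MSELoss with the default mean reduction
preds = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]
mse = sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)
print(mse)  # 1.333... = (0 + 0 + 4) / 3

# CrossEntropyLoss for one sample: scores for 3 classes, true class index 1
x = [0.1, 0.2, 0.3]
c = 1
ce = -x[c] + math.log(sum(math.exp(v) for v in x))
print(round(ce, 4))  # 1.1019
```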

python

import torch

import torchvision.datasets

from torch import nn

from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

dataloader = DataLoader(dataset, batch_size=64)

class Model(nn.Module):

def __init__(self):

super().__init__()

self.model = Sequential(

Conv2d(3, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self, x):

x = self.model(x)

return x

model = Model()

criterion = nn.CrossEntropyLoss() # 计算交叉熵损失

for data in dataloader:

imgs, targets = data

output = model(imgs)

loss = criterion(imgs, output)

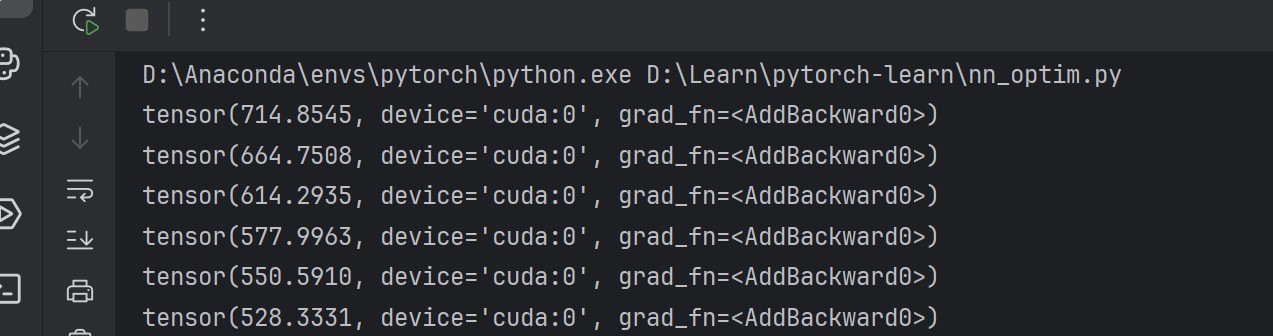

loss.backward()7.9 优化器

- Optimizer: here the model runs on the GPU;

```python
import torch
import torchvision
from torch import nn, optim
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=32)

# Use the GPU when available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


model = Model().to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        # Forward pass
        imgs, targets = data
        imgs, targets = imgs.to(device), targets.to(device)
        output = model(imgs)
        loss = criterion(output, targets)
        # Zero the gradients + backward pass
        optimizer.zero_grad()
        loss.backward()
        # Update the parameters
        optimizer.step()
        running_loss += loss.item()  # .item() gives a Python float, so the autograd graph is not kept alive
    print(running_loss)
```

- How the loss changes:
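To see what zero_grad, backward and step do, the snippet below runs one optimizer step in isolation on a single made-up parameter. For plain SGD the update is w ← w − lr · ∂loss/∂w:

```python
import torch

w = torch.tensor([1.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)

loss = ((w - 3) ** 2).sum()  # d(loss)/dw = 2 * (w - 3) = -4 at w = 1
optimizer.zero_grad()         # clear gradients left over from earlier steps
loss.backward()               # fills w.grad
print(w.grad)                 # tensor([-4.])
optimizer.step()              # w = 1 - 0.1 * (-4) = 1.4
print(w)                      # approximately 1.4
```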

8. 网络模型

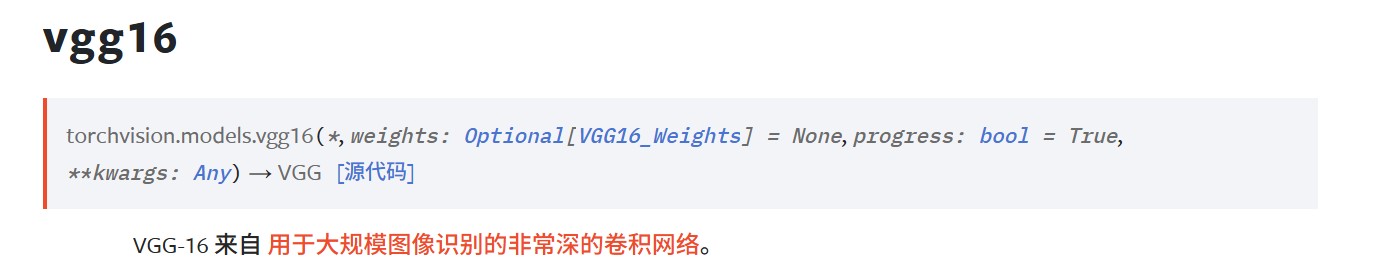

8.1 vgg16 模型

- 权重表示是否经过预训练:

- 这个模型预训练的数据集为 ImageNet,但是太大了,于是选择直接下载模型;

python

import torchvision.datasets

from torchvision.models import VGG16_Weights

# train_data = torchvision.datasets.ImageNet("./dataset", split="train",

# transform=torchvision.transforms.ToTensor())

vgg16_true = torchvision.models.vgg16(weights = VGG16_Weights.DEFAULT) # 预训练好了的模型

vgg16_false = torchvision.models.vgg16(weights = None)

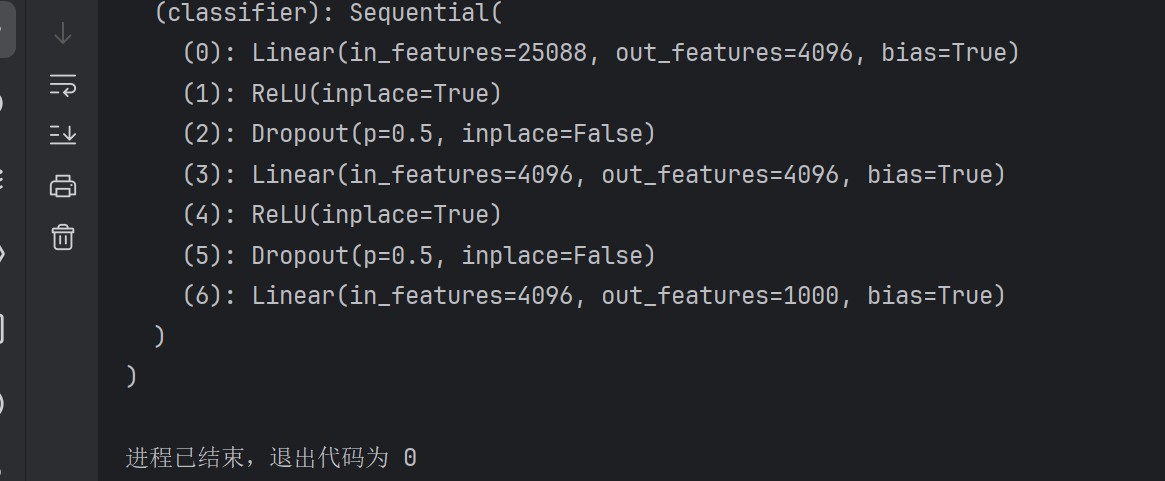

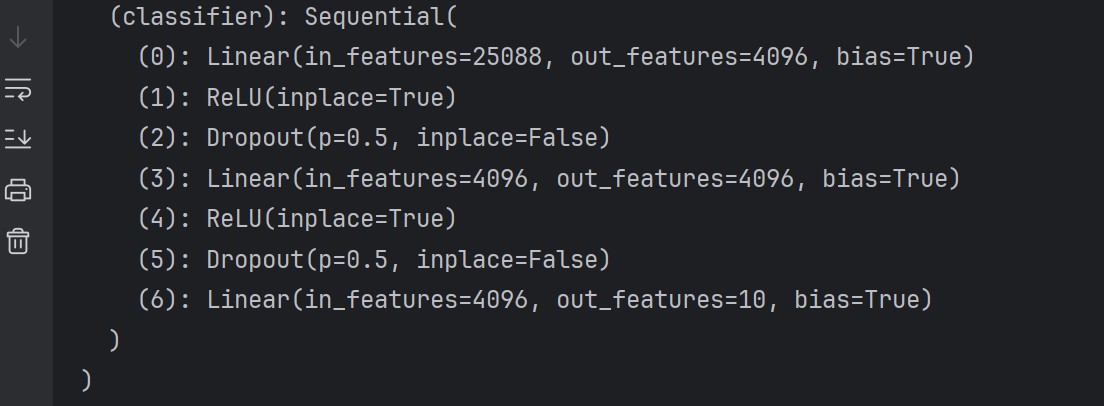

print(vgg16_true)- 可以看到这个模型是一个分类器,最后一层特征数为 1000:

8.2 Modifying a model

- Change the number of output features of the last layer to 10:

```python
import torchvision
from torch import nn
from torchvision.models import VGG16_Weights

# train_data = torchvision.datasets.ImageNet("./dataset", split="train",
#                                            transform=torchvision.transforms.ToTensor())

vgg16_true = torchvision.models.vgg16(weights=VGG16_Weights.DEFAULT)  # pretrained model
vgg16_false = torchvision.models.vgg16(weights=None)

# Add a layer at the outer level of the model
# (it is registered as a child module, but VGG's forward() never calls it, so the output is unchanged)
vgg16_true.add_module("add_linear", nn.Linear(1000, 10))
print(vgg16_true)

# Add a layer at the end of the model's classifier (an nn.Sequential, so it is used in forward)
vgg16_true.classifier.add_module("add_linear", nn.Linear(1000, 10))
print(vgg16_true)

# Replace a layer inside the model's classifier
vgg16_false.classifier[6] = nn.Linear(4096, 10)
print(vgg16_false)
```

- Results:
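The "replace" approach works because `classifier` is an `nn.Sequential`, which supports indexing and item assignment. The sketch below applies it to a small stand-in classifier so nothing needs to be downloaded. The layer sizes are made up; in VGG16 the last layer is `classifier[6]`, 4096 → 1000:

```python
import torch
from torch import nn

# Small stand-in for a pretrained classifier head with 1000 outputs
classifier = nn.Sequential(
    nn.Linear(128, 64),
    nn.ReLU(),
    nn.Linear(64, 1000),
)

# Replace the last layer, reusing its input size so the shapes still line up
in_features = classifier[-1].in_features  # 64
classifier[-1] = nn.Linear(in_features, 10)

out = classifier(torch.ones(2, 128))
print(out.shape)  # torch.Size([2, 10])
```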

8.3 Saving a model

- Two ways:

```python
import torch
import torchvision

vgg16 = torchvision.models.vgg16()

# Method 1: model structure + model parameters
torch.save(vgg16, "vgg16_method1_pth")

# Method 2: model parameters only (officially recommended)
torch.save(vgg16.state_dict(), "vgg16_method2_pth")
```

8.4 Loading a model

- Two ways (matching the two ways of saving):

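```python
import torch
import torchvision

# Method 1 -> load the whole model (weights_only=False allows unpickling the full model object)
model = torch.load("vgg16_method1_pth", weights_only=False)
print(model)

# Method 2 -> build the model structure first, then load the parameters into it
vgg16 = torchvision.models.vgg16()
vgg16.load_state_dict(torch.load("vgg16_method2_pth"))
print(vgg16)

# Note: for method 1 with your own model class, the class definition must be available
# (defined or imported) in the loading script; you do not need to instantiate it
```

9. Model training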

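9.1 Training and testing a CIFAR10 model

- Model module (saved as CIFAR10_model.py, the file the training script imports from):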

```python
import torch
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear


# Build the neural network
class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


if __name__ == '__main__':
    # Runs only when this file is executed directly, not when it is imported
    model = Model()
    input = torch.ones((64, 3, 32, 32))
    output = model(input)
    print(output.shape)  # torch.Size([64, 10])
```

- Training module:

python

import torch

import torchvision.datasets

from torch import optim

from torch.nn import CrossEntropyLoss

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

from CIFAR10_model import *

# 准备数据集

train_data = torchvision.datasets.CIFAR10("./dataset", train=True, transform=torchvision.transforms.ToTensor(),

download=True)

test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

# 数据集大小

train_data_size = len(train_data)

test_data_size = len(test_data)

print(f"训练数据集的长度为{train_data_size}")

print(f"测试数据集的长度为{test_data_size}")

# 利用 DataLoader 加载数据集

train_dataloader = DataLoader(train_data, batch_size=64)

test_dataloader = DataLoader(test_data, batch_size=64)

# 创建网络模型

model = Model()

# 损失函数

criterion = CrossEntropyLoss()

# 优化器

learning_rate = 1e-2 # 0.01

optimizer = optim.SGD(model.parameters(), lr=learning_rate)

# 设置训练网络的一些参数

# 记录训练的次数

total_train_step = 0

# 记录测试的次数

total_test_step = 0

# 训练轮数

epoch = 10

# 添加 tensorboard

writer = SummaryWriter("logs")

for i in range(epoch):

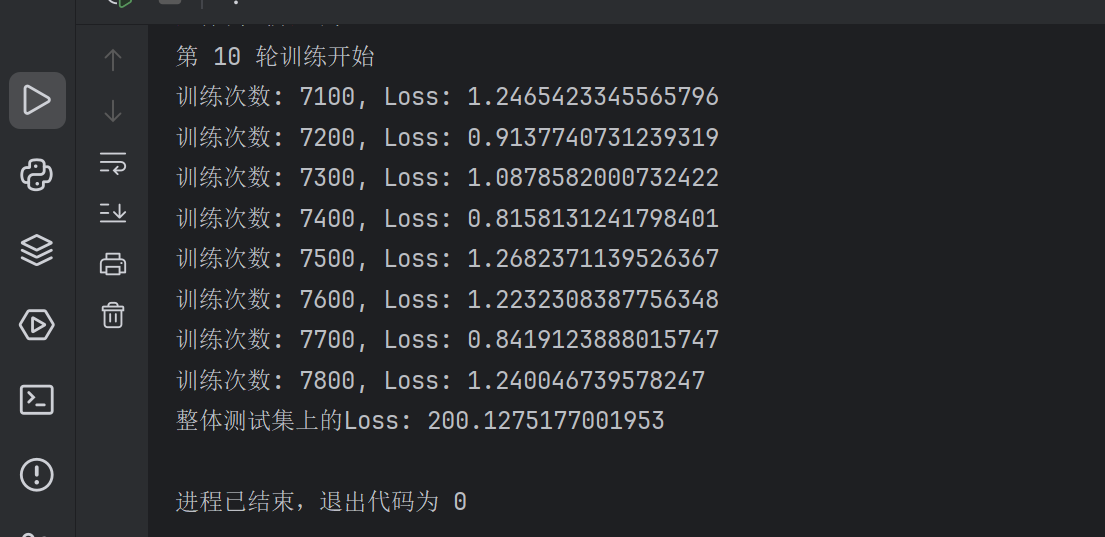

print(f"第 {i+1} 轮训练开始")

# 训练步骤开始

model.train()

for data in train_dataloader:

imgs, targets = data

output = model(imgs)

loss = criterion(output, targets)

# 优化器优化模型

optimizer.zero_grad()

loss.backward()

optimizer.step()

total_train_step += 1

if total_train_step % 100 == 0:

print(f"训练次数: {total_train_step}, Loss: {loss.item()}")

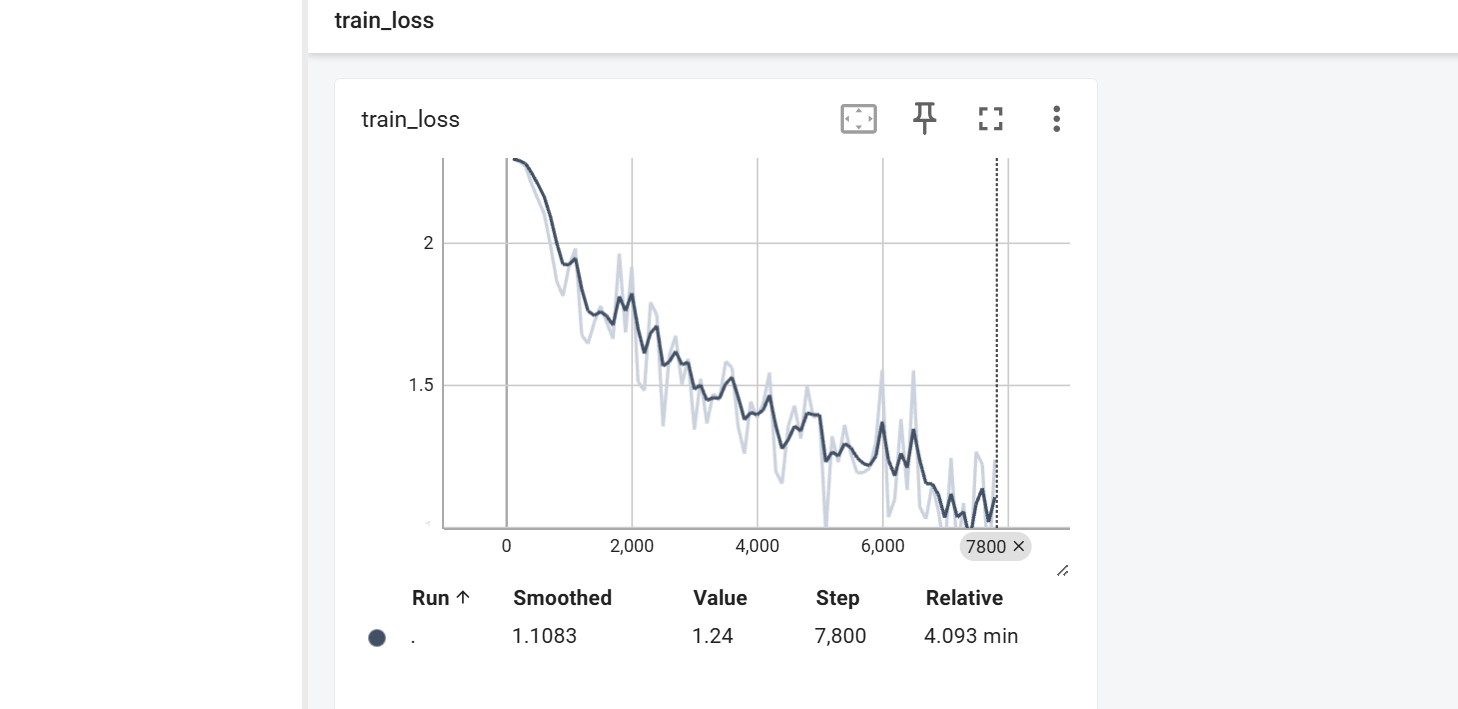

writer.add_scalar("train_loss", loss.item(), global_step=total_train_step)

# 测试步骤开始

model.eval()

total_test_loss = 0

with torch.no_grad():

for data in test_dataloader:

imgs, targets = data

output = model(imgs)

loss = criterion(output, targets)

total_test_loss += loss

print(f"整体测试集上的Loss: {total_test_loss}")

writer.add_scalar("test_loss", total_test_loss, global_step=total_test_step)

total_test_step += 1

# 保存每一轮训练的模型

torch.save(model, f"model_{i}.pth")

print("模型已保存")

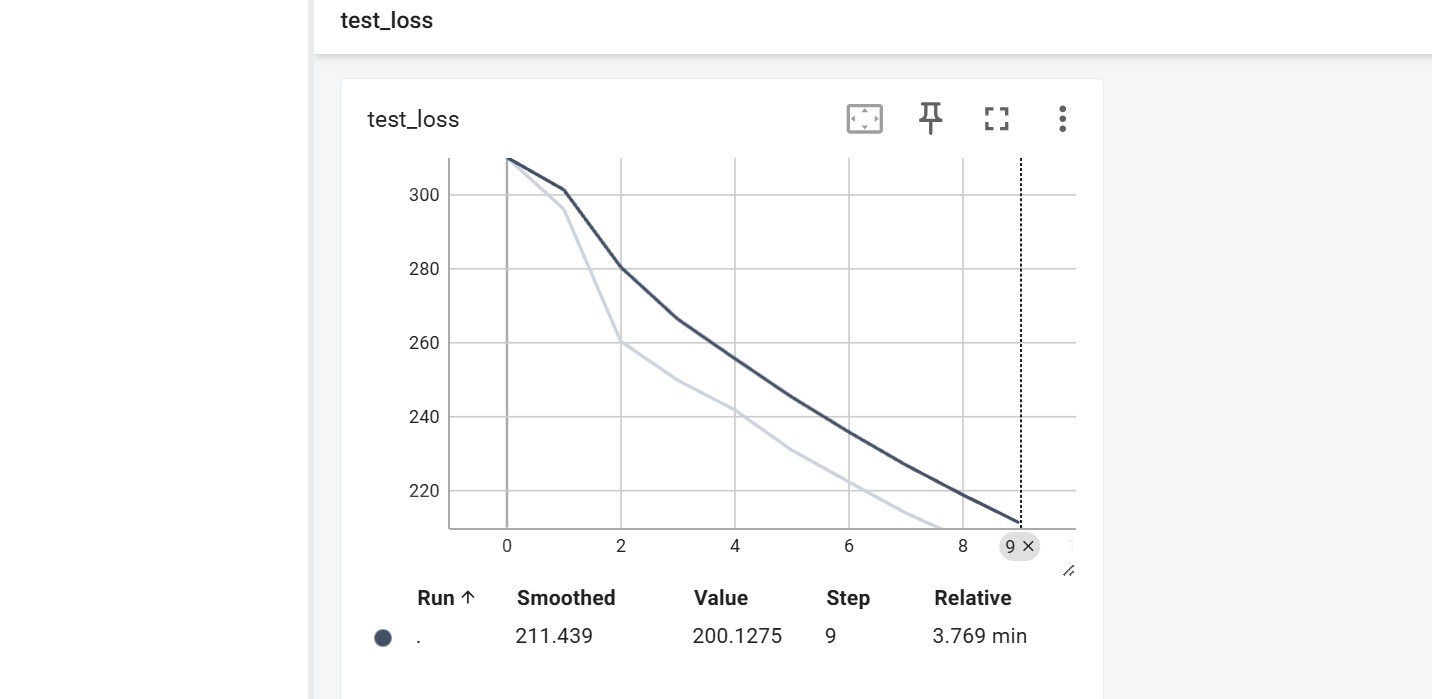

writer.close()- 训练结果:

- train_loss 与 test_loss:

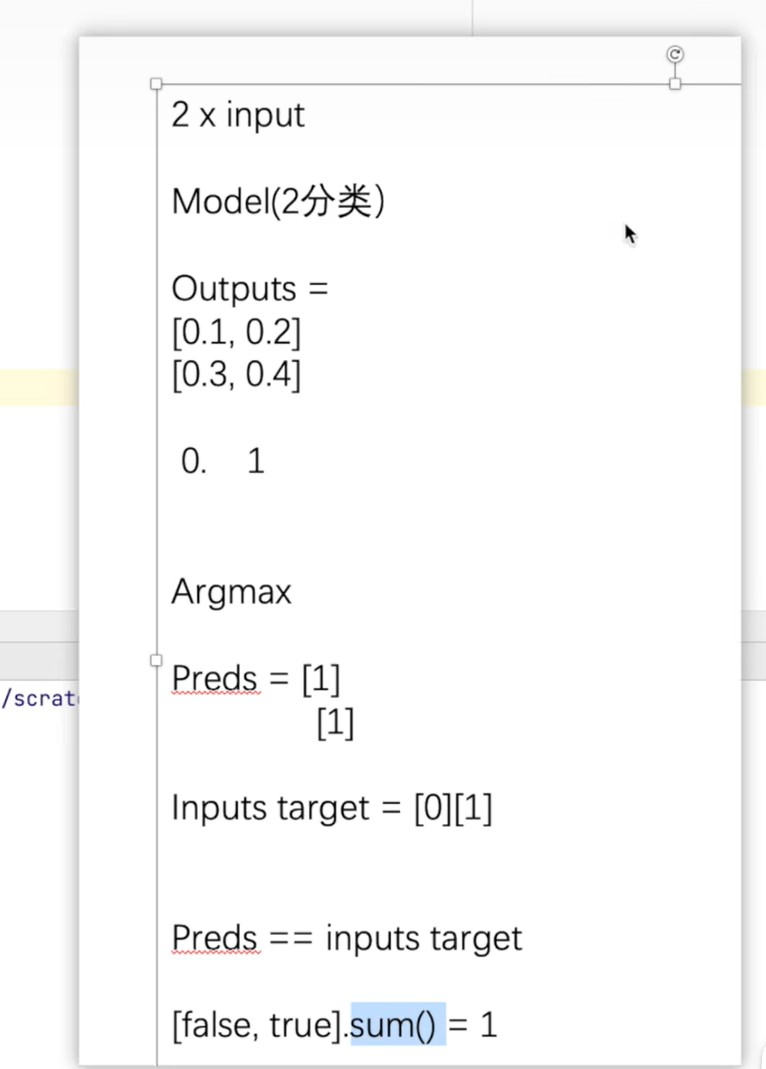

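9.2 Accuracy for classification

- For each sample, the model outputs one score per class (raw scores, not normalized probabilities). argmax(1) returns, for each row, the index of the largest score, which is the predicted class. Comparing these indices with the targets and summing the matches gives the number of correct predictions.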

python

import torch

outputs = torch.tensor([[0.1, 0.2],

[0.3, 0.4]])

print(outputs.argmax(1))

preds = outputs.argmax(1)

targets = torch.tensor([0, 1])

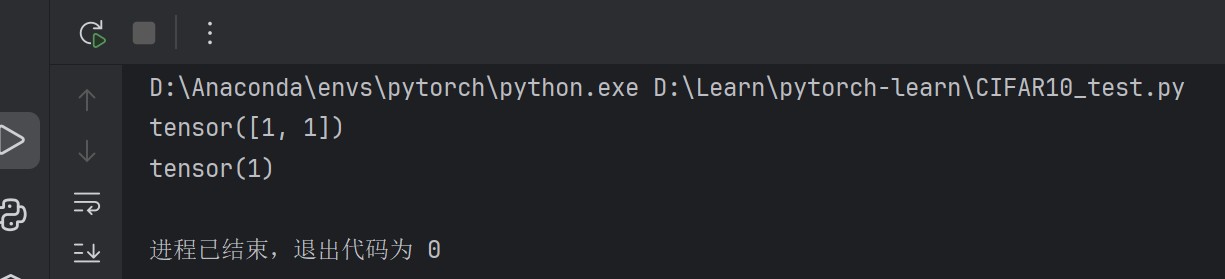

print((preds == targets).sum())- 结果如下:

- 代码修改如下:

python

import torch

import torchvision.datasets

from torch import optim

from torch.nn import CrossEntropyLoss

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

from CIFAR10_model import *

# 准备数据集

train_data = torchvision.datasets.CIFAR10("./dataset", train=True, transform=torchvision.transforms.ToTensor(),

download=True)

test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

# 数据集大小

train_data_size = len(train_data)

test_data_size = len(test_data)

print(f"训练数据集的长度为{train_data_size}")

print(f"测试数据集的长度为{test_data_size}")

# 利用 DataLoader 加载数据集

train_dataloader = DataLoader(train_data, batch_size=64)

test_dataloader = DataLoader(test_data, batch_size=64)

# 创建网络模型

model = Model()

# 损失函数

criterion = CrossEntropyLoss()

# 优化器

learning_rate = 1e-2 # 0.01

optimizer = optim.SGD(model.parameters(), lr=learning_rate)

# 设置训练网络的一些参数

# 记录训练的次数

total_train_step = 0

# 记录测试的次数

total_test_step = 0

# 训练轮数

epoch = 10

# 添加 tensorboard

writer = SummaryWriter("logs")

for i in range(epoch):

print(f"第 {i+1} 轮训练开始")

# 训练步骤开始

model.train()

for data in train_dataloader:

imgs, targets = data

output = model(imgs)

loss = criterion(output, targets)

# 优化器优化模型

optimizer.zero_grad()

loss.backward()

optimizer.step()

total_train_step += 1

if total_train_step % 100 == 0:

print(f"训练次数: {total_train_step}, Loss: {loss.item()}")

writer.add_scalar("train_loss", loss.item(), global_step=total_train_step)

# 测试步骤开始

model.eval()

total_test_loss = 0

total_accuracy = 0

with torch.no_grad():

for data in test_dataloader:

imgs, targets = data

output = model(imgs)

loss = criterion(output, targets)

total_test_loss += loss

# 计算准确率

total_accuracy += (output.argmax(1) == targets).sum()

print(f"整体测试集上的Loss: {total_test_loss}")

print(f"整体测试集上的准确率: {total_accuracy / test_data_size}")

writer.add_scalar("test_loss", total_test_loss, global_step=total_test_step)

writer.add_scalar("test_accuracy", total_accuracy / test_data_size, global_step=total_test_step)

total_test_step += 1

# 保存每一轮训练的模型

torch.save(model, f"model_{i}.pth")

print("模型已保存")

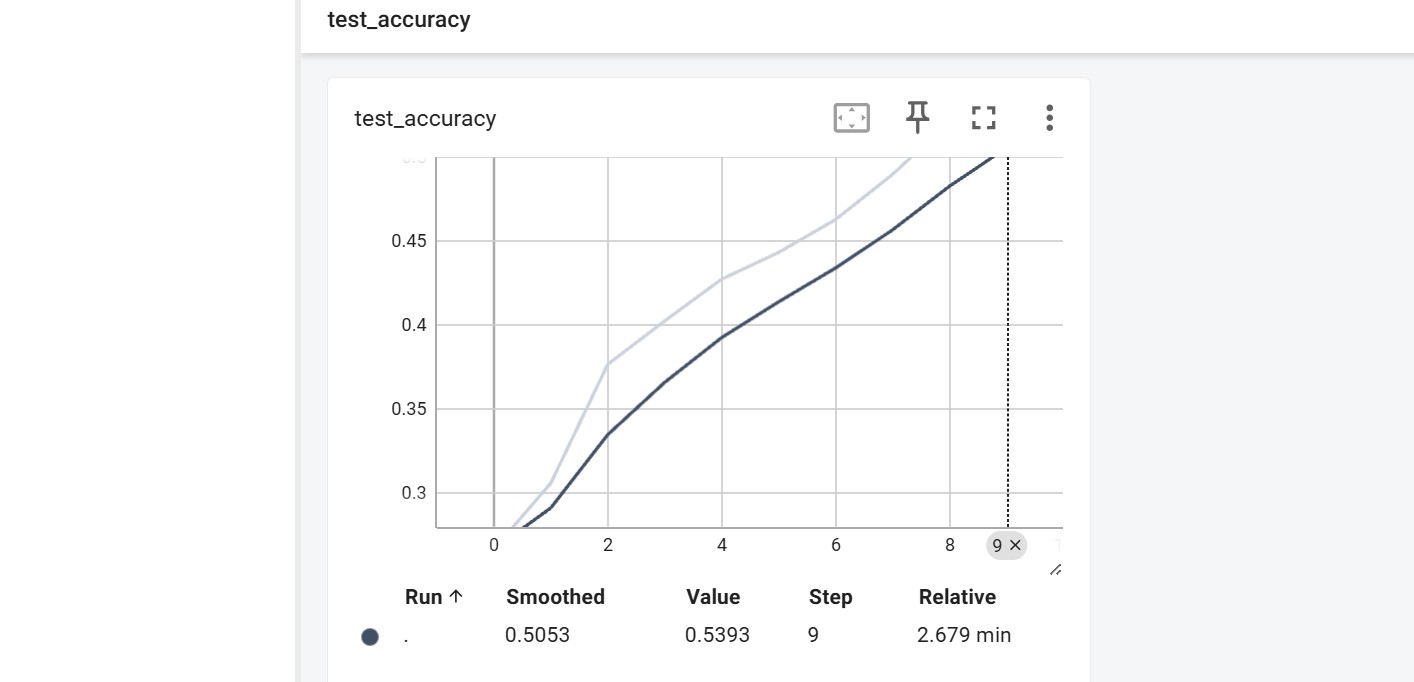

writer.close()- 准确率结果如下:

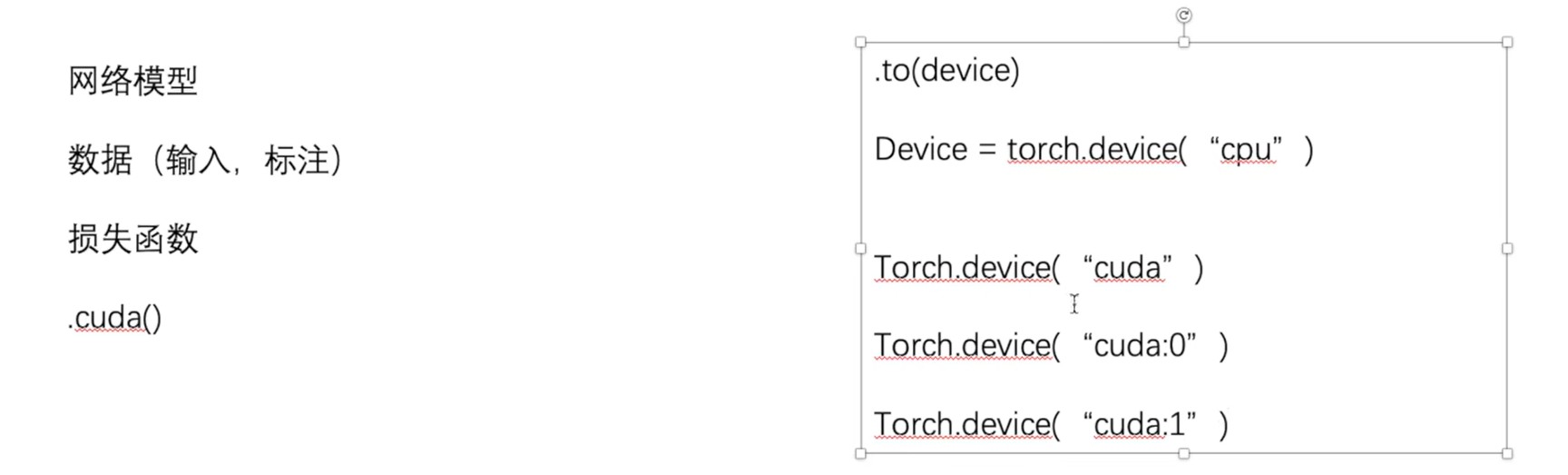

9.3 Training on the GPU
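Both methods come down to putting the model, the loss function and every batch on the same device. Below is a minimal sketch of choosing a device that falls back to the CPU when no CUDA GPU is present; the tiny model and data are made up:

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(4, 2).to(device)  # for modules, .to() moves the parameters in place
x = torch.ones(3, 4).to(device)     # for tensors, .to() returns a copy: reassign it
out = model(x)
print(device, out.device, out.shape)
```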

- Method 1: call .cuda() on the model, the loss function and the data (when a GPU is available):

```python
import time

import torch
import torchvision
from torch import optim, nn
from torch.nn import CrossEntropyLoss, Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10("./dataset", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset sizes
train_data_size = len(train_data)
test_data_size = len(test_data)
print(f"Training set size: {train_data_size}")
print(f"Test set size: {test_data_size}")

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)


# Create the network
class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


model = Model()
if torch.cuda.is_available():
    model = model.cuda()

# Loss function
criterion = CrossEntropyLoss()
if torch.cuda.is_available():
    criterion = criterion.cuda()

# Optimizer
learning_rate = 1e-2  # 0.01
optimizer = optim.SGD(model.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of test rounds so far
epoch = 10            # number of epochs

# TensorBoard
writer = SummaryWriter("logs")
start_time = time.time()

for i in range(epoch):
    print(f"Epoch {i+1} training starts")

    # Training
    model.train()
    for data in train_dataloader:
        imgs, targets = data
        if torch.cuda.is_available():
            imgs = imgs.cuda()
            targets = targets.cuda()
        output = model(imgs)
        loss = criterion(output, targets)

        # Optimize the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(end_time - start_time)  # seconds elapsed since training started
            print(f"Training step: {total_train_step}, Loss: {loss.item()}")
            writer.add_scalar("train_loss", loss.item(), global_step=total_train_step)

    # Testing
    model.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            if torch.cuda.is_available():
                imgs = imgs.cuda()
                targets = targets.cuda()
            output = model(imgs)
            loss = criterion(output, targets)
            total_test_loss += loss.item()
            # Accuracy: count the correct predictions in this batch
            total_accuracy += (output.argmax(1) == targets).sum().item()

    print(f"Loss on the whole test set: {total_test_loss}")
    print(f"Accuracy on the whole test set: {total_accuracy / test_data_size}")
    writer.add_scalar("test_loss", total_test_loss, global_step=total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, global_step=total_test_step)
    total_test_step += 1

    # Save the model after every epoch
    torch.save(model, f"model_{i}.pth")
    print("Model saved")

writer.close()
```

- Method 2: define a device, then move the model, the loss function and the data to it with .to(device):

python

import torch

import torchvision.datasets

from torch import optim, nn

from torch.nn import CrossEntropyLoss, Sequential, Conv2d, MaxPool2d, Flatten, Linear

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

import time

# 定义训练的设备

device = torch.device("cuda")

# 准备数据集

train_data = torchvision.datasets.CIFAR10("./dataset", train=True, transform=torchvision.transforms.ToTensor(),

download=True)

test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

# 数据集大小

train_data_size = len(train_data)

test_data_size = len(test_data)

print(f"训练数据集的长度为{train_data_size}")

print(f"测试数据集的长度为{test_data_size}")

# 利用 DataLoader 加载数据集

train_dataloader = DataLoader(train_data, batch_size=64)

test_dataloader = DataLoader(test_data, batch_size=64)

# 创建网络模型

class Model(nn.Module):

def __init__(self):

super().__init__()

self.model = Sequential(

Conv2d(3, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self, x):

x = self.model(x)

return x

model = Model()

model = model.to(device)

# 损失函数

criterion = CrossEntropyLoss()

criterion = criterion.to(device)

# 优化器

learning_rate = 1e-2 # 0.01

optimizer = optim.SGD(model.parameters(), lr=learning_rate)

# 设置训练网络的一些参数

# 记录训练的次数

total_train_step = 0

# 记录测试的次数

total_test_step = 0

# 训练轮数

epoch = 10

# 添加 tensorboard

writer = SummaryWriter("logs")

start_time = time.time()

for i in range(epoch):

print(f"第 {i+1} 轮训练开始")

# 训练步骤开始

model.train()

for data in train_dataloader:

imgs, targets = data

imgs = imgs.to(device)

targets = targets.to(device)

output = model(imgs)

loss = criterion(output, targets)

# 优化器优化模型

optimizer.zero_grad()

loss.backward()

optimizer.step()

total_train_step += 1

if total_train_step % 100 == 0:

end_time = time.time()

print(end_time - start_time) # 计算一轮训练时间

print(f"训练次数: {total_train_step}, Loss: {loss.item()}")

writer.add_scalar("train_loss", loss.item(), global_step=total_train_step)

# 测试步骤开始

model.eval()

total_test_loss = 0

total_accuracy = 0

with torch.no_grad():

for data in test_dataloader:

imgs, targets = data

imgs = imgs.to(device)

targets = targets.to(device)

output = model(imgs)

loss = criterion(output, targets)

total_test_loss += loss

# 计算准确率

total_accuracy += (output.argmax(1) == targets).sum()

print(f"整体测试集上的Loss: {total_test_loss}")

print(f"整体测试集上的准确率: {total_accuracy / test_data_size}")

writer.add_scalar("test_loss", total_test_loss, global_step=total_test_step)

writer.add_scalar("test_accuracy", total_accuracy / test_data_size, global_step=total_test_step)

total_test_step += 1

# 保存每一轮训练的模型

torch.save(model, f"model_{i}.pth")

print("模型已保存")

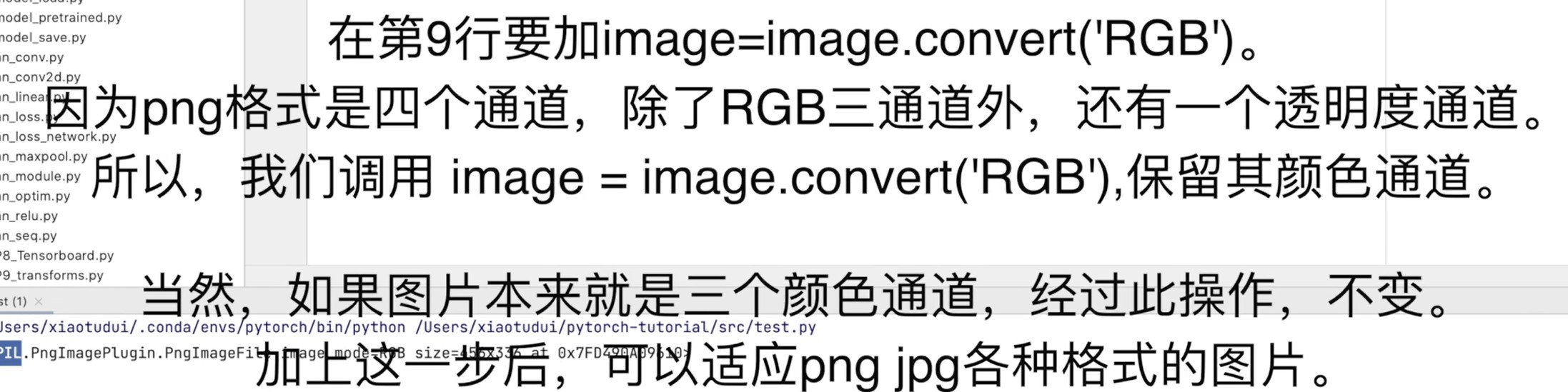

writer.close()9.4 模型验证

- Full model validation (testing, demo): take an already trained model and give it new input;

- Using the model trained on CIFAR10, test it on a picture of a dog:

```python
import torch
import torchvision
from PIL import Image
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear

# Load the image to classify and convert it to RGB
# (e.g. a PNG may have 4 RGBA channels; convert() keeps the 3 RGB channels)
image_path = "./images/dog.jpg"
image = Image.open(image_path)
image = image.convert("RGB")
print(image)

# The CIFAR10 model expects 32x32 input images
transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                            torchvision.transforms.ToTensor()])
image = transform(image)
print(image.shape)  # torch.Size([3, 32, 32])


# Network definition: the class must be available so torch.load can rebuild the saved model
class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# map_location: a model trained on the GPU is loaded onto the CPU;
# weights_only=False: the file stores the whole network (structure + weights), not just the weights
model = torch.load("model_29_gpu.pth", map_location=torch.device('cpu'), weights_only=False)

# The model expects a batch dimension: (C, H, W) -> (batch_size, C, H, W)
image = torch.reshape(image, (1, 3, 32, 32))
model.eval()
with torch.no_grad():
    output = model(image)

# Print the scores and the class with the highest score
print(output)
print(output.argmax(1))
```
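`output.argmax(1)` gives a class index; to get a readable name, index into the CIFAR10 class list. The sketch below uses made-up scores. The class list assumes the standard CIFAR10 order, as in `train_set.classes`:

```python
import torch

classes = ['airplane', 'automobile', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck']

image = torch.zeros(3, 32, 32)
batch = image.unsqueeze(0)  # same as torch.reshape(image, (1, 3, 32, 32))
print(batch.shape)          # torch.Size([1, 3, 32, 32])

output = torch.tensor([[0.1, -1.2, 0.3, 1.5, 0.0, 4.2, 0.7, 0.9, -0.4, 0.2]])  # made-up scores
pred = output.argmax(1).item()
print(pred, classes[pred])  # 5 dog
```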