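Preface

Getting the version dependencies between CUDA, cuDNN, and TensorRT right is essential, but different working environments may need different version combinations. This article uses symbolic links to switch freely between installed versions.

System information

Operating system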

- lsb_release

bash

LSB Version: core-11.1.0ubuntu4-noarch:security-11.1.0ubuntu4-noarch
Distributor ID: Ubuntu
Description: Ubuntu 22.04.5 LTS
Release: 22.04
Codename: jammy

- hostnamectl

bash

Static hostname: msi
Icon name: computer-desktop
Chassis: desktop
Machine ID: 0905fc3742014849a8f6e66a18eec86a
Boot ID: 946b89d00b014454b09cbb0f267b287a
Operating System: Ubuntu 22.04.5 LTS
Kernel: Linux 6.8.0-85-generic
Architecture: x86-64
Hardware Vendor: Micro-Star International Co., Ltd.
Hardware Model: MS-7D25

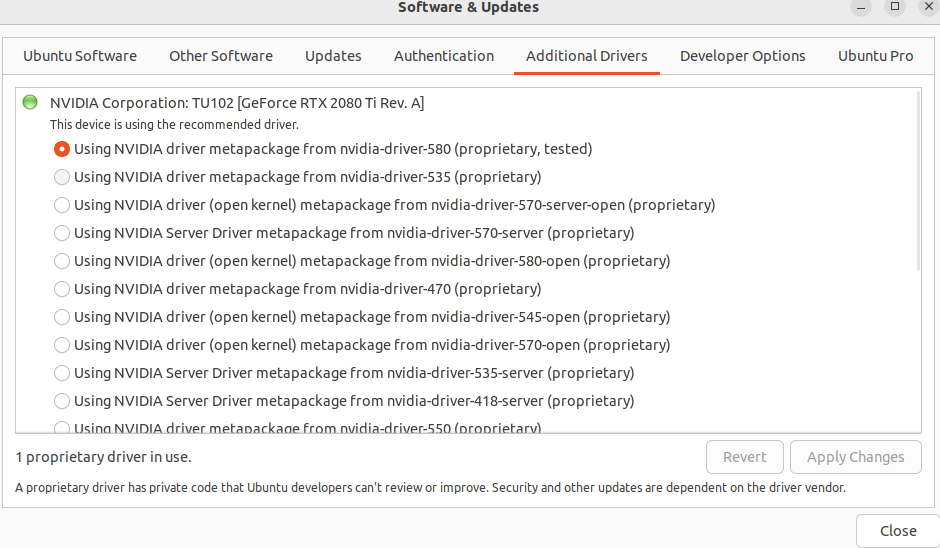

GPU driver

- Check the drivers the system recommends (pick the one marked recommended)

bash

ubuntu-drivers devices

== /sys/devices/pci0000:00/0000:00:01.0/0000:01:00.0 ==
modalias : pci:v000010DEd00001E07sv000019DAsd00001503bc03sc00i00
vendor   : NVIDIA Corporation
model    : TU102 [GeForce RTX 2080 Ti Rev. A]
driver   : nvidia-driver-470-server - distro non-free
driver   : nvidia-driver-550 - distro non-free
driver   : nvidia-driver-545-open - distro non-free
driver   : nvidia-driver-545 - distro non-free
driver   : nvidia-driver-570-open - distro non-free
driver   : nvidia-driver-550-open - distro non-free
driver   : nvidia-driver-535-server - distro non-free
driver   : nvidia-driver-570-server-open - distro non-free
driver   : nvidia-driver-580-server-open - distro non-free
driver   : nvidia-driver-570-server - distro non-free
driver   : nvidia-driver-535-open - distro non-free
driver   : nvidia-driver-535 - distro non-free
driver   : nvidia-driver-580 - distro non-free recommended
driver   : nvidia-driver-470 - distro non-free
driver   : nvidia-driver-450-server - distro non-free
driver   : nvidia-driver-580-server - distro non-free
driver   : nvidia-driver-580-open - distro non-free
driver   : nvidia-driver-570 - distro non-free
driver   : nvidia-driver-535-server-open - distro non-free
driver   : nvidia-driver-418-server - distro non-free
driver   : xserver-xorg-video-nouveau - distro free builtin

- Install the driver

- GPU information

bash

nvidia-smi

+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 580.65.06              Driver Version: 580.65.06      CUDA Version: 13.0     |
+-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce RTX 2080 Ti     Off |   00000000:01:00.0  On |                  N/A |
| 44%   51C    P2            127W /  260W |    1118MiB /  11264MiB |     44%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI              PID   Type   Process name                        GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A            1737      G   /usr/lib/xorg/Xorg                      117MiB |
|    0   N/A  N/A            1899      G   /usr/bin/gnome-shell                    104MiB |
|    0   N/A  N/A            3911      C   python                                  872MiB |
+-----------------------------------------------------------------------------------------+
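The `CUDA Version: 13.0` field in the `nvidia-smi` header is the highest CUDA runtime version the installed driver supports, not the toolkit that is installed. Every toolkit used in this article (11.8, 12.4, 12.8) therefore has to be at or below that number. A minimal sketch of that check, assuming GNU `sort -V` is available (it is on Ubuntu); `driver_cuda_version` and `version_le` are hypothetical helper names, not part of any NVIDIA tool:

```bash
#!/usr/bin/env bash

# Read nvidia-smi output on stdin and print the driver's supported CUDA version.
driver_cuda_version() {
  sed -n 's/.*CUDA Version: \([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# Succeed when version $1 <= version $2, using natural version ordering.
version_le() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}
```

For example, `version_le 12.8 "$(nvidia-smi | driver_cuda_version)" && echo "driver is new enough"` would confirm that the driver can run a CUDA 12.8 toolkit.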

CUDA

https://developer.nvidia.com/cuda-toolkit-archive

Configure environment variables

bash

# vim ~/.bashrc
export CUDA_HOME=/usr/local/cuda
export CUDA_INC_DIR=${CUDA_HOME}/include
export CUDA_LIB_DIR=${CUDA_HOME}/lib64
export CUDA_BIN_DIR=${CUDA_HOME}/bin
export CUDA_CUPTI_INC_DIR=${CUDA_HOME}/extras/CUPTI/include
export CUDA_CUPTI_LIB_DIR=${CUDA_HOME}/extras/CUPTI/lib64
export PATH=${CUDA_BIN_DIR}${PATH:+:${PATH}}
export LD_LIBRARY_PATH=${CUDA_LIB_DIR}${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
export LD_LIBRARY_PATH=${CUDA_CUPTI_LIB_DIR}${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Verify the installation

- nvcc -V

- /usr/local/cuda-11.8/extras/demo_suite/bandwidthTest

- /usr/local/cuda-11.8/extras/demo_suite/deviceQuery

- Compile a test program

cpp

// test_cuda.cpp
#include <stdio.h>
#include <stdlib.h>
#include <cuda_runtime.h>

int main() {
    int deviceCount = 0;
    cudaError_t err = cudaGetDeviceCount(&deviceCount);
    if (err != cudaSuccess) {
        fprintf(stderr, "cudaGetDeviceCount failed: %s\n", cudaGetErrorString(err));
        return EXIT_FAILURE;
    }
    printf("Found %d CUDA device(s):\n", deviceCount);

    for (int i = 0; i < deviceCount; i++) {
        cudaDeviceProp prop;
        cudaGetDeviceProperties(&prop, i);
        printf("Device %d: %s\n", i, prop.name);
        printf("  Compute Capability: %d.%d\n", prop.major, prop.minor);
        printf("  Global memory: %.2f GB\n", prop.totalGlobalMem / (1024.0 * 1024.0 * 1024.0));
        // SM count x max resident threads per SM; this is not the CUDA core count
        printf("  Max resident threads: %d\n", prop.multiProcessorCount * prop.maxThreadsPerMultiProcessor);
    }

    // Simple round trip: host -> device -> host
    const size_t bytes = 10 * sizeof(float);
    float *d_array = NULL;
    float *h_array = (float*)calloc(10, sizeof(float));  // zero-initialized host buffer
    if (h_array == NULL ||
        cudaMalloc((void**)&d_array, bytes) != cudaSuccess ||
        cudaMemcpy(d_array, h_array, bytes, cudaMemcpyHostToDevice) != cudaSuccess ||
        cudaMemcpy(h_array, d_array, bytes, cudaMemcpyDeviceToHost) != cudaSuccess) {
        fprintf(stderr, "CUDA memory test: failed\n");
        return EXIT_FAILURE;
    }
    cudaFree(d_array);
    free(h_array);
    printf("CUDA memory test: success!\n");
    return 0;
}

Compile:

bash

g++ -o test_cuda test_cuda.cpp -I${CUDA_INC_DIR} -I${CUDA_CUPTI_INC_DIR} -L${CUDA_LIB_DIR} -L${CUDA_CUPTI_LIB_DIR} -lcudart

Run:

bash

./test_cuda

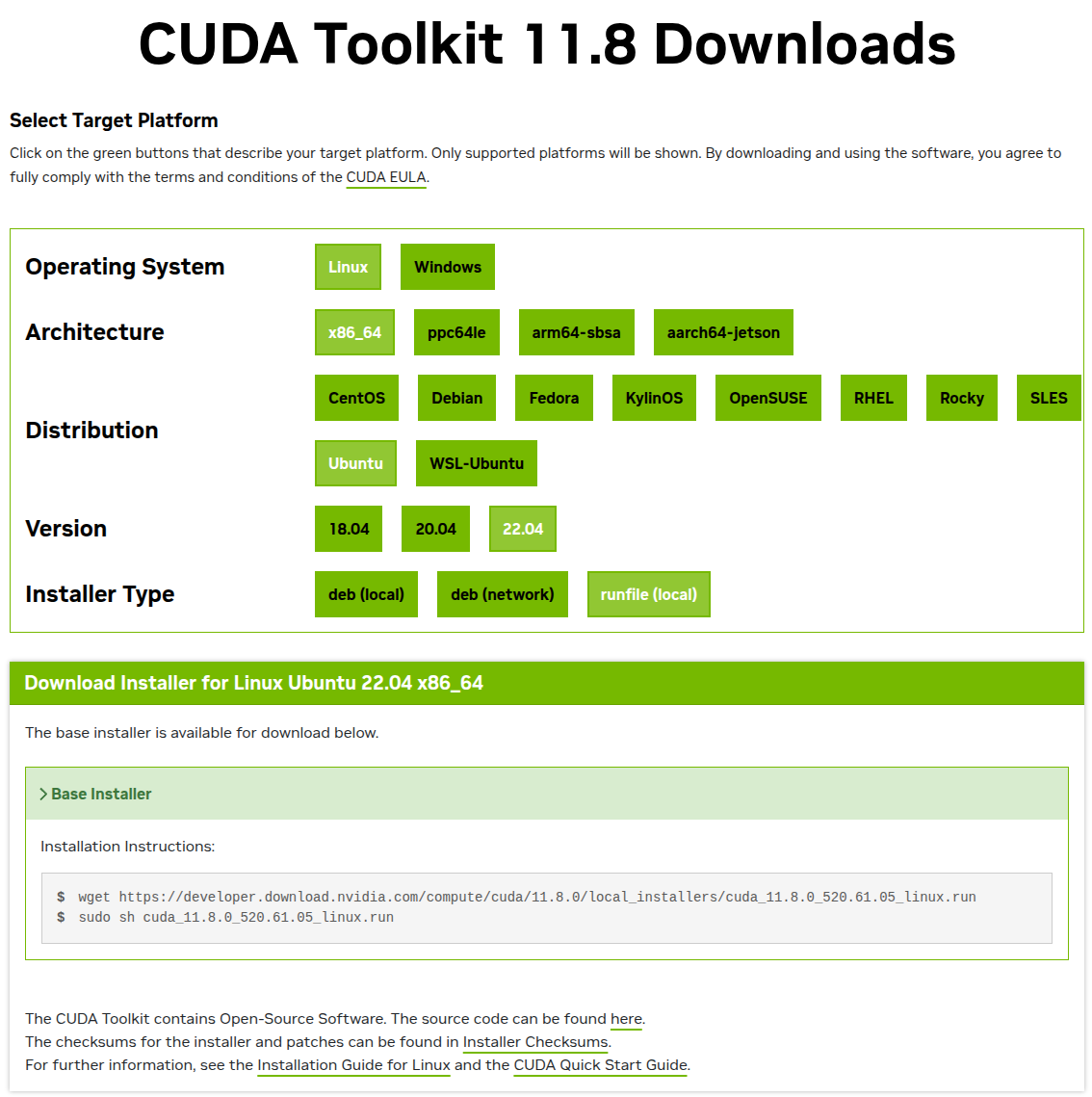
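Because `/usr/local/cuda` is only a symbolic link, it can help to confirm which toolkit it currently resolves to before trusting the output of `nvcc -V`. A small sketch, assuming the toolkits live in directories named `cuda-<major>.<minor>` as they do in this article; `active_cuda_version` is a hypothetical helper name:

```bash
#!/usr/bin/env bash

# Print the version of the toolkit that a CUDA symlink resolves to.
# Fails if the link is missing, dangling, or not pointing at a cuda-* directory.
active_cuda_version() {
  local link="${1:-/usr/local/cuda}" target
  target="$(readlink -f -- "$link")" || return 1
  [ -d "$target" ] || return 1
  case "$(basename -- "$target")" in
    cuda-*) basename -- "$target" | sed 's/^cuda-//' ;;
    *) return 1 ;;
  esac
}
```

Called without arguments it inspects `/usr/local/cuda`; the optional argument exists so the function can be tried on a scratch directory first.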

11.8.0

bash

# 下载链接

wget https://developer.download.nvidia.com/compute/cuda/11.8.0/local_installers/cuda_11.8.0_520.61.05_linux.run

# 安装(取消驱动安装)

sudo sh cuda_11.8.0_520.61.05_linux.run

# 配置软链接

sudo rm -rf /usr/local/cuda

sudo ln -s /usr/local/cuda-11.8 /usr/local/cuda

# 卸载(需重新配置软连接)

sudo rm -rf /usr/local/cuda

sudo ln -s /usr/local/cuda-11.8 /usr/local/cuda

sudo /usr/local/cuda/bin/cuda-uninstaller

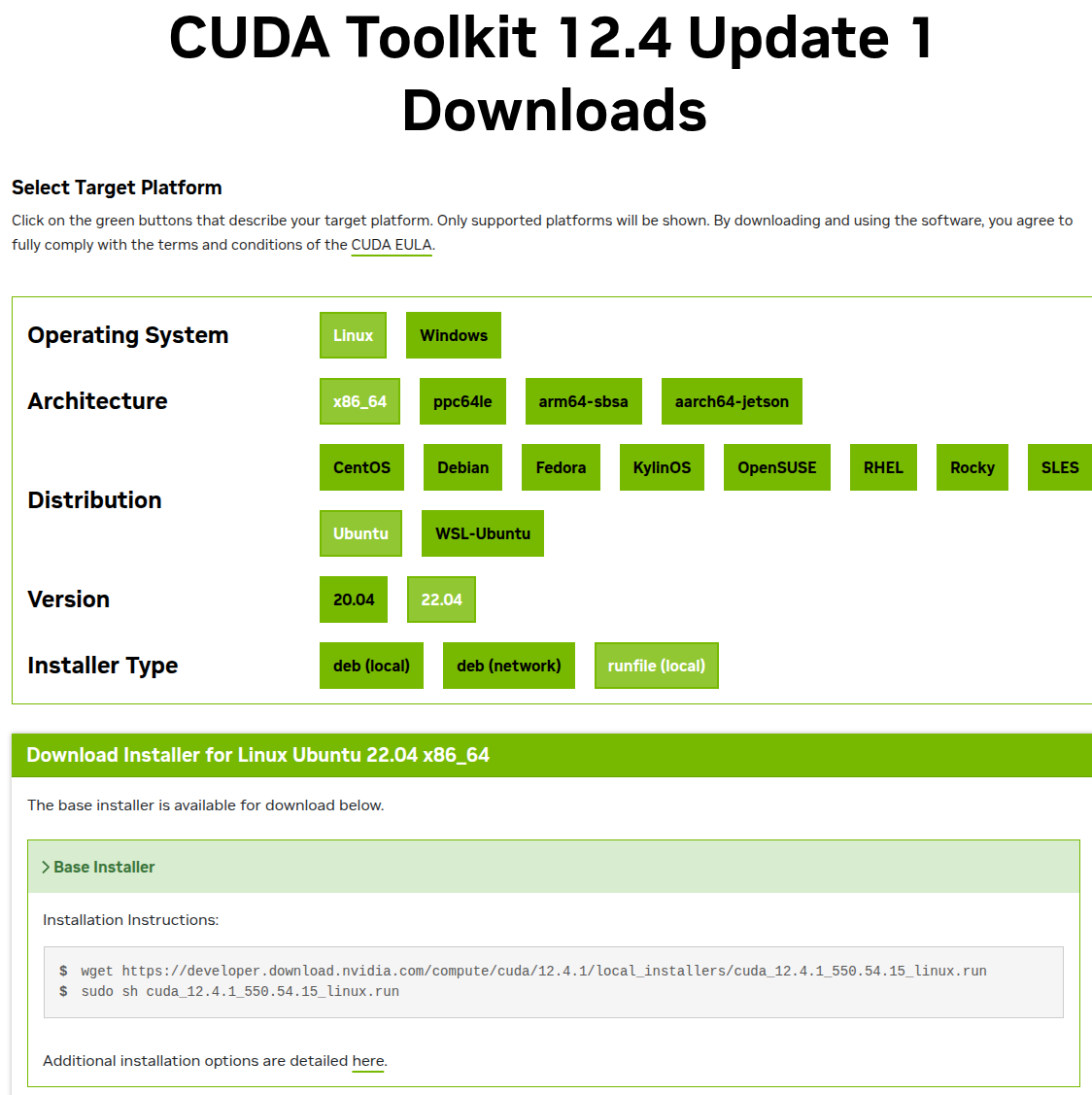

sudo rm -rf /usr/local/cuda12.4.1

bash

# 下载链接

wget https://developer.download.nvidia.com/compute/cuda/12.4.1/local_installers/cuda_12.4.1_550.54.15_linux.run

# 安装(取消驱动安装)

sudo sh cuda_12.4.1_550.54.15_linux.run

# 配置软链接

sudo rm -rf /usr/local/cuda

sudo ln -s /usr/local/cuda-12.4 /usr/local/cuda

# 卸载(需重新配置软连接)

sudo rm -rf /usr/local/cuda

sudo ln -s /usr/local/cuda-12.4 /usr/local/cuda

sudo /usr/local/cuda/bin/cuda-uninstaller

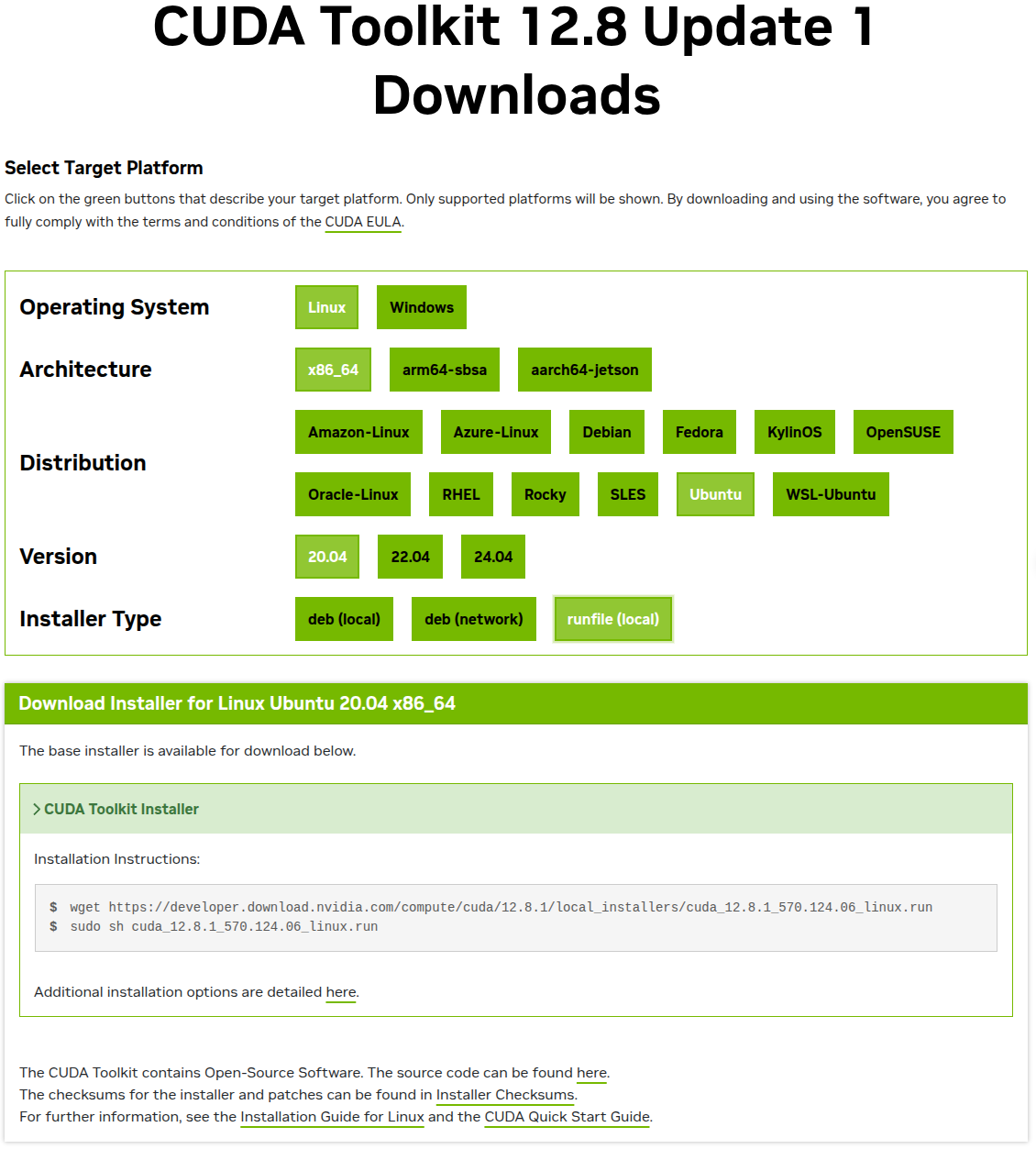

sudo rm -rf /usr/local/cuda12.8.1

bash

# Download
wget https://developer.download.nvidia.com/compute/cuda/12.8.1/local_installers/cuda_12.8.1_570.124.06_linux.run

# Install (deselect the driver in the installer)
sudo sh cuda_12.8.1_570.124.06_linux.run

# Configure the symbolic link
sudo rm -rf /usr/local/cuda
sudo ln -s /usr/local/cuda-12.8 /usr/local/cuda

# Uninstall (re-point the symbolic link at this version first)
sudo rm -rf /usr/local/cuda
sudo ln -s /usr/local/cuda-12.8 /usr/local/cuda
sudo /usr/local/cuda/bin/cuda-uninstaller
sudo rm -rf /usr/local/cuda

cuDNN

https://developer.download.nvidia.cn/compute/cudnn/redist/cudnn/linux-x86_64/
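The three cuDNN archives downloaded in this article share one naming pattern under this index: `cudnn-linux-x86_64-<version>_cuda<major>-archive.tar.xz`. A sketch that builds the URL from those two values; the pattern is inferred only from the archives listed in this article and may not hold for every release, and `cudnn_archive_url` is a hypothetical helper name:

```bash
#!/usr/bin/env bash

# Build the download URL for a cuDNN tarball.
#   $1: full cuDNN version, e.g. 8.9.7.29
#   $2: CUDA major version the build targets, e.g. 11
cudnn_archive_url() {
  local version="$1" cuda_major="$2"
  local base="https://developer.download.nvidia.cn/compute/cudnn/redist/cudnn/linux-x86_64"
  printf '%s/cudnn-linux-x86_64-%s_cuda%s-archive.tar.xz\n' "$base" "$version" "$cuda_major"
}
```

For instance, `wget "$(cudnn_archive_url 9.2.1.18 12)"` would fetch the same file as the explicit URL used for that version.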

Configure environment variables

bash

# vim ~/.bashrc
export CUDNN_HOME=/opt/cudnn
export CUDNN_INC_DIR=${CUDNN_HOME}/include
export CUDNN_LIB_DIR=${CUDNN_HOME}/lib
export LD_LIBRARY_PATH=${CUDNN_LIB_DIR}${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Verify the installation

- cat ${CUDNN_INC_DIR}/cudnn_version.h | grep CUDNN_MAJOR -A 2

- Compile and run a test program

cpp

// test_cudnn.cpp
#include <cudnn.h>
#include <iostream>
#include <cstdlib>

int main() {
    cudnnHandle_t handle;
    cudnnStatus_t status = cudnnCreate(&handle);
    if (status != CUDNN_STATUS_SUCCESS) {
        std::cerr << "cuDNN initialization failed: " << cudnnGetErrorString(status) << std::endl;
        return EXIT_FAILURE;
    }
    std::cout << "✓ cuDNN initialized" << std::endl;

    // Query the library version
    size_t version = cudnnGetVersion();
    std::cout << "✓ cuDNN version: " << version << std::endl;

    cudnnDestroy(handle);
    std::cout << "✓ cuDNN test finished: all operations succeeded" << std::endl;
    return EXIT_SUCCESS;
}

Compile:

bash

g++ -o test_cudnn test_cudnn.cpp -I${CUDA_INC_DIR} -I${CUDA_CUPTI_INC_DIR} -I${CUDNN_INC_DIR} -L${CUDNN_LIB_DIR} -lcudnn

Run:

bash

./test_cudnn
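The `grep CUDNN_MAJOR -A 2` check prints three separate `#define` lines from `cudnn_version.h`. When the result is needed in a script, the three values can be joined into one version string. A sketch under the assumption that the header defines `CUDNN_MAJOR`, `CUDNN_MINOR`, and `CUDNN_PATCHLEVEL` one per line; `cudnn_header_version` is a hypothetical helper name:

```bash
#!/usr/bin/env bash

# Print MAJOR.MINOR.PATCHLEVEL as recorded in a cudnn_version.h header.
# Defaults to the header under CUDNN_INC_DIR, falling back to /opt/cudnn/include.
cudnn_header_version() {
  local header="${1:-${CUDNN_INC_DIR:-/opt/cudnn/include}/cudnn_version.h}"
  [ -r "$header" ] || return 1
  awk '
    $1 == "#define" && $2 == "CUDNN_MAJOR"      { major = $3 }
    $1 == "#define" && $2 == "CUDNN_MINOR"      { minor = $3 }
    $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { patch = $3 }
    END {
      if (major == "" || minor == "" || patch == "") exit 1
      print major "." minor "." patch
    }
  ' "$header"
}
```

Running `cudnn_header_version` after switching the `/opt/cudnn` link is a quick way to see which cuDNN release the link now exposes.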

8.9.7.29_cuda11

bash

# Download
wget https://developer.download.nvidia.cn/compute/cudnn/redist/cudnn/linux-x86_64/cudnn-linux-x86_64-8.9.7.29_cuda11-archive.tar.xz

# Extract
sudo tar -xf cudnn-linux-x86_64-8.9.7.29_cuda11-archive.tar.xz -C /opt

# Configure the symbolic link
sudo rm -rf /opt/cudnn
sudo ln -s /opt/cudnn-linux-x86_64-8.9.7.29_cuda11-archive /opt/cudnn

# Set file permissions
sudo chmod a+r /opt/cudnn-linux-x86_64-8.9.7.29_cuda11-archive/include/cudnn*
sudo chmod a+r /opt/cudnn-linux-x86_64-8.9.7.29_cuda11-archive/lib/libcudnn*

# Uninstall
sudo rm -rf /opt/cudnn-linux-x86_64-8.9.7.29_cuda11-archive
sudo rm -rf /opt/cudnn

9.2.1.18_cuda12

bash

# Download
wget https://developer.download.nvidia.cn/compute/cudnn/redist/cudnn/linux-x86_64/cudnn-linux-x86_64-9.2.1.18_cuda12-archive.tar.xz

# Extract
sudo tar -xf cudnn-linux-x86_64-9.2.1.18_cuda12-archive.tar.xz -C /opt

# Configure the symbolic link
sudo rm -rf /opt/cudnn
sudo ln -s /opt/cudnn-linux-x86_64-9.2.1.18_cuda12-archive /opt/cudnn

# Set file permissions
sudo chmod a+r /opt/cudnn-linux-x86_64-9.2.1.18_cuda12-archive/include/cudnn*
sudo chmod a+r /opt/cudnn-linux-x86_64-9.2.1.18_cuda12-archive/lib/libcudnn*

# Uninstall
sudo rm -rf /opt/cudnn-linux-x86_64-9.2.1.18_cuda12-archive
sudo rm -rf /opt/cudnn

9.14.0.64_cuda12

bash

# Download
wget https://developer.download.nvidia.cn/compute/cudnn/redist/cudnn/linux-x86_64/cudnn-linux-x86_64-9.14.0.64_cuda12-archive.tar.xz

# Extract
sudo tar -xf cudnn-linux-x86_64-9.14.0.64_cuda12-archive.tar.xz -C /opt

# Configure the symbolic link
sudo rm -rf /opt/cudnn
sudo ln -s /opt/cudnn-linux-x86_64-9.14.0.64_cuda12-archive /opt/cudnn

# Set file permissions
sudo chmod a+r /opt/cudnn-linux-x86_64-9.14.0.64_cuda12-archive/include/cudnn*
sudo chmod a+r /opt/cudnn-linux-x86_64-9.14.0.64_cuda12-archive/lib/libcudnn*

# Uninstall
sudo rm -rf /opt/cudnn-linux-x86_64-9.14.0.64_cuda12-archive
sudo rm -rf /opt/cudnn

TensorRT

https://developer.nvidia.com/tensorrt/download

Configure environment variables

bash

# vim ~/.bashrc
export TENSORRT_HOME=/opt/tensorrt
export TENSORRT_INC_DIR=${TENSORRT_HOME}/include
export TENSORRT_LIB_DIR=${TENSORRT_HOME}/lib
export TENSORRT_BIN_DIR=${TENSORRT_HOME}/bin
export PATH=${TENSORRT_BIN_DIR}${PATH:+:${PATH}}
export LD_LIBRARY_PATH=${TENSORRT_LIB_DIR}${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Verify the installation

ls -l ${TENSORRT_LIB_DIR}/libnvinfer.so*

trtexec --help

trtexec --onnx=${TENSORRT_HOME}/data/mnist/mnist.onnx | grep 'TensorRT version'

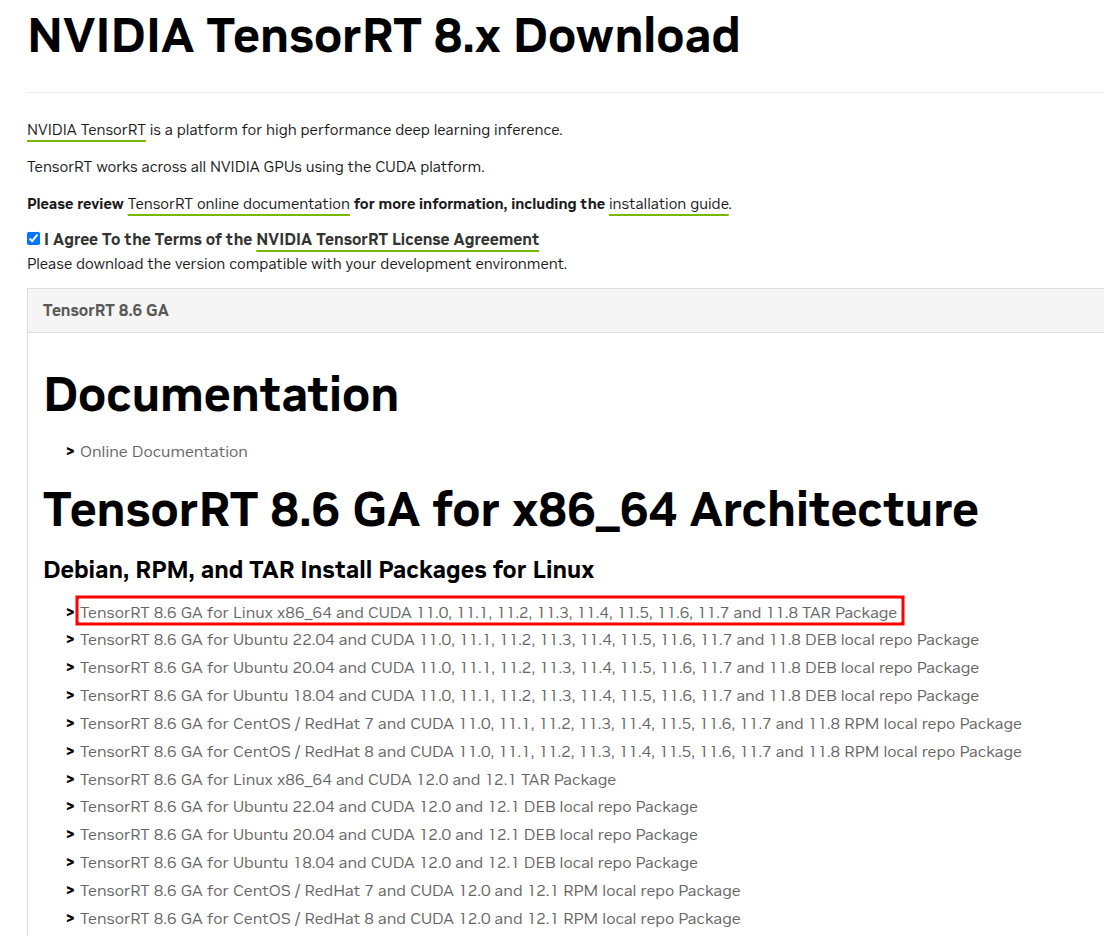
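The `ls -l ${TENSORRT_LIB_DIR}/libnvinfer.so*` check relies on reading the version off the file names by eye. A sketch that does the same in a script, assuming `libnvinfer.so` is a symbolic link chain that ends in a file named `libnvinfer.so.<version>` (this layout is an assumption about the tar package and worth confirming with the `ls -l` output); `tensorrt_lib_version` is a hypothetical helper name:

```bash
#!/usr/bin/env bash

# Print the version suffix of the real file behind libnvinfer.so.
# Defaults to TENSORRT_LIB_DIR, falling back to /opt/tensorrt/lib.
tensorrt_lib_version() {
  local lib_dir="${1:-${TENSORRT_LIB_DIR:-/opt/tensorrt/lib}}" real
  real="$(readlink -f -- "$lib_dir/libnvinfer.so")" || return 1
  [ -f "$real" ] || return 1
  case "$(basename -- "$real")" in
    libnvinfer.so.*) basename -- "$real" | sed 's/^libnvinfer\.so\.//' ;;
    *) return 1 ;;
  esac
}
```

The printed value comes from the file name only, so it may carry fewer components than the package version in the directory name.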

8.6.1.6(CUDA 11.x)

bash

# 下载

wget https://developer.nvidia.com/downloads/compute/machine-learning/tensorrt/secure/8.6.1/tars/TensorRT-8.6.1.6.Linux.x86_64-gnu.cuda-11.8.tar.gz

# 解压

sudo tar -xf TensorRT-8.6.1.6.Linux.x86_64-gnu.cuda-11.8.tar.gz -C /opt

# 改名(便于区分适配不同的CUDA版本)

sudo mv /opt/TensorRT-8.6.1.6 /opt/TensorRT-8.6.1.6-cuda-11.x

# 软链接

sudo rm -rf /opt/tensorrt

sudo ln -s /opt/TensorRT-8.6.1.6-cuda-11.x /opt/tensorrt

# 删除

sudo rm -rf /opt/TensorRT-8.6.1.6-cuda-11.x

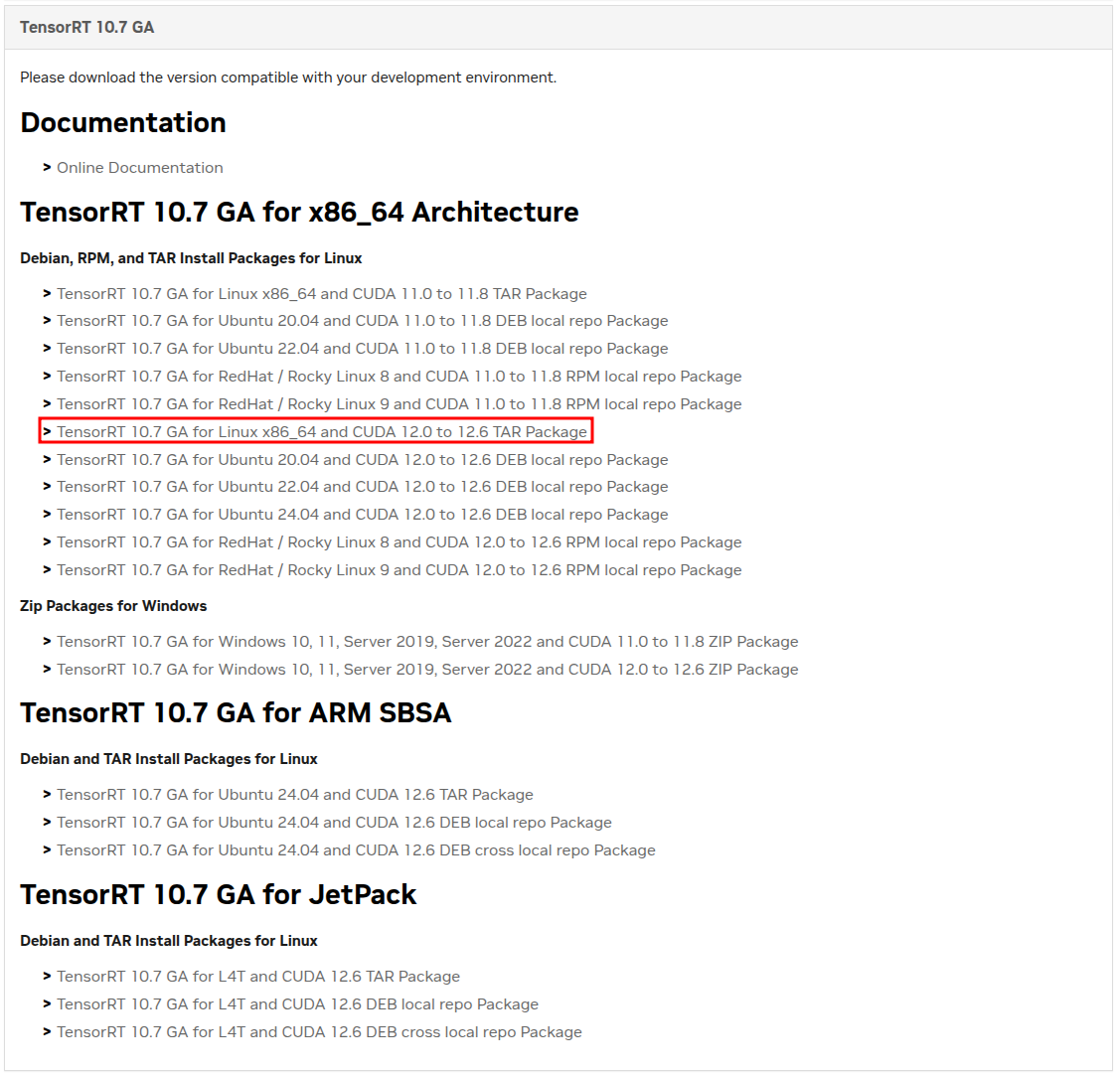

sudo rm -rf /opt/tensorrt10.7.0.23(CUDA 12.0-12.6)

bash

# 下载

wget https://developer.nvidia.com/downloads/compute/machine-learning/tensorrt/10.7.0/tars/TensorRT-10.7.0.23.Linux.x86_64-gnu.cuda-12.6.tar.gz

# 解压

sudo tar -xf TensorRT-10.7.0.23.Linux.x86_64-gnu.cuda-12.6.tar.gz -C /opt

# 改名(便于区分适配不同的CUDA版本)

sudo mv /opt/TensorRT-10.7.0.23 /opt/TensorRT-10.7.0.23-cuda-12.0-12.6

# 软链接

sudo rm -rf /opt/tensorrt

sudo ln -s /opt/TensorRT-10.7.0.23-cuda-12.0-12.6 /opt/tensorrt

# 删除

sudo rm -rf /opt/TensorRT-10.7.0.23-cuda-12.0-12.6

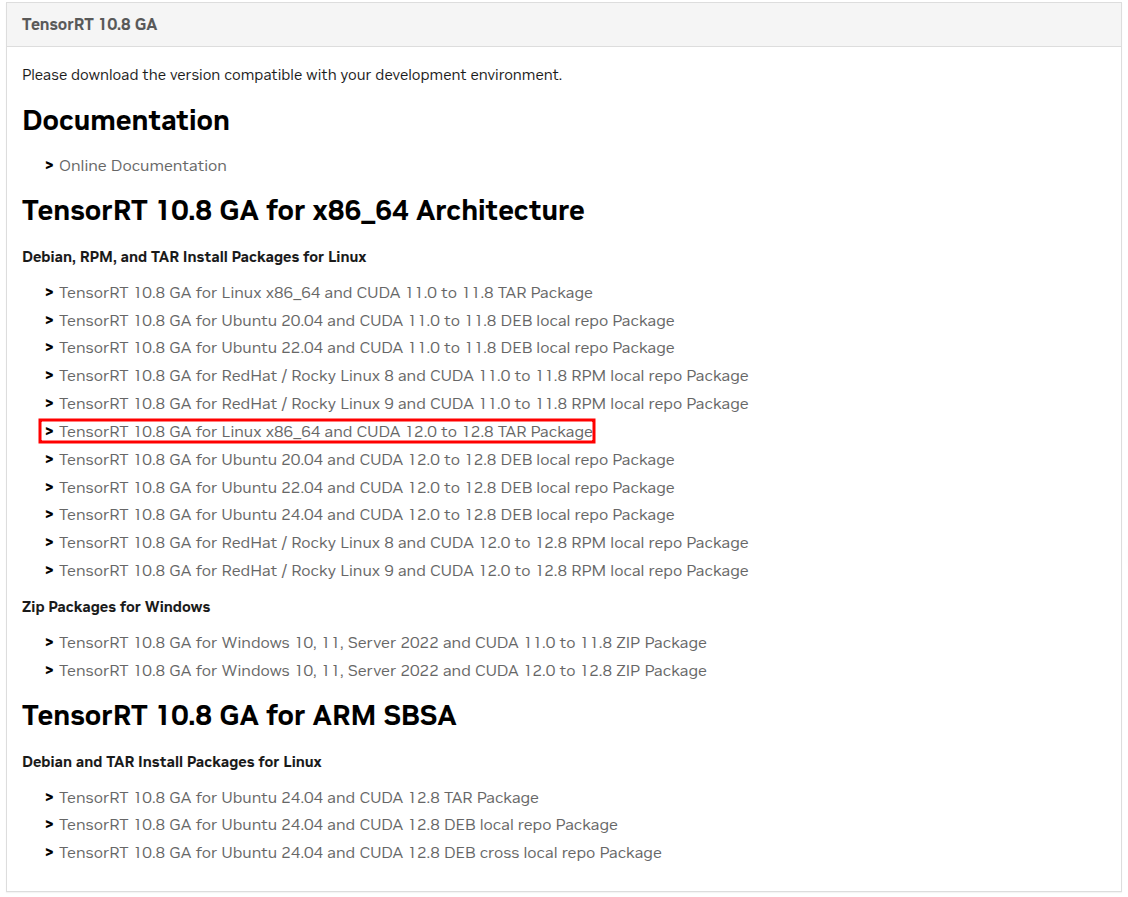

sudo rm -rf /opt/tensorrt10.8.0.43(CUDA 12.x)

bash

# Download
wget https://developer.nvidia.com/downloads/compute/machine-learning/tensorrt/10.8.0/tars/TensorRT-10.8.0.43.Linux.x86_64-gnu.cuda-12.8.tar.gz

# Extract
sudo tar -xf TensorRT-10.8.0.43.Linux.x86_64-gnu.cuda-12.8.tar.gz -C /opt

# Rename (makes builds for different CUDA versions easy to tell apart)
sudo mv /opt/TensorRT-10.8.0.43 /opt/TensorRT-10.8.0.43-cuda-12.x

# Configure the symbolic link
sudo rm -rf /opt/tensorrt
sudo ln -s /opt/TensorRT-10.8.0.43-cuda-12.x /opt/tensorrt

# Remove
sudo rm -rf /opt/TensorRT-10.8.0.43-cuda-12.x
sudo rm -rf /opt/tensorrt

Afterword

The version combination can be adjusted to fit different needs, for example:

- Train and deploy CNN networks on CUDA 11.8

bash

# CUDA
sudo rm -rf /usr/local/cuda
sudo ln -s /usr/local/cuda-11.8 /usr/local/cuda
# cuDNN
sudo rm -rf /opt/cudnn
sudo ln -s /opt/cudnn-linux-x86_64-8.9.7.29_cuda11-archive /opt/cudnn
# TensorRT
sudo rm -rf /opt/tensorrt
sudo ln -s /opt/TensorRT-8.6.1.6-cuda-11.x /opt/tensorrt

- Train and deploy the Qwen large language model on CUDA 12.8

bash

# CUDA
sudo rm -rf /usr/local/cuda
sudo ln -s /usr/local/cuda-12.8 /usr/local/cuda
# cuDNN
sudo rm -rf /opt/cudnn
sudo ln -s /opt/cudnn-linux-x86_64-9.14.0.64_cuda12-archive /opt/cudnn
# TensorRT
sudo rm -rf /opt/tensorrt
sudo ln -s /opt/TensorRT-10.8.0.43-cuda-12.x /opt/tensorrt
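The two switching recipes in this section differ only in three version strings, so they can be folded into one function. The sketch below is one possible way to do that, not the article's own method: it swaps `rm -rf` plus `ln -s` for `ln -sfn`, checks that all three target directories exist before touching any link, and refuses to replace anything that is not a symbolic link. `switch_link` and `use_stack` are hypothetical names, and the two optional root arguments exist only so the function can be tried in a scratch directory:

```bash
#!/usr/bin/env bash

# Re-point LINK at TARGET; never touch a real file or directory.
switch_link() {
  local target="$1" link="$2"
  if [ ! -d "$target" ]; then
    echo "switch_link: target not found: $target" >&2
    return 1
  fi
  if [ -e "$link" ] && [ ! -L "$link" ]; then
    echo "switch_link: $link exists and is not a symlink" >&2
    return 1
  fi
  ln -sfn -- "$target" "$link"
}

# use_stack CUDA_VER CUDNN_VER TENSORRT_DIR_SUFFIX [CUDA_ROOT] [OPT_ROOT]
# Directory names follow the layout used in this article.
use_stack() {
  local cuda_root="${4:-/usr/local}" opt_root="${5:-/opt}"
  local cuda_dir="$cuda_root/cuda-$1"
  local cudnn_dir="$opt_root/cudnn-linux-x86_64-$2-archive"
  local trt_dir="$opt_root/TensorRT-$3"
  local dir
  # Validate everything first so a typo cannot leave a half-switched stack.
  for dir in "$cuda_dir" "$cudnn_dir" "$trt_dir"; do
    [ -d "$dir" ] || { echo "use_stack: missing $dir" >&2; return 1; }
  done
  switch_link "$cuda_dir" "$cuda_root/cuda" &&
    switch_link "$cudnn_dir" "$opt_root/cudnn" &&
    switch_link "$trt_dir" "$opt_root/tensorrt"
}
```

With the functions loaded in a root shell (since `/usr/local` and `/opt` are root-owned), the first recipe would become `use_stack 11.8 8.9.7.29_cuda11 8.6.1.6-cuda-11.x` and the second `use_stack 12.8 9.14.0.64_cuda12 10.8.0.43-cuda-12.x`.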