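Preface

I recently saw that Alibaba Cloud released RDS DuckDB. I have a TB-scale business database that is periodically imported into ClickHouse (CK) for OLAP analysis of overall data trends. The current setup has the following pain points:

- The DTS pipeline from MySQL to ClickHouse has to be maintained.

- Data synchronization between heterogeneous databases comes with many restrictions.

- CK and MySQL are heterogeneous databases, so analysis has to be written in CK syntax, which is an extra burden.

- The biggest headache: some large queries fail outright with out-of-memory errors, so I have to add filter conditions, run the query in several batches, and then merge the results.

I also tried an HTAP solution, but my business database has a low read-to-write ratio with heavy writes, so the write overhead introduced by HTAP was essentially unacceptable. AP queries running directly on the TP engine can also affect the business workload (especially through I/O and memory consumption).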

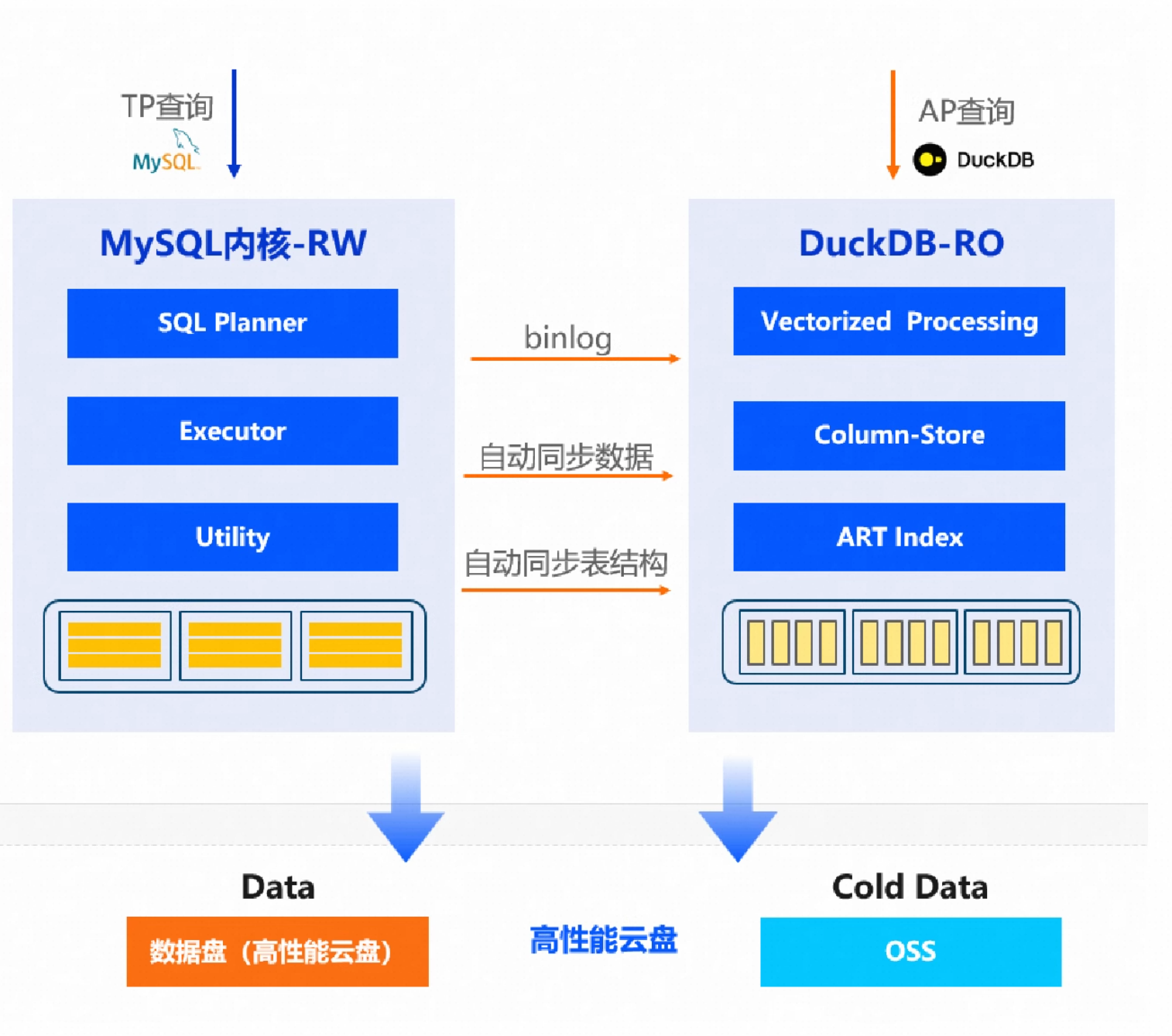

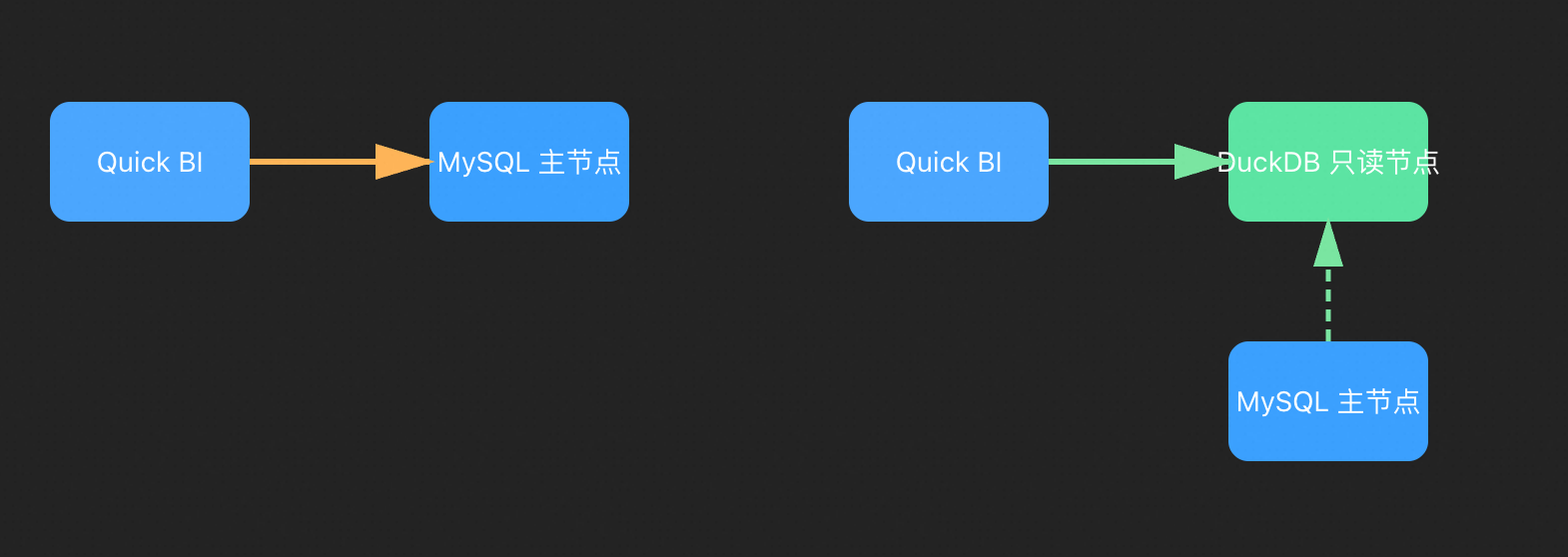

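On the MySQL DuckDB analytic instance page (https://www.aliyun.com/activity/database/rds-duckdb) I found the architecture below: the primary instance carries the normal OLTP transaction data, while the read-only instance stores the analytical data in DuckDB columnar storage. The synchronization looks transparent, and it looks like it could cut my analysis costs considerably, so I decided to test DuckDB.

The architecture diagram shows that the analytic node is essentially just a read-only node, which seems to address the problems I mentioned earlier:

- Freshness (replication to a read-only node should, in theory, take seconds)

- Compatibility (MySQL syntax)

- Operational complexity (RDS takes care of primary-replica synchronization)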
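The "seconds" figure above is a theoretical expectation; this article does not measure it. One common way to check it, sketched here under assumptions, is a heartbeat row: a scheduled job on the primary keeps refreshing a timestamp, and the read-only node reports how old the newest replicated value is. The table name `repl_heartbeat` is hypothetical, and the reading also includes the time since the last refresh plus any clock difference between the two nodes, so treat it as an upper bound rather than an exact lag.

```sql
-- Hypothetical heartbeat table; the name and layout are illustrative only.
-- Step 1, on the primary instance: create the table once.
CREATE TABLE IF NOT EXISTS repl_heartbeat (
  id TINYINT PRIMARY KEY,
  ts DATETIME(3) NOT NULL
) ENGINE=InnoDB;

-- Step 2, on the primary instance: refresh the timestamp
-- (for example once per second from a scheduled job).
REPLACE INTO repl_heartbeat (id, ts) VALUES (1, NOW(3));

-- Step 3, on the analytic read-only node: age of the newest replicated heartbeat.
SELECT TIMESTAMPDIFF(MICROSECOND, ts, NOW(3)) / 1000 AS approx_lag_ms
FROM repl_heartbeat
WHERE id = 1;
```

Whether the analytic node accepts this exact statement was not verified here; the idea only relies on ordinary table replication.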

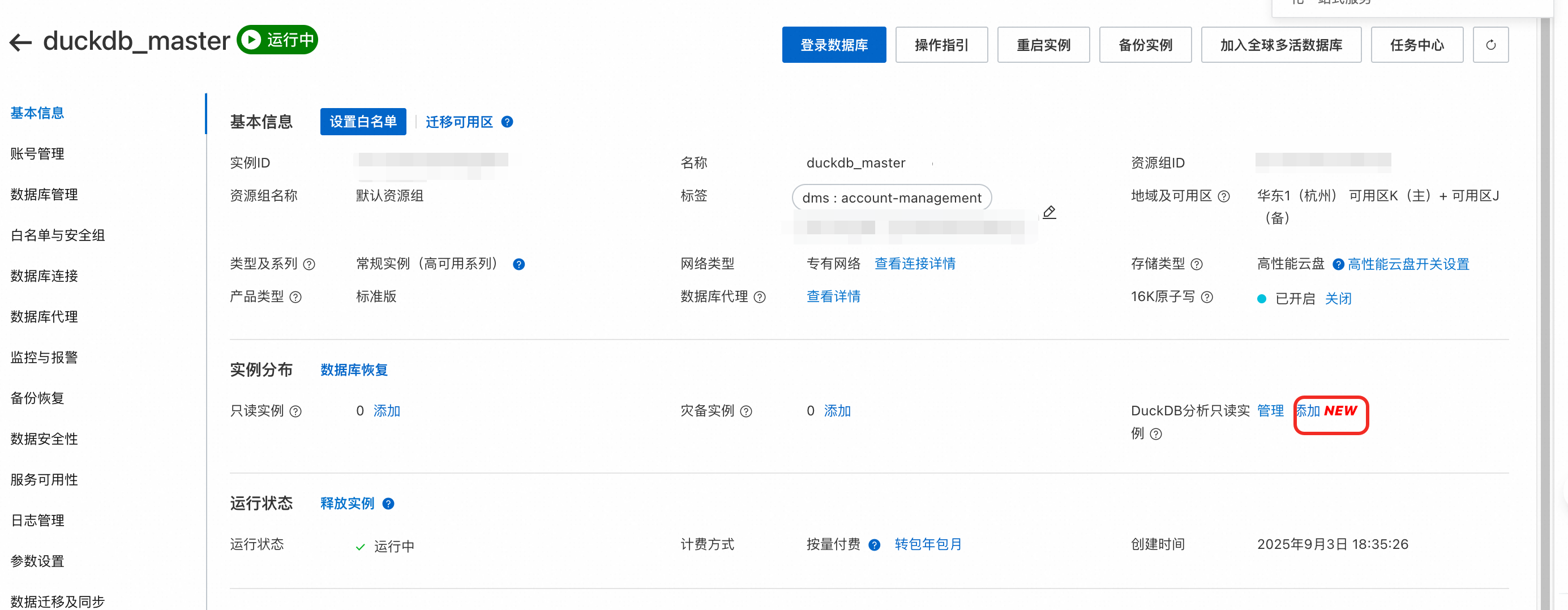

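Purchasing the instances

Following the steps in the official documentation (link), I purchased a primary instance and then added a read-only instance from the primary instance's details page. The specifications were:

- Primary instance: MySQL 8.0 High-availability Edition (4 cores, 16 GB)

- Analytic instance: DuckDB read-only edition (4 cores, 16 GB)

Data preparation

AP queries need a sufficient data volume and a reasonably complex business scenario, so I built a database with multiple tables that resembles an OLTP workload and filled it with randomly generated data.

Because the DuckDB replica is identical to the MySQL primary, a star schema with Dim and Fact tables is not a good fit here; the test uses the original MySQL TP-style tables directly.

Creating the test database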

SET @N_CUSTOMERS = 1000000;

SET @N_PRODUCTS = 50000;

SET @N_STORES = 2000;

SET @N_ORDERS_M = 10; -- order count in millions (10 million orders)

SET @ITEMS_PER_ORDER = 2;

SET @DATE_START = DATE('2021-01-01');

SET @DATE_END = DATE('2025-12-31');

/* 1) Sequence tables & deterministic "random" functions */

DROP TABLE IF EXISTS t10;

CREATE TABLE t10 (d INT PRIMARY KEY) ENGINE=InnoDB;

INSERT INTO t10 VALUES (0),(1),(2),(3),(4),(5),(6),(7),(8),(9);

DROP TABLE IF EXISTS seq_1k;

CREATE TABLE seq_1k (n INT PRIMARY KEY) ENGINE=InnoDB;

INSERT INTO seq_1k(n)

SELECT a.d + b.d*10 + c.d*100 FROM t10 a, t10 b, t10 c; -- 0..999, 1000 rows in total

DROP FUNCTION IF EXISTS fn_hash32;

DELIMITER $$

CREATE FUNCTION fn_hash32(s VARCHAR(255)) RETURNS BIGINT

DETERMINISTIC

BEGIN

RETURN CAST(CONV(SUBSTRING(MD5(s),1,8),16,10) AS UNSIGNED);

END$$

DELIMITER ;

DROP FUNCTION IF EXISTS fn_rand01;

DELIMITER $$

CREATE FUNCTION fn_rand01(key_str VARCHAR(255)) RETURNS DOUBLE

DETERMINISTIC

BEGIN

RETURN fn_hash32(key_str) / 4294967295.0;

END$$

DELIMITER ;

/* 2) Business table schemas (pure OLTP) */

DROP TABLE IF EXISTS customers;

CREATE TABLE customers (

customer_id BIGINT PRIMARY KEY,

name VARCHAR(64) NOT NULL,

gender ENUM('M','F') NOT NULL,

signup_ts DATETIME NOT NULL,

province VARCHAR(32) NOT NULL,

city VARCHAR(64) NOT NULL,

birth_date DATE NOT NULL,

vip_level TINYINT NOT NULL

) ENGINE=InnoDB;

DROP TABLE IF EXISTS stores;

CREATE TABLE stores (

store_id INT PRIMARY KEY,

name VARCHAR(64) NOT NULL,

region_code VARCHAR(16) NOT NULL,

city VARCHAR(64) NOT NULL,

opened_ts DATETIME NOT NULL

) ENGINE=InnoDB;

DROP TABLE IF EXISTS products;

CREATE TABLE products (

product_id INT PRIMARY KEY,

sku VARCHAR(32) NOT NULL,

name VARCHAR(128) NOT NULL,

category VARCHAR(64) NOT NULL,

brand VARCHAR(64) NOT NULL,

base_price DECIMAL(10,2) NOT NULL,

status ENUM('ON','OFF') NOT NULL

) ENGINE=InnoDB;

DROP TABLE IF EXISTS inventory_ledger;

CREATE TABLE inventory_ledger (

store_id INT NOT NULL,

product_id INT NOT NULL,

tx_ts DATETIME NOT NULL,

tx_type ENUM('IN','OUT','ADJ') NOT NULL,

quantity INT NOT NULL,

PRIMARY KEY (store_id, product_id, tx_ts)

) ENGINE=InnoDB;

DROP TABLE IF EXISTS orders;

CREATE TABLE orders (

order_id BIGINT PRIMARY KEY,

customer_id BIGINT NOT NULL,

store_id INT NOT NULL,

order_ts DATETIME NOT NULL,

channel ENUM('APP','WEB','STORE') NOT NULL,

payment_method ENUM('ALI','WECHAT','CARD','COD') NOT NULL,

status ENUM('CREATED','PAID','SHIPPED','DELIVERED','CANCELLED','REFUNDED') NOT NULL,

coupon_amount DECIMAL(10,2) NOT NULL,

shipping_fee DECIMAL(10,2) NOT NULL,

total_amount DECIMAL(12,2) NOT NULL

) ENGINE=InnoDB;

DROP TABLE IF EXISTS order_items;

CREATE TABLE order_items (

order_id BIGINT NOT NULL,

item_no TINYINT NOT NULL,

product_id INT NOT NULL,

quantity INT NOT NULL,

unit_price DECIMAL(10,2) NOT NULL,

discount_rate DECIMAL(5,4) NOT NULL,

tax_rate DECIMAL(5,4) NOT NULL,

line_amount DECIMAL(12,2) NOT NULL,

PRIMARY KEY (order_id, item_no)

) ENGINE=InnoDB;

DROP TABLE IF EXISTS payments;

CREATE TABLE payments (

payment_id BIGINT PRIMARY KEY,

order_id BIGINT NOT NULL,

pay_ts DATETIME NOT NULL,

method ENUM('ALI','WECHAT','CARD','COD') NOT NULL,

amount DECIMAL(12,2) NOT NULL,

status ENUM('INIT','SUCCESS','FAILED','REFUNDED') NOT NULL

) ENGINE=InnoDB;

DROP TABLE IF EXISTS shipments;

CREATE TABLE shipments (

shipment_id BIGINT PRIMARY KEY,

order_id BIGINT NOT NULL,

ship_ts DATETIME NULL,

deliver_ts DATETIME NULL,

carrier VARCHAR(32) NOT NULL,

fee DECIMAL(10,2) NOT NULL,

status ENUM('CREATED','SHIPPED','IN_TRANSIT','DELIVERED','EXCEPTION','CANCELLED') NOT NULL,

province VARCHAR(32) NOT NULL,

city VARCHAR(64) NOT NULL

) ENGINE=InnoDB;

DROP TABLE IF EXISTS order_status_history;

CREATE TABLE order_status_history (

order_id BIGINT NOT NULL,

seq_no TINYINT NOT NULL,

status VARCHAR(16) NOT NULL,

ts DATETIME NOT NULL,

PRIMARY KEY (order_id, seq_no)

) ENGINE=InnoDB;

/* 3) Data generation (all lookups inlined with ELT; no Dim tables are created) */

/* 3.1 customers: 1 million */

INSERT INTO customers (customer_id, name, gender, signup_ts, province, city, birth_date, vip_level)

SELECT

(a.n*1000 + b.n + 1) AS customer_id, -- seq_1k.n runs 0..999, so this yields 1..1,000,000

CONCAT('C', LPAD((a.n*1000 + b.n + 1), 8, '0')) AS name,

IF(fn_rand01(CONCAT('g',a.n,'-',b.n)) < 0.5, 'M','F') AS gender,

TIMESTAMP(

DATE_ADD(@DATE_START, INTERVAL CAST(fn_rand01(CONCAT('sd',a.n,'-',b.n))*DATEDIFF(@DATE_END,@DATE_START) AS SIGNED) DAY),

SEC_TO_TIME(CAST(fn_rand01(CONCAT('st',a.n,'-',b.n))*86400 AS SIGNED))

) AS signup_ts,

ELT(1 + (fn_hash32(CONCAT('pv',a.n,'-',b.n)) % 10),

'北京','上海','广东','浙江','江苏','四川','湖北','山东','河南','陕西') AS province,

ELT(1 + (fn_hash32(CONCAT('ct',a.n,'-',b.n)) % 20),

'北京','上海','广州','深圳','杭州','苏州','成都','重庆','武汉','西安',

'南京','郑州','长沙','青岛','厦门','宁波','合肥','佛山','无锡','东莞') AS city,

DATE_ADD('1970-01-01', INTERVAL (18 + CAST(fn_hash32(CONCAT('age',a.n,'-',b.n))%45 AS SIGNED))*365 DAY) AS birth_date,

CAST(fn_hash32(CONCAT('vip',a.n,'-',b.n))%6 AS SIGNED) AS vip_level

FROM seq_1k a

JOIN seq_1k b

WHERE (a.n*1000 + b.n + 1) <= @N_CUSTOMERS

ORDER BY a.n, b.n;

/* 3.2 stores 2000 */

INSERT INTO stores (store_id, name, region_code, city, opened_ts)

SELECT

t.s_id AS store_id,

CONCAT('S', LPAD(t.s_id, 5, '0')) AS name,

ELT(1 + (fn_hash32(CONCAT('rg', t.s_id)) % 5), 'NORTH','EAST','SOUTH','WEST','CENT') AS region_code,

ELT(1 + (fn_hash32(CONCAT('sc', t.s_id)) % 20),

'北京','上海','广州','深圳','杭州','苏州','成都','重庆','武汉','西安',

'南京','郑州','长沙','青岛','厦门','宁波','合肥','佛山','无锡','东莞') AS city,

TIMESTAMP(

DATE_ADD(@DATE_START, INTERVAL CAST(fn_rand01(CONCAT('op', t.s_id)) * 365 * 3 AS SIGNED) DAY),

SEC_TO_TIME(CAST(fn_rand01(CONCAT('ot', t.s_id)) * 86400 AS SIGNED))

) AS opened_ts

FROM (

SELECT (a.n * 1000 + b.n + 1) AS s_id -- seq_1k.n runs 0..999, so IDs start at 1

FROM seq_1k a

JOIN seq_1k b

) AS t

WHERE t.s_id <= @N_STORES

ORDER BY t.s_id;

/* 3.3 products: 50,000 */

INSERT INTO products (product_id, sku, name, category, brand, base_price, status)

SELECT

t.p_id AS product_id,

CONCAT('SKU', LPAD(t.p_id, 6, '0')) AS sku,

CONCAT('商品', LPAD(t.p_id, 6, '0')) AS name,

ELT(t.category_idx,

'手机','电脑','家电','服饰','美妆','图书','运动','家居','食品','母婴') AS category,

ELT(t.brand_idx,

'Aster','Breeze','Canyon','Dyna','Eclipse','Frost','Glow','Helix','Iris','Juno') AS brand,

ROUND(

(

CASE

WHEN t.category_idx IN (1,2,3) THEN 500 + (fn_hash32(CONCAT('bp', t.p_id)) % 5000)

WHEN t.category_idx IN (4,5,9,10) THEN 20 + (fn_hash32(CONCAT('bp', t.p_id)) % 300)

ELSE 50 + (fn_hash32(CONCAT('bp', t.p_id)) % 1000)

END

) * (0.85 + 0.30 * fn_rand01(CONCAT('v', t.p_id)))

, 2) AS base_price,

IF(fn_rand01(CONCAT('on', t.p_id)) > 0.05, 'ON', 'OFF') AS status

FROM (

SELECT

(a.n * 1000 + b.n + 1) AS p_id, -- seq_1k.n runs 0..999, so IDs start at 1

(fn_hash32(CONCAT('pc', (a.n * 1000 + b.n + 1))) % 10) + 1 AS category_idx,

(fn_hash32(CONCAT('br', (a.n * 1000 + b.n + 1))) % 10) + 1 AS brand_idx

FROM seq_1k a

JOIN seq_1k b

) AS t

WHERE t.p_id <= @N_PRODUCTS

ORDER BY t.p_id;

/* 3.4 Sparse inventory ledger */

INSERT INTO inventory_ledger (store_id, product_id, tx_ts, tx_type, quantity)

SELECT

1 + (fn_hash32(CONCAT('is',a.n,'-',b.n)) % @N_STORES),

1 + (fn_hash32(CONCAT('ip',a.n,'-',b.n)) % @N_PRODUCTS),

TIMESTAMP(@DATE_START, '00:00:00'),

'IN',

50 + (fn_hash32(CONCAT('iq',a.n,'-',b.n)) % 200)

FROM seq_1k a JOIN seq_1k b

WHERE (fn_hash32(CONCAT('pick',a.n,'-',b.n)) % 1000) < 3;

/* 4) Order headers: 10 million (in batches of 1 million) */

DROP PROCEDURE IF EXISTS sp_gen_orders;

DELIMITER $$

CREATE PROCEDURE sp_gen_orders()

BEGIN

DECLARE i INT DEFAULT 0;

DECLARE batch BIGINT DEFAULT 1000000;

WHILE i < @N_ORDERS_M DO

START TRANSACTION;

INSERT INTO orders (order_id, customer_id, store_id, order_ts, channel, payment_method, status, coupon_amount, shipping_fee, total_amount)

SELECT

i*batch + (k1.n + k2.n*1000) + 1 AS order_id,

1 + (fn_hash32(CONCAT('oc', i,'-',k1.n,'-',k2.n)) % @N_CUSTOMERS) AS customer_id,

1 + (fn_hash32(CONCAT('os', i,'-',k1.n,'-',k2.n)) % @N_STORES) AS store_id,

TIMESTAMP(

DATE_ADD(@DATE_START, INTERVAL CAST(fn_rand01(CONCAT('od',i,'-',k1.n,'-',k2.n))*DATEDIFF(@DATE_END,@DATE_START) AS SIGNED) DAY),

SEC_TO_TIME(CAST(fn_rand01(CONCAT('ot',i,'-',k1.n,'-',k2.n))*86400 AS SIGNED))

) AS order_ts,

ELT(1 + (fn_hash32(CONCAT('ch', i,'-',k1.n,'-',k2.n)) % 3), 'APP','WEB','STORE') AS channel,

ELT(1 + (fn_hash32(CONCAT('pm', i,'-',k1.n,'-',k2.n)) % 4), 'ALI','WECHAT','CARD','COD') AS payment_method,

CASE

WHEN fn_rand01(CONCAT('st',i,'-',k1.n,'-',k2.n)) < 0.05 THEN 'REFUNDED'

WHEN fn_rand01(CONCAT('st2',i,'-',k1.n,'-',k2.n)) < 0.20 THEN 'CANCELLED'

WHEN fn_rand01(CONCAT('st3',i,'-',k1.n,'-',k2.n)) < 0.50 THEN 'DELIVERED'

WHEN fn_rand01(CONCAT('st4',i,'-',k1.n,'-',k2.n)) < 0.75 THEN 'SHIPPED'

ELSE 'PAID'

END AS status,

ROUND(

CASE ELT(1 + (fn_hash32(CONCAT('ch', i,'-',k1.n,'-',k2.n)) % 3), 'APP','WEB','STORE')

WHEN 'APP' THEN 3 + 20*fn_rand01(CONCAT('cp',i,'-',k1.n,'-',k2.n))

WHEN 'WEB' THEN 2 + 10*fn_rand01(CONCAT('cp',i,'-',k1.n,'-',k2.n))

ELSE 0

END, 2

) AS coupon_amount,

ROUND(

CASE ELT(1 + (fn_hash32(CONCAT('ch', i,'-',k1.n,'-',k2.n)) % 3), 'APP','WEB','STORE')

WHEN 'STORE' THEN 0

ELSE 5 + 10*fn_rand01(CONCAT('sf',i,'-',k1.n,'-',k2.n))

END, 2

) AS shipping_fee,

0.00 AS total_amount

FROM seq_1k k1 JOIN seq_1k k2;

COMMIT;

SET i = i + 1;

END WHILE;

END$$

DELIMITER ;

CALL sp_gen_orders();

/* 5) Order items: 20 million (JOIN products; COALESCE guards against a NULL unit price) */

DROP PROCEDURE IF EXISTS sp_gen_items;

DELIMITER $$

CREATE PROCEDURE sp_gen_items()

BEGIN

DECLARE i TINYINT;

-- item_no = 1

SET i = 1;

START TRANSACTION;

INSERT INTO order_items(order_id, item_no, product_id, quantity, unit_price, discount_rate, tax_rate, line_amount)

SELECT

o.order_id,

i AS item_no,

pid.product_id,

1 + (fn_hash32(CONCAT('qt', o.order_id, '-', i)) % 5) AS quantity,

ROUND(COALESCE(p.base_price, 99.99)

* (0.95 + 0.10*fn_rand01(CONCAT('sf', o.store_id)))

* (0.90 + 0.20*fn_rand01(CONCAT('se', YEAR(o.order_ts), '-', MONTH(o.order_ts))))

* (0.98 + 0.04*fn_rand01(CONCAT('ep', o.order_id, '-', i)))

,2) AS unit_price,

ROUND(

CASE o.channel

WHEN 'APP' THEN 0.05 + 0.15*fn_rand01(CONCAT('dc', o.order_id, '-', i))

WHEN 'WEB' THEN 0.03 + 0.10*fn_rand01(CONCAT('dc', o.order_id, '-', i))

WHEN 'STORE' THEN 0.00 + 0.05*fn_rand01(CONCAT('dc', o.order_id, '-', i))

END

,4) AS discount_rate,

CASE WHEN p.category IN ('手机','电脑','家电') THEN 0.09 ELSE 0.06 END AS tax_rate,

0.00 AS line_amount

FROM orders o

JOIN (

SELECT o2.order_id,

1 + (fn_hash32(CONCAT('pi', o2.order_id, '-1')) % @N_PRODUCTS) AS product_id

FROM orders o2

) pid ON pid.order_id = o.order_id

JOIN products p ON p.product_id = pid.product_id;

COMMIT;

-- item_no = 2

SET i = 2;

START TRANSACTION;

INSERT INTO order_items(order_id, item_no, product_id, quantity, unit_price, discount_rate, tax_rate, line_amount)

SELECT

o.order_id,

i AS item_no,

pid.product_id,

1 + (fn_hash32(CONCAT('qt', o.order_id, '-', i)) % 5) AS quantity,

ROUND(COALESCE(p.base_price, 99.99)

* (0.95 + 0.10*fn_rand01(CONCAT('sf', o.store_id)))

* (0.90 + 0.20*fn_rand01(CONCAT('se', YEAR(o.order_ts), '-', MONTH(o.order_ts))))

* (0.98 + 0.04*fn_rand01(CONCAT('ep', o.order_id, '-', i)))

,2) AS unit_price,

ROUND(

CASE o.channel

WHEN 'APP' THEN 0.05 + 0.15*fn_rand01(CONCAT('dc', o.order_id, '-', i))

WHEN 'WEB' THEN 0.03 + 0.10*fn_rand01(CONCAT('dc', o.order_id, '-', i))

WHEN 'STORE' THEN 0.00 + 0.05*fn_rand01(CONCAT('dc', o.order_id, '-', i))

END

,4) AS discount_rate,

CASE WHEN p.category IN ('手机','电脑','家电') THEN 0.09 ELSE 0.06 END AS tax_rate,

0.00 AS line_amount

FROM orders o

JOIN (

SELECT o3.order_id,

1 + (fn_hash32(CONCAT('pi', o3.order_id, '-2')) % @N_PRODUCTS) AS product_id

FROM orders o3

) pid ON pid.order_id = o.order_id

JOIN products p ON p.product_id = pid.product_id;

COMMIT;

END$$

DELIMITER ;

CALL sp_gen_items();

/* 6) Backfill totals + payments / shipments / status history + indexes */

/* Backfill line amounts & order totals */

UPDATE order_items

SET line_amount = ROUND(quantity * unit_price * (1 - discount_rate), 2);

UPDATE orders o

JOIN (

SELECT order_id, ROUND(SUM(line_amount),2) AS amt

FROM order_items GROUP BY order_id

) x ON x.order_id = o.order_id

SET o.total_amount = x.amt + o.shipping_fee - o.coupon_amount;

/* Payments */

INSERT INTO payments (payment_id, order_id, pay_ts, method, amount, status)

SELECT

o.order_id AS payment_id,

o.order_id,

DATE_ADD(o.order_ts, INTERVAL CAST(60*fn_rand01(CONCAT('pdt',o.order_id)) AS SIGNED) MINUTE) AS pay_ts,

o.payment_method,

GREATEST(0.00, o.total_amount) AS amount,

CASE

WHEN o.status IN ('CANCELLED') THEN 'FAILED'

WHEN o.status IN ('REFUNDED') THEN 'REFUNDED'

ELSE 'SUCCESS'

END AS status

FROM orders o;

/* Shipments (province/city inlined with ELT) */

INSERT INTO shipments (shipment_id, order_id, ship_ts, deliver_ts, carrier, fee, status, province, city)

SELECT

o.order_id AS shipment_id,

o.order_id,

CASE WHEN o.channel IN ('APP','WEB') AND o.status NOT IN ('CANCELLED')

THEN DATE_ADD(o.order_ts, INTERVAL CAST(1+fn_hash32(CONCAT('s1',o.order_id))%72 AS SIGNED) HOUR) END AS ship_ts,

CASE WHEN o.channel IN ('APP','WEB') AND o.status = 'DELIVERED'

THEN DATE_ADD(o.order_ts, INTERVAL CAST(24+fn_hash32(CONCAT('s2',o.order_id))%240 AS SIGNED) HOUR) END AS deliver_ts,

ELT(1+(fn_hash32(CONCAT('cr',o.order_id))%4),'SF','JD','STO','YTO') AS carrier,

o.shipping_fee,

CASE

WHEN o.channel='STORE' THEN 'CANCELLED'

WHEN o.status='DELIVERED' THEN 'DELIVERED'

WHEN o.status='CANCELLED' THEN 'CANCELLED'

WHEN o.status IN ('PAID','SHIPPED') THEN 'IN_TRANSIT'

ELSE 'CREATED'

END AS status,

ELT(1 + (fn_hash32(CONCAT('sp',o.order_id)) % 10),

'北京','上海','广东','浙江','江苏','四川','湖北','山东','河南','陕西') AS province,

ELT(1 + (fn_hash32(CONCAT('scy',o.order_id)) % 20),

'北京','上海','广州','深圳','杭州','苏州','成都','重庆','武汉','西安',

'南京','郑州','长沙','青岛','厦门','宁波','合肥','佛山','无锡','东莞') AS city

FROM orders o;

/* Order status history */

INSERT INTO order_status_history(order_id, seq_no, status, ts)

SELECT o.order_id, 1, 'CREATED', o.order_ts FROM orders o;

INSERT INTO order_status_history(order_id, seq_no, status, ts)

SELECT o.order_id, 2, 'PAID',

CASE WHEN o.status IN ('PAID','SHIPPED','DELIVERED','REFUNDED')

THEN DATE_ADD(o.order_ts, INTERVAL 5+CAST(60*fn_rand01(CONCAT('e2',o.order_id)) AS SIGNED) MINUTE)

ELSE o.order_ts END

FROM orders o

WHERE o.status IN ('PAID','SHIPPED','DELIVERED','REFUNDED');

INSERT INTO order_status_history(order_id, seq_no, status, ts)

SELECT o.order_id, 3, 'SHIPPED',

CASE WHEN o.status IN ('SHIPPED','DELIVERED')

THEN DATE_ADD(o.order_ts, INTERVAL 1+CAST(60*fn_rand01(CONCAT('e3',o.order_id)) AS SIGNED) HOUR)

ELSE o.order_ts END

FROM orders o

WHERE o.status IN ('SHIPPED','DELIVERED');

INSERT INTO order_status_history(order_id, seq_no, status, ts)

SELECT o.order_id, 4, o.status,

CASE

WHEN o.status='DELIVERED' THEN DATE_ADD(o.order_ts, INTERVAL 24+CAST(60*fn_rand01(CONCAT('e4',o.order_id)) AS SIGNED) HOUR)

WHEN o.status IN ('CANCELLED','REFUNDED') THEN DATE_ADD(o.order_ts, INTERVAL CAST(60*fn_rand01(CONCAT('e5',o.order_id)) AS SIGNED) MINUTE)

ELSE o.order_ts

END

FROM orders o

WHERE o.status IN ('DELIVERED','CANCELLED','REFUNDED');

Creating indexes

/* Indexes */

ALTER TABLE customers ADD INDEX idx_cus_signup (signup_ts), ADD INDEX idx_cus_city (province, city);

ALTER TABLE stores ADD INDEX idx_store_city (city), ADD INDEX idx_store_opened (opened_ts);

ALTER TABLE products ADD INDEX idx_prod_cat (category), ADD INDEX idx_prod_brand (brand), ADD INDEX idx_prod_status (status);

ALTER TABLE orders

ADD INDEX idx_orders_cus (customer_id, order_ts),

ADD INDEX idx_orders_store (store_id, order_ts),

ADD INDEX idx_orders_ts (order_ts),

ADD INDEX idx_orders_status (status),

ADD INDEX idx_orders_channel (channel);

ALTER TABLE order_items

ADD INDEX idx_items_prod (product_id),

ADD INDEX idx_items_order (order_id);

ALTER TABLE payments ADD INDEX idx_pay_order (order_id), ADD INDEX idx_pay_ts (pay_ts);

ALTER TABLE shipments ADD INDEX idx_ship_order (order_id), ADD INDEX idx_ship_ts (ship_ts), ADD INDEX idx_ship_city (province, city);

ALTER TABLE order_status_history ADD INDEX idx_osh_order (order_id), ADD INDEX idx_osh_ts (ts);最终测试数据量

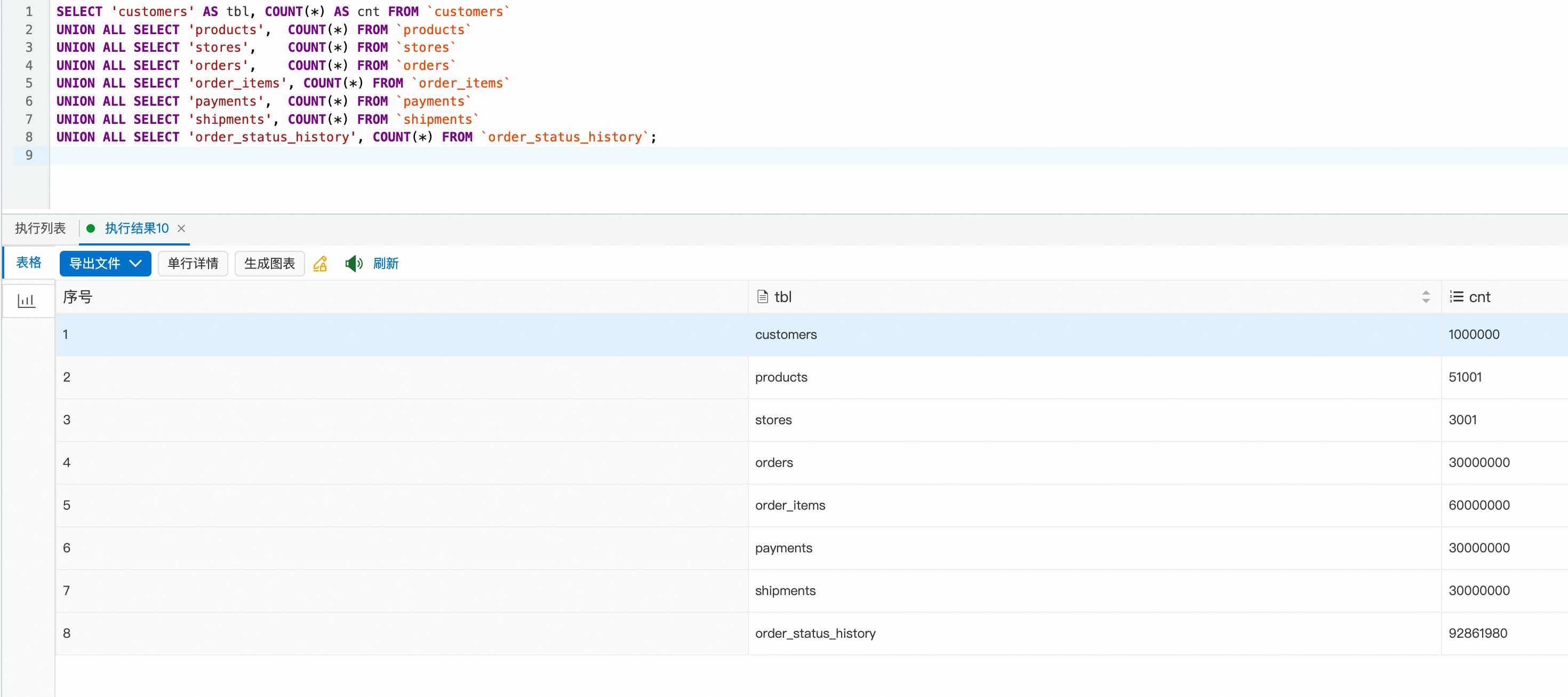

可以看到表量级基本是几千万,有一个状态表上亿

/* ========== Scale check ========== */

SELECT 'customers' AS tbl, COUNT(*) AS row_cnt FROM customers -- ROWS is a reserved word in MySQL 8.0, so the alias is row_cnt

UNION ALL SELECT 'products', COUNT(*) FROM products

UNION ALL SELECT 'stores', COUNT(*) FROM stores

UNION ALL SELECT 'orders', COUNT(*) FROM orders

UNION ALL SELECT 'order_items', COUNT(*) FROM order_items

UNION ALL SELECT 'payments', COUNT(*) FROM payments

UNION ALL SELECT 'shipments', COUNT(*) FROM shipments

UNION ALL SELECT 'order_status_history', COUNT(*) FROM order_status_history;
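Row counts alone do not show whether the generated keys line up across tables. The checks below are a minimal sketch, assuming the generation script above has been run on the primary and that IDs are meant to be dense and start at 1 (an assumption; the post does not state it).

```sql
-- 1) Key ranges: with dense IDs starting at 1, min_id = 1 and max_id = row_cnt.
SELECT 'customers' AS tbl, MIN(customer_id) AS min_id, MAX(customer_id) AS max_id, COUNT(*) AS row_cnt FROM customers
UNION ALL SELECT 'stores', MIN(store_id), MAX(store_id), COUNT(*) FROM stores
UNION ALL SELECT 'products', MIN(product_id), MAX(product_id), COUNT(*) FROM products;

-- 2) Orphans: orders whose customer or store row does not exist.
SELECT
  SUM(c.customer_id IS NULL) AS orders_without_customer,
  SUM(s.store_id IS NULL) AS orders_without_store
FROM orders o
LEFT JOIN customers c ON c.customer_id = o.customer_id
LEFT JOIN stores s ON s.store_id = o.store_id;

-- 3) The helper function is deterministic and stays within [0, 1].
SELECT
  fn_rand01('demo-key') = fn_rand01('demo-key') AS is_deterministic,
  fn_rand01('demo-key') BETWEEN 0 AND 1 AS in_unit_interval;
```

Non-zero orphan counts would mean that inner joins in the analytical queries silently drop those orders.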

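OLAP query tests

The queries are all fairly complex AP queries. Each one reads essentially all the data in the tables involved; no WHERE condition is used to narrow the data range.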

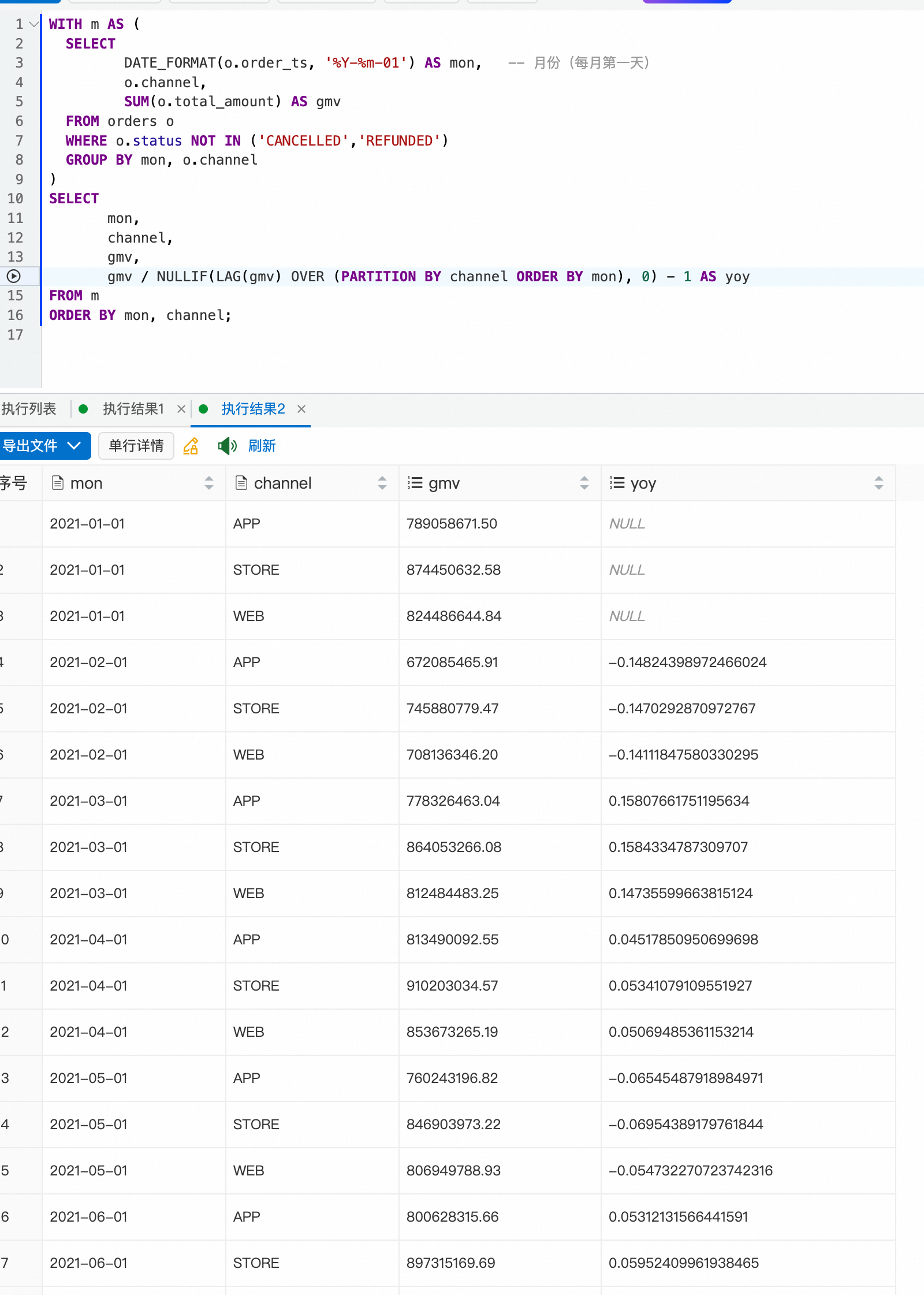

Monthly GMV

-- Monthly GMV & month-over-month change (by channel)

WITH m AS (

SELECT

DATE_FORMAT(o.order_ts, '%Y-%m-01') AS mon, -- month (first day of each month)

o.channel,

SUM(o.total_amount) AS gmv

FROM orders o

WHERE o.status NOT IN ('CANCELLED','REFUNDED')

GROUP BY mon, o.channel

)

SELECT

mon,

channel,

gmv,

-- LAG with its default offset of 1 compares against the previous month, so this is a month-over-month change

gmv / NULLIF(LAG(gmv) OVER (PARTITION BY channel ORDER BY mon), 0) - 1 AS mom

FROM m

ORDER BY mon, channel;
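The `LAG(gmv)` call above uses the default offset of 1, so each month is compared with the month before it. If a true year-over-year figure is wanted, one possible variant (a sketch, not the query that was benchmarked) joins each month to the same month one year earlier. It reuses the `orders` table from this post; casting `mon` to `DATE` is a choice made here so the one-year shift can be expressed with `INTERVAL`.

```sql
WITH m AS (
  SELECT
    CAST(DATE_FORMAT(o.order_ts, '%Y-%m-01') AS DATE) AS mon,
    o.channel,
    SUM(o.total_amount) AS gmv
  FROM orders o
  WHERE o.status NOT IN ('CANCELLED','REFUNDED')
  GROUP BY mon, o.channel
)
SELECT
  cur.mon,
  cur.channel,
  cur.gmv,
  -- NULL for the first 12 months, which have no prior-year row
  cur.gmv / NULLIF(prev.gmv, 0) - 1 AS yoy
FROM m AS cur
LEFT JOIN m AS prev
  ON prev.channel = cur.channel
 AND prev.mon = cur.mon - INTERVAL 1 YEAR
ORDER BY cur.mon, cur.channel;
```

The self-join stays correct even if a channel has no orders in some month, whereas `LAG(gmv, 12)` would silently compare against the wrong month in that case.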

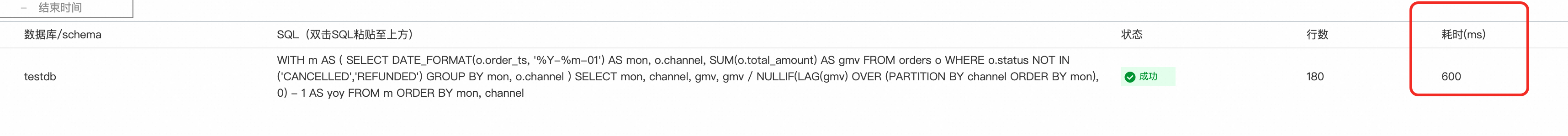

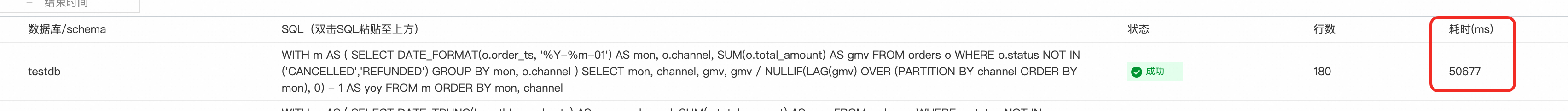

duckdb

mysql

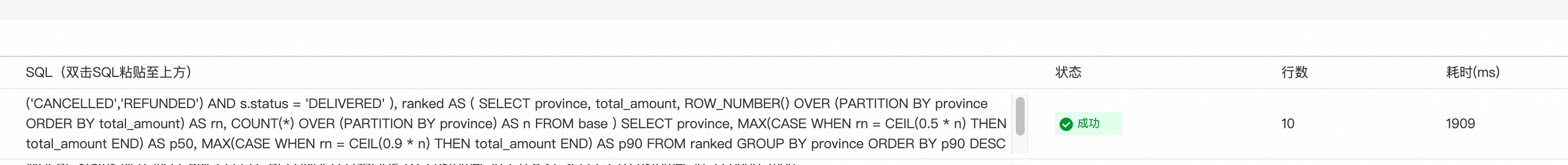

Order value distribution (P50/P90) by province

-- Order value distribution (P50/P90) by province

WITH base AS (

SELECT s.province, o.total_amount

FROM orders o

JOIN shipments s ON o.order_id = s.order_id

WHERE o.status NOT IN ('CANCELLED','REFUNDED')

AND s.status = 'DELIVERED'

),

ranked AS (

SELECT

province,

total_amount,

ROW_NUMBER() OVER (PARTITION BY province ORDER BY total_amount) AS rn,

COUNT(*) OVER (PARTITION BY province) AS n

FROM base

)

SELECT

province,

MAX(CASE WHEN rn = CEIL(0.5 * n) THEN total_amount END) AS p50,

MAX(CASE WHEN rn = CEIL(0.9 * n) THEN total_amount END) AS p90

FROM ranked

GROUP BY province

ORDER BY p90 DESC

LIMIT 20;
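The query above uses the nearest-rank definition of a percentile: sort the values within each province and take the row at position `CEIL(p * n)`. A tiny self-contained example with made-up amounts (10 through 50, under a placeholder province named `demo`) shows the same `ranked` logic on data that can be checked by hand.

```sql
WITH base AS (
  SELECT 'demo' AS province, 10 AS total_amount
  UNION ALL SELECT 'demo', 20
  UNION ALL SELECT 'demo', 30
  UNION ALL SELECT 'demo', 40
  UNION ALL SELECT 'demo', 50
),
ranked AS (
  SELECT
    province,
    total_amount,
    ROW_NUMBER() OVER (PARTITION BY province ORDER BY total_amount) AS rn,
    COUNT(*) OVER (PARTITION BY province) AS n
  FROM base
)
SELECT
  province,
  MAX(CASE WHEN rn = CEIL(0.5 * n) THEN total_amount END) AS p50, -- rn = CEIL(2.5) = 3
  MAX(CASE WHEN rn = CEIL(0.9 * n) THEN total_amount END) AS p90  -- rn = CEIL(4.5) = 5
FROM ranked
GROUP BY province;
-- Result: province = 'demo', p50 = 30, p90 = 50
```

Nearest-rank always returns a value that actually occurs in the data, which is why no interpolation step is needed here.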

duckdb

mysql

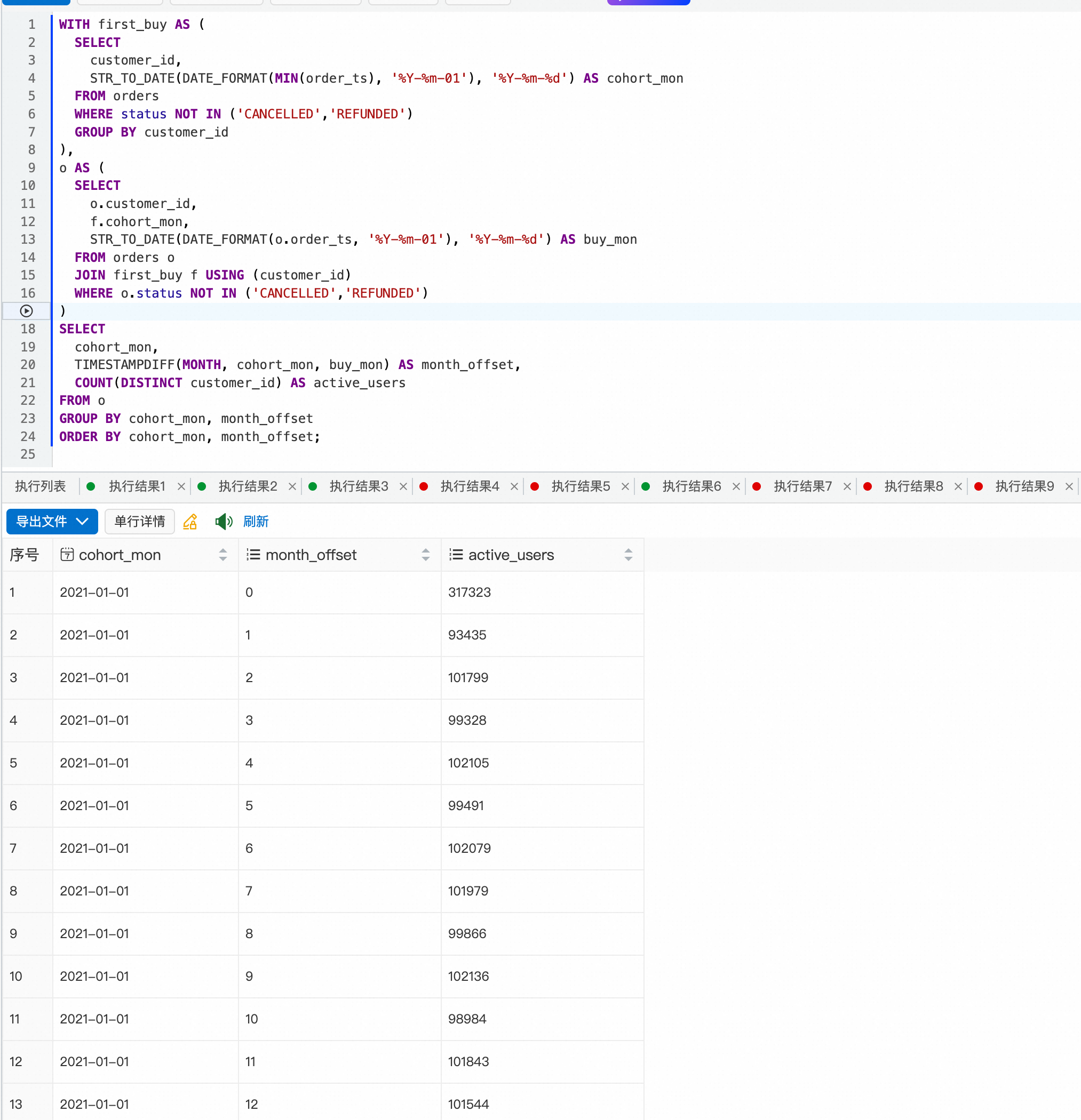

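Repurchase analysis (next-month repurchase rate by signup-month cohort)

SQL (in this query the cohort is the month of each customer's first non-cancelled, non-refunded order, and `month_offset = 1` is the following month):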

WITH first_buy AS (

SELECT

customer_id,

STR_TO_DATE(DATE_FORMAT(MIN(order_ts), '%Y-%m-01'), '%Y-%m-%d') AS cohort_mon

FROM orders

WHERE status NOT IN ('CANCELLED','REFUNDED')

GROUP BY customer_id

),

o AS (

SELECT

o.customer_id,

f.cohort_mon,

STR_TO_DATE(DATE_FORMAT(o.order_ts, '%Y-%m-01'), '%Y-%m-%d') AS buy_mon

FROM orders o

JOIN first_buy f USING (customer_id)

WHERE o.status NOT IN ('CANCELLED','REFUNDED')

)

SELECT

cohort_mon,

TIMESTAMPDIFF(MONTH, cohort_mon, buy_mon) AS month_offset,

COUNT(DISTINCT customer_id) AS active_users

FROM o

GROUP BY cohort_mon, month_offset

ORDER BY cohort_mon, month_offset;

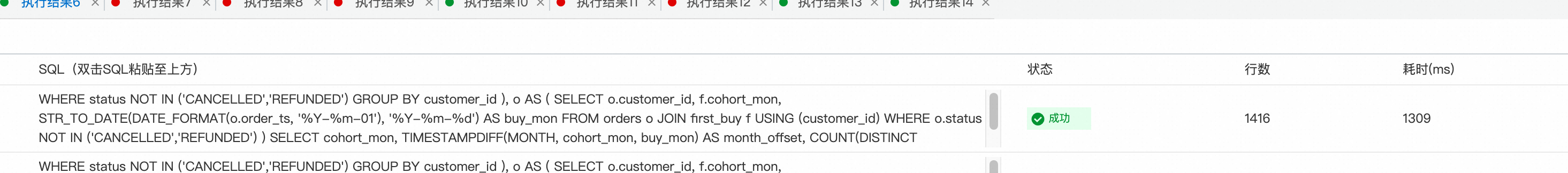

duckdb

mysql

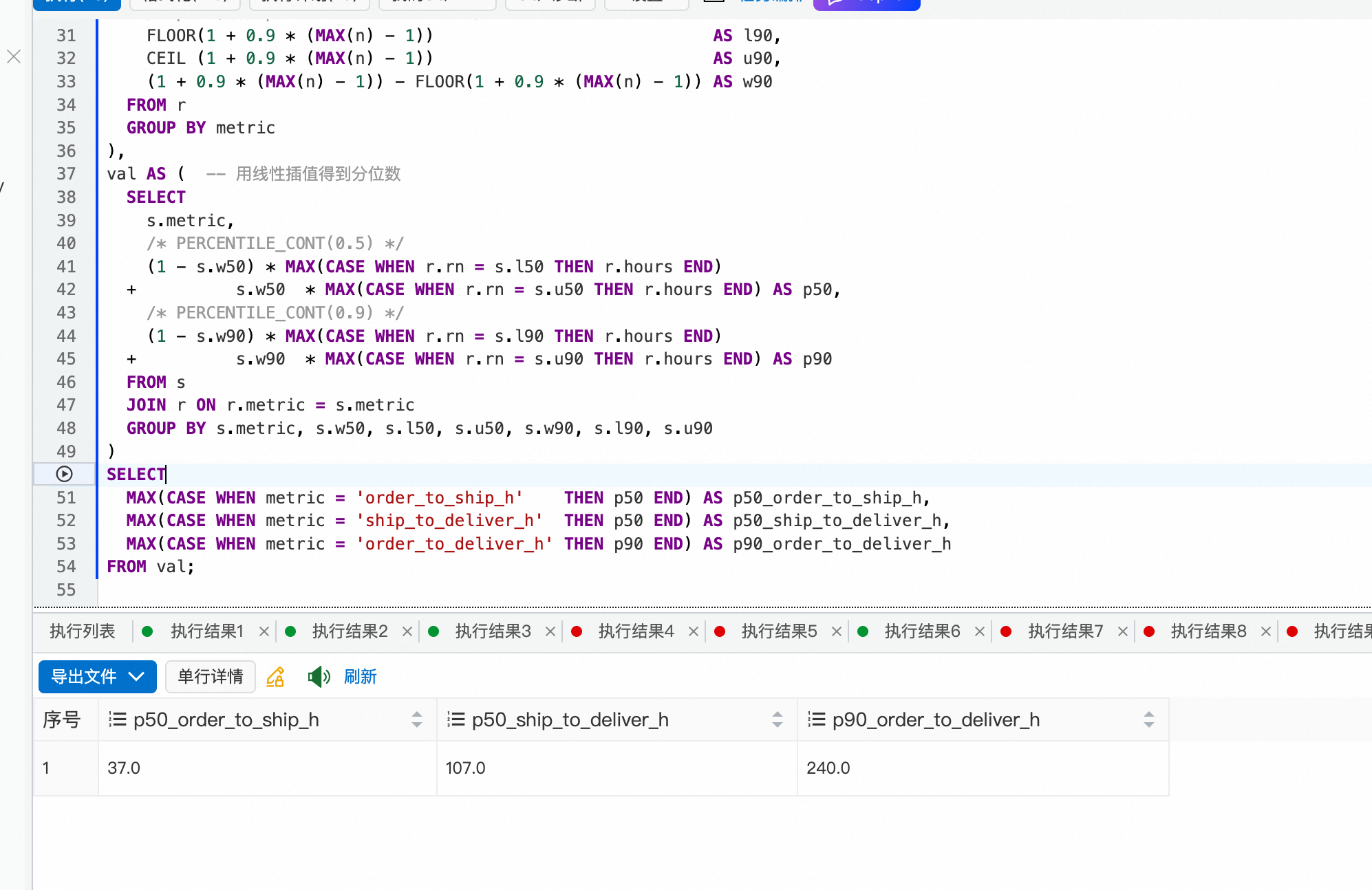

Delivery time (order -> ship -> deliver percentiles)

-- Delivery time (order -> ship -> deliver percentiles)

WITH t AS (

SELECT o.order_id, o.order_ts, s.ship_ts, s.deliver_ts

FROM orders o

JOIN shipments s USING (order_id)

WHERE o.channel IN ('APP','WEB') AND s.status = 'DELIVERED'

),

u AS ( -- put the three durations into one column so the percentile logic can be reused

SELECT 'order_to_ship_h' AS metric, TIMESTAMPDIFF(HOUR, order_ts, ship_ts) AS hours FROM t

UNION ALL

SELECT 'ship_to_deliver_h' AS metric, TIMESTAMPDIFF(HOUR, ship_ts, deliver_ts) AS hours FROM t

UNION ALL

SELECT 'order_to_deliver_h' AS metric, TIMESTAMPDIFF(HOUR, order_ts, deliver_ts) AS hours FROM t

),

r AS (

SELECT

metric,

hours,

ROW_NUMBER() OVER (PARTITION BY metric ORDER BY hours) AS rn,

COUNT(*) OVER (PARTITION BY metric) AS n

FROM u

),

s AS ( -- interpolation position parameters for p50 / p90

SELECT

metric,

MAX(n) AS n,

/* p50 parameters: pos = 1 + 0.5*(n-1) */

FLOOR(1 + 0.5 * (MAX(n) - 1)) AS l50,

CEIL(1 + 0.5 * (MAX(n) - 1)) AS u50,

(1 + 0.5 * (MAX(n) - 1)) - FLOOR(1 + 0.5 * (MAX(n) - 1)) AS w50,

/* p90 parameters: pos = 1 + 0.9*(n-1) */

FLOOR(1 + 0.9 * (MAX(n) - 1)) AS l90,

CEIL(1 + 0.9 * (MAX(n) - 1)) AS u90,

(1 + 0.9 * (MAX(n) - 1)) - FLOOR(1 + 0.9 * (MAX(n) - 1)) AS w90

FROM r

GROUP BY metric

),

val AS ( -- percentiles via linear interpolation

SELECT

s.metric,

/* PERCENTILE_CONT(0.5) */

(1 - s.w50) * MAX(CASE WHEN r.rn = s.l50 THEN r.hours END)

+ s.w50 * MAX(CASE WHEN r.rn = s.u50 THEN r.hours END) AS p50,

/* PERCENTILE_CONT(0.9) */

(1 - s.w90) * MAX(CASE WHEN r.rn = s.l90 THEN r.hours END)

+ s.w90 * MAX(CASE WHEN r.rn = s.u90 THEN r.hours END) AS p90

FROM s

JOIN r ON r.metric = s.metric

GROUP BY s.metric, s.w50, s.l50, s.u50, s.w90, s.l90, s.u90

)

SELECT

MAX(CASE WHEN metric = 'order_to_ship_h' THEN p50 END) AS p50_order_to_ship_h,

MAX(CASE WHEN metric = 'ship_to_deliver_h' THEN p50 END) AS p50_ship_to_deliver_h,

MAX(CASE WHEN metric = 'order_to_deliver_h' THEN p90 END) AS p90_order_to_deliver_h

FROM val;
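The `s` and `val` CTEs above implement a percentile with linear interpolation between the two neighbouring ranks. Written out as a formula, with a small worked example on made-up values (10, 20, 30, 40), the calculation looks like this; the symbols mirror the column names `l`, `u`, and `w` used in the query.

```latex
% Sorted values x_1 <= ... <= x_n, percentile level 0 <= p <= 1
\begin{aligned}
\mathrm{pos} &= 1 + p\,(n-1), \qquad l = \lfloor \mathrm{pos} \rfloor, \qquad u = \lceil \mathrm{pos} \rceil, \qquad w = \mathrm{pos} - l \\
P_p &= (1-w)\,x_l + w\,x_u \\[4pt]
% Worked example: x = (10, 20, 30, 40), n = 4, p = 0.9
\mathrm{pos} &= 1 + 0.9 \times 3 = 3.7, \qquad l = 3, \quad u = 4, \quad w = 0.7 \\
P_{0.9} &= 0.3 \times 30 + 0.7 \times 40 = 37
\end{aligned}
```

When `pos` is a whole number, `l = u` and `w = 0`, so the formula simply returns the value at that rank.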

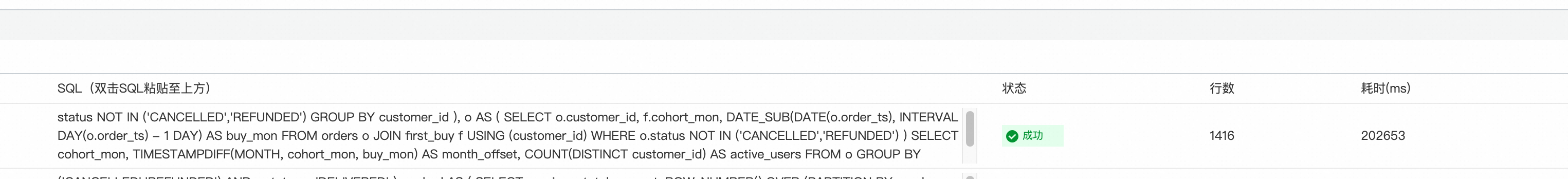

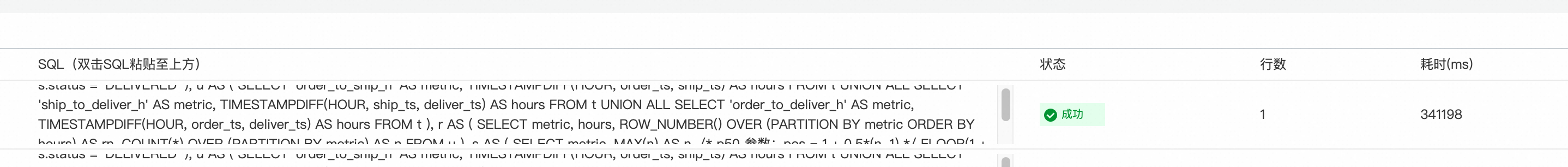

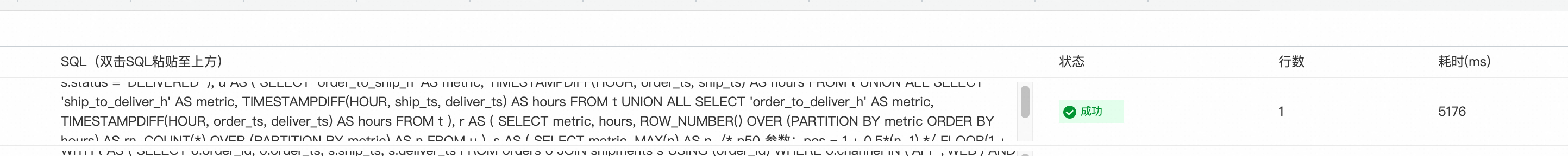

MySQL:

duckdb

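Overall execution time comparison

Here are the execution times side by side:

| Query | DuckDB | MySQL 8.0 |
|---|---|---|
| Monthly GMV | 600 ms | 50 s |
| Order value distribution (P50/P90) by province | 1.9 s | 67 s |
| Repurchase analysis (next-month repurchase rate by signup-month cohort) | 1.3 s | 202 s |
| Delivery time (order -> ship -> deliver percentiles) | 5.1 s | 341 s |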
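To turn the timings in the table above into speedup factors, the arithmetic can be done in a single statement. The figures are copied from the table and converted to seconds; the query names are shorthand labels chosen here.

```sql
WITH timings AS (
  SELECT 'Monthly GMV' AS query_name, 0.6 AS duckdb_s, 50 AS mysql_s
  UNION ALL SELECT 'Order value P50/P90 by province', 1.9, 67
  UNION ALL SELECT 'Repurchase cohort analysis', 1.3, 202
  UNION ALL SELECT 'Delivery time percentiles', 5.1, 341
)
SELECT
  query_name,
  duckdb_s,
  mysql_s,
  ROUND(mysql_s / duckdb_s, 1) AS speedup
FROM timings
ORDER BY speedup;
-- speedup column, in order: 35.3, 66.9, 83.3, 155.4
```

All four queries therefore land between roughly 35x and 155x on this 4-core, 16 GB setup.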

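Overall, the advertised 5-200x speedup for analytical queries holds up in this test: the four queries above ran roughly 35x to 155x faster than on MySQL 8.0. Performance was also noticeably better than CK (CK timings are not included in this comparison). To that I have just two words: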

QuickBI

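With AP queries this fast and close to real time, the benefit is no longer just a technical metric; it translates directly into business value:

- When analysis results come back in seconds, the business shifts from "looking back" to "responding in real time".

- Less latency in data delivery shortens the distance between information and action.

- Strategies based on different analyses can be tried more often and more safely, because the results can be verified right away.

- Analysts, product, and operations work from the same real-time data source, which reduces back-and-forth and waiting.

Moving Quick BI's database access from the primary instance to the read-only instance is not only faster; it also keeps the load off the primary.

Next, we design a complex SQL scenario and use Alibaba Cloud QuickBI to present sales insights.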

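Business analysis scenario

Goal: analyze the quarterly sales performance of high-value customers (VIP level 3 and above) at stores in the East China (EAST) region during 2023-2024.

We want to drill down by quarter and by product super category ('电子产品', electronics, vs '时尚美妆', fashion and beauty) and analyze the following key metrics (KPIs):

- Total sales

- Total orders

- Total customers

- Total refund amount

- Average time from payment to shipment

- Average time to delivery

- Quarter-over-quarter repurchase rate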
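The last KPI requires matching each quarter with the one immediately before it, including the step from Q1 back to Q4 of the previous year. One compact way to express that, sketched here on three made-up (year, quarter) rows, is to map each pair to a single running index `year * 4 + quarter` and join on a difference of 1.

```sql
WITH q AS (
  SELECT 2023 AS order_year, 4 AS order_quarter
  UNION ALL SELECT 2024, 1
  UNION ALL SELECT 2024, 2
)
SELECT
  curr.order_year,
  curr.order_quarter,
  prev.order_year AS prev_year,
  prev.order_quarter AS prev_quarter
FROM q AS curr
LEFT JOIN q AS prev
  ON curr.order_year * 4 + curr.order_quarter
   = prev.order_year * 4 + prev.order_quarter + 1
ORDER BY curr.order_year, curr.order_quarter;
-- 2023 Q4 -> NULL, NULL   (no earlier row in this sample)
-- 2024 Q1 -> 2023, 4
-- 2024 Q2 -> 2024, 1
```

This is equivalent to spelling out the same-year and cross-year cases as two OR branches; which form to use is a matter of readability.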

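Below is the SQL that implements this scenario. It is fairly complex and touches almost every table in this demo, including several with tens of millions of rows, to represent a realistic complex business analysis.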

WITH

-- 1. Filter the base orders that match the criteria (Filtered Base Orders)

Base_Orders AS (

SELECT

o.order_id,

o.customer_id,

YEAR(o.order_ts) AS order_year,

QUARTER(o.order_ts) AS order_quarter,

o.order_ts,

o.status

FROM orders AS o

JOIN customers AS c ON o.customer_id = c.customer_id

JOIN stores AS s ON o.store_id = s.store_id

WHERE

-- Keep (non-cancelled) orders from 2023-2024, the East China (EAST) region, and customers at VIP level 3 or above

YEAR(o.order_ts) IN (2023, 2024)

AND c.vip_level >= 3

AND s.region_code = 'EAST'

AND o.status IN ('PAID', 'SHIPPED', 'DELIVERED', 'REFUNDED')

),

-- 2. Map each order to its product super categories (Map Orders to Super Categories)

-- This is the basis for all later aggregations

Order_Category_Map AS (

SELECT DISTINCT

bo.order_id,

bo.order_year,

bo.order_quarter,

bo.customer_id,

CASE

WHEN p.category IN ('手机', '电脑', '家电') THEN '电子产品'

WHEN p.category IN ('服饰', '美妆') THEN '时尚美妆'

END AS product_super_category

FROM Base_Orders AS bo

JOIN order_items AS oi ON bo.order_id = oi.order_id

JOIN products AS p ON oi.product_id = p.product_id

WHERE

p.category IN ('手机', '电脑', '家电', '服饰', '美妆')

),

-- 3. Aggregate sales metrics

Agg_Sales AS (

SELECT

ocm.order_year,

ocm.order_quarter,

ocm.product_super_category,

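-- Note: the join to order_items below is on order_id only, so this sums every line of the order, not just the lines in this super category

SUM(oi.line_amount) AS total_sales,

COUNT(DISTINCT ocm.order_id) AS total_orders,

-- Note: this is the customer count for the *current quarter*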

COUNT(DISTINCT ocm.customer_id) AS total_customers_current_q

FROM Order_Category_Map AS ocm

JOIN order_items AS oi ON ocm.order_id = oi.order_id

GROUP BY

ocm.order_year,

ocm.order_quarter,

ocm.product_super_category

),

-- 4. Fulfillment time, step 1: extract the paid / shipped timestamps

Order_Timestamps AS (

SELECT

order_id,

MAX(CASE WHEN status = 'PAID' THEN ts END) AS paid_ts,

MAX(CASE WHEN status = 'SHIPPED' THEN ts END) AS shipped_ts

FROM order_status_history

WHERE order_id IN (SELECT order_id FROM Base_Orders)

AND status IN ('PAID', 'SHIPPED')

GROUP BY order_id

),

-- 5. Fulfillment time, step 2: aggregate the fulfillment metric

Agg_Fulfillment AS (

SELECT

ocm.order_year,

ocm.order_quarter,

ocm.product_super_category,

-- Average "payment to shipment" time, in days

AVG(TIMESTAMPDIFF(HOUR, ot.paid_ts, ot.shipped_ts) / 24.0) AS avg_paid_to_ship_days

FROM Order_Category_Map AS ocm

JOIN Order_Timestamps AS ot ON ocm.order_id = ot.order_id

WHERE

ot.paid_ts IS NOT NULL AND ot.shipped_ts IS NOT NULL

GROUP BY

ocm.order_year,

ocm.order_quarter,

ocm.product_super_category

),

-- 6. Aggregate delivery metrics

Agg_Delivery AS (

SELECT

ocm.order_year,

ocm.order_quarter,

ocm.product_super_category,

AVG(TIMESTAMPDIFF(HOUR, bo.order_ts, s.deliver_ts) / 24.0) AS avg_delivery_days

FROM Order_Category_Map AS ocm

JOIN Base_Orders AS bo ON ocm.order_id = bo.order_id

JOIN shipments AS s ON ocm.order_id = s.order_id

WHERE

bo.status = 'DELIVERED' AND s.deliver_ts IS NOT NULL

GROUP BY

ocm.order_year,

ocm.order_quarter,

ocm.product_super_category

),

-- 7. Aggregate refund metrics

Agg_Refunds AS (

SELECT

ocm.order_year,

ocm.order_quarter,

ocm.product_super_category,

SUM(p.amount) AS total_refunds

FROM Order_Category_Map AS ocm

JOIN Base_Orders AS bo ON ocm.order_id = bo.order_id

JOIN payments AS p ON ocm.order_id = p.order_id

WHERE

bo.status = 'REFUNDED' AND p.status = 'REFUNDED'

GROUP BY

ocm.order_year,

ocm.order_quarter,

ocm.product_super_category

),

-- 8. Repurchase rate, step 1: distinct customers per (quarter, category)

Customer_Period_Activity AS (

SELECT DISTINCT

order_year,

order_quarter,

product_super_category,

customer_id

FROM Order_Category_Map

),

-- 9. Repurchase rate, step 2: count repurchasing customers (numerator)

Agg_Repurchasers_Numerator AS (

SELECT

curr.order_year,

curr.order_quarter,

curr.product_super_category,

-- Numerator: customers who bought in the current quarter and also in the previous quarter

COUNT(DISTINCT prev.customer_id) AS repurchasing_customers_numerator

FROM Customer_Period_Activity AS curr

JOIN Customer_Period_Activity AS prev

ON curr.customer_id = prev.customer_id

AND curr.product_super_category = prev.product_super_category

-- Core: match the previous quarter

AND (

-- Same year, quarter - 1 (e.g. 2023-Q2 vs 2023-Q1)

(curr.order_year = prev.order_year AND curr.order_quarter = prev.order_quarter + 1)

OR

-- Across years, Q1 vs Q4 (e.g. 2024-Q1 vs 2023-Q4)

(curr.order_year = prev.order_year + 1 AND curr.order_quarter = 1 AND prev.order_quarter = 4)

)

GROUP BY

curr.order_year,

curr.order_quarter,

curr.product_super_category

)

-- 10. Final combination

-- Combine all aggregated metrics by year, quarter, and product super category

SELECT

sa_curr.order_year,

sa_curr.order_quarter,

sa_curr.product_super_category,

-- Sales metrics

COALESCE(sa_curr.total_sales, 0) AS total_sales,

COALESCE(sa_curr.total_orders, 0) AS total_orders,

COALESCE(sa_curr.total_customers_current_q, 0) AS total_customers_current_q,

-- Refund metrics

COALESCE(ar.total_refunds, 0) AS total_refunds,

-- Efficiency metrics

COALESCE(af.avg_paid_to_ship_days, 0) AS avg_paid_to_ship_days,

COALESCE(ad.avg_delivery_days, 0) AS avg_delivery_days,

-- Repurchase rate metrics

-- Numerator: repurchasing customers in the current quarter (from Agg_Repurchasers_Numerator)

COALESCE(rep.repurchasing_customers_numerator, 0) AS repurchasing_customers_numerator,

-- Denominator: total customers in the previous quarter (from sa_prev)

COALESCE(sa_prev.total_customers_current_q, 0) AS total_customers_prior_q_denominator,

-- Repurchase rate = numerator / denominator

CASE

WHEN COALESCE(sa_prev.total_customers_current_q, 0) > 0

THEN COALESCE(rep.repurchasing_customers_numerator, 0) * 1.0 / sa_prev.total_customers_current_q

ELSE 0

END AS qoq_repurchase_rate

-- Metrics for the current quarter (sa_curr)

FROM Agg_Sales AS sa_curr

-- (Joins for the other metrics)

LEFT JOIN Agg_Fulfillment AS af

ON sa_curr.order_year = af.order_year

AND sa_curr.order_quarter = af.order_quarter

AND sa_curr.product_super_category = af.product_super_category

LEFT JOIN Agg_Delivery AS ad

ON sa_curr.order_year = ad.order_year

AND sa_curr.order_quarter = ad.order_quarter

AND sa_curr.product_super_category = ad.product_super_category

LEFT JOIN Agg_Refunds AS ar

ON sa_curr.order_year = ar.order_year

AND sa_curr.order_quarter = ar.order_quarter

AND sa_curr.product_super_category = ar.product_super_category

-- (Joins for the repurchase rate)

-- 1. Join to get the repurchase-rate numerator

LEFT JOIN Agg_Repurchasers_Numerator AS rep

ON sa_curr.order_year = rep.order_year

AND sa_curr.order_quarter = rep.order_quarter

AND sa_curr.product_super_category = rep.product_super_category

-- 2. Self-join Agg_Sales (aliased as sa_prev) to get the repurchase-rate denominator

LEFT JOIN Agg_Sales AS sa_prev

ON sa_curr.product_super_category = sa_prev.product_super_category

-- Core: match the previous quarter

AND (

(sa_curr.order_year = sa_prev.order_year AND sa_curr.order_quarter = sa_prev.order_quarter + 1)

OR

(sa_curr.order_year = sa_prev.order_year + 1 AND sa_curr.order_quarter = 1 AND sa_prev.order_quarter = 4)

)

ORDER BY

sa_curr.order_year,

sa_curr.order_quarter,

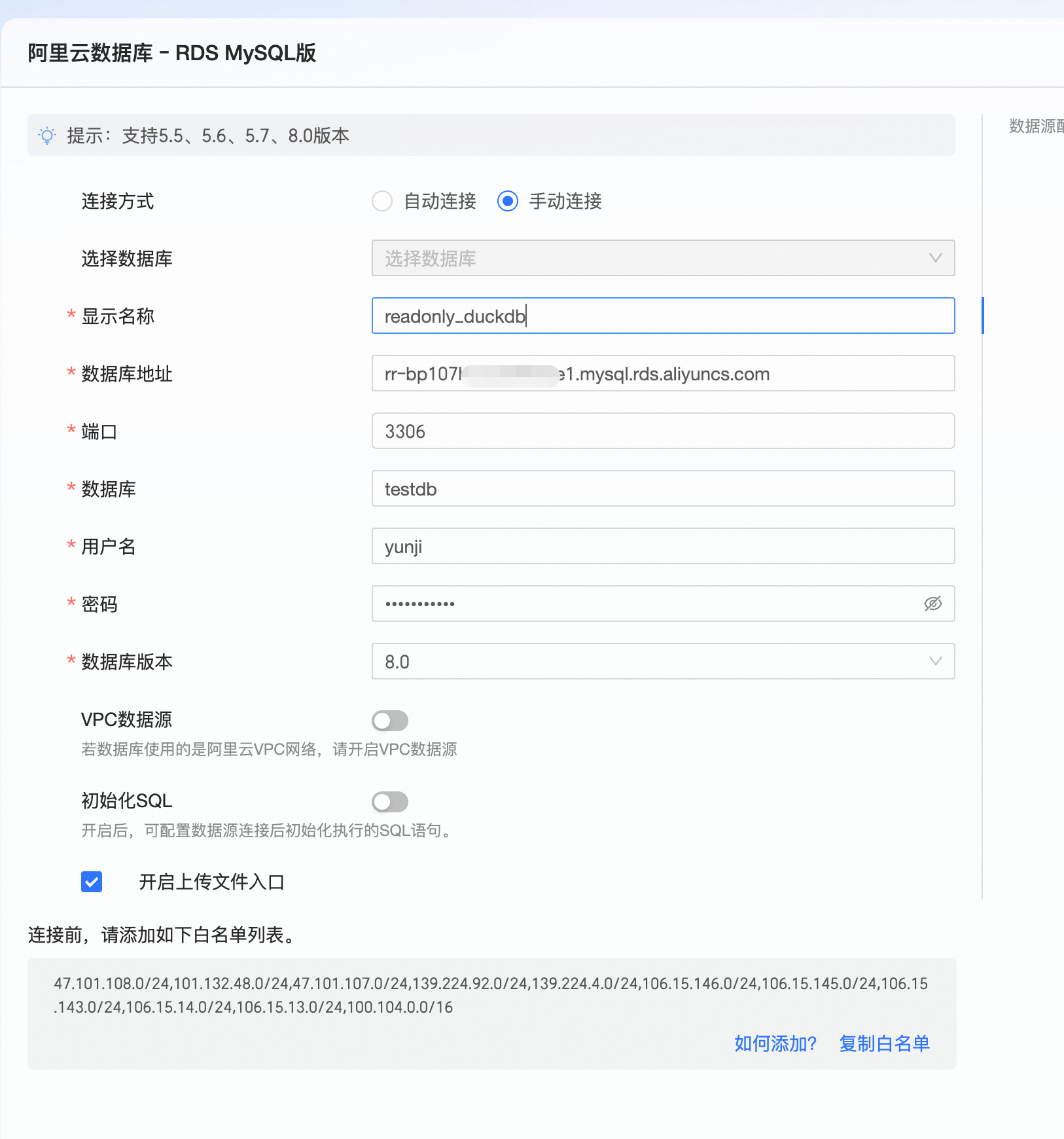

sa_curr.product_super_category;在QuickBI创建数据源,直接使用DuckDB的公网连接地址,如下图:

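Creating the dataset with the SQL above gives the following result:

Let's start with a simple cross table. Because the data is randomly generated, the figures look fairly uniform, but the experience is the same as with real data: it is easy to draw sales insights from a complex set of tables, and the results are rendered within seconds.

In addition, using the DuckDB node from QuickBI feels almost identical to using a regular MySQL instance. Compatibility means no migration cost, which amplifies the value of "fast" even further: not just fast queries, but a fast path all the way from asking a question to seeing the answer.

Summary

Based on this test, the architecture of a MySQL primary plus a DuckDB analytic read-only instance is clearly better than the heterogeneous "DTS→CK" approach in freshness, compatibility, and operational complexity. On complex OLAP queries it was one to two orders of magnitude faster than MySQL 8.0, and it connects to QuickBI with almost no changes, with an experience close to querying MySQL directly. Weighing cost and usability, I plan to use DuckDB as the analytic data source for QuickBI and to migrate most AP queries from ClickHouse to DuckDB.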