1. Using transparent read/write splitting through JDBC

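Test procedure:

- Database connection test
- Prepare test data
- Single-node primary performance test (baseline)
- Read/write splitting cluster performance test
- Performance comparison and analysis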

#maven

    <dependency>
        <groupId>com.kingbase</groupId>
        <artifactId>kingbase8</artifactId>
        <version>9.0.0</version>
        <scope>system</scope>
        <systemPath>${project.basedir}/lib/kingbase8-9.0.0.jar</systemPath>
    </dependency>

#Configuration class

    package com.kingbase.demo.config;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.sql.*;

    public class RemoteDatabaseConfig {

        private static final Logger logger = LoggerFactory.getLogger(RemoteDatabaseConfig.class);

        private static final String VIRTUAL_MACHINE_IP = "192.168.40.111";
        private static final String PORT = "54321";
        private static final String DATABASE = "test";
        private static final String USERNAME = "system";
        private static final String PASSWORD = "kingbase";

        // Read/write splitting cluster configuration
        private static final String MASTER_NODE = VIRTUAL_MACHINE_IP;
        private static final String SLAVE_NODE = "192.168.40.112";
        private static final String NODE_LIST = "node1,node2";

        static {
            try {
                Class.forName("com.kingbase8.Driver");
                logger.info("Kingbase driver loaded");
            } catch (ClassNotFoundException e) {
                logger.error("Failed to load the Kingbase driver", e);
                throw new RuntimeException("Driver load failed; check the driver jar path", e);
            }
        }

        /** Returns a connection with transparent read/write splitting enabled. */
        public static Connection getReadWriteConnection() throws SQLException {
            String url = buildReadWriteUrl();
            logger.info("Connecting to the remote Kingbase cluster: {}", url);
            return DriverManager.getConnection(url, USERNAME, PASSWORD);
        }

        /** Returns a direct connection to the primary. */
        public static Connection getMasterConnection() throws SQLException {
            String url = String.format("jdbc:kingbase8://%s:%s/%s", MASTER_NODE, PORT, DATABASE);
            logger.info("Connecting directly to the primary: {}", MASTER_NODE);
            return DriverManager.getConnection(url, USERNAME, PASSWORD);
        }

        /** Returns a direct connection to the standby. */
        public static Connection getSlaveConnection() throws SQLException {
            String url = String.format("jdbc:kingbase8://%s:%s/%s", SLAVE_NODE, PORT, DATABASE);
            logger.info("Connecting directly to the standby: {}", SLAVE_NODE);
            return DriverManager.getConnection(url, USERNAME, PASSWORD);
        }

        /** Builds the read/write splitting URL. */
        private static String buildReadWriteUrl() {
            return String.format(
                    "jdbc:kingbase8://%s:%s/%s?USEDISPATCH=true&SLAVE_ADD=%s&SLAVE_PORT=%s&nodeList=%s",
                    MASTER_NODE, PORT, DATABASE, SLAVE_NODE, PORT, NODE_LIST
            );
        }

        /** Tests the read/write splitting, primary, and standby connections in turn. */
        public static void testAllConnections() {
            logger.info("=== Remote Kingbase connection test ===");
            testConnection("read/write splitting connection", RemoteDatabaseConfig::getReadWriteConnection);
            testConnection("primary connection", RemoteDatabaseConfig::getMasterConnection);
            testConnection("standby connection", RemoteDatabaseConfig::getSlaveConnection);
        }

        private static void testConnection(String connectionType, ConnectionSupplier supplier) {
            logger.info("\n--- Testing {} ---", connectionType);
            // try-with-resources closes the connection even if a call below fails
            try (Connection conn = supplier.get()) {
                DatabaseMetaData metaData = conn.getMetaData();
                logger.info("{} succeeded", connectionType);
                logger.info("  Database: {}", metaData.getDatabaseProductName());
                logger.info("  Version: {}", metaData.getDatabaseProductVersion());
                logger.info("  Driver: {} {}", metaData.getDriverName(), metaData.getDriverVersion());

                // Show which server this connection actually reached
                showServerInfo(conn);
            } catch (SQLException e) {
                logger.error("{} failed: {}", connectionType, e.getMessage());
            }
        }

        private static void showServerInfo(Connection conn) throws SQLException {
            try (Statement stmt = conn.createStatement();
                 ResultSet rs = stmt.executeQuery("SELECT inet_server_addr(), inet_server_port()")) {
                if (rs.next()) {
                    String serverAddr = rs.getString(1);
                    int serverPort = rs.getInt(2);
                    logger.info("  Current server: {}:{}", serverAddr, serverPort);
                }
            }
        }

        public static Connection getOptimizedReadWriteConnection() throws SQLException {
            String url = buildReadWriteUrl()
                    + "&prepareThreshold=5"
                    + "&readListStrategy=1"
                    + "&targetServerType=any"
                    + "&loadBalanceHosts=true";
            logger.info("Using the tuned read/write splitting connection");
            return DriverManager.getConnection(url, USERNAME, PASSWORD);
        }

        public static Connection getOptimizedMasterConnection() throws SQLException {
            String url = String.format("jdbc:kingbase8://%s:%s/%s?prepareThreshold=5",
                    MASTER_NODE, PORT, DATABASE);
            return DriverManager.getConnection(url, USERNAME, PASSWORD);
        }

        @FunctionalInterface
        private interface ConnectionSupplier {
            Connection get() throws SQLException;
        }

        // Alternative URL with timeout and keep-alive settings; not called by the tests in this article.
        private static String buildOptimizedReadWriteUrl() {
            return String.format(
                    "jdbc:kingbase8://%s:%s/%s?" +
                    "USEDISPATCH=true&" +
                    "SLAVE_ADD=%s&" +
                    "SLAVE_PORT=%s&" +
                    "nodeList=%s&" +
                    "connectTimeout=5000&" +
                    "socketTimeout=30000&" +
                    "loginTimeout=10&" +
                    "tcpKeepAlive=true&" +
                    "loadBalanceHosts=true&" +
                    "targetServerType=primary&" +
                    "readOnly=false",
                    MASTER_NODE, PORT, DATABASE, SLAVE_NODE, PORT, NODE_LIST
            );
        }
    }
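To make the connection string easier to inspect, the following sketch rebuilds the read/write splitting URL from the configuration class as a standalone program. The parameter names (`USEDISPATCH`, `SLAVE_ADD`, `SLAVE_PORT`, `nodeList`) and the addresses are copied from the listing above; the class name `ReadWriteUrlSketch` is hypothetical, and the code only formats a string without opening a connection.

```java
// Hypothetical helper: formats the read/write splitting JDBC URL used in this article.
final class ReadWriteUrlSketch {

    // Builds the URL; the parameter names are taken from RemoteDatabaseConfig above.
    static String buildReadWriteUrl(String master, String slave, String port,
                                    String database, String nodeList) {
        return String.format(
                "jdbc:kingbase8://%s:%s/%s?USEDISPATCH=true&SLAVE_ADD=%s&SLAVE_PORT=%s&nodeList=%s",
                master, port, database, slave, port, nodeList);
    }

    public static void main(String[] args) {
        // Prints the URL for the two-node test environment described in this article.
        System.out.println(buildReadWriteUrl(
                "192.168.40.111", "192.168.40.112", "54321", "test", "node1,node2"));
    }
}
```

Printing the URL before connecting is a quick way to confirm that the standby address and port ended up in the expected parameters.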

#Main performance test flow

    package com.kingbase.demo.performance;

    import com.kingbase.demo.config.RemoteDatabaseConfig;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.math.BigDecimal;
    import java.sql.*;
    import java.util.ArrayList;
    import java.util.List;
    import java.util.Random;
    import java.util.concurrent.*;
    import java.util.concurrent.atomic.AtomicLong;

    public class PerformanceComparisonTest {

        private static final Logger logger = LoggerFactory.getLogger(PerformanceComparisonTest.class);

        private static final int THREAD_COUNT = 20;
        private static final int TEST_DURATION_SECONDS = 30;
        private static final int BATCH_SIZE = 1000;

        private final Random random = new Random();

        public static class PerformanceMetrics {
            public long totalQueries = 0;
            public long totalWrites = 0;
            public long totalReads = 0;
            public long totalTimeMs = 0;
            public double queriesPerSecond = 0;
            public double writesPerSecond = 0;
            public double readsPerSecond = 0;

            @Override
            public String toString() {
                return String.format(
                        "Total queries: %,d | Total writes: %,d | Total reads: %,d | Elapsed: %,dms | QPS: %.2f | Write TPS: %.2f | Read QPS: %.2f",
                        totalQueries, totalWrites, totalReads, totalTimeMs,
                        queriesPerSecond, writesPerSecond, readsPerSecond
                );
            }
        }

        /** Creates the test table and loads the initial data. */
        public void prepareTestData() {
            logger.info("=== Preparing performance test data ===");

            String createTableSQL =
                    "CREATE TABLE IF NOT EXISTS performance_test (" +
                    " id BIGSERIAL PRIMARY KEY, " +
                    " user_id INTEGER NOT NULL, " +
                    " order_no VARCHAR(50) UNIQUE NOT NULL, " +
                    " product_name VARCHAR(200) NOT NULL, " +
                    " amount DECIMAL(15,2) NOT NULL, " +
                    " status VARCHAR(20) DEFAULT 'ACTIVE', " +
                    " create_time TIMESTAMP DEFAULT CURRENT_TIMESTAMP, " +
                    " update_time TIMESTAMP DEFAULT CURRENT_TIMESTAMP" +
                    ")";

            try (Connection conn = RemoteDatabaseConfig.getMasterConnection();
                 Statement stmt = conn.createStatement()) {
                stmt.execute(createTableSQL);
                logger.info("Performance test table created");
                insertInitialTestData();
            } catch (SQLException e) {
                logger.error("Failed to prepare test data", e);
            }
        }

        private void insertInitialTestData() {
            String sql = "INSERT INTO performance_test (user_id, order_no, product_name, amount) VALUES (?, ?, ?, ?)";

            try (Connection conn = RemoteDatabaseConfig.getMasterConnection();
                 PreparedStatement pstmt = conn.prepareStatement(sql)) {
                conn.setAutoCommit(false);

                for (int i = 1; i <= 100000; i++) {
                    pstmt.setInt(1, i % 1000);
                    pstmt.setString(2, "ORDER_" + System.currentTimeMillis() + "_" + i);
                    pstmt.setString(3, "Product_" + (i % 100));
                    pstmt.setBigDecimal(4, BigDecimal.valueOf((i % 1000) + 100.50));
                    pstmt.addBatch();

                    if (i % BATCH_SIZE == 0) {
                        pstmt.executeBatch();
                        conn.commit();
                        logger.info("Inserted {} initial rows", i);
                    }
                }

                pstmt.executeBatch();
                conn.commit();
                logger.info("Initial test data inserted: 100,000 rows");
            } catch (SQLException e) {
                logger.error("Failed to insert initial data", e);
            }
        }

        /** Test 1: single-node primary performance (baseline). */
        public PerformanceMetrics testSingleNodePerformance() {
            logger.info("\n=== Test 1: single-node primary performance (baseline) ===");

            PerformanceMetrics metrics = new PerformanceMetrics();
            AtomicLong totalQueries = new AtomicLong(0);
            AtomicLong totalWrites = new AtomicLong(0);
            AtomicLong totalReads = new AtomicLong(0);

            ExecutorService executor = Executors.newFixedThreadPool(THREAD_COUNT);
            CountDownLatch latch = new CountDownLatch(THREAD_COUNT);
            long startTime = System.currentTimeMillis();

            for (int i = 0; i < THREAD_COUNT; i++) {
                final int threadId = i;
                executor.submit(() -> {
                    try {
                        performMixedOperations(RemoteDatabaseConfig::getMasterConnection, threadId,
                                totalQueries, totalWrites, totalReads);
                    } catch (Exception e) {
                        logger.error("Thread {} failed", threadId, e);
                    } finally {
                        latch.countDown();
                    }
                });
            }

            try {
                // Workers run for TEST_DURATION_SECONDS, so wait slightly longer, as test 2 does
                boolean completed = latch.await(TEST_DURATION_SECONDS + 5, TimeUnit.SECONDS);
                long endTime = System.currentTimeMillis();

                if (!completed) {
                    logger.warn("Test timed out; forcing shutdown");
                    executor.shutdownNow();
                }

                metrics.totalTimeMs = endTime - startTime;
                metrics.totalQueries = totalQueries.get();
                metrics.totalWrites = totalWrites.get();
                metrics.totalReads = totalReads.get();
                if (metrics.totalTimeMs > 0) {
                    metrics.queriesPerSecond = metrics.totalQueries * 1000.0 / metrics.totalTimeMs;
                    metrics.writesPerSecond = metrics.totalWrites * 1000.0 / metrics.totalTimeMs;
                    metrics.readsPerSecond = metrics.totalReads * 1000.0 / metrics.totalTimeMs;
                }

                logger.info("Single-node primary results:");
                logger.info("  {}", metrics);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                logger.error("Test interrupted", e);
            } finally {
                executor.shutdown();
            }

            return metrics;
        }

        /** Test 2: read/write splitting cluster performance. */
        public PerformanceMetrics testReadWriteSeparationPerformance() {
            logger.info("\n=== Test 2: read/write splitting cluster performance ===");

            PerformanceMetrics metrics = new PerformanceMetrics();
            AtomicLong totalQueries = new AtomicLong(0);
            AtomicLong totalWrites = new AtomicLong(0);
            AtomicLong totalReads = new AtomicLong(0);

            ExecutorService executor = Executors.newFixedThreadPool(THREAD_COUNT);
            CountDownLatch latch = new CountDownLatch(THREAD_COUNT);
            long startTime = System.currentTimeMillis();

            // Keep the futures so that unfinished tasks can be cancelled on timeout
            List<Future<?>> futures = new ArrayList<>();
            for (int i = 0; i < THREAD_COUNT; i++) {
                final int threadId = i;
                Future<?> future = executor.submit(() -> {
                    try {
                        performMixedOperations(RemoteDatabaseConfig::getReadWriteConnection, threadId,
                                totalQueries, totalWrites, totalReads);
                    } catch (Exception e) {
                        logger.error("Thread {} failed: {}", threadId, e.getMessage());
                    } finally {
                        latch.countDown();
                    }
                });
                futures.add(future);
            }

            try {
                boolean completed = latch.await(TEST_DURATION_SECONDS + 5, TimeUnit.SECONDS);
                long endTime = System.currentTimeMillis();

                if (!completed) {
                    logger.warn("Test timed out; forcing shutdown");
                    for (Future<?> future : futures) {
                        future.cancel(true);
                    }
                }

                metrics.totalTimeMs = endTime - startTime;
                metrics.totalQueries = totalQueries.get();
                metrics.totalWrites = totalWrites.get();
                metrics.totalReads = totalReads.get();
                if (metrics.totalTimeMs > 0) {
                    metrics.queriesPerSecond = metrics.totalQueries * 1000.0 / metrics.totalTimeMs;
                    metrics.writesPerSecond = metrics.totalWrites * 1000.0 / metrics.totalTimeMs;
                    metrics.readsPerSecond = metrics.totalReads * 1000.0 / metrics.totalTimeMs;
                }

                logger.info("Read/write splitting cluster results:");
                logger.info("  {}", metrics);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                logger.error("Test interrupted", e);
            } finally {
                executor.shutdown();
                try {
                    if (!executor.awaitTermination(5, TimeUnit.SECONDS)) {
                        executor.shutdownNow();
                    }
                } catch (InterruptedException e) {
                    executor.shutdownNow();
                    Thread.currentThread().interrupt();
                }
            }

            return metrics;
        }

        /** Runs a mixed read/write workload until the test duration elapses. */
        private void performMixedOperations(ConnectionSupplier connectionSupplier, int threadId,
                                            AtomicLong totalQueries, AtomicLong totalWrites,
                                            AtomicLong totalReads) {
            long startTime = System.currentTimeMillis();
            int operationCount = 0;

            while (System.currentTimeMillis() - startTime < TEST_DURATION_SECONDS * 1000) {
                if (Thread.currentThread().isInterrupted()) {
                    logger.debug("Thread {} interrupted", threadId);
                    break;
                }

                // A new connection is opened for every operation, so connection setup
                // time is part of the measured throughput.
                Connection conn = null;
                try {
                    conn = connectionSupplier.get();

                    // 70% reads, 30% writes
                    if (random.nextInt(100) < 70) {
                        performReadOperation(conn, threadId);
                        totalReads.incrementAndGet();
                    } else {
                        performWriteOperation(conn, threadId);
                        totalWrites.incrementAndGet();
                    }
                    totalQueries.incrementAndGet();
                    operationCount++;
                } catch (Exception e) {
                    // In a performance test, record the error and keep going
                    logger.debug("Thread {} operation failed: {}", threadId, e.getMessage());
                } finally {
                    if (conn != null) {
                        try {
                            conn.close();
                        } catch (SQLException e) {
                            logger.debug("Failed to close connection: {}", e.getMessage());
                        }
                    }
                }
            }

            logger.debug("Thread {} finished after {} operations", threadId, operationCount);
        }

        private void performReadOperation(Connection conn, int threadId) throws SQLException {
            int queryType = random.nextInt(4);

            switch (queryType) {
                case 0:
                    try (Statement stmt = conn.createStatement();
                         ResultSet rs = stmt.executeQuery("SELECT COUNT(*) FROM performance_test")) {
                        rs.next();
                    }
                    break;
                case 1:
                    try (PreparedStatement pstmt = conn.prepareStatement(
                            "SELECT * FROM performance_test WHERE user_id = ? LIMIT 10")) {
                        pstmt.setInt(1, random.nextInt(1000));
                        try (ResultSet rs = pstmt.executeQuery()) {
                            while (rs.next()) {
                                // Consume the result set
                            }
                        }
                    }
                    break;
                case 2:
                    try (Statement stmt = conn.createStatement();
                         ResultSet rs = stmt.executeQuery(
                                 "SELECT user_id, COUNT(*), SUM(amount) FROM performance_test GROUP BY user_id LIMIT 20")) {
                        while (rs.next()) {
                            // Consume the result set
                        }
                    }
                    break;
                case 3:
                    try (Statement stmt = conn.createStatement();
                         ResultSet rs = stmt.executeQuery(
                                 "SELECT * FROM performance_test ORDER BY create_time DESC LIMIT 50")) {
                        while (rs.next()) {
                            // Consume the result set
                        }
                    }
                    break;
            }
        }

        /** Runs one randomly chosen write: insert, update, or delete. */
        private void performWriteOperation(Connection conn, int threadId) throws SQLException {
            int writeType = random.nextInt(3);

            switch (writeType) {
                case 0: // Insert
                    try (PreparedStatement pstmt = conn.prepareStatement(
                            "INSERT INTO performance_test (user_id, order_no, product_name, amount) VALUES (?, ?, ?, ?)")) {
                        pstmt.setInt(1, random.nextInt(1000));
                        pstmt.setString(2, "NEW_ORDER_" + System.currentTimeMillis() + "_" + threadId);
                        pstmt.setString(3, "NewProduct_" + random.nextInt(100));
                        pstmt.setBigDecimal(4, BigDecimal.valueOf(random.nextInt(1000) + 50.0));
                        pstmt.executeUpdate();
                    }
                    break;
                case 1: // Update
                    try (PreparedStatement pstmt = conn.prepareStatement(
                            "UPDATE performance_test SET amount = amount * 1.1, update_time = CURRENT_TIMESTAMP WHERE user_id = ?")) {
                        pstmt.setInt(1, random.nextInt(1000));
                        pstmt.executeUpdate();
                    }
                    break;
                case 2: // Delete
                    try (PreparedStatement pstmt = conn.prepareStatement(
                            "DELETE FROM performance_test WHERE id IN (SELECT id FROM performance_test ORDER BY id ASC LIMIT 1)")) {
                        pstmt.executeUpdate();
                    }
                    break;
            }
        }

        /** Logs the performance comparison report. */
        public void generatePerformanceReport(PerformanceMetrics singleNode,
                                              PerformanceMetrics readWriteSeparation) {
            String separator = createSeparator("=", 80);
            logger.info("\n" + separator);
            logger.info("Kingbase transparent read/write splitting performance comparison");
            logger.info(separator);

            double readImprovement = calculateImprovement(singleNode.readsPerSecond, readWriteSeparation.readsPerSecond);
            double queryImprovement = calculateImprovement(singleNode.queriesPerSecond, readWriteSeparation.queriesPerSecond);
            double writeImprovement = calculateImprovement(singleNode.writesPerSecond, readWriteSeparation.writesPerSecond);

            logger.info("\n📈 Performance comparison:");
            logger.info("┌──────────────────────┬──────────────┬──────────────┬──────────────┐");
            logger.info("│ Metric               │ Single node  │ R/W split    │ Improvement  │");
            logger.info("├──────────────────────┼──────────────┼──────────────┼──────────────┤");
            logger.info(String.format("│ Query throughput     │ %12.2f │ %12.2f │ %11.2f%% │",
                    singleNode.queriesPerSecond, readWriteSeparation.queriesPerSecond, queryImprovement));
            logger.info(String.format("│ Read throughput      │ %12.2f │ %12.2f │ %11.2f%% │",
                    singleNode.readsPerSecond, readWriteSeparation.readsPerSecond, readImprovement));
            logger.info(String.format("│ Write throughput     │ %12.2f │ %12.2f │ %11.2f%% │",
                    singleNode.writesPerSecond, readWriteSeparation.writesPerSecond, writeImprovement));
            logger.info(String.format("│ Total operations     │ %12d │ %12d │              │",
                    singleNode.totalQueries, readWriteSeparation.totalQueries));
            logger.info("└──────────────────────┴──────────────┴──────────────┴──────────────┘");

            // SLF4J only supports plain {} placeholders, so format the numbers first
            if (readImprovement > 0) {
                logger.info(String.format(
                        "Read throughput improved by %.2f%%: reads were routed to the standby, offloading the primary",
                        readImprovement));
            } else {
                logger.info(String.format(
                        "Read throughput changed by %.2f%%: possibly due to network overhead or test environment limits",
                        readImprovement));
            }

            if (queryImprovement > 0) {
                logger.info(String.format(
                        "Overall query throughput improved by %.2f%%: read/write splitting raised total throughput",
                        queryImprovement));
            }
        }

        private String createSeparator(String character, int length) {
            StringBuilder sb = new StringBuilder();
            for (int i = 0; i < length; i++) {
                sb.append(character);
            }
            return sb.toString();
        }

        private double calculateImprovement(double base, double current) {
            if (base == 0) return 0;
            return ((current - base) / base) * 100;
        }

        @FunctionalInterface
        private interface ConnectionSupplier {
            Connection get() throws SQLException;
        }
    }

#Main program

    package com.kingbase.demo;

    import com.kingbase.demo.config.RemoteDatabaseConfig;
    import com.kingbase.demo.performance.PerformanceComparisonTest;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    public class ReadWriteSeparationPerformanceTest {

        private static final Logger logger = LoggerFactory.getLogger(ReadWriteSeparationPerformanceTest.class);

        public static void main(String[] args) {
            logger.info("Starting the Kingbase transparent read/write splitting performance comparison...");

            try {
                logger.info("=== Preliminary connection test ===");
                RemoteDatabaseConfig.testAllConnections();

                PerformanceComparisonTest test = new PerformanceComparisonTest();

                // 1. Prepare test data
                test.prepareTestData();

                // 2. Run the single-node primary test (baseline)
                PerformanceComparisonTest.PerformanceMetrics singleNodeMetrics =
                        test.testSingleNodePerformance();

                // 3. Run the read/write splitting cluster test
                PerformanceComparisonTest.PerformanceMetrics readWriteMetrics =
                        test.testReadWriteSeparationPerformance();

                // 4. Generate the comparison report
                test.generatePerformanceReport(singleNodeMetrics, readWriteMetrics);

                logger.info("\nTransparent read/write splitting performance comparison finished.");
            } catch (Exception e) {
                logger.error("Performance test failed", e);
            }
        }
    }

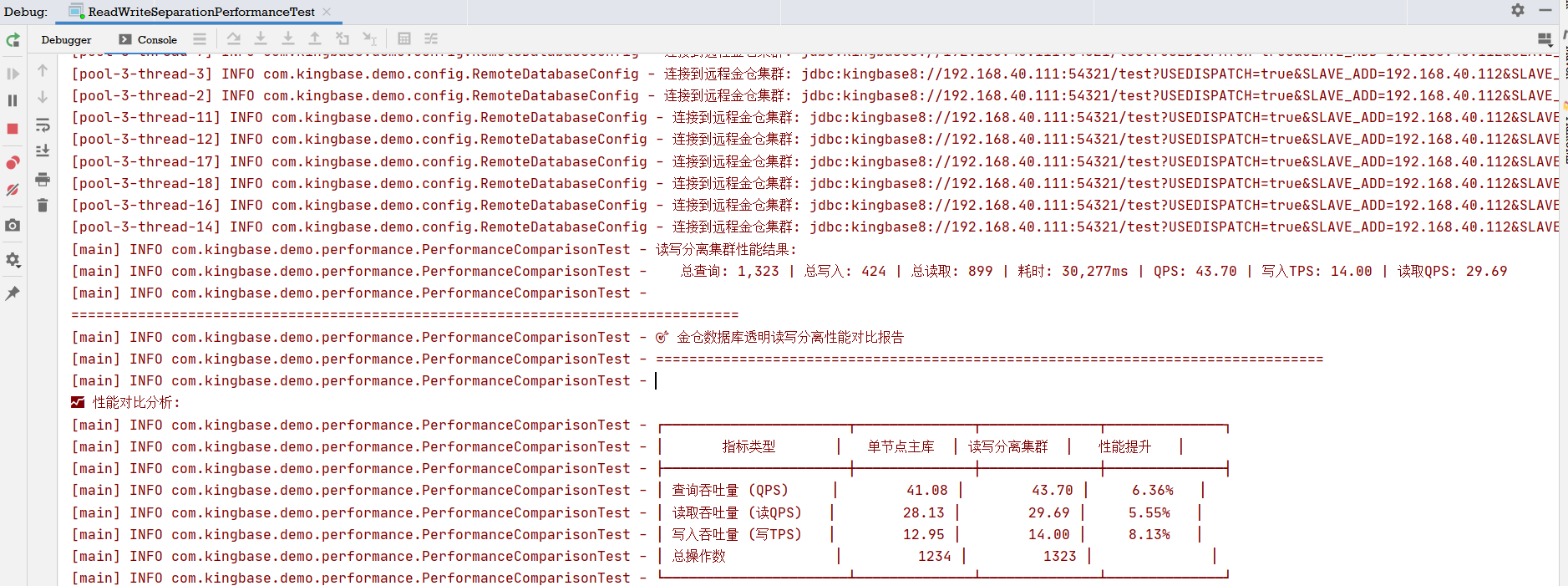

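Test conclusion:

With JDBC transparent read/write splitting, the business code needs no changes:

- Read operations are routed automatically to the standby, which improves read performance.
- Write operations are routed automatically to the primary, which preserves data consistency.
- Overall system throughput improves, by about 6% in this test.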

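| Metric | Single-node primary | Read/write splitting cluster | Improvement |
|---|---|---|---|
| Query throughput (QPS) | 41.08 | 43.70 | 6.36% |
| Read throughput (read QPS) | 28.13 | 29.69 | 5.55% |
| Write throughput (write TPS) | 12.95 | 14.00 | 8.13% |
| Total operations | 1234 | 1323 | |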
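As a sanity check on these figures, the improvement column can be recomputed from the throughput columns with the same formula as `calculateImprovement` in the test code. The sketch below does that for the read row and also derives the share of reads in the single-node run, which should sit near the 70% read ratio configured in the workload. The class name `SplitResultCheck` is hypothetical, and because the inputs are the rounded table values, recomputed percentages can differ from the reported ones in the second decimal place.

```java
// Recomputes derived figures from the rounded throughput values in the results table.
final class SplitResultCheck {

    // Relative change of `current` over `base`, in percent (same formula as calculateImprovement).
    static double improvementPercent(double base, double current) {
        if (base == 0) return 0;
        return (current - base) / base * 100;
    }

    // Share of reads among all operations, in percent.
    static double readSharePercent(double readQps, double totalQps) {
        return readQps / totalQps * 100;
    }

    public static void main(String[] args) {
        System.out.printf("read improvement: %.2f%%%n", improvementPercent(28.13, 29.69));
        System.out.printf("read share (single node): %.1f%%%n", readSharePercent(28.13, 41.08));
    }
}
```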

2. Using batch protocol optimization to speed up batch INSERT, UPDATE, and DELETE

    package com.kingbase.demo.performance;

    import com.kingbase.demo.config.RemoteDatabaseConfig;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.sql.*;
    import java.util.ArrayList;
    import java.util.List;
    import java.util.Random;
    import java.util.concurrent.atomic.AtomicInteger;

    /**
     * Batch operation performance test.
     * Exercises the batch protocol optimization of the Kingbase database.
     */
    public class BatchPerformanceTest {

        private static final Logger logger = LoggerFactory.getLogger(BatchPerformanceTest.class);

        // Test configuration
        private static final int TOTAL_RECORDS = 100000;
        private static final int[] BATCH_SIZES = {1, 10, 100, 500, 1000};
        private static final int WARMUP_ROUNDS = 2;
        private static final int TEST_ROUNDS = 3;

        private final Random random = new Random();

        /** Result of one batch-size run. */
        public static class BatchPerformanceResult {
            public String operationType;
            public int batchSize;
            public long totalRecords;
            public long totalTimeMs;
            public double recordsPerSecond;
            public double improvement;

            @Override
            public String toString() {
                return String.format("%s | batch size: %d | records: %,d | elapsed: %,dms | rate: %.2f rec/s | improvement: %.2f%%",
                        operationType, batchSize, totalRecords, totalTimeMs, recordsPerSecond, improvement);
            }
        }

        /** Drops and recreates the test table and its index. */
        public void prepareTestTable() {
            logger.info("=== Preparing the batch performance test table ===");

            String dropTableSQL = "DROP TABLE IF EXISTS batch_performance_test";
            String createTableSQL =
                    "CREATE TABLE batch_performance_test (" +
                    " id BIGSERIAL PRIMARY KEY, " +
                    " batch_id INTEGER NOT NULL, " +
                    " data_value VARCHAR(200) NOT NULL, " +
                    " numeric_data DECIMAL(15,4) NOT NULL, " +
                    " status VARCHAR(20) DEFAULT 'ACTIVE', " +
                    " create_time TIMESTAMP DEFAULT CURRENT_TIMESTAMP, " +
                    " update_time TIMESTAMP DEFAULT CURRENT_TIMESTAMP" +
                    ")";
            String createIndexSQL =
                    "CREATE INDEX idx_batch_performance_batch_id ON batch_performance_test(batch_id)";

            try (Connection conn = RemoteDatabaseConfig.getMasterConnection();
                 Statement stmt = conn.createStatement()) {
                // Drop the old table
                stmt.execute(dropTableSQL);
                logger.info("Old table dropped");

                // Create the new table
                stmt.execute(createTableSQL);
                logger.info("Test table created");

                // Create the index
                stmt.execute(createIndexSQL);
                logger.info("Index created");
            } catch (SQLException e) {
                logger.error("Failed to prepare the test table", e);
                throw new RuntimeException("Failed to prepare the test table", e);
            }
        }

        /** Batch insert performance test. */
        public List<BatchPerformanceResult> testBatchInsertPerformance() {
            logger.info("\n=== Batch insert performance test ===");

            List<BatchPerformanceResult> results = new ArrayList<>();
            BatchPerformanceResult singleResult = null;

            for (int batchSize : BATCH_SIZES) {
                BatchPerformanceResult result = testInsertWithBatchSize(batchSize);
                results.add(result);

                // Batch size 1 is the baseline for the improvement column
                if (batchSize == 1) {
                    singleResult = result;
                }
                if (singleResult != null && batchSize > 1) {
                    result.improvement = calculateImprovement(
                            singleResult.recordsPerSecond, result.recordsPerSecond);
                }
            }

            return results;
        }

        /** Measures insert performance for one batch size. */
        private BatchPerformanceResult testInsertWithBatchSize(int batchSize) {
            logger.info("--- Batch insert, batch size: {} ---", batchSize);

            BatchPerformanceResult result = new BatchPerformanceResult();
            result.operationType = "batch insert";
            result.batchSize = batchSize;
            result.totalRecords = TOTAL_RECORDS;

            // Warm-up rounds are not timed
            for (int i = 0; i < WARMUP_ROUNDS; i++) {
                performBatchInsert(batchSize, true);
                clearTestData();
            }

            // Timed rounds; the reported time is the average
            long totalTime = 0;
            for (int i = 0; i < TEST_ROUNDS; i++) {
                long startTime = System.currentTimeMillis();
                performBatchInsert(batchSize, false);
                long endTime = System.currentTimeMillis();
                totalTime += (endTime - startTime);

                if (i < TEST_ROUNDS - 1) {
                    clearTestData();
                }
            }

            result.totalTimeMs = totalTime / TEST_ROUNDS;
            result.recordsPerSecond = (TOTAL_RECORDS * 1000.0) / result.totalTimeMs;

            logger.info("Batch size {}: {} records/s", batchSize, String.format("%,.2f", result.recordsPerSecond));
            return result;
        }

        /** Inserts TOTAL_RECORDS rows, flushing and committing every batchSize rows. */
        private void performBatchInsert(int batchSize, boolean isWarmup) {
            String sql = "INSERT INTO batch_performance_test (batch_id, data_value, numeric_data) VALUES (?, ?, ?)";

            try (Connection conn = RemoteDatabaseConfig.getMasterConnection();
                 PreparedStatement pstmt = conn.prepareStatement(sql)) {
                conn.setAutoCommit(false);
                int recordCount = 0;

                for (int i = 1; i <= TOTAL_RECORDS; i++) {
                    pstmt.setInt(1, i / batchSize);
                    pstmt.setString(2, generateRandomString(50));
                    pstmt.setBigDecimal(3, new java.math.BigDecimal(random.nextDouble() * 1000));
                    pstmt.addBatch();

                    if (i % batchSize == 0) {
                        pstmt.executeBatch();
                        conn.commit();
                        recordCount += batchSize;

                        if (!isWarmup && recordCount % 10000 == 0) {
                            logger.debug("Inserted {} records", recordCount);
                        }
                    }
                }

                // Flush the final partial batch, if any
                if (TOTAL_RECORDS % batchSize != 0) {
                    pstmt.executeBatch();
                    conn.commit();
                }

                if (!isWarmup) {
                    logger.debug("Batch insert finished, batch size: {}, records: {}", batchSize, TOTAL_RECORDS);
                }
            } catch (SQLException e) {
                logger.error("Batch insert failed", e);
                throw new RuntimeException("Batch insert failed", e);
            }
        }

        /** Batch update performance test. */
        public List<BatchPerformanceResult> testBatchUpdatePerformance() {
            logger.info("\n=== Batch update performance test ===");

            // Prepare the rows to update first
            prepareTestDataForUpdate();

            List<BatchPerformanceResult> results = new ArrayList<>();
            BatchPerformanceResult singleResult = null;

            for (int batchSize : BATCH_SIZES) {
                BatchPerformanceResult result = testUpdateWithBatchSize(batchSize);
                results.add(result);

                if (batchSize == 1) {
                    singleResult = result;
                }
                if (singleResult != null && batchSize > 1) {
                    result.improvement = calculateImprovement(
                            singleResult.recordsPerSecond, result.recordsPerSecond);
                }

                resetDataForUpdate();
            }

            return results;
        }

        /** Loads data for the update test. */
        private void prepareTestDataForUpdate() {
            logger.info("Preparing update test data...");
            performBatchInsert(1000, true);
            logger.info("Update test data ready");
        }

        /** Measures update performance for one batch size. */
        private BatchPerformanceResult testUpdateWithBatchSize(int batchSize) {
            logger.info("--- Batch update, batch size: {} ---", batchSize);

            BatchPerformanceResult result = new BatchPerformanceResult();
            result.operationType = "batch update";
            result.batchSize = batchSize;
            result.totalRecords = TOTAL_RECORDS;

            for (int i = 0; i < WARMUP_ROUNDS; i++) {
                performBatchUpdate(batchSize, true);
                resetDataForUpdate();
            }

            long totalTime = 0;
            for (int i = 0; i < TEST_ROUNDS; i++) {
                long startTime = System.currentTimeMillis();
                performBatchUpdate(batchSize, false);
                long endTime = System.currentTimeMillis();
                totalTime += (endTime - startTime);

                if (i < TEST_ROUNDS - 1) {
                    resetDataForUpdate();
                }
            }

            result.totalTimeMs = totalTime / TEST_ROUNDS;
            result.recordsPerSecond = (TOTAL_RECORDS * 1000.0) / result.totalTimeMs;

            logger.info("Batch size {}: {} records/s", batchSize, String.format("%,.2f", result.recordsPerSecond));
            return result;
        }

        /** Updates every row by id, flushing and committing every batchSize rows. */
        private void performBatchUpdate(int batchSize, boolean isWarmup) {
            String sql = "UPDATE batch_performance_test SET numeric_data = numeric_data * 1.1, update_time = CURRENT_TIMESTAMP WHERE id = ?";

            try (Connection conn = RemoteDatabaseConfig.getMasterConnection();
                 PreparedStatement pstmt = conn.prepareStatement(sql)) {
                conn.setAutoCommit(false);
                AtomicInteger recordCount = new AtomicInteger(0);

                // Note: fetching the ids happens inside the timed section
                List<Long> recordIds = getAllRecordIds(conn);

                for (int i = 0; i < recordIds.size(); i++) {
                    pstmt.setLong(1, recordIds.get(i));
                    pstmt.addBatch();

                    if ((i + 1) % batchSize == 0) {
                        pstmt.executeBatch();
                        conn.commit();
                        recordCount.addAndGet(batchSize);

                        if (!isWarmup && recordCount.get() % 10000 == 0) {
                            logger.debug("Updated {} records", recordCount.get());
                        }
                    }
                }

                // Flush the final partial batch, if any
                if (recordIds.size() % batchSize != 0) {
                    pstmt.executeBatch();
                    conn.commit();
                }

                if (!isWarmup) {
                    logger.debug("Batch update finished, batch size: {}, records: {}", batchSize, recordIds.size());
                }
            } catch (SQLException e) {
                logger.error("Batch update failed", e);
                throw new RuntimeException("Batch update failed", e);
            }
        }

        /** Batch delete performance test. */
        public List<BatchPerformanceResult> testBatchDeletePerformance() {
            logger.info("\n=== Batch delete performance test ===");

            List<BatchPerformanceResult> results = new ArrayList<>();
            BatchPerformanceResult singleResult = null;

            for (int batchSize : BATCH_SIZES) {
                prepareTestDataForDelete();

                BatchPerformanceResult result = testDeleteWithBatchSize(batchSize);
                results.add(result);

                if (batchSize == 1) {
                    singleResult = result;
                }
                if (singleResult != null && batchSize > 1) {
                    result.improvement = calculateImprovement(
                            singleResult.recordsPerSecond, result.recordsPerSecond);
                }
            }

            return results;
        }

        private void prepareTestDataForDelete() {
            performBatchInsert(1000, true);
        }

        /** Measures delete performance for one batch size. */
        private BatchPerformanceResult testDeleteWithBatchSize(int batchSize) {
            logger.info("--- Batch delete, batch size: {} ---", batchSize);

            BatchPerformanceResult result = new BatchPerformanceResult();
            result.operationType = "batch delete";
            result.batchSize = batchSize;

            long totalRecords = getTotalRecordCount();
            result.totalRecords = totalRecords;

            for (int i = 0; i < WARMUP_ROUNDS; i++) {
                if (i > 0) {
                    prepareTestDataForDelete();
                }
                performBatchDelete(batchSize, true);
            }

            prepareTestDataForDelete();

            long startTime = System.currentTimeMillis();
            performBatchDelete(batchSize, false);
            long endTime = System.currentTimeMillis();

            result.totalTimeMs = endTime - startTime;
            result.recordsPerSecond = (totalRecords * 1000.0) / result.totalTimeMs;

            logger.info("Batch size {}: {} records/s", batchSize, String.format("%,.2f", result.recordsPerSecond));
            return result;
        }

        /** Deletes rows by batch_id, flushing and committing every batchSize statements. */
        private void performBatchDelete(int batchSize, boolean isWarmup) {
            String sql = "DELETE FROM batch_performance_test WHERE batch_id = ?";

            try (Connection conn = RemoteDatabaseConfig.getMasterConnection();
                 PreparedStatement pstmt = conn.prepareStatement(sql)) {
                conn.setAutoCommit(false);

                // Assumes 1000 records per batch_id, as loaded by prepareTestDataForDelete
                int totalBatches = (int) Math.ceil((double) TOTAL_RECORDS / 1000);

                for (int batchId = 0; batchId < totalBatches; batchId++) {
                    pstmt.setInt(1, batchId);
                    pstmt.addBatch();

                    if ((batchId + 1) % batchSize == 0) {
                        pstmt.executeBatch();
                        conn.commit();

                        if (!isWarmup && batchId % 10 == 0) {
                            logger.debug("Deleted batch: {}/{}", batchId, totalBatches);
                        }
                    }
                }

                // Flush the remaining statements, if any
                if (totalBatches % batchSize != 0) {
                    pstmt.executeBatch();
                    conn.commit();
                }

                if (!isWarmup) {
                    logger.debug("Batch delete finished, batch size: {}", batchSize);
                }
            } catch (SQLException e) {
                logger.error("Batch delete failed", e);
                throw new RuntimeException("Batch delete failed", e);
            }
        }

        /** Returns the ids of all rows in the test table. */
        private List<Long> getAllRecordIds(Connection conn) throws SQLException {
            List<Long> ids = new ArrayList<>();
            String sql = "SELECT id FROM batch_performance_test ORDER BY id";

            try (Statement stmt = conn.createStatement();
                 ResultSet rs = stmt.executeQuery(sql)) {
                while (rs.next()) {
                    ids.add(rs.getLong("id"));
                }
            }
            return ids;
        }

        /** Returns the number of rows in the test table. */
        private long getTotalRecordCount() {
            try (Connection conn = RemoteDatabaseConfig.getMasterConnection();
                 Statement stmt = conn.createStatement();
                 ResultSet rs = stmt.executeQuery("SELECT COUNT(*) FROM batch_performance_test")) {
                return rs.next() ? rs.getLong(1) : 0;
            } catch (SQLException e) {
                logger.error("Failed to count records", e);
                return 0;
            }
        }

        /** Empties the test table. */
        private void clearTestData() {
            try (Connection conn = RemoteDatabaseConfig.getMasterConnection();
                 Statement stmt = conn.createStatement()) {
                stmt.execute("TRUNCATE TABLE batch_performance_test RESTART IDENTITY");
            } catch (SQLException e) {
                logger.error("Failed to clear test data", e);
            }
        }

        /** Resets the updated column so each update round starts from the same values. */
        private void resetDataForUpdate() {
            try (Connection conn = RemoteDatabaseConfig.getMasterConnection();
                 Statement stmt = conn.createStatement()) {
                stmt.execute("UPDATE batch_performance_test SET numeric_data = 100.0, update_time = CURRENT_TIMESTAMP");
            } catch (SQLException e) {
                logger.error("Failed to reset data", e);
            }
        }

        /** Logs the batch performance report. */
        public void generateBatchPerformanceReport(List<BatchPerformanceResult> insertResults,
                                                   List<BatchPerformanceResult> updateResults,
                                                   List<BatchPerformanceResult> deleteResults) {
            logger.info("\n" + createSeparator("=", 100));
            logger.info("Kingbase batch protocol performance report");
            logger.info(createSeparator("=", 100));

            logOperationResults("Batch insert performance", insertResults);
            logOperationResults("Batch update performance", updateResults);
            logOperationResults("Batch delete performance", deleteResults);
        }

        private void logOperationResults(String title, List<BatchPerformanceResult> results) {
            logger.info("\n{}:", title);
            logger.info("┌──────────┬────────────┬────────────┬────────────┬────────────┐");
            logger.info("│ Batch    │ Records    │ Time (ms)  │ Rate (r/s) │ Gain       │");
            logger.info("├──────────┼────────────┼────────────┼────────────┼────────────┤");

            for (BatchPerformanceResult result : results) {
                logger.info(String.format("│ %8d │ %,10d │ %,10d │ %,10.2f │ %9.2f%% │",
                        result.batchSize, result.totalRecords, result.totalTimeMs,
                        result.recordsPerSecond, result.improvement));
            }

            logger.info("└──────────┴────────────┴────────────┴────────────┴────────────┘");
        }

        private BatchPerformanceResult findBestPerformance(List<BatchPerformanceResult> results) {
            return results.stream()
                    .filter(r -> r.batchSize > 1)
                    .max((r1, r2) -> Double.compare(r1.recordsPerSecond, r2.recordsPerSecond))
                    .orElse(null);
        }

        private double calculateAverageImprovement(List<BatchPerformanceResult>... resultsLists) {
            double totalImprovement = 0;
            int count = 0;

            for (List<BatchPerformanceResult> results : resultsLists) {
                for (BatchPerformanceResult result : results) {
                    if (result.batchSize > 1) {
                        totalImprovement += result.improvement;
                        count++;
                    }
                }
            }

            return count > 0 ? totalImprovement / count : 0;
        }

        /** Utility methods. */
        private String generateRandomString(int length) {
            String chars = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789";
            StringBuilder sb = new StringBuilder();
            for (int i = 0; i < length; i++) {
                sb.append(chars.charAt(random.nextInt(chars.length())));
            }
            return sb.toString();
        }

        private double calculateImprovement(double base, double current) {
            if (base == 0) return 0;
            return ((current - base) / base) * 100;
        }

        private String createSeparator(String character, int length) {
            StringBuilder sb = new StringBuilder();
            for (int i = 0; i < length; i++) {
                sb.append(character);
            }
            return sb.toString();
        }
    }

Insert:

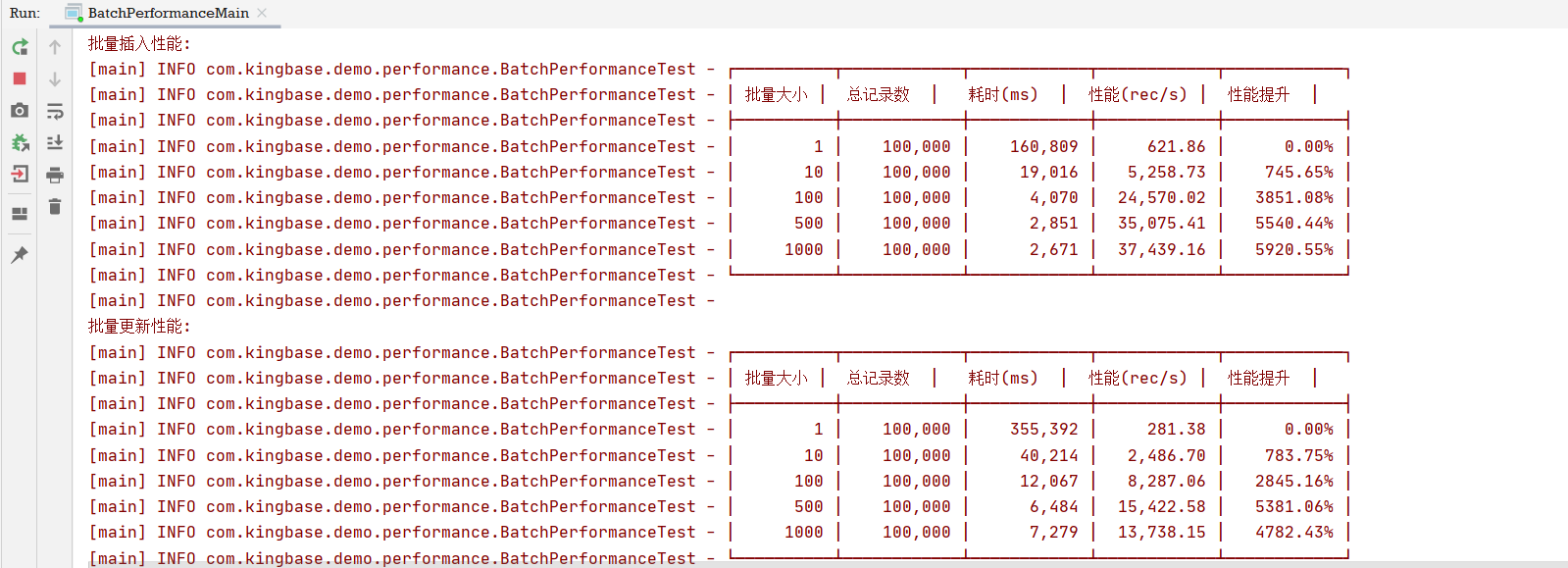

| Batch size | Total records | Elapsed (ms) | Throughput (rec/s) | Improvement |
|---|---|---|---|---|
| 1 | 100,000 | 160,809 | 621.86 | 0.00% |
| 10 | 100,000 | 19,016 | 5,258.73 | 745.65% |
| 100 | 100,000 | 4,070 | 24,570.02 | 3851.08% |
| 500 | 100,000 | 2,851 | 35,075.41 | 5540.44% |
| 1000 | 100,000 | 2,671 | 37,439.16 | 5920.55% |
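The throughput and improvement columns follow directly from the elapsed times, using the same arithmetic as the test code (`TOTAL_RECORDS * 1000.0 / totalTimeMs` and `calculateImprovement`). The sketch below recomputes the batch-size-1000 insert row from the table; the class name `BatchSpeedupCheck` is hypothetical and exists only for this illustration.

```java
// Recomputes throughput and relative improvement from the elapsed times in the insert table.
final class BatchSpeedupCheck {

    // Records per second for `records` rows processed in `elapsedMs` milliseconds.
    static double recordsPerSecond(long records, long elapsedMs) {
        return records * 1000.0 / elapsedMs;
    }

    // Improvement of a batched run over the batch-size-1 baseline, in percent.
    static double improvementPercent(double baseRate, double batchedRate) {
        return (batchedRate - baseRate) / baseRate * 100;
    }

    public static void main(String[] args) {
        double base = recordsPerSecond(100_000, 160_809);   // batch size 1
        double batched = recordsPerSecond(100_000, 2_671);  // batch size 1000
        System.out.printf("%.2f rec/s -> %.2f rec/s, +%.2f%%%n",
                base, batched, improvementPercent(base, batched));
    }
}
```

Put differently, an improvement of roughly 5920% means the batch-size-1000 run finished about 60 times faster than the row-by-row run.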

Update:

| Batch size | Total records | Elapsed (ms) | Throughput (rec/s) | Improvement |
|---|---|---|---|---|
| 1 | 100,000 | 355,392 | 281.38 | 0.00% |
| 10 | 100,000 | 40,214 | 2,486.70 | 783.75% |
| 100 | 100,000 | 12,067 | 8,287.06 | 2845.16% |
| 500 | 100,000 | 6,484 | 15,422.58 | 5381.06% |
| 1000 | 100,000 | 7,279 | 13,738.15 | 4782.43% |

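Test conclusion:

I ran this on a portable hard drive, so absolute performance is poor. Even so, batched DML is clearly faster than row-by-row execution, and the gain is largest at batch sizes of 500 to 1000.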
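One way to see why larger batches help is to count how often the loop flushes to the server. The sketch below mirrors the `addBatch` / `executeBatch` / `commit` pattern from the test code with an in-memory counter instead of a database; the class `BatchFlushSketch` and its method are hypothetical, and the count only models the number of flushes, not real network or disk cost.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory stand-in for the addBatch/executeBatch loop; no database involved.
final class BatchFlushSketch {

    // Groups `totalRecords` items into flushes of at most `batchSize`, returning each flush size.
    static List<Integer> flushSizes(int totalRecords, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<Integer> flushes = new ArrayList<>();
        int pending = 0;
        for (int i = 1; i <= totalRecords; i++) {
            pending++;                    // corresponds to pstmt.addBatch()
            if (i % batchSize == 0) {
                flushes.add(pending);     // corresponds to executeBatch() + commit()
                pending = 0;
            }
        }
        if (pending > 0) {
            flushes.add(pending);         // flush the final partial batch
        }
        return flushes;
    }

    public static void main(String[] args) {
        for (int size : new int[] {1, 10, 100, 500, 1000}) {
            System.out.println(size + " -> " + flushSizes(100_000, size).size() + " flushes");
        }
    }
}
```

With 100,000 records, batch size 1 needs 100,000 flushes and commits while batch size 1000 needs 100, which is consistent with the direction of the measured results even though the real speedup depends on the driver, network, and storage.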

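Summary

Interface compatibility

At the interface level, the Kingbase database stays compatible with mainstream databases, and its JDBC driver follows the standard JDBC specification. Transparent read/write splitting is enabled through connection parameters alone, so the business code does not change and the feature is transparent to the application. Batch protocol optimization is likewise driven through the standard JDBC batch interface.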

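Performance gains

Read/write splitting test: in the mixed read/write scenario, the read/write splitting cluster outperformed the single-node primary by 6.36% in overall query throughput, 5.55% in read throughput, and 8.13% in write throughput, taking some load off the primary.

Batch operation test: batch protocol optimization had a large effect. Going from a batch size of 1 to 1000 improved insert throughput by about 5920% and update throughput by about 4782%; delete throughput is reported to improve similarly, although the delete figures are not included above. Batch sizes of 500 to 1000 gave the best results in this test (inserts peaked at 1000, updates at 500).

Through transparent read/write splitting and batch protocol optimization, the Kingbase database increases processing capacity and resource utilization while keeping its interfaces compatible, which makes both features practical options for high-concurrency workloads.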