Install labelme2coco with the following command:

```bash
pip install labelme2coco
```

Convert the .json files generated by LabelMe into a COCO-format JSON file with the following commands:

```bash
labelme2coco melon_detect/train --category_id_start 1  # rename the generated dataset.json to train.json
labelme2coco melon_detect/val --category_id_start 1  # rename the generated dataset.json to val.json
```

The result looks like this:

```json
{
  "images": [
    {"height":340,"width":437,"id":1,"file_name":"melon_detect\\val\\0940ff7f2754e74b5ad6d13495cd13e1_t.jpeg"},
    {"height":534,"width":800,"id":2,"file_name":"melon_detect\\val\\14896085.webp"},
    {"height":693,"width":1024,"id":3,"file_name":"melon_detect\\val\\21319742_204241990080_2.jpg"},
    {"height":410,"width":600,"id":4,"file_name":"melon_detect\\val\\213511.jpg"},
    {"height":1157,"width":1600,"id":5,"file_name":"melon_detect\\val\\222859661.webp"}
  ],
  "annotations": [
    {"iscrowd":0,"image_id":1,"bbox":[35.65817694369973,171.87667560321717,234.85254691689005,133.51206434316356],"segmentation":[],"category_id":1,"id":1,"area":31355},
    {"iscrowd":0,"image_id":1,"bbox":[168.36595174262735,32.46648793565684,250.40214477211802,259.51742627345845],"segmentation":[],"category_id":1,"id":2,"area":64983},
    {"iscrowd":0,"image_id":2,"bbox":[105.90717299578058,250.12236286919833,421.5189873417721,232.48945147679322],"segmentation":[],"category_id":1,"id":3,"area":97998},
    {"iscrowd":0,"image_id":2,"bbox":[266.2447257383966,39.99578059071733,385.65400843881855,355.27426160337546],"segmentation":[],"category_id":1,"id":4,"area":137012},
    {"iscrowd":0,"image_id":3,"bbox":[205.44262295081967,17.53825136612023,618.5792349726776,598.9071038251367],"segmentation":[],"category_id":1,"id":5,"area":370471}
  ],
  "categories": [
    {"id":1,"name":"watermelon","supercategory":"watermelon"},
    {"id":2,"name":"wintermelon","supercategory":"wintermelon"}
  ]
}
```

Notes:

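1. By default the converted category IDs start at 0, whereas COCO category IDs are expected to start at 1, so `--category_id_start 1` must be passed.

2. LabelMe stores a bbox as `[x1, y1, x2, y2]`, i.e. the top-left and bottom-right corner points. labelme2coco converts it to the COCO layout `[x1, y1, w, h]`, but object detection in PyTorch expects `[x1, y1, x2, y2]`, so the dataset code has to convert back: `boxes.append([x, y, x + w, y + h])`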
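The two notes above can be sketched in plain Python. This is only a minimal illustration, not part of labelme2coco or torchvision: `check_category_ids` and `xywh_to_xyxy` are hypothetical helper names, and the small `coco` dict is made-up sample data laid out like the converted JSON.

```python
def check_category_ids(coco):
    """Return True if all category IDs are >= 1 and every annotation uses a known ID."""
    known_ids = {cat["id"] for cat in coco["categories"]}
    if not known_ids or min(known_ids) < 1:
        return False
    return all(ann["category_id"] in known_ids for ann in coco["annotations"])


def xywh_to_xyxy(bbox):
    """Convert a COCO [x, y, width, height] box to [x1, y1, x2, y2]."""
    x, y, w, h = bbox
    return [x, y, x + w, y + h]


# Made-up sample data in COCO layout (illustration only).
coco = {
    "categories": [{"id": 1, "name": "watermelon"}, {"id": 2, "name": "wintermelon"}],
    "annotations": [
        {"image_id": 1, "category_id": 1, "bbox": [10.0, 20.0, 30.0, 40.0]},
        {"image_id": 1, "category_id": 2, "bbox": [5.0, 5.0, 100.0, 50.0]},
    ],
}

ids_ok = check_category_ids(coco)
boxes = [xywh_to_xyxy(ann["bbox"]) for ann in coco["annotations"]]
print(ids_ok)  # True
print(boxes)   # [[10.0, 20.0, 40.0, 60.0], [5.0, 5.0, 105.0, 55.0]]
```

When the boxes are already tensors, `torchvision.ops.box_convert` with `in_fmt="xywh"` and `out_fmt="xyxy"` performs the same conversion.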

The `CocoDataset` class is implemented as follows:

python

import argparse

import colorama

import json

from torch.utils.data import Dataset, DataLoader

from torchvision import transforms

from PIL import Image

from pathlib import Path

def parse_args():

parser = argparse.ArgumentParser(description="labelme to coco")

parser.add_argument("--dataset_path", type=str, help="image dataset path")

parser.add_argument("--json_file", type=str, help="image dataset json file, coco format")

args = parser.parse_args()

return args

class CocoDataset(Dataset):

def __init__(self, json_file, image_dir, transforms=None):

self.image_dir = image_dir

self.transforms = transforms

with open(json_file, mode="r", encoding="utf-8") as f:

coco = json.load(f)

self.image_infos = {img["id"]: img for img in coco["images"]}

self.image_ids = list(self.image_infos.keys())

self.annotations = {}

for ann in coco["annotations"]:

img_id = ann["image_id"]

self.annotations.setdefault(img_id, []).append(ann)

def __len__(self):

return len(self.image_ids)

def __getitem__(self, idx):

img_id = self.image_ids[idx]

img_info = self.image_infos[img_id]

file_path = Path(self.image_dir) / Path(img_info["file_name"]).name

if not file_path.exists():

raise FileNotFoundError(colorama.Fore.RED + f"image not found: {file_path}")

image = Image.open(file_path).convert("RGB")

anns = self.annotations.get(img_id, [])

boxes, labels, areas, iscrowd = [], [], [], []

for ann in anns:

x, y, w, h = ann["bbox"]

if w <= 0 or h <= 0:

continue

# COCO bbox:[xmin, ymin, width, height]; PyTorch need:[xmin, ymin, xmax, ymax]

boxes.append([x, y, x + w, y + h])

labels.append(ann["category_id"])

areas.append(ann.get("area", w * h))

iscrowd.append(ann.get("iscrowd", 0))

target = {

"boxes": boxes,

"labels": labels,

"image_id": img_id,

"areas": areas,

"iscrowd": iscrowd,

}

if self.transforms is not None:

image = self.transforms(image)

return image, target

# custom batch processing function to process different numbers of targets

def collate_fn(batch):

return tuple(zip(*batch))

if __name__ == "__main__":

colorama.init(autoreset=True)

args = parse_args()

transform = transforms.Compose([

transforms.ToTensor()

])

dataset = CocoDataset(args.json_file, args.dataset_path, transforms=transform)

data_loader = DataLoader(dataset, batch_size=2, shuffle=True, num_workers=2, collate_fn=collate_fn)

for idx, (images, targets) in enumerate(data_loader):

print(f"idx: {idx}")

for i in range(len(images)):

print(f"image{i} shape: {images[i].shape}")

print(f"boxes{i}: {targets[i]['boxes']}")

print(f"labels{i}: {targets[i]['labels']}")

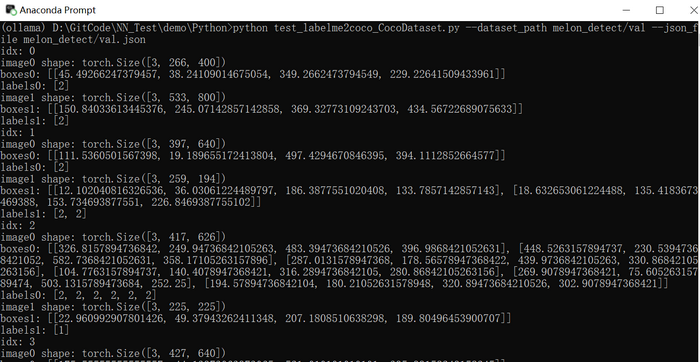

print(colorama.Fore.GREEN + "====== execution completed ======")执行结果如下图所示:

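Notes on the `DataLoader` arguments:

1. `collate_fn`: must be specified when samples cannot be stacked into a single batch tensor, that is, when the number of targets per image differs, the targets have different shapes, or the images themselves have different shapes.

2. `num_workers`: when data loading cannot keep up with training, set `num_workers > 0`. It controls how many subprocesses load data in parallel in the background.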
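To illustrate what `tuple(zip(*batch))` does with samples that carry different numbers of boxes, here is a small pure-Python sketch. The batch contents are made-up placeholders (strings stand in for image tensors); only `collate_fn` itself is taken from the code above.

```python
def collate_fn(batch):
    # Transpose a list of (image, target) pairs into (images, targets) tuples
    # without stacking, so samples may differ in shape and box count.
    return tuple(zip(*batch))


# Placeholder samples: the first image has one box, the second has two.
batch = [
    ("image_0", {"boxes": [[10, 20, 40, 60]], "labels": [1]}),
    ("image_1", {"boxes": [[5, 5, 105, 55], [0, 0, 8, 8]], "labels": [2, 1]}),
]

images, targets = collate_fn(batch)
box_counts = [len(t["boxes"]) for t in targets]
print(images)      # ('image_0', 'image_1')
print(box_counts)  # [1, 2]
```

Because nothing is stacked, each target stays paired with its image by position, which is why the loop in the main script indexes `images[i]` and `targets[i]` together.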