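Foreword

I have finally started learning Halcon machine vision. I had wanted to learn something hardware-related for a long time, and now I have the chance.

My plan was to ask my manager for an older project to maintain, so I could study how the company uses Halcon. Unfortunately I was only told to read up on my own, and there is only so much you can learn that way.

So I dug out the deep learning material I had studied before and tried it out in Halcon.

Luckily I had not thrown the data away.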

Contents

- Foreword

- Introduction

- Training the model with MVTec Deep Learning Tool

- Calling the model from MVTec HDevelop to predict an image

- Calling the HALCON code from Qt

简介

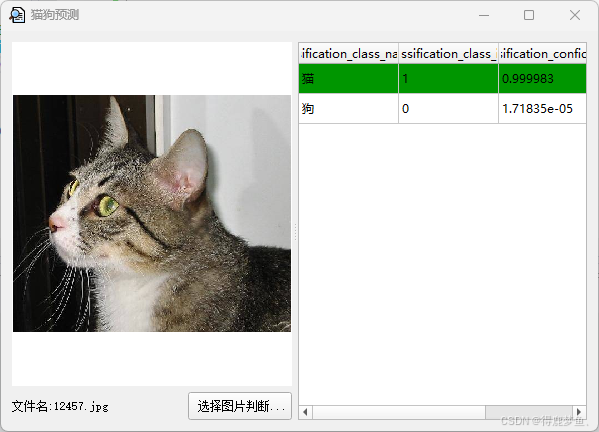

本文主要介绍通过Halcon机器视觉训练猫狗图像二分类模型,在通过Qt界面调用模型实现图片的分类。

就像这样:

训练用的数据集来自 :kaggle猫狗大战数据集.zip

链接: https://pan.baidu.com/s/1PalJWk5LkgNW0NPx1EfUVw?pwd=ir3e 提取码: ir3e

Training the model with MVTec Deep Learning Tool

As a beginner, I naturally chose the tool for training, since it presents everything more visually.

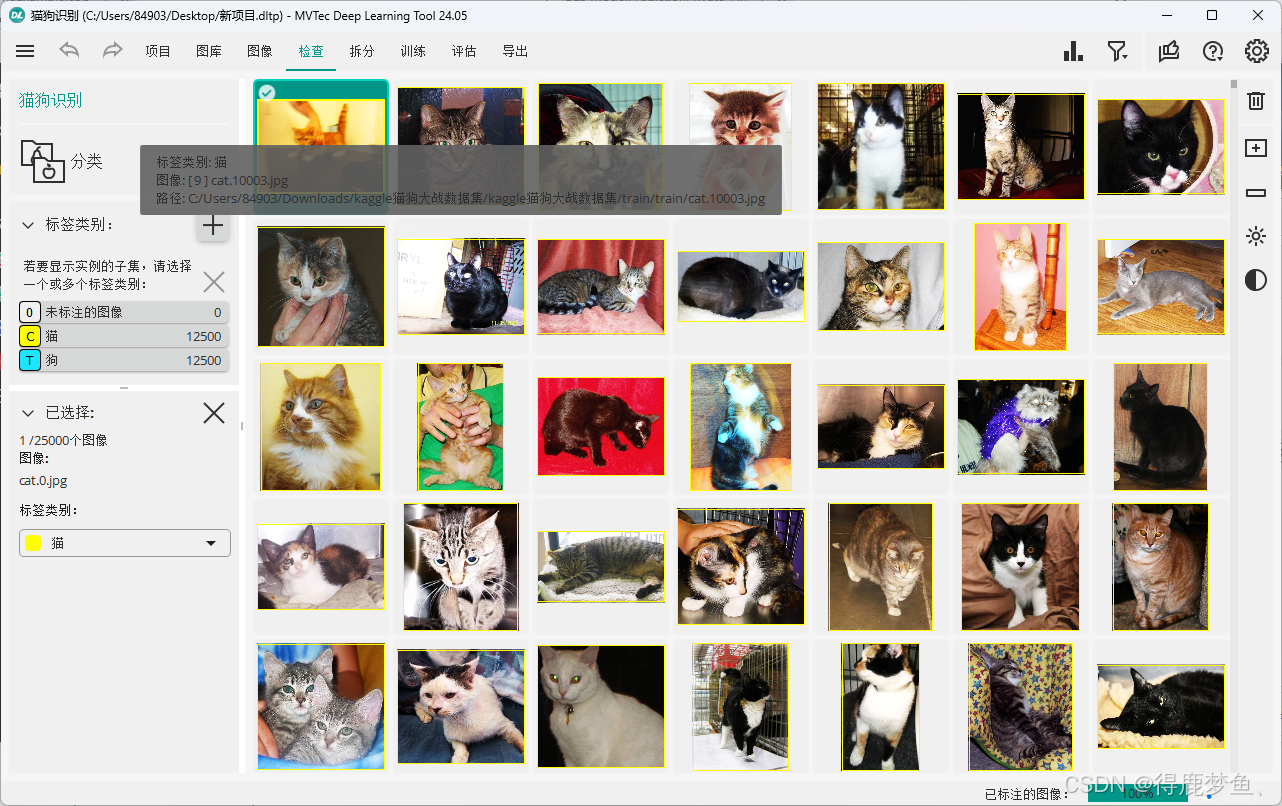

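For the project's deep learning method, choose "Classification".

The training set contains 12,500 cat images and 12,500 dog images; import them in batches and set the labels.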

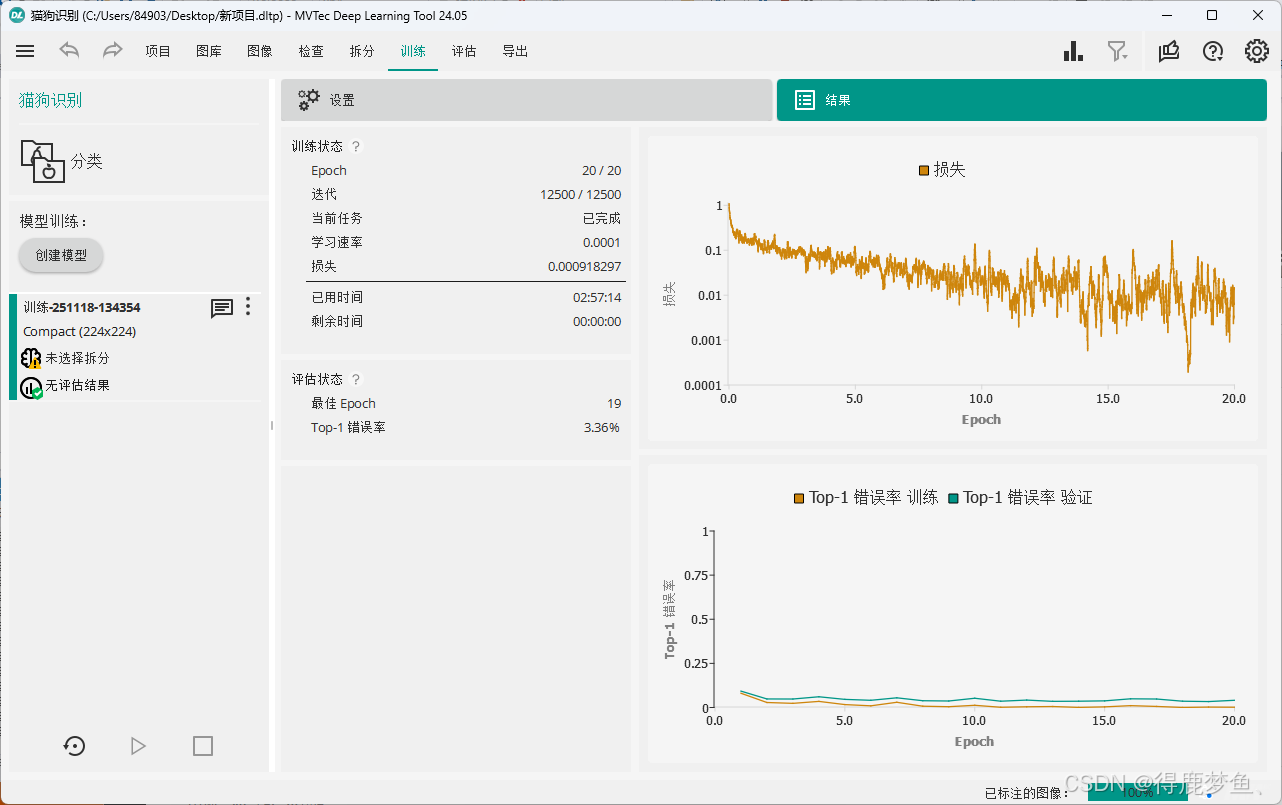

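All training parameters were left at their defaults, and I started training right away.

The one thing I should have increased before training is the image width: a size of 224*244 is rather small, and later, when I passed in large images without any preprocessing, inference failed with an exception.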
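The size mismatch comes down to simple arithmetic: the network expects a fixed input size, so every image has to be scaled to it first. In the code later in this article that job is done by preprocess_dl_samples together with dl_preprocess_param.hdict. The following is only a minimal sketch of the scaling step, assuming the image is stretched on each axis independently; `ZoomFactors` and `computeZoomFactors` are hypothetical names and not part of HALCON.

```cpp
#include <stdexcept>

// Hypothetical helper, not part of HALCON: scale factors that stretch an
// image of arbitrary size to the fixed input size the network was trained on.
struct ZoomFactors {
    double width;
    double height;
};

ZoomFactors computeZoomFactors(int imageWidth, int imageHeight,
                               int targetWidth, int targetHeight)
{
    if (imageWidth <= 0 || imageHeight <= 0 ||
        targetWidth <= 0 || targetHeight <= 0) {
        throw std::invalid_argument("image and target sizes must be positive");
    }
    // Each axis is scaled independently, so the aspect ratio is not preserved.
    return {static_cast<double>(targetWidth) / imageWidth,
            static_cast<double>(targetHeight) / imageHeight};
}
```

For example, a 448 x 896 image scaled to a 224 x 224 input gives a width factor of 0.5 and a height factor of 0.25.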

Training on a laptop for four hours gave the following results:

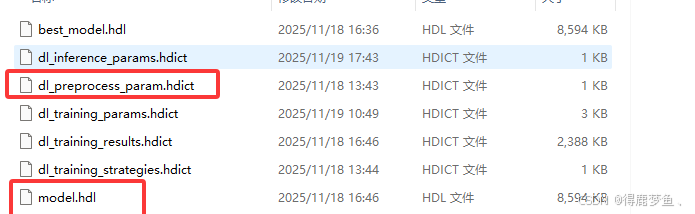

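This produced the following trained model:

Of these files, dl_preprocess_param.hdict and model.hdl are the ones required for prediction.

In my experience, the accuracy is much higher than what I got earlier with hand-tuned training in TensorFlow or with VGG16.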

Calling the model from MVTec HDevelop to predict an image

Learning material for Halcon on the web is still scarce.

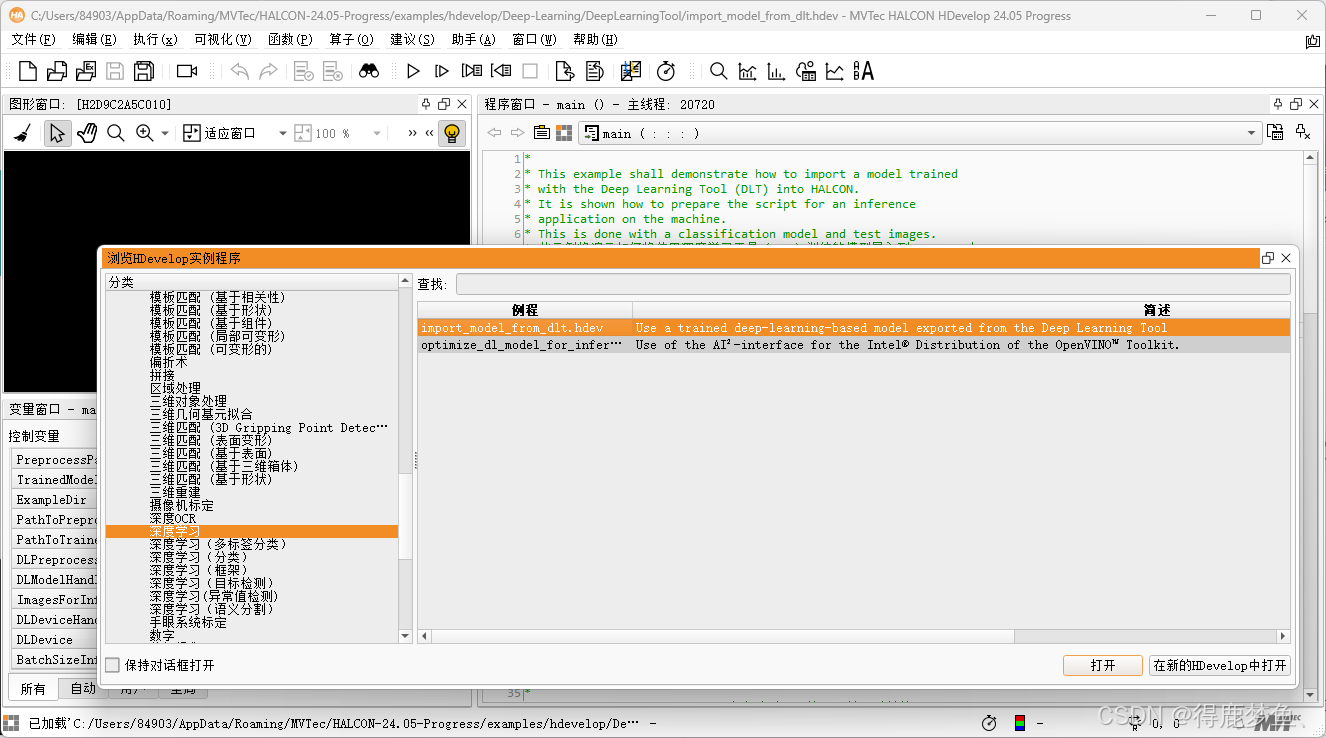

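Fortunately, Halcon ships with example programs. The one to refer to here is import_model_from_dlt.hdev; the idea is to export this script as C++ code and call it from Qt.

The example demonstrates the whole flow in full: load the model -> select a CPU/GPU device -> randomly pick 10 files from a folder and run prediction on them.

When I exported the script to C++ as-is, the result was more than 9,000 lines.

That needed trimming.

Like this: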

cpp

* Turn off automatic updating of the graphics window

dev_update_off ()

PathToPreprocessParamFile :='C:/Users/84903/Desktop/新项目/训练-251118-134354/dl_preprocess_param.hdict'

PathToTrainedModel := 'C:/Users/84903/Desktop/新项目/训练-251118-134354/model.hdl'

* Read the preprocessing parameters and the model trained with them

read_dict (PathToPreprocessParamFile, [], [], DLPreprocessParam)

read_dl_model (PathToTrainedModel, DLModelHandle)

*

* Press Run (F5) to continue.

stop ()

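* ** 3. Query devices and set model parameters **

* Inference with a deep-learning-based classifier can run on different devices.

* See the corresponding system requirements in the Installation Guide.

* If possible, a GPU is used in this example.

* If you explicitly want to run this example on the CPU, choose the CPU device instead.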

query_available_dl_devices (['runtime', 'runtime'], ['gpu', 'cpu'], DLDeviceHandles)

if (|DLDeviceHandles| == 0)

throw ('No supported device found to continue this example.')

endif

* Because of the filter used when querying the available deep learning devices, the first device is a GPU if one exists.

DLDevice := DLDeviceHandles[0]

* Set the desired batch size.

BatchSizeInference := 1

set_dl_model_param (DLModelHandle, 'batch_size', BatchSizeInference)

*

set_dl_model_param (DLModelHandle, 'device', DLDevice)

* Get the class names and class IDs used for visualization

* and put them into a dictionary.

DLDataInfo := dict{}

get_dl_model_param (DLModelHandle, 'class_names', DLDataInfo.class_names)

get_dl_model_param (DLModelHandle, 'class_ids', DLDataInfo.class_ids)

*

Batch := 'C:/Users/84903/Downloads/kaggle猫狗大战数据集/kaggle猫狗大战数据集/test1/test1/12441.jpg'

read_image (Image, Batch)

gen_dl_samples_from_images (Image, DLSample)

preprocess_dl_samples (DLSample, DLPreprocessParam)

apply_dl_model (DLModelHandle, DLSample, [], DLResult)

I removed the UI parts that are not needed and changed the script to predict a single file.

With that, the export came to just over 4,000 lines, less than half of what it was, which is acceptable.
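The device-selection step in the script relies on the order of the filter: GPUs are asked for first, then CPUs, and the first handle returned is used. The same idea can be sketched in plain C++ without HALCON. This is only an illustration under that assumption; `Device` and `pickDevice` are hypothetical names, and real HALCON device handles are opaque.

```cpp
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-in for a device handle; real HALCON handles are opaque.
struct Device {
    std::string type;  // "gpu" or "cpu"
    std::string name;
};

// Return the first available device that matches the preference order,
// e.g. {"gpu", "cpu"} prefers any GPU over any CPU.
const Device& pickDevice(const std::vector<Device>& available,
                         const std::vector<std::string>& preference)
{
    for (const std::string& wanted : preference) {
        for (const Device& d : available) {
            if (d.type == wanted) {
                return d;
            }
        }
    }
    // Mirrors the script: no usable device means we cannot continue.
    throw std::runtime_error("No supported device found");
}
```

To force CPU inference with this sketch, you would pass {"cpu"} as the preference list.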

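Calling the HALCON code from Qt

Being new to this, I did not know which operators and procedures deep learning inference actually needs, so I simply copied all the code from the exported CPP file and called it.

Setting up the HALCON development environment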

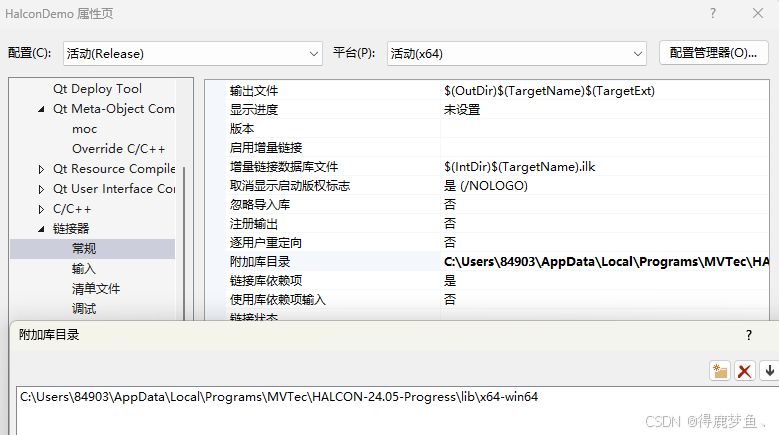

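Taking Visual Studio as an example, set up the Halcon development environment as follows.

Properties -> Linker -> General -> Additional Library Directories: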

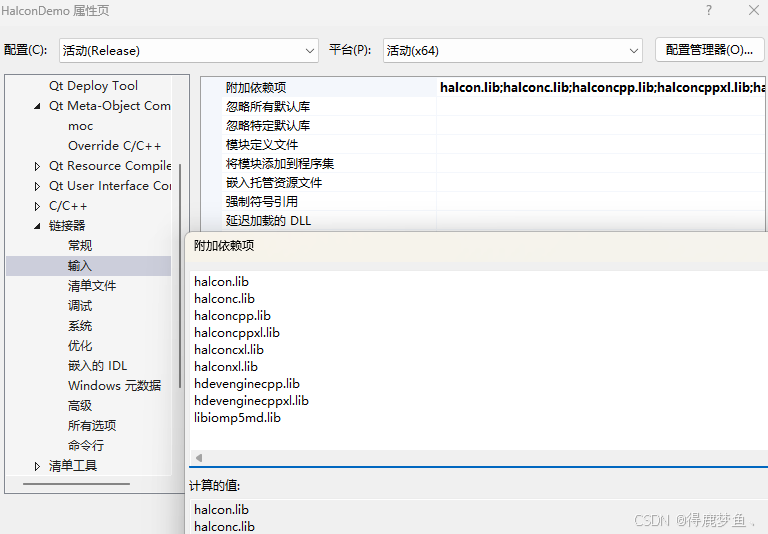

Properties -> Linker -> Input -> Additional Dependencies:

halcon.lib

halconc.lib

halconcpp.lib

halconcppxl.lib

halconcxl.lib

halconxl.lib

hdevenginecpp.lib

hdevenginecppxl.lib

libiomp5md.lib

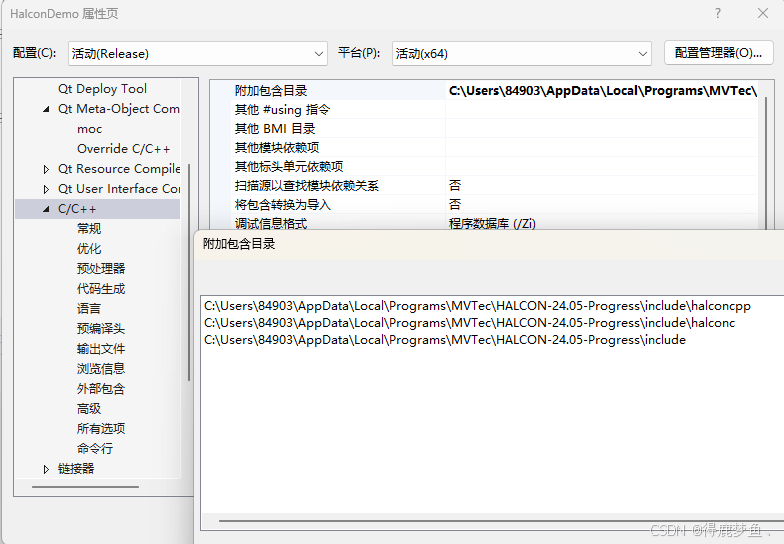

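Properties -> C/C++ -> General -> Additional Include Directories:

Wrapping the functions from the CPP file

The calls are wrapped using the D-pointer pattern (private implementation, the pattern Qt uses for its private classes).

All the Halcon code goes into the private class, so later changes do not require touching the public interface.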
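Stripped of Qt and Halcon, the pattern can be sketched as follows. This is a generic illustration using std::unique_ptr instead of QScopedPointer; `Predictor` and `PredictorPrivate` are hypothetical names, and the body of `predict` is a placeholder rather than a real model call. It needs C++14 for std::make_unique.

```cpp
#include <memory>
#include <string>

// Public class: callers only ever see this interface.
class Predictor {
public:
    Predictor();
    ~Predictor();  // defined below, where PredictorPrivate is a complete type
    std::string predict(const std::string& path) const;

private:
    class PredictorPrivate;  // forward declaration only
    std::unique_ptr<PredictorPrivate> d;
};

// Normally this lives in the .cpp file, together with heavy third-party includes.
class Predictor::PredictorPrivate {
public:
    std::string predict(const std::string& path) const {
        // Placeholder logic standing in for the real model call.
        return "label-for:" + path;
    }
};

Predictor::Predictor() : d(std::make_unique<PredictorPrivate>()) {}
Predictor::~Predictor() = default;

// The public method only forwards to the private implementation.
std::string Predictor::predict(const std::string& path) const {
    return d->predict(path);
}
```

Because the private class is only forward-declared in the public header, code that uses `Predictor` does not need the implementation's includes and does not have to be recompiled when the implementation changes.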

Code example:

BLL_ModelPrediction.h

cpp

#pragma once

#pragma execution_character_set("utf-8")

#include <QObject>

#include <QScopedPointer>

#include <qglobal.h>

#include <tuple>

#define MODELPRED BLL_ModelPrediction::GetInstance()

//! Private class holding the actual implementation

class BLL_ModelPredictionPrivate;

//! Model prediction

class BLL_ModelPrediction : public QObject

{

Q_OBJECT

public:

BLL_ModelPrediction(QObject *parent=nullptr);

~BLL_ModelPrediction();

//! Get the model singleton

static BLL_ModelPrediction* GetInstance();

//! Initialize the prediction model (the paths are hard-coded)

void InitModel();

//! Predict the class probabilities for an image

QList<std::tuple<QString, int, double>> ToPrediction(QString filepath);

private:

QScopedPointer<BLL_ModelPredictionPrivate> d_ptr;

Q_DECLARE_PRIVATE(BLL_ModelPrediction)

};

BLL_ModelPrediction.cpp

cpp

#include "BLL_ModelPrediction.h"

#include "BLL_ModelPredictionPrivate_P.h"

#include <QtCore/qglobal.h>

//! Global singleton instance

Q_GLOBAL_STATIC(BLL_ModelPrediction, globalPredInstance)

BLL_ModelPrediction::BLL_ModelPrediction(QObject *parent)

: QObject(parent),d_ptr(new BLL_ModelPredictionPrivate(this))

{}

BLL_ModelPrediction::~BLL_ModelPrediction()

{}

BLL_ModelPrediction* BLL_ModelPrediction::GetInstance()

{

return globalPredInstance();

}

void BLL_ModelPrediction::InitModel()

{

d_ptr->InitModel();

}

QList<std::tuple<QString, int, double>> BLL_ModelPrediction::ToPrediction(QString filepath)

{

return d_ptr->ToPrediction(filepath);

}

BLL_ModelPredictionPrivate_P.h

The Halcon C++ code generated from the script by the IDE (HDevelop):

cpp

#pragma once

#include "BLL_ModelPrediction.h"

#include <QPair>

#include <QDebug>

#include "HalconCpp.h"

#include "HDevThread.h"

#include "HTuple.h"

using namespace HalconCpp;

class BLL_ModelPredictionPrivate

{

BLL_ModelPrediction* q_ptr;

Q_DECLARE_PUBLIC(BLL_ModelPrediction)

public:

BLL_ModelPredictionPrivate(BLL_ModelPrediction* parent);

// Procedure declarations

// External procedures

// Chapter: Deep Learning / Model

// Short Description: Compute zoom factors to fit an image to a target size.

void calculate_dl_image_zoom_factors(HTuple hv_ImageWidth, HTuple hv_ImageHeight,

HTuple hv_TargetWidth, HTuple hv_TargetHeight, HTuple hv_DLPreprocessParam, HTuple* hv_ZoomFactorWidth,

HTuple* hv_ZoomFactorHeight);

// Chapter: Deep Learning / Model

// Short Description: Check the content of the parameter dictionary DLPreprocessParam.

void check_dl_preprocess_param(HTuple hv_DLPreprocessParam);

// Chapter: Deep Learning / Model

// Short Description: Compute 3D normals.

void compute_normals_xyz(HObject ho_x, HObject ho_y, HObject ho_z, HObject* ho_NXImage,

HObject* ho_NYImage, HObject* ho_NZImage, HTuple hv_Smoothing);

// Chapter: Tools / Geometry

// Short Description: Convert the parameters of rectangles with format rectangle2 to the coordinates of its 4 corner-points.

void convert_rect2_5to8param(HTuple hv_Row, HTuple hv_Col, HTuple hv_Length1, HTuple hv_Length2,

HTuple hv_Phi, HTuple* hv_Row1, HTuple* hv_Col1, HTuple* hv_Row2, HTuple* hv_Col2,

HTuple* hv_Row3, HTuple* hv_Col3, HTuple* hv_Row4, HTuple* hv_Col4);

// Chapter: Tools / Geometry

// Short Description: Convert for four-sided figures the coordinates of the 4 corner-points to the parameters of format rectangle2.

void convert_rect2_8to5param(HTuple hv_Row1, HTuple hv_Col1, HTuple hv_Row2, HTuple hv_Col2,

HTuple hv_Row3, HTuple hv_Col3, HTuple hv_Row4, HTuple hv_Col4, HTuple hv_ForceL1LargerL2,

HTuple* hv_Row, HTuple* hv_Col, HTuple* hv_Length1, HTuple* hv_Length2, HTuple* hv_Phi);

// Chapter: Deep Learning / Model

// Short Description: Crops a given image object based on the given domain handling.

void crop_dl_sample_image(HObject ho_Domain, HTuple hv_DLSample, HTuple hv_Key,

HTuple hv_DLPreprocessParam);

// Chapter: Develop

// Short Description: Switch dev_update_pc, dev_update_var, and dev_update_window to 'off'.

void dev_update_off();

// Chapter: Deep Learning / Object Detection and Instance Segmentation

// Short Description: Filter the instance segmentation masks of a DL sample based on a given selection.

void filter_dl_sample_instance_segmentation_masks(HTuple hv_DLSample, HTuple hv_BBoxSelectionMask);

// Chapter: OCR / Deep OCR

// Short Description: Generate ground truth characters if they don't exist and words to characters mapping.

void gen_dl_ocr_detection_gt_chars(HTuple hv_DLSampleTargets, HTuple hv_DLSample,

HTuple hv_ScaleWidth, HTuple hv_ScaleHeight, HTupleVector/*{eTupleVector,Dim=1}*/* hvec_WordsCharsMapping);

// Chapter: OCR / Deep OCR

// Short Description: Generate target link score map for ocr detection training.

void gen_dl_ocr_detection_gt_link_map(HObject* ho_GtLinkMap, HTuple hv_ImageWidth,

HTuple hv_ImageHeight, HTuple hv_DLSampleTargets, HTupleVector/*{eTupleVector,Dim=1}*/ hvec_WordToCharVec,

HTuple hv_Alpha);

// Chapter: OCR / Deep OCR

// Short Description: Generate target orientation score maps for ocr detection training.

void gen_dl_ocr_detection_gt_orientation_map(HObject* ho_GtOrientationMaps, HTuple hv_ImageWidth,

HTuple hv_ImageHeight, HTuple hv_DLSample);

// Chapter: OCR / Deep OCR

// Short Description: Generate target text score map for ocr detection training.

void gen_dl_ocr_detection_gt_score_map(HObject* ho_TargetText, HTuple hv_DLSample,

HTuple hv_BoxCutoff, HTuple hv_RenderCutoff, HTuple hv_ImageWidth, HTuple hv_ImageHeight);

// Chapter: OCR / Deep OCR

// Short Description: Preprocess dl samples and generate targets and weights for ocr detection training.

void gen_dl_ocr_detection_targets(HTuple hv_DLSampleOriginal, HTuple hv_DLPreprocessParam);

// Chapter: OCR / Deep OCR

// Short Description: Generate link score map weight for ocr detection training.

void gen_dl_ocr_detection_weight_link_map(HObject ho_LinkMap, HObject ho_TargetWeight,

HObject* ho_TargetWeightLink, HTuple hv_LinkZeroWeightRadius);

// Chapter: OCR / Deep OCR

// Short Description: Generate orientation score map weight for ocr detection training.

void gen_dl_ocr_detection_weight_orientation_map(HObject ho_InitialWeight, HObject* ho_OrientationTargetWeight,

HTuple hv_DLSample);

// Chapter: OCR / Deep OCR

// Short Description: Generate text score map weight for ocr detection training.

void gen_dl_ocr_detection_weight_score_map(HObject* ho_TargetWeightText, HTuple hv_ImageWidth,

HTuple hv_ImageHeight, HTuple hv_DLSample, HTuple hv_BoxCutoff, HTuple hv_WSWeightRenderThreshold,

HTuple hv_Confidence);

// Chapter: Deep Learning / Model

// Short Description: Store the given images in a tuple of dictionaries DLSamples.

void gen_dl_samples_from_images(HObject ho_Images, HTuple* hv_DLSampleBatch);

// Chapter: OCR / Deep OCR

// Short Description: Generate a word to characters mapping.

void gen_words_chars_mapping(HTuple hv_DLSample, HTupleVector/*{eTupleVector,Dim=1}*/* hvec_WordsCharsMapping);

// Chapter: File / Misc

// Short Description: Get all image files under the given path

void list_image_files(HTuple hv_ImageDirectory, HTuple hv_Extensions, HTuple hv_Options,

HTuple* hv_ImageFiles);

// Chapter: Deep Learning / Model

// Short Description: Preprocess 3D data for deep-learning-based training and inference.

void preprocess_dl_model_3d_data(HTuple hv_DLSample, HTuple hv_DLPreprocessParam);

// Chapter: Deep Learning / Model

// Short Description: Preprocess anomaly images for evaluation and visualization of deep-learning-based anomaly detection or Global Context Anomaly Detection.

void preprocess_dl_model_anomaly(HObject ho_AnomalyImages, HObject* ho_AnomalyImagesPreprocessed,

HTuple hv_DLPreprocessParam);

// Chapter: Deep Learning / Model

// Short Description: Preprocess the provided DLSample image for augmentation purposes.

void preprocess_dl_model_augmentation_data(HTuple hv_DLSample, HTuple hv_DLPreprocessParam);

// Chapter: Deep Learning / Object Detection and Instance Segmentation

// Short Description: Preprocess the bounding boxes of type 'rectangle1' for a given sample.

void preprocess_dl_model_bbox_rect1(HObject ho_ImageRaw, HTuple hv_DLSample, HTuple hv_DLPreprocessParam);

// Chapter: Deep Learning / Object Detection and Instance Segmentation

// Short Description: Preprocess the bounding boxes of type 'rectangle2' for a given sample.

void preprocess_dl_model_bbox_rect2(HObject ho_ImageRaw, HTuple hv_DLSample, HTuple hv_DLPreprocessParam);

// Chapter: Deep Learning / Model

// Short Description: Preprocess images for deep-learning-based training and inference.

void preprocess_dl_model_images(HObject ho_Images, HObject* ho_ImagesPreprocessed,

HTuple hv_DLPreprocessParam);

// Chapter: OCR / Deep OCR

// Short Description: Preprocess images for deep-learning-based training and inference of Deep OCR detection models.

void preprocess_dl_model_images_ocr_detection(HObject ho_Images, HObject* ho_ImagesPreprocessed,

HTuple hv_DLPreprocessParam);

// Chapter: OCR / Deep OCR

// Short Description: Preprocess images for deep-learning-based training and inference of Deep OCR recognition models.

void preprocess_dl_model_images_ocr_recognition(HObject ho_Images, HObject* ho_ImagesPreprocessed,

HTuple hv_DLPreprocessParam);

// Chapter: Deep Learning / Object Detection and Instance Segmentation

// Short Description: Preprocess the instance segmentation masks for a sample given by the dictionary DLSample.

void preprocess_dl_model_instance_masks(HObject ho_ImageRaw, HTuple hv_DLSample,

HTuple hv_DLPreprocessParam);

// Chapter: Deep Learning / Semantic Segmentation and Edge Extraction

// Short Description: Preprocess segmentation and weight images for deep-learning-based segmentation training and inference.

void preprocess_dl_model_segmentations(HObject ho_ImagesRaw, HObject ho_Segmentations,

HObject* ho_SegmentationsPreprocessed, HTuple hv_DLPreprocessParam);

// Chapter: Deep Learning / Model

// Short Description: Preprocess given DLSamples according to the preprocessing parameters given in DLPreprocessParam.

void preprocess_dl_samples(HTuple hv_DLSampleBatch, HTuple hv_DLPreprocessParam);

// Chapter: Image / Manipulation

// Short Description: Change value of ValuesToChange in Image to NewValue.

void reassign_pixel_values(HObject ho_Image, HObject* ho_ImageOut, HTuple hv_ValuesToChange,

HTuple hv_NewValue);

// Chapter: Deep Learning / Model

// Short Description: Remove invalid 3D pixels from a given domain.

void remove_invalid_3d_pixels(HObject ho_ImageX, HObject ho_ImageY, HObject ho_ImageZ,

HObject ho_Domain, HObject* ho_DomainOut, HTuple hv_InvalidPixelValue);

// Chapter: Deep Learning / Model

// Short Description: Replace legacy preprocessing parameters or values.

void replace_legacy_preprocessing_parameters(HTuple hv_DLPreprocessParam);

// Chapter: OCR / Deep OCR

// Short Description: Split rectangle2 into a number of rectangles.

void split_rectangle2(HTuple hv_Row, HTuple hv_Column, HTuple hv_Phi, HTuple hv_Length1,

HTuple hv_Length2, HTuple hv_NumSplits, HTuple* hv_SplitRow, HTuple* hv_SplitColumn,

HTuple* hv_SplitPhi, HTuple* hv_SplitLength1Out, HTuple* hv_SplitLength2Out);

// Chapter: Tuple / Element Order

// Short Description: Sort the elements of a tuple randomly.

void tuple_shuffle(HTuple hv_Tuple, HTuple* hv_Shuffled);

// Local procedures

void get_example_inference_images(HTuple hv_ImageDir, HTuple hv_NumSamples, HTuple* hv_ImageFiles);

public:

//! Initialize the model

void InitModel();

//! Predict the class probabilities

QList<std::tuple<QString,int,double>> ToPrediction(QString filepath);

//! Print the data type of a tuple

void ParitType(HTuple V);

// Local iconic variables

HObject ho_Image;

// Local control variables

HTuple hv_PathToPreprocessParamFile, hv_PathToTrainedModel;

HTuple hv_DLPreprocessParam, hv_DLModelHandle, hv_DLDeviceHandles;

HTuple hv_DLDevice, hv_BatchSizeInference, hv_DLDataInfo;

};

///////////////////////////////// BLL_ModelPredictionPrivate /////////////////////////////////

/* Much of the code is omitted here because of its length. */

void BLL_ModelPredictionPrivate::InitModel()

{

hv_PathToPreprocessParamFile = "C:/Users/84903/Desktop/\346\226\260\351\241\271\347\233\256/\350\256\255\347\273\203-251118-134354/dl_preprocess_param.hdict";

hv_PathToTrainedModel = "C:/Users/84903/Desktop/\346\226\260\351\241\271\347\233\256/\350\256\255\347\273\203-251118-134354/model.hdl";

// Read the preprocessing parameters and the model trained with them

ReadDict(hv_PathToPreprocessParamFile, HTuple(), HTuple(), &hv_DLPreprocessParam);

ReadDlModel(hv_PathToTrainedModel, &hv_DLModelHandle);

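// ** 3. Query devices and set model parameters **

// Inference with a deep-learning-based classifier can run on different devices.

// See the corresponding system requirements in the Installation Guide.

// If possible, a GPU is used in this example.

// If you explicitly want to run this example on the CPU, choose the CPU device instead.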

QueryAvailableDlDevices((HTuple("runtime").Append("runtime")), (HTuple("gpu").Append("cpu")),

&hv_DLDeviceHandles);

if (0 != (int((hv_DLDeviceHandles.TupleLength()) == 0)))

{

throw HException("No supported device found to continue this example.");

}

// Because of the filter used when querying the available deep learning devices, the first device is a GPU if one exists.

hv_DLDevice = ((const HTuple&)hv_DLDeviceHandles)[0];

// Set the desired batch size.

hv_BatchSizeInference = 1;

SetDlModelParam(hv_DLModelHandle, "batch_size", hv_BatchSizeInference);

//

SetDlModelParam(hv_DLModelHandle, "device", hv_DLDevice);

// Get the class names and class IDs used for visualization

// and put them into a dictionary.

CreateDict(&hv_DLDataInfo);

HTuple hv___Tmp_Ctrl_0;

GetDlModelParam(hv_DLModelHandle, "class_names", &hv___Tmp_Ctrl_0);

SetDictTuple(hv_DLDataInfo, "class_names", hv___Tmp_Ctrl_0);

ParitType(hv___Tmp_Ctrl_0);

GetDlModelParam(hv_DLModelHandle, "class_ids", &hv___Tmp_Ctrl_0);

SetDictTuple(hv_DLDataInfo, "class_ids", hv___Tmp_Ctrl_0);

ParitType(hv___Tmp_Ctrl_0);

}

QList<std::tuple<QString, int, double>> BLL_ModelPredictionPrivate::ToPrediction(QString filepath)

{

QList<std::tuple<QString, int, double>> TupleData;

HTuple hv_DLResult;

HTuple hv_Batch, hv_DLSample;

hv_Batch = HTuple(filepath.toUtf8().data());

ReadImage(&ho_Image, hv_Batch);

gen_dl_samples_from_images(ho_Image, &hv_DLSample);

preprocess_dl_samples(hv_DLSample, hv_DLPreprocessParam);

ApplyDlModel(hv_DLModelHandle, hv_DLSample, HTuple(), &hv_DLResult);

ParitType(hv_DLResult);

// Get the dictionary handle

HDict dict = hv_DLResult.H();

//HTuple keys = dict.GetDictParam("keys", "classification_confidences");

HTuple confidences = dict.GetDictTuple("classification_confidences");

HTuple ids = dict.GetDictTuple("classification_class_ids");

HTuple names = dict.GetDictTuple("classification_class_names");

for (int i = 0; i < names.Length(); i++)

{

std::tuple<QString, int, double> hcData;

std::get<0>(hcData)= QString::fromUtf8(names[i].S());

std::get<1>(hcData) = ids[i].I();

std::get<2>(hcData) = confidences[i].D();

TupleData.append(hcData);

}

return TupleData;

}

void BLL_ModelPredictionPrivate::ParitType(HTuple hv_DLResult)

{

switch (hv_DLResult.Type())

{

case HTupleType::eTupleTypeEmpty:

{

qDebug() << "HTupleType::eTupleTypeEmpty -> " ;

break;

}

case HTupleType::eTupleTypeLong:

{

qDebug() << "HTupleType::eTupleTypeLong -> " << hv_DLResult.I();

break;

}

case HTupleType::eTupleTypeDouble:

{

qDebug() << "HTupleType::eTupleTypeDouble -> " << hv_DLResult.D();

break;

}

case HTupleType::eTupleTypeString:

{

qDebug() << "HTupleType::eTupleTypeString -> " << QString::fromUtf8(hv_DLResult.S());

break;

}

case HTupleType::eTupleTypeHandle:

{

qDebug() << "HTupleType::eTupleTypeHandle ->";

break;

}

case HTupleType::eTupleTypeMixed:

{

qDebug() << "HTupleType::eTupleTypeMixed ->";

break;

}

default:

break;

}

}

The full code is too long to upload, so only the implementation of the calls is shown here.
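One detail in InitModel that can look odd at first: the exported paths contain sequences such as \346\226\260. These are octal escapes, and they are simply the UTF-8 bytes of the Chinese folder names used in the HDevelop script. A minimal sketch that checks this for the first character of the folder name (U+65B0); the two function names are hypothetical and exist only for this illustration.

```cpp
#include <string>

// The octal escapes \346\226\260 are the three UTF-8 bytes of U+65B0,
// the first character of the folder name in the HDevelop script.
inline std::string escapedFolderPrefix()
{
    return "\346\226\260";
}

// The same three bytes written as hexadecimal escapes, for comparison.
inline std::string hexFolderPrefix()
{
    return "\xE6\x96\xB0";
}
```

This matches ToPrediction, where the file path is converted with toUtf8() before it is handed to ReadImage.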

Selecting a file in the UI and classifying it:

cpp

QString fileName1 = QFileDialog::getOpenFileName(this, tr("加载图像文件"), "", "JPEG Files(*.jpg);;BMP Files(*.bmp);;PNG Files(*.png);;TIFF Files(*.tiff)");

if (fileName1.isEmpty())

{

return;

}

QFileInfo inf(fileName1);

ui.graphicsView->scene()->clear();

QGraphicsPixmapItem* RegeneratedPicture = new QGraphicsPixmapItem();

RegeneratedPicture->setPixmap(QPixmap(fileName1));

RegeneratedPicture->setFlag(QGraphicsPixmapItem::ItemIsSelectable, false);

RegeneratedPicture->setFlag(QGraphicsPixmapItem::ItemIsMovable, false);

RegeneratedPicture->setFlag(QGraphicsPixmapItem::ItemSendsGeometryChanges, false);

//RegeneratedPicture->setToolTip(tr(""));

ui.graphicsView->scene()->addItem(RegeneratedPicture);

// Get the bounding rectangle

QRectF bounds = ui.graphicsView->scene()->itemsBoundingRect();

ui.graphicsView->scene()->setSceneRect(bounds);

ui.graphicsView->fitInView(bounds, Qt::KeepAspectRatio);

ui.graphicsView->scene()->update();

ui.label->setText(QString("文件名:%1").arg(inf.fileName()));

QList<std::tuple<QString, int, double>> PredData = MODELPRED->ToPrediction(inf.absoluteFilePath());

ui.tableWidget->clear();

ui.tableWidget->setColumnCount(3);

ui.tableWidget->setRowCount(0);

ui.tableWidget->setHorizontalHeaderLabels(QStringList() << "classification_class_names" << "classification_class_ids" << "classification_confidences");

ui.tableWidget->verticalHeader()->hide();

for (int i = 0; i < PredData.count(); i++)

{

ui.tableWidget->insertRow(i);

QTableWidgetItem* names = new QTableWidgetItem(std::get<0>(PredData[i]));

ui.tableWidget->setItem(i,0, names);

QTableWidgetItem* ids = new QTableWidgetItem(QString::number(std::get<1>(PredData[i])));

ui.tableWidget->setItem(i, 1, ids);

QTableWidgetItem* confidences = new QTableWidgetItem(QString::number(std::get<2>(PredData[i])));

ui.tableWidget->setItem(i, 2, confidences);

if (i == 0)

{

names->setBackground(QBrush(QColor("#009600")));

ids->setBackground(QBrush(QColor("#009600")));

confidences->setBackground(QBrush(QColor("#009600")));

}

}

Apart from the HTuple data types being awkward to handle, the whole process was not difficult at all, and the C++ support is quite friendly.
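What makes HTuple awkward is that one value can be empty, an integer, a real number, or a string, which is why ParitType needs a switch over the type tag. Purely as an illustration of that idea, and not as a description of how HTuple works internally, the same kind of value can be modelled in standard C++17 with std::variant. `Element` and `describe` are hypothetical names.

```cpp
#include <cstdint>
#include <string>
#include <variant>

// Hypothetical stand-in for one tuple element: empty, integer, real, or string.
using Element = std::variant<std::monostate, std::int64_t, double, std::string>;

// Describe an element; std::visit dispatches on the type that is currently held.
std::string describe(const Element& e)
{
    struct Visitor {
        std::string operator()(std::monostate) const { return "empty"; }
        std::string operator()(std::int64_t v) const { return "long " + std::to_string(v); }
        std::string operator()(double v) const { return "double " + std::to_string(v); }
        std::string operator()(const std::string& v) const { return "string " + v; }
    };
    return std::visit(Visitor{}, e);
}
```

With a visitor, the compiler rejects the code if one of the alternatives is not handled, which a hand-written switch with a default branch does not do.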

I tested a handful of randomly chosen images, and all of them were classified correctly.
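The UI code above highlights row 0 as the winning class, which assumes the results arrive sorted by confidence. If you would rather not rely on that ordering, the top entry can be picked explicitly. A minimal sketch using std::vector in place of QList; `Prediction` and `topPrediction` are hypothetical names.

```cpp
#include <algorithm>
#include <string>
#include <tuple>
#include <vector>

// Class name, class id, confidence: the same layout ToPrediction returns.
using Prediction = std::tuple<std::string, int, double>;

// Return the entry with the highest confidence.
// Precondition: predictions must not be empty.
Prediction topPrediction(const std::vector<Prediction>& predictions)
{
    return *std::max_element(predictions.begin(), predictions.end(),
        [](const Prediction& a, const Prediction& b) {
            return std::get<2>(a) < std::get<2>(b);
        });
}
```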

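Compared with deep learning in TensorFlow and other frameworks that need a Python environment, I have to say that Halcon's deep learning tooling came out ahead in this test.

A colleague says YOLO models are even simpler; I will look into that later...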