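Problem description:

dpdk-testpmd fails to start on a dual-NUMA machine with more than 128 cores. The log is shown below: when EAL scans memory, it cannot use the memory on NUMA node 1.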


...

EAL: Detected lcore 0 as core 0 on socket 0

EAL: Detected lcore 127 as core 215 on socket 0

EAL: Skipped lcore 128 as core 0 on socket 1

EAL: Maximum logical cores by configuration: 128

EAL: Detected CPU lcores: 128

EAL: Detected NUMA nodes: 1

EAL: huge_pre_init setted 2

......

EAL: Hugepage /dev/hugepages//rtemap_1 is on socket 1

EAL: Hugepage /dev/hugepages//rtemap_0 is on socket 0

EAL: num of reserve hugepage on socket 0: 1

EAL: num of reserve hugepage on socket 1: 1

EAL: num of reserve hugepage on socket 2: 0

......

EAL: Allocating 1 pages of size 1024M on socket 0

EAL: Trying to obtain current memory policy.

EAL: Setting policy MPOL_PREFERRED for socket 0

EAL: Restoring previous memory policy: 0

EAL: Allocating 1 pages of size 1024M on socket 1

EAL: Trying to obtain current memory policy.

EAL: Setting policy MPOL_PREFERRED for socket 1

EAL: eal_memalloc_alloc_seg_bulk(): couldn't find suitable memseg_list

EAL: Restoring previous memory policy: 0

EAL: FATAL: Cannot init memory

EAL: Cannot init memory

EAL: Error - exiting with code: 1

Cause: :: invalid EAL arguments

Root cause analysis:

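At first I looked only at the error lines in the log and assumed that hugepage allocation had failed, without paying attention to the earlier log messages.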


EAL: Setting policy MPOL_PREFERRED for socket 1

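EAL: eal_memalloc_alloc_seg_bulk(): couldn't find suitable memseg_list

In fact, the log lines at the very beginning are just as important, and they expose the problem early on: at startup dpdk-testpmd detected only lcores 0-127 and skipped lcore 128 and everything after it. As a result it scanned only NUMA node 0, so only memory on NUMA node 0 could be used.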


EAL: Detected lcore 0 as core 0 on socket 0

EAL: Detected lcore 127 as core 215 on socket 0

EAL: Skipped lcore 128 as core 0 on socket 1

EAL: Maximum logical cores by configuration: 128

EAL: Detected CPU lcores: 128

EAL: Detected NUMA nodes: 1

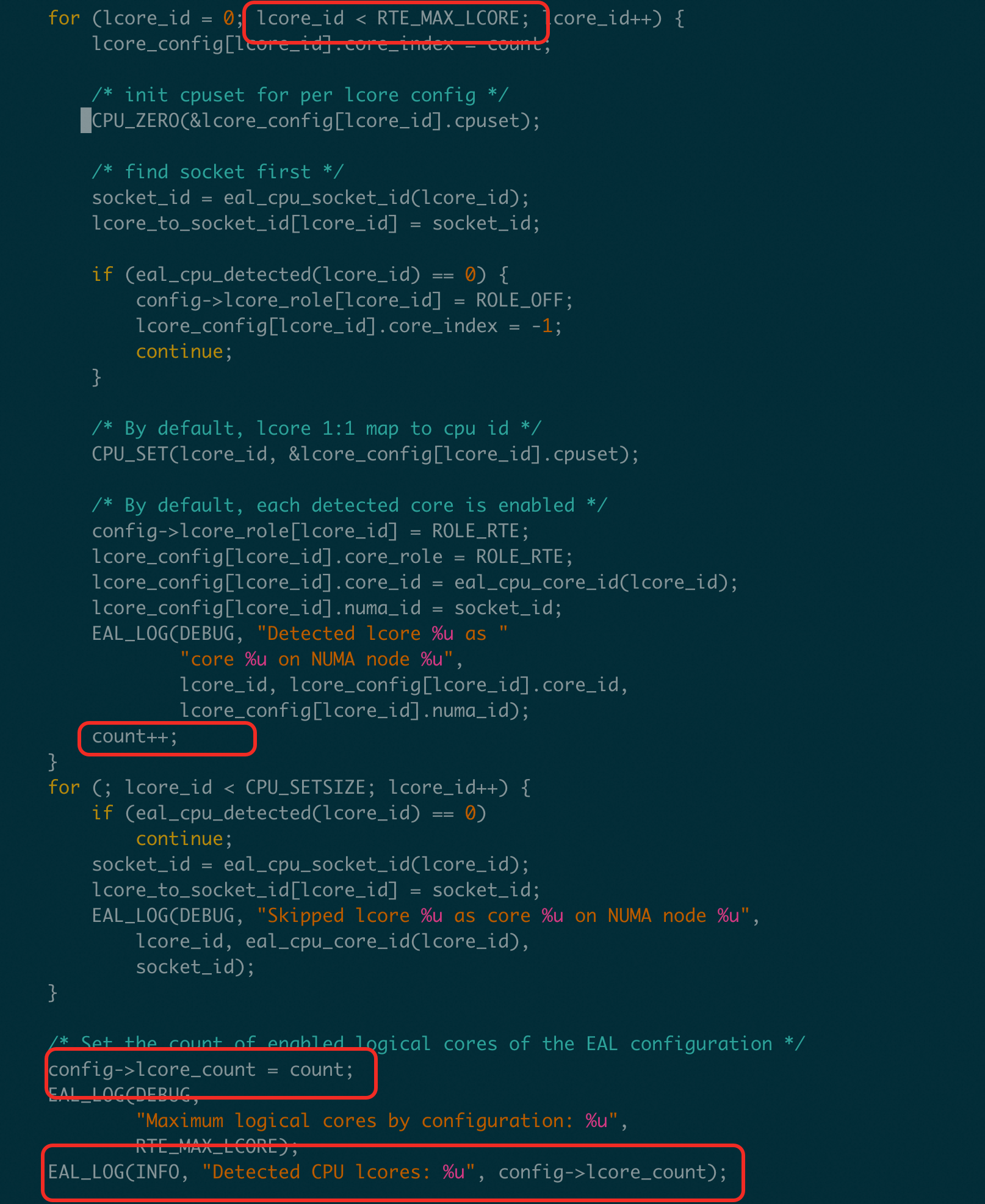

EAL: huge_pre_init setted 2

Looking at the code, the upper bound on the CPU count is determined by RTE_MAX_LCORE, which in turn is set by dpdk_conf.set('RTE_MAX_LCORE', 128) in config/x86/meson.build.

./lib/eal/common/eal_common_lcore.c
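The effect of this cap can be illustrated with a small standalone model. This is only a sketch and not the code from eal_common_lcore.c: the names `demo_scan_cpus`, `demo_fill_two_sockets`, and `DEMO_MAX_NUMA_NODES` are hypothetical, and the layout (the first half of the CPUs on socket 0, the second half on socket 1) is an assumption chosen to resemble the log above.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical bound for this demo only; not taken from DPDK headers. */
#define DEMO_MAX_NUMA_NODES 32

struct demo_scan_result {
    unsigned int lcores_detected; /* CPUs accepted as lcores */
    unsigned int lcores_skipped;  /* CPUs at or beyond the build-time limit */
    unsigned int numa_nodes;      /* distinct sockets among accepted lcores */
};

/*
 * cpu_socket[i] is the socket id of host CPU i. Only CPUs with an index
 * below max_lcore are accepted; the rest are skipped, which mirrors the
 * "Skipped lcore 128" line in the log.
 */
static struct demo_scan_result
demo_scan_cpus(const unsigned int *cpu_socket, size_t n_cpus,
               unsigned int max_lcore)
{
    struct demo_scan_result r = {0, 0, 0};
    unsigned char seen[DEMO_MAX_NUMA_NODES] = {0};

    assert(cpu_socket != NULL);

    for (size_t cpu = 0; cpu < n_cpus; cpu++) {
        if (cpu >= max_lcore) {
            r.lcores_skipped++;
            continue;
        }
        r.lcores_detected++;

        unsigned int s = cpu_socket[cpu];
        if (s < DEMO_MAX_NUMA_NODES && !seen[s]) {
            seen[s] = 1;       /* first accepted lcore on this socket */
            r.numa_nodes++;
        }
    }
    return r;
}

/* Assumed layout: first half of the CPUs on socket 0, second half on socket 1. */
static void
demo_fill_two_sockets(unsigned int *cpu_socket, size_t n_cpus)
{
    for (size_t cpu = 0; cpu < n_cpus; cpu++)
        cpu_socket[cpu] = (cpu < n_cpus / 2) ? 0 : 1;
}
```

With 256 CPUs in this assumed layout and a limit of 128, the model reports 128 detected lcores, 128 skipped, and a single NUMA node, which matches the "Detected NUMA nodes: 1" line. With a limit of 256 it reports both nodes.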

Looking further at config/x86/meson.build, shown below, the CPU limit can be changed at build time by passing -Dmax_lcores, which solves the problem.

config/x86/meson.build


commit 8ef09fdc506b76d505d90e064d1f73533388b640

Author: Juraj Linkeš <juraj.linkes@pantheon.tech>

Date: Tue Aug 17 12:45:56 2021 +0200

build: add optional NUMA and CPU counts detection

Add an option to automatically discover the host's NUMA and CPU counts

and use those values for a non cross-build.

Give users the option to override the per-arch default values or values

from cross files by specifying them on the command line with -Dmax_lcores

and -Dmax_numa_nodes.

Signed-off-by: Juraj Linkeš <juraj.linkes@pantheon.tech>

Reviewed-by: Honnappa Nagarahalli <honnappa.nagarahalli@arm.com>

Reviewed-by: David Christensen <drc@linux.vnet.ibm.com>

Acked-by: Bruce Richardson <bruce.richardson@intel.com>

diff --git a/config/x86/meson.build b/config/x86/meson.build

index 704ba3db56..29f3dea181 100644

--- a/config/x86/meson.build

+++ b/config/x86/meson.build

@@ -70,3 +70,5 @@ else

endif

dpdk_conf.set('RTE_CACHE_LINE_SIZE', 64)

+dpdk_conf.set('RTE_MAX_LCORE', 128)

+dpdk_conf.set('RTE_MAX_NUMA_NODES', 32)

Solution:

When building dpdk-testpmd, add the option -Dmax_lcores=256, as shown here:

meson build -Dmax_lcores=256
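Before choosing a value for -Dmax_lcores, it can help to compare the number of CPUs configured on the target host with the intended limit. The following is a minimal sketch of such a check, assuming Linux with glibc (where `sysconf(_SC_NPROCESSORS_CONF)` is available). The helper names `demo_host_cpus`, `demo_cpus_over_limit`, and `demo_report` are hypothetical and not part of DPDK.

```c
#include <assert.h>
#include <stdio.h>
#include <unistd.h>

/* Number of CPUs configured on this host (Linux/glibc sysconf extension). */
static long
demo_host_cpus(void)
{
    return sysconf(_SC_NPROCESSORS_CONF);
}

/* How many CPUs would fall outside a given max_lcores limit (0 if none). */
static long
demo_cpus_over_limit(long host_cpus, long max_lcores)
{
    assert(max_lcores > 0);
    return host_cpus > max_lcores ? host_cpus - max_lcores : 0;
}

/* Print a hint; returns 1 if the limit is too small, 0 otherwise. */
static int
demo_report(FILE *out, long host_cpus, long max_lcores)
{
    long over = demo_cpus_over_limit(host_cpus, max_lcores);

    if (over > 0) {
        fprintf(out,
                "%ld CPUs exceed max_lcores=%ld; consider -Dmax_lcores=%ld\n",
                over, max_lcores, host_cpus);
        return 1;
    }
    fprintf(out, "max_lcores=%ld covers all %ld CPUs\n",
            max_lcores, host_cpus);
    return 0;
}
```

Calling `demo_report(stdout, demo_host_cpus(), 128)` on the target machine would flag the situation described in this post before the build is started.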

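Summary:

DPDK is still tied to the machine it runs on: an increase in the number of cores or NUMA nodes can affect whether DPDK works, and the build has to be adapted accordingly. The good news is that DPDK anticipated this early in its design, so adjusting a build option is enough to solve the problem.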