ELK 9.2.4 installation and deployment

For local, personal testing only.

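usermod -aG sudo test

- Check that the container networks can reach each other, for example elk_default and bridge.

- Create the Logstash and Kibana users in Elasticsearch. Logstash talks to Elasticsearch with two users: logstash_user (a custom user that is allowed to create indices) and logstash_system (a reserved built-in user that cannot create indices).

- Pay attention to the SSL certificates.

- ChatGPT's answers on this setup are not reliable; verify them.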

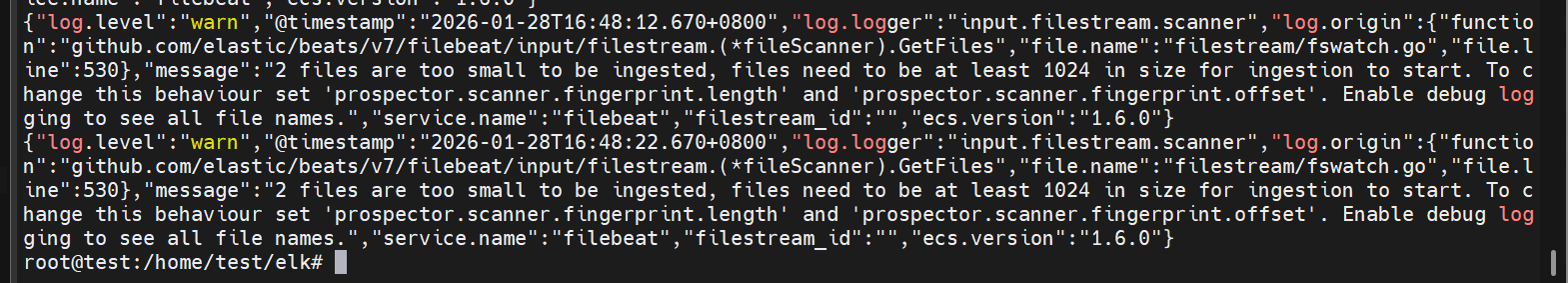

A log file must be at least 1024 bytes, otherwise Filebeat does not read it.

Test environment: Ubuntu 24 live server

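Approach

- Elasticsearch + Logstash + Kibana: Logstash collects the logs, Elasticsearch indexes and analyzes them, and Kibana displays the data. This is the architecture described on the official site, but it is rarely used in production.

- Elasticsearch + Logstash + Filebeat + Kibana: compared with the previous architecture, this adds Filebeat, a lightweight log shipper deployed on the client machines. Its advantage is that it collects logs with far fewer resources than Logstash, so this architecture is common in production. Its drawback is that logs can be lost when Logstash fails.

- Elasticsearch + Logstash + Filebeat + Redis + Kibana: an improved version of the previous architecture that adds middleware to avoid data loss. When Logstash fails, the logs stay in the middleware, and Logstash reads the backlog once it starts again.

This walkthrough uses the Elasticsearch + Logstash + Filebeat + Kibana architecture.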

静态路由

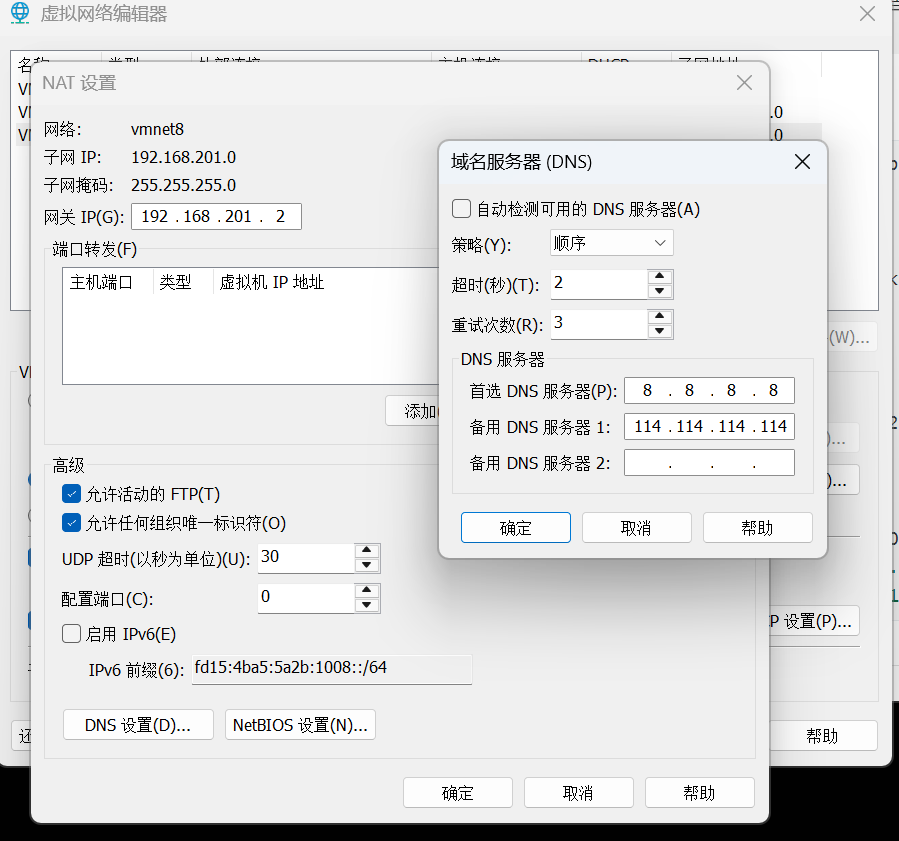

vmware 如果nat ping不通百度,尝试手动设置dns

sudo nano /etc/netplan/01-netcfg.yaml

network:

version: 2

renderer: networkd

ethernets:

ens33:

dhcp4: no

addresses:

- 192.168.201.134/24

nameservers:

addresses: [8.8.8.8, 1.1.1.1]

routes:

- to: 0.0.0.0/0

via: 192.168.201.2

metric: 100ctrl+o ,回车 保存文件

ctrl+X 退出

运行sudo netplan apply

Docker installation

sudo apt remove $(dpkg --get-selections docker.io docker-compose docker-compose-v2 docker-doc podman-docker containerd runc | cut -f1)

# Add Docker's official GPG key:
sudo apt update
sudo apt install ca-certificates curl
sudo install -m 0755 -d /etc/apt/keyrings
sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:
sudo tee /etc/apt/sources.list.d/docker.sources <<EOF
Types: deb
URIs: https://download.docker.com/linux/ubuntu
Suites: $(. /etc/os-release && echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}")
Components: stable
Signed-By: /etc/apt/keyrings/docker.asc
EOF

sudo apt update
sudo apt install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
sudo systemctl status docker

sudo apt-get update
sudo apt-get install docker-compose-plugin
docker compose version

# The commands in the rest of these notes use the standalone docker-compose binary
apt install docker-compose

Elasticsearch & Kibana installation

Install a multi-node Elasticsearch and Kibana cluster from the official images.

test@test:~$ mkdir elk
test@test:~$ cd elk

nano .env

# Password for the 'elastic' user (at least 6 characters)
# Must contain letters and digits; special characters are not allowed
ELASTIC_PASSWORD=Admin123

# Password for the 'kibana_system' user (at least 6 characters)
KIBANA_PASSWORD=Admin123

# Password for the 'logstash_system' user
LOGSTASH_PASSWORD=Admin123

# Version of Elastic products
STACK_VERSION=9.2.4

# Set the cluster name
CLUSTER_NAME=docker-cluster

# Set to 'basic' or 'trial' to automatically start the 30-day trial
LICENSE=basic
#LICENSE=trial

# Port to expose Elasticsearch HTTP API to the host
# Exposed to external hosts
ES_PORT=9200
#ES_PORT=127.0.0.1:9200

# Port to expose Kibana to the host
KIBANA_PORT=5601
#KIBANA_PORT=80

# Increase or decrease based on the available host memory (in bytes)
MEM_LIMIT=1073741824

# Project namespace (defaults to the current folder name if not set)
#COMPOSE_PROJECT_NAME=myproject

# KIBANA_ENCRYTION_KEY
# Generate a random 32-byte key:
# dd if=/dev/urandom bs=32 count=1 | base64
KIBANA_ENCRYTION_KEY=8y8zNLQkmSzJzQNfeBzzD3+CFGAofKAiUHf1PPm0+fU=
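The rules stated in the .env comments (passwords of at least 6 characters, letters and digits only, and a random 32-byte key) can be checked before starting the stack. The following is a minimal sketch; the function names valid_password and new_encryption_key are hypothetical helpers, not part of any Elastic tooling, and the "at least one letter and one digit" reading of the password rule is an assumption.

```shell
#!/usr/bin/env bash

# valid_password PW -> exit 0 if PW has at least 6 characters, consists only
# of letters and digits, and contains at least one of each (assumed rule).
valid_password() {
  local pw="$1"
  [ "${#pw}" -ge 6 ] || return 1
  case "$pw" in
    *[!A-Za-z0-9]*) return 1 ;;                      # special character found
  esac
  case "$pw" in *[0-9]*) ;; *) return 1 ;; esac      # needs a digit
  case "$pw" in *[A-Za-z]*) ;; *) return 1 ;; esac   # needs a letter
  return 0
}

# new_encryption_key -> print 32 random bytes, base64-encoded (44 characters).
new_encryption_key() {
  head -c 32 /dev/urandom | base64
}
```

For example, valid_password "Admin123" succeeds, while a value such as "Admin@123" is rejected because of the special character.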

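nano docker-compose.yml

This file defines an Elasticsearch cluster with three nodes (es01, es02, es03). The nodes communicate over SSL and discover each other to form the cluster. Kibana provides the web interface to the cluster and connects to Elasticsearch over a secured connection.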

# The setup service creates the certificates and sets the kibana_system and logstash_system passwords
version: "2.2"

services:
  setup:
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
    user: "0"
    command: >
      bash -c '
        if [ x${ELASTIC_PASSWORD} == x ]; then
          echo "Set the ELASTIC_PASSWORD environment variable in the .env file";
          exit 1;
        elif [ x${KIBANA_PASSWORD} == x ]; then
          echo "Set the KIBANA_PASSWORD environment variable in the .env file";
          exit 1;
        fi;
        if [ ! -f config/certs/ca.zip ]; then
          echo "Creating CA";
          bin/elasticsearch-certutil ca --silent --pem -out config/certs/ca.zip;
          unzip config/certs/ca.zip -d config/certs;
        fi;
        if [ ! -f config/certs/certs.zip ]; then
          echo "Creating certs";
          echo -ne \
          "instances:\n"\
          "  - name: es01\n"\
          "    dns:\n"\
          "      - es01\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es02\n"\
          "    dns:\n"\
          "      - es02\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es03\n"\
          "    dns:\n"\
          "      - es03\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          > config/certs/instances.yml;
          bin/elasticsearch-certutil cert --silent --pem -out config/certs/certs.zip --in config/certs/instances.yml --ca-cert config/certs/ca/ca.crt --ca-key config/certs/ca/ca.key;
          unzip config/certs/certs.zip -d config/certs;
        fi;
        echo "Setting file permissions";
        chown -R root:root config/certs;
        find . -type d -exec chmod 750 \{\} \;;
        find . -type f -exec chmod 640 \{\} \;;
        echo "Waiting for Elasticsearch availability";
        until curl -s --cacert config/certs/ca/ca.crt https://es01:9200 | grep -q "missing authentication credentials"; do sleep 30; done;
        echo "Setting kibana_system password";
        until curl -s -X POST --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://es01:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done;
        echo "Setting logstash_system password";
        until curl -s -X POST --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://es01:9200/_security/user/logstash_system/_password -d "{\"password\":\"${LOGSTASH_PASSWORD}\"}" | grep -q "^{}"; do sleep 5; done;
        echo "All done!";
      '
    healthcheck:
      test: ["CMD-SHELL", "[ -f config/certs/es01/es01.crt ]"]
      interval: 1s
      timeout: 5s
      retries: 120

  es01:
    depends_on:
      setup:
        condition: service_healthy
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata01:/usr/share/elasticsearch/data
    ports:
      - ${ES_PORT}:9200
    environment:
      - node.name=es01
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es02,es03
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es01/es01.key
      - xpack.security.http.ssl.certificate=certs/es01/es01.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es01/es01.key
      - xpack.security.transport.ssl.certificate=certs/es01/es01.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
      - xpack.ml.use_auto_machine_memory_percent=true
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es02:
    depends_on:
      - es01
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata02:/usr/share/elasticsearch/data
    environment:
      - node.name=es02
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es03
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es02/es02.key
      - xpack.security.http.ssl.certificate=certs/es02/es02.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es02/es02.key
      - xpack.security.transport.ssl.certificate=certs/es02/es02.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
      - xpack.ml.use_auto_machine_memory_percent=true
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es03:
    depends_on:
      - es02
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata03:/usr/share/elasticsearch/data
    environment:
      - node.name=es03
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es02
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es03/es03.key
      - xpack.security.http.ssl.certificate=certs/es03/es03.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es03/es03.key
      - xpack.security.transport.ssl.certificate=certs/es03/es03.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
      - xpack.ml.use_auto_machine_memory_percent=true
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  kibana:
    depends_on:
      es01:
        condition: service_healthy
      es02:
        condition: service_healthy
      es03:
        condition: service_healthy
    image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
    volumes:
      - certs:/usr/share/kibana/config/certs
      - kibanadata:/usr/share/kibana/data
    ports:
      - ${KIBANA_PORT}:5601
    environment:
      - SERVERNAME=kibana
      - ELASTICSEARCH_HOSTS=https://es01:9200
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
      - I18N_LOCALE=zh-CN
      # Maps to xpack.encryptedSavedObjects.encryptionKey in kibana.yml
      - XPACK_ENCRYPTEDSAVEDOBJECTS_ENCRYPTIONKEY=${KIBANA_ENCRYTION_KEY}
    mem_limit: ${MEM_LIMIT}
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s -I http://localhost:5601 | grep -q 'HTTP/1.1 302 Found'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

volumes:
  certs:
    driver: local
  esdata01:
    driver: local
  esdata02:
    driver: local
  esdata03:
    driver: local
  kibanadata:
    driver: local

docker-compose up -d

# Find the fingerprint printed on first startup
docker logs elk_es01_1

docker-compose down

# Stop and remove the containers, networks, and volumes
docker-compose down -v

Login: user elastic, password Admin123

Logstash installation

Logstash is a data collection and processing engine. It gathers data dynamically from many kinds of sources, filters, parses, enriches, and normalizes it, and then stores it for later use. First, install Logstash in a container; its version must match the rest of the stack.

https://www.elastic.co/docs/reference/logstash/docker-config

sudo su

docker pull docker.elastic.co/logstash/logstash:9.2.4

cd /home/test/elk
mkdir -p logstash
sudo chown -R 1000:1000 logstash
chmod -R 777 logstash

docker run --rm -it \
  -d \
  --name logstash \
  -p 9600:9600 \
  -p 5044:5044 \
  docker.elastic.co/logstash/logstash:9.2.4

Copy the files from the container into the ./logstash/ directory on the host:

docker cp logstash:/usr/share/logstash/config ./logstash/
docker cp logstash:/usr/share/logstash/pipeline ./logstash/

mkdir ./elasticsearch
sudo chown -R 1000:1000 ./elasticsearch

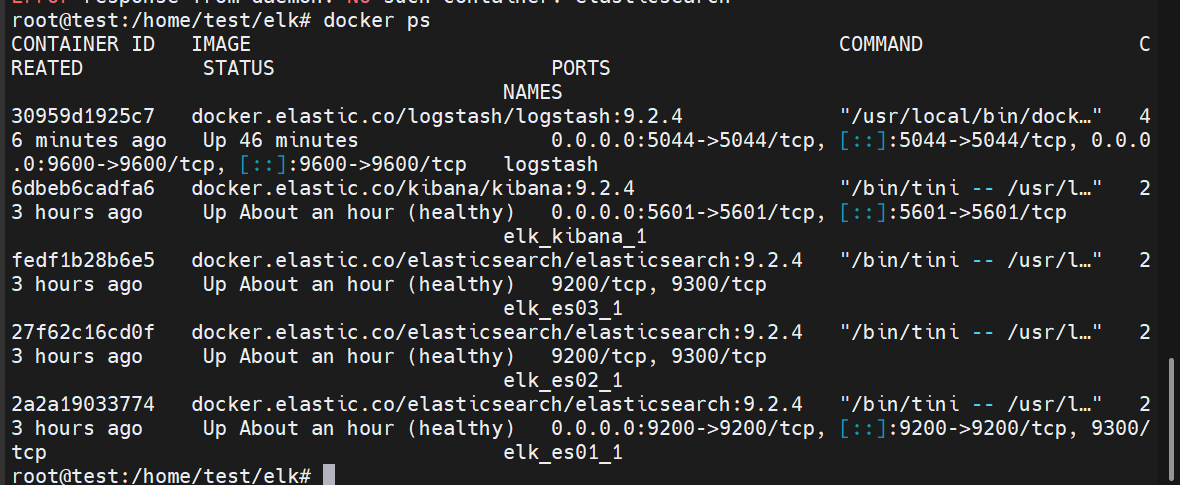

docker ps

# elk_es01_1

docker cp elk_es01_1:/usr/share/elasticsearch/config ./elasticsearch/

docker cp elk_es01_1:/usr/share/elasticsearch/data ./elasticsearch/

docker cp elk_es01_1:/usr/share/elasticsearch/plugins ./elasticsearch/

docker cp elk_es01_1:/usr/share/elasticsearch/logs ./elasticsearch/

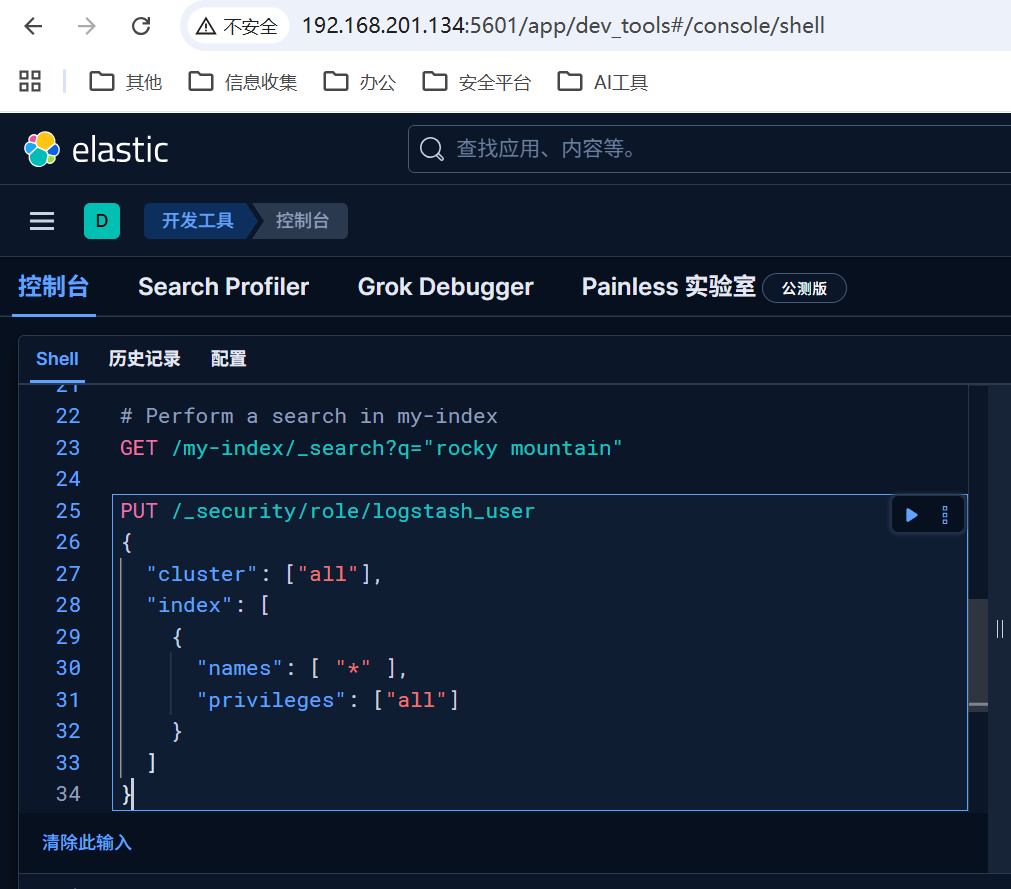
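The fingerprint command and the Logstash settings later in these notes read the CA certificate from ./logstash/config/certs/ca/ca.crt, but no step places it there. A minimal sketch of that step follows, assuming the docker cp above left the CA at ./elasticsearch/config/certs/ca/ca.crt; stage_ca_cert is a hypothetical helper name.

```shell
#!/usr/bin/env bash

# stage_ca_cert SRC_CONFIG_DIR DST_CONFIG_DIR
# Copy certs/ca/ca.crt from the copied Elasticsearch config directory into the
# Logstash config directory, creating the target folders as needed.
stage_ca_cert() {
  local src="$1/certs/ca/ca.crt" dst_dir="$2/certs/ca"
  if [ ! -f "$src" ]; then
    echo "CA certificate not found: $src" >&2
    return 1
  fi
  mkdir -p "$dst_dir" \
    && cp "$src" "$dst_dir/ca.crt" \
    && chmod 644 "$dst_dir/ca.crt"   # readable by the logstash user in the container
}
```

From /home/test/elk this would be called as stage_ca_cert ./elasticsearch/config ./logstash/config.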

In the Kibana Dev Tools console:

# Create a role and grant it privileges
PUT /_security/role/logstash_manager
{
  "cluster": ["manage_index_templates", "manage", "all"],
  "index": [
    {
      "names": [ "*" ],
      "privileges": ["write", "create_index", "manage"]
    }
  ]
}

# Create a new user and assign the role
POST /_security/user/logstash_user
{
  "password": "Admin123",
  "roles": ["logstash_manager"],
  "full_name": "Logstash User",
  "email": "logstash_user@example.com"
}

Inspecting es01 shows that it sits on the elk_default network, while a Logstash container started with a plain docker run normally ends up on the bridge network.

docker inspect elk_es01_1

"Networks": {
    "elk_default": {
        "IPAMConfig": null,
        "Links": null,
        "Aliases": [
            "8ef69f588d80",
            "es01"
        ],
        "DriverOpts": null,
        "GwPriority": 0,
        "NetworkID": "dff9f597e7a4e23237bd18573fd4ba1d7f02265c0198b0cd65dab4a7e6eae011",
        "EndpointID": "c678cb95fc2fd40037043b1b4f1ae2ab84321e470b9eae010a929be279481f50",
        "Gateway": "172.18.0.1",
        "IPAddress": "172.18.0.3",
        "MacAddress": "e2:b8:a7:00:fa:ca",
        "IPPrefixLen": 16,
        "IPv6Gateway": "",
        "GlobalIPv6Address": "",
        "GlobalIPv6PrefixLen": 0,
        "DNSNames": [
            "elk_es01_1",
            "8ef69f588d80",
            "es01"
        ]
    }
}
},

# Look up the IP addresses of es01, es02, and es03
root@test:/home/test/elk# docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' elk_es01_1
# 172.18.0.3
# 172.18.0.4
# 172.18.0.5

# Extract the fingerprint of the CA certificate
openssl x509 -in ./logstash/config/certs/ca/ca.crt -noout -fingerprint -sha256
#sha256 Fingerprint=32:A1:A2:24:D2:EA:05:53:A3:40:87:FF:79:42:21:41:B7:5A:56:E9:9E:71:04:9C:4E:33:81:11:54:15:BD:44
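The commented-out ca_trusted_fingerprint settings in the Logstash files use the same fingerprint without the label and without colons. A small sketch of that conversion, where normalize_fingerprint is a hypothetical helper name:

```shell
#!/usr/bin/env bash

# normalize_fingerprint LINE
# Strip the "...Fingerprint=" label and the colons from an openssl fingerprint
# line, and upper-case the remaining hex digits.
normalize_fingerprint() {
  local line="$1"
  line="${line#*=}"                      # drop everything up to and including '='
  printf '%s\n' "$line" | tr -d ':' | tr 'a-f' 'A-F'
}
```

It can be fed directly from openssl, for example normalize_fingerprint "$(openssl x509 -in ./logstash/config/certs/ca/ca.crt -noout -fingerprint -sha256)".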

nano ./logstash/config/logstash.yml

api.http.host: "0.0.0.0"
xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch.hosts: [ "https://es01:9200" ]
xpack.monitoring.elasticsearch.username: "logstash_system"
xpack.monitoring.elasticsearch.password: "Admin123"
xpack.monitoring.elasticsearch.ssl.certificate_authority: "/usr/share/logstash/config/certs/ca/ca.crt"
#xpack.monitoring.elasticsearch.ssl.ca_trusted_fingerprint: "32A1A224D2EA0553A34087FF79422141B75A56E99E71049C4E3381115415BD44"
xpack.monitoring.allow_legacy_collection: true

nano ./logstash/pipeline/logstash.conf

input {
  beats {
    port => 5044
    ssl_enabled => false
  }
}

filter {
  date {
    match => [ "@timestamp", "yyyy-MM-dd HH:mm:ss Z" ]
  }
  mutate {
    remove_field => ["@version", "agent", "cloud", "host", "input", "log", "tags", "_index", "_source", "ecs", "event"]
  }
}

output {
  elasticsearch {
    hosts => ["https://es01:9200"]
    index => "server-%{+YYYY.MM.dd}"
    ssl_enabled => true
    ssl_verification_mode => "full"
    ssl_certificate_authorities => "/usr/share/logstash/config/certs/ca/ca.crt"
    #ca_trusted_fingerprint => "32A1A224D2EA0553A34087FF79422141B75A56E99E71049C4E3381115415BD44"
    user => "logstash_user"
    password => "Admin123"
  }
}

Remove the original logstash container and start it again. The network must be specified this time, otherwise Logstash cannot reach Elasticsearch.

docker rm -f logstash

# Specify the network
docker run -it \
  -d \
  --name logstash \
  --network elk_default \
  -p 9600:9600 \
  -p 5044:5044 \
  -v /home/test/elk/logstash/config:/usr/share/logstash/config \
  -v /home/test/elk/logstash/pipeline:/usr/share/logstash/pipeline \
  docker.elastic.co/logstash/logstash:9.2.4

docker network connect bridge logstash

docker logs -f logstash

docker exec -it logstash /bin/bash

Filebeat installation

Filebeat is a lightweight data shipper. Compared with Logstash, the system resources it uses are almost negligible, and it is mainly used to address scenarios in which Logstash would lose data. First, pull the Filebeat image in Docker.

mkdir ./log
mkdir ./log/log
mkdir ./log/log/log

docker pull docker.elastic.co/beats/filebeat:9.2.4

docker run --rm -it \
  -d \
  --name filebeat \
  -e TZ=Asia/Shanghai \
  docker.elastic.co/beats/filebeat:9.2.4 \
  filebeat -e -c /usr/share/filebeat/filebeat.yml

Copy the files out of the container:

mkdir ./filebeat

docker cp filebeat:/usr/share/filebeat/filebeat.yml /home/test/elk/filebeat/
docker cp filebeat:/usr/share/filebeat/data ./filebeat/
docker cp filebeat:/usr/share/filebeat/logs ./filebeat/

sudo chown -R 1000:1000 ./filebeat
sudo chmod -R 777 /home/test/elk/filebeat
# Filebeat refuses to load a config file that group or others can write to
chmod go-w /home/test/elk/filebeat/
chmod go-w /home/test/elk/filebeat/filebeat.yml

Then edit the configuration.

docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' logstash
# Address of the logstash container inside Docker: 172.18.0.2

nano ./filebeat/filebeat.yml

filebeat.config:
  modules:
    path: ${path.config}/modules.d/*.yml
    reload.enabled: false

processors:
  - add_cloud_metadata: ~
  - add_docker_metadata: ~

output.logstash:
  enabled: true
  # The Logstash hosts
  hosts: ["172.18.0.2:5044"]

filebeat.inputs:
  - type: filestream
    enabled: true
    paths:
      # Path of the logs to collect, as seen inside the container
      - /usr/share/filebeat/target/*.log
    scan_frequency: 10s
    # Drop lines that match any of these patterns
    exclude_lines: ['HEAD', 'HTTP/1.1']
    multiline.pattern: '^[[:space:]]+(at|.{3})\b|Exception|捕获异常'
    multiline.negate: false
    multiline.match: after

#output.elasticsearch:
#  hosts: '${ELASTICSEARCH_HOSTS:elasticsearch:9200}'
#  username: '${ELASTICSEARCH_USERNAME:}'
#  password: '${ELASTICSEARCH_PASSWORD:}'

docker rm -f filebeat

docker run -it \
  -d \
  --name filebeat \
  --network elk_default \
  -e TZ=Asia/Shanghai \
  -v /home/test/elk/log:/usr/share/filebeat/target \
  -v /home/test/elk/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml \
  -v /home/test/elk/filebeat/data:/usr/share/filebeat/data \
  -v /home/test/elk/filebeat/logs:/usr/share/filebeat/logs \
  docker.elastic.co/beats/filebeat:9.2.4 \
  filebeat -e -c /usr/share/filebeat/filebeat.yml

# Attach the second network
docker network connect bridge filebeat

docker logs logstash | grep 5044

docker exec -it filebeat /bin/bash
bash-5.1$ filebeat test output

The option -v /home/test/elk/log:/usr/share/filebeat/target mounts the host directory that holds the logs to collect into the container.

A log file must be at least 1024 bytes.

nano test.sh

#!/bin/bash
# Path of the log file
LOG_FILE="/home/test/elk/log/999.log"

# Empty the existing log file
: > "$LOG_FILE"

# Error line to write; a sequence number is appended to each copy
ERROR_MESSAGE="2026-01-28 10:01:00 +0000 Exception: 捕获异常: ERROR: Something went wrong! This is an error message. Please check the system configuration and try again."
count_t=1

# Append lines until the file reaches 2048 bytes
while [ "$(wc -c < "$LOG_FILE")" -lt 2048 ]; do
  echo "$ERROR_MESSAGE $count_t" >> "$LOG_FILE"
  ((count_t++))
done

# Print the final size of the log file
echo "Log file created with size: $(wc -c < "$LOG_FILE") bytes"

chmod +x ./test.sh
./test.sh

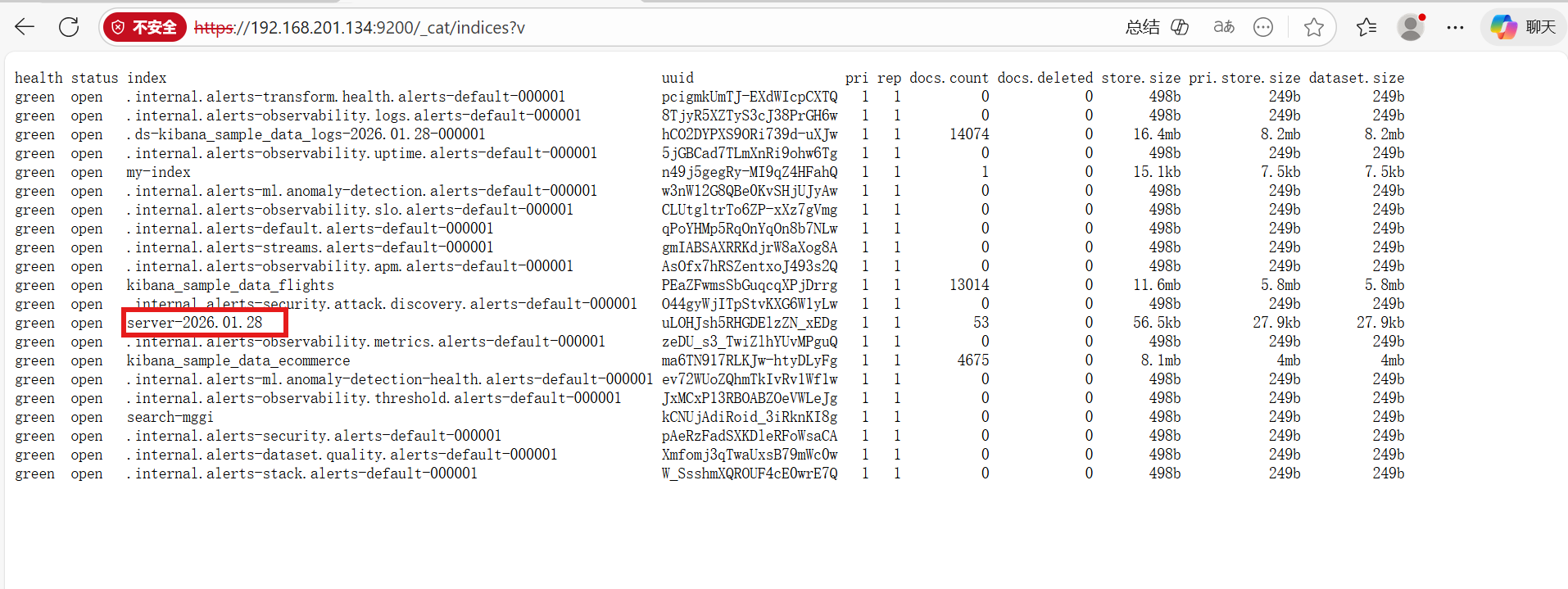

The data was sent successfully and the index was created.
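The pipeline writes to an index named after the pattern server-%{+YYYY.MM.dd}, where the date comes from the event timestamp in UTC. To know which index name to look for, the name can be computed as in this sketch; daily_index is a hypothetical helper name.

```shell
#!/usr/bin/env bash

# daily_index PREFIX [YYYY-MM-DD]
# Print PREFIX-YYYY.MM.dd for the given date, or for today's UTC date when no
# date is passed, mirroring the "server-%{+YYYY.MM.dd}" index pattern.
daily_index() {
  local prefix="$1" day="${2:-}"
  if [ -z "$day" ]; then
    day=$(date -u +%Y-%m-%d)
  fi
  printf '%s-%s\n' "$prefix" "${day//-/.}"   # swap the dashes for dots
}
```

For the sample log line dated 2026-01-28, daily_index server 2026-01-28 gives server-2026.01.28, which is the name to search for in Kibana's index management page.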

Common errors

docker logs filebeat 2>&1 | grep '\.log'

A log file must be at least 1024 bytes.
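When nothing arrives in Elasticsearch, a quick check of the file sizes in the mounted log directory rules this cause in or out. The sketch below assumes the 1024-byte minimum noted above; is_harvestable and report_small_logs are hypothetical helper names.

```shell
#!/usr/bin/env bash

# Minimum size taken from the note above.
MIN_BYTES=1024

# is_harvestable FILE -> exit 0 if FILE exists and has at least MIN_BYTES bytes.
is_harvestable() {
  local file="$1" size
  [ -f "$file" ] || return 1
  size=$(wc -c < "$file" | tr -d ' ')
  [ "$size" -ge "$MIN_BYTES" ]
}

# report_small_logs DIR -> list every *.log file in DIR that is still too small.
report_small_logs() {
  local dir="$1" f
  for f in "$dir"/*.log; do
    [ -e "$f" ] || continue            # the glob matched nothing
    is_harvestable "$f" || echo "too small: $f ($(wc -c < "$f" | tr -d ' ') bytes)"
  done
}
```

Running report_small_logs /home/test/elk/log lists the files that Filebeat would skip for now.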

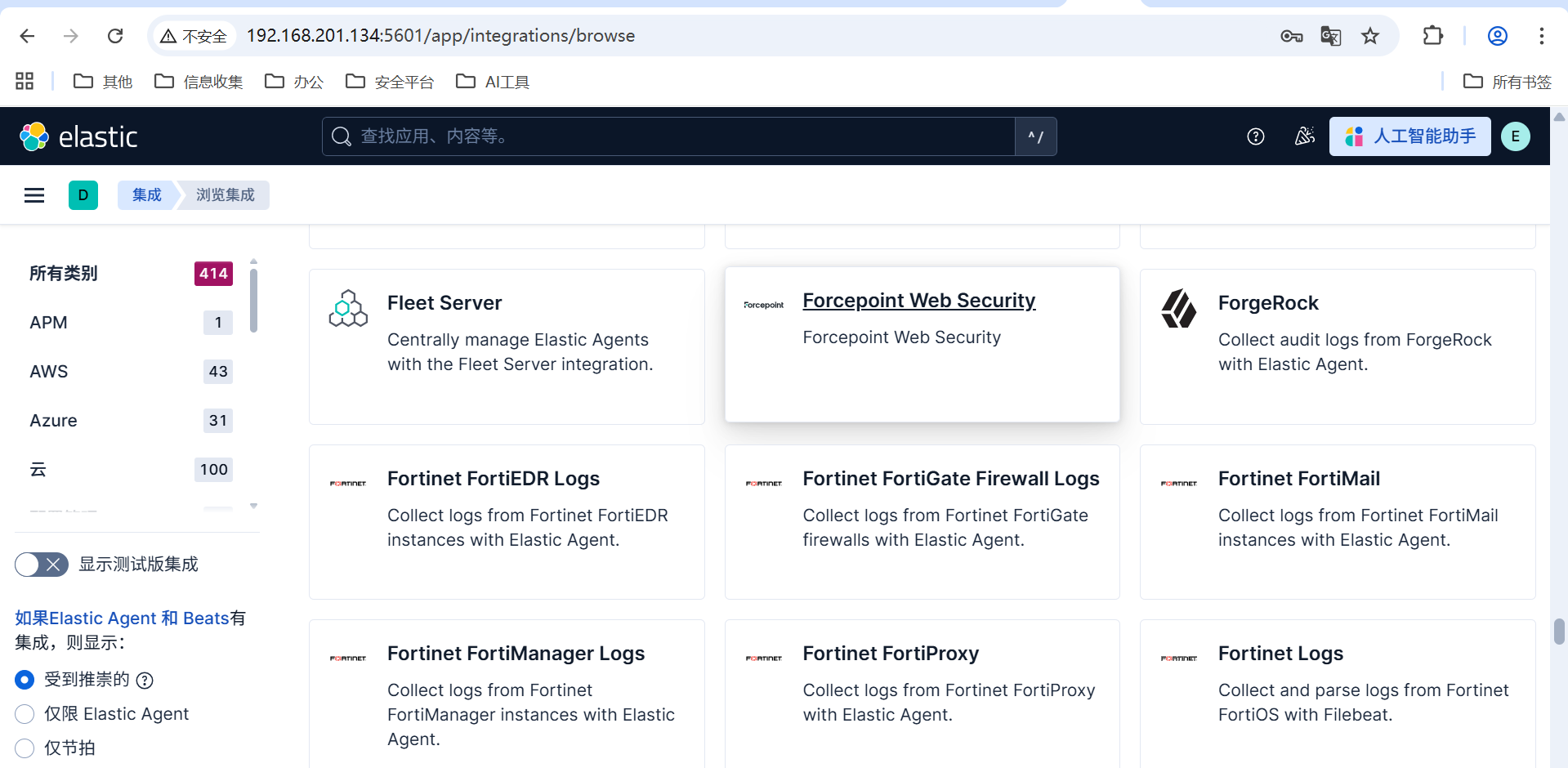

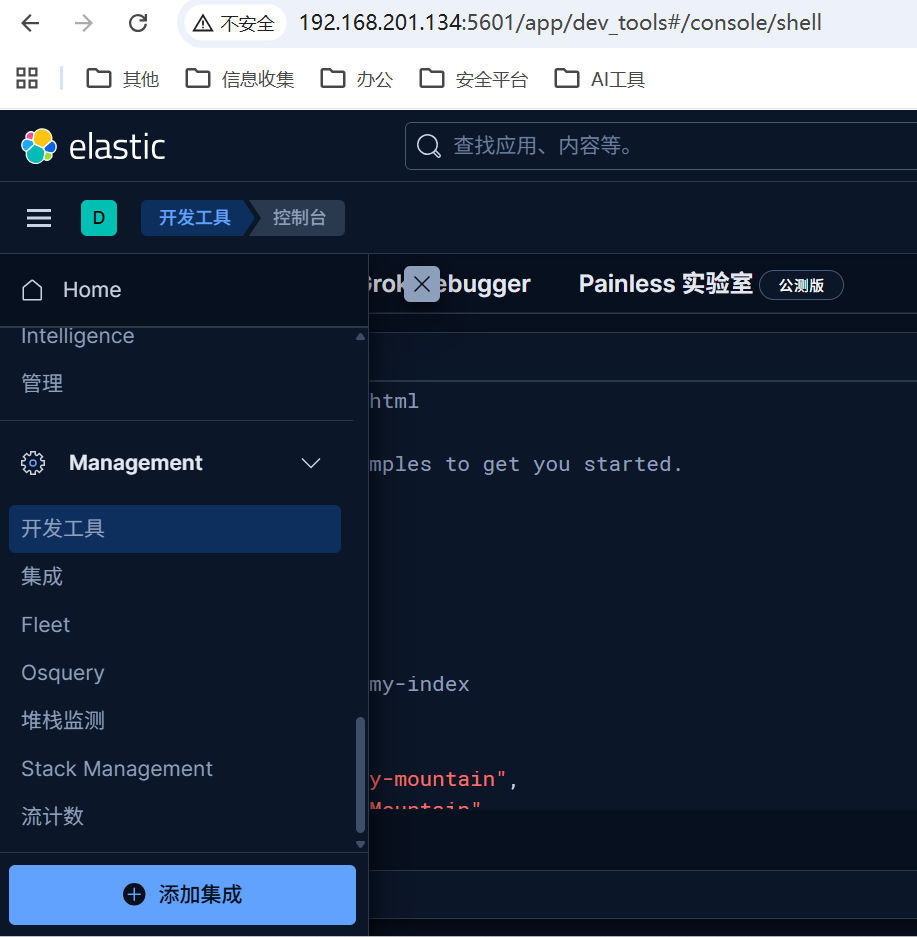

kibana使用

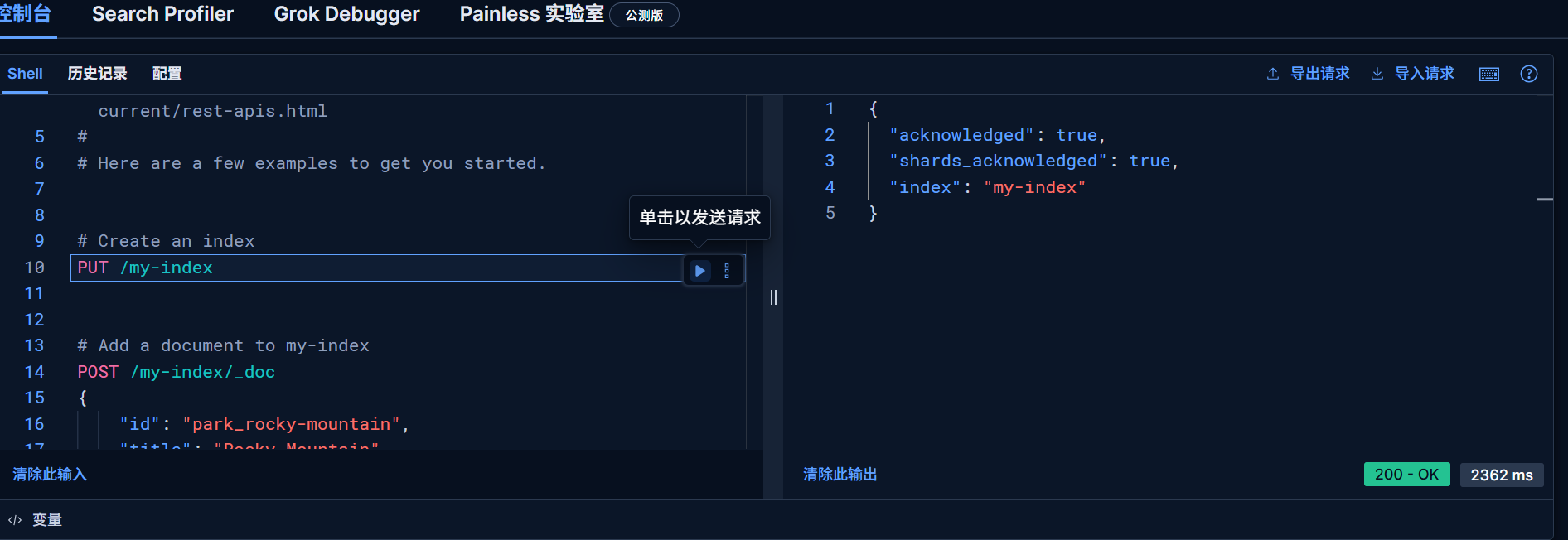

来到开发工具-控制台

发送请求创建索引

# Create an index

PUT /my-index

# Add a document to my-index

POST /my-index/_doc

{

"id": "park_rocky-mountain",

"title": "Rocky Mountain",

"description": "Bisected north to south by the Continental Divide, this portion of the Rockies has ecosystems varying from over 150 riparian lakes to montane and subalpine forests to treeless alpine tundra."

}

# Perform a search in my-index

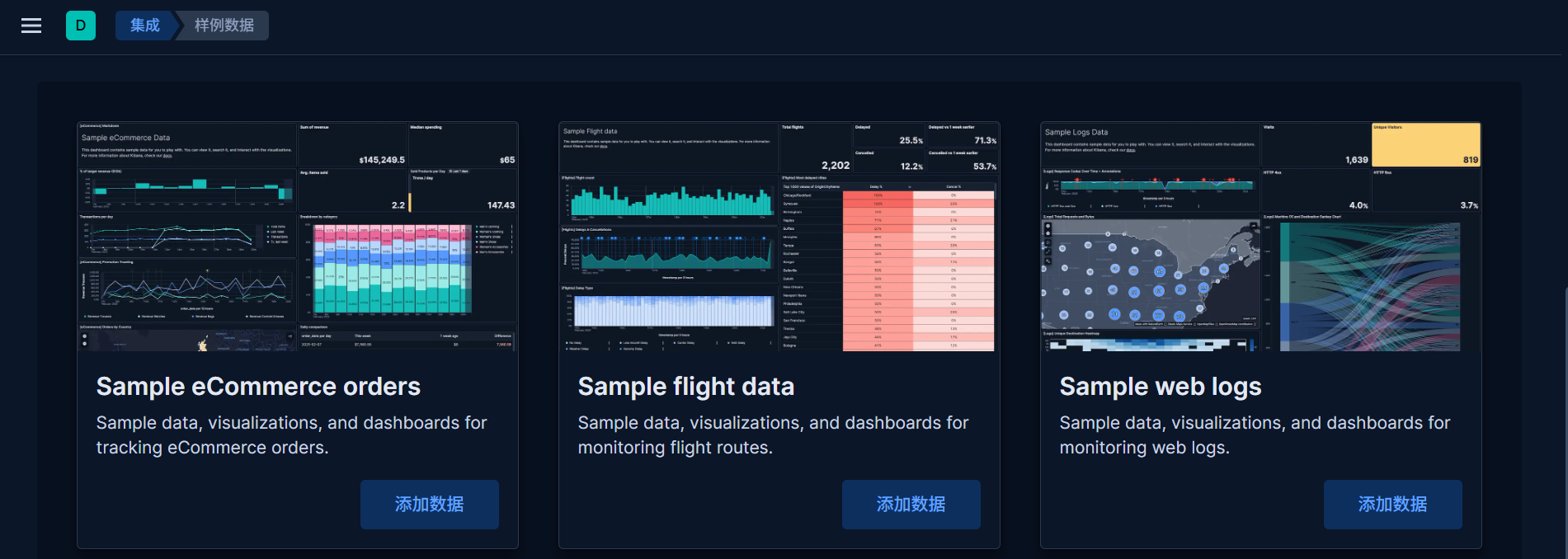

GET /my-index/_search?q="rocky mountain"在主页点击试用样例数据

添加三个样例数据

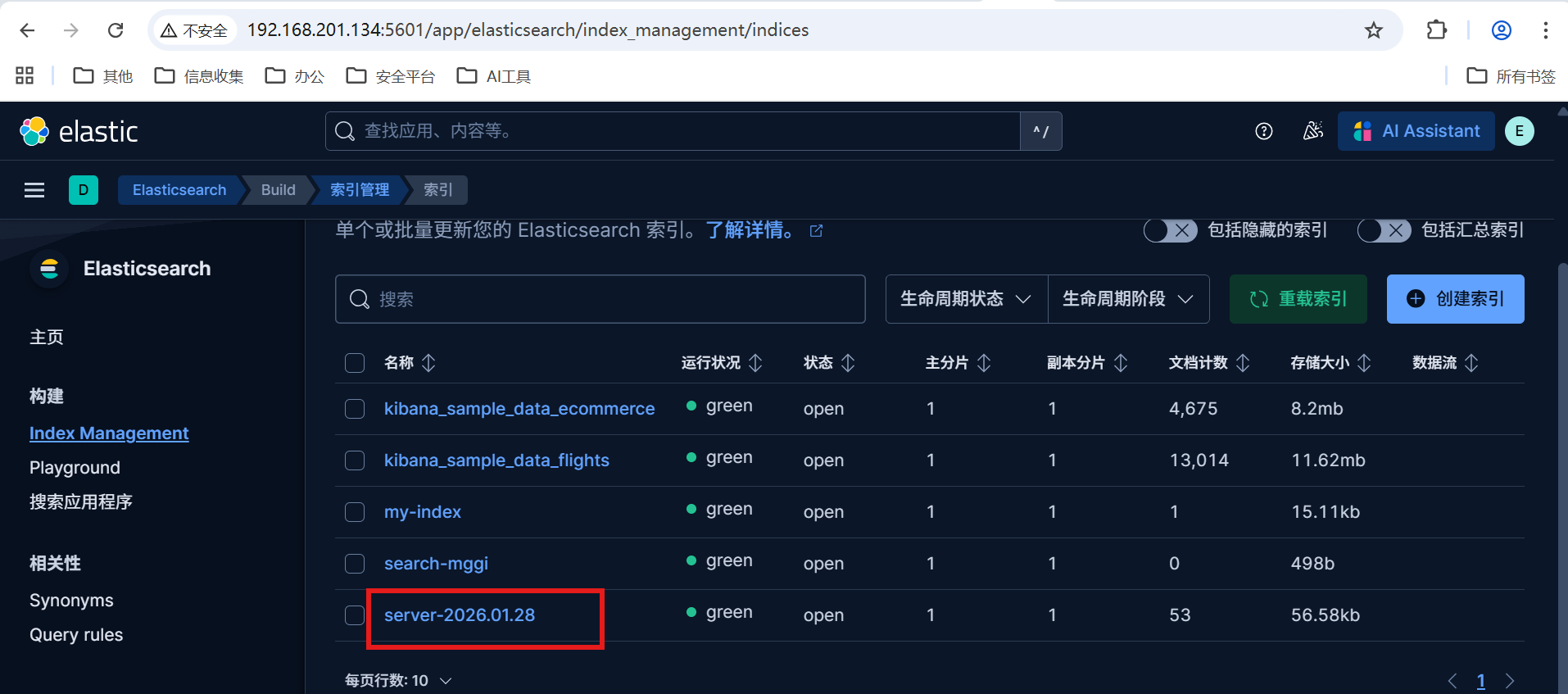

这样我们就把 Kibana 自带的三个数据集摄入到 Elasticsearch 中。上面的操作把数据加载到 Elasticsearch 中,并分别创建它们的 index patterns 也就是它们的索引模式。

我们可以在 index management查看我们filebeat->logstash->es的数据。

References

https://www.elastic.co/docs/deploy-manage/deploy/self-managed/install-elasticsearch-docker-compose