复制代码

# -*- coding: utf-8 -*-

import pyrealsense2 as rs

import numpy as np

import cv2

import torch

import torch.nn as nn

import torch.nn.functional as F

from torchvision import models, transforms

import urx

import time

import sys

from scipy.spatial.transform import Rotation as R

import open3d as o3d # 点云可视化核心库

import matplotlib.pyplot as plt

# ======================== 1. 个性化参数配置(必须修改!)========================

# 手眼标定矩阵(相机→UR5基坐标系,替换为你的标定结果)

EYE_IN_HAND_MATRIX = np.array([

[0.04422573, -0.14504633, 0.98802194, 0.00212291],

[-0.99891311, -0.00093512, 0.04656854, 0.10114924],

[0.00255313, -0.98941564, -0.14480217, 0.01464284],

[0.0, 0.0, 0.0, 1.0]

])

# UR5机械臂配置

UR5_IP = "192.168.56.100" # 替换为你的UR5 IP地址

SAFE_HEIGHT = 0.15 # 抓取安全高度(距桌面,单位:米)

MOVE_ACC = 0.1 # 运动加速度

MOVE_VEL = 0.1 # 运动速度

# Robotiq夹爪配置(数字输出端口,根据实际接线修改)

GRIPPER_PORT = 0 # 夹爪控制的数字输出端口

GRIPPER_OPEN_VAL = 255 # 夹爪全开值

GRIPPER_CLOSE_VAL = 0 # 夹爪全闭值

GRIPPER_DELAY = 2 # 夹爪开合等待时间(秒)

# Realsense相机内参(替换为你的相机标定内参)

CAM_FX = 614.543 # 焦距x

CAM_FY = 614.178 # 焦距y

CAM_CX = 314.967 # 光心x

CAM_CY = 245.923 # 光心y

# GraspNet配置

CONFIDENCE_THRESHOLD = 0.1 # 置信度阈值(可调)

GRASP_WIDTH = 0.085 # 抓取宽度(米)

GRASP_DEPTH = 0.04 # 抓取深度(米)

PRETRAINED_WEIGHTS_PATH = "graspnet_pretrained.pth" # 预训练权重路径

NEIGHBOR_SIZE = 3 # 深度采样邻域大小(3×3)

POINT_CLOUD_THRESHOLD = 10 # 点云邻域验证阈值(最少点数)

# ======================== 2. CPU版GraspNet模型(无GPU依赖)========================

class GraspNetCPU(nn.Module):

def __init__(self, num_angle=12, num_width=16):

super(GraspNetCPU, self).__init__()

# 骨干网络:ResNet50(加载预训练权重)

self.backbone = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)

self.backbone = nn.Sequential(*list(self.backbone.children())[:-2])

# 关键点分支(预测抓取点置信度)

self.key_point_head = nn.Sequential(

nn.Conv2d(2048, 512, kernel_size=1),

nn.ReLU(inplace=True),

nn.Conv2d(512, 1, kernel_size=1),

nn.Sigmoid()

)

# 姿态分支(预测角度+宽度)

self.pose_head = nn.Sequential(

nn.Conv2d(2048, 512, kernel_size=1),

nn.ReLU(inplace=True),

nn.Conv2d(512, num_angle + num_width, kernel_size=1)

)

self.num_angle = num_angle

self.num_width = num_width

self.device = torch.device("cpu")

self.to(self.device)

self.eval() # 固定为推理模式

def forward(self, x):

features = self.backbone(x)

grasp_confidence = self.key_point_head(features)

pose_pred = self.pose_head(features)

angle_pred = pose_pred[:, :self.num_angle, :, :]

width_pred = pose_pred[:, self.num_angle:, :, :]

angle_prob = F.softmax(angle_pred, dim=1)

return {

"grasp_confidence": grasp_confidence,

"angle_prob": angle_prob,

"width_pred": width_pred

}

def load_pretrained_weights(self, weight_path):

"""加载预训练权重"""

try:

checkpoint = torch.load(weight_path, map_location=self.device)

if "model_state_dict" in checkpoint:

self.load_state_dict(checkpoint["model_state_dict"], strict=False)

else:

self.load_state_dict(checkpoint, strict=False)

print(f"✅ 成功加载预训练权重:{weight_path}")

except FileNotFoundError:

print(f"⚠️ 未找到预训练权重 {weight_path},使用随机初始化权重")

except Exception as e:

print(f"⚠️ 加载权重失败:{e},使用随机初始化权重")

# ======================== 3. Realsense D415图像+点云采集 ========================

def capture_realsense_data():

"""采集RGB-D图像、深度缩放因子和点云(修复返回值+重试机制)"""

# 初始化Realsense管道

pipeline = rs.pipeline()

config = rs.config()

# 强制使用相机支持的分辨率/帧率

config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)

config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)

# 启动流(增加异常处理)

try:

profile = pipeline.start(config)

except rs.error as e:

print(f"❌ Realsense启动失败:{e}")

print("💡 建议检查:1.相机是否连接 2.USB接口是否为3.0 3.是否被其他程序占用")

pipeline.stop()

sys.exit(1)

# 获取深度缩放因子

depth_sensor = profile.get_device().first_depth_sensor()

depth_scale = depth_sensor.get_depth_scale()

# 配置点云生成器

pc = rs.pointcloud()

points = rs.points()

align = rs.align(rs.stream.color)

# 增加帧获取重试机制(最多重试10次)

color_frame = None

depth_frame = None

retry_count = 0

max_retry = 10

while retry_count < max_retry:

try:

# 设置超时时间(5000ms)

frames = pipeline.wait_for_frames(timeout_ms=5000)

aligned_frames = align.process(frames)

color_frame = aligned_frames.get_color_frame()

depth_frame = aligned_frames.get_depth_frame()

if color_frame and depth_frame:

break # 成功获取帧,退出重试

else:

retry_count += 1

print(f"⚠️ 第{retry_count}次重试获取帧(未获取到有效帧)")

time.sleep(0.5)

except rs.error as e:

retry_count += 1

print(f"⚠️ 第{retry_count}次重试获取帧(错误:{e})")

time.sleep(0.5)

# 最终检查帧是否有效

if not color_frame or not depth_frame:

print("❌ 多次重试后仍未获取到相机帧,退出程序")

pipeline.stop()

sys.exit(1)

# 转换为numpy数组

color_img = np.asanyarray(color_frame.get_data())

depth_img = np.asanyarray(depth_frame.get_data())

# 生成点云(关键补充:返回pcd)

points = pc.calculate(depth_frame)

vtx = np.asanyarray(points.get_vertices()).view(np.float32).reshape(-1, 3) # (N,3) 相机坐标系点云

pcd = o3d.geometry.PointCloud()

pcd.points = o3d.utility.Vector3dVector(vtx)

# 给点云上色

color_data = np.asanyarray(color_frame.get_data()).reshape(-1, 3) / 255.0

pcd.colors = o3d.utility.Vector3dVector(color_data)

# 停止管道

pipeline.stop()

return color_img, depth_img, depth_scale, pcd # 返回4个值,匹配调用

# ======================== 4. 辅助函数:手眼矩阵验证 ========================

def validate_hand_eye_matrix():

"""验证手眼矩阵精度(可选:替换为实际标定点)"""

# 假设标定板中心在相机坐标系的已知位置(需根据实际场景修改)

cam_calib = np.array([0.1, 0.0, 0.5, 1.0])

base_calib = EYE_IN_HAND_MATRIX @ cam_calib

print(f"\n📌 手眼矩阵验证:")

print(f" 相机坐标系标定点:{cam_calib[:3]} m")

print(f" 转换后基坐标系点:{base_calib[:3]} m")

print(f" 💡 若偏差>0.01m,请重新标定手眼矩阵")

# ======================== 4. 抓取位姿检测与转换 ========================

def detect_and_convert_grasp_pose(color_img, depth_img, depth_scale, model, pcd):

"""检测抓取位姿并转换到UR5基坐标系(优化深度采样+点云验证)"""

# 图像预处理(增强对比度)

class EnhanceContrast:

def __call__(self, img):

from PIL import ImageEnhance

enhancer = ImageEnhance.Contrast(img)

return enhancer.enhance(1.5)

transform = transforms.Compose([

transforms.ToPILImage(),

EnhanceContrast(),

transforms.Resize((480, 640)),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

input_tensor = transform(color_img).unsqueeze(0).to(model.device)

# CPU推理(关闭梯度)

with torch.no_grad():

output = model(input_tensor)

# 解析检测结果

conf_map = output["grasp_confidence"].squeeze().cpu().numpy()

angle_prob = output["angle_prob"].squeeze().cpu().numpy()

# 优化:置信度图上采样,减少像素还原误差

conf_map_upsampled = cv2.resize(conf_map, (640, 480), interpolation=cv2.INTER_CUBIC)

# 找置信度最高的抓取点(支持次优选择)

def get_best_grasp_point(conf_map_input, skip_idx=None):

conf_flat = conf_map_input.flatten()

if skip_idx is not None:

conf_flat[skip_idx] = 0 # 屏蔽已选点

max_conf = np.max(conf_flat)

max_conf_idx = np.unravel_index(np.argmax(conf_flat), conf_map_input.shape)

return max_conf, max_conf_idx, conf_flat

max_conf, max_conf_idx, conf_flat = get_best_grasp_point(conf_map)

grasp_h, grasp_w = max_conf_idx

skip_index = None

# 检查置信度

if max_conf < CONFIDENCE_THRESHOLD:

print(f"⚠️ 最高置信度 {max_conf:.3f} 低于阈值 {CONFIDENCE_THRESHOLD},强制使用该点")

while True:

# 优化:浮点还原像素坐标(避免整数误差)

grasp_x_px = grasp_w * 32.0

grasp_y_px = grasp_h * 32.0

# 边界裁剪

grasp_x_px = np.clip(grasp_x_px, 0, depth_img.shape[1]-1)

grasp_y_px = np.clip(grasp_y_px, 0, depth_img.shape[0]-1)

# 优化1:邻域均值滤波采样深度(替换单像素)

grasp_y_int = int(grasp_y_px)

grasp_x_int = int(grasp_x_px)

# 计算邻域范围

half_neighbor = NEIGHBOR_SIZE // 2

y_min = max(0, grasp_y_int - half_neighbor)

y_max = min(depth_img.shape[0]-1, grasp_y_int + half_neighbor)

x_min = max(0, grasp_x_int - half_neighbor)

x_max = min(depth_img.shape[1]-1, grasp_x_int + half_neighbor)

# 提取邻域有效深度

neighbor_depth = depth_img[y_min:y_max+1, x_min:x_max+1]

valid_depth = neighbor_depth[neighbor_depth > 0] # 过滤Realsense无效深度(0值)

# 统计场景有效深度中位数(替代固定默认值)

valid_depth_all = depth_img[depth_img > 0] * depth_scale

if len(valid_depth_all) == 0:

print("❌ 无有效深度值,退出")

sys.exit(1)

depth_median = np.median(valid_depth_all)

# 确定深度值

if len(valid_depth) == 0:

print(f"❌ 抓取点邻域无有效深度,使用场景中位数 {depth_median:.3f}m")

depth_value = depth_median

else:

depth_value = np.mean(valid_depth) * depth_scale

# 优化2:深度过滤(用场景中位数替代固定0.2m)

if depth_value < 0.01 or depth_value > 3.0:

print(f"❌ 无效深度值:{depth_value:.3f}m,使用场景中位数 {depth_median:.3f}m")

depth_value = depth_median

# 像素坐标→相机坐标系

cam_x = (grasp_x_px - CAM_CX) * depth_value / CAM_FX

cam_y = (grasp_y_px - CAM_CY) * depth_value / CAM_FY

cam_z = depth_value

# 优化3:点云邻域验证(避免飘到无点云区域)

pcd_points = np.asarray(pcd.points)

distances = np.linalg.norm(pcd_points - [cam_x, cam_y, cam_z], axis=1)

near_points = distances[distances < 0.01] # 0.01m范围内点云数量

if len(near_points) >= POINT_CLOUD_THRESHOLD:

# 点云验证通过,退出循环

break

else:

# 点云验证失败,选择次优置信度点

print(f"⚠️ 抓取点周围无足够点云(仅{len(near_points)}个),切换次优置信度点")

if skip_index is None:

skip_index = np.argmax(conf_flat)

else:

conf_flat[skip_index] = 0

skip_index = np.argmax(conf_flat)

# 获取次优点

max_conf, max_conf_idx, _ = get_best_grasp_point(conf_map, skip_index)

grasp_h, grasp_w = max_conf_idx

# 防止无限循环

if max_conf < CONFIDENCE_THRESHOLD / 2:

print("❌ 无有效抓取点,退出")

sys.exit(1)

# 解析抓取角度(0-180°)

angle_idx = np.argmax(angle_prob[:, grasp_h, grasp_w])

grasp_angle = angle_idx * 15 # 12类→每类15°

# 相机坐标系→UR5基坐标系(手眼标定转换)

cam_pose = np.array([cam_x, cam_y, cam_z, 1.0])

base_pose = EYE_IN_HAND_MATRIX @ cam_pose

# 构造抓取姿态(RPY)

rot = R.from_euler('z', grasp_angle, degrees=True)

rpy = rot.as_euler('xyz', degrees=True)

# 输出调试信息

print(f"\n📌 抓取检测结果:")

print(f" 像素坐标:({grasp_x_px:.1f}, {grasp_y_px:.1f})")

print(f" 置信度:{max_conf:.3f}")

print(f" 相机坐标系:({cam_x:.3f}, {cam_y:.3f}, {cam_z:.3f}) m")

print(f" UR5基坐标系:({base_pose[0]:.3f}, {base_pose[1]:.3f}, {base_pose[2]:.3f}) m")

print(f" 抓取角度:{grasp_angle}°")

print(f" 点云验证:{len(near_points)}个邻近点(阈值{POINT_CLOUD_THRESHOLD})")

return {

"position": (base_pose[0], base_pose[1], base_pose[2]),

"rpy": (rpy[0], rpy[1], rpy[2]),

"angle": grasp_angle,

"confidence": max_conf,

"cam_position": (cam_x, cam_y, cam_z) # 相机坐标系位置(用于点云可视化)

}

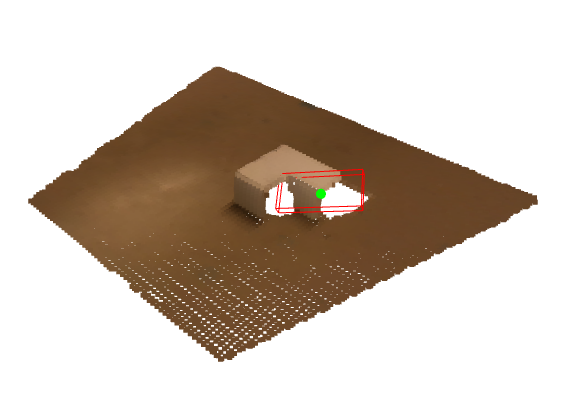

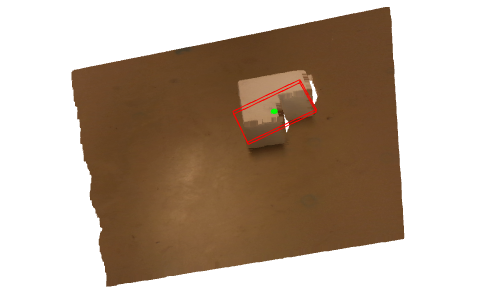

# ======================== 5. 点云+抓取位姿可视化 ========================

def visualize_grasp_pose(pcd, grasp_pose):

"""可视化点云和抓取位姿(适配所有Open3D版本)"""

# 1. 复制点云(避免修改原始数据)

pcd_vis = o3d.geometry.PointCloud()

pcd_vis.points = o3d.utility.Vector3dVector(np.asarray(pcd.points))

if pcd.colors: # 保留彩色点云

pcd_vis.colors = o3d.utility.Vector3dVector(np.asarray(pcd.colors))

# 2. 提取抓取位姿参数

cam_x, cam_y, cam_z = grasp_pose["cam_position"]

grasp_angle = grasp_pose["angle"]

# 3. 生成抓取框的8个顶点(相机坐标系)

center = np.array([cam_x, cam_y, cam_z])

rot_mat = R.from_euler('z', grasp_angle, degrees=True).as_matrix()

half_width = GRASP_WIDTH / 2

half_depth = GRASP_DEPTH / 2

vertices = np.array([

[half_width, -half_depth, 0], [half_width, half_depth, 0],

[-half_width, half_depth, 0], [-half_width, -half_depth, 0],

[half_width, -half_depth, -0.01], [half_width, half_depth, -0.01],

[-half_width, half_depth, -0.01], [-half_width, -half_depth, -0.01]

])

vertices = (rot_mat @ vertices.T).T + center

# 4. 创建抓取框(兼容低版本Open3D)

grasp_box = o3d.geometry.LineSet()

grasp_box.points = o3d.utility.Vector3dVector(vertices)

lines = [

[0,1], [1,2], [2,3], [3,0], # 顶面

[4,5], [5,6], [6,7], [7,4], # 底面

[0,4], [1,5], [2,6], [3,7] # 竖边

]

grasp_box.lines = o3d.utility.Vector2iVector(lines)

colors = [[1, 0, 0] for _ in range(len(lines))]

grasp_box.colors = o3d.utility.Vector3dVector(colors)

# 5. 创建抓取中心点(兼容所有版本)

center_sphere = o3d.geometry.TriangleMesh.create_sphere(radius=0.005)

center_sphere.translate(center)

center_sphere.paint_uniform_color([0, 1, 0]) # 绿色

# 6. 可视化(最稳定的兼容写法)

o3d.visualization.draw_geometries([pcd_vis, grasp_box, center_sphere],

window_name="GraspNet 点云+抓取位姿可视化",

width=800, height=600)

# ======================== 6. 置信度热力图可视化 ========================

def visualize_confidence_map(conf_map):

"""可视化置信度热力图"""

plt.figure(figsize=(8, 6))

plt.imshow(conf_map, cmap='jet')

plt.colorbar(label='Grasp Confidence')

plt.title('GraspNet Confidence Map')

plt.xlabel('Width (scaled)')

plt.ylabel('Height (scaled)')

# 标记最高置信度点

max_idx = np.unravel_index(np.argmax(conf_map), conf_map.shape)

plt.scatter(max_idx[1], max_idx[0], c='red', s=50, marker='*', label='Max Confidence')

plt.legend()

plt.tight_layout()

plt.show()

# ======================== 7. UR5+Robotiq控制 ========================

def control_ur5_grasp(ur5_ip, grasp_pose):

"""控制UR5执行抓取动作"""

# 连接UR5机械臂

try:

rob = urx.Robot(ur5_ip)

rob.set_tcp((0.0, 0.0, 0.1, 0.0, 0.0, 0.0)) # 设置工具坐标系(夹爪)

rob.set_payload(0.5) # 设置负载(kg)

print(f"\n✅ 成功连接UR5:{ur5_ip}")

except Exception as e:

print(f"\n❌ 连接UR5失败:{e}")

sys.exit(1)

# 提取位姿

x, y, z = grasp_pose["position"]

roll, pitch, yaw = grasp_pose["rpy"]

try:

# 步骤1:移动到抓取上方安全位置

safe_pose = (x, y, z + SAFE_HEIGHT, roll, pitch, yaw)

print(f"\n📌 移动到安全位置:{safe_pose}")

rob.movej(safe_pose, acc=MOVE_ACC, vel=MOVE_VEL)

time.sleep(1)

# 步骤2:打开夹爪

print("📌 打开夹爪")

rob.send_program(f"set_digital_out({GRIPPER_PORT}, {GRIPPER_OPEN_VAL})")

time.sleep(GRIPPER_DELAY)

# 步骤3:移动到抓取位置

grasp_pose_full = (x, y, z, roll, pitch, yaw)

print(f"📌 移动到抓取位置:{grasp_pose_full}")

rob.movej(grasp_pose_full, acc=0.05, vel=0.05) # 低速接近

time.sleep(1)

# 步骤4:关闭夹爪抓取物品

print("📌 关闭夹爪抓取物品")

rob.send_program(f"set_digital_out({GRIPPER_PORT}, {GRIPPER_CLOSE_VAL})")

time.sleep(GRIPPER_DELAY)

# 步骤5:抬升机械臂

print("📌 抬升机械臂")

rob.movej(safe_pose, acc=MOVE_ACC, vel=MOVE_VEL)

time.sleep(1)

print("\n🎉 抓取完成!")

except Exception as e:

print(f"\n❌ 抓取过程出错:{e}")

finally:

# 断开连接

rob.close()

# ======================== 8. 主流程 ========================

if __name__ == "__main__":

print("===== UR5+Realsense+GraspNet-v2 精准抓取 =====\n")

# 1. 验证手眼矩阵(可选)

validate_hand_eye_matrix()

# 2. 初始化GraspNet模型

print("\n🔧 初始化GraspNet-v2抓取检测模型...")

grasp_model = GraspNetCPU()

grasp_model.load_pretrained_weights(PRETRAINED_WEIGHTS_PATH)

# 3. 采集相机图像和点云

print("\n📷 采集相机图像和点云(深度滤波中)...")

color_img, depth_img, depth_scale, pcd = capture_realsense_data()

# 4. 检测最优抓取位姿(传入pcd用于点云验证)

print("\n🤖 检测最优抓取位姿(优化深度采样+点云验证)...")

grasp_pose = detect_and_convert_grasp_pose(color_img, depth_img, depth_scale, grasp_model, pcd)

# 5. 可视化结果

print("\n🎨 可视化点云和抓取位姿...")

visualize_grasp_pose(pcd, grasp_pose)

# 6. 显示RGB图像+抓取标记

grasp_x_vis = int((grasp_pose["cam_position"][0] * CAM_FX / grasp_pose["cam_position"][2]) + CAM_CX)

grasp_y_vis = int((grasp_pose["cam_position"][1] * CAM_FY / grasp_pose["cam_position"][2]) + CAM_CY)

cv2.circle(color_img, (grasp_x_vis, grasp_y_vis), 8, (0,255,0), -1)

cv2.putText(color_img, f"Conf: {grasp_pose['confidence']:.3f}", (20, 30), cv2.FONT_HERSHEY_SIMPLEX, 1, (0,0,255), 2)

cv2.putText(color_img, f"Angle: {grasp_pose['angle']}°", (20, 70), cv2.FONT_HERSHEY_SIMPLEX, 1, (0,0,255), 2)

cv2.imshow("Grasp Detection Result (RGB)", color_img)

cv2.waitKey(3000) # 显示3秒

cv2.destroyAllWindows()

# 7. 执行抓取(确认后执行)

input("\n⚠️ 请确保机械臂工作空间无障碍物,按Enter键执行抓取...")

control_ur5_grasp(UR5_IP, grasp_pose)