I. Preface

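I seem to be using this stuff more and more, so I want to learn it properly. I haven't worked out how to go about it yet and I have no outline. I bought two books: one is 深度学习入门 (an introduction to deep learning) by a Japanese author, and the other is the "flower book" (Goodfellow et al., Deep Learning). I'm also going back to Gatech to take an online course, CS7643.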

CS 7643: Deep Learning | Online Master of Science in Computer Science (OMSCS)

II. What deep learning covers

The outline looks roughly like this.

https://omscs.gatech.edu/sites/default/files/documents/2024/Syllabi-CS%207643%202024-1.pdf

Week 1:

Module 1: Introduction to Neural Networks. Go through Welcome/Getting Started. Lesson 1: Linear Classifiers and Gradient Descent. Readings: DL book: Linear Algebra background; DL book: Probability background; DL book: ML Background; LeCun et al., Nature '15; Shannon, 1956.

Week 2:

Lesson 2: Neural Networks. Readings: DL book: Deep Feedforward Nets; Matrix calculus for deep learning; Automatic Differentiation Survey, Baydin et al.

Week 3:

Lesson 3: Optimization of Deep Neural Networks. Readings: DL book: Regularization for DL; DL book: Optimization for Training Deep Models.

Week 4:

Module 2: Convolutional Neural Networks. (OPTIONAL) Lesson 6: Data Wrangling. Lesson 5: Convolution and Pooling Layers. Readings: Preprocessing for deep learning: from covariance matrix to image whitening; cs231n on preprocessing; DL book: Convolutional Networks. Optional: Khetarpal, Khimya, et al. "Reevaluate: Reproducibility in evaluating reinforcement learning algorithms." (2018). See related blog post.

Week 5:

Lesson 6: Convolutional Neural Network Architectures

Week 6:

Lesson 7: Visualization. Lesson 8: PyTorch and Scalable Training. Readings: Understanding Neural Networks Through Deep Visualization; Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization.

Week 7:

Lesson 9: Advanced Computer Vision Architectures. Lesson 10: Bias and Fairness. Readings: Fully Convolutional Networks for Semantic Segmentation.

Week 8:

Module 3: Structured Neural Representations. Lesson 11: Introduction to Structured Representations. Lesson 12: Language Models. Readings: DL Book: Sequential Modeling and Recurrent Neural Networks (RNNs).

Week 9:

Lesson 13: Embeddings. Readings: word2vec tutorial; word2vec paper; StarSpace paper.

Week 10:

Lesson 14: Neural Attention Models. Readings: Attention is all you need; BERT Paper; The Illustrated Transformer.

Week 11:

Lesson 15: Neural Machine Translation. Lesson 16: Automated Speech Recognition (ASR).

Week 12:

Module 4: Advanced Topics. Lesson 17: Deep Reinforcement Learning. Readings: MDP Notes (courtesy Byron Boots); Notes on Q-learning (courtesy Byron Boots); Policy iteration notes (courtesy Byron Boots); Policy gradient notes (courtesy Byron Boots).

Week 13:

Lesson 18: Unsupervised and Semi-Supervised Learning

Week 14:

Lesson 19: Generative Models. Readings: Tutorial on Variational Autoencoder; NIPS 2016 Tutorial: Generative Adversarial Networks.

As the outline shows, the core is still neural networks.

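Next come the main network architectures. **Convolutional neural networks (CNN)**: mainly used for image processing and computer vision tasks. **Recurrent neural networks (RNN)** and their variants (such as LSTM and GRU): mainly used for sequence data, such as time series analysis and natural language processing. **Generative adversarial networks (GAN)**: used to generate realistic data samples, such as images. **Autoencoders**: used for unsupervised learning and feature extraction.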

That's roughly it. It doesn't look like all that much...
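To make two of the architectures above a bit more concrete, here is a minimal NumPy sketch of the operation each one is built around: a CNN slides a small kernel over an image, and an RNN carries a hidden state from one time step to the next. The function names (`conv2d_valid`, `rnn_step`), the toy image, and the random weights are all my own assumptions for illustration; none of this comes from the course material.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding.

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Each output value is a weighted sum of one local patch.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def rnn_step(h_prev, x_t, W_h, W_x, b):
    """One step of a plain RNN: mix the previous state with the new input."""
    return np.tanh(W_h @ h_prev + W_x @ x_t + b)

# CNN idea: this kernel responds where brightness rises from left to right.
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1]], dtype=float)
kernel = np.array([[-1.0, 1.0]])
edges = conv2d_valid(image, kernel)
print(edges)  # the middle column marks the edge

# RNN idea: the same weights are reused at every time step.
rng = np.random.default_rng(0)
hidden, features = 3, 2           # arbitrary toy sizes
W_h = rng.normal(scale=0.5, size=(hidden, hidden))
W_x = rng.normal(scale=0.5, size=(hidden, features))
b = np.zeros(hidden)
sequence = rng.normal(size=(5, features))  # 5 time steps of made-up data

h = np.zeros(hidden)
for x_t in sequence:
    h = rnn_step(h, x_t, W_h, W_x, b)
print(h.shape)
```

A real CNN learns many kernels and stacks them with pooling, and LSTM/GRU cells add gates on top of the plain recurrence shown here, so treat this as the bare skeleton only.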

III. The relationship between AI, machine learning, and deep learning

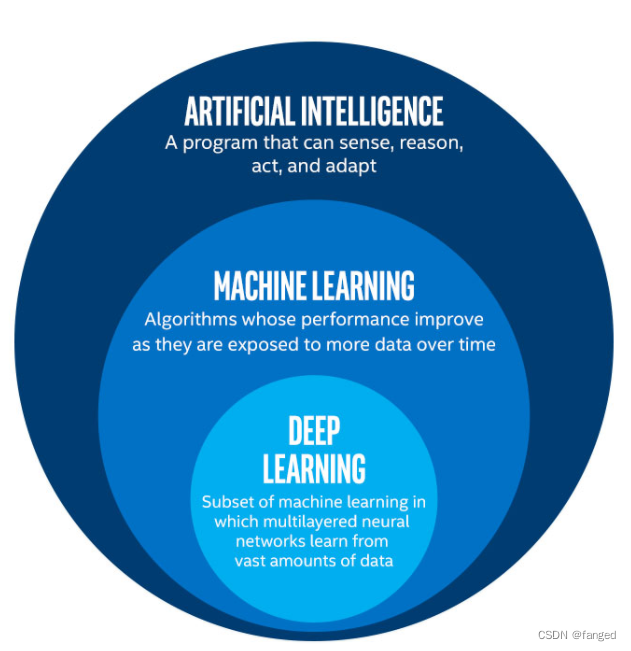

The figure below explains it well; it sums up the relationship among the three at a glance.

AI is a very broad concept; you could say the topic of artificial intelligence covers everything.

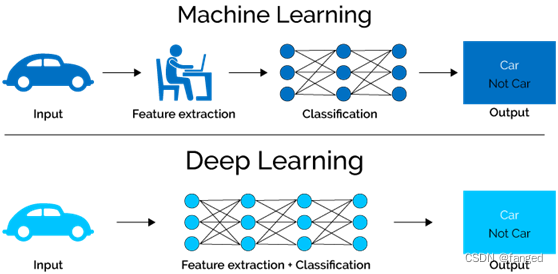

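Machine learning refers to techniques that let a computer improve its performance automatically without being explicitly programmed. It relies on learning patterns and regularities from data in order to make predictions or decisions. Its methods include supervised learning, unsupervised learning, and reinforcement learning. Common algorithms include linear regression, decision trees, support vector machines, K-means clustering, and neural networks. Machine learning is one way of achieving AI: data and algorithms give the machine the ability to learn and improve.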
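As a small illustration of "learning from data instead of being explicitly programmed", here is a sketch of linear regression fitted by gradient descent. The data is made up (it follows y = 2x + 1), and the learning rate and iteration count are values I picked by hand, not anything prescribed. The program is never told the slope or intercept; it only sees the examples.

```python
import numpy as np

# Toy data generated from y = 2x + 1 (invented for this example).
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

w, b = 0.0, 0.0        # the model starts out knowing nothing
learning_rate = 0.05   # hand-picked step size

for _ in range(2000):
    pred = w * x + b
    error = pred - y
    # Gradients of the mean squared error with respect to w and b.
    grad_w = 2.0 * np.mean(error * x)
    grad_b = 2.0 * np.mean(error)
    # Step against the gradient to reduce the error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(w, b)  # should end up close to 2 and 1
```

The same loop (predict, measure the error, nudge the parameters) is what neural network training does too, just with far more parameters.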

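Deep learning is a subset of machine learning. It uses multi-layer neural networks, loosely modeled on how the human brain works, to extract and learn features from large amounts of data, and it does well on complex pattern recognition tasks. At its core is the deep neural network (DNN), with convolutional neural networks (CNN) and recurrent neural networks (RNN) among its variants. These networks extract progressively higher-level features from the data through multiple hidden layers, which is what makes deep learning suited to complex data and tasks such as image recognition and natural language processing.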
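The "multiple hidden layers" part can be sketched as a forward pass through a small fully connected network. The layer sizes, the random untrained weights, and the choice of ReLU and softmax are my assumptions for illustration; the point is only that each layer transforms the output of the one before it.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    # Subtract the row maximum for numerical stability.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def forward(x, layers):
    """Apply each (W, b) pair in turn: ReLU on hidden layers, softmax at the end."""
    h = x
    for W, b in layers[:-1]:
        h = relu(h @ W + b)
    W, b = layers[-1]
    return softmax(h @ W + b)

rng = np.random.default_rng(0)
sizes = [4, 8, 8, 3]  # input -> hidden -> hidden -> 3 classes (arbitrary sizes)
layers = [(rng.normal(scale=0.1, size=(m, n)), np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]

batch = rng.normal(size=(5, 4))   # 5 made-up samples with 4 features each
probs = forward(batch, layers)
print(probs.shape)                # one row of class probabilities per sample
```

With untrained weights the probabilities are meaningless; training would adjust every `W` and `b` by gradient descent on a loss.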

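Mainstream deep learning applications include:

Image classification: using convolutional neural networks (CNN) to classify images, for example telling cats from dogs; related vision tasks include object detection.

Speech recognition: using recurrent neural networks (RNN) or long short-term memory networks (LSTM) to recognize and transcribe speech.

Natural language processing: using Transformer models for text classification, sentiment analysis, translation, and similar tasks.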
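The Transformer models in the last item are built around attention, so here is a minimal sketch of scaled dot-product attention as described in "Attention is all you need" (one of the course readings). To keep it short I assume a single head, no masking, and no learned projections: the queries, keys, and values are all the same random matrix `X`, which is a simplification of mine rather than how a real model is wired.

```python
import numpy as np

def softmax(z):
    # Subtract the row maximum for numerical stability.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Return the attended values and the attention weights."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)   # similarity of every query to every key
    weights = softmax(scores)         # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
tokens, d_model = 4, 8                # arbitrary toy sizes
X = rng.normal(size=(tokens, d_model))  # stand-in for 4 token embeddings

out, weights = scaled_dot_product_attention(X, X, X)
print(out.shape, weights.shape)
```

Each output row is a weighted average of all the value rows, which is how every token gets to "look at" every other token in the sequence.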