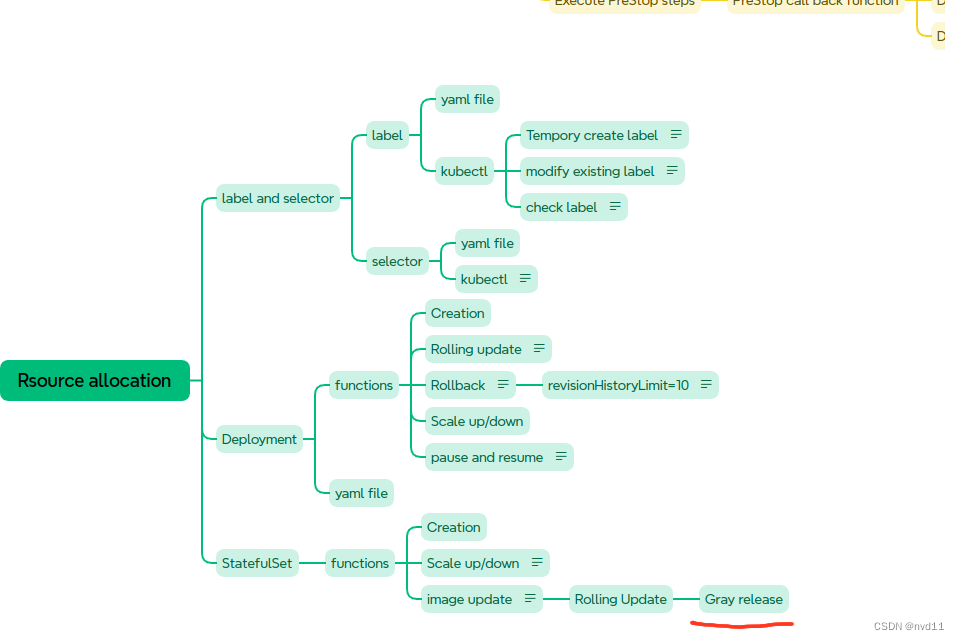

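What is a canary release?

Reference

Understanding rolling updates, blue-green deployments, and canary releases, and how they differ

Configuring partition in updateStrategy/rollingUpdate

This time I modified one YAML file for the StatefulSet: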

statefulsets/stateful-nginx-without-pvc.yaml:

```yaml
---
apiVersion: v1 # API version
kind: Service # type of this resource, e.g. Pod/Deployment
metadata:
  name: nginx-stateful-service # name of the Service
  labels:
    app: nginx-stateful-service
spec:
  ports:
  - port: 80 # port of the Service, used to access the Service
    name: web-port
  clusterIP: None # headless Service: no cluster IP is allocated
  selector: # label of the Pods that the Service selects
    app: nginx-stateful # a Service selector takes labels directly, without matchLabels
---
apiVersion: apps/v1
kind: StatefulSet # a controller for stateful applications
metadata:
  name: nginx-statefulset # name of the StatefulSet
  labels:
    app: nginx-stateful
spec: # detailed description
  serviceName: "nginx-stateful-service" # name of the Service that manages the DNS;
  # must be the same as the Service name defined above
  replicas: 3 # desired replica count
  selector: # label of the Pods that the StatefulSet manages
    matchLabels:
      app: nginx-stateful
  template: # Pod template
    metadata:
      labels:
        app: nginx-stateful
    spec:
      containers:
      - name: nginx-container
        image: nginx:1.25.4 # image of the container
        ports: # ports exposed by the container
        - containerPort: 80 # port used by the service inside the container
          name: web-port
  updateStrategy:
    rollingUpdate:
      partition: 2
    type: RollingUpdate
```

The key part is the last block:

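```yaml
updateStrategy:
  rollingUpdate:
    partition: 2
  type: RollingUpdate
```

rollingUpdate is easy to understand; what matters is the value of partition.

This value means that after the image version changes, only the Pods whose ordinal index is >= partition are updated, while the other Pods stay on the previous version. Two versions can therefore coexist for a while, which is how the canary release is achieved.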
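The rule above can be sketched as a small Python model. It is only an illustration of the `index >= partition` comparison, not the controller's actual code; the function name `pods_to_update` is made up for this example. It lists Pods from the highest ordinal down, which is the order a StatefulSet rolling update proceeds in.

```python
def pods_to_update(name: str, replicas: int, partition: int) -> list[str]:
    """Return the Pods that a partitioned rolling update would replace.

    Hypothetical helper: it only models the rule that ordinals >= partition
    are updated, highest ordinal first, and lower ordinals are left alone.
    """
    if replicas < 0 or partition < 0:
        raise ValueError("replicas and partition must be non-negative")
    # Ordinals run from 0 to replicas - 1; walk them from the top down.
    return [f"{name}-{i}" for i in range(replicas - 1, partition - 1, -1)]


if __name__ == "__main__":
    # Five replicas with partition 2: Pods 4, 3 and 2 are updated.
    print(pods_to_update("nginx-statefulset", 5, 2))
```

With `partition` equal to or larger than the replica count the list is empty, so nothing is updated; with `partition` 0 every Pod is updated.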

An example

First, apply the YAML above:

```bash
[gateman@manjaro-x13 statefulsets]$ kubectl apply -f stateful-nginx-without-pvc.yaml
service/nginx-stateful-service unchanged
statefulset.apps/nginx-statefulset created
```

Check the Pods: three Pods are up, with ordinals 0, 1, and 2.

```bash
[gateman@manjaro-x13 statefulsets]$ kubectl get pods -o wide
NAME                  READY   STATUS    RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
nginx-statefulset-0   1/1     Running   0          12m   10.244.2.120   k8s-node0   <none>           <none>
nginx-statefulset-1   1/1     Running   0          12m   10.244.1.59    k8s-node1   <none>           <none>
nginx-statefulset-2   1/1     Running   0          12m   10.244.3.69    k8s-node3   <none>           <none>
```

To make the demonstration clearer, scale the number of Pods to 5:

```bash
[gateman@manjaro-x13 statefulsets]$ kubectl scale statefulset nginx-statefulset --replicas=5
statefulset.apps/nginx-statefulset scaled
[gateman@manjaro-x13 statefulsets]$ kubectl get pods -o wide
NAME                  READY   STATUS    RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
nginx-statefulset-0   1/1     Running   0          13m   10.244.2.120   k8s-node0   <none>           <none>
nginx-statefulset-1   1/1     Running   0          13m   10.244.1.59    k8s-node1   <none>           <none>
nginx-statefulset-2   1/1     Running   0          13m   10.244.3.69    k8s-node3   <none>           <none>
nginx-statefulset-3   1/1     Running   0          13s   10.244.2.121   k8s-node0   <none>           <none>
nginx-statefulset-4   1/1     Running   0          12s   10.244.3.70    k8s-node3   <none>           <none>
```

Now all 5 Pods are up.

Check the image version of each Pod:

```bash
[gateman@manjaro-x13 statefulsets]$ kubectl get pods -o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].image}{"\n"}{end}'
nginx-statefulset-0	nginx:1.25.4
nginx-statefulset-1	nginx:1.25.4
nginx-statefulset-2	nginx:1.25.4
nginx-statefulset-3	nginx:1.25.4
nginx-statefulset-4	nginx:1.25.4
```

Now update the image version from 1.25.4 to 1.26.1:

```bash
[gateman@manjaro-x13 statefulsets]$ kubectl set image statefulset/nginx-statefulset nginx-container=nginx:1.26.1
statefulset.apps/nginx-statefulset image updated
```

Check the image version of each Pod again:

```bash
nginx-statefulset-0	nginx:1.25.4
nginx-statefulset-1	nginx:1.25.4
nginx-statefulset-2	nginx:1.26.1
nginx-statefulset-3	nginx:1.26.1
nginx-statefulset-4	nginx:1.26.1
```

As you can see, only Pods 2, 3, and 4 were updated; Pods 0 and 1 are still on the old version.
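During a canary phase it can help to see the split at a glance. The following is a minimal sketch that groups the jsonpath output by image; `group_by_image` is a hypothetical helper, and it assumes one container per Pod, so each line holds exactly a Pod name and an image.

```python
from collections import defaultdict


def group_by_image(output: str) -> dict[str, list[str]]:
    """Group Pod names by image from 'name<TAB>image' lines.

    Assumes single-container Pods; lines that do not split into
    exactly two fields are skipped.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for line in output.splitlines():
        fields = line.split()
        if len(fields) != 2:
            continue
        pod, image = fields
        groups[image].append(pod)
    return dict(groups)


# Output copied from the check above (partition: 2, after the image update).
SAMPLE = (
    "nginx-statefulset-0\tnginx:1.25.4\n"
    "nginx-statefulset-1\tnginx:1.25.4\n"
    "nginx-statefulset-2\tnginx:1.26.1\n"
    "nginx-statefulset-3\tnginx:1.26.1\n"
    "nginx-statefulset-4\tnginx:1.26.1\n"
)

if __name__ == "__main__":
    for image, pods in group_by_image(SAMPLE).items():
        print(image, len(pods), pods)
```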

Next, roll the new version out to Pod 1 and Pod 0.

This is also simple: just change the value of partition.

```yaml
---
apiVersion: v1 # API version
kind: Service # type of this resource, e.g. Pod/Deployment
metadata:
  name: nginx-stateful-service # name of the Service
  labels:
    app: nginx-stateful-service
spec:
  ports:
  - port: 80 # port of the Service, used to access the Service
    name: web-port
  clusterIP: None # headless Service: no cluster IP is allocated
  selector: # label of the Pods that the Service selects
    app: nginx-stateful # a Service selector takes labels directly, without matchLabels
---
apiVersion: apps/v1
kind: StatefulSet # a controller for stateful applications
metadata:
  name: nginx-statefulset # name of the StatefulSet
  labels:
    app: nginx-stateful
spec: # detailed description
  serviceName: "nginx-stateful-service" # name of the Service that manages the DNS;
  # must be the same as the Service name defined above
  replicas: 5 # desired replica count
  selector: # label of the Pods that the StatefulSet manages
    matchLabels:
      app: nginx-stateful
  template: # Pod template
    metadata:
      labels:
        app: nginx-stateful
    spec:
      containers:
      - name: nginx-container
        image: nginx:1.26.1 # image of the container
        ports: # ports exposed by the container
        - containerPort: 80 # port used by the service inside the container
          name: web-port
  updateStrategy:
    rollingUpdate:
      partition: 1
    type: RollingUpdate
```

Remember to change three places:

replicas: 5, to match the current number of Pods

image: nginx:1.26.1, to the new version

partition: 1, which means Pod 1 also gets the new version

```bash
[gateman@manjaro-x13 statefulsets]$ kubectl replace -f stateful-nginx-without-pvc.yaml
service/nginx-stateful-service replaced
statefulset.apps/nginx-statefulset replaced
```

```bash
[gateman@manjaro-x13 statefulsets]$ kubectl get pods -o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].image}{"\n"}{end}'
nginx-statefulset-0	nginx:1.25.4
nginx-statefulset-1	nginx:1.26.1
nginx-statefulset-2	nginx:1.26.1
nginx-statefulset-3	nginx:1.26.1
nginx-statefulset-4	nginx:1.26.1
```

Now only Pod 0 is still on the old version.

How do we bring Pod 0 up to date as well?

Method 1: use the approach above again and set partition to 0 in the YAML.

Method 2: change it with kubectl patch:

kubectl patch statefulset nginx-statefulset --type='json' -p='[{"op": "replace", "path": "/spec/updateStrategy/rollingUpdate/partition", "value": 0}]'
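The `-p` argument above is a JSON Patch document with a single `replace` operation on the partition path. As a rough sketch, assuming you want to generate that command for different partition values, it can be built in Python so the quoting is handled for you; `partition_patch_command` is a hypothetical helper, not part of kubectl.

```python
import json
import shlex


def partition_patch_command(statefulset: str, partition: int) -> str:
    """Build a kubectl patch command line that sets the partition value.

    Hypothetical helper: it only assembles the command string,
    it does not run kubectl.
    """
    patch = [
        {
            "op": "replace",
            "path": "/spec/updateStrategy/rollingUpdate/partition",
            "value": partition,
        }
    ]
    args = [
        "kubectl", "patch", "statefulset", statefulset,
        "--type=json", "-p", json.dumps(patch),
    ]
    # shlex.join quotes the JSON so it survives the shell intact.
    return shlex.join(args)


if __name__ == "__main__":
    print(partition_patch_command("nginx-statefulset", 0))
```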

```bash
[gateman@manjaro-x13 statefulsets]$ kubectl get pods -o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].image}{"\n"}{end}'
nginx-statefulset-0	nginx:1.26.1
nginx-statefulset-1	nginx:1.26.1
nginx-statefulset-2	nginx:1.26.1
nginx-statefulset-3	nginx:1.26.1
nginx-statefulset-4	nginx:1.26.1
```

Canary releases for Deployments

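If the workload is a Deployment for a stateless service, can it be canary-released in the same way?

No.

The suffix of a Deployment's Pod name is not an index number, and the Pods are unordered, so there is no way to compare a Pod's index with the partition value.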
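The difference shows up in the Pod names themselves. Here is a minimal sketch, assuming the usual `<statefulset-name>-<ordinal>` naming; `statefulset_ordinal` is a hypothetical helper and the Deployment Pod name in the example is invented for illustration.

```python
import re
from typing import Optional


def statefulset_ordinal(owner: str, pod_name: str) -> Optional[int]:
    """Return the ordinal if pod_name looks like '<owner>-<number>', else None."""
    match = re.fullmatch(re.escape(owner) + r"-(\d+)", pod_name)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    # StatefulSet Pod: the suffix is an ordinal that can be compared with partition.
    print(statefulset_ordinal("nginx-statefulset", "nginx-statefulset-3"))
    # Deployment Pod (made-up name): hash-like suffixes, no ordinal to compare.
    print(statefulset_ordinal("bq-api-service", "bq-api-service-7d9c5b8f6d-x2k4q"))
```

Only the first name yields a number; the second has nothing that partition could be compared against.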

If you force it into the YAML anyway, you get the following error:

```bash
[gateman@manjaro-x13 bq-api-service]$ kubectl apply -f bq-api-service-test.yaml
error: error validating "bq-api-service-test.yaml": error validating data: ValidationError(Deployment.spec.strategy.rollingUpdate): unknown field "partition" in io.k8s.api.apps.v1.RollingUpdateDeployment; if you choose to ignore these errors, turn validation off with --validate=false
```

But a canary release is only an idea, and there are many ways to implement it.

Canary releases for Deployments are feasible in k8s, for example by the blunt approach of using several Deployments to cover different instances. The details are not covered here.