I. A simple send/receive message demo

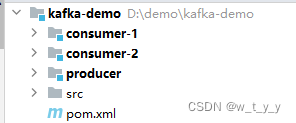

Parent project pom:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.example</groupId>

<artifactId>kafka-demo</artifactId>

<version>1.0-SNAPSHOT</version>

<packaging>pom</packaging>

<modules>

<module>producer</module>

<module>consumer-1</module>

<module>consumer-2</module>

</modules>

<!-- springBoot -->

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.1.4.RELEASE</version>

</parent>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<!--kafka-->

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<!-- <version>3.0.0</version>-->

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

<!--lombok-->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.78</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

</project>

1. Producer

1.1 Configuration file

spring.kafka.bootstrap-servers=localhost:9092

spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer

spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer

user.topic = userTest

school.topic = schoolTest

1.2 DTO

package com.example.dto;

import lombok.Builder;

import lombok.Data;

@Data

@Builder

public class SchoolDTO {

private String schoolId;

private String schoolName;

}

package com.example.dto;

import lombok.Builder;

import lombok.Data;

@Data

@Builder

public class UserDTO {

private String userId;

private String userName;

private Integer age;

}

1.3 Service

package com.example.service.impl;

import com.alibaba.fastjson.JSON;

import com.example.dto.SchoolDTO;

import com.example.dto.UserDTO;

import com.example.service.SchoolService;

import lombok.extern.slf4j.Slf4j;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.stereotype.Service;

@Service("schoolService")

@Slf4j

public class SchoolServiceImpl implements SchoolService {

@Autowired

private KafkaTemplate<String, String> kafkaTemplate;

@Value("${school.topic}")

private String schoolTopic;

@Override

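    public void sendSchoolMsg(SchoolDTO schoolDTO) {
        String msg = JSON.toJSONString(schoolDTO);
        ProducerRecord<String, String> producerRecord = new ProducerRecord<>(schoolTopic, msg);
        // send() is asynchronous: returning here means the record was handed to the producer,
        // not that the broker has acknowledged it
        kafkaTemplate.send(producerRecord);
        log.info("school message submitted");
    }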

}

package com.example.service.impl;

import com.alibaba.fastjson.JSON;

import com.example.dto.UserDTO;

import com.example.service.UserService;

import lombok.extern.slf4j.Slf4j;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.stereotype.Service;

@Service("userService")

@Slf4j

public class UserServiceImpl implements UserService {

@Autowired

private KafkaTemplate<String, String> kafkaTemplate;

@Value("${user.topic}")

private String userTopic;

@Override

public void sendUserMsg(UserDTO userDTO) {

String msg = JSON.toJSONString(userDTO);

ProducerRecord producerRecord = new ProducerRecord(userTopic,msg);

kafkaTemplate.send(producerRecord);

log.info("user消息发送成功");

}

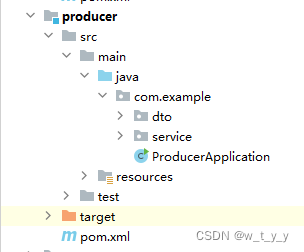

}1.4、启动类

package com.example;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication

public class ProducerApplication {

public static void main(String[] args) {

SpringApplication.run(ProducerApplication.class, args);

}

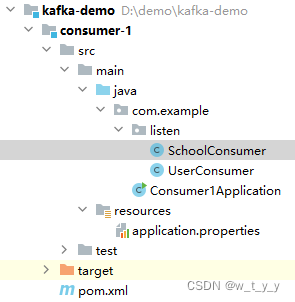

}2、消费者

2.1 Configuration file

spring.kafka.bootstrap-servers=localhost:9092

spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.auto-offset-reset=earliest

spring.kafka.consumer.enable-auto-commit = true

user.topic = userTest

user.group.id = user-group-1

school.topic = schoolTest

school.group.id = school-group-1

server.port = 2222

2.2 Listener

package com.example.listen;

import lombok.extern.slf4j.Slf4j;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.springframework.kafka.annotation.KafkaListener;

import org.springframework.stereotype.Component;

@Component

@Slf4j

public class SchoolConsumer {

@KafkaListener(topics = "${school.topic}", groupId = "${school.group.id}")

public void consumer(ConsumerRecord<?, ?> record) {

try {

Object message = record.value();

if (message != null) {

String msg = String.valueOf(message);

log.info("Received: msg={},topic:{},partition={},offset={}",msg,record.topic(),record.partition(),record.offset());

}

} catch (Exception e) {

log.error("Failed to consume record from topic {}", record.topic(), e);

} finally {

//ack.acknowledge();

}

}

}

package com.example.listen;

import lombok.extern.slf4j.Slf4j;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.springframework.kafka.annotation.KafkaListener;

import org.springframework.stereotype.Component;

@Component

@Slf4j

public class UserConsumer {

@KafkaListener(topics = "${user.topic}", groupId = "${user.group.id}")

public void consumer(ConsumerRecord<?, ?> record) {

try {

Object message = record.value();

if (message != null) {

String msg = String.valueOf(message);

log.info("Received: msg={},topic:{},partition={},offset={}",msg,record.topic(),record.partition(),record.offset());

}

} catch (Exception e) {

log.error("Failed to consume record from topic {}", record.topic(), e);

} finally {

//ack.acknowledge();

}

}

}

Omitting group.id causes an error at startup, which confirms that a Kafka consumer using group management must have a group id. For example, with:

@KafkaListener(topics = "${user.topic}")

public void consumer(ConsumerRecord<?, ?> record)

startup fails with:

Caused by: java.lang.IllegalStateException: No group.id found in consumer config, container properties, or @KafkaListener annotation; a group.id is required when group management is used.

at org.springframework.util.Assert.state(Assert.java:73) ~[spring-core-5.1.6.RELEASE.jar:5.1.6.RELEASE]

2.3 Application class

package com.example;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.kafka.annotation.EnableKafka;

@SpringBootApplication

//@EnableKafka

public class Consumer1Application {

public static void main(String[] args) {

SpringApplication.run(Consumer1Application.class, args);

}

}

3. Testing

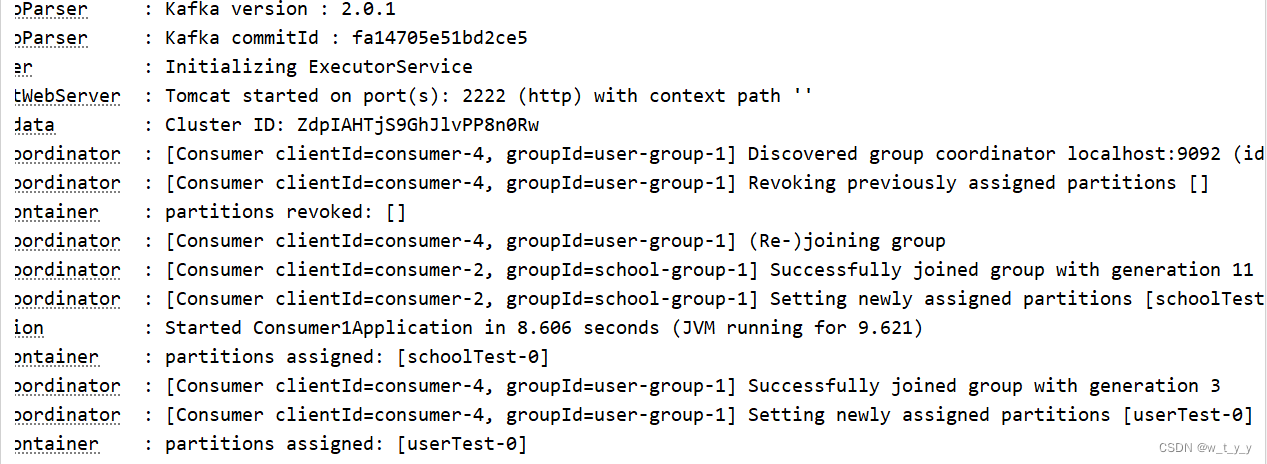

Start the consumer:

The producer sends messages through a unit test:

package com.demo.kafka;

import com.example.ProducerApplication;

import com.example.dto.SchoolDTO;

import com.example.dto.UserDTO;

import com.example.service.SchoolService;

import com.example.service.UserService;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

@SpringBootTest(classes = {ProducerApplication.class}, webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT)

@RunWith(SpringRunner.class)

public class Test {

@Autowired

private SchoolService schoolService;

@Autowired

private UserService userService;

@org.junit.Test

public void sendUserMsg(){

UserDTO userDTO = UserDTO.builder()

.userId("id-1")

.age(18)

.userName("zs")

.build();

userService.sendUserMsg(userDTO);

}

@org.junit.Test

public void sendSchoolMsg(){

SchoolDTO schoolDTO = SchoolDTO.builder()

.schoolId("schoolId-1")

.schoolName("mid school")

.build();

schoolService.sendSchoolMsg(schoolDTO);

}

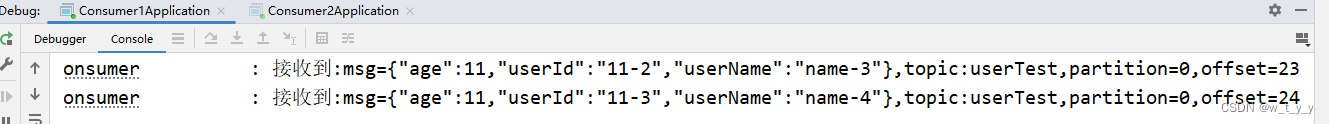

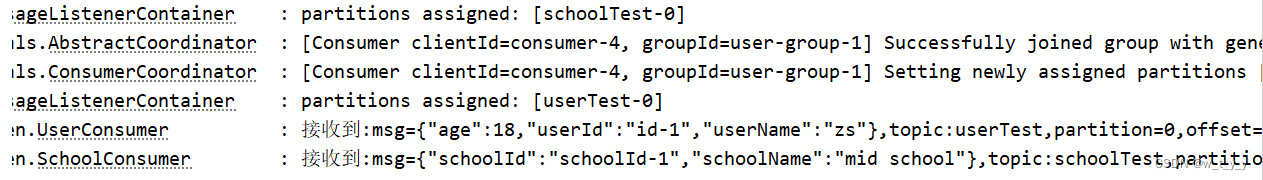

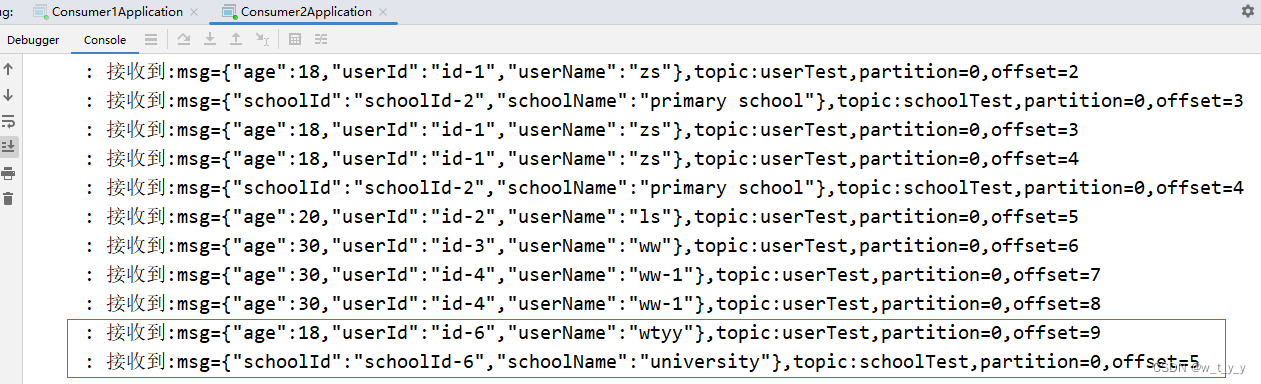

}运行单测,观察消费者输出:

修改参数再次运行,观察到消费者都可以正常监听:

2024-06-22 17:09:06.383 INFO 76104 --- [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port(s): 2222 (http) with context path ''

2024-06-22 17:09:06.390 INFO 76104 --- [ntainer#1-0-C-1] org.apache.kafka.clients.Metadata : Cluster ID: ZdpIAHTjS9GhJlvPP8n0Rw

2024-06-22 17:09:06.392 INFO 76104 --- [ntainer#1-0-C-1] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-4, groupId=user-group-1] Discovered group coordinator localhost:9092 (id: 2147483647 rack: null)

2024-06-22 17:09:06.393 INFO 76104 --- [ntainer#1-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-4, groupId=user-group-1] Revoking previously assigned partitions []

2024-06-22 17:09:06.394 INFO 76104 --- [ntainer#1-0-C-1] o.s.k.l.KafkaMessageListenerContainer : partitions revoked: []

2024-06-22 17:09:06.394 INFO 76104 --- [ntainer#1-0-C-1] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-4, groupId=user-group-1] (Re-)joining group

2024-06-22 17:09:06.401 INFO 76104 --- [ntainer#0-0-C-1] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-2, groupId=school-group-1] Successfully joined group with generation 11

2024-06-22 17:09:06.403 INFO 76104 --- [ntainer#0-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-2, groupId=school-group-1] Setting newly assigned partitions [schoolTest-0]

2024-06-22 17:09:06.403 INFO 76104 --- [ main] com.example.Consumer1Application : Started Consumer1Application in 8.606 seconds (JVM running for 9.621)

2024-06-22 17:09:06.413 INFO 76104 --- [ntainer#0-0-C-1] o.s.k.l.KafkaMessageListenerContainer : partitions assigned: [schoolTest-0]

2024-06-22 17:09:06.491 INFO 76104 --- [ntainer#1-0-C-1] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-4, groupId=user-group-1] Successfully joined group with generation 3

2024-06-22 17:09:06.493 INFO 76104 --- [ntainer#1-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-4, groupId=user-group-1] Setting newly assigned partitions [userTest-0]

2024-06-22 17:09:06.611 INFO 76104 --- [ntainer#1-0-C-1] o.s.k.l.KafkaMessageListenerContainer : partitions assigned: [userTest-0]

2024-06-22 17:16:29.775 INFO 76104 --- [ntainer#1-0-C-1] com.example.listen.UserConsumer : Received: msg={"age":18,"userId":"id-1","userName":"zs"},topic:userTest,partition=0,offset=4

2024-06-22 17:16:48.157 INFO 76104 --- [ntainer#0-0-C-1] com.example.listen.SchoolConsumer : Received: msg={"schoolId":"schoolId-1","schoolName":"mid school"},topic:schoolTest,partition=0,offset=1

2024-06-22 17:17:39.458 INFO 76104 --- [ntainer#1-0-C-1] com.example.listen.UserConsumer : Received: msg={"age":20,"userId":"id-2","userName":"ls"},topic:userTest,partition=0,offset=5

2024-06-22 17:17:59.474 INFO 76104 --- [ntainer#0-0-C-1] com.example.listen.SchoolConsumer : Received: msg={"schoolId":"schoolId-2","schoolName":"primary school"},topic:schoolTest,partition=0,offset=2

4. Multiple consumers

4.1、同一个groupId

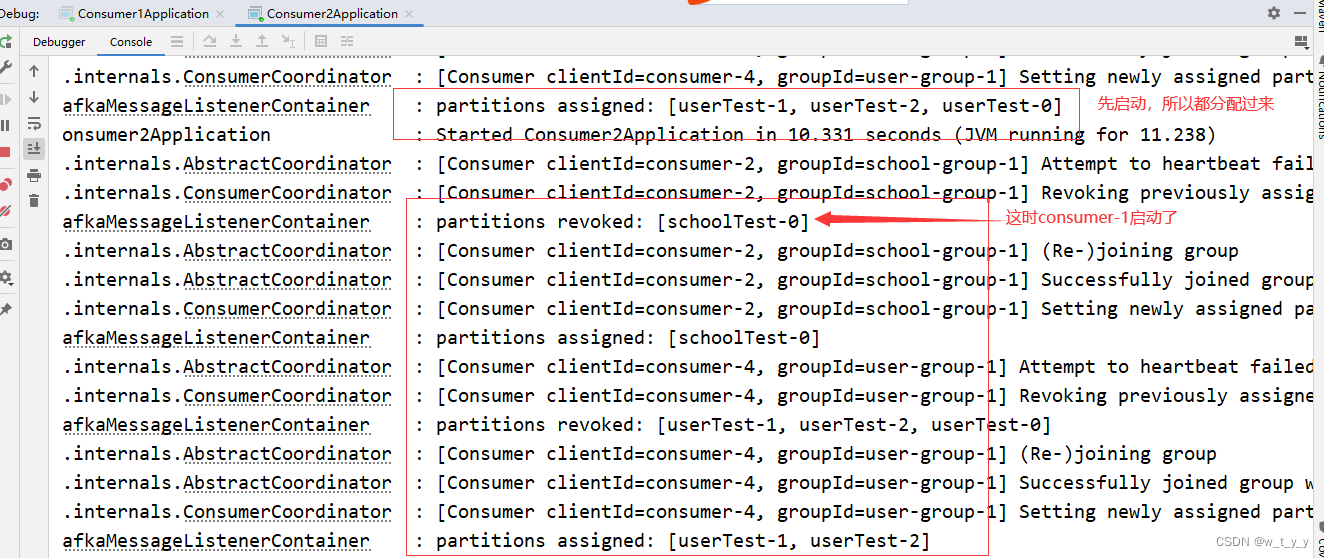

将consumer-1的代码copy到consumer-2,注意端口号修改成不一样的3333,并启动,

spring.kafka.bootstrap-servers=localhost:9092

spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.auto-offset-reset=earliest

spring.kafka.consumer.enable-auto-commit = true

user.topic = userTest

user.group.id = user-group-1

school.topic = schoolTest

school.group.id = school-group-1

server.port = 3333

2024-06-22 17:23:59.524 INFO 78096 --- [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port(s): 3333 (http) with context path ''

2024-06-22 17:23:59.531 INFO 78096 --- [ main] example.Consumer2Application : Started Consumer2Application in 7.534 seconds (JVM running for 8.506)

2024-06-22 17:24:00.021 INFO 78096 --- [ntainer#0-0-C-1] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-2, groupId=school-group-1] Successfully joined group with generation 12

2024-06-22 17:24:00.024 INFO 78096 --- [ntainer#0-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-2, groupId=school-group-1] Setting newly assigned partitions []

2024-06-22 17:24:00.025 INFO 78096 --- [ntainer#0-0-C-1] o.s.k.l.KafkaMessageListenerContainer : partitions assigned: []

2024-06-22 17:24:00.028 INFO 78096 --- [ntainer#1-0-C-1] o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-4, groupId=user-group-1] Successfully joined group with generation 4

2024-06-22 17:24:00.028 INFO 78096 --- [ntainer#1-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-4, groupId=user-group-1] Setting newly assigned partitions []

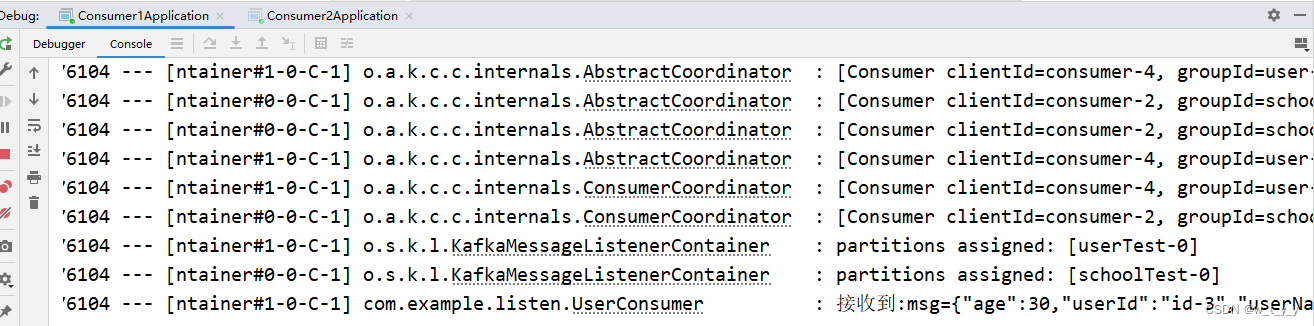

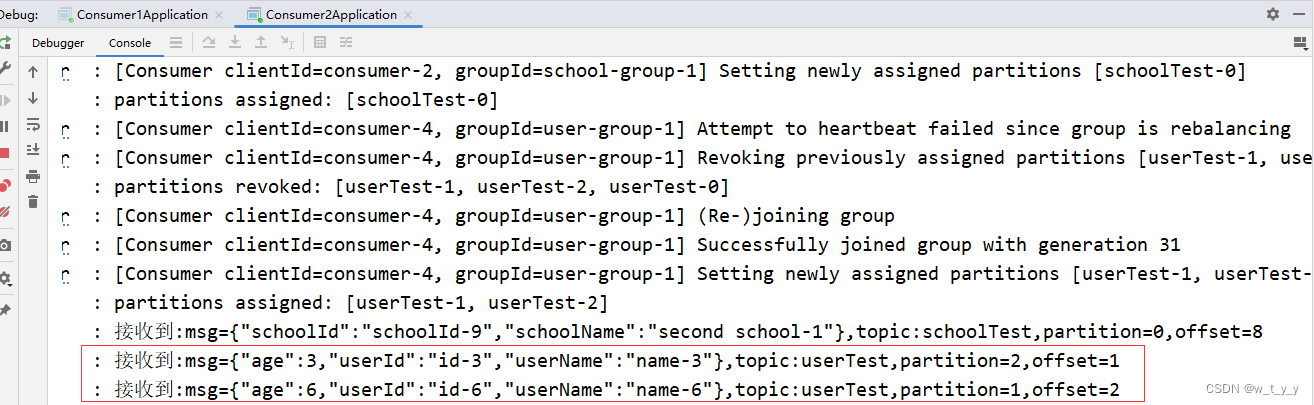

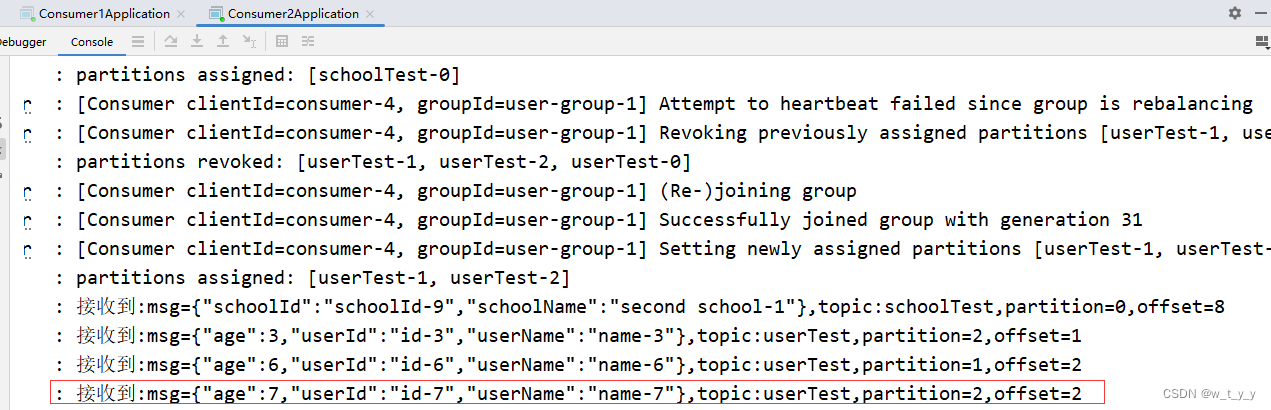

2024-06-22 17:24:00.029 INFO 78096 --- [ntainer#1-0-C-1] o.s.k.l.KafkaMessageListenerContainer : partitions assigned: []再执行次生产者的Test,观察两个消费者:

可以看到consumer-1接收到了,而consumer-2没有接收到。

可以看到consumer-1接收到了,而consumer-2没有接收到。

再次执行,结果相同。school也是同样的结果。

验证了:同一个topic下的某个分区只能被消费者组中的一个消费者消费。

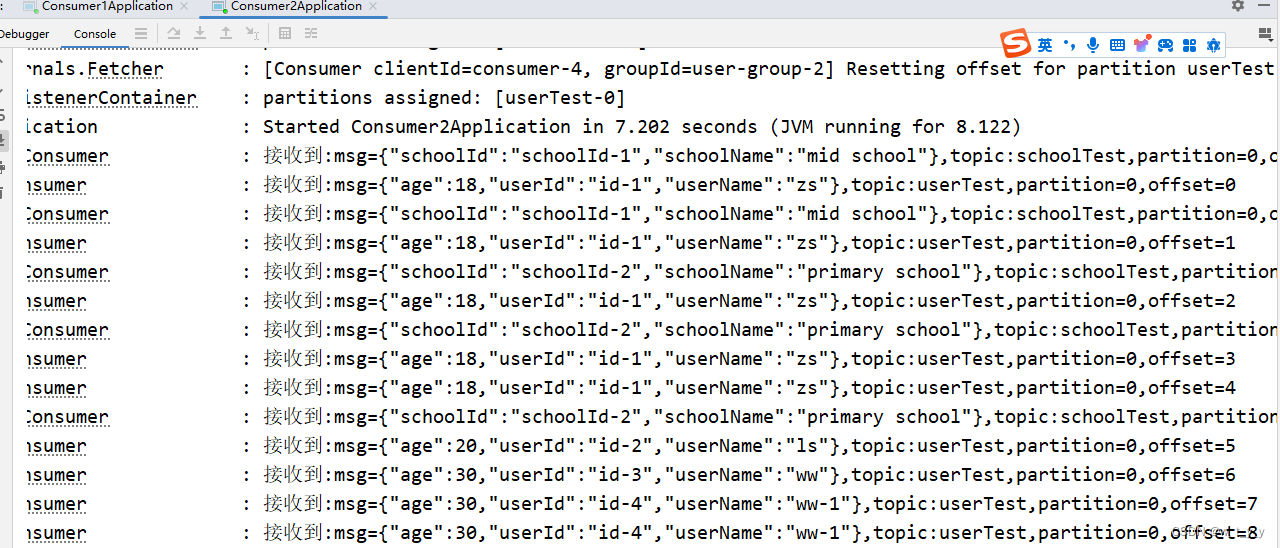

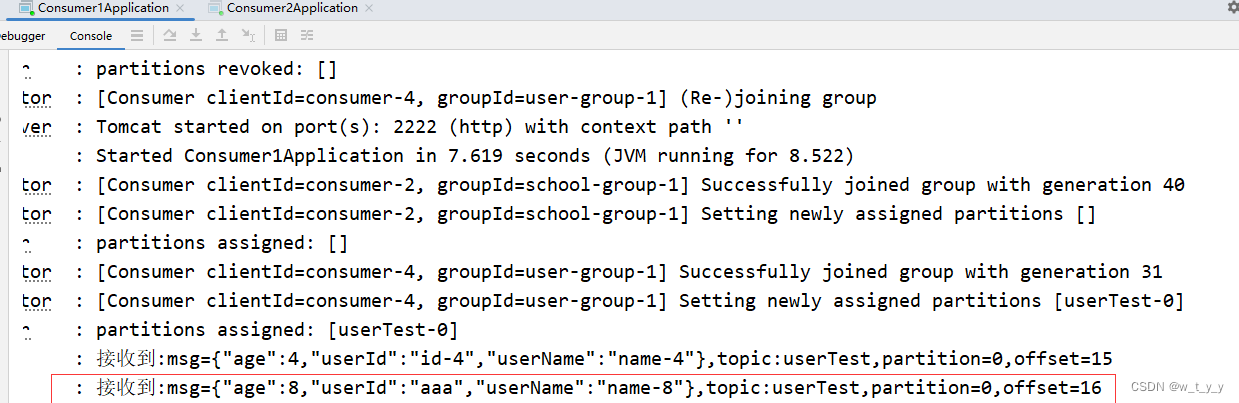

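4.2 Different groups

Now change the groupId in consumer-2 and restart it:

user.group.id = user-group-2

school.group.id = school-group-2

After startup it automatically receives all previously sent messages (this is a new consumer group with no committed offsets, and the configuration file specifies earliest):

Send new messages again:

consumer-1 and consumer-2 both receive them:

This confirms that the same topic can be consumed by different consumer groups.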
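The behavior observed in 4.1 and 4.2 comes down to each consumer group keeping its own committed offset. The following is a minimal sketch of that idea in plain Java, not the Kafka client itself: `GroupOffsetSketch`, `send`, and `poll` are hypothetical names, a single in-memory list stands in for one partition, and a group without a committed offset starts from 0, as with `auto-offset-reset=earliest`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of one partition: an append-only log plus one committed offset per consumer group. */
class GroupOffsetSketch {
    private final List<String> log = new ArrayList<>();
    private final Map<String, Integer> committedOffsets = new HashMap<>();

    void send(String message) {
        log.add(message);
    }

    /** Returns everything the group has not seen yet; a group with no offset starts at 0 ("earliest"). */
    List<String> poll(String groupId) {
        int from = committedOffsets.getOrDefault(groupId, 0);
        List<String> batch = new ArrayList<>(log.subList(from, log.size()));
        committedOffsets.put(groupId, log.size()); // stands in for the offset commit
        return batch;
    }

    public static void main(String[] args) {
        GroupOffsetSketch partition = new GroupOffsetSketch();
        partition.send("m0");
        partition.send("m1");
        System.out.println(partition.poll("user-group-1")); // [m0, m1]
        System.out.println(partition.poll("user-group-2")); // [m0, m1] (new group replays from the start)
        partition.send("m2");
        System.out.println(partition.poll("user-group-1")); // [m2]
        System.out.println(partition.poll("user-group-2")); // [m2]
    }
}
```

Both groups see every message because their offsets are tracked independently; inside one group there is only one offset per partition, which is why only one member reads it.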

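II. Consumer partition assignment strategies

Above, when consumer-1 and consumer-2 used the same groupId and the topic had only one partition, only consumer-1 ever received messages and consumer-2 received none. A single partition can be owned by only one consumer in the group, whichever strategy is used; once a topic has several partitions, the consumer's partition assignment strategy decides how they are split among the group members. Kafka provides four built-in strategies (the fourth, CooperativeStickyAssignor, requires kafka-clients 2.4 or later); three of them are covered here.

1. RangeAssignor (default)

This is the case above, where no strategy is specified.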
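To make the range idea concrete, here is a simplified sketch for a single topic, assuming the usual description of the strategy: consumers are sorted, each gets a contiguous block of partitions, and the first few get one extra when the division is uneven. `RangeAssignmentSketch` and `assign` are hypothetical names, and the consumer names stand in for the member ids that the real client generates.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

class RangeAssignmentSketch {
    /** Splits partitions 0..numPartitions-1 of one topic into contiguous ranges, one per consumer. */
    static Map<String, List<Integer>> assign(int numPartitions, List<String> consumers) {
        List<String> sorted = new ArrayList<>(new TreeSet<>(consumers)); // consumers in sorted order
        int perConsumer = numPartitions / sorted.size();
        int withExtra = numPartitions % sorted.size(); // the first 'withExtra' consumers get one more
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        int next = 0;
        for (int i = 0; i < sorted.size(); i++) {
            int count = perConsumer + (i < withExtra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int j = 0; j < count; j++) {
                owned.add(next++);
            }
            result.put(sorted.get(i), owned);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> group = Arrays.asList("consumer-1", "consumer-2");
        System.out.println(assign(1, group)); // {consumer-1=[0], consumer-2=[]}
        System.out.println(assign(3, group)); // {consumer-1=[0, 1], consumer-2=[2]}
    }
}
```

With one partition the second consumer ends up with an empty assignment, which matches the `partitions assigned: []` line in the consumer-2 log.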

2. RoundRobinAssignor:

# consumer partition assignment strategy

spring.kafka.consumer.properties.partition.assignment.strategy=org.apache.kafka.clients.consumer.RoundRobinAssignor

3. StickyAssignor (sticky):

spring.kafka.consumer.properties.partition.assignment.strategy=org.apache.kafka.clients.consumer.StickyAssignor

Next, make the configuration files of the two consumers identical (apart from the port number):

spring.kafka.bootstrap-servers=localhost:9092

spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.auto-offset-reset=earliest

spring.kafka.consumer.enable-auto-commit = true

# consumer partition assignment strategy

spring.kafka.consumer.properties.partition.assignment.strategy=org.apache.kafka.clients.consumer.RoundRobinAssignor

user.topic = userTest

user.group.id = user-group-1

school.topic = schoolTest

school.group.id = school-group-1

We will look at this together with producer partitioning in the next part.
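For comparison, the round-robin idea for a single topic can be sketched as dealing partitions out one at a time over the sorted consumers. This is a simplification under that assumption (the real assignor works across all subscribed topics); `RoundRobinAssignmentSketch` and `assign` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

class RoundRobinAssignmentSketch {
    /** Deals partitions 0..numPartitions-1 of one topic out to the sorted consumers in turn. */
    static Map<String, List<Integer>> assign(int numPartitions, List<String> consumers) {
        List<String> sorted = new ArrayList<>(new TreeSet<>(consumers));
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        for (String consumer : sorted) {
            result.put(consumer, new ArrayList<>());
        }
        for (int partition = 0; partition < numPartitions; partition++) {
            String owner = sorted.get(partition % sorted.size());
            result.get(owner).add(partition);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> group = Arrays.asList("consumer-1", "consumer-2");
        System.out.println(assign(3, group)); // {consumer-1=[0, 2], consumer-2=[1]}
    }
}
```

For one topic with three partitions, range gives one consumer the contiguous block 0 and 1, while round robin gives it 0 and 2; the totals per consumer are the same.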

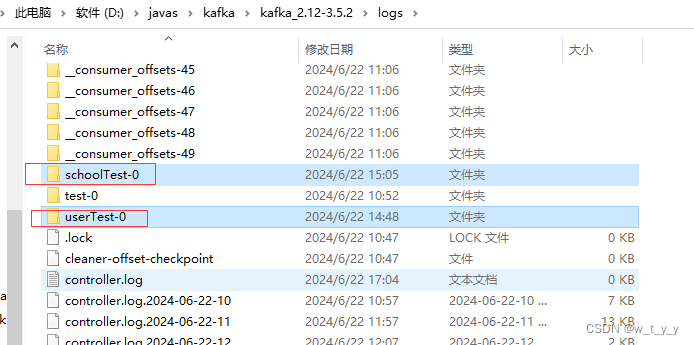

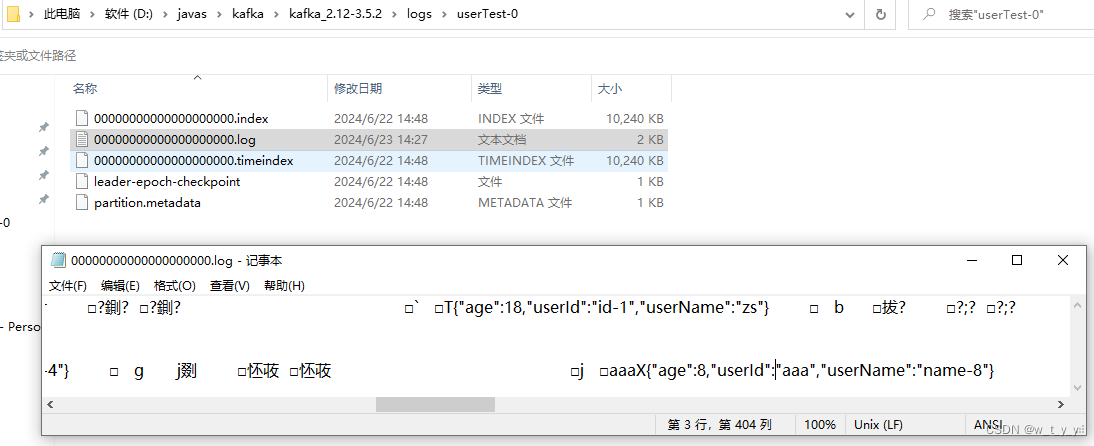

III. Producer partitions

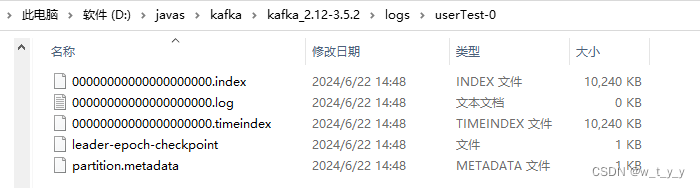

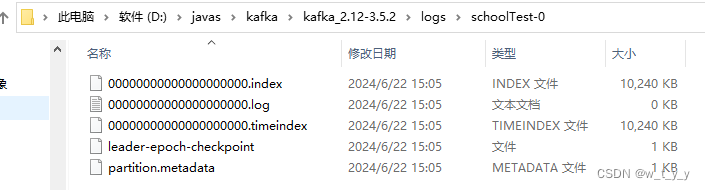

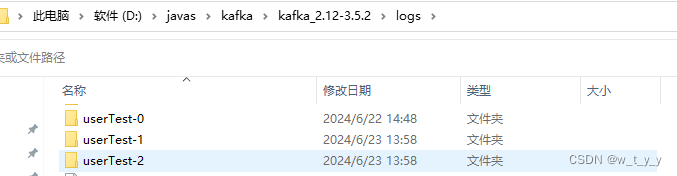

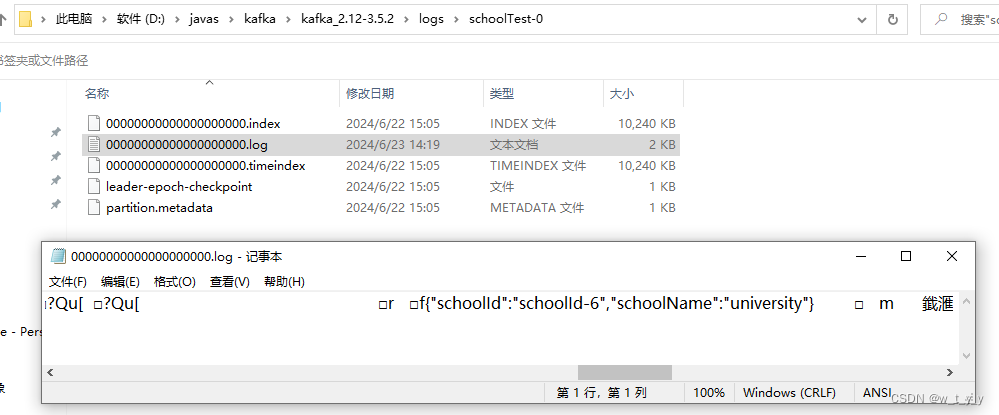

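Above there is only one partition, so each of the two topics has a single data directory. Here are the data files:

1. Changing the number of partitions of a topic

Note:

The partition count is set when the topic is created; if it is not set, it defaults to 1. For example, if I simply change the sending code to target partition 1,

it fails with:

org.apache.kafka.common.KafkaException: Invalid partition given with record: 1 is not in the range [0...1).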

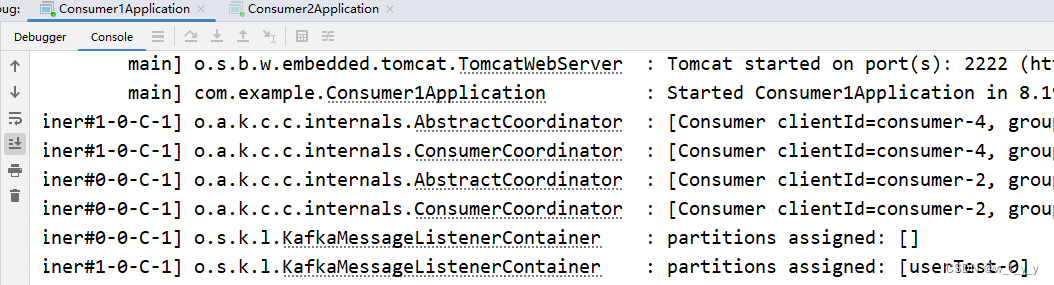

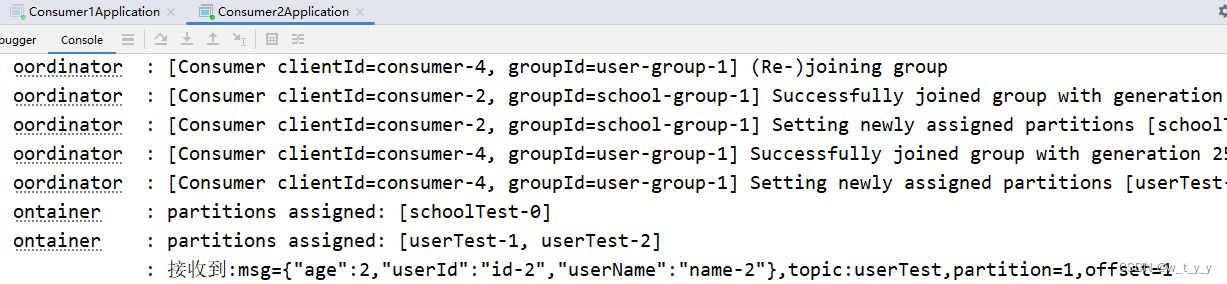

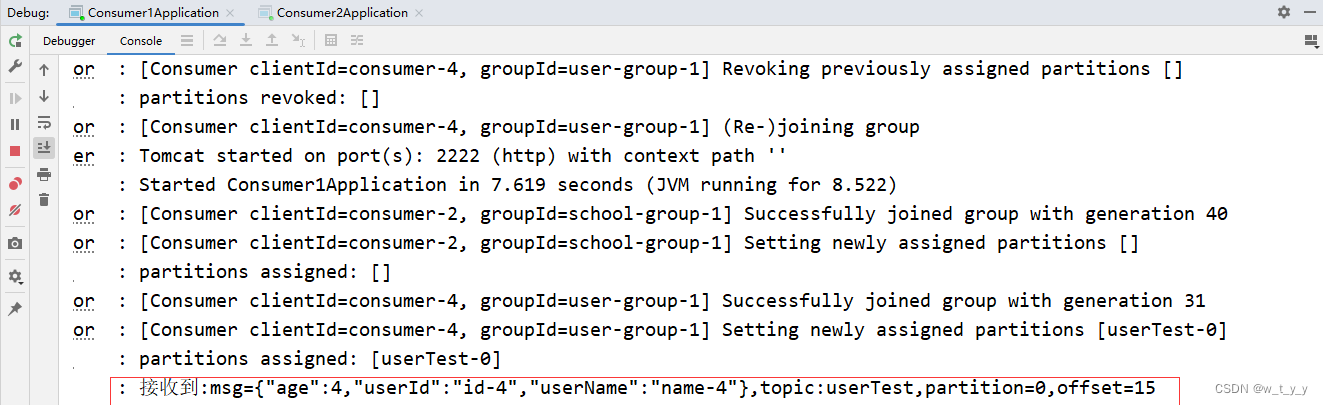

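Partitions therefore have to be added to the existing topic. There are two ways: with a command (or a visual management UI), or from Java code. After the change, restart the consumers as well; otherwise they do not receive messages from the new partitions. The startup log also shows the topic-partitions assigned to each consumer, for example when I start consumer-2 first and then consumer-1:

1.1 Running a Kafka command or using a Kafka visual management platform

Here I ran the command, changing the topic to 3 partitions:

D:\javas\kafka\kafka_2.12-3.5.2\bin\windows> kafka-topics.bat --bootstrap-server localhost:9092 --alter --topic userTest --partitions 3

After running it, several new data files appear.

1.2 Changing it from Java code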

import org.apache.kafka.clients.admin.AdminClient;
import org.apache.kafka.clients.admin.AdminClientConfig;
import org.apache.kafka.clients.admin.NewPartitions;

import java.util.Collections;
import java.util.Properties;
import java.util.concurrent.ExecutionException;

public class KafkaTopicUpdater {

    public static void main(String[] args) throws ExecutionException, InterruptedException {
        Properties config = new Properties();
        config.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
        // try-with-resources closes the client even if the request fails
        try (AdminClient adminClient = AdminClient.create(config)) {
            // createTopics() fails for a topic that already exists;
            // createPartitions() raises the partition count of an existing topic (here to 3)
            adminClient.createPartitions(
                    Collections.singletonMap("userTest", NewPartitions.increaseTo(3)))
                    .all()
                    .get();
        }
    }

}

2. Partitioning methods

(All consumer results below were obtained with the StickyAssignor assignment strategy.)

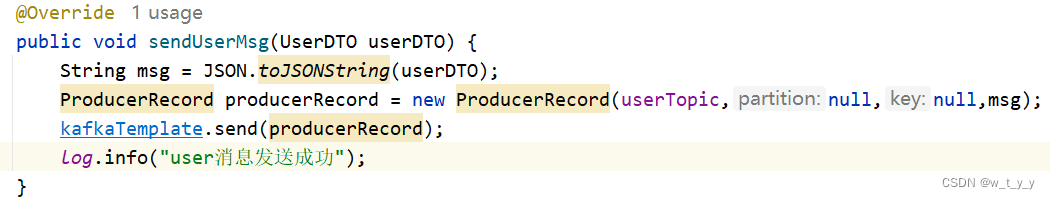

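In the producer code, replace the previously used constructor

ProducerRecord(String topic, V value)

with:

ProducerRecord(String topic, Integer partition, K key, V value):

2.1 Specifying the partition

If a partition is specified when sending, that partition is used.

ProducerRecord<String, String> producerRecord = new ProducerRecord<>(userTopic, 1, null, msg);

Consumers:

consumer-2 received the message; consumer-1 did not.

Change the partition to 0:

ProducerRecord<String, String> producerRecord = new ProducerRecord<>(userTopic, 0, null, msg);

consumer-1 received the message; consumer-2 did not.

2.2 Round robin

partition=null && key=null

ProducerRecord<String, String> producerRecord = new ProducerRecord<>(userTopic, null, null, msg);

Check the message output: the partition cycles through 0, 1, and 2. (This is the default partitioner's behavior in the older kafka-clients version used here; since kafka-clients 2.4, records without a key stick to one partition per batch instead of alternating record by record.)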

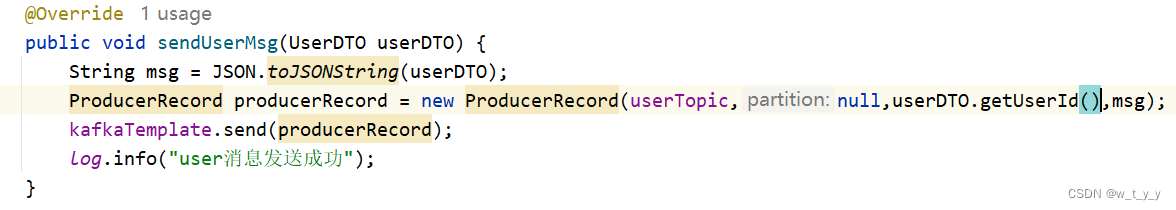

2.3 Key hash partitioning

The partition is computed from a hash of the message key: partition=null && key != null

ProducerRecord<String, String> producerRecord = new ProducerRecord<>(userTopic, null, userDTO.getUserId(), msg);

Check the message output:
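The key-to-partition mapping can be illustrated with a small sketch. It is only an approximation: Kafka's default partitioner hashes the serialized key bytes with murmur2, whereas this sketch swaps in `Arrays.hashCode` so that it runs with the JDK alone. `KeyHashPartitionSketch` and `partitionFor` are hypothetical names, and the partition numbers it prints will not match the ones Kafka picks.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

class KeyHashPartitionSketch {
    /** Maps a key to a partition in [0, numPartitions); equal keys always map to the same partition. */
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8); // what StringSerializer would produce
        int hash = Arrays.hashCode(keyBytes);                   // stand-in for Kafka's murmur2 hash
        return Math.floorMod(hash, numPartitions);              // floorMod keeps the result non-negative
    }

    public static void main(String[] args) {
        int partitions = 3;
        for (String key : new String[] {"id-1", "id-2", "id-1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, partitions));
        }
    }
}
```

The point to take away is the property rather than the numbers: the same userId is always routed to the same partition as long as the partition count does not change.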

2.4 Custom partitioning strategy (i.e. a custom Partitioner)

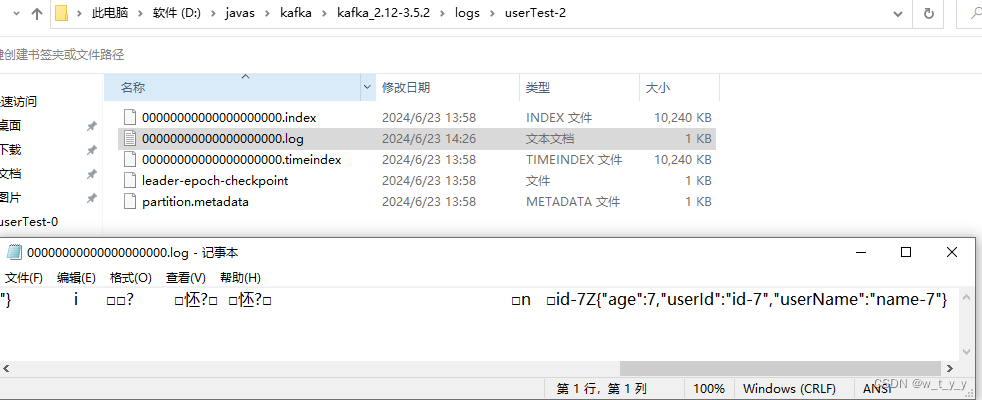
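As an illustration of what a custom rule might look like, the sketch below shows only the decision logic as a plain function. The rule itself is hypothetical (keys starting with `vip-` get partition 0 to themselves, everything else is spread over the remaining partitions), as are the names `CustomPartitionLogicSketch` and `choosePartition`. In a real producer this logic would sit in the `partition(...)` method of a class implementing `org.apache.kafka.clients.producer.Partitioner`, registered through the producer's `partitioner.class` property.

```java
class CustomPartitionLogicSketch {
    /**
     * Hypothetical routing rule: "vip-" keys go to partition 0,
     * all other keys are hashed over partitions 1..numPartitions-1.
     */
    static int choosePartition(String key, int numPartitions) {
        if (key == null || numPartitions < 2) {
            return 0; // nothing to choose from, or no key to route on
        }
        if (key.startsWith("vip-")) {
            return 0;
        }
        return 1 + Math.floorMod(key.hashCode(), numPartitions - 1);
    }

    public static void main(String[] args) {
        System.out.println("vip-1 -> " + choosePartition("vip-1", 3)); // vip-1 -> 0
        System.out.println("id-1  -> " + choosePartition("id-1", 3));
    }
}
```

Keeping the rule in a pure function like this makes it easy to unit test before wiring it into a Partitioner.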

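3. Inspecting the data files

userTest-0 partition:

userTest-1 partition:

userTest-2 partition:

schoolTest-0 partition:

IV. Message types

1. Asynchronous messages

Sending is asynchronous by default.

1.1 Plain asynchronous send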

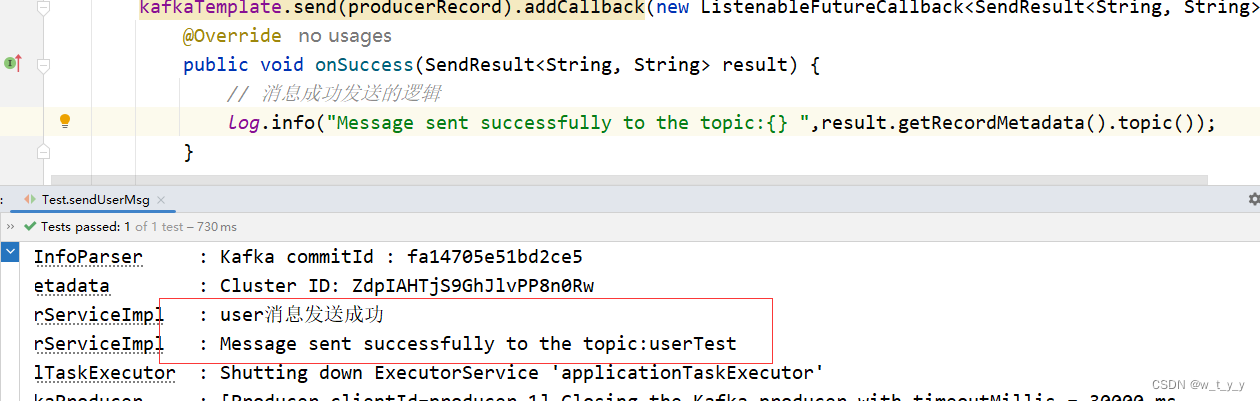

kafkaTemplate.send(producerRecord);

1.2 Asynchronous send with a callback

package com.example.service.impl;

import com.alibaba.fastjson.JSON;

import com.example.dto.UserDTO;

import com.example.service.UserService;

import lombok.extern.slf4j.Slf4j;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.kafka.support.SendResult;

import org.springframework.stereotype.Service;

import org.springframework.util.concurrent.ListenableFutureCallback;

import java.util.concurrent.ExecutionException;

@Service("userService")

@Slf4j

public class UserServiceImpl implements UserService {

@Autowired

private KafkaTemplate<String, String> kafkaTemplate;

@Value("${user.topic}")

private String userTopic;

@Override

    public void sendUserMsg(UserDTO userDTO) {
        String msg = JSON.toJSONString(userDTO);
        ProducerRecord<String, String> producerRecord = new ProducerRecord<>(userTopic, null, userDTO.getUserId(), msg);

        // 1.1 Plain asynchronous send
        //kafkaTemplate.send(producerRecord);

        // 1.2 Asynchronous send with a callback
        kafkaTemplate.send(producerRecord).addCallback(new ListenableFutureCallback<SendResult<String, String>>() {
            @Override
            public void onSuccess(SendResult<String, String> result) {
                // Logic for a successfully sent message
                log.info("Message sent successfully to the topic:{} ", result.getRecordMetadata().topic());
            }

            @Override
            public void onFailure(Throwable ex) {
                // Logic for a failed send
                log.error("Error sending message:{}", ex.getMessage());
            }
        });

        // 2. Synchronous send
        /*try {
            kafkaTemplate.send(producerRecord).get();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        } catch (ExecutionException e) {
            throw new RuntimeException(e);
        }*/

        // The send above is asynchronous, so this only means the record was handed to the producer
        log.info("user message submitted");
    }

}
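The three ways of using the returned future (ignore it, attach a callback, block on it) can be tried without a broker. The sketch below uses the JDK's `CompletableFuture` as a stand-in for the `ListenableFuture` returned by `kafkaTemplate.send(...)`; `SendModesSketch` and its `send` method are hypothetical and only simulate an acknowledgement on another thread.

```java
import java.util.concurrent.CompletableFuture;

class SendModesSketch {
    /** Hypothetical stand-in for kafkaTemplate.send(...): completes on a background thread. */
    static CompletableFuture<String> send(String message) {
        return CompletableFuture.supplyAsync(() -> "acked:" + message);
    }

    public static void main(String[] args) {
        // Plain asynchronous send: the future is ignored, so failures go unnoticed.
        send("m1");

        // Asynchronous send with a callback: the caller continues, the callback runs on completion.
        CompletableFuture<String> withCallback = send("m2").whenComplete((result, error) -> {
            if (error == null) {
                System.out.println("callback success: " + result);
            } else {
                System.out.println("callback failure: " + error.getMessage());
            }
        });

        // Synchronous send: block until the result is available.
        String result = send("m3").join();
        System.out.println("sync result: " + result);

        withCallback.join(); // only so the demo does not exit before the callback has run
    }
}
```

Blocking on every send is the simplest way to know the outcome, at the cost of throughput; the callback form keeps the caller free while still handling failures.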

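2. Synchronous messages

Appending get() makes the send synchronous:

kafkaTemplate.send(producerRecord).get();

3. Ordered messages

Kafka preserves order only within a single partition. For example, to have messages with the same age received in the order they were sent, use age as the key so that records with the same age go to the same partition:

ProducerRecord<String, String> producerRecord = new ProducerRecord<>(userTopic, null, String.valueOf(userDTO.getAge()), msg);

kafkaTemplate.send(producerRecord);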