1.数据仓库的数据来源为业务数据库(mysql)

2.通过sqoop将mysql中的业务数据导入到大数据平台(hive)

3.通过hive进行数据计算和数据分析 形成数据报表

4.再通过sqoop将数据报表导出到mysql

5.使用FineReport制作数据报表

1.数据仓库的数据来源为业务数据库(mysql)

-- 测试sqoop是否能够连接mysql

sqoop list-databases --connect jdbc:mysql://bigdata004:3306/ --username root --password root123初始化脚本

init_mysql.sql 在navicat中新建查询 ,运行

sql

-- 设置sql_mode

set sql_mode = 'NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES';

-- 创建数据库mall

create database mall;

-- 切换数据库

use mall;

-- 创建用户信息表

CREATE TABLE t_user_info(

user_id varchar(100) not null,

user_name varchar(100) not null,

sex varchar(10) not null,

age int not null,

country_code varchar(100) not null,

province_code varchar(100) not null,

city_code varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建订单表

CREATE TABLE t_sale_order(

sale_id varchar(100) not null,

user_id varchar(100) not null,

goods_id varchar(100) not null,

price int not null,

sale_count int not null,

total_price int not null,

create_time varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建商品信息表

CREATE TABLE dim_goods_info(

goods_id varchar(100) not null,

goods_name varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建国家信息表

CREATE TABLE dim_country_info(

country_code varchar(100) not null,

country_name varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建省份信息表

CREATE TABLE dim_province_info(

province_code varchar(100) not null,

province_name varchar(100) not null,

country_code varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建城市信息表

CREATE TABLE dim_city_info(

city_code varchar(100) not null,

city_name varchar(100) not null,

province_code varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建用户浏览日志表

CREATE TABLE t_access_log(

log_str varchar(500) not null

)DEFAULT CHARSET='utf8';

-- 创建商品类别表

CREATE TABLE dim_goods_type(

goods_id varchar(100) not null,

type_id varchar(100) not null,

type_name varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 用户信息表插入数据

insert into t_user_info values('c001','王小名','男',22,'86','32','320100');

insert into t_user_info values('c002','李虎','男',40,'86','32','320200');

insert into t_user_info values('c003','韩静','女',26,'86','32','320600');

insert into t_user_info values('c004','董冬','男',35,'86','32','321100');

insert into t_user_info values('c005','张茗','男',21,'86','32','321200');

insert into t_user_info values('c006','张一凡','男',56,'86','32','321300');

insert into t_user_info values('c007','王花','女',20,'86','32','320100');

insert into t_user_info values('c008','刘梦','女',31,'86','32','320600');

insert into t_user_info values('u001','peter','男',30,'1','12','2233');

insert into t_user_info values('u002','rose','女',22,'1','08','2345');

insert into t_user_info values('u003','jack','男',26,'1','02','3663');

insert into t_user_info values('u004','marel','男',31,'1','11','4567');

commit;

-- 订单表插入数据

insert into t_sale_order values('s001','c002','g005',1099,1,1099,'2022-11-08 09:23:54');

insert into t_sale_order values('s002','c002','g001',3000,2,6000,'2022-11-08 10:12:36');

insert into t_sale_order values('s003','c004','g006',2899,1,2899,'2022-11-08 09:23:54');

insert into t_sale_order values('s004','u001','g001',3000,1,3000,'2022-11-08 08:01:21');

insert into t_sale_order values('s005','u002','g002',100,3,300,'2022-11-08 13:40:00');

insert into t_sale_order values('s006','c006','g009',299,1,299,'2022-11-08 08:11:20');

insert into t_sale_order values('s007','u003','g005',1099,1,1099,'2022-11-08 15:01:33');

insert into t_sale_order values('s008','c006','g004',3000,1,3000,'2022-11-08 17:08:01');

insert into t_sale_order values('s009','c005','g008',10,8,80,'2022-11-08 12:08:23');

insert into t_sale_order values('s010','c006','g002',100,1,100,'2022-11-08 22:23:14');

insert into t_sale_order values('s011','c006','g007',99,10,999,'2022-11-08 23:07:42');

insert into t_sale_order values('s012','c007','g007',99,1,99,'2022-11-08 06:51:03');

commit;

-- 商品信息表插入数据

insert into dim_goods_info values('g001','OPPO K9x 5G全网通手机');

insert into dim_goods_info values('g002','儿童历史地理大百科全书 绘本礼盒典藏全40册');

insert into dim_goods_info values('g003','欧珀莱 AUPRES 时光锁小紫钻抗皱紧实眼霜');

insert into dim_goods_info values('g004','苏泊尔(SUPOR)净水器家用超滤软水机');

insert into dim_goods_info values('g005','小米粽 平板电脑');

insert into dim_goods_info values('g006','GoPro HERO11 Black运动相机');

insert into dim_goods_info values('g007','云南实建褚橙冰糖橙');

insert into dim_goods_info values('g008','四色蓝泡泡洁厕');

insert into dim_goods_info values('g009','奥康男鞋');

commit;

-- 国家信息表插入数据

insert into dim_country_info values('1','美国');

insert into dim_country_info values('65','新加坡');

insert into dim_country_info values('81','日本');

insert into dim_country_info values('61','澳大利亚');

insert into dim_country_info values('54','阿根廷');

insert into dim_country_info values('55','巴西');

insert into dim_country_info values('45','丹麦');

insert into dim_country_info values('86','中国');

commit;

-- 省份信息表插入数据

insert into dim_province_info values('11','北京市','86');

insert into dim_province_info values('12','天津市','86');

insert into dim_province_info values('31','上海市','86');

insert into dim_province_info values('50','重庆市','86');

insert into dim_province_info values('13','河北省','86');

insert into dim_province_info values('41','河南省','86');

insert into dim_province_info values('53','云南省','86');

insert into dim_province_info values('21','辽宁省','86');

insert into dim_province_info values('23','湖南省','86');

insert into dim_province_info values('43','黑龙江省','86');

insert into dim_province_info values('34','安徽省','86');

insert into dim_province_info values('37','山东省','86');

insert into dim_province_info values('65','新疆维吾尔自治区','86');

insert into dim_province_info values('32','江苏省','86');

insert into dim_province_info values('33','浙江省','86');

insert into dim_province_info values('36','江西省','86');

commit;

-- 城市信息表插入数据

insert into dim_city_info values('320100','南京市','32');

insert into dim_city_info values('320200','无锡市','32');

insert into dim_city_info values('320300','徐州市','32');

insert into dim_city_info values('320400','常州市','32');

insert into dim_city_info values('320500','苏州市','32');

insert into dim_city_info values('320600','南通市','32');

insert into dim_city_info values('320700','连云港市','32');

insert into dim_city_info values('320800','淮安市','32');

insert into dim_city_info values('320900','盐城市','32');

insert into dim_city_info values('321000','扬州市','32');

insert into dim_city_info values('321100','镇江市','32');

insert into dim_city_info values('321200','泰州市','32');

insert into dim_city_info values('321300','宿迁市','32');

commit;

-- 用户浏览日志表插入数据

insert into t_access_log values('{"user_id": "c001","productId": "g002","productName": "儿童历史地理大百科全书 绘本礼盒典藏全40册","viewTimestamp": "2022-11-07 13:42:38"}');

insert into t_access_log values('{"user_id": "c006","productId": "g007","productName": "云南实建褚橙冰糖橙","viewTimestamp": "2022-11-09 01:02:18"}');

insert into t_access_log values('{"user_id": "c002","productId": "g001","productName": "OPPO K9x 5G全网通手机","viewTimestamp": "2022-11-07 11:02:28"}');

insert into t_access_log values('{"user_id": "c006","productId": "g001","productName": "OPPO K9x 5G全网通手机","viewTimestamp": "2022-11-09 13:01:05"}');

insert into t_access_log values('{"user_id": "c008","productId": "g005","productName": "小米粽 平板电脑","viewTimestamp": "2022-11-09 01:02:18"}');

insert into t_access_log values('{"user_id": "c006","productId": "g007","productName": "云南实建褚橙冰糖橙","viewTimestamp": "2022-11-09 01:02:18"}');

insert into t_access_log values('{"user_id": "u001","productId": "g001","productName": "OPPO K9x 5G全网通手机","viewTimestamp": "2022-11-08 08:45:00"}');

insert into t_access_log values('{"user_id": "u003","productId": "g005","productName": "小米粽 平板电脑","viewTimestamp": "2022-11-09 01:02:18"}');

insert into t_access_log values('{"user_id": "u001","productId": "g006","productName": "GoPro HERO11 Black运动相机","viewTimestamp": "2022-11-07 09:06:27"}');

insert into t_access_log values('{"user_id": "u001","productId": "g002","productName": "儿童历史地理大百科全书 绘本礼盒典藏全40册","viewTimestamp": "2022-11-09 08:02:29"}');

commit;

-- 商品类别表插入数据

insert into dim_goods_type values('g001','1','3C产品');

insert into dim_goods_type values('g002','2','书籍');

insert into dim_goods_type values('g003','3','日用品');

insert into dim_goods_type values('g004','4','家电');

insert into dim_goods_type values('g005','1','3C产品');

insert into dim_goods_type values('g006','1','3C产品');

insert into dim_goods_type values('g007','5','水果');

insert into dim_goods_type values('g008','3','日用品');

insert into dim_goods_type values('g009','6','鞋帽');

commit;init_result.sql

sql

-- 设置sql_mode

set sql_mode = 'NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES';

-- 创建数据库result,并进行切换

create database result;

use result;

-- 创建城市订单总额表

CREATE TABLE t_city_sale_total(

city_name varchar(100) not null,

city_total_price int not null

)DEFAULT CHARSET='utf8';

-- 创建商品类别浏览量表

CREATE TABLE t_goods_type_view_count(

goods_type varchar(100) not null,

view_count int not null

)DEFAULT CHARSET='utf8';数据库搭建完成

2.通过sqoop将mysql中的业务数据导入到大数据平台(hive)

在hive中建立映射的数据库

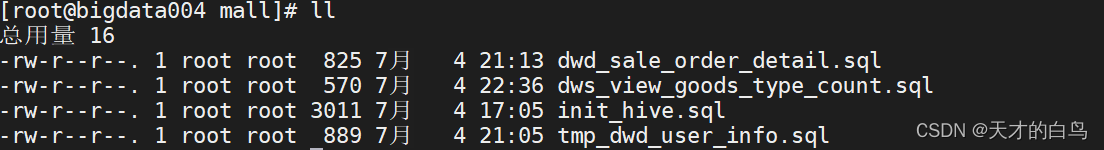

init_hive.sql 在beeline中运行

表的格式和mysql中的一一对应

sql

--创建数据库mall_bigdata

create database if not exists mall_bigdata;

--切换数据库至mall_bigdata

use mall_bigdata;

--创建用户信息表

create table if not exists mall_bigdata.ods_user_info

(

user_id STRING comment "用户id"

,user_name STRING comment "用户姓名"

,sex STRING comment "性别"

,age INT comment "年龄"

,country_code STRING comment "国家码"

,province_code STRING comment "省份码"

,city_code STRING comment "城市码"

)

comment "用户信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建订单表

create table if not exists mall_bigdata.ods_sale_order

(

sale_id STRING comment "订单id"

,user_id STRING comment "用户id"

,goods_id STRING comment "商品id"

,price INT comment "单价"

,sale_count INT comment "购买数量"

,total_price INT comment "购买总金额"

,create_time STRING comment "订单生成时间"

)

comment "销售订单表"

row format delimited fields terminated by ","

stored as textfile;

--创建商品信息表

create table if not exists mall_bigdata.dim_goods_info

(

goods_id STRING comment "商品id"

,goods_name STRING comment "商品名称"

)

comment "商品信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建国家信息表

create table if not exists mall_bigdata.dim_country_info

(

country_code STRING comment "国家码"

,country_name STRING comment "国家名称"

)

comment "国家信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建省份信息表

create table if not exists mall_bigdata.dim_province_info

(

province_code STRING comment "省份码"

,province_name STRING comment "省份名称"

,country_code STRING comment "国家码"

)

comment "省份信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建城市信息表

create table if not exists mall_bigdata.dim_city_info

(

city_code STRING comment "城市码"

,city_name STRING comment "城市名称"

,province_code STRING comment "省份码"

)

comment "城市信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建用户浏览日志表

create table if not exists mall_bigdata.ods_access_log

(

log_str STRING comment "浏览日志"

)

comment "用户浏览日志表"

row format delimited fields terminated by "|"

stored as textfile;

--创建商品类别表

create table if not exists mall_bigdata.dim_goods_type

(

goods_id STRING comment "商品id"

,type_id STRING comment "商品类别id"

,type_name STRING comment "商品类别名称"

)

comment "商品类别表"

row format delimited fields terminated by ","

stored as textfile;

导入数据

表的映射关系

|-------------------|-------------------|

| hive | mysql |

| ods_user_info | t_user_info |

| ods_sale_order | t_sale_order |

| dim_goods_info | dim_goods_info |

| dim_country_info | dim_country_info |

| dim_province_info | dim_province_info |

| dim_city_info | dim_city_info |

| ods_access_log | t_access_log |

| dim_goods_type | dim_goods_type |

sqoop import \

虚拟机 端口号 mysql中的数据库名

--connect jdbc:mysql://bigdata004:3306/mall \

--username root \

--password root123 \

mysql中的表名

--table t_user_info \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

hive中的表名

--hive-table mall_bigdata.ods_user_info

sql

sqoop import \

--connect jdbc:mysql://bigdata004:3306/mall \

--username root \

--password root123 \

--table t_user_info \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

--hive-table mall_bigdata.ods_user_info直接在虚拟机运行,每次改变两个表,将所有数据导入到hive中

3.通过hive进行数据计算和数据分析 形成数据报表

用户信息表

sql

user_id STRING comment "用户id"

,user_name STRING comment "用户姓名"

,sex STRING comment "性别"

,age INT comment "年龄"

,country_code STRING comment "国家码"

,province_code STRING comment "省份码"

,city_code STRING comment "城市码"补全用户信息表中的关于用户的所在国家名称,所在省份名称,所在城市名称

sql

--切换数据库

use mall_bigdata;

--补全用户信息表中的关于用户的所在国家名称,所在省份名称,所在城市名称

create table if not exists mall_bigdata.tmp_dwd_user_info

as

select

user_id,

user_name,

sex,

age,

country_name,

province_name,

city_name

from

(select

user_id,

user_name,

sex,

age,

country_code,

province_code,

city_code

from mall_bigdata.ods_user_info) t1

left join

(

select

country_code,

country_name

from dim_country_info

) t2

on t1.country_code = t2.country_code

left join

(

select

province_code,

province_name,

country_code

from dim_province_info

) t3i

on t1.province_code=t3.province_code and t1.country_code= t3.country_code

left join

(

select

city_code,

city_name,

province_code

from dim_city_info

) t4

on t1.city_code=t4.city_code and t1.province_code=t4.province_code;补全订单表中的用户名称和商品名称

过滤中国用户的订单记录

sql

--补全订单表中的用户名称和商品名称

--过滤中国用户的订单记录

--切换数据库

use mall_bigdata;

create table if not exists mall_bigdata.dwd_sale_order_detail

as

select

sale_id,

t1.user_id,

user_name,

sex,

age,

country_name,

province_name,

city_name,

t1.goods_id,

goods_name,

price,sale_count,

total_price,

create_time

from

(

select

sale_id,

user_id,

goods_id,

price,

sale_count,

total_price,

create_time

from ods_sale_order

) t1

left join

(

select

user_id,

user_name,

sex,

age,

country_name,

province_name,

city_name

from tmp_dwd_user_info

) t2

on t1.user_id=t2.user_id

left join

(

select

goods_id,

goods_name

from dim_goods_info

) t3

on t1.goods_id = t3.goods_id

where country_name='中国';计算不同城市的销售总额

sql

--切换数据库

use mall_bigdata;

--计算不同城市的销售总额

create table if not exists mall_bigdata.dws_sale_order_city_total

as

select

city_name,

sum(total_price) as total_price

from dwd_sale_order_detail

group by city_name;提取用户浏览日志表中的商品信息 补全商品的类型

再根据商品类型的不同 计算用户对于不同商品类型的浏览次数

sql

--切换数据库

use mall_bigdata;

--提取用户浏览日志表中的商品信息 补全商品的类型

--再根据商品类型的不同 计算用户对于不同商品类型的浏览次数

create table if not exists mall_bigdata.dws_view_goods_type_count

as

select

type_name,

count(type_name) as view_goods_type_count

from

(

select

get_json_object(log_str,'$.productId') as product_id

from mall_bigdata.ods_access_log

) t1

inner join

(

select

goods_id,

typr_name

from dim_goods_type

) t2

on t1.product_id = t2.goods_id

group by type_name;