rm -r dp-203 -f

git clone https://github.com/MicrosoftLearning/dp-203-azure-data-engineer dp-203

cd dp-203/Allfiles/labs/06

./setup.ps1

python

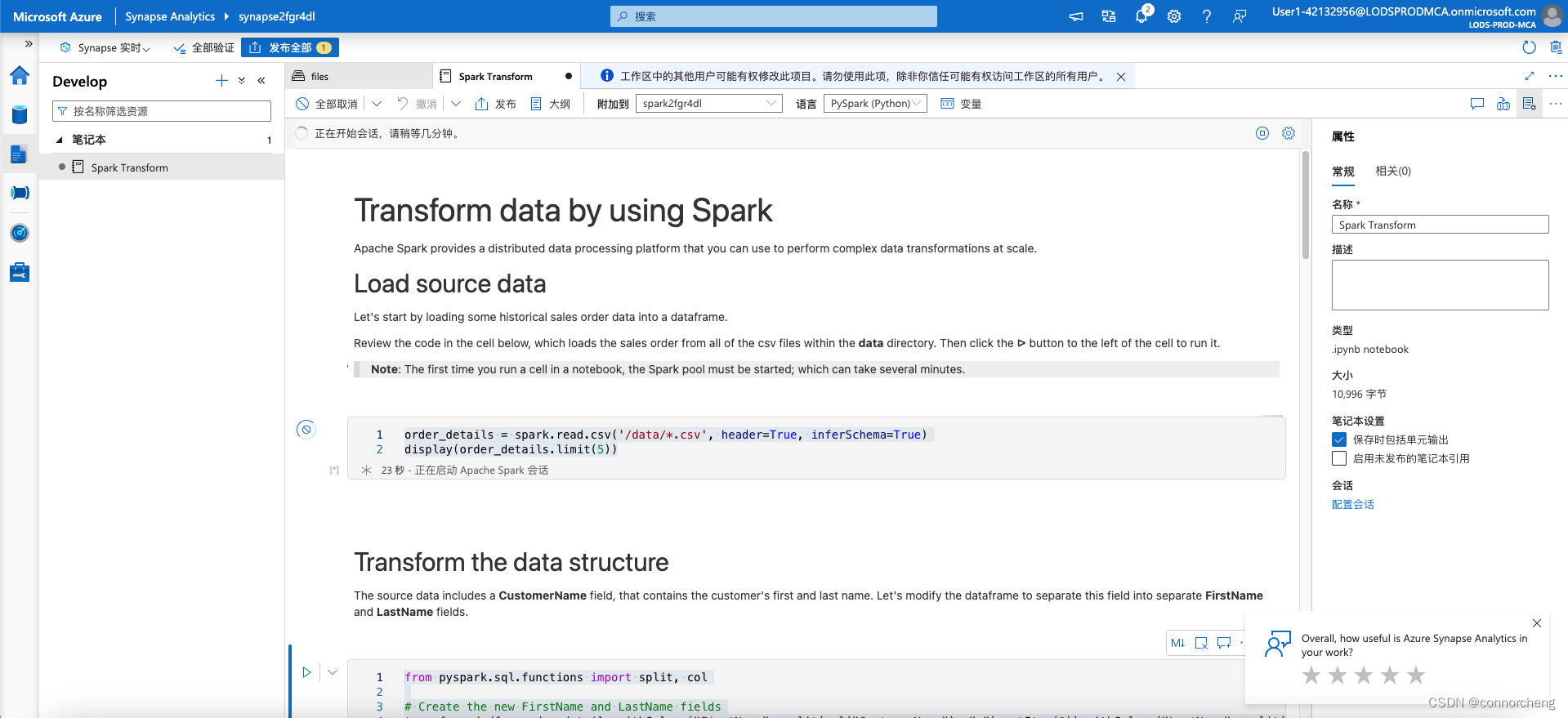

order_details = spark.read.csv('/data/*.csv', header=True, inferSchema=True)

display(order_details.limit(5))

python

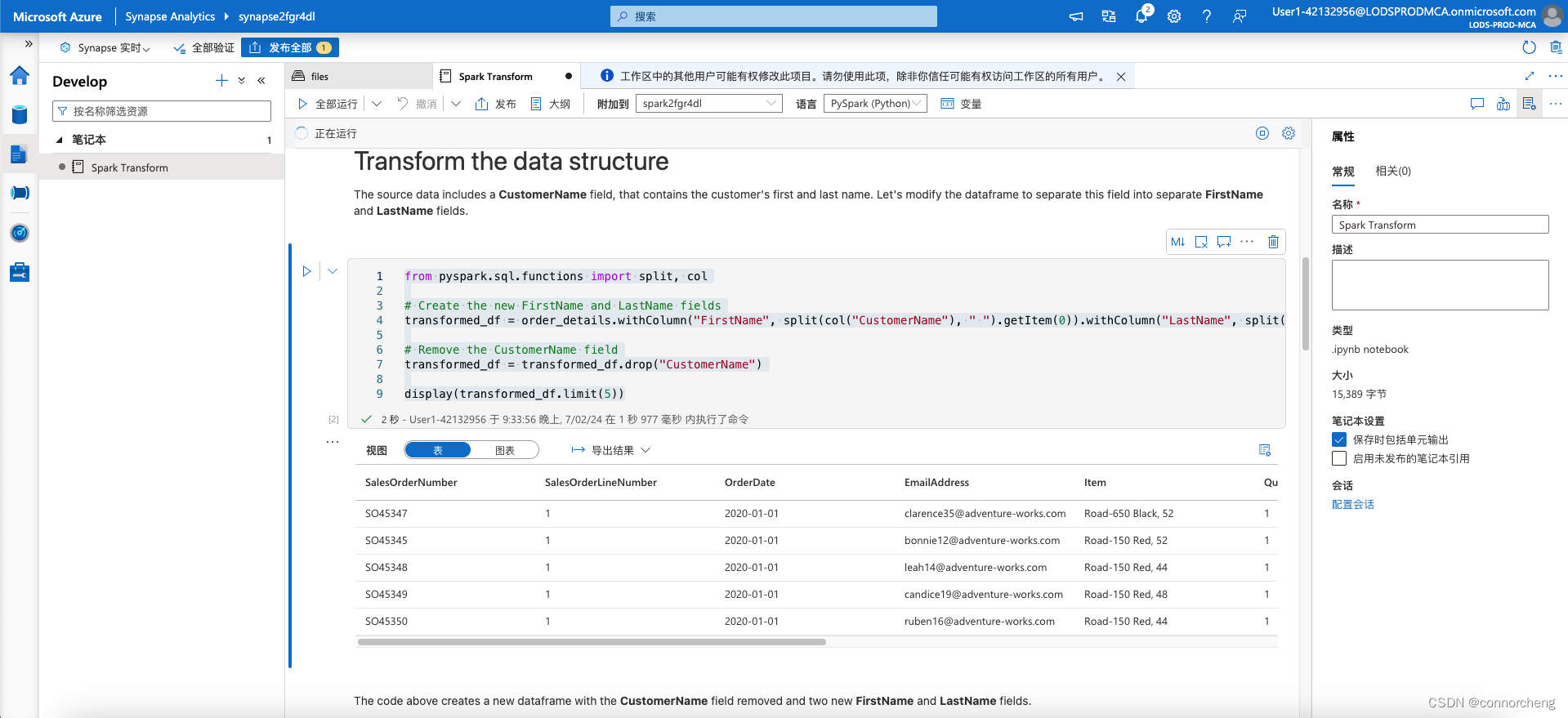

from pyspark.sql.functions import split, col

# Create the new FirstName and LastName fields

transformed_df = order_details.withColumn("FirstName", split(col("CustomerName"), " ").getItem(0)).withColumn("LastName", split(col("CustomerName"), " ").getItem(1))

# Remove the CustomerName field

transformed_df = transformed_df.drop("CustomerName")

display(transformed_df.limit(5))

python

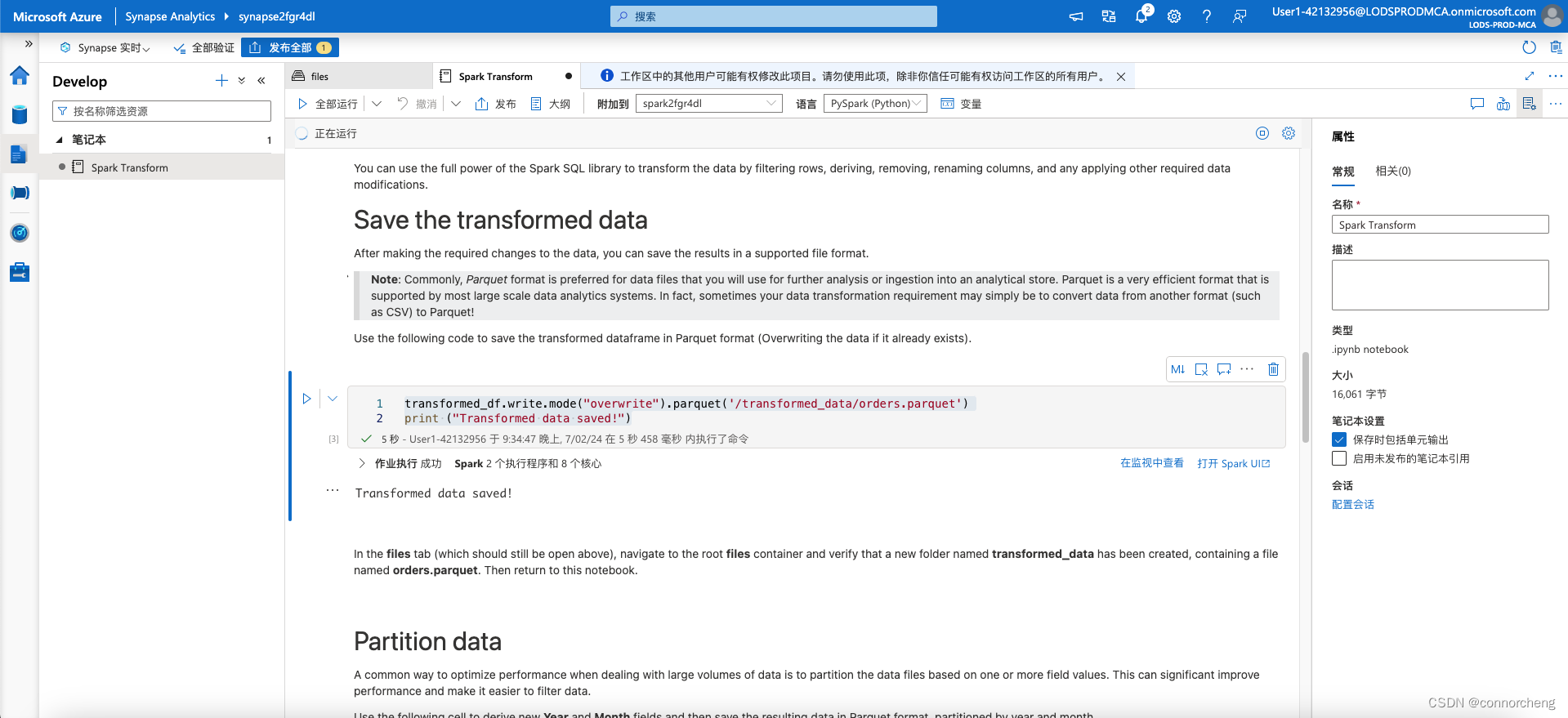

transformed_df.write.mode("overwrite").parquet('/transformed_data/orders.parquet')

print ("Transformed data saved!")

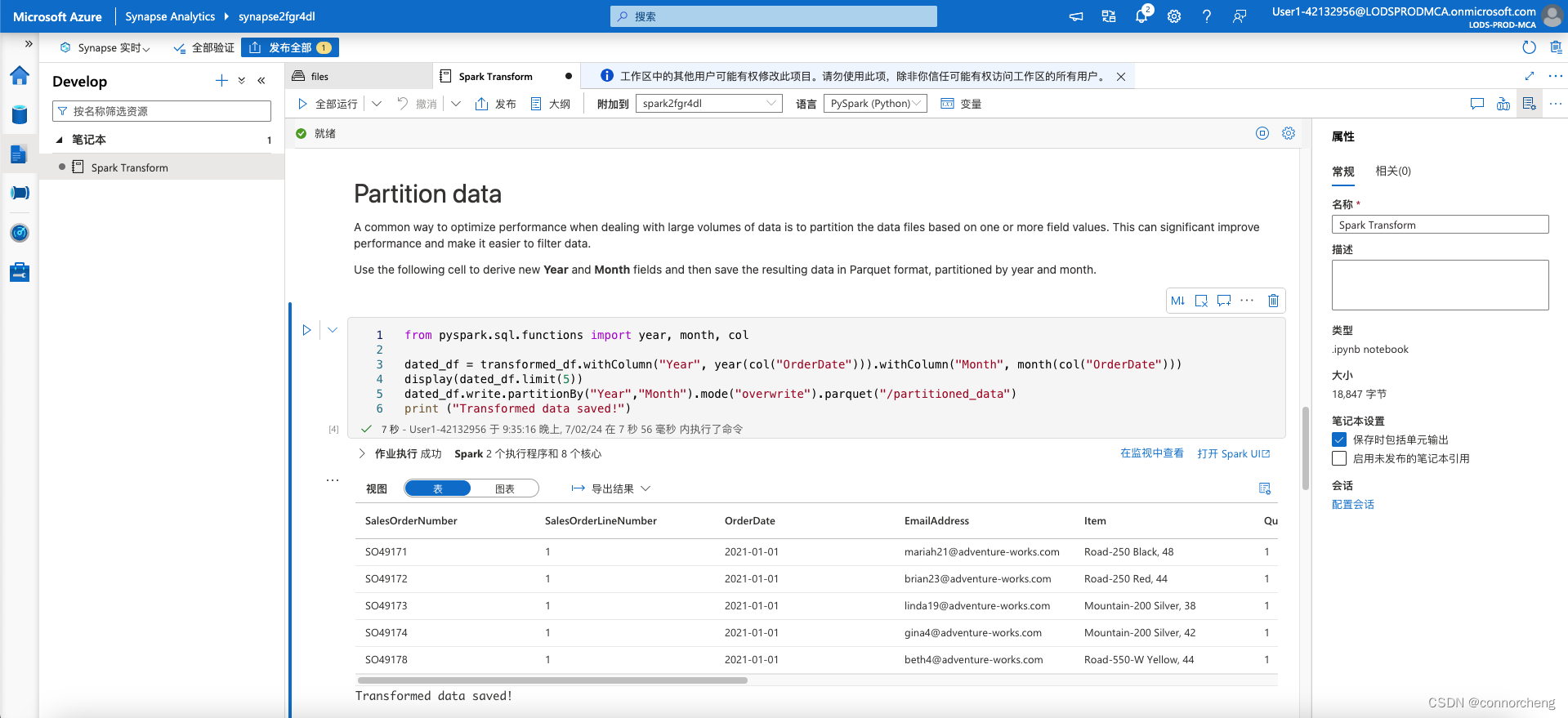

python

from pyspark.sql.functions import year, month, col

dated_df = transformed_df.withColumn("Year", year(col("OrderDate"))).withColumn("Month", month(col("OrderDate")))

display(dated_df.limit(5))

dated_df.write.partitionBy("Year","Month").mode("overwrite").parquet("/partitioned_data")

print ("Transformed data saved!")

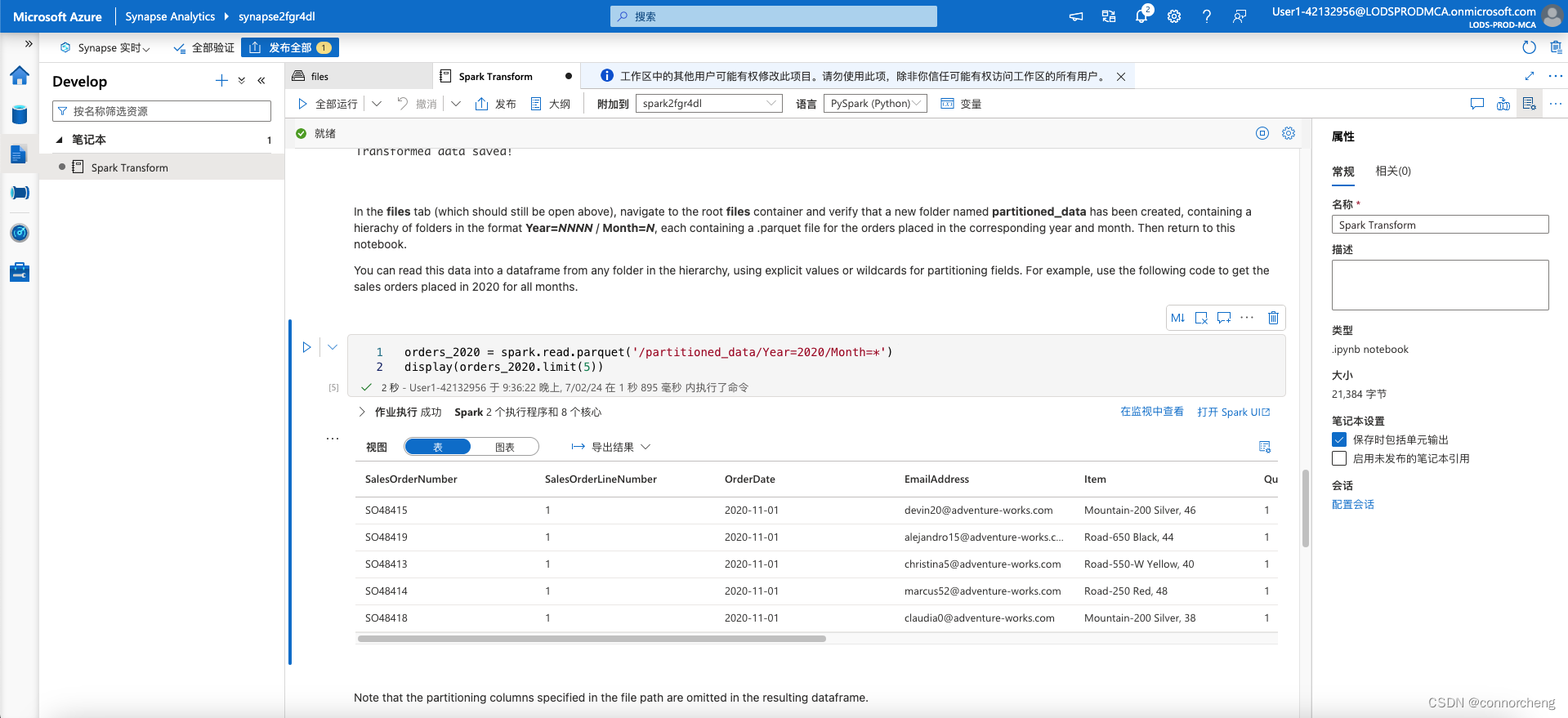

python

orders_2020 = spark.read.parquet('/partitioned_data/Year=2020/Month=*')

display(orders_2020.limit(5))

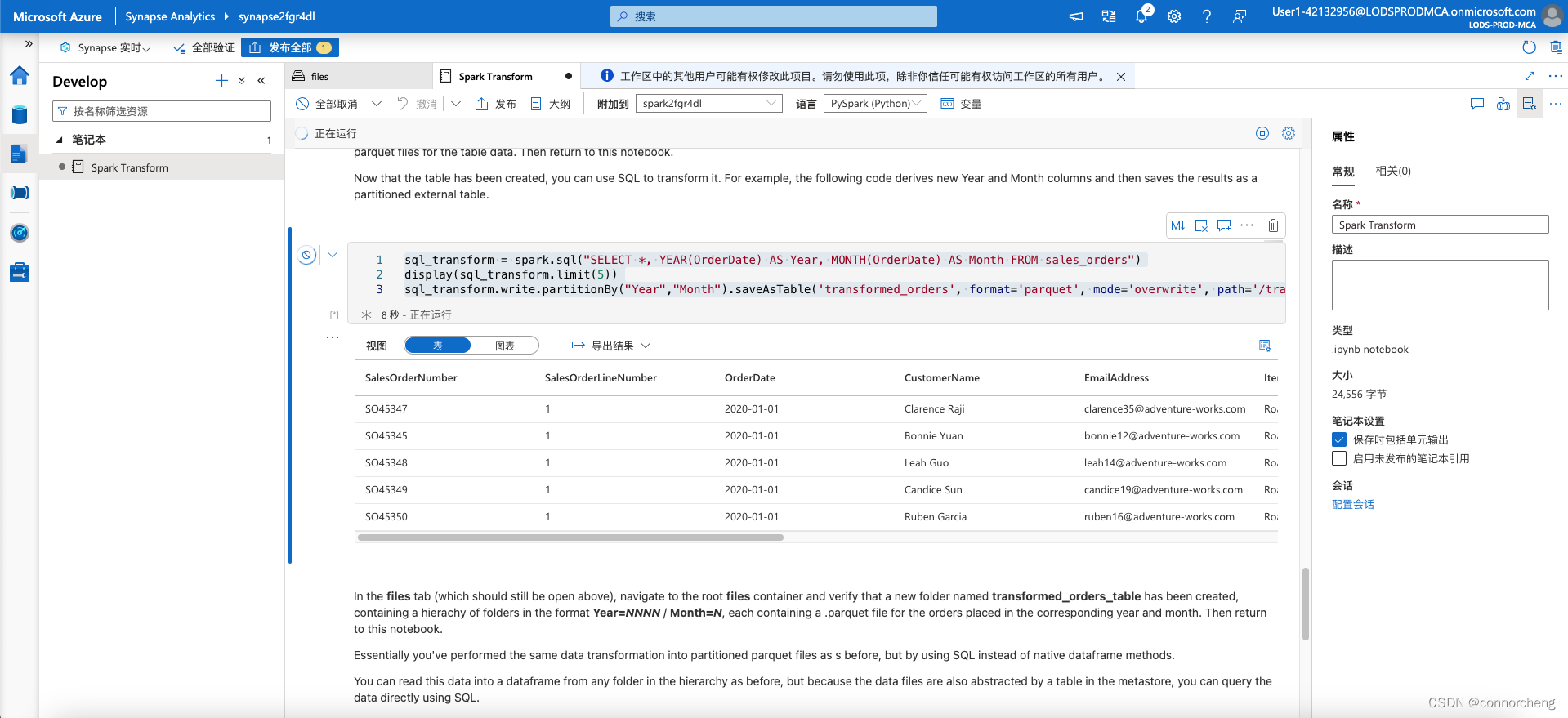

python

order_details.write.saveAsTable('sales_orders', format='parquet', mode='overwrite', path='/sales_orders_table')

python

sql_transform = spark.sql("SELECT *, YEAR(OrderDate) AS Year, MONTH(OrderDate) AS Month FROM sales_orders")

display(sql_transform.limit(5))

sql_transform.write.partitionBy("Year","Month").saveAsTable('transformed_orders', format='parquet', mode='overwrite', path='/transformed_orders_table')

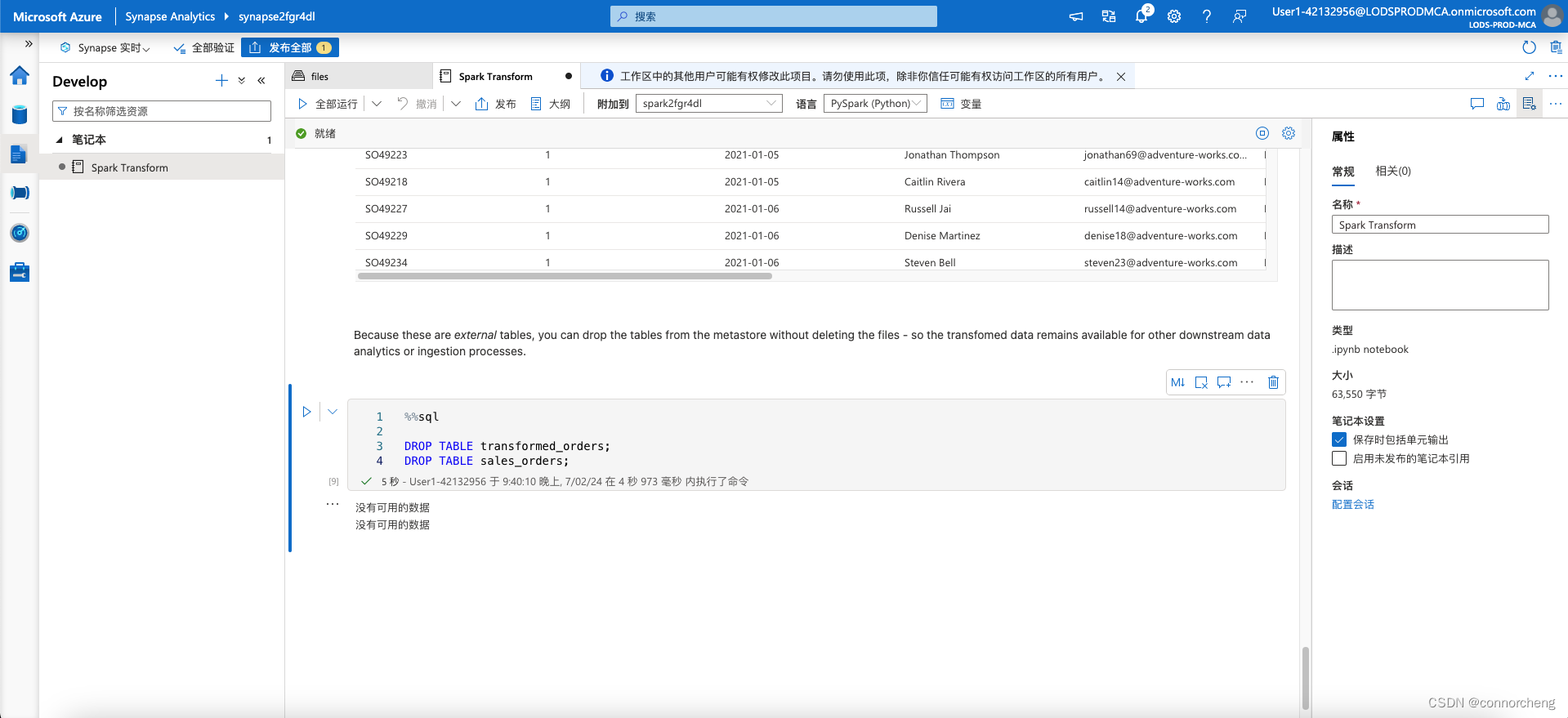

sql

%%sql

SELECT * FROM transformed_orders

WHERE Year = 2021

AND Month = 1

sql

%%sql

DROP TABLE transformed_orders;

DROP TABLE sales_orders;