Troubleshooting

\[root@k8s-master calico\]# kubectl get po -n kube-system

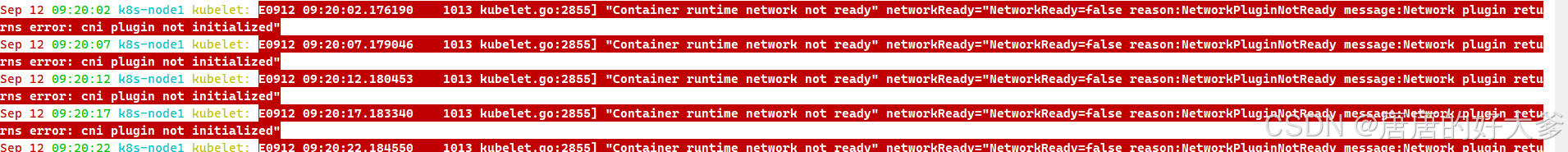

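Wait a while. If the nodes still have not connected, check the error log on the node with vim /var/log/messages and press Shift+G to jump to the last line (if no pod is Pending, the cause is a network problem).

Error 1: On CentOS 7, installing k8s may fail with "hostPath type check failed: /sys/fs/bpf is not a directory". The cause is a kernel that is too old: /sys/fs/bpf exists only in kernel 4.4 and later, so for newer k8s versions it is recommended to upgrade the kernel to 4.4 or above. Here a plain kernel update is tried first; if the error persists, upgrade to 4.4 or above.

Solution: update the kernel and reboot (on all three hosts)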

\[root@k8s-master \~\]# yum -y update kernel

\[root@k8s-master \~\]# reboot

\[root@k8s-master \~\]# uname -r // check the kernel version

3.10.0-1160.119.1.el7.x86_64
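The `uname -r` output above still reports a 3.10 kernel, which is below the 4.4 threshold mentioned for /sys/fs/bpf. A minimal sketch for checking the threshold on each host follows. The helper name `kernel_at_least` is hypothetical, only the leading major.minor part is compared, and the script assumes `sort -V` from GNU coreutils is available.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if kernel version $1 is at least version $2.
# Only the leading "major.minor" part of $1 is compared.
kernel_at_least() {
    local current required lowest
    current=$(printf '%s' "$1" | grep -oE '^[0-9]+\.[0-9]+')
    required=$2
    # sort -V orders version strings; the first line is the lower one.
    lowest=$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n 1)
    [ "$lowest" = "$required" ]
}

if kernel_at_least "$(uname -r)" 4.4; then
    echo "kernel is 4.4 or newer"
else
    echo "kernel is older than 4.4"
fi
```

If the script reports an older kernel and the /sys/fs/bpf error persists, the kernel needs to be upgraded to 4.4 or above as described in Error 1.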

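Error 2: the CNI network plugin is missing

Solution (on all three hosts):

Method 1: When the cluster was reinstalled, the /etc/cni directory was deleted completely, so the kubernetes-cni package has to be reinstalled. A new warning appeared during the reinstall, which the --nogpgcheck flag resolves. Reinstall kubernetes-cni on every node of the cluster to fix the problem.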

yum reinstall -y kubernetes-cni

yum reinstall -y kubernetes-cni --nogpgcheck

Method 2: copy these two files directly into /etc/cni/net.d/

\[root@k8s-node2 \~\]# ls /etc/cni/net.d/

10-calico.conflist calico-kubeconfig

\[root@k8s-node2 \~\]# cat /etc/cni/net.d/10-calico.conflist

{

"name": "k8s-pod-network",

"cniVersion": "0.3.1",

"plugins": \[

{

"type": "calico",

"log_level": "info",

"log_file_path": "/var/log/calico/cni/cni.log",

"datastore_type": "kubernetes",

"nodename": "k8s-node2",

"mtu": 0,

"ipam": {

"type": "calico-ipam"

},

"policy": {

"type": "k8s"

},

"kubernetes": {

"kubeconfig": "/etc/cni/net.d/calico-kubeconfig"

}

},

{

"type": "portmap",

"snat": true,

"capabilities": {"portMappings": true}

},

{

"type": "bandwidth",

"capabilities": {"bandwidth": true}

}

\]

}

\[root@k8s-node2 \~\]# cat /etc/cni/net.d/calico-kubeconfig

\# Kubeconfig file for Calico CNI plugin. Installed by calico/node.

apiVersion: v1

kind: Config

clusters:

\- name: local

cluster:

server: https://10.96.0.1:443

certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lJUW5yNTh0RUF2QkF3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TkRBNU1URXdPVEk0TWpCYUZ3MHpOREE1TURrd09UTXpNakJhTUJVeApFekFSQmdOVkJBTVRDbXQxWW1WeWJtVjBaWE13Z2dFaU1BMEdDU3FHU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLCkFvSUJBUURST2QyM1lpZTJ4MUlRZkdEZi8zMHc5RnhCNGdWTmNSU0dKNE1QOGp2eUxHeWhSUVZRbTlldnE4cEQKaVEwOHhidmI1aFZMZFNkOURIWGVqUHhMQWVyMEU0MTBTc3ZFV0hDQkxVMHNUdkZlVUovb3FZSk5wV0lxeVd0dwpnaTlhZCttajV6RXRwemtwR2RrWHliVFRxK0tKbnNFWHZRdkRIbURFcEZlQWJ4Q3NGNVdxZkdoNnBGWFhmeEt3CjByWmU5cWdWdWU5ZXU4RzUvSit1WkFQNGZPTStPMitHUU44TmM4RVQ0eDhoZWNQamJsWmVXYVlNYUlUMHVVYWsKSi93b0hBU1ZFeFBraVhnUHpTWnc5NkN6dHUyNzdubzUvRG1QanJYTkFGOUZoL0krZTZOeGJoSnBabEM2VnhiSApnTWEzamlKTjdyVHNHLzU4OGppdnZrVmVSLzVWQWdNQkFBR2pXVEJYTUE0R0ExVWREd0VCL3dRRUF3SUNwREFQCkJnTlZIUk1CQWY4RUJUQURBUUgvTUIwR0ExVWREZ1FXQkJUajNUUTZVVEt6U29PcDNwVUJlL3dsbGVsWkRUQVYKQmdOVkhSRUVEakFNZ2dwcmRXSmxjbTVsZEdWek1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQVpOZDZRMlVuLwp1cnZIY1BENytJTVExNzNSdmdaVkFYcWZibGhTcml2ZXJMcHhjK3U1QkNqaVRYc0hlVkZoQ21jdEJaeC96dWdsCm9HYXRWTHlTdzh0MDd4ZWpEVE56aTRMb2g1QmMvSGxNSm1IS2Zqb2hHSDduTlpUVUtTditDZ3JNRDN5ZGI0SVcKNEdTTVRiNDR1Q0VsN0E0cnU5eW03cE9RczB6N2xNS3M5cHZnNnNVOU40MkRtUHZ4alRaOEZJK3h5TmcvMDlULwo5bTkvUG5FY0x0S0ovVnVVNERHNE9GYWtQU2NYckpSOEI2QTVFSVV6YU1ETklpWlhzcW5lODFvNHM3N1Z3WldjCmw0RTNOdWZBdzcwZVRYbFloRGlKWjlieHVnTCtqRTRhN1lkbHMySElobGFzMzNFckxLZU5SK2pTSXMxRFZHaUkKSEtDM2J6US9WMUw4Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K"

users:

\- name: calico

user:

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkhDS3ZqbHIyRHRyQUdZVm05R1lTT2NRR0pRMml4ZDNucHJlU1JscHFLc0EifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzI2MTkwOTczLCJpYXQiOjE3MjYxMDQ1NzMsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJjYWxpY28tY25pLXBsdWdpbiIsInVpZCI6ImQ5OWRlY2YyLWY1YzktNDI2ZS05NTYxLWI5MjgzNzQ4NzRjMiJ9fSwibmJmIjoxNzI2MTA0NTczLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06Y2FsaWNvLWNuaS1wbHVnaW4ifQ.bmaUCJuOtCgPrhQmt5oiPzwBlHU9dA53d5kVFL0TvmCcMcpxzAAdCYNo75g1JHWM9fwuyYmMgrfIUPLNV-xd6jbiy1Bu8qVT5XR8kZjMvlFgPDWiKxE7htUdY2jtY1jDUn0AHHQVDEPANq_2nHFA4TZC_x9j44uVYiYjw-Sp20HHcFPgQtzTqqkh4a_pRkc0Hbo1dC-vb00mXcxJ2nwKe8h3iWUzGqLH8LNzOeyts2PxPlaCGXh3gpEP2WHGzobKwzleoJE9W2JVZ_ASOh0OIr2Yw24x5vmAJxhA8DoRyZ5FU_5VGiLw_Gxnd7cTumU1yj3beGfMhsXmXVlw256SfA

contexts:

\- name: calico-context

context:

cluster: local

user: calico

current-context: calico-context

\[root@k8s-master \~\]# kubectl get nodes // the fix worked once every node is Ready

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 16h v1.28.2

k8s-node1 Ready \<none\> 16h v1.28.2

k8s-node2 Ready \<none\> 16h v1.28.2

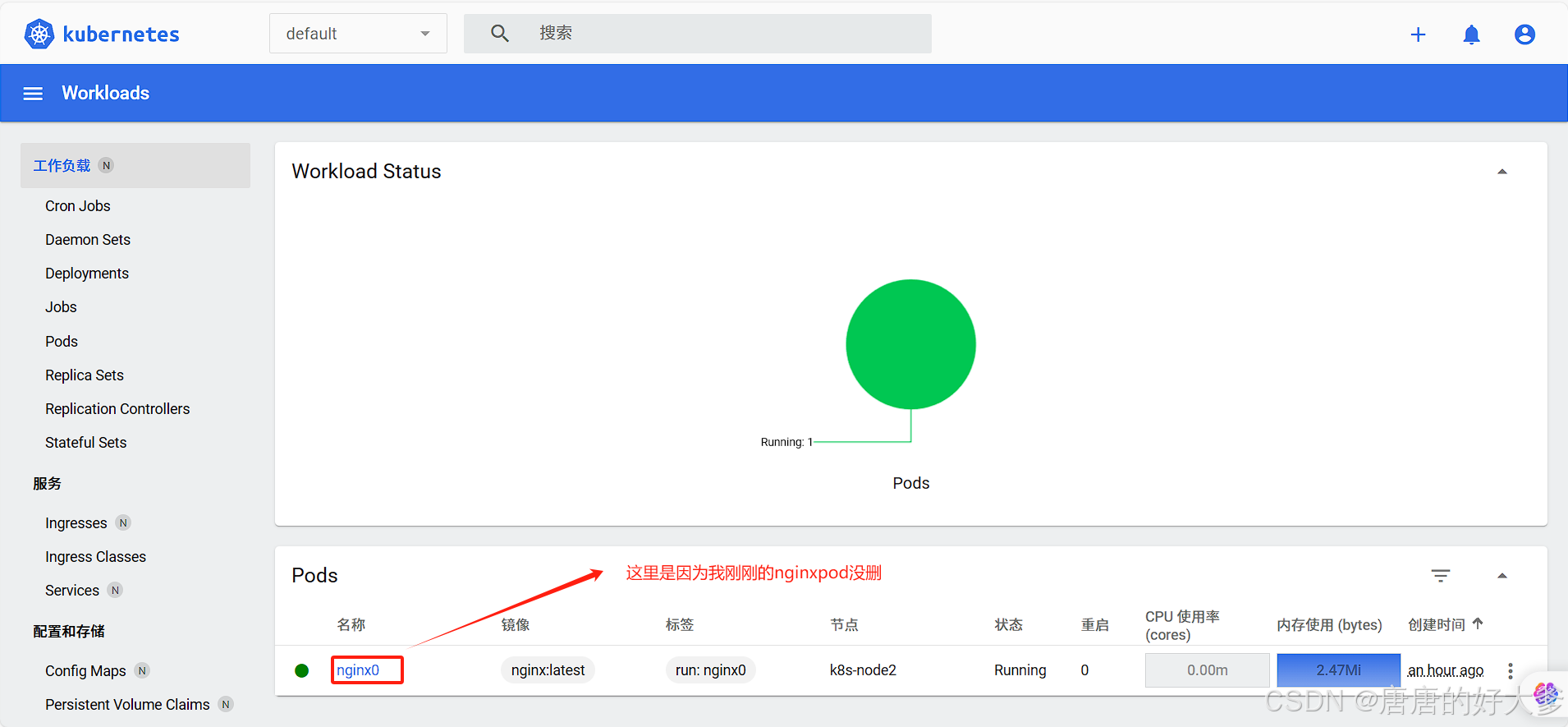

\[root@k8s-master \~\]# kubectl run nginx0 --image=nginx:latest // create a new nginx pod

pod/nginx0 created

\[root@k8s-master \~\]# kubectl get po -Aowide \| grep nginx // this can be slow, wait a while

default nginx0 1/1 Running 0 14s 172.16.169.131 k8s-node2 \<none\> \<none\>

\[root@k8s-master \~\]# curl 172.16.169.131 // test access to the pod

\[root@k8s-master \~\]# kubectl delete pod nginx0 // delete the pod

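### 6. Metrics deployment

In recent Kubernetes versions, system resource metrics are collected by Metrics-server, which gathers memory, disk, CPU and network usage for nodes and Pods.

#### (1) Copy the certificate to all worker nodes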

\[root@k8s-master \~\]# scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node2:/etc/kubernetes/pki/

\[root@k8s-master \~\]# scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node1:/etc/kubernetes/pki/

#### (2) Install metrics server

\[root@k8s-master \~\]# mkdir pods // create a pods directory to keep the manifests organized

\[root@k8s-master \~\]# cd k8s-ha-install/kubeadm-metrics-server/

\[root@k8s-master kubeadm-metrics-server\]# ls

comp.yaml

\[root@k8s-master kubeadm-metrics-server\]# cp comp.yaml /root/pods/components.yaml

\[root@k8s-master kubeadm-metrics-server\]# cd

\[root@k8s-master \~\]# cd pods/

\[root@k8s-master pods\]# ls

components.yaml

\[root@k8s-master pods\]# cat components.yaml \| wc -l // the file has 202 lines

202

\[root@k8s-master pods\]# kubectl create -f components.yaml // create the metrics server resources from this YAML file

\[root@k8s-master pods\]# kubectl get po -Aowide \| grep metrics // check the pod

kube-system metrics-server-79776b6d54-vxjlp 1/1 Running 0 98s 172.16.169.132 k8s-node2 \<none\> \<none\>

#### (3) Check the metrics server status

\[root@k8s-master pods\]# kubectl get po -n kube-system -l k8s-app=metrics-server // check the metrics server pod status in the kube-system namespace

NAME READY STATUS RESTARTS AGE

metrics-server-79776b6d54-vxjlp 1/1 Running 0 25m

\[root@k8s-master pods\]# kubectl top nodes // show system resource usage per node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master 152m 7% 1126Mi 30%

k8s-node1 62m 3% 453Mi 56%

k8s-node2 53m 2% 447Mi 55%

\[root@k8s-master pods\]# kubectl top pods -A // show resource usage per pod

NAMESPACE NAME CPU(cores) MEMORY(bytes)

default nginx0 0m 2Mi

kube-system calico-kube-controllers-6d48795585-ctctq 1m 21Mi

kube-system calico-node-4pxdg 33m 91Mi

kube-system calico-node-8jdkf 22m 120Mi

kube-system calico-node-b5jjz 26m 101Mi

kube-system coredns-6554b8b87f-6q7kh 2m 14Mi

kube-system coredns-6554b8b87f-b5c6f 3m 15Mi

kube-system etcd-k8s-master 23m 76Mi

kube-system kube-apiserver-k8s-master 68m 266Mi

kube-system kube-controller-manager-k8s-master 20m 53Mi

kube-system kube-proxy-89nmv 1m 20Mi

kube-system kube-proxy-fb7bb 1m 30Mi

kube-system kube-proxy-mgz7v 1m 39Mi

kube-system kube-scheduler-k8s-master 3m 21Mi

kube-system metrics-server-79776b6d54-vxjlp 4m 16Mi

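### 7. Dashboard deployment

Dashboard displays the various resources in the cluster. It can also be used to view Pod logs in real time and to run commands inside containers.

#### (1) Install the components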

\[root@k8s-master \~\]# cd k8s-ha-install/dashboard/

\[root@k8s-master dashboard\]# ls

dashboard-user.yaml dashboard.yaml

\[root@k8s-master dashboard\]# kubectl create -f . // create the dashboard resources

#### (2) Check the pods (wait until all are Running)

\[root@k8s-master dashboard\]# kubectl get po -Aowide\|grep dashboard

kubernetes-dashboard dashboard-metrics-scraper-7b554c884f-xhwjc 1/1 Running 0 117s 172.16.36.68 k8s-node1 \<none\> \<none\>

kubernetes-dashboard kubernetes-dashboard-54b699784c-tcv7x 1/1 Running 0 117s 172.16.169.133 k8s-node2 \<none\> \<none\>

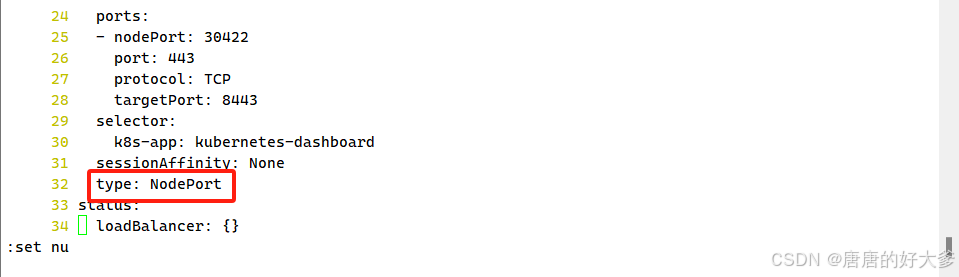

#### (3) Change the Service type

\[root@k8s-master dashboard\]# kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard // kubectl edit svc \<service name\> -n \<namespace\>
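The Service listing in step (4) shows the type `NodePort`, so the edit here presumably changes `spec.type` from `ClusterIP` to `NodePort`. As a non-interactive alternative to `kubectl edit`, the same change can be sketched with `kubectl patch`. The function names are hypothetical, and the call that touches the cluster is left commented out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the patch that sets the Service type.
nodeport_patch() {
    printf '{"spec":{"type":"NodePort"}}\n'
}

# Hypothetical helper: apply the patch to the dashboard Service.
# The Service name and namespace are the ones used in this guide.
set_dashboard_nodeport() {
    kubectl patch svc kubernetes-dashboard -n kubernetes-dashboard \
        -p "$(nodeport_patch)"
}

nodeport_patch
# set_dashboard_nodeport   # uncomment to apply on the master node
```

After the change, Kubernetes assigns a node port automatically unless one is set explicitly in the Service spec.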

#### (4) Check the access port

\[root@k8s-master dashboard\]# kubectl get svc kubernetes-dashboard -n kubernetes-dashboard // show the kubernetes-dashboard Service details, including ports and the Service IP

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.96.55.69 \<none\> 443:30422/TCP 8m54s

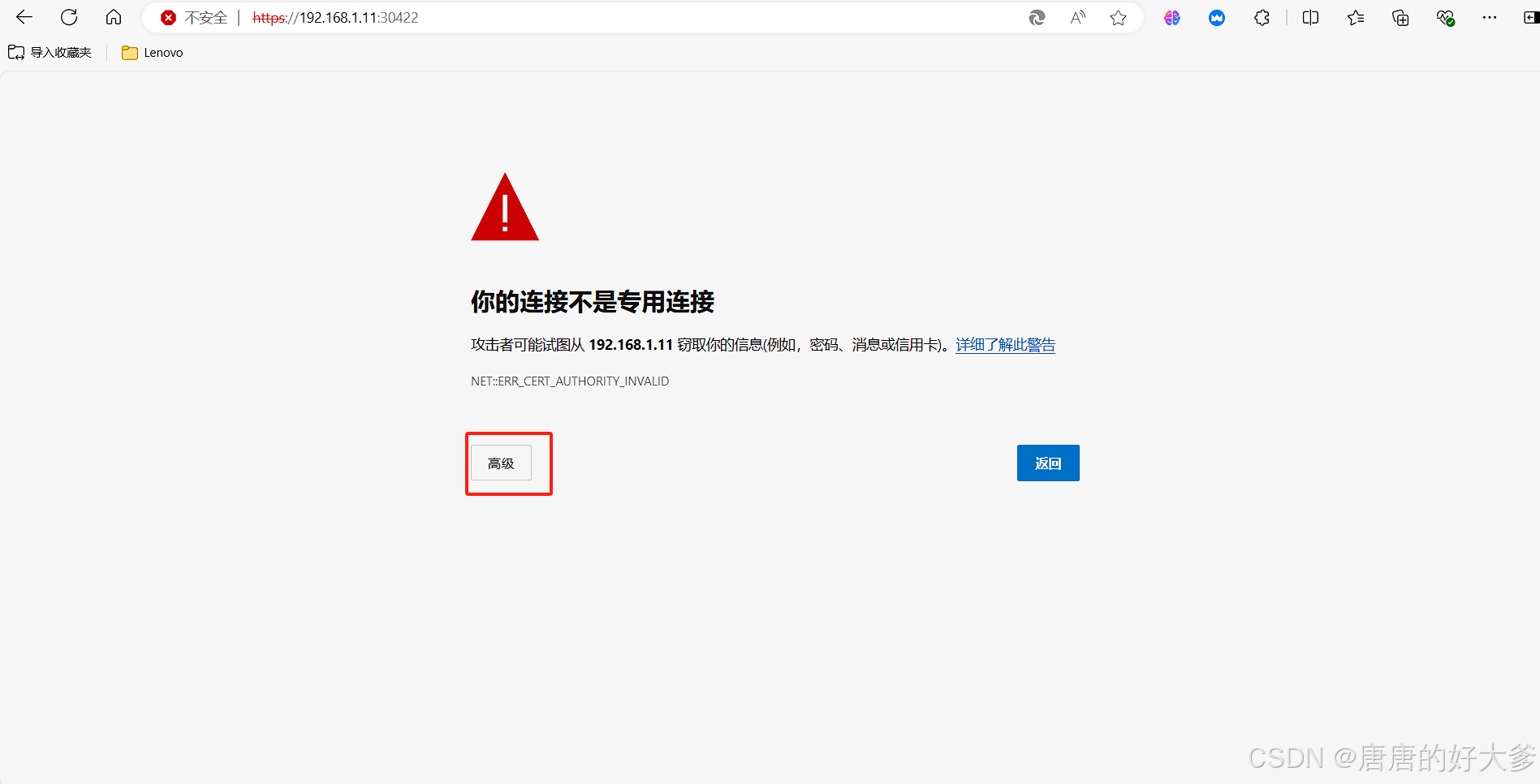

Once you have the port number, open the dashboard at the master's IP plus that port (the NodePort shown in the terminal output; the HTTPS protocol is required).

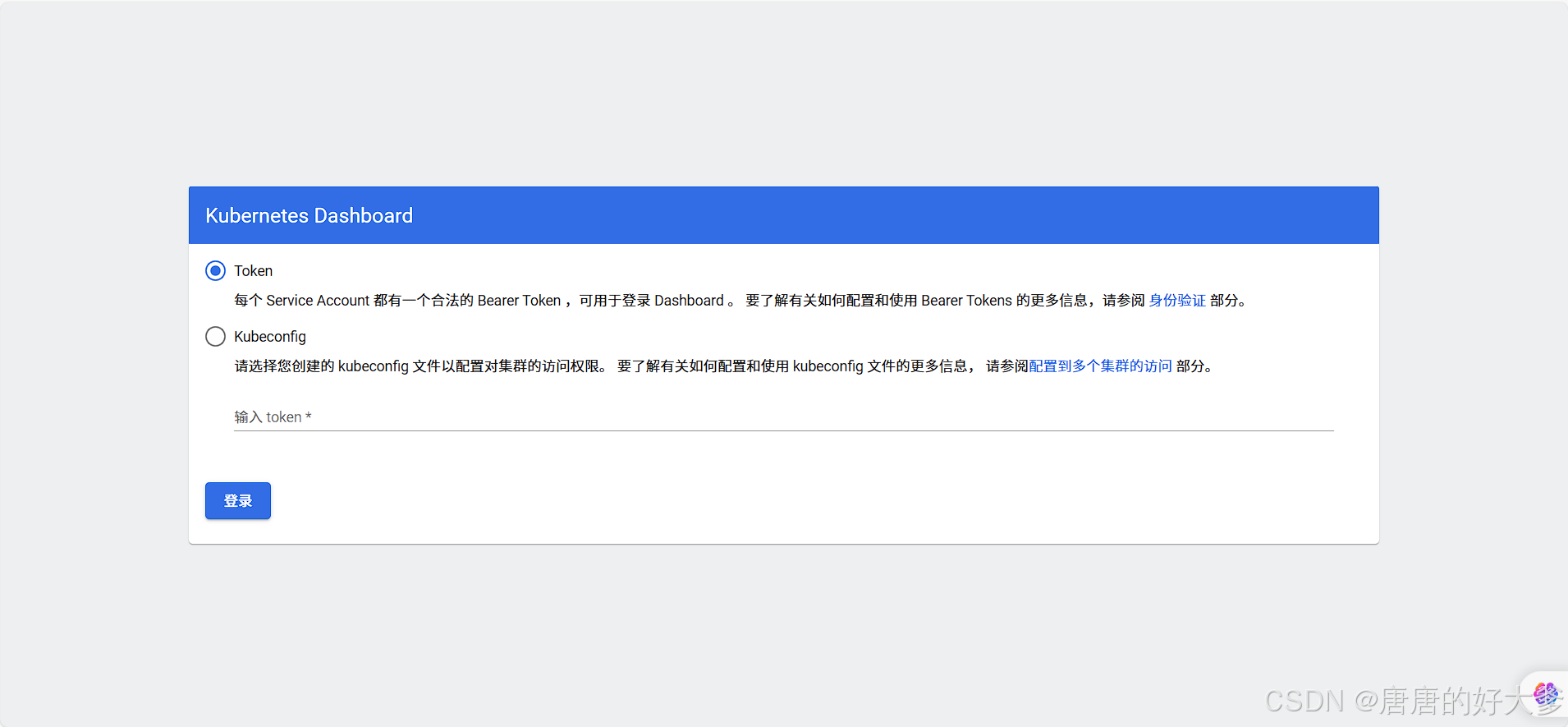

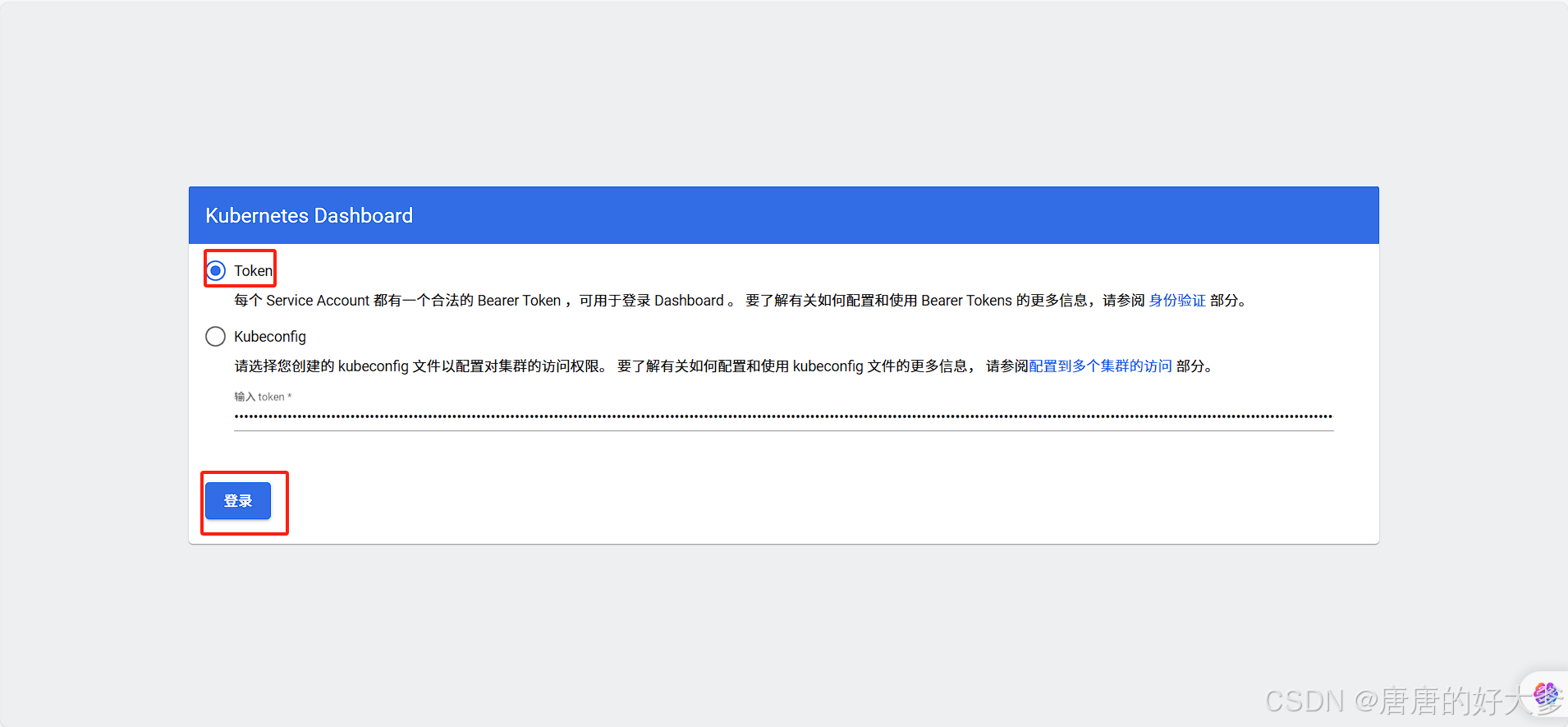

#### (5) Log in to the dashboard in a browser

#### (6) Create a login token

\[root@k8s-master dashboard\]# kubectl create token admin-user -n kube-system

eyJhbGciOiJSUzI1NiIsImtpZCI6IkhDS3ZqbHIyRHRyQUdZVm05R1lTT2NRR0pRMml4ZDNucHJlU1JscHFLc0EifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzI2MTE1NDQzLCJpYXQiOjE3MjYxMTE4NDMsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiMDQ2ZjgxMTctNDZjMy00OWNmLTkwNWMtMTQ2MTZiNGE1YzFiIn19LCJuYmYiOjE3MjYxMTE4NDMsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbi11c2VyIn0.2CznRFPgfiHsQd6CgfqR3qJcsE_3lgeZ1HvNC64JcEw5WMoRwTjO3OlF4an8__dE9kGbtq8bUxUuvvVV9mw0VQz2PTXw3shdjByRo-q2g00_9Qk_tZiRuMQJ_xSujICbHpLKK6ODxmis7PByvNRLtgFm1uqIY6WIhxBOkK5kzBezDRSDeOGYEU2bpoGJfk4nLHvlbYjqmoCi27_jcBLT3PQiLjLaThkvfxvxiT32jb7kWmx4FdWG_V3k28iKxgLwqBu2ZMZW6qRN-KaY2_u91guJY3YvsYNLr_Mp-rOnoS3_26eQJyJkwRcWCmBiMFuQeCDIUWqCVsQCzGEcIc2L2Q

在"输⼊ token \*"内输⼊终端⽣成的 token

###

###

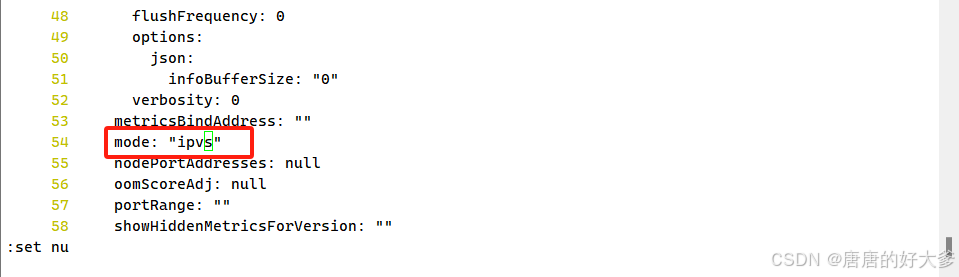

8. Kube-proxy

#### (1)改为 ipvs模式

\[root@k8s-master \~\]# kubectl edit cm kube-proxy -n kube-system
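In a kubeadm-built cluster the kube-proxy ConfigMap normally carries a `mode: ""` line inside `config.conf`, and the edit sets it to `mode: "ipvs"`. The sketch below shows that substitution on sample text only. The function name `set_ipvs_mode` and the sample fragment are assumptions, not output taken from this cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the kube-proxy "mode" line read from stdin.
# Matches an empty mode or any existing quoted value and keeps indentation.
set_ipvs_mode() {
    sed -E 's/^([[:space:]]*)mode:[[:space:]]*"[^"]*"[[:space:]]*$/\1mode: "ipvs"/'
}

# Sample fragment (assumed shape of config.conf, not copied from the cluster).
printf '    metricsBindAddress: ""\n    mode: ""\n    nodePortAddresses: null\n' | set_ipvs_mode
```

Only the `mode` line changes; the other lines pass through untouched.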

#### (2) Update the kube-proxy Pods

\[root@k8s-master \~\]# kubectl patch daemonset kube-proxy -p "{\\"spec\\":{\\"template\\":{\\"metadata\\": {\\"annotations\\":{\\"date\\":\\"\`date +'%s'\`\\"}}}}}" -n kube-system

daemonset.apps/kube-proxy patched

#### (3) Verify the proxy mode

\[root@k8s-master \~\]# curl 127.0.0.1:10249/proxyMode

ipvs

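## V. Cluster availability verification

### 1. Verify the nodes

\[root@k8s-master \~\]# kubectl get node // all nodes Ready means the cluster is healthy

### 2. Verify the Pods

\[root@k8s-master \~\]# kubectl get po -A // all pods Running means the cluster is healthy

### 3. Verify that the cluster network ranges do not conflict

None of the three network ranges (Service, Pod, host) may overlap with another.

\[root@k8s-master \~\]# kubectl get svc // show the Service network range

\[root@k8s-master \~\]# kubectl get po -A -owide // show the pod IPs in all namespaces, then compare them with the Service range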
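The comparison can also be done mechanically. A minimal sketch that tests whether two IPv4 CIDR ranges overlap follows. The function names are hypothetical, and the three ranges in the usage lines are assumed example values, so substitute the ranges actually configured for Services, Pods and hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted IPv4 address to an integer.
ip_to_int() {
    local IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: succeed if the two CIDR ranges share any address.
cidr_overlap() {
    local ip1="${1%/*}" len1="${1#*/}" ip2="${2%/*}" len2="${2#*/}"
    # Two ranges overlap exactly when they agree on the shorter prefix.
    local len=$(( len1 < len2 ? len1 : len2 ))
    local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip1") & mask )) -eq $(( $(ip_to_int "$ip2") & mask )) ]
}

# Assumed example ranges; replace them with your own.
service_cidr=10.96.0.0/16
pod_cidr=172.16.0.0/16
host_cidr=192.168.1.0/24

for pair in "$service_cidr $pod_cidr" "$service_cidr $host_cidr" "$pod_cidr $host_cidr"; do
    set -- $pair
    if cidr_overlap "$1" "$2"; then
        echo "CONFLICT: $1 overlaps $2"
    else
        echo "ok: $1 and $2 do not overlap"
    fi
done
```

Any line starting with CONFLICT means two of the ranges share addresses and one of them has to be changed.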

### 4. Verify that resources can be created

\[root@k8s-master \~\]# kubectl create deploy cluster-test --image=registry.cn-beijing.aliyuncs.com/dotbalo/debug-tools -- sleep 3600 // this pod is useful for testing; the image ships with many tools

deployment.apps/cluster-test created

\[root@k8s-master \~\]# kubectl get po -A\|grep cluster-test

default cluster-test-66bb44bd88-khb8j 1/1 Running 0 2m51s

### 5. Pods must be able to resolve Services

#### (1) nslookup kubernetes

\[root@k8s-master \~\]# kubectl exec -it cluster-test-66bb44bd88-khb8j -- bash // open a shell in the pod's container

(06:54 cluster-test-66bb44bd88-khb8j:/) nslookup kubernetes

Server: 10.96.0.10

Address: 10.96.0.10#53

Name: kubernetes.default.svc.cluster.local

Address: 10.96.0.1

#### (2)nslookup kube-dns.kube-system

(06:55 cluster-test-66bb44bd88-khb8j:/) nslookup kube-dns.kube-system

Server: 10.96.0.10

Address: 10.96.0.10#53

Name: kube-dns.kube-system.svc.cluster.local

Address: 10.96.0.10

(06:58 cluster-test-66bb44bd88-khb8j:/) exit

exit

### 6. Confirm access to port 443 of Kubernetes and port 53 of kube-dns

Every node must be able to reach the kubernetes Service on port 443 and the kube-dns Service on port 53.

\[root@k8s-master \~\]# curl -k https://10.96.0.1:443

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "forbidden: User \\"system:anonymous\\" cannot get path \\"/\\"",

"reason": "Forbidden",

"details": {},

"code": 403

}

\[root@k8s-master \~\]# curl 10.96.0.10:53

curl: (52) Empty reply from server

### 7. Confirm that Pods can communicate with each other

\[root@k8s-master \~\]# kubectl get po -Aowide // list the IP address of each pod

Ping the pod IP addresses.
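A minimal sketch that automates this check from a cluster node: it reads pod IPs, one per line, and probes each one. The function name `probe_ips` and the overridable `PROBE` variable are hypothetical. The pod IPs are listed with the `-o jsonpath` output of `kubectl get po`, and that line is left commented out.

```shell
#!/usr/bin/env bash
# Command used to probe one address; override PROBE to use another check.
PROBE=${PROBE:-"ping -c 1 -W 2"}

# Hypothetical helper: read IPs from stdin (one per line), probe each,
# and return non-zero if any address is unreachable.
probe_ips() {
    local ip failed=0
    while read -r ip; do
        [ -n "$ip" ] || continue
        if $PROBE "$ip" < /dev/null > /dev/null 2>&1; then
            echo "ok   $ip"
        else
            echo "FAIL $ip"
            failed=1
        fi
    done
    return "$failed"
}

# List every pod IP once and probe it (run on a cluster node):
# kubectl get po -A -o jsonpath='{range .items[*]}{.status.podIP}{"\n"}{end}' | sort -u | probe_ips
```

Running the same check from each node, and from inside the cluster-test pod, covers both node-to-pod and pod-to-pod paths.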