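Background:

For a process that cannot keep all CPU cores busy, on-CPU profiling alone reveals little, because the program probably spends most of its time blocked.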

Example program:

Using CentOS 8 and Go 1.23.3 as an example, test the following program:

pprof_netio.go

```go
package main

import (
	"fmt"
	"net/http"
	_ "net/http/pprof" // registers the /debug/pprof handlers on http.DefaultServeMux
	//"time"
)

func main() {
	// Serve the pprof endpoints in the background.
	go func() {
		_ = http.ListenAndServe("0.0.0.0:9091", nil)
	}()

	// Concurrency limit: at most 100 goroutines run doNetIO at once.
	var ConChan = make(chan bool, 100)
	for {
		ConChan <- true
		go func() {
			defer func() {
				<-ConChan
			}()
			doNetIO()
		}()
	}
}

func doNetIO() {
	//fmt.Printf("doNetIO start: %s\n", time.Now().Format(time.DateTime))
	for i := 0; i < 10; i++ {
		resp, err := http.Get("http://127.0.0.1:8080/echo_delay")
		if err != nil {
			fmt.Printf("i:%d err: %v\n", i, err)
			return
		}
		// Close the body so the connection is not leaked.
		resp.Body.Close()
	}
	//fmt.Printf("doNetIO end: %s\n", time.Now().Format(time.DateTime))
}
```

The requests go to nginx, which is configured as follows:

agent-8080.conf

```nginx
server {
    listen 8080 reuseport;
    index index.html index.htm index.php;
    root /usr/share/nginx/html;

    access_log /var/log/nginx/access-8080.log main;
    # Errors go to their own file rather than into the access log.
    error_log /var/log/nginx/error-8080.log error;

    # Endpoint requested by the test program; limit_rate is in bytes per second.
    location ~ /echo_delay {
        limit_rate 30;
        return 200 '{"code":"0","message":"ok","data":"012345678901234567890123456789"}';
    }

    location ~ \.mp3$ {
        root /usr/share/nginx/html;
        limit_rate 10k;
    }

    location / {
        return 200 '{}';
    }
}
```

Build and run the program:

```bash
go build pprof_netio.go
./pprof_netio
```

Checking with top shows that CPU utilization is very low:

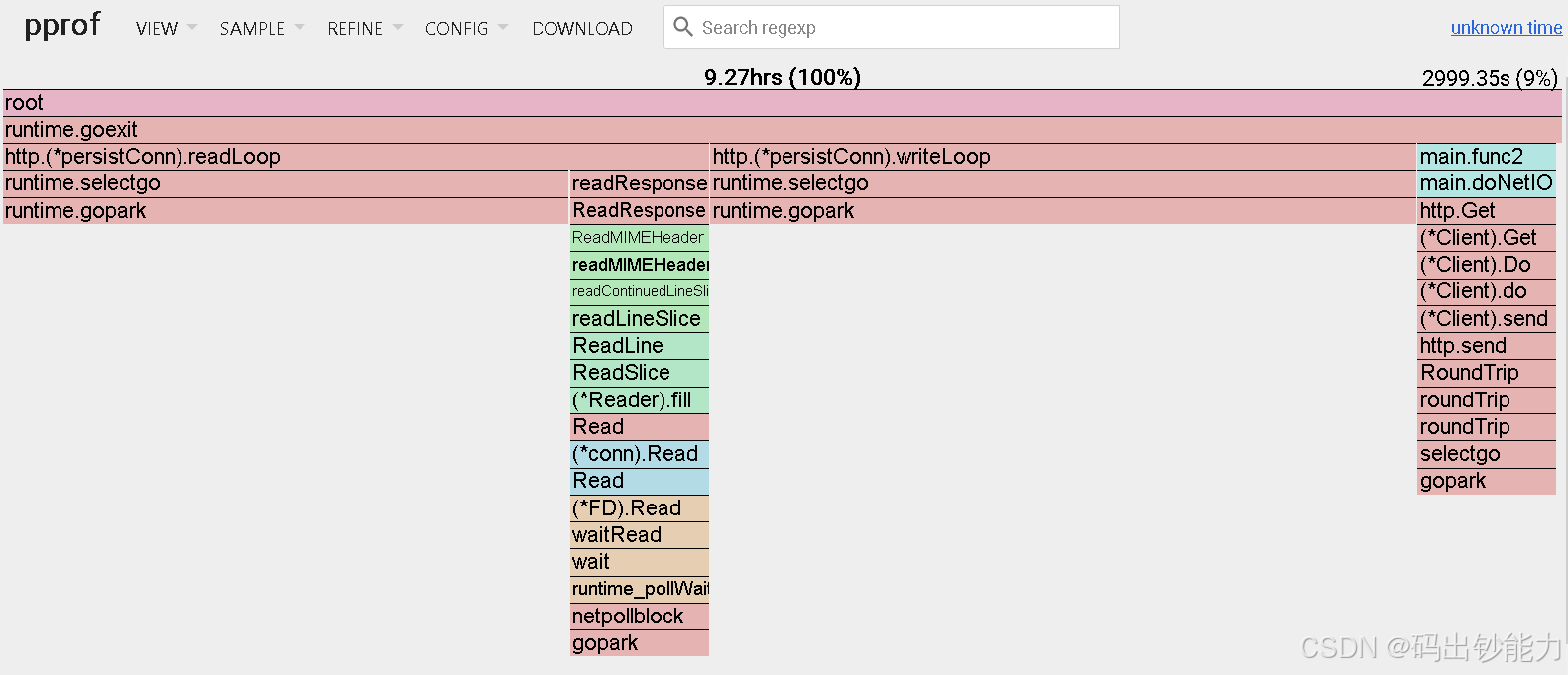

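Checking on-CPU time with the pprof profile endpoint:

```bash
go tool pprof -http=192.168.36.5:9000 http://127.0.0.1:9091/debug/pprof/profile
```

The default sampling window is 30s, yet the reported on-CPU time is only 690ms. More precisely, only 69 samples were captured in those 30s; with one sample every 10ms, pprof extrapolates an on-CPU time of 690ms, which is about 2.3% of a single core. Either way, CPU utilization is very low.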

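Checking off-CPU time with perf:

List the scheduler events that perf supports:

On CentOS 8, install the dependencies:

```bash
yum install kernel-debug kernel-debug-devel --nogpgcheck
echo 1 > /proc/sys/kernel/sched_schedstats
```

Script that generates the off-CPU flame graph with perf: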

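```bash
#!/bin/sh
# perf-offcpu.sh: record scheduler events for a process and render an off-CPU flame graph.
# stackcollapse.pl and flamegraph.pl (from the FlameGraph project) must be on PATH.

if [ -z "$1" ]; then
    echo "usage: $0 prog_name"
    exit 1
fi

# Look up the target PID by name, excluding grep and this script itself.
pid=`ps aux | grep "$1" | grep -v 'grep' | grep -v 'perf-offcpu' | awk '{print $2}'`
echo prog_name:$1
echo pid:$pid

# Record scheduler tracepoints with call graphs for 30 seconds.
perf record -e sched:sched_stat_sleep -e sched:sched_switch \
    -e sched:sched_stat_iowait -e sched:sched_process_exit \
    -e sched:sched_stat_blocked -e sched:sched_stat_wait \
    -g -o perf.data.raw -p $pid -- sleep 30

# Merge sched_stat and sched_switch events to get where and how long tasks slept.
perf inject -v -s -i perf.data.raw -o perf.data

# Convert periods from nanoseconds to milliseconds, fold the stacks, and render the SVG.
perf script -F comm,pid,tid,cpu,time,period,event,ip,sym,dso,trace | awk '
    NF > 4 { exec = $1; period_ms = int($5 / 1000000) }
    NF > 1 && NF <= 4 && period_ms > 0 { print $2 }
    NF < 2 && period_ms > 0 { printf "%s\n%d\n\n", exec, period_ms }' | \
    stackcollapse.pl | \
    flamegraph.pl --countname=ms --title="Off-CPU Time Flame Graph" --colors=io > offcpu.svg
```

Run the sampling: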

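```bash
sh perf-offcpu.sh 'pprof_netio'
```

Off-CPU flame graph from perf:

The graph shows that 65% of the blocked time is spent waiting on network connection setup, sends, and reads.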

Checking off-CPU time with bcc/tools/offcputime:

Install bcc-tools on CentOS 8:

```bash
yum install bcc-tools --nogpgcheck
```

Script that generates the off-CPU flame graph with bcc:

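```bash
#!/bin/sh
# bcc-offcputime.sh: collect off-CPU stacks with bcc's offcputime and render a flame graph.
# flamegraph.pl (from the FlameGraph project) must be on PATH.

if [ -z "$1" ]; then
    echo "usage: $0 prog_name"
    exit 1
fi

# Look up the target PID by name, excluding grep and this script itself.
pid=`ps aux | grep "$1" | grep -v 'grep' | grep -v 'bcc-offcputime' | awk '{print $2}'`
echo prog_name:$1
echo pid:$pid

# -d: delimit kernel and user stacks, -f: folded output; sample for 30 seconds.
/usr/share/bcc/tools/offcputime -df -p $pid 30 > out.stacks

flamegraph.pl --colors=io --title="bcc Off-CPU Time Flame Graph" --countname=us < out.stacks > offcpu-bcc.svg
```

Run the sampling: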

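```bash
sh bcc-offcputime.sh 'pprof_netio'
```

Off-CPU flame graph from bcc:

The graph shows that 67% of the blocked time is spent waiting on network connection setup, sends, and reads.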
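A percentage like this can also be computed directly from the folded stacks, without reading it off the flame graph. The following is a rough sketch that assumes the folded format of one `frame1;frame2;... count` entry per line. The function name `shareMatching` and the sample data are made up for illustration and are not taken from the measurement above.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// shareMatching parses folded stacks ("frame1;frame2;... count" per line) and
// returns the fraction of the total count whose stack contains any needle.
func shareMatching(folded string, needles []string) float64 {
	var total, matched int64
	sc := bufio.NewScanner(strings.NewReader(folded))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		idx := strings.LastIndexByte(line, ' ')
		if idx < 0 {
			continue // no count column, skip the line
		}
		n, err := strconv.ParseInt(line[idx+1:], 10, 64)
		if err != nil {
			continue
		}
		total += n
		for _, s := range needles {
			if strings.Contains(line[:idx], s) {
				matched += n
				break
			}
		}
	}
	if total == 0 {
		return 0
	}
	return float64(matched) / float64(total)
}

func main() {
	// Hypothetical folded stacks with made-up frame names and counts.
	folded := `pprof_netio;net.(*netFD).Read;futex_wait 600
pprof_netio;net.(*netFD).connect;schedule 100
pprof_netio;runtime.gcBgMarkWorker;schedule 300`
	// prints "70%"
	fmt.Printf("%.0f%%\n", 100*shareMatching(folded, []string{"netFD"}))
}
```

To apply this to real data, read `out.stacks` into a string and pass the frame-name substrings that count as network waits.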

Off-CPU analysis of a Go program with fgprof (requires code changes):

Modify the code to add fgprof support:

pprof_netio.go

```go
package main

import (
	"fmt"
	"net/http"
	_ "net/http/pprof" // registers the /debug/pprof handlers on http.DefaultServeMux
	//"time"

	"github.com/felixge/fgprof"
)

func main() {
	// fgprof support
	http.DefaultServeMux.Handle("/debug/fgprof", fgprof.Handler())

	// Serve the pprof and fgprof endpoints in the background.
	go func() {
		_ = http.ListenAndServe("0.0.0.0:9091", nil)
	}()

	// Concurrency limit: at most 100 goroutines run doNetIO at once.
	var ConChan = make(chan bool, 100)
	for {
		ConChan <- true
		go func() {
			defer func() {
				<-ConChan
			}()
			doNetIO()
		}()
	}
}

func doNetIO() {
	//fmt.Printf("doNetIO start: %s\n", time.Now().Format(time.DateTime))
	for i := 0; i < 10; i++ {
		resp, err := http.Get("http://127.0.0.1:8080/echo_delay")
		if err != nil {
			fmt.Printf("i:%d err: %v\n", i, err)
			return
		}
		// Close the body so the connection is not leaked.
		resp.Body.Close()
	}
	//fmt.Printf("doNetIO end: %s\n", time.Now().Format(time.DateTime))
}
```

Sample with fgprof:

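```bash
go tool pprof --http=192.168.36.5:9000 'http://localhost:9091/debug/fgprof?seconds=30'
```

Off-CPU flame graph from fgprof:

The graph roughly pinpoints the blocking to network reads and writes, but its sampling coverage and frequency seem to fall short of perf and bcc, and according to what I have read, fgprof does not support sampling cgo programs.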

References:

Linux perf_events Off-CPU Time Flame Graph

fgprof package - github.com/felixge/fgprof - Go Packages
