Reference: Three.js Journey - Learn WebGL with Three.js

These notes are for personal review. Please support the original course made by Bruno Simon, who is a brilliant teacher of Three.js and WebGL.

Part 1 3D TEXT

How to get a typeface font

There are many ways to get fonts in the typeface format. First, you can convert your own font with a converter like this one: https://gero3.github.io/facetype.js/. Provide the font file and click the convert button.

You can also find fonts in the Three.js examples, located in the /node_modules/three/examples/fonts/ folder. You can take those fonts and put them in the /static/ folder, or you can import them directly in your JavaScript file, because they are JSON and .json files are supported just like .js files in Vite:

import typefaceFont from 'three/examples/fonts/helvetiker_regular.typeface.json'

We will mix those two techniques by opening the /node_modules/three/examples/fonts/ folder, taking the helvetiker_regular.typeface.json and LICENSE files, and putting them in the /static/fonts/ folder (which you need to create).

The font is now accessible just by writing /fonts/helvetiker_regular.typeface.json at the end of the base URL.
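Because the path starts with /, it is resolved against the root of the site rather than against the current page's folder. A small sketch with the standard URL class shows this; the localhost:5173 and example.com addresses are placeholders, not part of the course:

```javascript
// Resolve a root-relative asset path the way the browser resolves fetch/loader URLs.
// The base URLs below are placeholders for illustration.
const fontPath = '/fonts/helvetiker_regular.typeface.json'

function resolveAsset(path, pageUrl) {
    return new URL(path, pageUrl).href
}

// Dev server serving the page at the root
const devUrl = resolveAsset(fontPath, 'http://localhost:5173/')
// Page nested in a sub-folder: the leading "/" still points at the domain root
const nestedUrl = resolveAsset(fontPath, 'https://example.com/projects/text/index.html')

console.log(devUrl)    // http://localhost:5173/fonts/helvetiker_regular.typeface.json
console.log(nestedUrl) // https://example.com/fonts/helvetiker_regular.typeface.json
```

If the project is ever served from a sub-folder, root-relative paths like this one will still point at the domain root.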

Load the font

To load the font, we must use a new loader class called FontLoader.

This class is not available in the THREE variable. We need to import it like we did with the OrbitControls class earlier in the course:

import { FontLoader } from 'three/examples/jsm/loaders/FontLoader.js'

This loader works like the TextureLoader. Add the following code after the textureLoader part (if you are using another font, don't forget to change the path):

/**

* Fonts

*/

const fontLoader = new FontLoader()

fontLoader.load(

'/fonts/helvetiker_regular.typeface.json',

(font) =>

{

console.log('loaded')

}

)

Create the geometry

Be careful with the example code on the documentation page; the values are much bigger than those in our scene.

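TextGeometry isn't available in the THREE variable either, so import it from the examples folder too:

import { TextGeometry } from 'three/examples/jsm/geometries/TextGeometry.js'

Make sure to write your code inside the success function: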

fontLoader.load(

'/fonts/helvetiker_regular.typeface.json',

(font) =>

{

const textGeometry = new TextGeometry(

'Hello Three.js',

{

font: font,

size: 0.5,

height: 0.2, // recent Three.js versions call this option depth

curveSegments: 12,

bevelEnabled: true,

bevelThickness: 0.03,

bevelSize: 0.02,

bevelOffset: 0,

bevelSegments: 5

}

)

const textMaterial = new THREE.MeshBasicMaterial()

const text = new THREE.Mesh(textGeometry, textMaterial)

scene.add(text)

}

)

Textures used as map and matcap are supposed to be encoded in sRGB, so we need to tell Three.js by setting their colorSpace to THREE.SRGBColorSpace:

const matcapTexture = textureLoader.load('/textures/matcaps/1.png')

matcapTexture.colorSpace = THREE.SRGBColorSpace

We can now replace our ugly MeshBasicMaterial with a beautiful MeshMatcapMaterial and use our matcapTexture variable with the matcap property:

const textMaterial = new THREE.MeshMatcapMaterial({ matcap: matcapTexture })

Add objects
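The donut loop in this section gives each donut a random position inside a 10 × 10 × 10 cube centered on the origin, a random rotation on x and y, and a uniform random scale. Here is that arithmetic pulled out into plain JavaScript, without Three.js; the randomDonutTransform name and the injectable random source are hypothetical, added only to make the math testable:

```javascript
// Hypothetical helper reproducing the math of the donut loop.
// `random` defaults to Math.random but can be replaced (e.g. for testing).
function randomDonutTransform(random = Math.random) {
    // (random() - 0.5) is in [-0.5, 0.5), so multiplying by 10 gives [-5, 5)
    const position = {
        x: (random() - 0.5) * 10,
        y: (random() - 0.5) * 10,
        z: (random() - 0.5) * 10
    }
    // Random rotation between 0 and PI radians (half a turn) on x and y
    const rotation = {
        x: random() * Math.PI,
        y: random() * Math.PI
    }
    // A single value for all three axes keeps the donut's proportions
    const scale = random()
    return { position, rotation, scale }
}

const transforms = Array.from({ length: 100 }, () => randomDonutTransform())
const centered = randomDonutTransform(() => 0.5) // position (0, 0, 0), scale 0.5
```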

// Create the geometry and the material once, then share them between all the donuts

const donutGeometry = new THREE.TorusGeometry(0.3, 0.2, 20, 45)

const donutMaterial = new THREE.MeshMatcapMaterial({ matcap: matcapTexture })

for(let i = 0; i < 100; i++)

{

const donut = new THREE.Mesh(donutGeometry, donutMaterial)

donut.position.x = (Math.random() - 0.5) * 10

donut.position.y = (Math.random() - 0.5) * 10

donut.position.z = (Math.random() - 0.5) * 10

donut.rotation.x = Math.random() * Math.PI

donut.rotation.y = Math.random() * Math.PI

const scale = Math.random()

donut.scale.set(scale, scale, scale)

scene.add(donut)

}

Part 2 Go Live

You can't simply put the whole project, with the node_modules/ folder and the Vite configuration, on the host. First, you need to build your project with Vite in order to create the HTML, CSS, JS and asset files that browsers can interpret.

To build your project, run npm run build in the terminal.

This command runs the script defined in the scripts > build property of the /package.json file.

Wait a few seconds and the files should be available in the /dist/ folder, which is created when the build runs. You can then put those files online using your favorite FTP client.

Whenever you want to upload a new version, run npm run build again even if the /dist/ folder already exists.

We are not going to cover the setup of one of those "traditional" hosting solutions because we are going to use a more appropriate solution in the next section.

Vercel

Vercel is one of those "modern" hosting solutions and features continuous integration (automation of testing, deployment and other development steps). It is very developer friendly and easy to set up.

You can use it for complex projects, but also for very simple "single page" websites like the ones we create within this course.

Create an account

Add Vercel to your project

Vercel is available as an NPM module that you can install globally on your computer or as a dependency of your project. In the terminal of your project, run npm install vercel.

Because we added Vercel as a project dependency instead of installing it globally, the vercel command isn't available directly in the terminal. Our NPM scripts do have access to it, so we need to make the following change.

In package.json, in the "scripts" property, add a new script named "deploy" with "vercel --prod" as its value (don't forget the comma after the "dev" script):

{

"scripts": {

// ...

"deploy": "vercel --prod"

},

}

If you run the vercel command without --prod, the code will be published to a preview URL so that you can test it before going to production. Though it is an interesting feature, we don't need a preview version.

From now on, if you want to deploy your project online, you can simply run npm run deploy in the terminal.

Deploy for the first time

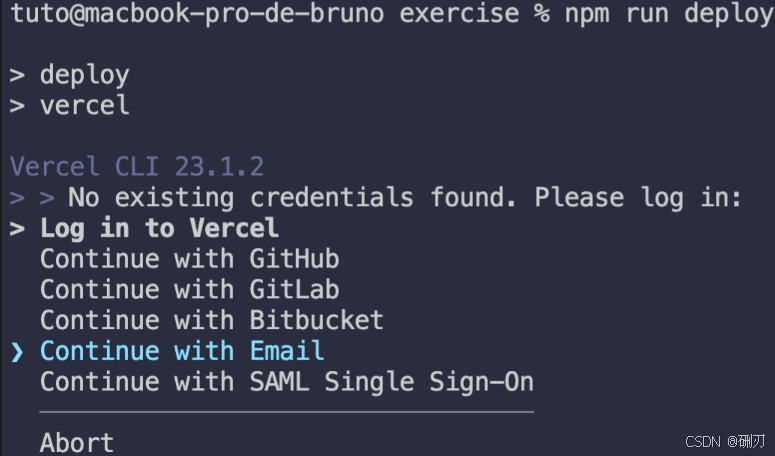

In the terminal, run npm run deploy.

If you signed up with an email earlier, use the up and down arrows to select Continue with Email and press Enter.

Vercel detects the settings automatically thanks to the vite.config.js file and we don't need to change anything.

Further settings

Go to vercel.com and make sure you are logged in.

You should have access to your dashboard and see your project.

Part 3 Lights

We could have used MeshLambertMaterial, MeshPhongMaterial or MeshToonMaterial, but instead we will use the MeshStandardMaterial because it's the most realistic one as we saw in the previous lesson. We also reduced the roughness of the material to 0.4 to see the reflections of the lights.

Once the starter is working, remove the AmbientLight and the PointLight to start from scratch. You should get a black render with nothing visible in it.

AmbientLight

The AmbientLight applies omnidirectional lighting to all geometries of the scene. The first parameter is the color and the second parameter is the intensity. As with the materials, you can pass these values when instantiating the AmbientLight class, or set the properties on the instance afterward:

// Ambient light

const ambientLight = new THREE.AmbientLight()

ambientLight.color = new THREE.Color(0xffffff)

ambientLight.intensity = 1

scene.add(ambientLight)

Note that we had to instantiate a Color when we updated the color property.

And like we did for the materials, you can add the properties to the Debug UI. We won't do that in the rest of the lesson but feel free to add tweaks if you want to ease the testing:

gui.add(ambientLight, 'intensity').min(0).max(3).step(0.001)

DirectionalLight

The DirectionalLight will have a sun-like effect as if the sun rays were traveling in parallel. The first parameter is the color and the second parameter is the intensity:

// Directional light

const directionalLight = new THREE.DirectionalLight(0x00fffc, 0.9)

scene.add(directionalLight)

By default, the light seems to come from above. To change that, move the whole light with its position property, as if it were a regular Three.js object:

directionalLight.position.set(1, 0.25, 0)

HemisphereLight

The HemisphereLight is similar to the AmbientLight, but it uses one color coming from the sky and a different color coming from the ground. Faces pointing toward the sky are lit by the sky color, while faces pointing toward the ground are lit by the ground color.
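To make the sky/ground blend concrete, here is a minimal sketch of how a hemisphere light can weight its two colors by the direction of a face's normal. It is modeled on the weighting used in Three.js's lighting shader chunks (remap dot(normal, up) from [-1, 1] to [0, 1], then mix ground and sky), but treat it as an illustration rather than the exact shader; colors are plain [r, g, b] arrays and intensity is ignored:

```javascript
// Sketch of a hemisphere light blend: sky color from above, ground color from below.
// Colors are [r, g, b] arrays in the 0..1 range; `up` is the light's direction (default +Y).
function hemisphereIrradiance(normal, skyColor, groundColor, up = [0, 1, 0]) {
    // Cosine between the normal and "up": 1 facing the sky, -1 facing the ground
    const dotNL = normal[0] * up[0] + normal[1] * up[1] + normal[2] * up[2]
    // Remap from [-1, 1] to [0, 1]
    const weight = 0.5 * dotNL + 0.5
    // Linear interpolation from the ground color (weight 0) to the sky color (weight 1)
    return groundColor.map((g, i) => g + (skyColor[i] - g) * weight)
}

const sky = [1, 0, 0]    // like 0xff0000 in the example below
const ground = [0, 0, 1] // like 0x0000ff

const facingUp = hemisphereIrradiance([0, 1, 0], sky, ground)    // [1, 0, 0]
const facingDown = hemisphereIrradiance([0, -1, 0], sky, ground) // [0, 0, 1]
const facingSide = hemisphereIrradiance([1, 0, 0], sky, ground)  // [0.5, 0, 0.5]
```

A face pointing sideways gets an even mix of both colors, which is why vertical walls look purple with a red sky and a blue ground.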

The first parameter is the color corresponding to the sky color, the second parameter is the groundColor and the third parameter is the intensity:

const hemisphereLight = new THREE.HemisphereLight(0xff0000, 0x0000ff, 0.9)

scene.add(hemisphereLight)

PointLight

The PointLight is almost like a lighter. The light source is infinitely small, and the light spreads uniformly in every direction. The first parameter is the color and the second parameter is the intensity:

const pointLight = new THREE.PointLight(0xff9000, 1.5)

pointLight.position.set(1, - 0.5, 1)

scene.add(pointLight)

By default, distance is 0, which means the light has no cutoff range, and in recent Three.js versions decay defaults to 2 (a physically based falloff). You can control the distance at which the light fades out and how fast it fades using the distance and decay properties. You can set those as the third and fourth parameters of the class, or as properties of the instance:

const pointLight = new THREE.PointLight(0xff9000, 1.5, 10, 2)

RectAreaLight

The RectAreaLight works like the big rectangle lights you can see on a photo shoot set. It's a mix between a directional light and a diffuse light. The first parameter is the color, the second parameter is the intensity, the third parameter is the width of the rectangle, and the fourth parameter is its height:

const rectAreaLight = new THREE.RectAreaLight(0x4e00ff, 6, 1, 1)

scene.add(rectAreaLight)

The RectAreaLight only works with MeshStandardMaterial and MeshPhysicalMaterial.

You can then move the light and rotate it. To ease the rotation, you can use the lookAt(...) method that we saw in a previous lesson:

rectAreaLight.position.set(- 1.5, 0, 1.5)

rectAreaLight.lookAt(new THREE.Vector3())

SpotLight

The SpotLight works like a flashlight. It's a cone of light starting at a point and oriented in a direction. Here is the list of its parameters:

- color: the color

- intensity: the strength

- distance: the distance at which the intensity drops to 0

- angle: how large the beam is

- penumbra: how diffused the contour of the beam is

- decay: how fast the light dims

const spotLight = new THREE.SpotLight(0x78ff00, 4.5, 10, Math.PI * 0.1, 0.25, 1)

spotLight.position.set(0, 2, 3)

scene.add(spotLight)
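The angle and penumbra parameters combine into a soft edge: full intensity inside an inner cone, zero outside the outer cone, and a smooth transition in between. Here is a sketch modeled on the smoothstep that Three.js's spotlight shader uses between cos(angle) and cos(angle × (1 - penumbra)); the function names are illustrative, and distance and decay are left out:

```javascript
// Hermite smoothstep, as in GLSL: 0 below edge0, 1 above edge1, smooth in between.
function smoothstep(edge0, edge1, x) {
    // Hard edge when both edges coincide (penumbra of 0)
    if (edge0 === edge1) return x < edge0 ? 0 : 1
    const t = Math.min(Math.max((x - edge0) / (edge1 - edge0), 0), 1)
    return t * t * (3 - 2 * t)
}

// offAxisAngle: angle (radians) between the spotlight's axis and the direction to the lit point.
function spotAttenuation(offAxisAngle, angle, penumbra) {
    const coneCos = Math.cos(angle)                      // outer edge of the beam
    const penumbraCos = Math.cos(angle * (1 - penumbra)) // where the fade starts
    return smoothstep(coneCos, penumbraCos, Math.cos(offAxisAngle))
}

// Same angle and penumbra as the SpotLight above
const angle = Math.PI * 0.1
const penumbra = 0.25

const center = spotAttenuation(0, angle, penumbra)            // 1: on the axis
const edge = spotAttenuation(angle * 0.875, angle, penumbra)  // inside the soft contour
const outside = spotAttenuation(angle * 1.2, angle, penumbra) // 0: outside the cone
```

Raising penumbra toward 1 moves the inner cone toward the axis, so the fade covers more of the beam.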

Rotating our SpotLight is a little harder. The instance has a property named target, which is an Object3D. The SpotLight is always looking at that target object. But if you try to change its position, the SpotLight won't budge:

spotLight.target.position.x = - 0.75

That's because the target isn't in the scene, so its world matrix never gets updated. Simply add the target to the scene, and it should work:

scene.add(spotLight.target)

Performance

Lights are great and can be realistic if used well. The problem is that lights can cost a lot when it comes to performance. The GPU has to do many calculations, like the distance from the face to the light, how much that face is facing the light, whether the face is inside the spotlight cone, etc.

Try to add as few lights as possible and try to use the lights that cost less.

Minimal cost:

- AmbientLight

- HemisphereLight

Moderate cost:

- DirectionalLight

- PointLight

High cost:

- SpotLight

- RectAreaLight

Baking

A good technique for lighting is called baking. The idea is to bake the light into the textures, which is done in 3D software. The drawbacks: you won't be able to move the lights (there are none at runtime), and you'll probably need a lot of textures.

Helpers

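Positioning and orienting the lights is hard. To assist us, we can use helpers. Only the following helpers are supported:

- HemisphereLightHelper

- DirectionalLightHelper

- PointLightHelper

- RectAreaLightHelper

- SpotLightHelper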

To use them, instantiate the helper class with the corresponding light as the first parameter, and add the helper to the scene. The second parameter lets you change the helper's size:

const hemisphereLightHelper = new THREE.HemisphereLightHelper(hemisphereLight, 0.2)

scene.add(hemisphereLightHelper)

const directionalLightHelper = new THREE.DirectionalLightHelper(directionalLight, 0.2)

scene.add(directionalLightHelper)

const pointLightHelper = new THREE.PointLightHelper(pointLight, 0.2)

scene.add(pointLightHelper)

const spotLightHelper = new THREE.SpotLightHelper(spotLight)

scene.add(spotLightHelper)

The RectAreaLightHelper is a little harder to use. It isn't part of the THREE core variable, so you must import it from the examples dependencies as we did with OrbitControls:

import { RectAreaLightHelper } from 'three/examples/jsm/helpers/RectAreaLightHelper.js'

Then you can use it:

const rectAreaLightHelper = new RectAreaLightHelper(rectAreaLight)

scene.add(rectAreaLightHelper)

Part 4 Shadows

The backs of the objects are indeed in the dark; this is called the core shadow. What we are missing is the drop shadow, where objects cast shadows on other objects.

Shadows have always been a challenge for real-time 3D rendering, and developers must find tricks to display realistic shadows at a reasonable frame rate.

There are many ways of implementing them, and Three.js has a built-in solution. Be aware, this solution is convenient, but it's far from perfect.

How it works

We won't detail how shadows are working internally, but we will try to understand the basics.

Each time you render, Three.js first does a render for each light that casts shadows. Those renders simulate what the light sees, as if it were a camera. During these light renders, a MeshDepthMaterial replaces the materials of all meshes.

The results are stored as textures named shadow maps.

You won't see those shadow maps directly, but they are used by every material that receives shadows, projected onto the geometry.
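The comparison behind a shadow map can be sketched without a GPU: for each texel, the light's render stores the depth of the closest surface it saw, and a point is in shadow when its own depth from the light is greater than that stored value. The one-row shadow map, the bias value, and the isLit function below are illustrative assumptions, not Three.js internals (Three.js exposes a comparable setting as light.shadow.bias):

```javascript
// Toy shadow map: one row of texels, each holding the closest depth the light saw.
// Depths are distances from the light, in scene units.
const shadowMap = [5, 5, 2, 2, 5, 5] // an occluder at depth 2 covers texels 2 and 3

// true if the point is lit, false if a surface closer to the light blocks it.
// The small bias keeps a surface from shadowing itself because of precision errors ("shadow acne").
function isLit(texelIndex, depthFromLight, bias = 0.05) {
    const closestDepth = shadowMap[texelIndex]
    return depthFromLight - bias <= closestDepth
}

const floorUnderOccluder = isLit(2, 5) // false: the occluder (depth 2) is in front
const floorInTheOpen = isLit(0, 5)     // true: the floor itself is the closest surface
const occluderItself = isLit(2, 2)     // true: nothing is in front of the occluder
```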

Here's an excellent example of what the directional light and the spotlight see: three.js webgl - ShadowMapViewer example

How to activate shadows

First, we need to activate the shadow maps on the renderer:

renderer.shadowMap.enabled = true

Then, we need to go through each object of the scene and decide if the object can cast a shadow with the castShadow property, and if the object can receive shadow with the receiveShadow property.

Try to activate these on as few objects as possible:

sphere.castShadow = true

// ...

plane.receiveShadow = true

Finally, activate the shadows on the light with the castShadow property.

Only the following types of lights support shadows:

- PointLight

- DirectionalLight

- SpotLight

And again, try to activate shadows on as few lights as possible:

directionalLight.castShadow = true

Shadow map optimisations

Render size

As with our render, we need to specify a size. By default, the shadow map size is only 512x512 for performance reasons. We can increase it, but keep in mind that you need a power of 2 value for the mipmapping:

directionalLight.shadow.mapSize.width = 1024

directionalLight.shadow.mapSize.height = 1024

Near and far

Three.js uses cameras to render the shadow maps. Those cameras have the same properties as the cameras we already used, which means that we must define a near and a far. It won't really improve the shadow's quality, but it might fix bugs where you can't see the shadow or where the shadow appears suddenly cropped.

To help us debug the camera and preview the near and far, we can use a CameraHelper with the camera used for the shadow map located in the directionalLight.shadow.camera property:

const directionalLightCameraHelper = new THREE.CameraHelper(directionalLight.shadow.camera)

scene.add(directionalLightCameraHelper)

Now you can visually see the near and far of the camera. Try to find a value that fits the scene:

directionalLight.shadow.camera.near = 1

directionalLight.shadow.camera.far = 6

Amplitude

Because we are using a DirectionalLight, Three.js is using an OrthographicCamera. If you remember from the Cameras lesson, we can control how far on each side the camera can see with the top, right, bottom, and left properties. Let's reduce those properties:

directionalLight.shadow.camera.top = 2

directionalLight.shadow.camera.right = 2

directionalLight.shadow.camera.bottom = - 2

directionalLight.shadow.camera.left = - 2

Blur

You can control the shadow blur with the radius property (it has no effect when renderer.shadowMap.type is THREE.PCFSoftShadowMap):

directionalLight.shadow.radius = 10

Shadow map algorithm

Different types of algorithms can be applied to shadow maps:

- **THREE.BasicShadowMap:** Very performant but lousy quality

- **THREE.PCFShadowMap:** Less performant but smoother edges

- **THREE.PCFSoftShadowMap:** Less performant but even softer edges

- **THREE.VSMShadowMap:** Less performant, more constraints, can have unexpected results
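The PCF variants smooth edges by comparing the depths stored in several neighboring shadow map texels instead of just one, then averaging the lit/shadowed results (percentage-closer filtering). Here is a one-dimensional sketch of the principle; Three.js's actual kernels are two-dimensional and differ between PCFShadowMap and PCFSoftShadowMap, and the compare and pcf names are hypothetical:

```javascript
// Toy 1D shadow map: closest depth the light saw for each texel.
const shadowMap = [5, 5, 5, 2, 2, 2, 5, 5] // an occluder at depth 2 covers texels 3 to 5

// Hard comparison for a single texel: 1 = lit, 0 = in shadow.
function compare(index, depth, bias = 0.05) {
    // Clamp to the map's edges so out-of-range neighbors reuse the border texels
    const i = Math.min(Math.max(index, 0), shadowMap.length - 1)
    return depth - bias <= shadowMap[i] ? 1 : 0
}

// Percentage-closer filtering: average the comparisons over a small neighborhood.
function pcf(index, depth, radius = 1) {
    let sum = 0
    for (let offset = -radius; offset <= radius; offset++) {
        sum += compare(index + offset, depth)
    }
    return sum / (2 * radius + 1)
}

// Amount of light reaching a floor at depth 5 under each texel
const row = shadowMap.map((_, i) => pcf(i, 5)) // [1, 1, 2/3, 1/3, 0, 1/3, 2/3, 1]
```

Without filtering, the same row would jump straight from 1 to 0; the intermediate values are what make the shadow edge look soft.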

To change it, update the renderer.shadowMap.type property. The default is THREE.PCFShadowMap but you can use THREE.PCFSoftShadowMap for better quality.

renderer.shadowMap.type = THREE.PCFSoftShadowMap

SpotLight

// Spot light

const spotLight = new THREE.SpotLight(0xffffff, 3.6, 10, Math.PI * 0.3)

spotLight.castShadow = true

spotLight.position.set(0, 2, 2)

scene.add(spotLight)

scene.add(spotLight.target)

const spotLightCameraHelper = new THREE.CameraHelper(spotLight.shadow.camera)

scene.add(spotLightCameraHelper)

PointLight

// Point light

const pointLight = new THREE.PointLight(0xffffff, 2.7)

pointLight.castShadow = true

pointLight.position.set(- 1, 1, 0)

scene.add(pointLight)

const pointLightCameraHelper = new THREE.CameraHelper(pointLight.shadow.camera)

scene.add(pointLightCameraHelper)

Baking shadows

Three.js shadows can be very useful if the scene is simple, but it might otherwise become messy.

A good alternative is baked shadows. We talked about baked lights in the previous lesson, and it's exactly the same thing: shadows are integrated into textures that we apply on materials.

Instead of commenting all the shadows related lines of code, we can simply deactivate them in the renderer:

renderer.shadowMap.enabled = false

Now we can load a shadow texture located in /static/textures/bakedShadow.jpg using the classic TextureLoader.


/**

* Textures

*/

const textureLoader = new THREE.TextureLoader()

const bakedShadow = textureLoader.load('/textures/bakedShadow.jpg')

bakedShadow.colorSpace = THREE.SRGBColorSpace

const plane = new THREE.Mesh(

new THREE.PlaneGeometry(5, 5),

new THREE.MeshBasicMaterial({

map: bakedShadow

})

)

Ghosts

We are going to use simple lights floating around the house and passing through the ground and graves.
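The animation code in this section moves each ghost on a circle: for an angle that grows with time, (cos(angle) × radius, sin(angle) × radius) traces a circle of that radius on the x/z ground plane, a negative multiplier on the time reverses the direction, and a sine on y makes the ghost bob up and down. Here is a standalone sketch of ghost1's path; ghostPosition is a hypothetical helper using the same constants:

```javascript
// Position of a ghost orbiting the origin, with the same formulas as ghost1.
// angularSpeed: radians per second (negative = opposite direction), radius in scene units.
function ghostPosition(elapsedTime, angularSpeed = 0.5, radius = 4) {
    const angle = elapsedTime * angularSpeed
    return {
        x: Math.cos(angle) * radius, // horizontal circle...
        z: Math.sin(angle) * radius, // ...on the x/z ground plane
        y: Math.sin(elapsedTime * 3) // bobbing between -1 and 1
    }
}

const start = ghostPosition(0)             // { x: 4, z: 0, y: 0 }
const quarterTurn = ghostPosition(Math.PI) // angle = PI / 2, so x ≈ 0 and z = 4
```

Since y can go below 0, the ghosts sink through the ground and graves from time to time, which is the intended effect.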

/**

* Ghosts

*/

const ghost1 = new THREE.PointLight('#ff00ff', 6, 3)

scene.add(ghost1)

const ghost2 = new THREE.PointLight('#00ffff', 6, 3)

scene.add(ghost2)

const ghost3 = new THREE.PointLight('#ffff00', 6, 3)

scene.add(ghost3)

Now we can animate them using some mathematics with a lot of trigonometry:

const clock = new THREE.Clock()

const tick = () =>

{

const elapsedTime = clock.getElapsedTime()

// Ghosts

const ghost1Angle = elapsedTime * 0.5

ghost1.position.x = Math.cos(ghost1Angle) * 4

ghost1.position.z = Math.sin(ghost1Angle) * 4

ghost1.position.y = Math.sin(elapsedTime * 3)

const ghost2Angle = - elapsedTime * 0.32

ghost2.position.x = Math.cos(ghost2Angle) * 5

ghost2.position.z = Math.sin(ghost2Angle) * 5

ghost2.position.y = Math.sin(elapsedTime * 4) + Math.sin(elapsedTime * 2.5)

const ghost3Angle = - elapsedTime * 0.18

ghost3.position.x = Math.cos(ghost3Angle) * (7 + Math.sin(elapsedTime * 0.32))

ghost3.position.z = Math.sin(ghost3Angle) * (7 + Math.sin(elapsedTime * 0.5))

ghost3.position.y = Math.sin(elapsedTime * 4) + Math.sin(elapsedTime * 2.5)

// ...

}

Part 5 Particles

First particles

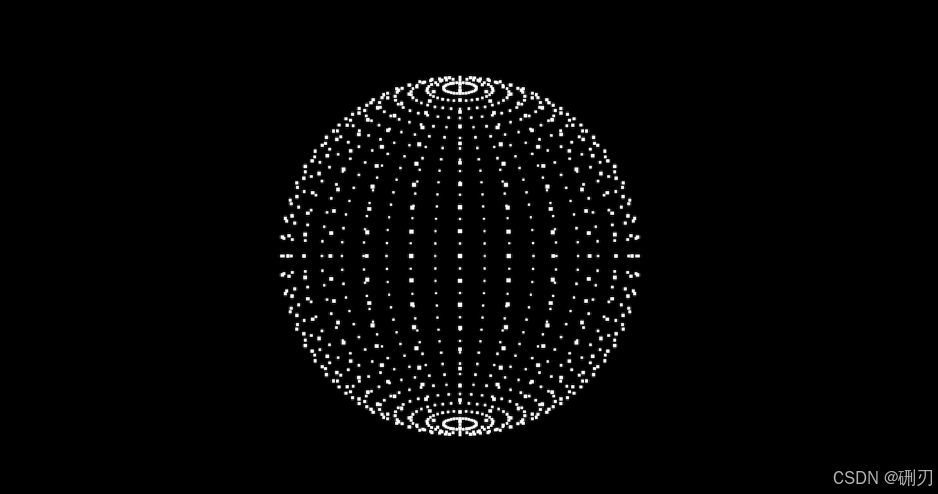

Let's get rid of our cube and create a sphere composed of particles to start.


/**

* Particles

*/

// Geometry

const particlesGeometry = new THREE.SphereGeometry(1, 32, 32)

// Material

const particlesMaterial = new THREE.PointsMaterial()

particlesMaterial.size = 0.02

particlesMaterial.sizeAttenuation = true

// Points

const particles = new THREE.Points(particlesGeometry, particlesMaterial)

scene.add(particles)
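With sizeAttenuation set to true, particles farther from the camera are drawn smaller, as with perspective. Here is a rough sketch of that scaling, assuming the Three.js points shader multiplies size × pixel ratio by half the viewport height divided by the distance to the camera; treat the exact constants as an assumption and use it for intuition rather than exact pixel values:

```javascript
// Approximate on-screen size (in device pixels) of one particle.
// size: PointsMaterial.size, depth: distance from the camera along its view axis.
function pointSizeInPixels(size, depth, viewportHeight, pixelRatio = 1, sizeAttenuation = true) {
    let pixels = size * pixelRatio
    if (sizeAttenuation) {
        // Perspective scaling: the size halves when the distance doubles
        pixels *= (viewportHeight * 0.5) / depth
    }
    return pixels
}

const near = pointSizeInPixels(0.02, 3, 800)           // about 2.67 px
const far = pointSizeInPixels(0.02, 6, 800)            // about 1.33 px
const flat = pointSizeInPixels(0.02, 6, 800, 1, false) // 0.02 px: size is read as pixels
```

This also explains why a size of 0.02 only makes sense with sizeAttenuation enabled: without it, the value is interpreted directly in pixels.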

Custom geometry


// Geometry

const particlesGeometry = new THREE.BufferGeometry()

const count = 500

const positions = new Float32Array(count * 3) // Multiply by 3 because each position is composed of 3 values (x, y, z)

for(let i = 0; i < count * 3; i++) // Multiply by 3 for same reason

{

positions[i] = (Math.random() - 0.5) * 10 // Math.random() - 0.5 gives a value between -0.5 and +0.5, so each coordinate ends up between -5 and +5

}

particlesGeometry.setAttribute('position', new THREE.BufferAttribute(positions, 3)) // Create the Three.js BufferAttribute and specify that each information is composed of 3 values

const particlesMaterial = new THREE.PointsMaterial()

particlesMaterial.size = 0.1

particlesMaterial.color = new THREE.Color('#ff88cc')

// Points

const particles = new THREE.Points(particlesGeometry, particlesMaterial)

scene.add(particles)
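The positions array is flat: vertex i uses index i * 3 for x, i * 3 + 1 for y and i * 3 + 2 for z, which is what the 3 passed to BufferAttribute describes. Here is a plain-JavaScript sketch of reading and writing one vertex in that layout; setVertex and getVertex are hypothetical helpers:

```javascript
// Flat layout used by a BufferAttribute with an item size of 3: [x0, y0, z0, x1, y1, z1, ...]
const count = 4
const positions = new Float32Array(count * 3)

function setVertex(array, index, x, y, z) {
    const i3 = index * 3
    array[i3] = x
    array[i3 + 1] = y
    array[i3 + 2] = z
}

function getVertex(array, index) {
    const i3 = index * 3
    return { x: array[i3], y: array[i3 + 1], z: array[i3 + 2] }
}

// Same distribution as the course code: each component between -5 and +5
for (let i = 0; i < count; i++) {
    setVertex(positions, i, (Math.random() - 0.5) * 10, (Math.random() - 0.5) * 10, (Math.random() - 0.5) * 10)
}

setVertex(positions, 2, 1, 2, 3)
const third = getVertex(positions, 2) // { x: 1, y: 2, z: 3 }
```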

/**

* Textures

*/

const textureLoader = new THREE.TextureLoader()

const particleTexture = textureLoader.load('/textures/particles/2.png')

// ...

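// Use the texture as an alpha map with transparency, instead of map, so its dark background isn't drawn

particlesMaterial.transparent = true

particlesMaterial.alphaMap = particleTexture

Using alphaTest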

The alphaTest is a value between 0 and 1 that lets WebGL know when not to render a pixel, based on that pixel's transparency. By default, the value is 0, meaning that the pixel is always rendered. If we use a small value such as 0.001, the pixel won't be rendered if its alpha is 0:

particlesMaterial.alphaTest = 0.001

Using depthTest

When drawing, WebGL tests whether what's being drawn is closer than what's already drawn. This is called depth testing, and it can be deactivated (you can comment out the alphaTest):

particlesMaterial.depthTest = false

Using depthWrite

The depth of what's being drawn is stored in what we call a depth buffer. Instead of not testing whether the particle is closer than what's in this depth buffer, we can tell WebGL not to write particles into that depth buffer (you can comment out the depthTest):

particlesMaterial.depthWrite = false

Blending

By changing the blending property, we can tell WebGL not only to draw the pixel, but also to add its color to the color of the pixel already drawn. That creates a saturation effect that can look amazing.
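Per color channel, additive blending roughly computes new color × new alpha + color already drawn, clamped at 1 when written to a standard frame buffer. This sketch assumes the common SRC_ALPHA / ONE blend function for non-premultiplied alpha; it shows why overlapping particles saturate toward white:

```javascript
// Additive blending for one color channel (values in 0..1).
// Follows a SRC_ALPHA / ONE blend function; a standard frame buffer clamps the result at 1.
function additiveBlend(src, srcAlpha, dst) {
    return Math.min(src * srcAlpha + dst, 1)
}

// Stack five semi-transparent pink particles on a black background (red channel only)
let red = 0
for (let i = 0; i < 5; i++) {
    red = additiveBlend(1, 0.3, red)
}
// By the fourth particle the sum exceeds 1, so the channel is saturated at 1
```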

To test that, simply change the blending property to THREE.AdditiveBlending (keep the depthWrite property):

particlesMaterial.depthWrite = false

particlesMaterial.blending = THREE.AdditiveBlending

Different colors

const positions = new Float32Array(count * 3)

const colors = new Float32Array(count * 3)

for(let i = 0; i < count * 3; i++)

{

positions[i] = (Math.random() - 0.5) * 10

colors[i] = Math.random()

}

particlesGeometry.setAttribute('position', new THREE.BufferAttribute(positions, 3))

particlesGeometry.setAttribute('color', new THREE.BufferAttribute(colors, 3))

To activate those vertex colors, simply change the vertexColors property to true:

particlesMaterial.vertexColors = true

Animate

const tick = () =>

{

const elapsedTime = clock.getElapsedTime()

// Update particles

particles.rotation.y = elapsedTime * 0.2

// ...

}

The easiest way to simulate a wave movement is to use a simple sine function. First, we are going to make all vertices go up and down at the same frequency.

The y coordinate can be accessed in the array at index i3 + 1:


const tick = () =>

{

// ...

for(let i = 0; i < count; i++)

{

const i3 = i * 3

particlesGeometry.attributes.position.array[i3 + 1] = Math.sin(elapsedTime)

}

particlesGeometry.attributes.position.needsUpdate = true

// ...

}

All the particles should be moving up and down like a plane.

That's a good start and we are almost there. All we need to do now is offset the sine between the particles so that we get that wave shape.
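Offsetting each particle's sine by its own x coordinate puts neighbors at slightly different points of the cycle, which reads as a wave instead of a flat plane. Here is a sketch on a tiny hand-written positions array, without Three.js; updateWave is a hypothetical name:

```javascript
// Flat [x, y, z, x, y, z, ...] layout, like particlesGeometry.attributes.position.array
const positions = new Float32Array([
    0, 0, 0,
    1, 0, 0,
    2, 0, 0
])

// Same update as the tick function: y = sin(time + x)
function updateWave(array, elapsedTime) {
    const count = array.length / 3
    for (let i = 0; i < count; i++) {
        const i3 = i * 3
        const x = array[i3]
        array[i3 + 1] = Math.sin(elapsedTime + x)
    }
}

updateWave(positions, 0)
// The y values are now sin(0), sin(1) and sin(2): a different height for each x
```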

To do that, we can use the x coordinate. And to get this value we can use the same technique that we used for the y coordinate but instead of i3 + 1, it's just i3:

const tick = () =>

{

// ...

for(let i = 0; i < count; i++)

{

const i3 = i * 3

const x = particlesGeometry.attributes.position.array[i3]

particlesGeometry.attributes.position.array[i3 + 1] = Math.sin(elapsedTime + x)

}

particlesGeometry.attributes.position.needsUpdate = true

// ...

}

Part 6 Imported models

Load the model in Three.js


import { GLTFLoader } from 'three/examples/jsm/loaders/GLTFLoader.js'

const gltfLoader = new GLTFLoader()

gltfLoader.load(

'/models/Duck/glTF/Duck.gltf',

(gltf) =>

{

console.log('success')

console.log(gltf)

},

(progress) =>

{

console.log('progress')

console.log(progress)

},

(error) =>

{

console.log('error')

console.log(error)

}

)

The Duck model comes in several formats; to load another one, only the path passed to gltfLoader.load(...) changes:

'/models/Duck/glTF/Duck.gltf' // Default glTF

// Or

'/models/Duck/glTF-Binary/Duck.glb' // glTF-Binary

// Or

'/models/Duck/glTF-Embedded/Duck.gltf' // glTF-Embedded

Handle the animation

If you look at the loaded gltf object, you can see a property named animations containing multiple AnimationClip instances.

These AnimationClips can't be used directly. We first need to create an AnimationMixer. An AnimationMixer is like a player associated with an object that can contain one or many AnimationClips. The idea is to create one for each object that needs to be animated.

Inside the success function, create a mixer and pass gltf.scene as the parameter.

To play the animation, we must tell the mixer to update itself at each frame. The problem is that our mixer variable has been declared in the load callback function, and we don't have access to it in the tick function. To fix that, we can declare the mixer variable with a null value outside of the load callback function and update it when the model is loaded:

let mixer = null

gltfLoader.load(

'/models/Fox/glTF/Fox.gltf',

(gltf) =>

{

gltf.scene.scale.set(0.03, 0.03, 0.03)

scene.add(gltf.scene)

mixer = new THREE.AnimationMixer(gltf.scene)

const action = mixer.clipAction(gltf.animations[0])

action.play()

}

)

And finally, we can update the mixer in the tick function with deltaTime, the time elapsed since the previous frame.

But before updating it, we must test whether the mixer variable is different from null. This way, we only update the mixer once the model is loaded; while mixer is still null, the animation isn't ready:

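let previousTime = 0

const tick = () =>

{

const elapsedTime = clock.getElapsedTime()

const deltaTime = elapsedTime - previousTime // time since the previous frame, in seconds

previousTime = elapsedTime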

if(mixer)

{

mixer.update(deltaTime)

}

// ...

}

The animation should be running. You can test the other animations by changing the index passed to the clipAction(...) method:

const action = mixer.clipAction(gltf.animations[2])
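mixer.update(...) takes the time elapsed since the previous frame, because the mixer accumulates time on its own. The bookkeeping can be sketched without Three.js; FakeMixer and its 2-second looping clip are hypothetical stand-ins for AnimationMixer:

```javascript
// Stand-in for an animation mixer: it only accumulates time and loops a clip.
class FakeMixer {
    constructor(clipDuration) {
        this.clipDuration = clipDuration
        this.time = 0
    }

    update(deltaTime) {
        // Advance by the frame's duration and wrap around, like a looping clip
        this.time = (this.time + deltaTime) % this.clipDuration
    }
}

const mixer = new FakeMixer(2)
let previousTime = 0

// Simulate tick() being called with irregular elapsed times (as clock.getElapsedTime() would return)
for (const elapsedTime of [0.5, 0.75, 1.5, 2.5]) {
    const deltaTime = elapsedTime - previousTime
    previousTime = elapsedTime
    mixer.update(deltaTime)
}
// 2.5 s have elapsed in total, so the 2 s clip has looped once and sits at 0.5 s
```

Passing elapsedTime instead of deltaTime would add the total running time on every frame, so the animation would play faster and faster.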