Prerequisite: a Kubernetes (k8s) cluster is already deployed.

```shell
# The Kubernetes version used here is 1.28.12
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$kubectl version
Client Version: v1.28.12
Kustomize Version: v5.0.4-0.20230601165947-6ce0bf390ce3
Server Version: v1.28.12
```

Install git

```shell
# Install git; it is used below to clone the kube-prometheus code
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$yum install git -y
```

Download the kube-prometheus source code

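Note: by default this clones the latest version of the code. You can instead check out the kube-prometheus version that matches your Kubernetes version.

```shell
# Clone the code
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$git clone https://github.com/prometheus-operator/kube-prometheus.git
```

The compatibility matrix in the kube-prometheus README shows which kube-prometheus branch supports which Kubernetes versions.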
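To pin a release instead of the default branch, first read the cluster's minor version, then clone the branch the compatibility matrix lists for it. This is a minimal sketch. The helper names are hypothetical, and `release-0.13` in the usage line is only an example, so confirm the branch against the README table.

```shell
# bash: source these helpers into your shell.

# Read `kubectl version` output on stdin and print the server's minor
# version, e.g. "Server Version: v1.28.12" -> "28".
server_minor() {
  awk '/^Server Version:/ {print $3}' | cut -d. -f2
}

# Shallow-clone a single kube-prometheus branch (defaults to main).
# Which branch fits your cluster comes from the README compatibility table.
clone_kube_prometheus() {
  local branch="${1:-main}"
  git clone --depth 1 --branch "$branch" \
    https://github.com/prometheus-operator/kube-prometheus.git
}
```

For the cluster above, `kubectl version | server_minor` prints `28`. `clone_kube_prometheus release-0.13` then fetches only that branch.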

After cloning, there is a kube-prometheus directory with the following contents:

```shell
[root@prometheus-operator /zpf/prometheus]$cd kube-prometheus/
[root@prometheus-operator /zpf/prometheus/kube-prometheus]$ls
build.sh CONTRIBUTING.md example.jsonnet go.mod jsonnetfile.json kustomization.yaml manifests scripts
CHANGELOG.md developer-workspace examples go.sum jsonnetfile.lock.json LICENSE README.md tests
code-of-conduct.md docs experimental jsonnet kubescape-exceptions.json Makefile RELEASE.md
[root@prometheus-operator /zpf/prometheus/kube-prometheus]$cd manifests/
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$ls
...
grafana-config.yaml nodeExporter-prometheusRule.yaml prometheus-podDisruptionBudget.yaml
grafana-dashboardDatasources.yaml nodeExporter-serviceAccount.yaml prometheus-prometheusRule.yaml
grafana-dashboardDefinitions.yaml nodeExporter-serviceMonitor.yaml prometheus-prometheus.yaml
...
setup
```

`setup` is a directory. First, create the prerequisite resources it contains. They register the monitoring.coreos.com CustomResourceDefinitions and create the `monitoring` namespace:

```shell
# Current directory
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$pwd
/zpf/prometheus/kube-prometheus/manifests
# Create the resources in setup/ (CRDs and namespace)
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$kubectl create -f ./setup/
customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/prometheusagents.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/scrapeconfigs.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created
namespace/monitoring created
```

Network policy changes for Prometheus and Grafana

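To make the Prometheus and Grafana web UIs reachable from a browser, some configuration must be changed. The NetworkPolicies shipped with kube-prometheus only admit inbound traffic from selected pods inside the cluster. For example, Grafana's policy only accepts Prometheus pods on port 3000. As a result, browser traffic arriving through a NodePort is blocked whenever the CNI plugin enforces NetworkPolicy.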

Check which NetworkPolicy files exist:

```shell
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$ls|grep networkPolicy
alertmanager-networkPolicy.yaml
blackboxExporter-networkPolicy.yaml
grafana-networkPolicy.yaml
kubeStateMetrics-networkPolicy.yaml
nodeExporter-networkPolicy.yaml
prometheusAdapter-networkPolicy.yaml
prometheus-networkPolicy.yaml
prometheusOperator-networkPolicy.yaml
```

Modify the configuration files
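Both files get the same kind of change. The `podSelector` source of selected ingress rules is commented out and `from` is set to `[]`. An empty `from` list matches all sources, while the rule's `ports` still apply. If the manifests have already been applied, you can make a similar change on the live objects with a JSON Patch. This is a minimal sketch. The helper names are hypothetical, and the rule indexes assume the rule order in the files that follow (0 for grafana; 0 and 1 for prometheus-k8s).

```shell
# bash: source these helpers into your shell.

# Print a JSON Patch that replaces spec.ingress[INDEX].from with [].
# An empty "from" matches all sources; the rule's "ports" still apply.
ingress_open_patch() {
  local idx="$1"
  printf '[{"op":"replace","path":"/spec/ingress/%s/from","value":[]}]' "$idx"
}

# Apply that patch to a NetworkPolicy in the monitoring namespace.
open_ingress_rule() {
  local policy="$1" idx="$2"
  kubectl -n monitoring patch networkpolicy "$policy" \
    --type=json -p "$(ingress_open_patch "$idx")"
}
```

Running `open_ingress_rule grafana 0`, `open_ingress_rule prometheus-k8s 0` and `open_ingress_rule prometheus-k8s 1` mirrors the file edits. A later `kubectl apply` of the unmodified manifests would revert the patch, so editing the files, as done here, is the more durable option.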

Edit grafana-networkPolicy.yaml:

```shell
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$cat grafana-networkPolicy.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 11.6.0
  name: grafana
  namespace: monitoring
spec:
  egress: # default, unchanged: all outbound traffic is allowed
  - {}
  ingress:
  - from: [] # original source commented out below; inbound traffic from any source is now allowed
    # - podSelector:
    #     matchLabels:
    #       app.kubernetes.io/name: prometheus
    ports:
    - port: 3000
      protocol: TCP
  podSelector:
    matchLabels:
      app.kubernetes.io/component: grafana
      app.kubernetes.io/name: grafana
      app.kubernetes.io/part-of: kube-prometheus
  policyTypes:
  - Egress
  - Ingress
```

In a NetworkPolicy, `ingress` controls which sources may connect to the selected pods (inbound traffic). `egress` controls which destinations those pods may connect to (outbound traffic).

Edit prometheus-networkPolicy.yaml:

```shell
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$cat prometheus-networkPolicy.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 3.2.1
  name: prometheus-k8s
  namespace: monitoring
spec:
  egress:
  - {}
  ingress:
  - from: [] # opened to all sources
    # - podSelector:
    #     matchLabels:
    #       app.kubernetes.io/name: prometheus
    ports:
    - port: 9090
      protocol: TCP
    - port: 8080
      protocol: TCP
  - from: [] # opened to all sources
    # - podSelector:
    #     matchLabels:
    #       app.kubernetes.io/name: prometheus-adapter
    ports:
    - port: 9090
      protocol: TCP
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: grafana
    ports:
    - port: 9090
      protocol: TCP
  podSelector:
    matchLabels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/instance: k8s
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
  policyTypes:
  - Egress
  - Ingress
```

Modify the Services to expose ports for browser access
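Each `nodePort` value below must fall inside the cluster's NodePort range, which is 30000-32767 unless kube-apiserver's `--service-node-port-range` was changed. It must also not already be used by another Service. This is a minimal sketch for checking both. The helper names are hypothetical, and the range bounds assume the default.

```shell
# bash: source these helpers into your shell.

# Default kube-apiserver --service-node-port-range; change these two values
# if your cluster was configured with a different range.
NODEPORT_MIN=30000
NODEPORT_MAX=32767

# Succeed if PORT lies inside the NodePort range.
nodeport_in_range() {
  local port="$1"
  [ "$port" -ge "$NODEPORT_MIN" ] && [ "$port" -le "$NODEPORT_MAX" ]
}

# Succeed if PORT appears as a whole line in the list read from stdin.
port_listed() {
  grep -qx -- "$1"
}

# Print every nodePort already allocated in the cluster, one per line.
# Ports without a nodePort print an empty line, which grep removes.
used_nodeports() {
  kubectl get svc -A \
    -o jsonpath='{range .items[*]}{range .spec.ports[*]}{.nodePort}{"\n"}{end}{end}' \
    | grep -v '^$' | sort -n
}
```

For example, `nodeport_in_range 30090 && ! used_nodeports | port_listed 30090 && echo free` prints `free` when 30090 can be used. Run the check before creating these Services; afterwards their own ports show up as used.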

Edit prometheus-service.yaml:

```shell
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$cat prometheus-service.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 3.2.1
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort # added: expose the Service as a NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 30090 # added: pick a port that does not clash with existing ones
  - name: reloader-web
    port: 8080
    targetPort: reloader-web
    nodePort: 30080 # added, as above
  selector:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
  sessionAffinity: ClientIP
```

Edit grafana-service.yaml:

```shell
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$cat grafana-service.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 11.6.0
  name: grafana
  namespace: monitoring
spec:
  type: NodePort # exposure type
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 30030 # exposed node port
  selector:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
```

Also note: some images may fail to download. Pull those separately and update the corresponding deployment files.

```shell
# Images needed (columns: repository, tag, image ID, created, size).
# Pull them yourself and push them to your own container registry.
ghcr.io/jimmidyson/configmap-reload v0.15.0 74ddcf8dfe2a 7 days ago 11.5MB
grafana/grafana 11.6.0 5c42a1c2e40b 3 weeks ago 663MB
quay.io/prometheus/prometheus v3.2.1 503e04849f1c 7 weeks ago 295MB
quay.io/brancz/kube-rbac-proxy v0.19.0 da047c323be4 8 weeks ago 73.9MB
registry.cn-beijing.aliyuncs.com/scorpio/kube-state-metrics v2.15.0 8650a5270cac 2 months ago 51.4MB
```
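Once these images are in your own registry, the `image:` lines in the manifests must point there. This is a minimal sketch using GNU `sed -i`. It assumes the images were pushed under a flat `<registry>/<name>:<tag>` layout and that `image:` values are unquoted. `harbor.example.com/monitoring` is a placeholder registry, and `retarget_images` is a hypothetical helper.

```shell
# bash + GNU sed: source this helper into your shell.

# Rewrite every "image:" line in the given files so that it points at REGISTRY,
# keeping only the last path component (name:tag) of the original reference:
#   image: quay.io/prometheus/prometheus:v3.2.1
#   -> image: REGISTRY/prometheus:v3.2.1
# Assumes images were pushed as REGISTRY/<name>:<tag> and values are unquoted.
retarget_images() {
  local registry="$1"
  shift
  sed -E -i "s#(image: ).*/([^/]+)\$#\1${registry}/\2#" "$@"
}
```

For example, `retarget_images harbor.example.com/monitoring blackboxExporter-deployment.yaml grafana-deployment.yaml kubeStateMetrics-deployment.yaml prometheusAdapter-deployment.yaml` edits the deployment files in place. Other manifests may pin images too; `grep -l 'image:' *.yaml` lists them. The pods also need an `imagePullSecrets` entry that references your registry Secret.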

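Replace the images in the related deployment files accordingly (the image-pull Secret was created beforehand here). These are the deployment files to modify:

```shell
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$ls |grep deploy
blackboxExporter-deployment.yaml
grafana-deployment.yaml
kubeStateMetrics-deployment.yaml
prometheusAdapter-deployment.yaml
```

Create all remaining resources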
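`kubectl create -f .` creates Prometheus, Alertmanager, ServiceMonitor and other custom resources, so the CRDs registered from `setup/` must already be established. The kube-prometheus README runs `kubectl wait --for condition=Established` between the two steps. This is a minimal sketch of that wait, limited to the ten CRDs created earlier. The helper names are hypothetical.

```shell
# bash: source these helpers into your shell.

# The ten CRDs created from ./setup/ in the earlier output.
MONITORING_CRDS="alertmanagerconfigs alertmanagers podmonitors probes prometheuses
prometheusagents prometheusrules scrapeconfigs servicemonitors thanosrulers"

# Print them as kubectl resource names, one per line.
crd_names() {
  local n
  for n in $MONITORING_CRDS; do
    printf 'crd/%s.monitoring.coreos.com\n' "$n"
  done
}

# Block until every one of those CRDs reports the Established condition.
wait_for_crds() {
  local timeout="${1:-120s}"
  # $(crd_names) is left unquoted on purpose: one argument per CRD.
  kubectl wait --for condition=Established $(crd_names) --timeout="$timeout"
}
```

Run `wait_for_crds` after `kubectl create -f ./setup/` and before `kubectl create -f .`.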

```shell
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$kubectl create -f .
/prometheus-k8s created
rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
role.rbac.authorization.k8s.io/prometheus-k8s-config created
role.rbac.authorization.k8s.io/prometheus-k8s created
role.rbac.authorization.k8s.io/prometheus-k8s created
role.rbac.authorization.k8s.io/prometheus-k8s created
...
serviceaccount/prometheus-operator created
servicemonitor.monitoring.coreos.com/prometheus-operator created
```

A long list of `created` messages like this means every manifest was accepted. The pods themselves may still be starting.
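Instead of re-running `kubectl get` until everything is up, you can wait for the pods and then list any that are still not ready. This is a minimal sketch. The 300s timeout and the helper names are arbitrary choices.

```shell
# bash: source these helpers into your shell.

# Wait until every pod in the monitoring namespace is Ready, or time out.
wait_for_monitoring_pods() {
  local timeout="${1:-300s}"
  kubectl -n monitoring wait --for=condition=Ready pods --all --timeout="$timeout"
}

# Read `kubectl get pods` output on stdin and print the pods that are not
# fully ready (READY "x/y" with x != y) or whose STATUS is not Running.
not_ready_pods() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2] || $3 != "Running") print $1 }'
}
```

Once everything is up, `kubectl -n monitoring get pods | not_ready_pods` prints nothing. A pod stuck in `ImagePullBackOff` usually points back to the image step above.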

Check the status of the created resources:

```shell
[root@prometheus-operator /zpf/prometheus/kube-prometheus/manifests]$kubectl get all -n monitoring
NAME READY STATUS RESTARTS AGE
pod/alertmanager-main-0 2/2 Running 0 5m56s
pod/alertmanager-main-1 2/2 Running 0 5m56s
pod/alertmanager-main-2 2/2 Running 0 5m56s
pod/blackbox-exporter-7c696fd6c-hm6qh 3/3 Running 0 6m4s
pod/grafana-756dd56bbd-7xfhk 1/1 Running 0 6m2s
pod/kube-state-metrics-859985d9d9-86ldk 3/3 Running 0 6m2s
pod/node-exporter-cfkjr 2/2 Running 0 6m1s
pod/prometheus-adapter-79756858dc-bkfhw 1/1 Running 0 6m
pod/prometheus-adapter-79756858dc-fqhz8 1/1 Running 0 6m
pod/prometheus-k8s-0 2/2 Running 0 5m56s
pod/prometheus-k8s-1 2/2 Running 0 5m56s
pod/prometheus-operator-5f9c6bb959-wn9rt 2/2 Running 0 6m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/alertmanager-main ClusterIP 10.233.7.18 <none> 9093/TCP,8080/TCP 6m4s
service/alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 5m56s
service/blackbox-exporter ClusterIP 10.233.20.198 <none> 9115/TCP,19115/TCP 6m4s
service/grafana NodePort 10.233.41.58 <none> 3000:30030/TCP 6m3s
service/kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 6m2s
service/node-exporter ClusterIP None <none> 9100/TCP 6m1s
service/prometheus-adapter ClusterIP 10.233.21.11 <none> 443/TCP 6m1s
service/prometheus-k8s NodePort 10.233.28.110 <none> 9090:30090/TCP,8080:30080/TCP 6m1s
service/prometheus-operated ClusterIP None <none> 9090/TCP 5m56s
service/prometheus-operator ClusterIP None <none> 8443/TCP 6m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
daemonset.apps/node-exporter 1 1 1 1 1 kubernetes.io/os=linux 6m2s

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/blackbox-exporter 1/1 1 1 6m4s
deployment.apps/grafana 1/1 1 1 6m3s
deployment.apps/kube-state-metrics 1/1 1 1 6m2s
deployment.apps/prometheus-adapter 2/2 2 2 6m1s
deployment.apps/prometheus-operator 1/1 1 1 6m

NAME DESIRED CURRENT READY AGE
replicaset.apps/blackbox-exporter-7c696fd6c 1 1 1 6m4s
replicaset.apps/grafana-756dd56bbd 1 1 1 6m3s
replicaset.apps/kube-state-metrics-859985d9d9 1 1 1 6m2s
replicaset.apps/prometheus-adapter-79756858dc 2 2 2 6m1s
replicaset.apps/prometheus-operator-5f9c6bb959 1 1 1 6m

NAME READY AGE
statefulset.apps/alertmanager-main 3/3 5m56s
statefulset.apps/prometheus-k8s 2/2 5m56s
```

All resources were created successfully.

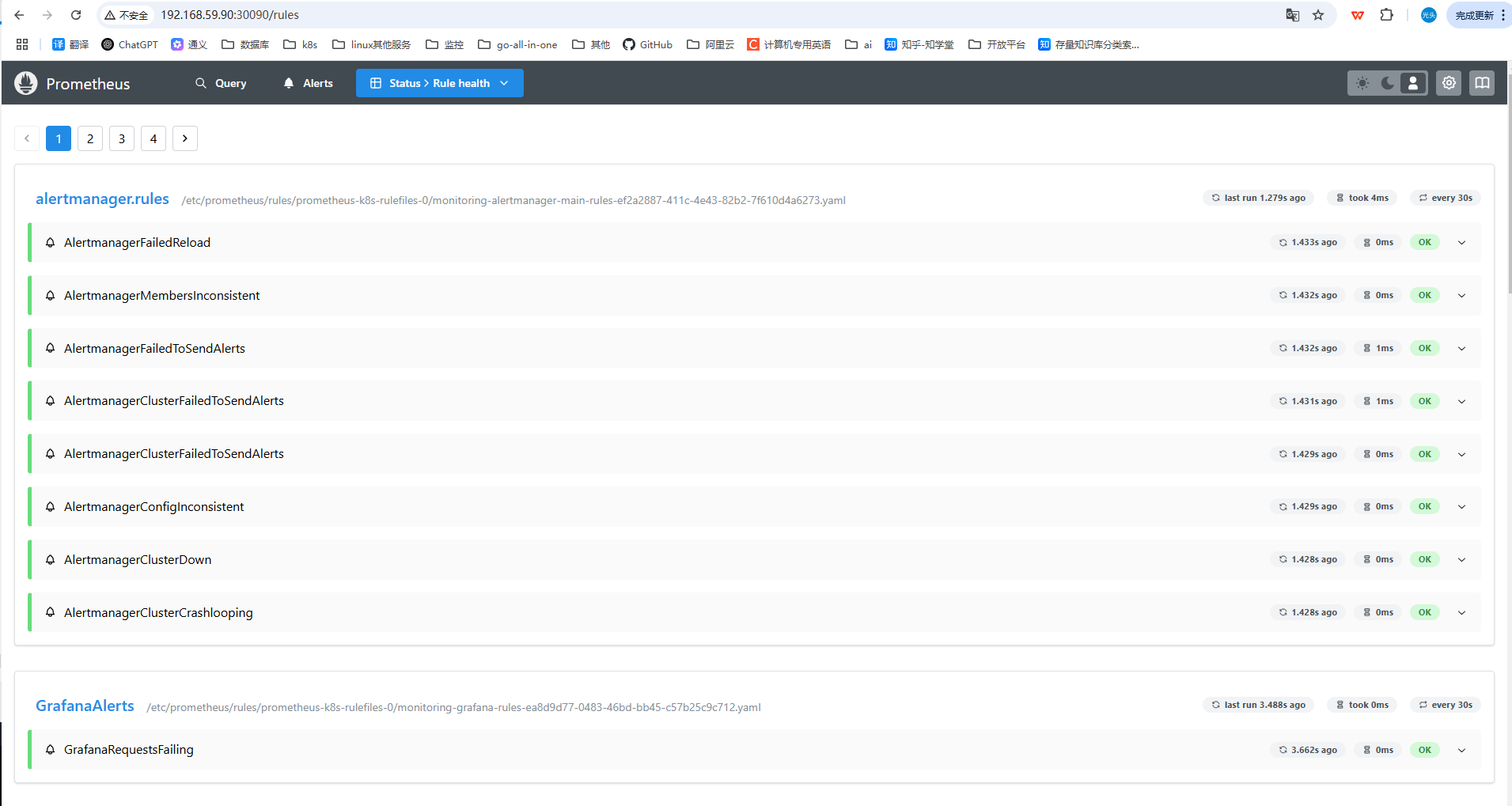

Web UI verification
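Besides opening the pages in a browser, you can check the NodePorts configured above from a shell. Prometheus serves a readiness endpoint at `/-/ready`, and Grafana serves a health endpoint at `/api/health`. This is a minimal sketch. The node IP is a placeholder and the helper names are hypothetical.

```shell
# bash: source these helpers into your shell.

# Print the health-check URLs for the NodePorts set above
# (Prometheus web 30090, Grafana 30030) on the given node IP.
ui_health_urls() {
  local node_ip="$1"
  printf 'http://%s:30090/-/ready\n' "$node_ip"
  printf 'http://%s:30030/api/health\n' "$node_ip"
}

# Request each URL; curl -f turns HTTP errors into a non-zero exit status.
check_ui() {
  local url rc=0
  while read -r url; do
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "OK   $url"
    else
      echo "FAIL $url"
      rc=1
    fi
  done < <(ui_health_urls "$1")
  return "$rc"
}
```

`check_ui 192.0.2.10` prints one OK/FAIL line per URL and returns non-zero if any check fails. Grafana's default login is `admin`/`admin` unless it has been changed.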

Prometheus UI verification

Grafana UI verification

I added a node-exporter dashboard here myself; you can add your own the same way.

Verification succeeded.