Environment

iOS 18

Xcode 16.3

swift-driver version: 1.120.5 Apple Swift version 6.1 (swiftlang-6.1.0.110.21 clang-1700.0.13.3) Target: x86_64-apple-macosx15.0

Core Audio architecture

Describing audio data

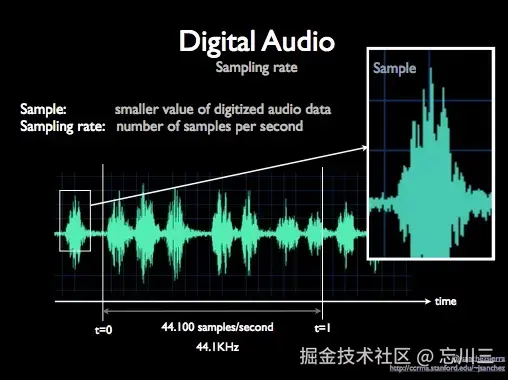

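sample: the value captured for a single channel at one instant. The sample rate is the number of samples taken per second from the continuous signal to form the discrete signal, expressed in hertz (Hz).

frame: the set of samples, one per channel, taken at the same instant.

packet: one or more contiguous frames.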
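These three units are related by simple arithmetic. The sketch below is only an illustration: the `StreamTiming` type and its property names are made up for this example, and the inputs (44.1 kHz, stereo, 1152 frames per packet, as for MP3) are assumed sample values.

```swift
/// Hypothetical helper, not a Core Audio type: relates samples, frames and packets.
struct StreamTiming {
    let sampleRate: Double      // frames per second, in Hz
    let channels: Int           // samples per frame
    let framesPerPacket: Int    // 1 for uncompressed audio

    /// Individual sample values produced each second across all channels.
    var samplesPerSecond: Double { sampleRate * Double(channels) }
    /// Packets needed to cover one second of audio.
    var packetsPerSecond: Double { sampleRate / Double(framesPerPacket) }
    /// Playback time covered by a single packet, in seconds.
    var secondsPerPacket: Double { Double(framesPerPacket) / sampleRate }
}

let mp3Timing = StreamTiming(sampleRate: 44_100, channels: 2, framesPerPacket: 1152)
print(mp3Timing.samplesPerSecond)   // 88200.0
print(mp3Timing.packetsPerSecond)   // 38.28125
```

With these inputs one packet covers roughly 26 ms of audio, which is why packet counts, not frame counts, are the natural unit when reading compressed files.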

AudioStreamBasicDescription

```c
// CoreAudioBaseTypes.h
// Format information for audio data
struct AudioStreamBasicDescription
{
    Float64             mSampleRate;        // sample rate
    AudioFormatID       mFormatID;          // format identifier
    AudioFormatFlags    mFormatFlags;       // format-specific flags
    UInt32              mBytesPerPacket;    // bytes per packet
    // frames per packet; for uncompressed audio mFramesPerPacket == 1
    UInt32              mFramesPerPacket;
    UInt32              mBytesPerFrame;     // bytes per frame
    UInt32              mChannelsPerFrame;  // channels per frame
    UInt32              mBitsPerChannel;    // bits per channel
    UInt32              mReserved;          // reserved
};
typedef struct AudioStreamBasicDescription AudioStreamBasicDescription;
```
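For linear PCM the size fields are not independent: the bytes per frame follow from the channel count and bit depth, and the bytes per packet follow from the frames per packet. A minimal sketch, assuming packed, interleaved, signed-integer samples; the helper name `makeInterleavedPCMFormat` is made up for this example and is not part of Core Audio.

```swift
import CoreAudioTypes

/// Hypothetical helper: describes packed, interleaved, signed-integer linear PCM.
func makeInterleavedPCMFormat(sampleRate: Double,
                              channels: UInt32,
                              bitsPerChannel: UInt32) -> AudioStreamBasicDescription {
    // One frame holds one sample per channel.
    let bytesPerFrame = channels * (bitsPerChannel / 8)
    return AudioStreamBasicDescription(
        mSampleRate: sampleRate,
        mFormatID: kAudioFormatLinearPCM,
        mFormatFlags: kAudioFormatFlagIsSignedInteger | kAudioFormatFlagIsPacked,
        mBytesPerPacket: bytesPerFrame,   // uncompressed: one frame per packet
        mFramesPerPacket: 1,
        mBytesPerFrame: bytesPerFrame,
        mChannelsPerFrame: channels,
        mBitsPerChannel: bitsPerChannel,
        mReserved: 0
    )
}

let cdQuality = makeInterleavedPCMFormat(sampleRate: 44_100, channels: 2, bitsPerChannel: 16)
print(cdQuality.mBytesPerFrame)   // 4
```

Compressed formats do not follow this rule; for them the byte-size fields are often 0 and the per-packet sizes come from packet descriptions instead.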

```c
CF_ENUM(AudioFormatID)
{
    kAudioFormatLinearPCM               = 'lpcm',
    kAudioFormatAC3                     = 'ac-3',
    kAudioFormat60958AC3                = 'cac3',
    kAudioFormatAppleIMA4               = 'ima4',
    kAudioFormatMPEG4AAC                = 'aac ',
    kAudioFormatMPEG4CELP               = 'celp',
    kAudioFormatMPEG4HVXC               = 'hvxc',
    kAudioFormatMPEG4TwinVQ             = 'twvq',
    kAudioFormatMACE3                   = 'MAC3',
    kAudioFormatMACE6                   = 'MAC6',
    kAudioFormatULaw                    = 'ulaw',
    kAudioFormatALaw                    = 'alaw',
    kAudioFormatQDesign                 = 'QDMC',
    kAudioFormatQDesign2                = 'QDM2',
    kAudioFormatQUALCOMM                = 'Qclp',
    kAudioFormatMPEGLayer1              = '.mp1',
    kAudioFormatMPEGLayer2              = '.mp2',
    kAudioFormatMPEGLayer3              = '.mp3',
    kAudioFormatTimeCode                = 'time',
    kAudioFormatMIDIStream              = 'midi',
    kAudioFormatParameterValueStream    = 'apvs',
    kAudioFormatAppleLossless           = 'alac',
    kAudioFormatMPEG4AAC_HE             = 'aach',
    kAudioFormatMPEG4AAC_LD             = 'aacl',
    kAudioFormatMPEG4AAC_ELD            = 'aace',
    kAudioFormatMPEG4AAC_ELD_SBR        = 'aacf',
    kAudioFormatMPEG4AAC_ELD_V2         = 'aacg',
    kAudioFormatMPEG4AAC_HE_V2          = 'aacp',
    kAudioFormatMPEG4AAC_Spatial        = 'aacs',
    kAudioFormatMPEGD_USAC              = 'usac',
    kAudioFormatAMR                     = 'samr',
    kAudioFormatAMR_WB                  = 'sawb',
    kAudioFormatAudible                 = 'AUDB',
    kAudioFormatiLBC                    = 'ilbc',
    kAudioFormatDVIIntelIMA             = 0x6D730011,
    kAudioFormatMicrosoftGSM            = 0x6D730031,
    kAudioFormatAES3                    = 'aes3',
    kAudioFormatEnhancedAC3             = 'ec-3',
    kAudioFormatFLAC                    = 'flac',
    kAudioFormatOpus                    = 'opus',
    kAudioFormatAPAC                    = 'apac',
};
```

```c
CF_ENUM(AudioFormatFlags)
{
    kAudioFormatFlagIsFloat                     = (1U << 0),     // 0x1
    kAudioFormatFlagIsBigEndian                 = (1U << 1),     // 0x2
    kAudioFormatFlagIsSignedInteger             = (1U << 2),     // 0x4
    kAudioFormatFlagIsPacked                    = (1U << 3),     // 0x8
    kAudioFormatFlagIsAlignedHigh               = (1U << 4),     // 0x10
    kAudioFormatFlagIsNonInterleaved            = (1U << 5),     // 0x20
    kAudioFormatFlagIsNonMixable                = (1U << 6),     // 0x40
    kAudioFormatFlagsAreAllClear                = 0x80000000,

    kLinearPCMFormatFlagIsFloat                 = kAudioFormatFlagIsFloat,
    kLinearPCMFormatFlagIsBigEndian             = kAudioFormatFlagIsBigEndian,
    kLinearPCMFormatFlagIsSignedInteger         = kAudioFormatFlagIsSignedInteger,
    kLinearPCMFormatFlagIsPacked                = kAudioFormatFlagIsPacked,
    kLinearPCMFormatFlagIsAlignedHigh           = kAudioFormatFlagIsAlignedHigh,
    kLinearPCMFormatFlagIsNonInterleaved        = kAudioFormatFlagIsNonInterleaved,
    kLinearPCMFormatFlagIsNonMixable            = kAudioFormatFlagIsNonMixable,
    kLinearPCMFormatFlagsSampleFractionShift    = 7,
    kLinearPCMFormatFlagsSampleFractionMask     = (0x3F << kLinearPCMFormatFlagsSampleFractionShift),
    kLinearPCMFormatFlagsAreAllClear            = kAudioFormatFlagsAreAllClear,

    kAppleLosslessFormatFlag_16BitSourceData    = 1,
    kAppleLosslessFormatFlag_20BitSourceData    = 2,
    kAppleLosslessFormatFlag_24BitSourceData    = 3,
    kAppleLosslessFormatFlag_32BitSourceData    = 4
};
```

About the name: Although this data type has "stream" in its name, you use it in every instance where you need to represent an audio data format in Core Audio, including in non-streamed, standard files. You could think of it as the "audio format basic description" data type. The "stream" in the name refers to the fact that audio formats come into play whenever you need to move (that is, stream) audio data around in hardware or software.

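Put differently, the "stream" in the type name only refers to audio data being moved around; the type itself is simply the description of an audio format on iOS.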
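Because mFormatFlags is a bit field, a raw value such as 12 (0xC) only becomes readable once it is split into individual bits. The sketch below does that in plain Swift; the table `formatFlagNames` and the function `describeFormatFlags` are made up for this example, and the bit values are copied from the kAudioFormatFlag constants in the AudioFormatFlags enum. It assumes the flags belong to a linear PCM format, since other formats (Apple Lossless, for instance) give the same numbers a different meaning.

```swift
// Bit positions copied from the kAudioFormatFlag* constants; names shortened for display.
let formatFlagNames: [(bit: UInt32, name: String)] = [
    (1 << 0, "IsFloat"),
    (1 << 1, "IsBigEndian"),
    (1 << 2, "IsSignedInteger"),
    (1 << 3, "IsPacked"),
    (1 << 4, "IsAlignedHigh"),
    (1 << 5, "IsNonInterleaved"),
    (1 << 6, "IsNonMixable"),
]

/// Hypothetical helper: lists the linear PCM flags that are set in a raw value.
func describeFormatFlags(_ flags: UInt32) -> [String] {
    formatFlagNames.filter { flags & $0.bit != 0 }.map { $0.name }
}

print(describeFormatFlags(0xC))   // ["IsSignedInteger", "IsPacked"]
```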

Reading an audio file's format

```swift
// Requires: import AudioToolbox
public func audioFormat() -> AudioStreamBasicDescription? {
    var audioFile: AudioFileID?   // optional: stays nil if the open fails
    var status = AudioFileOpenURL(self.path as CFURL, .readPermission, 0, &audioFile)
    guard status == noErr, let audioFile else {
        print("*** Error *** filePath: \(self.path) -- code: \(status)")
        return nil
    }
    // Close the file on every exit path so the AudioFileID does not leak.
    defer { AudioFileClose(audioFile) }

    // Read the audio data format.
    var dataFormat = AudioStreamBasicDescription()
    var size = UInt32(MemoryLayout<AudioStreamBasicDescription>.size)
    status = AudioFileGetProperty(
        audioFile,
        kAudioFilePropertyDataFormat,
        &size,
        &dataFormat
    )
    // Check that the property was actually read.
    guard status == noErr else {
        print("Failed to get data format: \(status)")
        return nil
    }
    return dataFormat
}
```

I loaded a 44.1 kHz, two-channel MP3 file. Its mFormatID is 778924083 = 0x2E6D7033, which is the four-character code ".mp3".
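The conversion from the integer to ".mp3" is just reading the four bytes, most significant first, as ASCII. A small sketch in plain Swift; the function name `fourCharCodeString` is made up for this example, and showing non-printable bytes as "?" is an arbitrary choice.

```swift
/// Hypothetical helper: renders a 32-bit four-character code as text.
func fourCharCodeString(_ code: UInt32) -> String {
    let shifts: [UInt32] = [24, 16, 8, 0]   // most significant byte first
    let characters = shifts.map { shift -> Character in
        let byte = UInt8((code >> shift) & 0xFF)
        // Show non-printable bytes as "?" so the result is always readable.
        return (0x20...0x7E).contains(byte) ? Character(UnicodeScalar(byte)) : "?"
    }
    return String(characters)
}

print(fourCharCodeString(778924083))   // .mp3
```

The same helper is handy for OSStatus values, many of which are also four-character codes.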

AudioStreamPacketDescription

```c
// CoreAudioBaseTypes.h
// Describes one packet of audio data
struct AudioStreamPacketDescription {
    // Byte offset of the packet from the start of the buffer.
    // For example, if the data buffer contains 5 bytes of data, with one byte per packet,
    // then the start offset for the last packet is 4. There are 4 bytes in the buffer
    // before the start of the last packet.
    SInt64  mStartOffset;
    UInt32  mVariableFramesInPacket;  // number of frames in the packet; 0 for formats with a constant number of frames per packet
    UInt32  mDataByteSize;            // size of the packet, in bytes
};
typedef struct AudioStreamPacketDescription AudioStreamPacketDescription;
```

Proxy Objects, Properties, Scopes, Elements

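| Name | Description |
|---|---|
| Proxy Objects | Code-level abstractions of things such as audio files and streams |
| Properties | Manage an object's state or behavior |
| Scopes | The part of an object a property applies to: input or output |
| Elements | A specific input or output within a scope, for example a particular device such as Bluetooth or wired |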

A property key is usually a constant, such as kAudioFilePropertyFileFormat or kAudioQueueDeviceProperty_NumberChannels.

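Callbacks: interacting with Core Audio

Core Audio interfaces interact with the app through callback functions, typically in the following situations:

- Delivering audio data to the app (for example when recording; the callback then writes the new data to disk).

- Requesting audio data from the app (for example for playback; the callback reads the data from disk or memory and supplies it).

- Notifying the app that an audio object has changed state (the callback takes the appropriate action, such as updating the UI).

Implementing a callback takes two steps: registering it, and handling the call.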
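The two steps can be sketched without any audio API. Everything in this example (`StateCallback`, `Listener`, `Registration`) is a made-up stand-in for the framework side; only the `Unmanaged` round trip mirrors what a real Core Audio registration needs, because a C function pointer cannot capture Swift context and has to recover its receiver from an opaque pointer.

```swift
// Hypothetical stand-in for a Core Audio style C callback signature.
typealias StateCallback = @convention(c) (UnsafeMutableRawPointer?, Int32) -> Void

final class Listener {
    var lastState: Int32 = 0
}

// Step 2, handling the call: recover the receiver from the raw pointer.
let onStateChange: StateCallback = { userData, newState in
    guard let userData else { return }
    let listener = Unmanaged<Listener>.fromOpaque(userData).takeUnretainedValue()
    listener.lastState = newState
}

// Hypothetical registry playing the role of the framework.
struct Registration {
    let callback: StateCallback
    let userData: UnsafeMutableRawPointer?
    func fire(_ state: Int32) { callback(userData, state) }
}

let listener = Listener()
// Step 1, registering: hand over the function plus an opaque pointer to the receiver.
let registration = Registration(
    callback: onStateChange,
    userData: Unmanaged.passUnretained(listener).toOpaque()
)
registration.fire(3)
print(listener.lastState)   // 3
```

`passUnretained` does not keep the object alive, so the receiver must outlive the registration; that is a design choice of this sketch, and `passRetained` with a matching release is the alternative when lifetimes are less clear.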

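Related frameworks

| Name | Description |
|---|---|
| AVFoundation | Provides the AVAudioPlayer class, a simplified native interface for audio playback, and the AVAudioEngine class for more complex audio processing |
| AudioToolbox | Provides interfaces for the higher-level services in Core Audio, including Audio Session Services for managing an app's audio behavior on iOS, and lets apps use audio plug-ins such as audio units and codecs |
| OpenAL | Provides the interfaces for working with OpenAL |
| CoreAudioTypes | Provides the data types used by Core Audio, as well as interfaces for low-level services |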

Example

Playing music

Using AudioToolbox.framework

```swift
func play() {
    guard var format = audioFormat() else {
        return
    }

    // Open the audio file.
    var status = AudioFileOpenURL(path as CFURL, .readPermission, 0, &audioFile)
    guard status == noErr else {
        return
    }

    let context = Unmanaged.passUnretained(self).toOpaque()
    /**
     An audio queue is a software object used for recording or playing audio on iOS and macOS.
     It is represented by the AudioQueueRef data type, declared in AudioQueue.h.
     An audio queue:
     * connects to the audio hardware
     * manages memory
     * uses codecs, as needed, for compressed audio formats
     * mediates recording or playback
     */
    // Create the output queue and register the callback.
    status = AudioQueueNewOutput(&format, playbackCallback, context, nil, nil, 0, &queue)
    guard status == noErr else {
        print("Create new output queue error")
        return
    }

    // Read the upper bound of a packet's size; size must hold the destination's byte size.
    var size = UInt32(MemoryLayout.size(ofValue: maxPacketSize))
    status = AudioFileGetProperty(audioFile!, kAudioFilePropertyPacketSizeUpperBound, &size, &maxPacketSize)
    guard status == noErr else {
        print("Get maxPacketSize property error")
        return
    }

    // Compute the buffer size.
    // mFramesPerPacket: For uncompressed audio, the value is 1. For variable bit-rate formats, the value
    // is a larger fixed number, such as 1024 for AAC. For formats with a variable number of frames per
    // packet, such as Ogg Vorbis, set this field to 0.
    // For MP3 the value is 1152.
    if format.mFramesPerPacket != 0 {
        // Packets per second = sample rate / frames per packet.
        // For example, MP3 at 44100 frames per second with 1152 frames per packet.
        let numPacketsPerSecond = format.mSampleRate / Double(format.mFramesPerPacket)
        // Packets per second * upper bound of the packet size = worst-case bytes for one second of audio.
        outBufferSize = UInt32(numPacketsPerSecond * Double(maxPacketSize))
    } else {
        outBufferSize = max(maxBufferSize, maxPacketSize)
    }

    // Clamp the buffer size to the allowed range.
    if outBufferSize > maxBufferSize && outBufferSize > maxPacketSize {
        outBufferSize = maxBufferSize
    } else if outBufferSize < minBufferSize {
        outBufferSize = minBufferSize
    }

    // Compute the packet count and allocate the packet description array.
    // Packets read per refill = buffer size / upper bound of the packet size.
    numPacketsToRead = outBufferSize / maxPacketSize
    packetDescs = UnsafeMutablePointer<AudioStreamPacketDescription>.allocate(capacity: Int(numPacketsToRead))
    packetDescs?.initialize(repeating: AudioStreamPacketDescription(), count: Int(numPacketsToRead))

    // Allocate maxBufferNum buffers (3 here).
    for i in 0..<maxBufferNum {
        var buffer: AudioQueueBufferRef?
        /**
         AudioQueueAllocateBuffer(inAQ, inBufferByteSize, outBuffer)
         - inAQ: the audio queue you want to allocate a buffer.
         - inBufferByteSize: the desired size of the new buffer, in bytes. An appropriate size depends on
           the processing you will perform on the data as well as on the audio data format.
         - outBuffer: on return, points to the newly created audio buffer. Its mAudioDataByteSize field
           is initially set to 0.
         Once allocated, the pointer to the buffer and the buffer's size are fixed and cannot be changed.
         Returns an OSStatus result code.
         */
        status = AudioQueueAllocateBuffer(queue!, outBufferSize, &buffer)
        guard status == noErr, let buffer else {
            print("Allocate buffer error: \(status)")
            return
        }
        buffers[i] = buffer
        // Pre-fill each of the maxBufferNum buffers before the queue starts.
        audioQueueOutput(audioQueue: queue!, queueBuffer: buffer)
    }

    // Start the queue.
    AudioQueueStart(queue!, nil)
}
```

In the callback, read packets from the audio file and enqueue the data so that the system's queue can play it.

```swift
private let playbackCallback: AudioQueueOutputCallback = {
    inUserData,   // UnsafeMutableRawPointer?
    inAQ,         // AudioQueueRef
    buffer        // AudioQueueBufferRef
    in
    // 1. Guard against a missing context pointer.
    guard let inUserData = inUserData else { return }
    // 2. Convert the pointer back into the object instance.
    let player = Unmanaged<AudioToolboxTest>.fromOpaque(inUserData).takeUnretainedValue()
    // 3. The actual work: refill the audio buffer and hand it back to the queue.
    player.audioQueueOutput(audioQueue: inAQ, queueBuffer: buffer)
}
```

```swift
public func audioQueueOutput(audioQueue: AudioQueueRef, queueBuffer audioQueueBuffer: AudioQueueBufferRef) {
    var ioNumBytes = outBufferSize
    var ioNumPackets = numPacketsToRead
    // Read packets from the audio file.
    let status = AudioFileReadPacketData(
        audioFile!,      // the audio file
        false,           // whether to cache the data
        // On input, the size of outBuffer in bytes (here the buffer capacity).
        // On output, the number of bytes actually returned, which depends on the file.
        &ioNumBytes,
        packetDescs,     // receives the packet descriptions
        packetIndex,     // index of the first packet to read
        &ioNumPackets,   // in: packets requested; out: packets actually read
        audioQueueBuffer.pointee.mAudioData   // destination for the audio data
    )
    guard status == noErr else {
        print("Error reading packet data: \(status)")
        return
    }

    if ioNumPackets > 0 {
        // Record how many bytes of the buffer hold valid audio data.
        audioQueueBuffer.pointee.mAudioDataByteSize = ioNumBytes
        // Enqueue the buffer so the system can play it.
        AudioQueueEnqueueBuffer(
            audioQueue,
            audioQueueBuffer,
            ioNumPackets,
            packetDescs
        )
        // Advance the packet index.
        self.packetIndex += Int64(ioNumPackets)
    } else {
        // No packets left: stop once the buffers already enqueued have played.
        AudioQueueStop(audioQueue, false)
    }
}
```
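The buffer sizing inside play() is easier to reason about when isolated as a pure function. The sketch below mirrors those conditions in plain Swift; the name `deriveBufferSize` is made up for this example, and the bounds used in the call (0x4000 and 0x50000 bytes) and the 1045-byte maximum packet size are assumed values, since the original does not show what minBufferSize, maxBufferSize and maxPacketSize are set to.

```swift
/// Hypothetical pure-function version of the sizing logic in play().
/// Returns the buffer size in bytes and the number of packets read per refill.
func deriveBufferSize(sampleRate: Double,
                      framesPerPacket: UInt32,
                      maxPacketSize: UInt32,
                      minBufferSize: UInt32,
                      maxBufferSize: UInt32) -> (bufferSize: UInt32, packetsToRead: UInt32) {
    var bufferSize: UInt32
    if framesPerPacket != 0 {
        // Worst-case bytes needed for one second of audio.
        let packetsPerSecond = sampleRate / Double(framesPerPacket)
        bufferSize = UInt32(packetsPerSecond * Double(maxPacketSize))
    } else {
        // Variable frames per packet: fall back to the larger of the two bounds.
        bufferSize = max(maxBufferSize, maxPacketSize)
    }
    // Clamp to the allowed range, using the same conditions as play().
    if bufferSize > maxBufferSize && bufferSize > maxPacketSize {
        bufferSize = maxBufferSize
    } else if bufferSize < minBufferSize {
        bufferSize = minBufferSize
    }
    return (bufferSize, bufferSize / maxPacketSize)
}

// Assumed inputs: 44.1 kHz MP3, 1152 frames per packet, 1045-byte packet upper bound.
let mp3Sizing = deriveBufferSize(sampleRate: 44_100, framesPerPacket: 1152,
                                 maxPacketSize: 1045,
                                 minBufferSize: 0x4000, maxBufferSize: 0x50000)
print(mp3Sizing.bufferSize, mp3Sizing.packetsToRead)   // 40003 38
```

With these inputs each buffer holds about one second of audio, so three buffers give the queue roughly three seconds of headroom before the callback has to deliver more data.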