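I. Preface

HAProxy is free, open-source software written in C that provides high availability, load balancing, and proxying for TCP- and HTTP-based applications. Keepalived is a high-availability solution based on VRRP (Virtual Router Redundancy Protocol). This article combines the two, with Apache Tomcat as the web application server, to build a highly available, load-balanced deployment.

II. Server planning

The setup uses four servers and one VIP (192.168.100.135), with software deployed as shown below. All servers run Rocky Linux 9.5. For installing Rocky Linux 9.5, see the CSDN blog post "Rocky Linux 9.5操作系统安装".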

| Role | IP | OS | hostname | Installed software |
|--------|-----------------|---------------|--------------|-------------------------------|
| Load balancer | 192.168.100.131 | RockyLinux9.5 | lb-haproxy1 | haproxy2.4.22, keepalived2.2.8 |
| Load balancer | 192.168.100.132 | RockyLinux9.5 | lb-haproxy2 | haproxy2.4.22, keepalived2.2.8 |
| Web application | 192.168.100.133 | RockyLinux9.5 | web-tomcat1 | jdk18, apache tomcat11 |
| Web application | 192.168.100.134 | RockyLinux9.5 | web-tomcat2 | jdk18, apache tomcat11 |

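III. Deployment

1. JDK 18 installation

Run the following on web-tomcat1 and web-tomcat2.

(1) Copy the installation file jdk-18.0.2.1_linux-x64_bin.tar.gz to /usr/local.

(2) Extract it with tar -zxvf jdk-18.0.2.1_linux-x64_bin.tar.gz. A jdk-18.0.2.1 directory appears under /usr/local, with the following contents: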

bash

[root@web-tomcat1 local]# ls -l jdk-18.0.2.1

total 24

drwxr-xr-x 2 root root 4096 Apr 29 10:51 bin

drwxr-xr-x 5 root root 123 Apr 29 10:51 conf

drwxr-xr-x 3 root root 132 Apr 29 10:51 include

drwxr-xr-x 2 root root 4096 Apr 29 10:51 jmods

drwxr-xr-x 72 root root 4096 Apr 29 10:51 legal

drwxr-xr-x 5 root root 4096 Apr 29 10:51 lib

lrwxrwxrwx 1 10668 10668 23 Aug 16 2022 LICENSE -> legal/java.base/LICENSE

drwxr-xr-x 3 root root 18 Apr 29 10:51 man

-rw-r--r-- 1 10668 10668 290 Aug 16 2022 README

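-rw-r--r-- 1 10668 10668 1233 Aug 16 2022 release

(3) Enter that directory and generate a jre folder; otherwise Tomcat reports a missing JRE when it runs. Run the following command:

./bin/jlink --module-path jmods --add-modules java.desktop --output jre

When it finishes, a jre folder appears under jdk-18.0.2.1: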

bash

[root@web-tomcat1 jdk-18.0.2.1]# ls -l

total 24

drwxr-xr-x 2 root root 4096 Apr 29 10:51 bin

drwxr-xr-x 5 root root 123 Apr 29 10:51 conf

drwxr-xr-x 3 root root 132 Apr 29 10:51 include

drwxr-xr-x 2 root root 4096 Apr 29 10:51 jmods

drwxr-xr-x 8 root root 94 Apr 29 11:00 jre

...........................................

(4) Configure the JDK environment variables: open the file with vim /etc/profile and add the following, then run source /etc/profile to apply it.

bash

export JAVA_HOME=/usr/local/jdk-18.0.2.1

export PATH=$JAVA_HOME/bin:$PATH

export JRE_HOME=${JAVA_HOME}

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

(5) Run java -version to check the JDK version and confirm the installation.

bash

[root@web-tomcat1 /]# java -version

java version "18.0.2.1" 2022-08-18

Java(TM) SE Runtime Environment (build 18.0.2.1+1-1)

Java HotSpot(TM) 64-Bit Server VM (build 18.0.2.1+1-1, mixed mode, sharing)

2. Tomcat installation

Run the following on web-tomcat1 and web-tomcat2. Disable the firewall and SELinux before installing.
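The step above only says to turn off the firewall and SELinux. Here is a minimal sketch of how that is commonly done on Rocky Linux 9. The helper name `set_selinux_mode` is hypothetical, and the commands in the comments are the usual ones rather than anything the original article lists. Outside a lab, opening only port 8080 is usually preferable to disabling the firewall.

```shell
# Hypothetical helper: rewrite the SELINUX= line of an SELinux config file.
# $1 = path to the config file (normally /etc/selinux/config)
# $2 = target mode: enforcing, permissive, or disabled
set_selinux_mode() {
    sed -i "s/^SELINUX=.*/SELINUX=$2/" "$1"
}

# Typical use on web-tomcat1 and web-tomcat2 (run as root):
#   systemctl disable --now firewalld                 # stop the firewall now and at boot
#   setenforce 0                                      # permissive until the next reboot
#   set_selinux_mode /etc/selinux/config permissive   # persist the mode across reboots
# Less drastic alternative: keep firewalld and open only the Tomcat port:
#   firewall-cmd --permanent --add-port=8080/tcp && firewall-cmd --reload
```

The function only touches the `SELINUX=` line, so `SELINUXTYPE=` and any comments in the file are left alone.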

(1) Copy the installation file apache-tomcat-11.0.6.tar.gz to /usr/local.

(2) Extract it with tar -zxvf apache-tomcat-11.0.6.tar.gz. An apache-tomcat-11.0.6 directory appears under /usr/local, with the following contents:

bash

[root@test-web1 apache-tomcat-11.0.6]# ls -l

total 140

drwxr-x--- 2 root root 4096 Apr 22 10:50 bin

-rw-r----- 1 root root 24262 Apr 1 22:40 BUILDING.txt

drwx------ 3 root root 4096 Apr 24 16:50 conf

-rw-r----- 1 root root 6166 Apr 1 22:40 CONTRIBUTING.md

drwxr-x--- 2 root root 4096 Apr 22 10:50 lib

-rw-r----- 1 root root 60517 Apr 1 22:40 LICENSE

drwxr-x--- 2 root root 4096 Apr 29 10:07 logs

-rw-r----- 1 root root 2333 Apr 1 22:40 NOTICE

-rw-r----- 1 root root 3291 Apr 1 22:40 README.md

-rw-r----- 1 root root 6469 Apr 1 22:40 RELEASE-NOTES

-rw-r----- 1 root root 16109 Apr 1 22:40 RUNNING.txt

drwxr-x--- 2 root root 30 Apr 22 10:50 temp

drwxr-x--- 7 root root 81 Apr 1 22:40 webapps

drwxr-x--- 3 root root 22 Apr 22 13:27 work

(3) Configure the Tomcat environment variables: open the file with vim /etc/profile and add the following, then run source /etc/profile to apply it.

bash

export CATALINA_HOME=//usr/local/apache-tomcat-11.0.6

export PATH=${CATALINA_HOME}/bin:$PATH

(4) From /usr/local, run ./apache-tomcat-11.0.6/bin/version.sh to view the Tomcat environment variables and version information.

bash

Using CATALINA_BASE: //usr/local/apache-tomcat-11.0.6

Using CATALINA_HOME: //usr/local/apache-tomcat-11.0.6

Using CATALINA_TMPDIR: //usr/local/apache-tomcat-11.0.6/temp

Using JRE_HOME: /usr/local/jdk-18.0.2.1

Using CLASSPATH: //usr/local/apache-tomcat-11.0.6/bin/bootstrap.jar://usr/local/apache-tomcat-11.0.6/bin/tomcat-juli.jar

Using CATALINA_OPTS:

Server version: Apache Tomcat/11.0.6

Server built: Apr 1 2025 14:40:40 UTC

Server number: 11.0.6.0

OS Name: Linux

OS Version: 5.14.0-503.14.1.el9_5.x86_64

Architecture: amd64

JVM Version: 18.0.2.1+1-1

JVM Vendor: Oracle Corporation

(5) Start Tomcat: change to /usr/local/apache-tomcat-11.0.6/bin and run ./startup.sh. The following output indicates that Tomcat started successfully.

bash

[root@web-tomcat2 ~]# cd /usr/local/apache-tomcat-11.0.6/bin

[root@web-tomcat2 bin]# ./startup.sh

Using CATALINA_BASE: //usr/local/apache-tomcat-11.0.6

Using CATALINA_HOME: //usr/local/apache-tomcat-11.0.6

Using CATALINA_TMPDIR: //usr/local/apache-tomcat-11.0.6/temp

Using JRE_HOME: /usr/local/jdk-18.0.2.1

Using CLASSPATH: //usr/local/apache-tomcat-11.0.6/bin/bootstrap.jar://usr/local/apache-tomcat-11.0.6/bin/tomcat-juli.jar

Using CATALINA_OPTS:

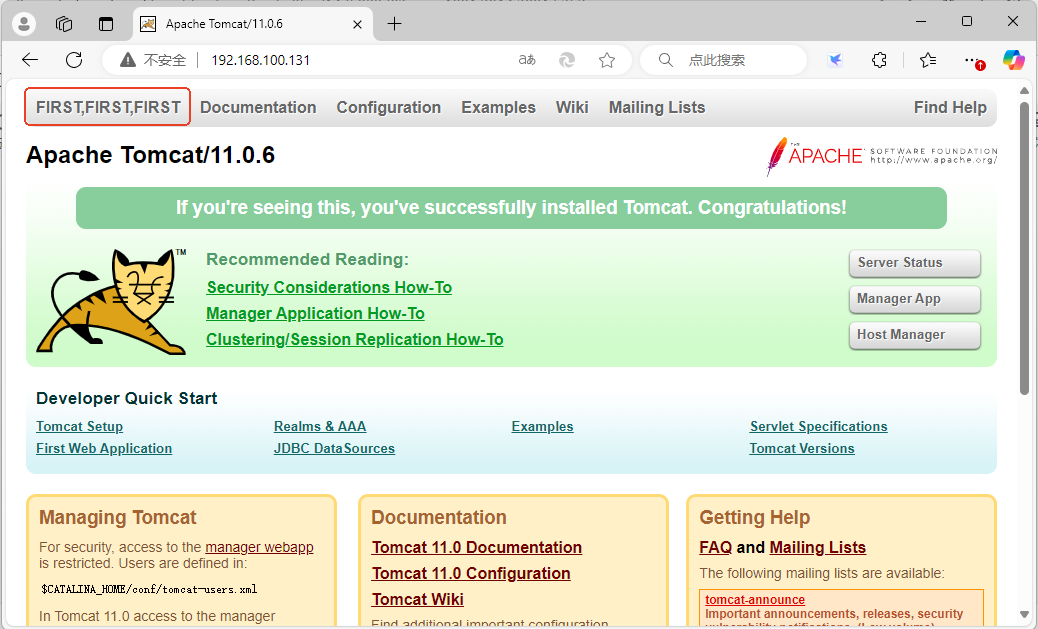

Tomcat started.(6)、修改tomcat首页内容,为了后期体现出负载均衡haproxy服务访问不同的服务器,这将tomcat首页内容进行了一点修改,即修改/usr/local/apache-tomcat-11.0.6/webapps/ROOT/index.jsp页面内容。web-tomcat1服务器index.jsp页面此处修改为:

<span id="nav-home"><a href="${tomcatUrl}">FIRST,FIRST,FIRST</a></span>

web-tomcat1服务器index.jsp页面此处修改为:

<span id="nav-home"><a href="${tomcatUrl}">SECOND,SECOND,SECOND</a></span>

(7) Open Tomcat on each of the two servers in a browser to verify the installation.

web-tomcat1 home page

web-tomcat2 home page
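The same check can be made from the command line. This small sketch classifies a page by the marker text placed in index.jsp. The function name `identify_backend` is made up for illustration, and port 8080 is assumed to be Tomcat's default connector port (the one used in the HAProxy backend later on).

```shell
# Read an HTML page on stdin and report which backend produced it,
# based on the marker text placed in each server's index.jsp.
identify_backend() {
    page=$(cat)
    case "$page" in
        *FIRST,FIRST,FIRST*)    echo "web-tomcat1" ;;
        *SECOND,SECOND,SECOND*) echo "web-tomcat2" ;;
        *)                      echo "unknown" ;;
    esac
}

# Example:
#   curl -s http://192.168.100.133:8080/ | identify_backend
#   curl -s http://192.168.100.134:8080/ | identify_backend
```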

(8) Make Tomcat start automatically through a custom service file. In /etc/systemd/system/, create a file named tomcat.service with the following content, save it, and run systemctl enable tomcat.service to enable start at boot.

bash

[Unit]

Description=Tomcat11

After=network.target

[Service]

Type=forking

Environment="JAVA_HOME=/usr/local/jdk-18.0.2.1"

Environment="CATALINA_HOME=/usr/local/apache-tomcat-11.0.6"

Environment="CATALINA_BASE=/usr/local/apache-tomcat-11.0.6"

Environment="CATALINA_OPTS=-Xms512M -Xmx1024M -server -XX:+UseParallelGC"

Environment="JAVA_OPTS=-Djava.awt.headless=true -Djava.security.egd=file:///dev/urandom"

ExecStart=/usr/local/apache-tomcat-11.0.6/bin/startup.sh

ExecStop=/usr/local/apache-tomcat-11.0.6/bin/shutdown.sh

ExecReload=/bin/kill -s HUP $MAINPID

RemainAfterExit=yes

[Install]

WantedBy=multi-user.target

Reboot the server and check the Tomcat service status; the tomcat service should be running.

bash

[root@web-tomcat1 system]# systemctl list-units --type=service --state=running | grep tomcat

tomcat.service loaded active running Tomcat11

3. HAProxy installation

Run the following on lb-haproxy1 and lb-haproxy2. Disable the firewall and SELinux before installing.

(1) Install with yum install -y haproxy. The output is as follows:

bash

[root@lb-haproxy1 local]# yum install -y haproxy

Last metadata expiration check: 4:21:21 ago on Tue 29 Apr 2025 10:27:00 AM CST.

Dependencies resolved.

========================================================================================================================================================

Package Architecture Version Repository Size

========================================================================================================================================================

Installing:

haproxy x86_64 2.4.22-3.el9_5.1 appstream 2.2 M

Transaction Summary

========================================================================================================================================================

Install 1 Package

Total download size: 2.2 M

Installed size: 6.6 M

Downloading Packages:

haproxy-2.4.22-3.el9_5.1.x86_64.rpm 2.5 MB/s | 2.2 MB 00:00

--------------------------------------------------------------------------------------------------------------------------------------------------------

Total 1.4 MB/s | 2.2 MB 00:01

Rocky Linux 9 - AppStream 1.7 MB/s | 1.7 kB 00:00

Importing GPG key 0x350D275D:

Userid : "Rocky Enterprise Software Foundation - Release key 2022 <releng@rockylinux.org>"

Fingerprint: 21CB 256A E16F C54C 6E65 2949 702D 426D 350D 275D

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-Rocky-9

Key imported successfully

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Running scriptlet: haproxy-2.4.22-3.el9_5.1.x86_64 1/1

Installing : haproxy-2.4.22-3.el9_5.1.x86_64 1/1

Running scriptlet: haproxy-2.4.22-3.el9_5.1.x86_64 1/1

Verifying : haproxy-2.4.22-3.el9_5.1.x86_64 1/1

Installed:

haproxy-2.4.22-3.el9_5.1.x86_64

Complete!

[root@lb-haproxy1 local]#

Check the HAProxy version with haproxy -v.

bash

[root@lb-haproxy1 haproxy]# haproxy -v

HAProxy version 2.4.22-f8e3218 2023/02/14 - https://haproxy.org/

Status: long-term supported branch - will stop receiving fixes around Q2 2026.

Known bugs: http://www.haproxy.org/bugs/bugs-2.4.22.html

Running on: Linux 5.14.0-503.14.1.el9_5.x86_64 #1 SMP PREEMPT_DYNAMIC Fri Nov 15 12:04:32 UTC 2024 x86_64

(2) Start the haproxy service, enable it at boot, and check that its status is active.

bash

[root@lb-haproxy1 local]# systemctl start haproxy

[root@lb-haproxy1 local]# systemctl enable haproxy

Created symlink /etc/systemd/system/multi-user.target.wants/haproxy.service → /usr/lib/systemd/system/haproxy.service.

[root@lb-haproxy1 local]# systemctl status haproxy

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; preset: disabled)

Active: active (running) since Tue 2025-04-29 14:53:55 CST; 10s ago

Main PID: 33813 (haproxy)

Tasks: 3 (limit: 22798)

Memory: 4.1M

CPU: 40ms

CGroup: /system.slice/haproxy.service

├─33813 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -f /etc/haproxy/conf.d -p /run/haproxy.pid

└─33815 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -f /etc/haproxy/conf.d -p /run/haproxy.pid

Apr 29 14:53:55 lb-haproxy1 systemd[1]: Started HAProxy Load Balancer.

Apr 29 14:53:55 lb-haproxy1 haproxy[33815]: [WARNING] (33815) : Server static/static is DOWN, reason: Layer4 connection problem, info: "Connection ref>

Apr 29 14:53:55 lb-haproxy1 haproxy[33815]: [NOTICE] (33815) : haproxy version is 2.4.22-f8e3218

Apr 29 14:53:55 lb-haproxy1 haproxy[33815]: [NOTICE] (33815) : path to executable is /usr/sbin/haproxy

Apr 29 14:53:55 lb-haproxy1 haproxy[33815]: [ALERT] (33815) : backend 'static' has no server available!

Apr 29 14:53:55 lb-haproxy1 haproxy[33815]: [WARNING] (33815) : Server app/app1 is DOWN, reason: Layer4 connection problem, info: "Connection refused">

Apr 29 14:53:56 lb-haproxy1 haproxy[33815]: [WARNING] (33815) : Server app/app2 is DOWN, reason: Layer4 connection problem, info: "Connection refused">

Apr 29 14:53:56 lb-haproxy1 haproxy[33815]: [WARNING] (33815) : Server app/app3 is DOWN, reason: Layer4 connection problem, info: "Connection refused">

Apr 29 14:53:56 lb-haproxy1 haproxy[33815]: [WARNING] (33815) : Server app/app4 is DOWN, reason: Layer4 connection problem, info: "Connection refused">

Apr 29 14:53:56 lb-haproxy1 haproxy[33815]: [ALERT] (33815) : backend 'app' has no server available!

(3) Edit the HAProxy configuration so that it forwards to the backend Tomcat servers. Put the following content into /etc/haproxy/haproxy.cfg, then restart the service with systemctl restart haproxy.service.

bash

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# https://www.haproxy.org/download/1.8/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

#local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

stats timeout 30s

# utilize system-wide crypto-policies

ssl-default-bind-ciphers PROFILE=SYSTEM

ssl-default-server-ciphers PROFILE=SYSTEM

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend http_front

bind *:80

# To use HTTPS, uncomment the following lines and configure a certificate

# bind *:443 ssl crt /etc/haproxy/yourdomain.pem

# redirect scheme https if !{ ssl_fc }

# Define ACL rules (optional)

# acl is_tomcat path_beg /yourcontextpath

# Use the default backend

default_backend tomcat_servers

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

backend static

balance roundrobin

server static 127.0.0.1:4331 check

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend tomcat_servers

balance roundrobin # load-balancing algorithm

option forwardfor # add the X-Forwarded-For header; configuring Tomcat's server.xml to use it is recommended

http-request set-header X-Forwarded-Port %[dst_port]

http-request add-header X-Forwarded-Proto https if { ssl_fc }

# Tomcat servers

server web-tomcat1 192.168.100.133:8080 check

server web-tomcat2 192.168.100.134:8080 check

listen stats

bind *:1936

stats enable

stats uri /haproxy?stats

stats realm HAProxy\ Statistics

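stats auth admin:password # replace with your own username and password

After the haproxy service is restarted, the Tomcat service can be reached in a browser through either HAProxy server's own IP address. Because the backend uses the round-robin algorithm, each page refresh is served by a different Tomcat server.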
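The round-robin behaviour can also be observed without a browser. The sketch below requests the HAProxy address several times and prints the marker found in each response. `probe_round_robin` and the `FETCH` override are hypothetical names introduced here, and the sketch assumes the index.jsp markers from the Tomcat section are in place. If both backends are up, the printed markers would be expected to alternate.

```shell
# Request a URL several times and print the index.jsp marker each response contains.
# FETCH is a hypothetical override for the download command (handy for dry runs);
# it defaults to "curl -s".
# $1 = URL to request, $2 = number of requests (default 4)
probe_round_robin() {
    url=$1
    count=${2:-4}
    i=0
    while [ "$i" -lt "$count" ]; do
        ${FETCH:-curl -s} "$url" | grep -oE 'FIRST,FIRST,FIRST|SECOND,SECOND,SECOND' | head -n 1
        i=$((i + 1))
    done
}

# Example:
#   probe_round_robin http://192.168.100.131/ 4
```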

The Tomcat access log shows that the requests for the Tomcat pages arrive from the HAProxy servers' IP addresses.

bash

[root@web-tomcat1 logs]# tail -15 localhost_access_log.2025-04-29.txt

192.168.100.131 - - [29/Apr/2025:15:40:08 +0800] "GET /asf-logo-wide.svg HTTP/1.1" 200 27235

192.168.100.131 - - [29/Apr/2025:15:40:08 +0800] "GET /bg-middle.png HTTP/1.1" 200 1918

192.168.100.131 - - [29/Apr/2025:15:40:08 +0800] "GET /favicon.ico HTTP/1.1" 200 21630

192.168.100.131 - - [29/Apr/2025:15:41:06 +0800] "GET /tomcat.css HTTP/1.1" 200 5584

192.168.100.131 - - [29/Apr/2025:15:41:06 +0800] "GET /bg-upper.png HTTP/1.1" 200 3103

192.168.100.131 - - [29/Apr/2025:15:41:06 +0800] "GET /bg-button.png HTTP/1.1" 200 713

192.168.100.131 - - [29/Apr/2025:15:41:06 +0800] "GET /asf-logo-wide.svg HTTP/1.1" 200 27235

192.168.100.131 - - [29/Apr/2025:15:41:22 +0800] "GET / HTTP/1.1" 200 11248

192.168.100.132 - - [29/Apr/2025:15:46:33 +0800] "GET / HTTP/1.1" 200 11248

192.168.100.132 - - [29/Apr/2025:15:46:33 +0800] "GET /tomcat.svg HTTP/1.1" 200 67795

192.168.100.132 - - [29/Apr/2025:15:46:33 +0800] "GET /asf-logo-wide.svg HTTP/1.1" 200 27235

192.168.100.132 - - [29/Apr/2025:15:46:33 +0800] "GET /bg-upper.png HTTP/1.1" 200 3103

192.168.100.132 - - [29/Apr/2025:15:46:33 +0800] "GET /favicon.ico HTTP/1.1" 200 21630

192.168.100.132 - - [29/Apr/2025:15:47:45 +0800] "GET / HTTP/1.1" 200 11248

192.168.100.131 - - [29/Apr/2025:15:47:54 +0800] "GET / HTTP/1.1" 200 11248

4. Keepalived installation

Run the following on lb-haproxy1 and lb-haproxy2.

(1) Install keepalived with yum install keepalived -y. The output is as follows:

bash

[root@lb-haproxy1 haproxy]# yum install keepalived -y

Last metadata expiration check: 1:07:06 ago on Tue 29 Apr 2025 02:54:05 PM CST.

Dependencies resolved.

========================================================================================================================================================

Package Architecture Version Repository Size

========================================================================================================================================================

Installing:

keepalived x86_64 2.2.8-4.el9_5 appstream 553 k

Installing dependencies:

lm_sensors-libs x86_64 3.6.0-10.el9 appstream 41 k

mariadb-connector-c x86_64 3.2.6-1.el9_0 appstream 195 k

mariadb-connector-c-config noarch 3.2.6-1.el9_0 appstream 9.8 k

net-snmp-agent-libs x86_64 1:5.9.1-17.el9 appstream 693 k

Transaction Summary

========================================================================================================================================================

Install 5 Packages

Total download size: 1.5 M

Installed size: 4.4 M

Downloading Packages:

(1/5): mariadb-connector-c-config-3.2.6-1.el9_0.noarch.rpm 85 kB/s | 9.8 kB 00:00

(2/5): lm_sensors-libs-3.6.0-10.el9.x86_64.rpm 200 kB/s | 41 kB 00:00

(3/5): mariadb-connector-c-3.2.6-1.el9_0.x86_64.rpm 643 kB/s | 195 kB 00:00

(4/5): net-snmp-agent-libs-5.9.1-17.el9.x86_64.rpm 1.6 MB/s | 693 kB 00:00

(5/5): keepalived-2.2.8-4.el9_5.x86_64.rpm 1.2 MB/s | 553 kB 00:00

--------------------------------------------------------------------------------------------------------------------------------------------------------

Total 933 kB/s | 1.5 MB 00:01

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : mariadb-connector-c-config-3.2.6-1.el9_0.noarch 1/5

Installing : mariadb-connector-c-3.2.6-1.el9_0.x86_64 2/5

Installing : lm_sensors-libs-3.6.0-10.el9.x86_64 3/5

Installing : net-snmp-agent-libs-1:5.9.1-17.el9.x86_64 4/5

Installing : keepalived-2.2.8-4.el9_5.x86_64 5/5

Running scriptlet: keepalived-2.2.8-4.el9_5.x86_64 5/5

Verifying : lm_sensors-libs-3.6.0-10.el9.x86_64 1/5

Verifying : mariadb-connector-c-3.2.6-1.el9_0.x86_64 2/5

Verifying : mariadb-connector-c-config-3.2.6-1.el9_0.noarch 3/5

Verifying : net-snmp-agent-libs-1:5.9.1-17.el9.x86_64 4/5

Verifying : keepalived-2.2.8-4.el9_5.x86_64 5/5

Installed:

keepalived-2.2.8-4.el9_5.x86_64 lm_sensors-libs-3.6.0-10.el9.x86_64 mariadb-connector-c-3.2.6-1.el9_0.x86_64

mariadb-connector-c-config-3.2.6-1.el9_0.noarch net-snmp-agent-libs-1:5.9.1-17.el9.x86_64

Complete!

After installation, run keepalived -v to check the keepalived version.

(2) Edit the configuration file /etc/keepalived/keepalived.conf so that the two HAProxy servers form a high-availability pair.

Add the following to keepalived.conf:

bash

#Configuration File for keepalived

global_defs {

router_id lb_master # identifier of the master node; must be unique

script_user root

enable_script_security

}

vrrp_script check_haproxy {

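script "/usr/bin/killall -0 haproxy" # check whether the haproxy process exists

interval 2 # run the check every 2 seconds

weight -15 # on failure, lower the priority by 15; 100 - 15 = 85 must fall below the backup's priority (90)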

fall 2 # require 2 failures for KO

rise 2 # require 2 successes for OK

}

vrrp_instance db_vip {

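state MASTER # initial state is MASTER

interface ens33 # NIC that VRRP runs on (adjust to the actual interface name)

virtual_router_id 51 # virtual router ID; must be identical on master and backup

priority 100 # master priority (1-255); must be higher than the backup's (priority 90)

# nopreempt is left out: it only takes effect when the initial state is BACKUP, and the VIP is expected to return to this node after recovery

advert_int 1 # VRRP advertisement interval (seconds)

authentication {

auth_type PASS # authentication type

auth_pass secret456 # password (must match on both nodes; keepalived uses only the first 8 characters)

}

virtual_ipaddress {

192.168.100.135 # virtual IP (VIP) that clients connect to

}

track_script {

check_haproxy # attach the health-check script

}

notify_master "/etc/keepalived/notify.sh MASTER" # script run on transition to MASTER (optional)

}

(3) Start the keepalived service, enable it at boot, and check that its status is running.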

bash

[root@lb-haproxy1 keepalived]# systemctl start keepalived

[root@lb-haproxy1 keepalived]# systemctl enable keepalived

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

[root@lb-haproxy1 keepalived]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; preset: disabled)

Active: active (running) since Tue 2025-04-29 16:15:54 CST; 39s ago

Main PID: 36609 (keepalived)

Tasks: 2 (limit: 22798)

Memory: 4.4M

CPU: 209ms

CGroup: /system.slice/keepalived.service

├─36609 /usr/sbin/keepalived --dont-fork -D

└─36610 /usr/sbin/keepalived --dont-fork -D

Apr 29 16:16:20 lb-haproxy1 Keepalived_vrrp[36610]: (db_vip) ip address associated with VRID 51 not present in MASTER advert: 192.168.100.135

Apr 29 16:16:21 lb-haproxy1 Keepalived_vrrp[36610]: (db_vip) ip address associated with VRID 51 not present in MASTER advert: 192.168.100.135

Apr 29 16:16:27 lb-haproxy1 Keepalived_vrrp[36610]: (db_vip) ip address associated with VRID 51 not present in MASTER advert: 192.168.100.135

Apr 29 16:16:28 lb-haproxy1 Keepalived_vrrp[36610]: (db_vip) ip address associated with VRID 51 not present in MASTER advert: 192.168.100.135

Once keepalived is running on both servers, the VIP sits on lb-haproxy1 because that server has the higher priority. Running ip addr on it shows the address.
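The article lists only the master's keepalived.conf. This sketch generates the file for either node, assuming the backup differs only in `router_id`, `state`, and `priority`. The function name `render_keepalived_conf` and the `lb_backup` identifier are hypothetical, and priority 90 is taken from the comment in the master configuration. The optional `notify_master` line is omitted for brevity.

```shell
# Print a keepalived.conf for one node of the pair.
# $1 = router_id, $2 = initial state (MASTER or BACKUP), $3 = priority
render_keepalived_conf() {
    cat <<EOF
global_defs {
    router_id $1
    script_user root
    enable_script_security
}

vrrp_script check_haproxy {
    script "/usr/bin/killall -0 haproxy"
    interval 2
    weight -15
    fall 2
    rise 2
}

vrrp_instance db_vip {
    state $2
    interface ens33
    virtual_router_id 51
    priority $3
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass secret456
    }
    virtual_ipaddress {
        192.168.100.135
    }
    track_script {
        check_haproxy
    }
}
EOF
}

# Example for lb-haproxy2 (review the output, then redirect it to /etc/keepalived/keepalived.conf):
#   render_keepalived_conf lb_backup BACKUP 90
```

Generating both files from one template keeps `virtual_router_id`, the password, and the VIP identical on the two nodes, which VRRP requires.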

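(4) Open a browser and access the Tomcat home page through the VIP.

The steps above complete the HAProxy + Keepalived + Tomcat high-availability load-balancing configuration.

IV. Testing

1. Server shutdown test

(1) Shut down the lb-haproxy1 server, then check on lb-haproxy2 whether the VIP has moved to it.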

bash

[root@lb-haproxy1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:94:e4:e9 brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 192.168.100.131/24 brd 192.168.100.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.100.135/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe94:e4e9/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@lb-haproxy1 ~]# shutdown -h now

bash

[root@lb-haproxy2 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:a8:64:88 brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 192.168.100.132/24 brd 192.168.100.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.100.135/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fea8:6488/64 scope link noprefixroute

valid_lft forever preferred_lft forever

The output shows that the VIP 192.168.100.135 has moved from lb-haproxy1 to lb-haproxy2, and curl 192.168.100.135 still reaches the Tomcat service. When lb-haproxy1 comes back up, its keepalived priority is higher than lb-haproxy2's, so the VIP 192.168.100.135 moves back to lb-haproxy1.
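Rather than reading the full ip addr output by eye, the check can be reduced to one line per node. This is a rough sketch. The helper name `holds_vip` is hypothetical, and the interface name ens33 is taken from the output above.

```shell
# Succeeds (exit status 0) when the given address appears in `ip addr` output on stdin.
# $1 = the VIP to look for
holds_vip() {
    grep -qF "inet $1/"
}

# Example, run on each load balancer:
#   ip -4 addr show dev ens33 | holds_vip 192.168.100.135 && echo "VIP is here"
```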

2. HAProxy service stop test

(1) Stop the haproxy service on lb-haproxy1, then check on lb-haproxy2 whether the VIP has moved to it.

bash

[root@lb-haproxy1 ~]# systemctl stop haproxy.service

[root@lb-haproxy1 ~]# ps -ef|grep -v grep|grep /usr/sbin/haproxy

[root@lb-haproxy1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:94:e4:e9 brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 192.168.100.131/24 brd 192.168.100.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe94:e4e9/64 scope link noprefixroute

valid_lft forever preferred_lft forever

bash

[root@lb-haproxy2 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:a8:64:88 brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 192.168.100.132/24 brd 192.168.100.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.100.135/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fea8:6488/64 scope link noprefixroute

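valid_lft forever preferred_lft forever

The output shows that the VIP 192.168.100.135 has moved from lb-haproxy1 to lb-haproxy2, and curl 192.168.100.135 still reaches the Tomcat service. Once the haproxy service on lb-haproxy1 is started again, its keepalived priority is once more higher than lb-haproxy2's, so the VIP 192.168.100.135 moves back to lb-haproxy1.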
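The reason the VIP moves in this test is the priority arithmetic of the tracked script. Here is a small worked sketch, assuming the backup node runs at priority 90 with the same `weight -15` check (the backup's configuration is not shown in the article); `effective_priority` is a hypothetical helper name.

```shell
# Effective VRRP priority of a node whose tracked script has a negative weight.
# $1 = configured priority, $2 = script weight (e.g. -15),
# $3 = 1 if the health check passes, 0 if it fails
effective_priority() {
    if [ "$3" -eq 1 ]; then
        echo "$1"
    else
        echo $(( $1 + $2 ))
    fi
}

master=$(effective_priority 100 -15 0)   # haproxy stopped on lb-haproxy1
backup=$(effective_priority 90 -15 1)    # haproxy healthy on lb-haproxy2

if [ "$master" -lt "$backup" ]; then
    echo "VIP moves to lb-haproxy2 ($master < $backup)"
else
    echo "VIP stays on lb-haproxy1 ($master >= $backup)"
fi
```

With these numbers the master drops to 85, below the backup's 90, so the backup takes over. A weight smaller in magnitude than the 10-point gap between the two priorities would leave the VIP on a node whose HAProxy is down.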

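Summary

The configuration above implements a highly available, load-balanced deployment with HAProxy + Keepalived + Tomcat. nginx could be used as the load balancer instead; choose between them according to your requirements.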