Preface

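In the age of AI-assisted programming, knowing a few workable solutions yourself and describing them clearly to the AI helps a lot.

But often we don't know what the workable solutions actually are.

I've been there: faced with a requirement and no idea what the options were, I asked an AI, got a long list of suggestions, and tried them one by one. Then I asked a different AI and got yet another set of solutions.

Switching between AIs and trying things one at a time seemed to waste a lot of time.

Then an idea came to me: why not send the question to several AIs at once and have the three most frequently mentioned approaches summarized? Those three are somewhat more likely to work. They can then be handed to an AI coding tool such as Cursor or Cline to implement.

The downside is that, unlike asking on a web page, calling the API costs tokens. But SiliconFlow (硅基流动) gave me a lot of free credit that I haven't used up, so this is just for fun.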

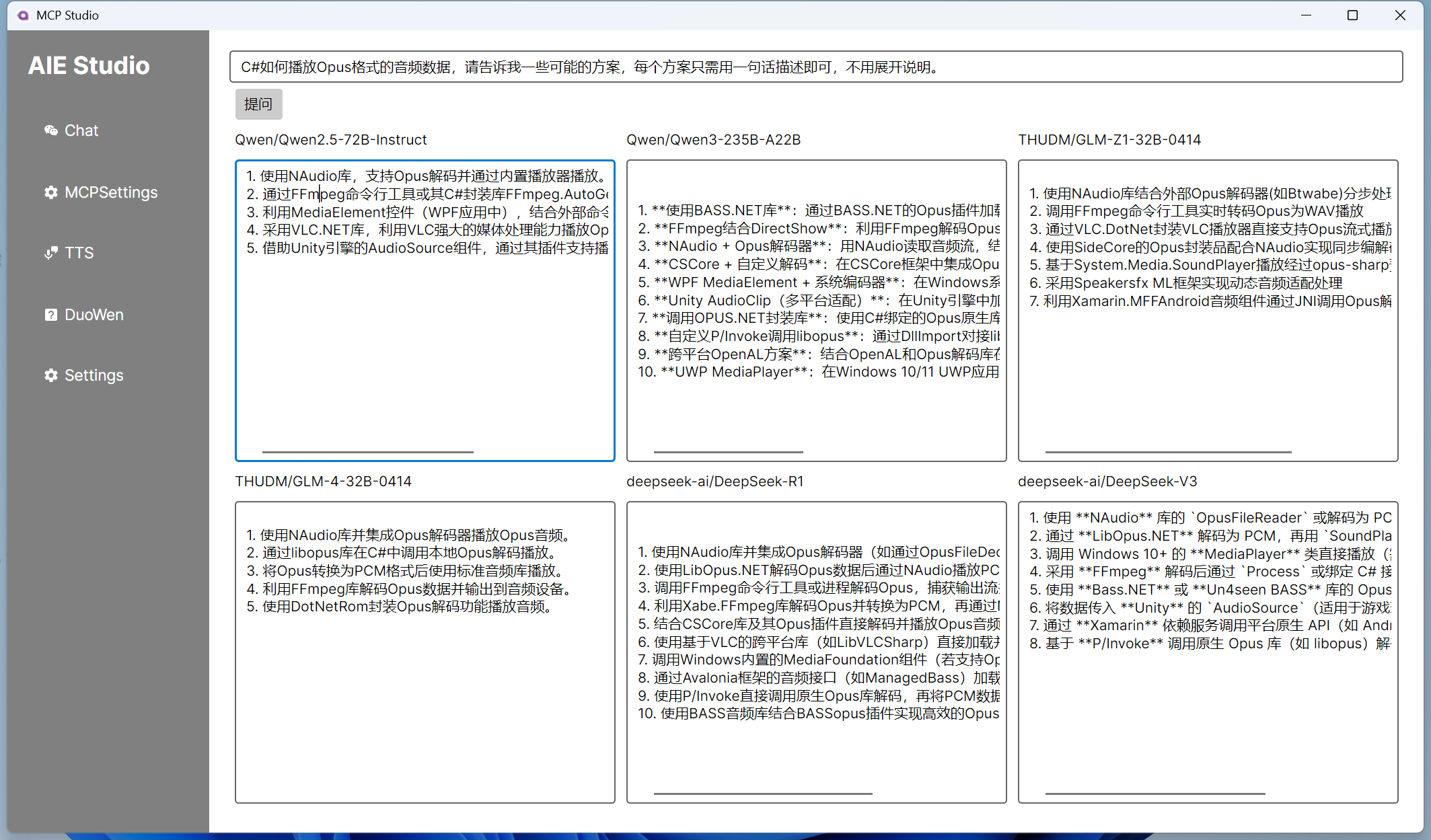

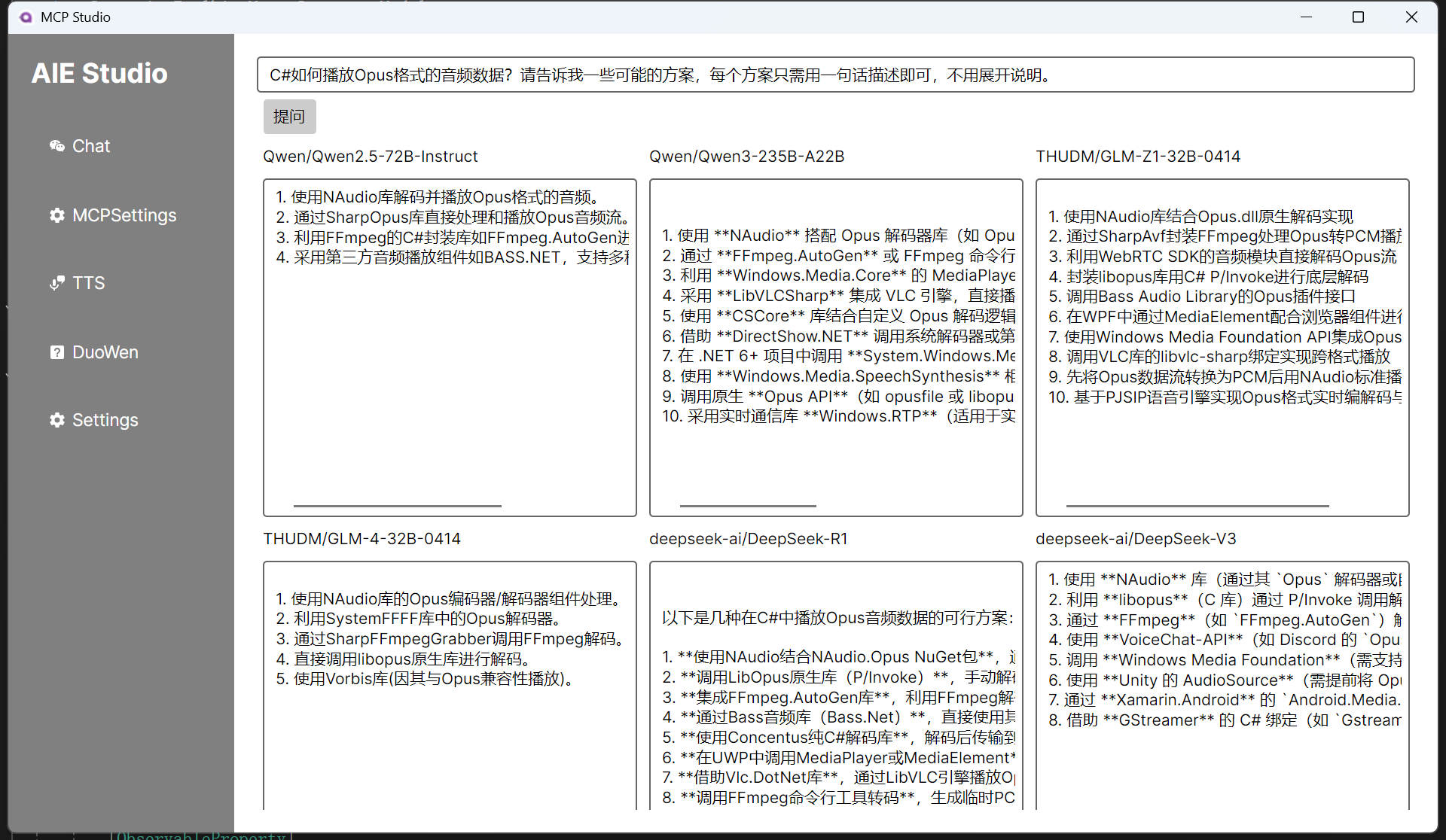

The result:

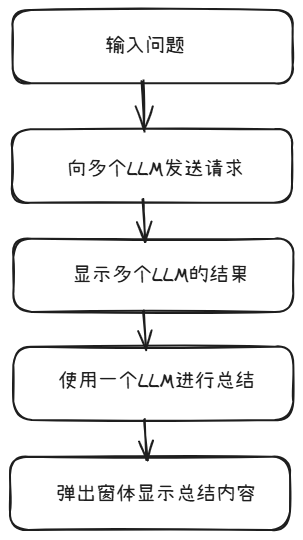

Implementation

The implementation is simple, as shown in the diagram below:

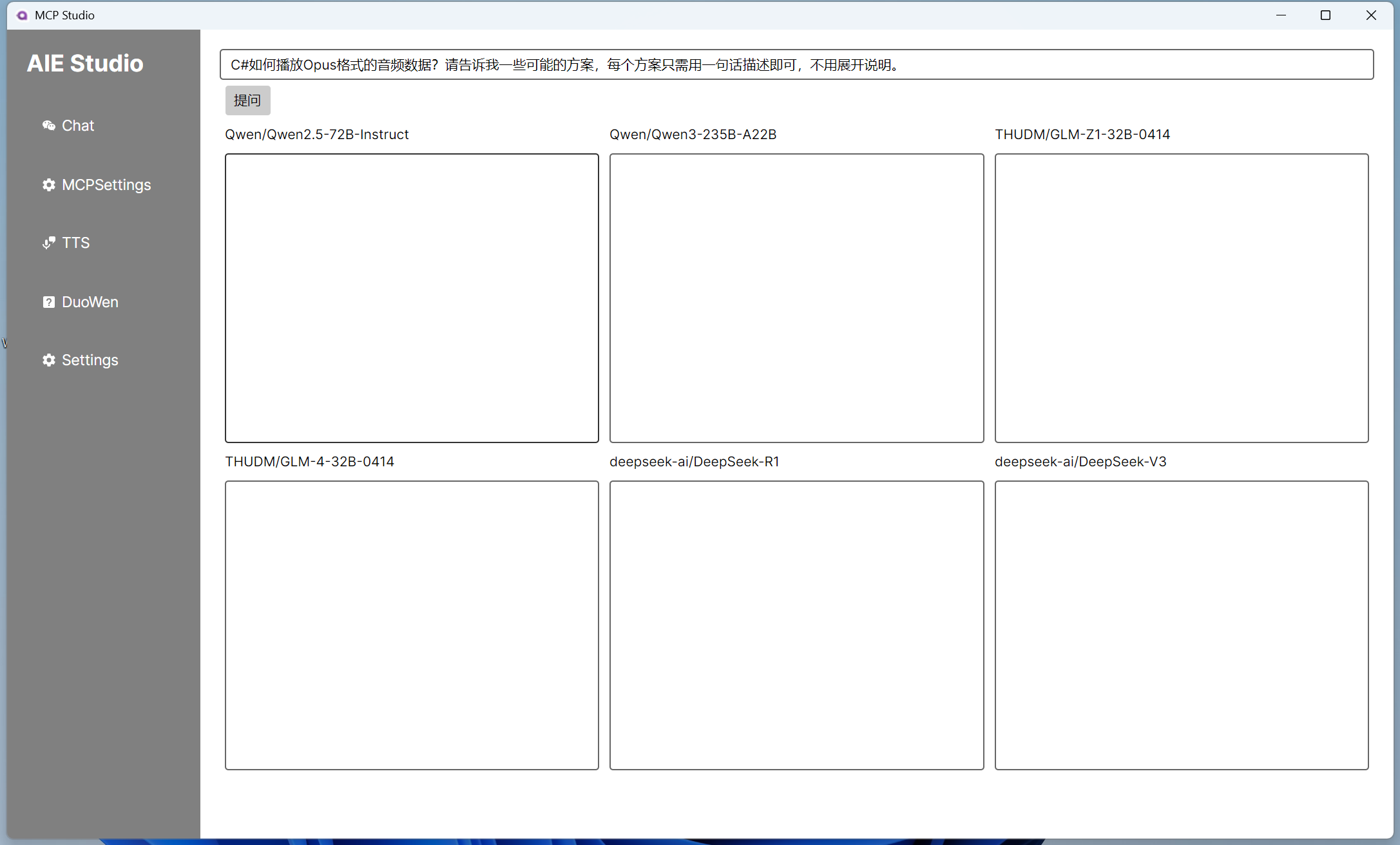
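In code terms, the plan described in the preface boils down to two stages: send the same question to every model, then pass all the answers to one more model for a summary. Here is a rough sketch of that shape. The delegates and FakeModel are hypothetical stand-ins, not real API calls and not the code used in the app:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public static class MultiAskSketch
{
    // Fan the question out to every asker, wait for all answers,
    // then hand the collected answers to a summarizer.
    public static async Task<string> AskAllThenSummarizeAsync(
        string question,
        IReadOnlyList<Func<string, Task<string>>> askers,
        Func<IReadOnlyList<string>, Task<string>> summarize)
    {
        // Start every request before awaiting any of them.
        Task<string>[] pending = askers.Select(ask => ask(question)).ToArray();

        // Task.WhenAll returns the results in the same order as the tasks.
        string[] answers = await Task.WhenAll(pending);

        return await summarize(answers);
    }

    // Hypothetical stand-in for a model call (assumption: a real one would call the API).
    public static Func<string, Task<string>> FakeModel(string name, int delayMs) =>
        async question =>
        {
            await Task.Delay(delayMs);
            return $"{name} answers: {question}";
        };
}
```

The rest of the post fills in the two delegates with real chat clients and binds the intermediate answers to the UI.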

First, design the layout:

```xaml
<UserControl xmlns="https://github.com/avaloniaui"
             xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
             xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
             xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
             mc:Ignorable="d" d:DesignWidth="800" d:DesignHeight="450"
             xmlns:vm="using:AIE_Studio.ViewModels"
             x:DataType="vm:DuoWenViewModel"
             x:Class="AIE_Studio.Views.DuoWenView">
    <StackPanel>
        <TextBox Text="{Binding Question}"></TextBox>
        <Button Content="Ask" Command="{Binding DuoWenStreamingParallelCommand}" Margin="5"/>
        <ScrollViewer VerticalScrollBarVisibility="Auto">
            <Grid>
                <Grid.RowDefinitions>
                    <RowDefinition Height="*"/>
                    <RowDefinition Height="*"/>
                </Grid.RowDefinitions>
                <Grid.ColumnDefinitions>
                    <ColumnDefinition Width="*"/>
                    <ColumnDefinition Width="*"/>
                    <ColumnDefinition Width="*"/>
                </Grid.ColumnDefinitions>

                <!-- Row 1, Column 1 -->
                <StackPanel Grid.Row="0" Grid.Column="0">
                    <TextBlock Text="{Binding Title1}" Margin="5"/>
                    <ScrollViewer VerticalScrollBarVisibility="Auto">
                        <TextBox Text="{Binding Result1}" AcceptsReturn="True" Margin="5" Height="300"/>
                    </ScrollViewer>
                </StackPanel>

                <!-- Row 1, Column 2 -->
                <StackPanel Grid.Row="0" Grid.Column="1">
                    <TextBlock Text="{Binding Title2}" Margin="5"/>
                    <ScrollViewer VerticalScrollBarVisibility="Auto">
                        <TextBox Text="{Binding Result2}" AcceptsReturn="True" Margin="5" Height="300"/>
                    </ScrollViewer>
                </StackPanel>

                <!-- Row 1, Column 3 -->
                <StackPanel Grid.Row="0" Grid.Column="2">
                    <TextBlock Text="{Binding Title3}" Margin="5"/>
                    <ScrollViewer VerticalScrollBarVisibility="Auto">
                        <TextBox Text="{Binding Result3}" AcceptsReturn="True" Margin="5" Height="300"/>
                    </ScrollViewer>
                </StackPanel>

                <!-- Row 2, Column 1 -->
                <StackPanel Grid.Row="1" Grid.Column="0">
                    <TextBlock Text="{Binding Title4}" Margin="5"/>
                    <ScrollViewer VerticalScrollBarVisibility="Auto">
                        <TextBox Text="{Binding Result4}" AcceptsReturn="True" Margin="5" Height="300"/>
                    </ScrollViewer>
                </StackPanel>

                <!-- Row 2, Column 2 -->
                <StackPanel Grid.Row="1" Grid.Column="1">
                    <TextBlock Text="{Binding Title5}" Margin="5"/>
                    <ScrollViewer VerticalScrollBarVisibility="Auto">
                        <TextBox Text="{Binding Result5}" AcceptsReturn="True" Margin="5" Height="300"/>
                    </ScrollViewer>
                </StackPanel>

                <!-- Row 2, Column 3 -->
                <StackPanel Grid.Row="1" Grid.Column="2">
                    <TextBlock Text="{Binding Title6}" Margin="5"/>
                    <ScrollViewer VerticalScrollBarVisibility="Auto">
                        <TextBox Text="{Binding Result6}" AcceptsReturn="True" Margin="5" Height="300"/>
                    </ScrollViewer>
                </StackPanel>
            </Grid>
        </ScrollViewer>
    </StackPanel>
</UserControl>
```

In the ViewModel, let's first look at the most basic way to display the results:

```csharp
// Requires: using System.ClientModel; using OpenAI; using Microsoft.Extensions.AI;
//           using CommunityToolkit.Mvvm.Input;
[RelayCommand]
private async Task DuoWen()
{
    ApiKeyCredential apiKeyCredential = new ApiKeyCredential("your api key");
    OpenAIClientOptions openAIClientOptions = new OpenAIClientOptions();
    openAIClientOptions.Endpoint = new Uri("https://api.siliconflow.cn/v1");

    // Ask each model in turn; every await finishes before the next request starts.
    IChatClient client1 =
        new OpenAI.Chat.ChatClient("Qwen/Qwen2.5-72B-Instruct", apiKeyCredential, openAIClientOptions).AsChatClient();
    var result1 = await client1.GetResponseAsync(Question);
    Result1 = result1.ToString();

    IChatClient client2 =
        new OpenAI.Chat.ChatClient("Qwen/Qwen3-235B-A22B", apiKeyCredential, openAIClientOptions).AsChatClient();
    var result2 = await client2.GetResponseAsync(Question);
    Result2 = result2.ToString();

    IChatClient client3 =
        new OpenAI.Chat.ChatClient("THUDM/GLM-Z1-32B-0414", apiKeyCredential, openAIClientOptions).AsChatClient();
    var result3 = await client3.GetResponseAsync(Question);
    Result3 = result3.ToString();

    IChatClient client4 =
        new OpenAI.Chat.ChatClient("THUDM/GLM-4-32B-0414", apiKeyCredential, openAIClientOptions).AsChatClient();
    var result4 = await client4.GetResponseAsync(Question);
    Result4 = result4.ToString();

    IChatClient client5 =
        new OpenAI.Chat.ChatClient("deepseek-ai/DeepSeek-R1", apiKeyCredential, openAIClientOptions).AsChatClient();
    var result5 = await client5.GetResponseAsync(Question);
    Result5 = result5.ToString();

    IChatClient client6 =
        new OpenAI.Chat.ChatClient("deepseek-ai/DeepSeek-V3", apiKeyCredential, openAIClientOptions).AsChatClient();
    var result6 = await client6.GetResponseAsync(Question);
    Result6 = result6.ToString();
}
```

This simplest approach is neither streaming nor parallel: each request has to finish before the next one is sent.

But at least the results are displayed. Next, I want a window that shows a summary.

The window design:

```xaml
<Window xmlns="https://github.com/avaloniaui"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
        xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
        mc:Ignorable="d" d:DesignWidth="600" d:DesignHeight="450"
        xmlns:vm="using:AIE_Studio.ViewModels"
        x:Class="AIE_Studio.Views.ShowResultWindow"
        x:DataType="vm:ShowResultWindowViewModel"
        Title="ShowResultWindow">
    <StackPanel>
        <TextBlock Text="Final result:" Margin="5" />
        <ScrollViewer VerticalScrollBarVisibility="Auto">
            <TextBox Text="{Binding ReceivedValue}" AcceptsReturn="True" Margin="5" Height="400"/>
        </ScrollViewer>
    </StackPanel>
</Window>
```

The window's ViewModel:

```csharp
public partial class ShowResultWindowViewModel : ViewModelBase
{
    [ObservableProperty]
    private string? receivedValue;
}
```

Then, once every assistant has returned a result, add a summarization step at the end of the method:

```csharp
// Runs inside DuoWen(), after result1..result6 are available.
IChatClient client7 =
    new OpenAI.Chat.ChatClient("Qwen/Qwen2.5-72B-Instruct", apiKeyCredential, openAIClientOptions).AsChatClient();

List<Microsoft.Extensions.AI.ChatMessage> messages = new List<Microsoft.Extensions.AI.ChatMessage>();

string prompt = $"""
    Analyze the approaches proposed by the assistants below and select the 3 that are mentioned most often.
    Assistant 1: {result1}
    Assistant 2: {result2}
    Assistant 3: {result3}
    Assistant 4: {result4}
    Assistant 5: {result5}
    Assistant 6: {result6}
    """;

messages.Add(new Microsoft.Extensions.AI.ChatMessage(ChatRole.User, prompt));
var result7 = await client7.GetResponseAsync(messages);

// Show the summary in its own window.
var showWindow = _serviceProvider.GetRequiredService<ShowResultWindow>();
var showWindowViewModel = _serviceProvider.GetRequiredService<ShowResultWindowViewModel>();
showWindowViewModel.ReceivedValue = result7.ToString();
showWindow.DataContext = showWindowViewModel;
showWindow.Show();
```

With that, it works.
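The six hand-written placeholders in the prompt could also be generated from a list, which would make it easier to add or remove models. This is a small sketch that assumes the answers have already been collected as strings. SummaryPrompt and its topN parameter are illustrative names, not part of the original code:

```csharp
using System.Collections.Generic;
using System.Text;

public static class SummaryPrompt
{
    // Builds the summarization prompt from any number of assistant answers.
    public static string Build(IReadOnlyList<string> answers, int topN = 3)
    {
        var sb = new StringBuilder();
        sb.AppendLine(
            $"Analyze the approaches proposed by the assistants below and select the {topN} that are mentioned most often.");

        // One labeled line per assistant, numbered from 1.
        for (int i = 0; i < answers.Count; i++)
        {
            sb.AppendLine($"Assistant {i + 1}: {answers[i]}");
        }

        return sb.ToString();
    }
}
```

The returned string can be passed as the user message in the same way as the interpolated prompt above.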

There is still room for improvement, though. First, parallelism: asking all the models at once beats asking them one by one.

```csharp
[RelayCommand]
private async Task DuoWenParallel()
{
    ApiKeyCredential apiKeyCredential = new ApiKeyCredential("your api key");
    OpenAIClientOptions openAIClientOptions = new OpenAIClientOptions();
    openAIClientOptions.Endpoint = new Uri("https://api.siliconflow.cn/v1");

    // Create a list to hold all the tasks
    var tasks = new List<Task<string>>();

    // Send a request to each assistant and add the task to the list
    tasks.Add(GetResponseFromClient("Qwen/Qwen2.5-72B-Instruct", apiKeyCredential, openAIClientOptions));
    tasks.Add(GetResponseFromClient("Qwen/Qwen3-235B-A22B", apiKeyCredential, openAIClientOptions));
    tasks.Add(GetResponseFromClient("THUDM/GLM-Z1-32B-0414", apiKeyCredential, openAIClientOptions));
    tasks.Add(GetResponseFromClient("THUDM/GLM-4-32B-0414", apiKeyCredential, openAIClientOptions));
    tasks.Add(GetResponseFromClient("deepseek-ai/DeepSeek-R1", apiKeyCredential, openAIClientOptions));
    tasks.Add(GetResponseFromClient("deepseek-ai/DeepSeek-V3", apiKeyCredential, openAIClientOptions));

    // Wait for all tasks to complete
    var results = await Task.WhenAll(tasks);

    // Assign the results to the corresponding properties
    Result1 = results[0];
    Result2 = results[1];
    Result3 = results[2];
    Result4 = results[3];
    Result5 = results[4];
    Result6 = results[5];
}

private async Task<string> GetResponseFromClient(string model, ApiKeyCredential apiKeyCredential, OpenAIClientOptions options)
{
    IChatClient client = new OpenAI.Chat.ChatClient(model, apiKeyCredential, options).AsChatClient();
    var result = await client.GetResponseAsync(Question);
    return result.ToString();
}
```

It is parallel now, but nothing is shown until every assistant has answered, which still isn't a great user experience.
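One possible middle ground, short of streaming, is to let each request write its own result as soon as it finishes while still awaiting all of them together. The sketch below illustrates that idea. The names AskAndReportAsync and FakeAskAsync are hypothetical, and Task.Delay stands in for real model calls, so this is not the code used in the app:

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class ShowAsCompleted
{
    // Awaits a single request and reports its answer immediately,
    // without waiting for the other requests.
    public static async Task AskAndReportAsync(Func<Task<string>> ask, Action<string> report)
    {
        string answer = await ask();
        report(answer);
    }

    // Hypothetical stand-in for a model call with a fixed latency (assumption).
    public static async Task<string> FakeAskAsync(string name, int delayMs)
    {
        await Task.Delay(delayMs);
        return name;
    }

    // Runs two fake requests concurrently and records the order in which
    // their answers were reported.
    public static async Task<List<string>> DemoAsync()
    {
        var shown = new List<string>();
        void Show(string text) { lock (shown) { shown.Add(text); } }

        await Task.WhenAll(
            AskAndReportAsync(() => FakeAskAsync("slow model", 300), Show),
            AskAndReportAsync(() => FakeAskAsync("fast model", 20), Show));

        return shown;
    }
}
```

In the view model, this would presumably mean one call per model along the lines of AskAndReportAsync(() => GetResponseFromClient(...), text => Result1 = text), so that fast models fill in their boxes without waiting for slow ones.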

Switch to streaming:

```csharp
[RelayCommand]
private async Task DuoWenStreaming()
{
    ApiKeyCredential apiKeyCredential = new ApiKeyCredential("your api key");
    OpenAIClientOptions openAIClientOptions = new OpenAIClientOptions();
    openAIClientOptions.Endpoint = new Uri("https://api.siliconflow.cn/v1");

    //string question = "In C#, how do I get the text selected by dragging the mouse? Give me some possible approaches, one sentence each, no need to elaborate.";

    IChatClient client1 =
        new OpenAI.Chat.ChatClient("Qwen/Qwen2.5-72B-Instruct", apiKeyCredential, openAIClientOptions).AsChatClient();

    // Append each streamed update to the bound property as it arrives.
    await foreach (var item in client1.GetStreamingResponseAsync(Question))
    {
        Result1 += item.ToString();
    }
}
```

Now the answer fills in piece by piece as it streams in.

Finally, combine streaming and parallelism.

```csharp
[RelayCommand]
private async Task DuoWenStreamingParallel()
{
    ApiKeyCredential apiKeyCredential = new ApiKeyCredential("your api key");
    OpenAIClientOptions openAIClientOptions = new OpenAIClientOptions();
    openAIClientOptions.Endpoint = new Uri("https://api.siliconflow.cn/v1");

    // Clear previous results
    Result1 = Result2 = Result3 = Result4 = Result5 = Result6 = string.Empty;

    // Create a list of tasks for parallel processing
    var tasks = new List<Task>
    {
        ProcessStreamingResponse("Qwen/Qwen2.5-72B-Instruct", apiKeyCredential, openAIClientOptions, (text) => Result1 += text),
        ProcessStreamingResponse("Qwen/Qwen3-235B-A22B", apiKeyCredential, openAIClientOptions, (text) => Result2 += text),
        ProcessStreamingResponse("THUDM/GLM-Z1-32B-0414", apiKeyCredential, openAIClientOptions, (text) => Result3 += text),
        ProcessStreamingResponse("THUDM/GLM-4-32B-0414", apiKeyCredential, openAIClientOptions, (text) => Result4 += text),
        ProcessStreamingResponse("deepseek-ai/DeepSeek-R1", apiKeyCredential, openAIClientOptions, (text) => Result5 += text),
        ProcessStreamingResponse("deepseek-ai/DeepSeek-V3", apiKeyCredential, openAIClientOptions, (text) => Result6 += text)
    };

    // Wait for all streaming responses to complete
    await Task.WhenAll(tasks);

    // Summarize the six answers with one more request
    IChatClient client7 =
        new OpenAI.Chat.ChatClient("Qwen/Qwen2.5-72B-Instruct", apiKeyCredential, openAIClientOptions).AsChatClient();

    List<Microsoft.Extensions.AI.ChatMessage> messages = new List<Microsoft.Extensions.AI.ChatMessage>();

    string prompt = $"""
        Analyze the approaches proposed by the assistants below and select the 3 that are mentioned most often.
        Assistant 1: {Result1}
        Assistant 2: {Result2}
        Assistant 3: {Result3}
        Assistant 4: {Result4}
        Assistant 5: {Result5}
        Assistant 6: {Result6}
        """;

    messages.Add(new Microsoft.Extensions.AI.ChatMessage(ChatRole.User, prompt));
    var result7 = await client7.GetResponseAsync(messages);

    var showWindow = _serviceProvider.GetRequiredService<ShowResultWindow>();
    var showWindowViewModel = _serviceProvider.GetRequiredService<ShowResultWindowViewModel>();
    showWindowViewModel.ReceivedValue = result7.ToString();
    showWindow.DataContext = showWindowViewModel;
    showWindow.Show();
}

private async Task ProcessStreamingResponse(string model, ApiKeyCredential apiKeyCredential, OpenAIClientOptions options, Action<string> updateResult)
{
    IChatClient client = new OpenAI.Chat.ChatClient(model, apiKeyCredential, options).AsChatClient();

    // Forward every streamed update to the caller-supplied callback.
    await foreach (var item in client.GetStreamingResponseAsync(Question))
    {
        updateResult(item.ToString());
    }
}
```

Here a single-parameter delegate (Action<string>) is used to update each assistant's result.
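To show the callback pattern in isolation, the sketch below replaces the model stream with a hypothetical FakeStreamAsync and provides a generic pump that forwards each chunk to an Action<string>. Both names are assumptions made for illustration and no real chat client is involved:

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class StreamingCallbackSketch
{
    // Hypothetical stand-in for a streaming model response:
    // yields the given chunks one at a time with a small delay.
    public static async IAsyncEnumerable<string> FakeStreamAsync(params string[] chunks)
    {
        foreach (string chunk in chunks)
        {
            await Task.Delay(5);
            yield return chunk;
        }
    }

    // Reads a stream to the end and passes every chunk to the callback,
    // mirroring the role of ProcessStreamingResponse.
    public static async Task PumpAsync(IAsyncEnumerable<string> stream, Action<string> onChunk)
    {
        await foreach (string chunk in stream)
        {
            onChunk(chunk);
        }
    }
}
```

Two pumps can run side by side, each appending to its own target: awaiting Task.WhenAll over PumpAsync(FakeStreamAsync("Hel", "lo"), t => a += t) and PumpAsync(FakeStreamAsync("Wor", "ld"), t => b += t) fills a and b independently. Because the callback decides where the text goes, the streaming method needs no knowledge of Result1 through Result6.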

Now check the result again:

Qwen/Qwen3-235B-A22B, THUDM/GLM-Z1-32B-0414, and deepseek-ai/DeepSeek-R1 go through a thinking process, so their results come back more slowly.

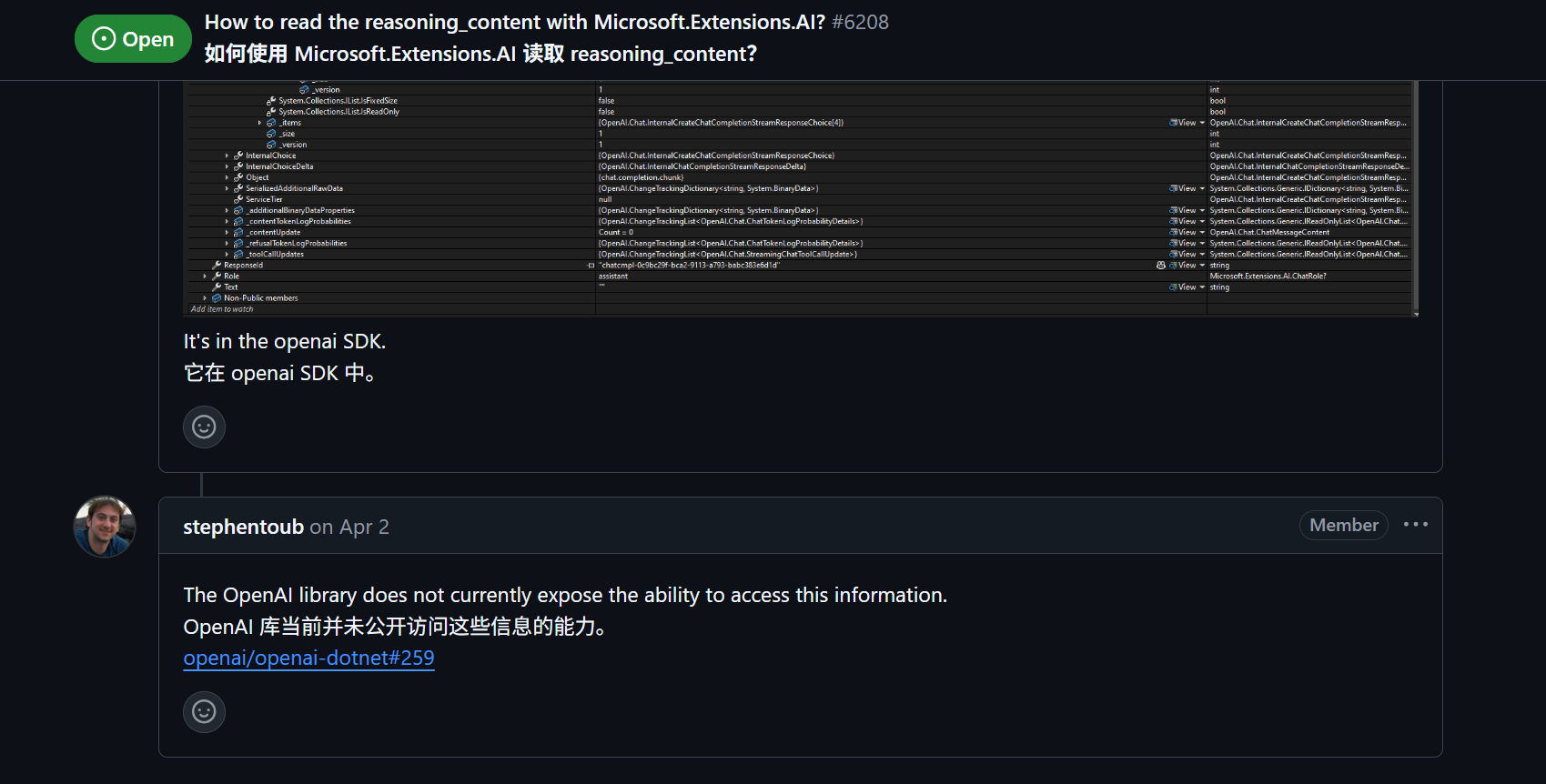

At the moment, Microsoft.Extensions.AI.OpenAI does not seem to be able to retrieve the thinking content.

After waiting a while longer, all the results are in:

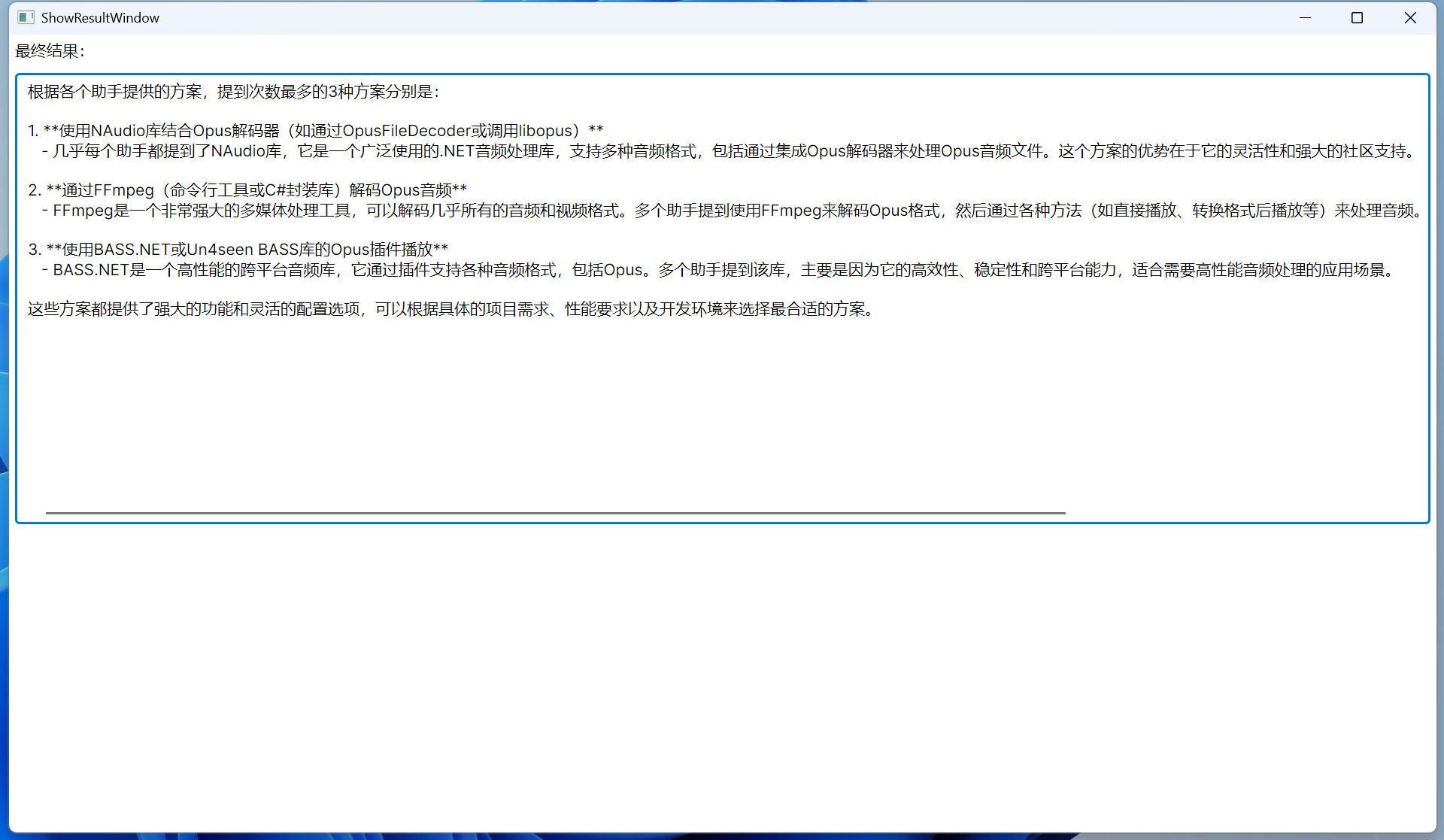

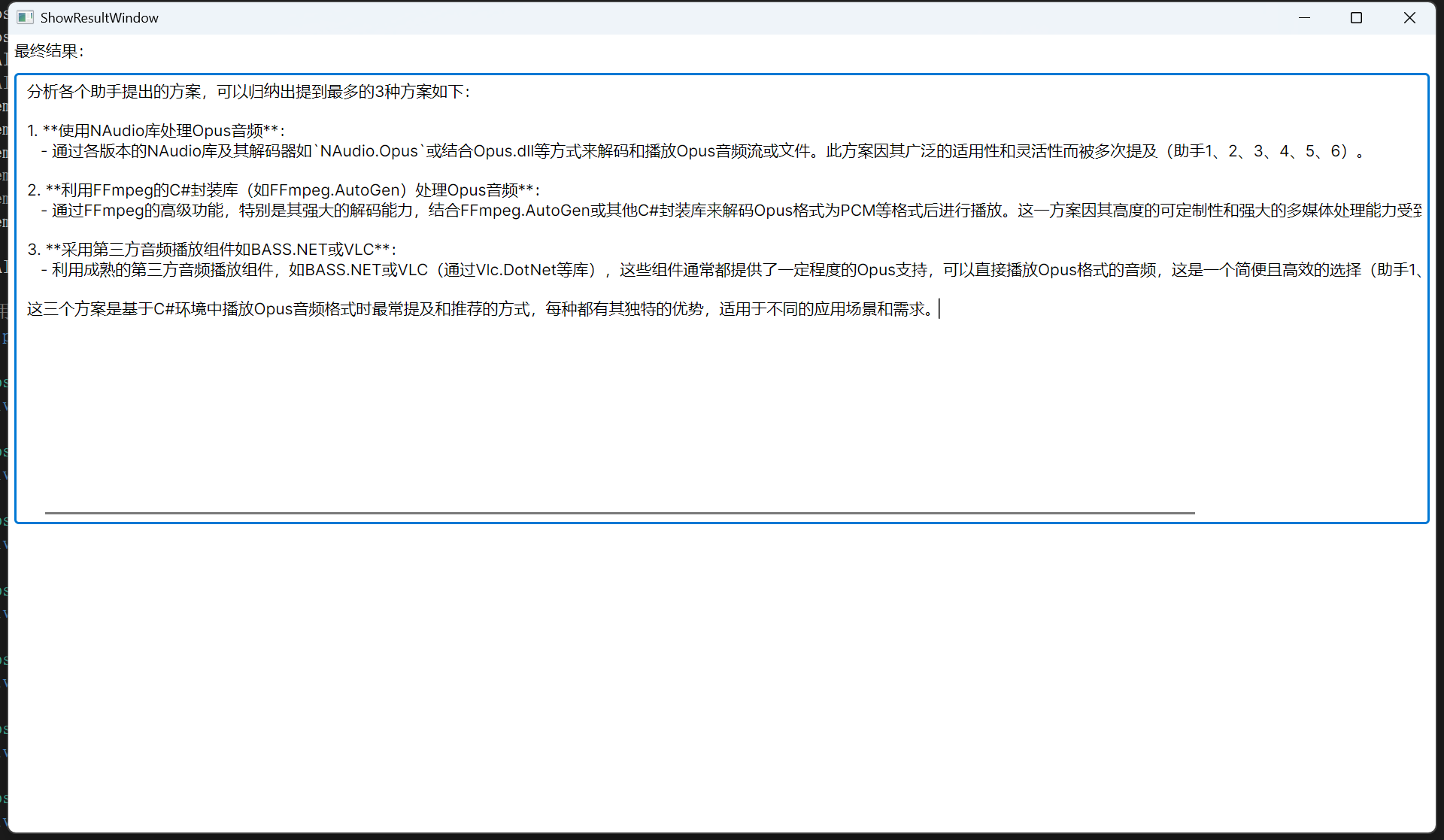

The summary window then shows the final summary.

Once the approach is settled, you can have Cursor or Cline try writing it for you.