1./home/pc/2026BOSS/biaoding_ws/src/sensor_calibration/cam_lidar_calibration/cfg/initial_params.yaml

%YAML:1.0

---

# 图像的话题

image_topic: "/usb_cam/image_raw"

#激光点云话题

pointcloud_topic: "/velodyne_points"

# 0:非鱼眼相机

distortion_model: 0

# 激光雷达线数

scan_line: 16

# 标定棋盘格的参数:内角点数量(长×宽)、每个格子的尺寸(毫米)

chessboard:

length: 11

width: 8

grid_size: 50

# 标定板实际尺寸大小(毫米)

board_dimension:

length: 600

width: 450

# 棋盘纸中心与标定板中心的偏移

translation_error:

length: 0

width: 0

# 相机的内参矩阵K

camera_matrix: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 9.7180866511700151e+02, 0.0000000000000000e+00, 3.3491215826758486e+02, 0.0000000000000000e+00, 9.7193700964989625e+02, 1.9635418932283801e+02, 0.0000000000000000e+00, 0.0000000000000000e+00, 1.0000000000000000e+00 ]

# 相机的失真系数D

distortion_coefficients: !!opencv-matrix

rows: 5

cols: 1

dt: d

data: [ -3.6265908294014132e-01, -7.2711317201317649e-01, 6.1342672227935073e-04, 2.1504607127206905e-03, 7.9988582487419491e+00 ]

# 相机图像分辨率

image_pixel:

width: 640

height: 4802./home/pc/2026BOSS/biaoding_ws/src/sensor_calibration/cam_lidar_calibration/src/input_sample.cpp

// 按 'i' 来采集样本,按 'o' 来开始优化,按 'e' 来终止节点

#include "ros/ros.h" // 引入 ROS 头文件

#include "std_msgs/Int8.h" // 引入标准消息类型 Int8

#include <termios.h> // 引入终端控制头文件,以实现非阻塞输入

// 获取一个字符的非阻塞输入

int getch()

{

static struct termios oldt, newt;

tcgetattr(STDIN_FILENO, &oldt); // 保存当前终端设置

newt = oldt;

newt.c_lflag &= ~(ICANON); // 禁用缓冲模式,使输入不需要回车

tcsetattr(STDIN_FILENO, TCSANOW, &newt); // 应用新的终端设置

int c = getchar(); // 读取一个字符(非阻塞)

tcsetattr(STDIN_FILENO, TCSANOW, &oldt); // 恢复旧的终端设置

return c; // 返回输入的字符

}

int main(int argc, char** argv)

{

ros::init(argc, argv, "input_sample"); // 初始化 ROS 节点,并命名为 "input_sample"

ros::NodeHandle sample("~"); // 创建节点句柄,用于与 ROS 通信

ros::Publisher sample_publisher; // 声明一个发布者对象

std_msgs::Int8 flag; // 创建一个 Int8 型的消息标志

sample_publisher = sample.advertise<std_msgs::Int8>("/flag", 20); // 初始化发布者,主题名称为 "/flag"

// 等待订阅者连接

while(sample_publisher.getNumSubscribers() == 0)

{

ROS_ERROR("Waiting for a subscriber ..."); // 输出错误信息,提示正在等待订阅者

sleep(2); // 暂停 2 秒

}

ROS_INFO("Found a subscriber!"); // 一旦找到订阅者,输出信息

// 提示用户操作

std::cout << " Now, press an appropriate key ... " << std::endl;

std::cout << " 'i' to take an input sample" << std::endl; // 用户提示:按 'i' 采集样本

std::cout << " 'o' to start the optimization process" << std::endl; // 用户提示:按 'o' 开始优化

std::cout << " 'e' to end the calibration process" << std::endl; // 用户提示:按 'e' 结束校准

ros::spinOnce(); // 处理任何ROS回调

// 主循环,直到节点关闭

while(ros::ok())

{

int c = getch(); // 获取用户按键输入

if (c == 'i') // 如果按下 'i' 键

{

flag.data = 1; // 设置标志为 1,表示采集样本

ROS_INFO("pressed i, take an input sample!"); // 输出信息,提示采集样本

sample_publisher.publish(flag); // 发布标志

}

if (c == 'o') // 如果按下 'o' 键

{

flag.data = 2; // 设置标志为 2,表示开始优化

sample_publisher.publish(flag); // 发布标志

ROS_INFO("starting optimization ..."); // 输出信息,提示开始优化

}

if (c == '\n') // 如果按下回车键

{

flag.data = 4; // 设置标志为 4,表示使用输入样本

sample_publisher.publish(flag); // 发布标志

}

if (c == 'e') // 如果按下 'e' 键

{

ros::shutdown(); // 关闭 ROS 节点

}

}

return 0; // 退出程序

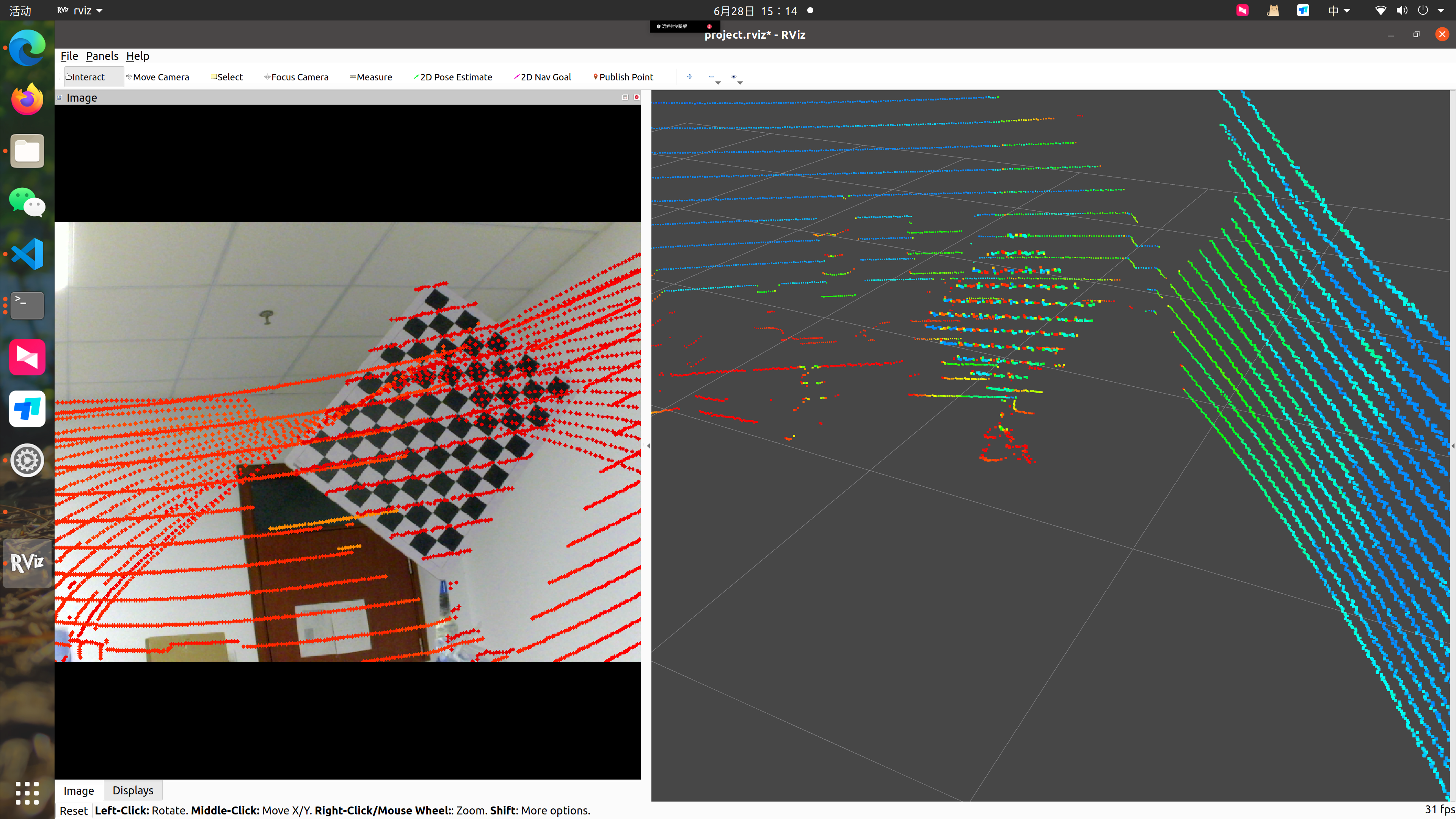

}3./home/pc/2026BOSS/biaoding_ws/src/sensor_calibration/cam_lidar_calibration/src/projector.cpp

#include <cam_lidar_calibration/projector.h> // 引入projctor类的定义

// Projection类的构造函数

Projection::Projection(ros::NodeHandle &nh)

{

// 1. 加载标定文件的参数

if (nh.getParam("cfg", calibration_file)) // 从参数服务器获取标定文件路径

{

std::cout << "标定文件 :" << calibration_file << std::endl; // 输出标定文件路径

}

else

{

std::cerr << "标定文件目录不存在" << std::endl

<< "命令: rosrun calibration collect_hand_eye_data cfg:=/home/jh/birl/module_robot_ws/src/sensor_calibration" << std::endl;

return; // 如果参数读取失败,则返回

}

read_extrinsic(); // 读取标定文件中的外参

// 2. 订阅图像和点云数据并进行时间戳近似同步

pointcloud_pub_ = nh.advertise<sensor_msgs::PointCloud2>("/Syn/velodyne_points", 1); // 发布经过处理的点云数据

image_pub_ = nh.advertise<sensor_msgs::Image>("/Syn/image", 1); // 发布经过处理的图像

// 3. 订阅相机和激光雷达的原始数据

message_filters::Subscriber<sensor_msgs::Image> image_sub(nh, "/usb_cam/image_raw", 1); // 订阅相机图像

message_filters::Subscriber<sensor_msgs::PointCloud2> velodyne_sub(nh, "/velodyne_points", 1); // 订阅激光雷达点云

typedef message_filters::sync_policies::ApproximateTime<sensor_msgs::Image, sensor_msgs::PointCloud2> MySyncPolicy; // 定义一个近似时间同步策略

message_filters::Synchronizer<MySyncPolicy> sync(MySyncPolicy(10), image_sub, velodyne_sub); // 创建同步器

sync.registerCallback(boost::bind(&Projection::image_pointcolud_cb, this, _1, _2)); // 注册回调函数

reproject_image_pub_ = nh.advertise<sensor_msgs::Image>("/reprojected_image", 1, true); // 发布重投影得到的图像

ros::spin(); // 进入ROS事件循环

}

// Projection类的析构函数

Projection::~Projection()

{

}

// 用于读取外参和内参文件的函数

void Projection::read_extrinsic()

{

// 打开标定文件

cv::FileStorage fs(calibration_file, cv::FileStorage::READ | cv::FileStorage::FORMAT_YAML);

if (!fs.isOpened()) // 检查文件是否成功打开

{

std::cout << " [ " + calibration_file + " ] 文件打开失败" << std::endl; // 输出错误信息

return; // 若失败,退出函数

}

cv::Mat lidar_camera_T; // 存储LiDAR到相机的变换矩阵

fs["lidar_camera_extrinsic"] >> lidar_camera_T; // 从文件中读取该矩阵

fs["camera_matrix"] >> camera_matrix; // 读取相机内参矩阵

fs["camera_distortion_coefficients"] >> distortion_coefficients; // 读取相机畸变系数

image_width = fs["camera_image_width"]; // 读取图像宽度

image_height = fs["camera_image_height"]; // 读取图像高度

// 输出读取的相机参数

std::cout << "camera K:\n" << camera_matrix << std::endl;

std::cout << "camera D:\n" << distortion_coefficients << std::endl;

std::cout << "LiDAR to camera T:\n" << lidar_camera_T << std::endl;

fs.release(); // 关闭文件

// 用Eigen库处理外参

Eigen::Matrix3d R; // 创建3x3旋转矩阵

// 从OpenCV矩阵中提取旋转部分

R << lidar_camera_T.at<double>(0, 0), lidar_camera_T.at<double>(0, 1), lidar_camera_T.at<double>(0, 2),

lidar_camera_T.at<double>(1, 0), lidar_camera_T.at<double>(1, 1), lidar_camera_T.at<double>(1, 2),

lidar_camera_T.at<double>(2, 0), lidar_camera_T.at<double>(2, 1), lidar_camera_T.at<double>(2, 2);

Eigen::Quaterniond q(R); // 将旋转矩阵转换为四元数

q.normalize(); // 归一化四元数

// 提取平移部分

Eigen::Vector3d t(lidar_camera_T.at<double>(0, 3), lidar_camera_T.at<double>(1, 3), lidar_camera_T.at<double>(2, 3));

// 设置tf变换对象

transform_lidar_to_camera.setOrigin(tf::Vector3(t(0), t(1), t(2))); // 设置平移

transform_lidar_to_camera.setRotation(tf::Quaternion(q.x(), q.y(), q.z(), q.w())); // 设置旋转

}

// 回调函数,用于处理同步的图像和点云数据

void Projection::image_pointcolud_cb(const sensor_msgs::Image::ConstPtr &ori_image, const sensor_msgs::PointCloud2::ConstPtr &ori_pointcloud)

{

std::cout << "Callback triggered!" << std::endl;

// 创建新的点云和图像对象

sensor_msgs::PointCloud2 syn_pointcloud = *ori_pointcloud; // 复制原始点云数据

sensor_msgs::Image syn_image = *ori_image; // 复制原始图像数据

pcl::PointCloud<pcl::PointXYZI> laserCloudIn; // 创建PCL点云对象

pcl::fromROSMsg(syn_pointcloud, laserCloudIn); // 从ROS消息转换为PCL格式

std::vector<Eigen::Vector2d> reproject_pointclouds; // 存储重投影的点

cv_bridge::CvImagePtr cv_image_src; // 创建CvImage指针对象

cv_image_src = cv_bridge::toCvCopy(ori_image, "bgr8"); // 将ROS图像消息转换为OpenCV格式

// 设置tf消息以传递坐标变换

geometry_msgs::TransformStamped tf_msg;

tf_msg.transform.rotation.w = transform_lidar_to_camera.inverse().getRotation().w(); // 传递逆变换的旋转部分

tf_msg.transform.rotation.x = transform_lidar_to_camera.inverse().getRotation().x();

tf_msg.transform.rotation.y = transform_lidar_to_camera.inverse().getRotation().y();

tf_msg.transform.rotation.z = transform_lidar_to_camera.inverse().getRotation().z();

tf_msg.transform.translation.x = transform_lidar_to_camera.inverse().getOrigin().x(); // 传递逆变换的平移部分

tf_msg.transform.translation.y = transform_lidar_to_camera.inverse().getOrigin().y();

tf_msg.transform.translation.z = transform_lidar_to_camera.inverse().getOrigin().z();

sensor_msgs::PointCloud2 cloud_reproject_tf_ros; // 创建变换后的点云数据消息

// 使用tf2进行变换

tf2::doTransform(syn_pointcloud, cloud_reproject_tf_ros, tf_msg);

pcl::PointCloud<pcl::PointXYZI> cloud_reproject_tf; // 创建变换后的点云对象

pcl::fromROSMsg(cloud_reproject_tf_ros, cloud_reproject_tf); // 从ROS消息转换为PCL对象

int cloudSize = cloud_reproject_tf.points.size(); // 获取变换后点云的大小

// 遍历每个点云数据进行重投影

for (int i = 0; i < cloudSize; i++)

{

double tmpxC = cloud_reproject_tf.points[i].x / cloud_reproject_tf.points[i].z; // 计算归一化坐标

double tmpyC = cloud_reproject_tf.points[i].y / cloud_reproject_tf.points[i].z;

double tmpzC = cloud_reproject_tf.points[i].z; // 保存z坐标

double dis = pow(cloud_reproject_tf.points[i].x * cloud_reproject_tf.points[i].x + cloud_reproject_tf.points[i].y * cloud_reproject_tf.points[i].y +

cloud_reproject_tf.points[i].z * cloud_reproject_tf.points[i].z,

0.5); // 计算点至原点的距离

cv::Point2d planepointsC; // 存储重投影的图像坐标

int range = std::min(round((dis / 30.0) * 49), 49.0); // 根据距离计算颜色索引

// 应用畸变处理

double r2 = tmpxC * tmpxC + tmpyC * tmpyC; // 计算平方距离

double r1 = pow(r2, 0.5); // 计算r

double a0 = std::atan(r1); // 计算反正切值

double a1;

a1 = a0 * (1 + distortion_coefficients.at<double>(0, 0) * pow(a0, 2) + distortion_coefficients.at<double>(0, 1) * pow(a0, 4) +

distortion_coefficients.at<double>(0, 2) * pow(a0, 6) + distortion_coefficients.at<double>(0, 3) * pow(a0, 8)); // 畸变校正

// 计算重投影坐标

planepointsC.x = (a1 / r1) * tmpxC;

planepointsC.y = (a1 / r1) * tmpyC;

// 应用相机内参进行坐标转换

planepointsC.x = camera_matrix.at<double>(0, 0) * planepointsC.x + camera_matrix.at<double>(0, 2);

planepointsC.y = camera_matrix.at<double>(1, 1) * planepointsC.y + camera_matrix.at<double>(1, 2);

// 检查重投影坐标范围及有效性

if (planepointsC.y >= 0 and planepointsC.y < image_height and planepointsC.x >= 0 and planepointsC.x < image_width and

tmpzC >= 0 and std::abs(tmpxC) <= 1.35)

{

int point_size = 2; // 定义点的大小

// 在图像中绘制重投影点

cv::circle(cv_image_src->image,

cv::Point(planepointsC.x, planepointsC.y), point_size,

CV_RGB(255 * colmap[50 - range][0], 255 * colmap[50 - range][1], 255 * colmap[50 - range][2]), -1);

}

}

// 发布重建后的点云和图像

pointcloud_pub_.publish(syn_pointcloud); // 发布点云

image_pub_.publish(syn_image); // 发布图像

reproject_image_pub_.publish(cv_image_src->toImageMsg()); // 发布重投影后的图像

}

// 主函数

int main(int argc, char **argv)

{

ros::init(argc, argv, "project_pointcloud_to_image"); // 初始化ROS节点

ros::NodeHandle nh("~"); // 创建节点句柄

Projection *p = new Projection(nh); // 创建Projection对象

// ros::spin(); // 启动ROS事件循环

ros::shutdown(); // 关闭ROS

return 0; // 返回程序

}4./home/pc/2026BOSS/biaoding_ws/src/sensor_calibration/cam_lidar_calibration/src/feature_extraction.cpp

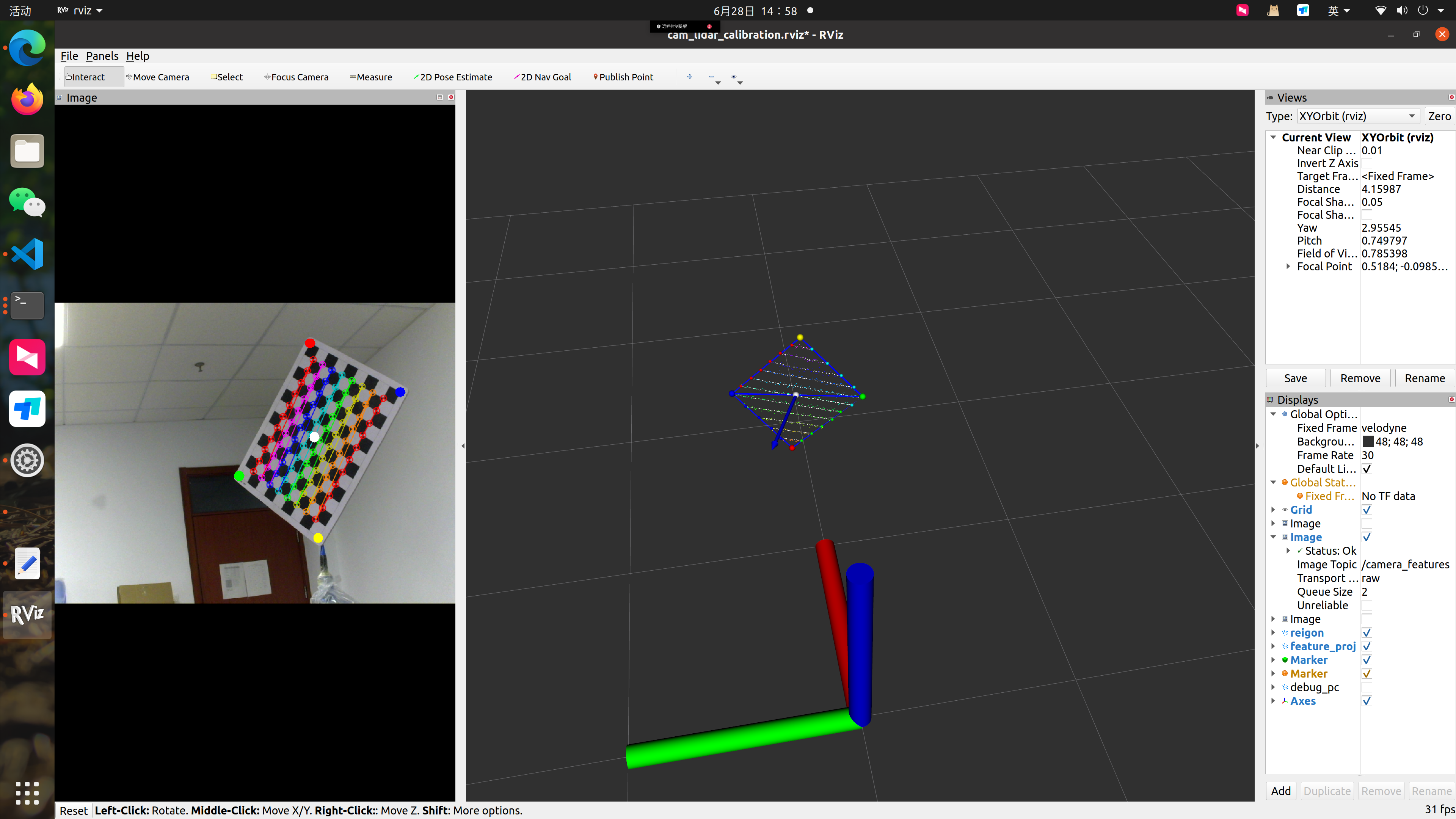

#include "feature_extraction.h" // 包含特征提取的头文件 namespace extrinsic_calibration // 声明命名空间 { // 构造函数 feature_extraction::feature_extraction() {} // 去畸变图像 void feature_extraction::undistort_img(cv::Mat original_img, cv::Mat undistort_img) { remap(original_img, undistort_img, undistort_map1, undistort_map2, cv::INTER_LINEAR); // 使用重映射函数进行去畸变 } // 初始化函数 void feature_extraction::onInit() { // 从配置文件读取参数 pkg_loc = ros::package::getPath("cam_lidar_calibration"); // 获取包的路径 std::string str_initial_file = pkg_loc + "/cfg/initial_params.yaml"; // 配置文件路径 std::cout << str_initial_file << std::endl; // 打印配置文件路径 // 打开配置文件 cv::FileStorage fs(str_initial_file, cv::FileStorage::READ | cv::FileStorage::FORMAT_YAML); if (!fs.isOpened()) { std::cout << " [ " + str_initial_file + " ] 文件打开失败" << std::endl; // 如果文件未打开,输出错误信息 return; } // 读取配置文件中的参数 i_params.camera_topic = std::string(fs["image_topic"]); // 相机主题 ROS_INFO_STREAM("camera topic: " << i_params.camera_topic); i_params.lidar_topic = std::string(fs["pointcloud_topic"]); // Lidar主题 std::cout << "i_params.lidar_topic: " << i_params.lidar_topic << std::endl; i_params.fisheye_model = int(fs["distortion_model"]); // 畸变模型类型 i_params.lidar_ring_count = int(fs["scan_line"]); // Lidar环数 i_params.grid_size = std::make_pair(int(fs["chessboard"]["length"]), int(fs["chessboard"]["width"])); // 棋盘格网格大小 std::cout << "i_params.grid_size: " << i_params.grid_size.first << ", " << i_params.grid_size.second << std::endl; i_params.square_length = fs["chessboard"]["grid_size"]; // 棋盘格单个网格的边长 i_params.board_dimension = std::make_pair(int(fs["board_dimension"]["length"]), int(fs["board_dimension"]["width"])); // 棋盘尺寸 i_params.cb_translation_error = std::make_pair(int(fs["translation_error"]["length"]), int(fs["translation_error"]["width"])); // 棋盘平移误差 // 读取相机内参矩阵和畸变系数 fs["camera_matrix"] >> i_params.cameramat; std::cout << "camera_matrix: " << i_params.cameramat << std::endl; i_params.distcoeff_num = 5; // 畸变系数数量 fs["distortion_coefficients"] >> i_params.distcoeff; std::cout << "distortion_coefficients: " << i_params.distcoeff << std::endl; // 图像宽度和高度 img_width = fs["image_pixel"]["width"]; img_height = fs["image_pixel"]["height"]; // 计算棋盘对角线长度(单位为米) diagonal = sqrt(pow(i_params.board_dimension.first, 2) + pow(i_params.board_dimension.second, 2)) / 1000; std::cout << "diagonal of the board is " << diagonal; std::cout << "Input parameters received" << std::endl; // 创建 ROS NodeHandle ros::NodeHandle &private_nh = getNodeHandle(); // 获取私有节点句柄 ros::NodeHandle &public_nh = getPrivateNodeHandle(); // 获取公共节点句柄 ros::NodeHandle &pnh = getMTPrivateNodeHandle(); // 获取多线程私有节点句柄 // 从参数服务器读取阈值 public_nh.param<double>("plane_distance_threshold", plane_dist_threshold_, 0.05); public_nh.param<double>("line_distance_threshold", line_dist_threshold_, 0.01); ROS_INFO_STREAM("plane_distance_threshold " << plane_dist_threshold_ << " line_distance_threshold" << line_dist_threshold_); // 初始化图像传输 it_.reset(new image_transport::ImageTransport(public_nh)); // 图像传输公共节点 it_p_.reset(new image_transport::ImageTransport(private_nh)); // 图像传输私有节点 image_sub = new image_sub_type(public_nh, i_params.camera_topic, queue_rate); // 相机图像订阅者 pcl_sub = new pc_sub_type(public_nh, i_params.lidar_topic, queue_rate); // Lidar点云订阅者 // 创建动态重配置服务器以设置实验区域边界 server = boost::make_shared<dynamic_reconfigure::Server<cam_lidar_calibration::boundsConfig>>(pnh); dynamic_reconfigure::Server<cam_lidar_calibration::boundsConfig>::CallbackType f; f = boost::bind(&feature_extraction::bounds_callback, this, _1, _2); // 设置回调函数 server->setCallback(f); // 设置回调 // 同步器以获取同步的相机-Lidar扫描对 sync = new message_filters::Synchronizer<MySyncPolicy>(MySyncPolicy(queue_rate), *image_sub, *pcl_sub); sync->registerCallback(boost::bind(&feature_extraction::extractROI, this, _1, _2)); // 注册回调 // 发布者定义 roi_publisher = public_nh.advertise<cam_lidar_calibration::calibration_data>("roi/points", 10, true); pub_cloud = public_nh.advertise<sensor_msgs::PointCloud2>("velodyne_features", 1); expt_region = public_nh.advertise<sensor_msgs::PointCloud2>("Experimental_region", 10); debug_pc_pub = public_nh.advertise<sensor_msgs::PointCloud2>("debug_pc", 10); flag_subscriber = public_nh.subscribe<std_msgs::Int8>("/flag", 1, &feature_extraction::flag_cb, this); // 订阅标志 vis_pub = public_nh.advertise<visualization_msgs::Marker>("visualization_marker", 0); // 可视化标记发布者 visPub = public_nh.advertise<visualization_msgs::Marker>("boardcorners", 0); // 棋盘角点发布者 image_publisher = it_p_->advertise("camera_features", 1); // 图像特征发布者 NODELET_INFO_STREAM("Camera Lidar Calibration"); // 输出初始化成功信息 // 加载去畸变参数并获取重映射参数 // 去畸变并保留最大图像 cv::Size img_size(img_width, img_height); cv::initUndistortRectifyMap(i_params.cameramat, i_params.distcoeff, cv::Mat(), cv::getOptimalNewCameraMatrix(i_params.cameramat, i_params.distcoeff, img_size, 1, img_size, 0), img_size, CV_16SC2, undistort_map1, undistort_map2); } // 回调函数处理标志 void feature_extraction::flag_cb(const std_msgs::Int8::ConstPtr &msg) { flag = msg->data; // 读取由input_sample节点发布的标志 } // 动态重配置的回调函数 void feature_extraction::bounds_callback(cam_lidar_calibration::boundsConfig &config, uint32_t level) { bound = config; // 读取滑块条运动对应的值 } // 将相机坐标系下的三维点转换为图像坐标系下的2D像素点 double *feature_extraction::converto_imgpts(double x, double y, double z) { double tmpxC = x / z; // 归一化处理 double tmpyC = y / z; cv::Point2d planepointsC; // 存储像素点的二维坐标 planepointsC.x = tmpxC; planepointsC.y = tmpyC; double r2 = tmpxC * tmpxC + tmpyC * tmpyC; // 计算r的平方 if (i_params.fisheye_model) // 判断是鱼眼镜头模型 { double r1 = pow(r2, 0.5); double a0 = std::atan(r1); // 计算角度 // 鱼眼镜头的畸变函数 double a1 = a0 * (1 + i_params.distcoeff.at<double>(0) * pow(a0, 2) + i_params.distcoeff.at<double>(1) * pow(a0, 4) + i_params.distcoeff.at<double>(2) * pow(a0, 6) + i_params.distcoeff.at<double>(3) * pow(a0, 8)); planepointsC.x = (a1 / r1) * tmpxC; // 更新坐标 planepointsC.y = (a1 / r1) * tmpyC; // 应用相机内参来转换坐标 planepointsC.x = i_params.cameramat.at<double>(0, 0) * planepointsC.x + i_params.cameramat.at<double>(0, 2); planepointsC.y = i_params.cameramat.at<double>(1, 1) * planepointsC.y + i_params.cameramat.at<double>(1, 2); } else // 针孔相机模型 { double tmpdist = 1 + i_params.distcoeff.at<double>(0) * r2 + i_params.distcoeff.at<double>(1) * r2 * r2 + i_params.distcoeff.at<double>(4) * r2 * r2 * r2; planepointsC.x = tmpxC * tmpdist + 2 * i_params.distcoeff.at<double>(2) * tmpxC * tmpyC + i_params.distcoeff.at<double>(3) * (r2 + 2 * tmpxC * tmpxC); planepointsC.y = tmpyC * tmpdist + i_params.distcoeff.at<double>(2) * (r2 + 2 * tmpyC * tmpyC) + 2 * i_params.distcoeff.at<double>(3) * tmpxC * tmpyC; planepointsC.x = i_params.cameramat.at<double>(0, 0) * planepointsC.x + i_params.cameramat.at<double>(0, 2); planepointsC.y = i_params.cameramat.at<double>(1, 1) * planepointsC.y + i_params.cameramat.at<double>(1, 2); } double *img_coord = new double[2]; // 动态分配内存存储坐标 *(img_coord) = planepointsC.x; // 存储x坐标 *(img_coord + 1) = planepointsC.y; // 存储y坐标 return img_coord; // 返回二维坐标 } // 可视化端点 void feature_extraction::visualize_end_points(pcl::PointCloud<pcl::PointXYZIR>::Ptr &min_points, pcl::PointCloud<pcl::PointXYZIR>::Ptr &max_points) { // 可视化最小和最大点 visualization_msgs::Marker minmax; // 创建可视化标记 minmax.header.frame_id = "velodyne"; // 设置帧ID minmax.header.stamp = ros::Time(); // 设置时间戳 minmax.ns = "my_sphere"; // 设置命名空间 minmax.type = visualization_msgs::Marker::SPHERE; // 设置标记类型为球体 minmax.action = visualization_msgs::Marker::ADD; // 添加标记 minmax.pose.orientation.w = 1.0; // 设置旋转 minmax.scale.x = 0.02; // 设置缩放 minmax.scale.y = 0.02; minmax.scale.z = 0.02; minmax.color.a = 1.0; // 设置透明度 int y_min_pts; for (y_min_pts = 0; y_min_pts < min_points->points.size(); y_min_pts++) { minmax.id = y_min_pts + 13; // 设置标记ID minmax.pose.position.x = min_points->points[y_min_pts].x; // 最小点的坐标 minmax.pose.position.y = min_points->points[y_min_pts].y; minmax.pose.position.z = min_points->points[y_min_pts].z; minmax.color.b = 1.0; // 蓝色 minmax.color.r = 1.0; // 红色 minmax.color.g = 0.0; // 绿色 visPub.publish(minmax); // 发布标记 } for (int y_max_pts = 0; y_max_pts < max_points->points.size(); y_max_pts++) { minmax.id = y_min_pts + 13 + y_max_pts; // 设置最大点的标记ID minmax.pose.position.x = max_points->points[y_max_pts].x; minmax.pose.position.y = max_points->points[y_max_pts].y; minmax.pose.position.z = max_points->points[y_max_pts].z; minmax.color.r = 0.0; // 绿色 minmax.color.g = 1.0; minmax.color.b = 1.0; // 青色 visPub.publish(minmax); // 发布标记 } } // 可视化边缘点 void feature_extraction::visualize_edge_points(pcl::PointCloud<pcl::PointXYZIR>::Ptr &left_down, pcl::PointCloud<pcl::PointXYZIR>::Ptr &right_down, pcl::PointCloud<pcl::PointXYZIR>::Ptr &left_up, pcl::PointCloud<pcl::PointXYZIR>::Ptr &right_up) { // 可视化左右上下边缘点 visualization_msgs::Marker minmax; // 创建标记 minmax.header.frame_id = "velodyne"; // 设置帧ID minmax.header.stamp = ros::Time(); // 设置时间戳 minmax.ns = "my_sphere"; // 设置命名空间 minmax.type = visualization_msgs::Marker::SPHERE; // 设置类型为球体 minmax.action = visualization_msgs::Marker::ADD; // 添加标记 minmax.pose.orientation.w = 1.0; // 设置旋转 minmax.scale.x = 0.02; // 设置缩放 minmax.scale.y = 0.02; minmax.scale.z = 0.02; minmax.color.a = 1.0; // 设置透明度 int mark_id = 13; // 标记ID for (int y_min_pts = 0; y_min_pts < left_down->points.size(); y_min_pts++) { mark_id++; minmax.id = mark_id; // 设置标记ID minmax.pose.position.x = left_down->points[y_min_pts].x; // 左下点坐标 minmax.pose.position.y = left_down->points[y_min_pts].y; minmax.pose.position.z = left_down->points[y_min_pts].z; minmax.color.b = 1.0; // 蓝色 minmax.color.r = 0.0; // 红色 minmax.color.g = 0.0; // 绿色 visPub.publish(minmax); // 发布标记 } for (int y_max_pts = 0; y_max_pts < right_down->points.size(); y_max_pts++) { mark_id++; minmax.id = mark_id; // 设置标记ID minmax.pose.position.x = right_down->points[y_max_pts].x; // 右下点坐标 minmax.pose.position.y = right_down->points[y_max_pts].y; minmax.pose.position.z = right_down->points[y_max_pts].z; minmax.color.r = 0.0; // 绿色 minmax.color.g = 1.0; // 绿色 minmax.color.b = 0.0; // 没颜色 visPub.publish(minmax); // 发布标记 } for (int y_max_pts = 0; y_max_pts < left_up->points.size(); y_max_pts++) { mark_id++; minmax.id = mark_id; // 设置标记ID minmax.pose.position.x = left_up->points[y_max_pts].x; // 左上点坐标 minmax.pose.position.y = left_up->points[y_max_pts].y; minmax.pose.position.z = left_up->points[y_max_pts].z; minmax.color.r = 1.0; // 红色 minmax.color.g = 0.0; // 没颜色 minmax.color.b = 0.0; // 没颜色 visPub.publish(minmax); // 发布标记 } for (int y_max_pts = 0; y_max_pts < right_up->points.size(); y_max_pts++) { mark_id++; minmax.id = mark_id; // 设置标记ID minmax.pose.position.x = right_up->points[y_max_pts].x; // 右上点坐标 minmax.pose.position.y = right_up->points[y_max_pts].y; minmax.pose.position.z = right_up->points[y_max_pts].z; minmax.color.r = 0.0; // 绿色 minmax.color.g = 1.0; // 绿色 minmax.color.b = 1.0; // 青色 visPub.publish(minmax); // 发布标记 } } // 提取关注的特征 void feature_extraction::extractROI(const sensor_msgs::Image::ConstPtr &img, const sensor_msgs::PointCloud2::ConstPtr &pc) { // 创建点云对象 pcl::PointCloud<pcl::PointXYZIR>::Ptr cloud1(new pcl::PointCloud<pcl::PointXYZIR>), cloud_filtered1(new pcl::PointCloud<pcl::PointXYZIR>), cloud_passthrough1(new pcl::PointCloud<pcl::PointXYZIR>); sensor_msgs::PointCloud2 cloud_final1, debug_pc_msg; pcl::fromROSMsg(*pc, *cloud1); // 将ROS消息转换为点云 // 过滤超出实验区域的点云 pcl::PassThrough<pcl::PointXYZIR> pass1; pass1.setInputCloud(cloud1); pass1.setFilterFieldName("x"); pass1.setFilterLimits(bound.x_min, bound.x_max); // 设置X轴过滤范围 pass1.filter(*cloud_passthrough1); pcl::PassThrough<pcl::PointXYZIR> pass_z1; pass_z1.setInputCloud(cloud_passthrough1); pass_z1.setFilterFieldName("z"); pass_z1.setFilterLimits(bound.z_min, bound.z_max); // 设置Z轴过滤范围 pass_z1.filter(*cloud_passthrough1); pcl::PassThrough<pcl::PointXYZIR> pass_final1; pass_final1.setInputCloud(cloud_passthrough1); pass_final1.setFilterFieldName("y"); pass_final1.setFilterLimits(bound.y_min, bound.y_max); // 设置Y轴过滤范围 pass_final1.filter(*cloud_passthrough1); // 发布实验区域点云 pcl::toROSMsg(*cloud_passthrough1, cloud_final1); expt_region.publish(cloud_final1); // 仅在用户按下 'i' 键以获取样本时才会运行 if (flag == 1) // 判断标志是否为1 { // 初始化棋盘角点 cv::Mat corner_vectors = cv::Mat::eye(3, 5, CV_64F); // 保存棋盘角点 cv::Mat chessboard_normal = cv::Mat(1, 3, CV_64F); // 棋盘法向量 std::vector<cv::Point2f> image_points, imagePoints1, imagePoints; // 图像点 flag = 0; // 将标志重置为0 //////////////// 图像特征 ////////////////// cv_bridge::CvImagePtr cv_ptr; // OpenCV图像指针 cv::Size2i patternNum(i_params.grid_size.first, i_params.grid_size.second); // 棋盘格物理尺寸 cv::Size2i patternSize(i_params.square_length, i_params.square_length); // 棋盘格每个块的大小 // 将ROS消息转换为OpenCV格式 try { cv_ptr = cv_bridge::toCvCopy(img, sensor_msgs::image_encodings::BGR8); } catch (cv_bridge::Exception &e) { ROS_ERROR("cv_bridge exception: %s", e.what()); // 捕获异常 } // 处理图像 cv::Mat gray; // 灰度图像 std::vector<cv::Point2f> corners, corners_undistorted; // 保存找到的角点 std::vector<cv::Point3f> grid3dpoint; // 棋盘格的3D点 cv::cvtColor(cv_ptr->image, gray, CV_BGR2GRAY); // 转换为灰度图 ROS_INFO_STREAM("cols: " << gray.cols << " rows: " << gray.rows); // 查找图像中的棋盘格角点 bool patternfound = cv::findChessboardCorners(gray, patternNum, corners, CALIB_CB_ADAPTIVE_THRESH + CALIB_CB_NORMALIZE_IMAGE); if (patternfound) // 判断是否找到棋盘格 { // 精确定位角点 ROS_INFO_STREAM("patternfound!"); cornerSubPix(gray, corners, Size(11, 11), Size(-1, -1), TermCriteria(CV_TERMCRIT_EPS + CV_TERMCRIT_ITER, 30, 0.1)); // 在图像上绘制角点 cv::drawChessboardCorners(cv_ptr->image, patternNum, corners, patternfound); cv::Size imgsize; imgsize.height = cv_ptr->image.rows; // 图像高度 imgsize.width = cv_ptr->image.cols; // 图像宽度 double tx, ty; // 平移值 // 棋盘框架原点的位置(棋盘左下角) tx = (patternNum.height - 1) * patternSize.height / 2; ty = (patternNum.width - 1) * patternSize.width / 2; // 初始化棋盘格3D点 for (int i = 0; i < patternNum.height; i++) { for (int j = 0; j < patternNum.width; j++) { cv::Point3f tmpgrid3dpoint; // 将原点从左下角移动到棋盘中心 tmpgrid3dpoint.x = i * patternSize.height - tx; // x坐标 tmpgrid3dpoint.y = j * patternSize.width - ty; // y坐标 tmpgrid3dpoint.z = 0; // z坐标 grid3dpoint.push_back(tmpgrid3dpoint); // 存储棋盘格3D点 } } // 初始化棋盘角点 std::vector<cv::Point3f> boardcorners; // 棋盘角落的坐标 boardcorners.push_back( cv::Point3f((i_params.board_dimension.second - i_params.cb_translation_error.second) / 2, (i_params.board_dimension.first - i_params.cb_translation_error.first) / 2, 0.0)); boardcorners.push_back( cv::Point3f(-(i_params.board_dimension.second + i_params.cb_translation_error.second) / 2, (i_params.board_dimension.first - i_params.cb_translation_error.first) / 2, 0.0)); boardcorners.push_back( cv::Point3f(-(i_params.board_dimension.second + i_params.cb_translation_error.second) / 2, -(i_params.board_dimension.first + i_params.cb_translation_error.first) / 2, 0.0)); boardcorners.push_back( cv::Point3f((i_params.board_dimension.second - i_params.cb_translation_error.second) / 2, -(i_params.board_dimension.first + i_params.cb_translation_error.first) / 2, 0.0)); // 棋盘中心坐标(由于棋盘放置不正确而引入) boardcorners.push_back(cv::Point3f(-i_params.cb_translation_error.second / 2, -i_params.cb_translation_error.first / 2, 0.0)); std::vector<cv::Point3f> square_edge; // 中间棋盘格边缘坐标(相对于棋盘中心) square_edge.push_back(cv::Point3f(-i_params.square_length / 2, -i_params.square_length / 2, 0.0)); square_edge.push_back(cv::Point3f(i_params.square_length / 2, i_params.square_length / 2, 0.0)); cv::Mat rvec(3, 3, CV_64F); // 初始化旋转向量 cv::Mat tvec(3, 1, CV_64F); // 初始化平移向量 if (i_params.fisheye_model) // 鱼眼镜头模型 { // 去畸变处理 cv::fisheye::undistortPoints(corners, corners_undistorted, i_params.cameramat, i_params.distcoeff, i_params.cameramat); // 去畸变后的点 cv::Mat fake_distcoeff = (Mat_<double>(4, 1) << 0, 0, 0, 0); // 假的畸变系数 cv::solvePnP(grid3dpoint, corners_undistorted, i_params.cameramat, fake_distcoeff, rvec, tvec); // 解算PnP // 根据已知3D点和2D点的关系获取图像点 cv::fisheye::projectPoints(grid3dpoint, image_points, rvec, tvec, i_params.cameramat, i_params.distcoeff); // 标记中心点 cv::fisheye::projectPoints(square_edge, imagePoints1, rvec, tvec, i_params.cameramat, i_params.distcoeff); cv::fisheye::projectPoints(boardcorners, imagePoints, rvec, tvec, i_params.cameramat, i_params.distcoeff); // 在图像上绘制标记 for (int i = 0; i < grid3dpoint.size(); i++) cv::circle(cv_ptr->image, image_points[i], 5, CV_RGB(255, 0, 0), -1); // 红色圆点 } // 针孔相机模型 else { cv::solvePnP(grid3dpoint, corners, i_params.cameramat, i_params.distcoeff, rvec, tvec); // 解算PnP cv::projectPoints(grid3dpoint, rvec, tvec, i_params.cameramat, i_params.distcoeff, image_points); // 计算图像点 // 标记中心点 cv::projectPoints(square_edge, rvec, tvec, i_params.cameramat, i_params.distcoeff, imagePoints1); cv::projectPoints(boardcorners, rvec, tvec, i_params.cameramat, i_params.distcoeff, imagePoints); } // 棋盘位置的4x4变换矩阵 | R&T cv::Mat chessboardpose = cv::Mat::eye(4, 4, CV_64F); // 初始化齐次变换矩阵 cv::Mat tmprmat = cv::Mat(3, 3, CV_64F); // 旋转矩阵 cv::Rodrigues(rvec, tmprmat); // 将旋转向量转换为旋转矩阵 for (int j = 0; j < 3; j++) { for (int k = 0; k < 3; k++) { chessboardpose.at<double>(j, k) = tmprmat.at<double>(j, k); // 填充旋转部分 } chessboardpose.at<double>(j, 3) = tvec.at<double>(j); // 填充平移部分 } // 法线向量初始化 chessboard_normal.at<double>(0) = 0; chessboard_normal.at<double>(1) = 0; chessboard_normal.at<double>(2) = 1; // Z方向是法线方向 chessboard_normal = chessboard_normal * chessboardpose(cv::Rect(0, 0, 3, 3)).t(); // 变换法线 for (int k = 0; k < boardcorners.size(); k++) { // 处理棋盘角点 cv::Point3f pt(boardcorners[k]); for (int i = 0; i < 3; i++) { corner_vectors.at<double>(i, k) = chessboardpose.at<double>(i, 0) * pt.x + chessboardpose.at<double>(i, 1) * pt.y + chessboardpose.at<double>(i, 3); // 将角点放入角点向量中 } // 将3D坐标转换为图像坐标 double *img_coord = feature_extraction::converto_imgpts(corner_vectors.at<double>(0, k), corner_vectors.at<double>(1, k), corner_vectors.at<double>(2, k)); // 绘制角点和中心点 if (k == 0) cv::circle(cv_ptr->image, cv::Point(img_coord[0], img_coord[1]), 8, CV_RGB(0, 255, 0), -1); // 绿色 else if (k == 1) cv::circle(cv_ptr->image, cv::Point(img_coord[0], img_coord[1]), 8, CV_RGB(255, 255, 0), -1); // 黄色 else if (k == 2) cv::circle(cv_ptr->image, cv::Point(img_coord[0], img_coord[1]), 8, CV_RGB(0, 0, 255), -1); // 蓝色 else if (k == 3) cv::circle(cv_ptr->image, cv::Point(img_coord[0], img_coord[1]), 8, CV_RGB(255, 0, 0), -1); // 红色 else cv::circle(cv_ptr->image, cv::Point(img_coord[0], img_coord[1]), 8, CV_RGB(255, 255, 255), -1); // 白色表示中心 delete[] img_coord; // 释放内存 } // 发布带有所有特征标记的图像 image_publisher.publish(cv_ptr->toImageMsg()); } // if (patternfound) else ROS_ERROR("PATTERN NOT FOUND"); // 查找失败时输出错误信息 //////////////// 点云特征 ////////////////// // 创建点云对象 pcl::PointCloud<pcl::PointXYZIR>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZIR>), cloud_filtered(new pcl::PointCloud<pcl::PointXYZIR>), cloud_passthrough(new pcl::PointCloud<pcl::PointXYZIR>), corrected_plane(new pcl::PointCloud<pcl::PointXYZIR>); sensor_msgs::PointCloud2 cloud_final; // 创建点云消息对象 pcl::fromROSMsg(*pc, *cloud); // 将ROS消息转换为点云 // 过滤超出实验区域的点云 pcl::PassThrough<pcl::PointXYZIR> pass; pass.setInputCloud(cloud); pass.setFilterFieldName("x"); pass.setFilterLimits(bound.x_min, bound.x_max); // 设置X轴过滤范围 pass.filter(*cloud_passthrough); // 过滤后的点云 pcl::PassThrough<pcl::PointXYZIR> pass_z; pass_z.setInputCloud(cloud_passthrough); pass_z.setFilterFieldName("z"); pass_z.setFilterLimits(bound.z_min, bound.z_max); // 设置Z轴过滤范围 pass_z.filter(*cloud_passthrough); pcl::PassThrough<pcl::PointXYZIR> pass_final; pass_final.setInputCloud(cloud_passthrough); pass_final.setFilterFieldName("y"); pass_final.setFilterLimits(bound.y_min, bound.y_max); // 设置Y轴过滤范围 pass_final.filter(*cloud_passthrough); // 查找在实验区域内最高的点(Z值最大) double z_max = cloud_passthrough->points[0].z; // 初始化最大z值 size_t pt_index; for (size_t i = 0; i < cloud_passthrough->points.size(); ++i) { if (cloud_passthrough->points[i].z > z_max) // 查找最大Z值 { pt_index = i; z_max = cloud_passthrough->points[i].z; } } // 从最大Z值中减去近似对角线长度(单位为米) ROS_INFO_STREAM("z max is: " << z_max); double z_min = z_max - diagonal; // 获取最小Z值 ROS_INFO_STREAM("z min is: " << z_min); pass_z.setInputCloud(cloud_passthrough); pass_z.setFilterFieldName("z"); pass_z.setFilterLimits(z_min, z_max); // 设置Z轴过滤范围 pass_z.filter(*cloud_filtered); // 最终过滤后的点云 // 拟合通过棋盘点云的平面 pcl::ModelCoefficients::Ptr coefficients(new pcl::ModelCoefficients()); // 模型系数 pcl::PointIndices::Ptr inliers(new pcl::PointIndices()); // 内点索引 int i = 0, nr_points = static_cast<int>(cloud_filtered->points.size()); // 点云总数 pcl::SACSegmentation<pcl::PointXYZIR> seg; // 创建分割对象 seg.setOptimizeCoefficients(true); // 启用系数优化 seg.setModelType(pcl::SACMODEL_PLANE); // 设置模型类型为平面 seg.setMethodType(pcl::SAC_RANSAC); // 设置方法类型为RANSAC seg.setMaxIterations(1000); // 最大迭代次数 seg.setDistanceThreshold(plane_dist_threshold_); // 设置距离阈值 pcl::ExtractIndices<pcl::PointXYZIR> extract; // 创建提取对象 seg.setInputCloud(cloud_filtered); // 设置输入点云 seg.segment(*inliers, *coefficients); // 执行平面拟合 // 计算法向量的大小 float mag = sqrt(pow(coefficients->values[0], 2) + pow(coefficients->values[1], 2) + pow(coefficients->values[2], 2)); // 提取内点 pcl::PointCloud<pcl::PointXYZIR>::Ptr cloud_seg(new pcl::PointCloud<pcl::PointXYZIR>); extract.setInputCloud(cloud_filtered); // 设置输入点云 extract.setIndices(inliers); // 设置内点索引 extract.setNegative(false); // 提取内点 extract.filter(*cloud_seg); // 提取后的点云 // 将内点投影到拟合平面上 pcl::PointCloud<pcl::PointXYZIR>::Ptr cloud_projected(new pcl::PointCloud<pcl::PointXYZIR>); pcl::ProjectInliers<pcl::PointXYZIR> proj; // 创建投影对象 proj.setModelType(pcl::SACMODEL_PLANE); // 设置模型类型为平面 proj.setInputCloud(cloud_seg); // 设置输入的内点云 proj.setModelCoefficients(coefficients); // 设置模型系数 proj.filter(*cloud_projected); // 得到投影后的点云 // 发布投影的内点 pcl::toROSMsg(*cloud_projected, cloud_final); pub_cloud.publish(cloud_final); // 发布相关的点云 // 在每个环中找到对应于棋盘的最大和最小点 std::vector<std::deque<pcl::PointXYZIR *>> candidate_segments(i_params.lidar_ring_count); // 按环数存储候选点 std::vector<RingFeature> capture_rings; // 存储环特征信息 // 遍历投影的点云 for (size_t i = 0; i < cloud_projected->points.size(); ++i) { int ring_number = static_cast<int>(cloud_projected->points[i].ring); // 获取环号 // 将点推入相应的环中 candidate_segments[ring_number].push_back(&(cloud_projected->points[i])); } // 第二步:按 Y 坐标降序排列每个环中的点 pcl::PointXYZIR max, min; // 用于存储最大最小点 pcl::PointCloud<pcl::PointXYZIR>::Ptr max_points(new pcl::PointCloud<pcl::PointXYZIR>); // 附件中的最大点云 pcl::PointCloud<pcl::PointXYZIR>::Ptr min_points(new pcl::PointCloud<pcl::PointXYZIR>); // 附件中的最小点云 double max_distance = -9999.0; // 初始化最大距离 int center_ring = -1; // 初始化中心环 for (int i = 0; static_cast<size_t>(i) < candidate_segments.size(); i++) { if (candidate_segments[i].size() == 0) // 检查当前环是否有点 { continue; // 如果没有点,则跳过 } double x_min = 9999.0; // 初始化X轴最小值 double x_max = -9999.0; // 初始化X轴最大值 int x_min_index, x_max_index; // 存储最小最大点的索引 // 查找当前环的最大最小点 for (int p = 0; p < candidate_segments[i].size(); p++) { if (candidate_segments[i][p]->x > x_max) // 更新最大值 { x_max = candidate_segments[i][p]->x; x_max_index = p; } if (candidate_segments[i][p]->x < x_min) // 更新最小值 { x_min = candidate_segments[i][p]->x; x_min_index = p; } } // 将最大和最小点推入对应的点云中 pcl::PointXYZIR min_p = *candidate_segments[i][x_min_index]; pcl::PointXYZIR max_p = *candidate_segments[i][x_max_index]; double distance = pcl::euclideanDistance(min_p, max_p); // 计算距离 if (distance < 0.001) // 如果距离过小(噪声) { continue; // 跳过 } if (distance > max_distance) // 更新最大距离 { max_distance = distance; // 更新最大距离 center_ring = min_p.ring; // 更新中心环 } ROS_INFO_STREAM("ring number: " << i << " distance: " << distance); // 发布激光雷达的环数据(从下到上0->31) min_points->push_back(min_p); // 保存最小点 max_points->push_back(max_p); // 保存最大点 } // 创建四个点云对象,用于存储边缘点 pcl::PointCloud<pcl::PointXYZIR>::Ptr left_up_points(new pcl::PointCloud<pcl::PointXYZIR>); pcl::PointCloud<pcl::PointXYZIR>::Ptr left_down_points(new pcl::PointCloud<pcl::PointXYZIR>); pcl::PointCloud<pcl::PointXYZIR>::Ptr right_up_points(new pcl::PointCloud<pcl::PointXYZIR>); pcl::PointCloud<pcl::PointXYZIR>::Ptr right_down_points(new pcl::PointCloud<pcl::PointXYZIR>); // 将最小最大点分为左下、右下、左上、右上 for (int m = 0; m < min_points->size(); ++m) { if (min_points->points[m].ring < center_ring) { left_down_points->push_back(max_points->points[m]); // 保存左下角最大点 right_down_points->push_back(min_points->points[m]); // 保存右下角最小点 } else { left_up_points->push_back(max_points->points[m]); // 保存左上最大点 right_up_points->push_back(min_points->points[m]); // 保存右上最小点 } } // 可视化端点 visualize_edge_points(left_down_points, right_down_points, left_up_points, right_up_points); // 拟合线段 pcl::ModelCoefficients::Ptr coefficients_left_up(new pcl::ModelCoefficients); pcl::PointIndices::Ptr inliers_left_up(new pcl::PointIndices); pcl::ModelCoefficients::Ptr coefficients_left_dwn(new pcl::ModelCoefficients); pcl::PointIndices::Ptr inliers_left_dwn(new pcl::PointIndices); pcl::ModelCoefficients::Ptr coefficients_right_up(new pcl::ModelCoefficients); pcl::PointIndices::Ptr inliers_right_up(new pcl::PointIndices); pcl::ModelCoefficients::Ptr coefficients_right_dwn(new pcl::ModelCoefficients); pcl::PointIndices::Ptr inliers_right_dwn(new pcl::PointIndices); seg.setModelType(pcl::SACMODEL_LINE); // 设置模型类型为线段 seg.setMethodType(pcl::SAC_RANSAC); // 使用RANSAC方法 seg.setDistanceThreshold(line_dist_threshold_); // 设置线段距离阈值 // 对每个点云进行线段拟合 seg.setInputCloud(left_up_points); seg.segment(*inliers_left_up, *coefficients_left_up); // 通过最大点拟合线段 seg.setInputCloud(left_down_points); seg.segment(*inliers_left_dwn, *coefficients_left_dwn); // 通过最大点拟合线段 seg.setInputCloud(right_up_points); seg.segment(*inliers_right_up, *coefficients_right_up); // 通过最小点拟合线段 seg.setInputCloud(right_down_points); seg.segment(*inliers_right_dwn, *coefficients_right_dwn); // 通过最小点拟合线段 // 查找出两条(四条)线段交点 Eigen::Vector4f Point_l; // 交点 pcl::PointCloud<pcl::PointXYZ>::Ptr basic_cloud_ptr(new pcl::PointCloud<pcl::PointXYZ>); // 存储交点 pcl::PointXYZ basic_point; // 交点 // 根据线段修改两条交点 if (pcl::lineWithLineIntersection(*coefficients_left_up, *coefficients_left_dwn, Point_l)) { basic_point.x = Point_l[0]; // 设置点的xyz坐标 basic_point.y = Point_l[1]; basic_point.z = Point_l[2]; basic_cloud_ptr->points.push_back(basic_point); // 存储基本点 } if (pcl::lineWithLineIntersection(*coefficients_right_up, *coefficients_right_dwn, Point_l)) { basic_point.x = Point_l[0]; basic_point.y = Point_l[1]; basic_point.z = Point_l[2]; basic_cloud_ptr->points.push_back(basic_point); // 存储基本点 } if (pcl::lineWithLineIntersection(*coefficients_left_dwn, *coefficients_right_dwn, Point_l)) { basic_point.x = Point_l[0]; basic_point.y = Point_l[1]; basic_point.z = Point_l[2]; basic_cloud_ptr->points.push_back(basic_point); // 存储基本点 } if (pcl::lineWithLineIntersection(*coefficients_left_up, *coefficients_right_up, Point_l)) { basic_point.x = Point_l[0]; basic_point.y = Point_l[1]; basic_point.z = Point_l[2]; basic_cloud_ptr->points.push_back(basic_point); // 存储基本点 } // 输入数据 sample_data.velodynepoint[0] = (basic_cloud_ptr->points[0].x + basic_cloud_ptr->points[1].x) * 1000 / 2; // 激光雷达位置 sample_data.velodynepoint[1] = (basic_cloud_ptr->points[0].y + basic_cloud_ptr->points[1].y) * 1000 / 2; sample_data.velodynepoint[2] = (basic_cloud_ptr->points[0].z + basic_cloud_ptr->points[1].z) * 1000 / 2; // 归一化法向量 sample_data.velodynenormal[0] = -coefficients->values[0] / mag; sample_data.velodynenormal[1] = -coefficients->values[1] / mag; sample_data.velodynenormal[2] = -coefficients->values[2] / mag; // 计算2D到3D法向量溶解 double top_down_radius = sqrt(pow(sample_data.velodynepoint[0] / 1000, 2) + pow(sample_data.velodynepoint[1] / 1000, 2)); double x_comp = sample_data.velodynepoint[0] / 1000 + sample_data.velodynenormal[0] / 2; double y_comp = sample_data.velodynepoint[1] / 1000 + sample_data.velodynenormal[1] / 2; double vector_dist = sqrt(pow(x_comp, 2) + pow(y_comp, 2)); // 如果计算出的距离超出阈值,则翻转法向量 if (vector_dist > top_down_radius) { sample_data.velodynenormal[0] = -sample_data.velodynenormal[0]; sample_data.velodynenormal[1] = -sample_data.velodynenormal[1]; sample_data.velodynenormal[2] = -sample_data.velodynenormal[2]; } // 摄像机标记点设置 sample_data.camerapoint[0] = corner_vectors.at<double>(0, 4); sample_data.camerapoint[1] = corner_vectors.at<double>(1, 4); sample_data.camerapoint[2] = corner_vectors.at<double>(2, 4); sample_data.cameranormal[0] = chessboard_normal.at<double>(0); sample_data.cameranormal[1] = chessboard_normal.at<double>(1); sample_data.cameranormal[2] = chessboard_normal.at<double>(2); sample_data.velodynecorner[0] = basic_cloud_ptr->points[2].x; // 激光雷达角点 sample_data.velodynecorner[1] = basic_cloud_ptr->points[2].y; sample_data.velodynecorner[2] = basic_cloud_ptr->points[2].z; // 计算像素数据 sample_data.pixeldata = sqrt(pow((imagePoints1[1].x - imagePoints1[0].x), 2) + pow((imagePoints1[1].y - imagePoints1[0].y), 2)); // 可视化四个激光雷达板角点、边缘和中心点 visualization_msgs::Marker marker1, line_strip, corners_board; // 创建可视化标记 marker1.header.frame_id = line_strip.header.frame_id = corners_board.header.frame_id = "velodyne"; // 设置帧ID marker1.header.stamp = line_strip.header.stamp = corners_board.header.stamp = ros::Time(); // 设置时间戳 marker1.ns = line_strip.ns = corners_board.ns = "my_sphere"; // 设置命名空间 line_strip.id = 10; // 线条标记ID marker1.id = 11; // 点标记ID marker1.type = visualization_msgs::Marker::POINTS; // 设置标记类型为点 line_strip.type = visualization_msgs::Marker::LINE_STRIP; // 设置标记类型为线条 corners_board.type = visualization_msgs::Marker::SPHERE; // 设置标记类型为草图 marker1.action = line_strip.action = corners_board.action = visualization_msgs::Marker::ADD; // 添加标记 marker1.pose.orientation.w = line_strip.pose.orientation.w = corners_board.pose.orientation.w = 1.0; // 设置旋转 marker1.scale.x = 0.02; // 设置点的缩放 marker1.scale.y = 0.02; corners_board.scale.x = 0.04; // 设置棋盘角的缩放 corners_board.scale.y = 0.04; corners_board.scale.z = 0.04; line_strip.scale.x = 0.009; // 设置线的缩放 marker1.color.a = line_strip.color.a = corners_board.color.a = 1.0; // 设置不透明度 line_strip.color.b = 1.0; // 对于线条的颜色 marker1.color.b = marker1.color.g = marker1.color.r = 1.0; // 点的颜色 // 添加角点标记 for (int i = 0; i < 5; i++) { if (i < 4) { corners_board.pose.position.x = basic_cloud_ptr->points[i].x; // 角点坐标 corners_board.pose.position.y = basic_cloud_ptr->points[i].y; corners_board.pose.position.z = basic_cloud_ptr->points[i].z; } else { corners_board.pose.position.x = sample_data.velodynepoint[0] / 1000; // 中心坐标 corners_board.pose.position.y = sample_data.velodynepoint[1] / 1000; corners_board.pose.position.z = sample_data.velodynepoint[2] / 1000; } corners_board.id = i; // 为标记分配ID // 根据ID设置颜色 if (corners_board.id == 0) corners_board.color.b = 1.0; // 第一个点 - 蓝色 else if (corners_board.id == 1) { corners_board.color.b = 0.0; // 第二个点 - 绿色 corners_board.color.g = 1.0; } else if (corners_board.id == 2) { corners_board.color.b = 0.0; // 第三个点 - 红色 corners_board.color.g = 0.0; corners_board.color.r = 1.0; } else if (corners_board.id == 3) { corners_board.color.b = 0.0; // 第四个点 - 青色 corners_board.color.r = 1.0; corners_board.color.g = 1.0; } else if (corners_board.id == 4) { corners_board.color.b = 1.0; // 中心点 - 白色 corners_board.color.r = 1.0; corners_board.color.g = 1.0; } visPub.publish(corners_board); // 发布角点标记 } // 绘制棋盘边缘线 for (int i = 0; i < 2; i++) { geometry_msgs::Point p; // 创建点 p.x = basic_cloud_ptr->points[1 - i].x; // 设置点坐标 p.y = basic_cloud_ptr->points[1 - i].y; p.z = basic_cloud_ptr->points[1 - i].z; marker1.points.push_back(p); // 添加点到标记中 line_strip.points.push_back(p); // 添加点到线中 p.x = basic_cloud_ptr->points[3 - i].x; // 另一条边的点 p.y = basic_cloud_ptr->points[3 - i].y; p.z = basic_cloud_ptr->points[3 - i].z; marker1.points.push_back(p); // 添加点到标记中 line_strip.points.push_back(p); // 添加点到线中 } geometry_msgs::Point p; // 创建点 p.x = basic_cloud_ptr->points[1].x; // 获取第二个点的坐标 p.y = basic_cloud_ptr->points[1].y; p.z = basic_cloud_ptr->points[1].z; marker1.points.push_back(p); // 添加点到标记 line_strip.points.push_back(p); // 添加点到线中 p.x = basic_cloud_ptr->points[0].x; // 获取第一个点的坐标 p.y = basic_cloud_ptr->points[0].y; p.z = basic_cloud_ptr->points[0].z; marker1.points.push_back(p); // 添加点到标记 line_strip.points.push_back(p); // 添加点到线中 // 发布棋盘边缘线 visPub.publish(line_strip); // 可视化棋盘法向量 marker.header.frame_id = "velodyne"; // 设置帧ID marker.header.stamp = ros::Time(); // 设置时间戳 marker.ns = "my_namespace"; // 设置命名空间 marker.id = 12; // 设置标记ID marker.type = visualization_msgs::Marker::ARROW; // 设置标记类型为箭头 marker.action = visualization_msgs::Marker::ADD; // 添加标记 marker.scale.x = 0.02; // 箭头x轴缩放 marker.scale.y = 0.04; // 箭头y轴缩放 marker.scale.z = 0.06; // 箭头z轴缩放 marker.color.a = 1.0; // 设置可见性 marker.color.r = 0.0; // 设置颜色为蓝色 marker.color.g = 0.0; marker.color.b = 1.0; // 设置箭头起始和结束点 geometry_msgs::Point start, end; start.x = sample_data.velodynepoint[0] / 1000; // 箭头起点 start.y = sample_data.velodynepoint[1] / 1000; start.z = sample_data.velodynepoint[2] / 1000; end.x = start.x + sample_data.velodynenormal[0] / 2; // 箭头终点 end.y = start.y + sample_data.velodynenormal[1] / 2; end.z = start.z + sample_data.velodynenormal[2] / 2; marker.points.resize(2); // 为标记分配两点以形成箭头 marker.points[0] = start; // 设置起点 marker.points[1] = end; // 设置终点 vis_pub.publish(marker); // 发布法向量标记 } // if (flag == 1) // 仅在用户按下 'enter' 时发布特征数据 if (flag == 4) { roi_publisher.publish(sample_data); // 发布特征数据 flag = 0; // 重置标志 } } // 结束提取ROI方法 } // 结束命名空间 extrinsic_calibration

5.内参标定

#!/usr/bin/env python3

"""

自动从 rosbag 或 图像目录提取图片并完成相机标定。

请在配置区修改以下参数:

USE_BAG: True-从 rosbag 提取;False-从图像目录读取

BAG_PATH: rosbag 文件完整路径

TOPIC: bag 中图像话题

IMG_DIR: 图像文件夹路径(当 USE_BAG=False 时生效)

PATTERN: 棋盘格列×行

SQUARE_SIZE: 棋盘格方格边长(米)

OUT_DIR: 输出目录

MIN_IMAGES: 最少需要成功检测角点的图像数量

MAX_FRAMES: bag 最大提取帧数

SKIP: bag 提取间隔

运行:

直接执行脚本,无需命令行参数

"""

import os

import cv2

import numpy as np

# === 配置区(请根据实际情况修改) ===

USE_BAG = False

BAG_PATH = '/home/pc/2026BOSS/Tools_RosBag2KITTI/catkin_ws/output/1.bag'

TOPIC = '/camera/image_raw'

IMG_DIR = '/home/pc/2026BOSS/Tools_RosBag2KITTI/catkin_ws/output/calib_result/png'

PATTERN = (8, 11)

SQUARE_SIZE = 0.05

OUT_DIR = 'calib_result'

MIN_IMAGES = 5

MAX_FRAMES = 50

SKIP = 1

# === 配置区结束 ===

# 动态导入 rosbag

if USE_BAG:

try:

import rosbag

from cv_bridge import CvBridge

except ImportError:

print('缺少 rosbag 或 cv_bridge,请安装依赖或设置 USE_BAG=False')

exit(1)

def extract_images_from_bag(bag_path, topic, out_dir, max_frames, skip):

bag = rosbag.Bag(bag_path, 'r')

bridge = CvBridge()

paths, count = [], 0

for i, (_, msg, _) in enumerate(bag.read_messages(topics=[topic])):

if i % skip != 0:

continue

img = bridge.imgmsg_to_cv2(msg, 'bgr8')

fname = os.path.join(out_dir, f'frame_{count:04d}.png')

cv2.imwrite(fname, img)

paths.append(fname)

count += 1

if count >= max_frames:

break

bag.close()

return paths

def load_images_from_dir(img_dir):

exts = {'.png', '.jpg', '.jpeg'}

return [os.path.join(img_dir, f) for f in sorted(os.listdir(img_dir))

if os.path.splitext(f.lower())[1] in exts]

def find_corners(paths, pattern, square_size, out_dir):

objp = np.zeros((pattern[1]*pattern[0], 3), np.float32)

objp[:, :2] = np.indices(pattern).T.reshape(-1, 2) * square_size

objpoints, imgpoints, goods = [], [], []

for p in paths:

img = cv2.imread(p)

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

ret, corners = cv2.findChessboardCorners(gray, pattern)

if not ret:

continue

corners2 = cv2.cornerSubPix(gray, corners, (11,11), (-1,-1),

(cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER,30,0.001))

objpoints.append(objp)

imgpoints.append(corners2)

goods.append(p)

vis = cv2.drawChessboardCorners(img.copy(), pattern, corners2, ret)

cv2.imwrite(os.path.join(out_dir, 'corners_'+os.path.basename(p)), vis)

return objpoints, imgpoints, goods

def calibrate_camera(objp, imgp, size):

return cv2.calibrateCamera(objp, imgp, size, None, None)

def save_opencv_yaml(filename, K, D, size):

# 按 OpenCV 格式手写 YAML

with open(filename, 'w') as f:

f.write('# 相机的内参矩阵 K\n')

f.write('camera_matrix: !!opencv-matrix\n')

f.write(' rows: 3\n')

f.write(' cols: 3\n')

f.write(' dt: d\n')

data = ', '.join(f'{v:.16e}' for v in K.flatten())

f.write(f' data: [ {data} ]\n\n')

f.write('# 相机的失真系数 D\n')

f.write('distortion_coefficients: !!opencv-matrix\n')

f.write(f' rows: {D.flatten().shape[0]}\n')

f.write(' cols: 1\n')

f.write(' dt: d\n')

ddata = ', '.join(f'{v:.16e}' for v in D.flatten())

f.write(f' data: [ {ddata} ]\n\n')

f.write('# 相机图像分辨率\n')

f.write('image_pixel:\n')

f.write(f' width: {size[0]}\n')

f.write(f' height: {size[1]}\n')

print(f'已保存 OpenCV 格式 camera.yaml 到 {filename}')

def save_fisheye_yaml(filename, K, D, size):

"""保存鱼眼相机格式的 YAML 文件"""

with open(filename, 'w') as f:

f.write('distortion_model: "fisheye"\n')

f.write(f'width: {size[0]}\n')

f.write(f'height: {size[1]}\n')

# 保存失真系数 D(通常鱼眼模型只取前4个参数)

if len(D.flatten()) >= 4:

d_values = D.flatten()[:4]

else:

# 如果失真系数不足4个,用0填充

d_values = np.pad(D.flatten(), (0, 4 - len(D.flatten())), 'constant')

d_str = ','.join(f'{v:.7f}' for v in d_values)

f.write(f'D: [{d_str}]\n')

# 保存内参矩阵 K(展平为9个元素)

k_values = K.flatten()

k_str = ','.join(f'{v:.7g}' if abs(v) > 1e-6 else '0.0' for v in k_values)

f.write(f'K: [{k_str}]\n')

print(f'已保存鱼眼格式 camera_fisheye.yaml 到 {filename}')

def main():

os.makedirs(OUT_DIR, exist_ok=True)

if USE_BAG:

print('从 bag 提取图像...')

paths = extract_images_from_bag(BAG_PATH, TOPIC, OUT_DIR, MAX_FRAMES, SKIP)

if not paths:

print(f'未提取到图像,请检查话题 {TOPIC}')

return

else:

print('从目录加载图像...')

paths = load_images_from_dir(IMG_DIR)

print(f'共获取 {len(paths)} 张图像')

objp, imgp, goods = find_corners(paths, PATTERN, SQUARE_SIZE, OUT_DIR)

print(f'成功检测 {len(goods)} 张角点图')

if len(goods) < MIN_IMAGES:

print('角点不足,标定失败')

return

img0 = cv2.imread(goods[0])

size = (img0.shape[1], img0.shape[0])

print('开始标定...')

ret, K, D, _, _ = calibrate_camera(objp, imgp, size)

print(f'重投影误差: {ret}')

# 保存两种格式

save_opencv_yaml(os.path.join(OUT_DIR, 'camera.yaml'), K, D, size)

save_fisheye_yaml(os.path.join(OUT_DIR, 'camera_fisheye.yaml'), K, D, size)

if __name__ == '__main__':

main()