1. Environment preparation

Node roles (2 CPU cores, 4 GB RAM each):

192.168.181.10 master

192.168.181.100 worker01

192.168.181.11 worker02

1.1 Disable the firewall, SELinux, and swap

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i 's/enforcing/disabled/' /etc/selinux/config

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

swapoff -a # the swap partition must be turned off

sed -ri 's/.*swap.*/#&/' /etc/fstab # disable swap permanently; in sed, & stands for the text matched by the pattern
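To see what the sed expression above does before touching the real /etc/fstab, it can be applied to a throwaway copy. This is only a sketch: the sample fstab entries and the temporary file are illustrative assumptions, not taken from an actual host.

```shell
# Work on a temporary file so the real /etc/fstab is never modified.
tmp_fstab="$(mktemp)"

# Hypothetical fstab entries, for illustration only.
cat > "$tmp_fstab" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# '&' is replaced by the whole matched text, so every line that
# mentions "swap" gets a leading '#', i.e. it is commented out.
sed -ri 's/.*swap.*/#&/' "$tmp_fstab"

cat "$tmp_fstab"
```

Only the swap line should come out commented; the root filesystem entry stays untouched.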

1.2 Load the ip_vs modules

for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs | grep -o "^[^.]*"); do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i; done
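The grep -o "^[^.]*" filter in the loop above turns module file names into module names. A small sketch of that step in isolation, using hypothetical file names in place of the real directory listing:

```shell
# Hypothetical module file names, standing in for the output of
# ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs
module_files='ip_vs.ko.xz
ip_vs_rr.ko.xz
ip_vs_wrr.ko.xz
ip_vs_sh.ko.xz'

# "^[^.]*" matches everything from the start of the line up to the
# first dot, which strips the .ko.xz suffix and leaves the module name.
module_names="$(printf '%s\n' "$module_files" | grep -o "^[^.]*")"

printf '%s\n' "$module_names"
```

On a real node, lsmod | grep ip_vs lists the modules that were actually loaded.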

1.3 Set the hostname

hostnamectl set-hostname master # run on 192.168.181.10

hostnamectl set-hostname worker01 # run on 192.168.181.100

hostnamectl set-hostname worker02 # run on 192.168.181.11

1.4 Edit the hosts file on all nodes

vim /etc/hosts

192.168.181.10 master

192.168.181.100 worker01

192.168.181.11 worker02
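Instead of editing the file by hand on every node, the three entries can be appended by a script. The following is a minimal sketch, assuming a helper named add_host_entry (hypothetical, introduced here for illustration) and run against a temporary copy rather than the real /etc/hosts:

```shell
# Append "IP NAME" to a hosts file unless NAME is already listed.
add_host_entry() {
  hosts_file="$1"; ip="$2"; name="$3"
  # Compare NAME against every hostname field (fields 2..NF) exactly.
  if ! awk -v n="$name" \
      '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' \
      "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Demonstration on a temporary copy, not on /etc/hosts itself.
hosts_copy="$(mktemp)"
printf '127.0.0.1 localhost\n' > "$hosts_copy"

add_host_entry "$hosts_copy" 192.168.181.10 master
add_host_entry "$hosts_copy" 192.168.181.100 worker01
add_host_entry "$hosts_copy" 192.168.181.11 worker02
add_host_entry "$hosts_copy" 192.168.181.10 master   # second call is a no-op

cat "$hosts_copy"
```

Because the helper checks for an existing entry first, it is safe to run more than once on the same node.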

1.5 Tune kernel parameters

cat > /etc/sysctl.d/kubernetes.conf << EOF

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

EOF

# Apply the parameters

sysctl --system
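To confirm that the settings took effect, each key in the file can be compared with the running kernel. The sketch below assumes a helper named check_sysctl_file (hypothetical). The net.bridge.* keys only exist once the br_netfilter kernel module is loaded (for example with modprobe br_netfilter); that is general Linux behaviour, not something stated in the steps above, and such keys are reported as MISSING.

```shell
# Report whether each key=value pair in a sysctl config file matches
# the running kernel. Reads /proc/sys directly, so no root is needed.
check_sysctl_file() {
  conf="$1"
  # Skip blank lines and comments; split each remaining line at '='.
  grep -Ev '^[[:space:]]*(#|$)' "$conf" | while IFS='=' read -r key want; do
    path="/proc/sys/$(printf '%s' "$key" | tr '.' '/')"
    if [ ! -r "$path" ]; then
      echo "MISSING $key"          # e.g. br_netfilter is not loaded
    elif [ "$(cat "$path")" = "$want" ]; then
      echo "OK      $key=$want"
    else
      echo "DIFF    $key (want $want, have $(cat "$path"))"
    fi
  done
}

# Check the file written above, if it exists on this machine.
if [ -f /etc/sysctl.d/kubernetes.conf ]; then
  check_sysctl_file /etc/sysctl.d/kubernetes.conf
fi
```

The helper expects lines of the form key=value without spaces around the equals sign, which is how the file above is written.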

2. Install Docker on all nodes and import the Docker configuration

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce-24.0.1 docker-ce-cli-24.0.1 containerd.io

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": [
    "https://docker.hpcloud.cloud",
    "https://docker.m.daocloud.io",
    "https://docker.unsee.tech",
    "https://docker.1panel.live",
    "http://mirrors.ustc.edu.cn",
    "https://docker.chenby.cn",
    "http://mirror.azure.cn",
    "https://dockerpull.org",
    "https://dockerhub.icu",
    "https://hub.rat.dev"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  }
}
EOF

# Start Docker

systemctl daemon-reload

systemctl restart docker.service

systemctl enable docker.service

3. Install kubeadm, kubelet, and kubectl on all nodes

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet-1.20.15 kubeadm-1.20.15 kubectl-1.20.15

# Enable kubelet at boot

systemctl enable kubelet.service

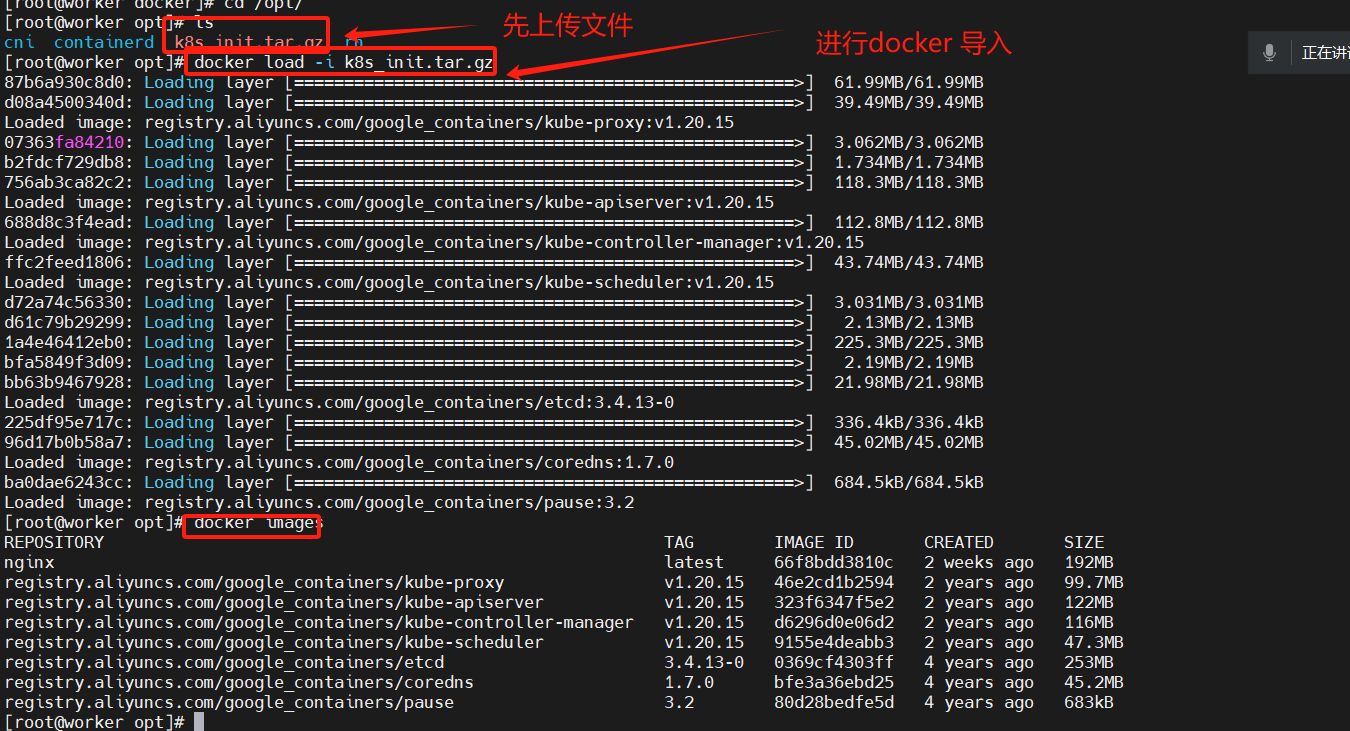

// Upload the archive and import the images (all nodes)

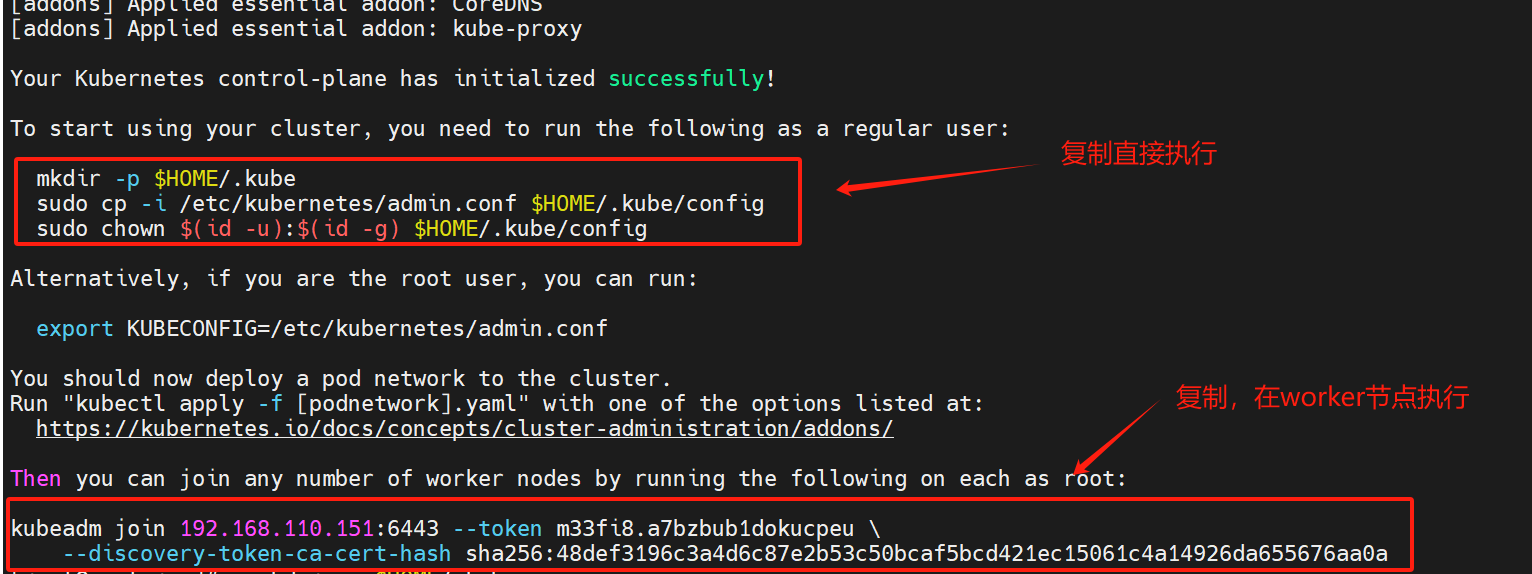

4. Initialize the cluster with kubeadm

kubeadm init \
  --apiserver-advertise-address=192.168.181.10 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version=v1.20.15 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=10.244.0.0/16 \
  --token-ttl=0
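After a successful kubeadm init, kubectl on the master needs a kubeconfig, and kubeadm prints the copy commands for this at the end of its output. The sketch below wraps the same copy step in a small function. The name setup_kubeconfig is hypothetical; /etc/kubernetes/admin.conf is the file kubeadm generates.

```shell
# Copy an admin kubeconfig into a per-user directory.
setup_kubeconfig() {
  src="$1"; dest_dir="$2"
  if [ ! -f "$src" ]; then
    echo "kubeconfig not found: $src" >&2
    return 1
  fi
  mkdir -p "$dest_dir"
  cp "$src" "$dest_dir/config"
  chmod 600 "$dest_dir/config"   # keep the credentials private
}

# On the master, after kubeadm init has finished:
if [ -f /etc/kubernetes/admin.conf ]; then
  setup_kubeconfig /etc/kubernetes/admin.conf "$HOME/.kube"
fi
```

The guard around the call makes the snippet harmless on machines where the cluster has not been initialized yet.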

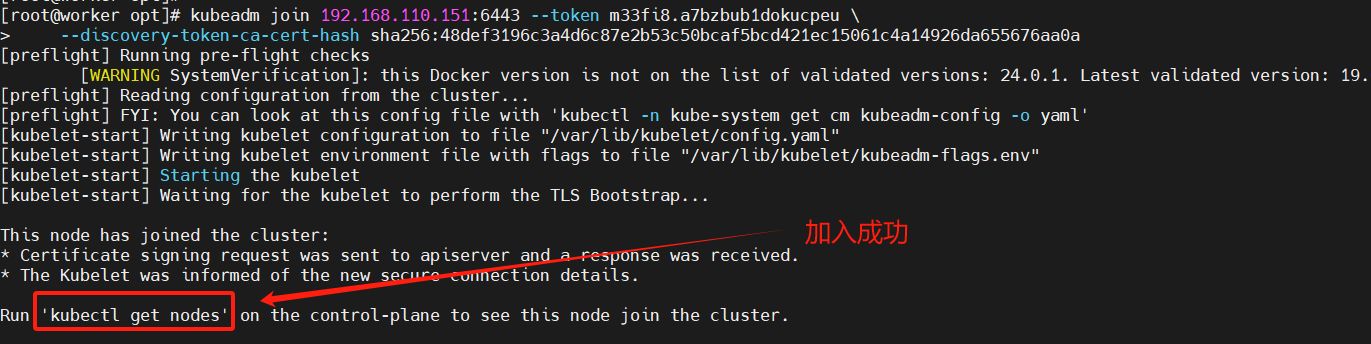

Copy the kubeadm join command that adds nodes to the cluster:

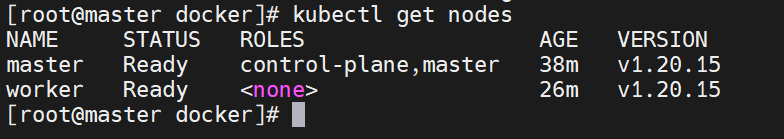

// After running it on the worker nodes, check the result

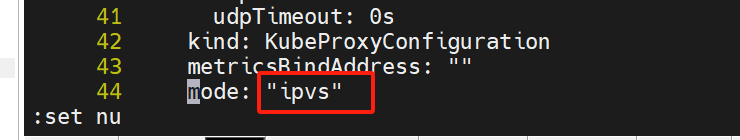

// After the steps above, run "systemctl restart kubelet" on the master node to restart kubelet

// Enable ipvs (master node)

kubectl edit cm kube-proxy -n=kube-system

Go to around line 44 and set the mode field to "ipvs"
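The substitution can be tried on a sample first. The sketch below applies it to a hypothetical excerpt of the kube-proxy configuration rather than to a live cluster, and assumes the field to change is mode, which is empty by default; the exact line number can differ between versions.

```shell
# Hypothetical excerpt of the kube-proxy configuration (config.conf).
sample="$(mktemp)"
cat > "$sample" <<'EOF'
    metricsBindAddress: ""
    mode: ""
    nodePortAddresses: null
EOF

# Replace the empty mode with "ipvs", keeping the indentation.
sed -i 's/^\([[:space:]]*mode:\) ""$/\1 "ipvs"/' "$sample"

cat "$sample"
```

After the real ConfigMap is saved, the running kube-proxy pods still use the old setting until they are recreated, for example with kubectl -n kube-system delete pod -l k8s-app=kube-proxy.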

// Fixing the unhealthy status reported by kubectl get cs:

vim /etc/kubernetes/manifests/kube-scheduler.yaml

vim /etc/kubernetes/manifests/kube-controller-manager.yaml

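Make the following changes:

Change --bind-address=127.0.0.1 to --bind-address=192.168.181.10 # use the IP of the k8s control-plane node (master)

Under the httpGet: fields, change host from 127.0.0.1 to 192.168.181.10 (two places)

#- --port=0 # search for port=0 and comment this line out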
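Commenting out the --port=0 line can also be done with sed instead of an editor. A minimal sketch, run against a hypothetical manifest excerpt in a temporary file:

```shell
# Hypothetical excerpt of a static Pod manifest such as kube-scheduler.yaml.
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
    - kube-scheduler
    - --bind-address=127.0.0.1
    - --leader-elect=true
    - --port=0
EOF

# Prefix the "- --port=0" line with '#', preserving its indentation.
sed -i 's/^\([[:space:]]*\)\(- --port=0\)$/\1#\2/' "$manifest"

cat "$manifest"
```

On a real master the same expression would be pointed at /etc/kubernetes/manifests/kube-scheduler.yaml and /etc/kubernetes/manifests/kube-controller-manager.yaml. kubelet watches that directory and recreates the static Pods when the files change.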

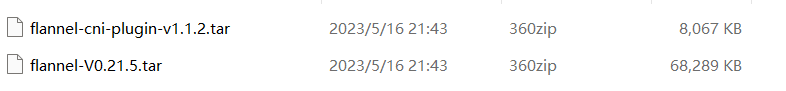

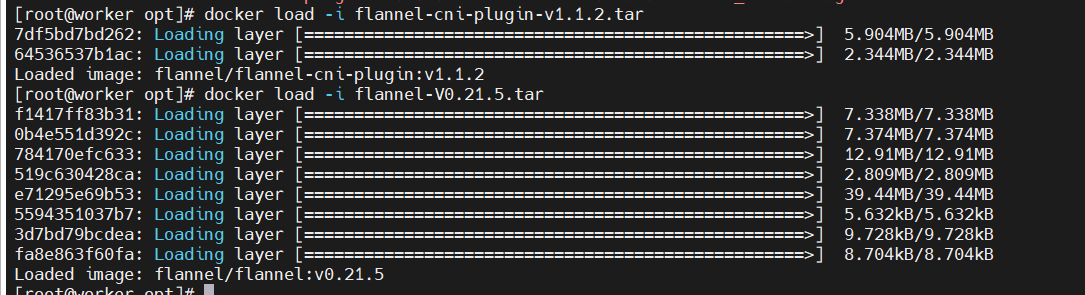

// Upload the following files to all nodes

// Run the following on all nodes:

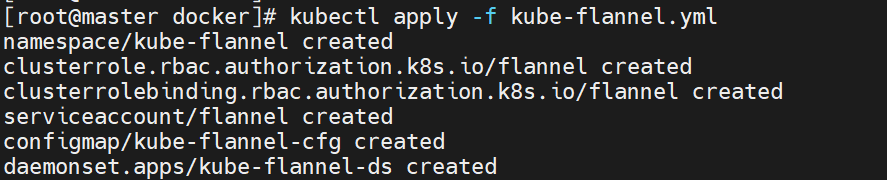

// Upload the kube-flannel.yml file, then run:

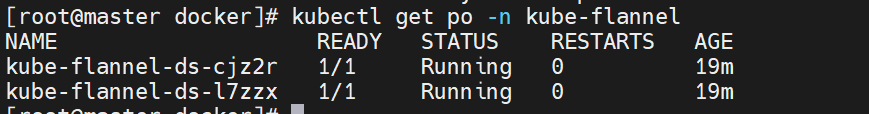

// Use kubectl get po -n kube-flannel and wait for the pods to become Ready:

// Finally, check the k8s cluster status:
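The final status check can be scripted by counting nodes that are not yet Ready. This is a sketch under stated assumptions: count_not_ready is a hypothetical helper, and the node table is made-up sample input in the layout that kubectl get nodes prints, not output captured from this cluster.

```shell
# Count nodes whose STATUS column is not exactly "Ready".
# Expects the tabular output of `kubectl get nodes` on stdin.
count_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Hypothetical sample output, for illustration only.
sample_nodes='NAME       STATUS     ROLES                  AGE   VERSION
master     Ready      control-plane,master   10m   v1.20.15
worker01   Ready      <none>                 5m    v1.20.15
worker02   NotReady   <none>                 5m    v1.20.15'

printf '%s\n' "$sample_nodes" | count_not_ready
```

Against a live cluster the helper would be used as kubectl get nodes | count_not_ready, where a result of 0 means every node reports Ready. For the flannel pods, kubectl wait --for=condition=Ready pod --all -n kube-flannel --timeout=300s blocks until they are ready or the timeout expires.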