Graylog 6.3 deployment with docker-compose: the full walkthrough

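Upgrade Docker to a recent version

If your Docker version is old, upgrade it to the latest release first.

Remove old versions (if any are installed)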

```bash
sudo yum remove docker \
                docker-client \
                docker-client-latest \
                docker-common \
                docker-latest \
                docker-latest-logrotate \
                docker-logrotate \
                docker-engine
```

Install the dependencies

```bash
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
```

Add the official Docker repository

```bash
sudo yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo
```

Alternatively, use the Aliyun mirror, which usually downloads much faster from mainland China:

```bash
# Add the Aliyun Docker yum repository
sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

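Install the latest Docker CE

```bash
# docker-compose-plugin provides the `docker compose` command used later in this guide
sudo yum install -y docker-ce docker-ce-cli containerd.io docker-compose-plugin
```

To install a specific version instead, first list all available versions:

```bash
yum list docker-ce --showduplicates | sort -r
```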


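Configure a registry mirror

```bash
# Make sure the config directory exists, then write daemon.json as root
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<'EOF'
{
  "registry-mirrors": [
    "https://registry.cyou"
  ]
}
EOF
# If Docker is already running, apply the change with: sudo systemctl restart docker
```

Start Docker and enable it at boot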

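```bash
sudo systemctl start docker
sudo systemctl enable docker
```

Verify that Docker is installed correctly

```bash
docker version
docker info
docker compose version   # confirms the Compose plugin is available
```

Prepare the Graylog configuration files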

Create the data directory /data/graylog6

```bash
sudo mkdir -p /data/graylog6
```

Adjust the path to match your actual deployment directory.

docker-compose.yml

```bash
# 'EOF' is quoted so the shell writes ${GRAYLOG_...} literally;
# Docker Compose substitutes those variables from .env when the stack starts.
tee docker-compose.yml <<'EOF'
services:
  mongodb:
    image: mongo:6.0
    container_name: graylog-mongodb
    restart: unless-stopped
    networks:
      - graylog
    healthcheck:
      test: ["CMD", "mongosh", "--eval", "db.adminCommand('ping')"]
      interval: 10s
      timeout: 5s
      retries: 5
    volumes:
      - /data/graylog6/mongodb/db:/data/db
      - /data/graylog6/mongodb/config:/data/configdb

  datanode:
    image: graylog/graylog-datanode:6.3
    container_name: graylog-datanode
    restart: unless-stopped
    hostname: datanode
    networks:
      - graylog
    environment:
      GRAYLOG_DATANODE_NODE_ID_FILE: /var/lib/graylog-datanode/node-id
      GRAYLOG_DATANODE_PASSWORD_SECRET: ${GRAYLOG_PASSWORD_SECRET}
      GRAYLOG_DATANODE_MONGODB_URI: mongodb://mongodb:27017/graylog
    ports:
      - "8999:8999"
      - "9200:9200"
      - "9300:9300"
    volumes:
      - /data/graylog6/datanode:/var/lib/graylog-datanode

  graylog:
    image: graylog/graylog:6.3
    container_name: graylog-server
    depends_on:
      mongodb:
        condition: service_healthy
      datanode:
        condition: service_started
    restart: unless-stopped
    networks:
      - graylog
    environment:
      GRAYLOG_PASSWORD_SECRET: "${GRAYLOG_PASSWORD_SECRET}"
      GRAYLOG_ROOT_PASSWORD_SHA2: "${GRAYLOG_ROOT_PASSWORD_SHA2}"
      GRAYLOG_HTTP_BIND_ADDRESS: 0.0.0.0:9000
      # External access URL: replace with your own server's IP
      GRAYLOG_HTTP_EXTERNAL_URI: http://108.15.42.77:9000/
      GRAYLOG_MONGODB_URI: mongodb://mongodb:27017/graylog
    ports:
      - "9000:9000"
      - "12201:12201/udp"
    volumes:
      - /data/graylog6/graylog:/usr/share/graylog/data/data
      - /data/graylog6/journal:/usr/share/graylog/data/journal

networks:
  graylog:
    driver: bridge
EOF
```

Secret configuration (.env, in the same directory as docker-compose.yml, where Docker Compose reads it automatically)

```bash
tee .env <<'EOF'
# You MUST set a secret to secure/pepper the stored user passwords here. Use at least 64 characters.
# Generate one by using for example: pwgen -N 1 -s 96
# ATTENTION: This value must be the same on all Graylog nodes in the cluster.
# Changing this value after installation will render all user sessions and encrypted values in the database invalid. (e.g. encrypted access tokens)
GRAYLOG_PASSWORD_SECRET="<replace with a random string of at least 64 characters>"

# You MUST specify a hash password for the root user (which you only need to initially set up the
# system and in case you lose connectivity to your authentication backend)
# This password cannot be changed using the API or via the web interface. If you need to change it,
# modify it in this file.
# Create one by using for example: echo -n yourpassword | shasum -a 256
# and put the resulting hash value into the following line
# CHANGE THIS!
GRAYLOG_ROOT_PASSWORD_SHA2="8c6976e5b5410415bde908bd4dee15dfb16c2f0064574d3eaa8b6bd9c8b1f6e3"
EOF
```

How to generate the two values:

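GRAYLOG_PASSWORD_SECRET (any random string of at least 64 characters)

You can use:

```bash
# openssl wraps base64 output at 64 columns; tr joins it into one line for .env
openssl rand -base64 64 | tr -d '\n'
```

The output looks like:

```
nVcyUIHP2pSBK9YVmzEbUyk7O9qMl3OE7p0H18hv7r1f8M0K8z6mgfLzKb3g23BQfC8+OU6jzNFwRnP7++y8Kw==
```

GRAYLOG_ROOT_PASSWORD_SHA2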

Hash your Graylog admin password with SHA-256, for example `admin`:

```bash
echo -n admin | sha256sum | awk '{print $1}'
```

The output is:

```
8c6976e5b5410415bde908bd4dee15dfb16c2f0064574d3eaa8b6bd9c8b1f6e3
```

Create the mounted directories and set ownership

```bash
# MongoDB data: the official mongo image runs as uid/gid 999
sudo mkdir -p /data/graylog6/mongodb/db
sudo chown -R 999:999 /data/graylog6/mongodb/db
sudo mkdir -p /data/graylog6/mongodb/config
sudo chown -R 999:999 /data/graylog6/mongodb/config

# Graylog server data, journal and DataNode data: owned by uid/gid 1100
sudo mkdir -p /data/graylog6/graylog
sudo chown -R 1100:1100 /data/graylog6/graylog
sudo mkdir -p /data/graylog6/journal
sudo chown -R 1100:1100 /data/graylog6/journal
sudo mkdir -p /data/graylog6/datanode
sudo chown -R 1100:1100 /data/graylog6/datanode
```

Other settings

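At startup, Graylog DataNode runs a preflight check on the kernel parameter vm.max_map_count, which must be at least 262144.

vm.max_map_count is the Linux kernel parameter that limits how many memory-mapped areas a single process may have. Search engines that make heavy use of mmap, such as OpenSearch (which DataNode runs under the hood) and Elasticsearch, typically need a much higher value than the default.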
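Before changing anything, you can check the current value yourself (the kernel default is often 65530). The following is a minimal sketch that mirrors the preflight rule stated above; `check_max_map_count` is a hypothetical helper name, and the file path is a parameter only so that the function can be tested against a plain file.

```bash
#!/usr/bin/env bash
# Minimum value required by the Graylog DataNode preflight check
readonly REQUIRED_MAX_MAP_COUNT=262144

# Hypothetical helper: check_max_map_count [source-file]
# Returns 0 if the value is high enough, 1 if too low, 2 if it cannot be read.
check_max_map_count() {
  local src="${1:-/proc/sys/vm/max_map_count}"
  local current
  current="$(cat "$src")" || return 2

  if (( current >= REQUIRED_MAX_MAP_COUNT )); then
    echo "ok: vm.max_map_count=${current}"
  else
    echo "too low: vm.max_map_count=${current}, need >= ${REQUIRED_MAX_MAP_COUNT}" >&2
    return 1
  fi
}
```

Running `check_max_map_count` with no argument reads the live kernel value; a non-zero exit status means the steps below are required before DataNode will start.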

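Add the following line to /etc/sysctl.conf:

```bash
# Append as root: with `sudo echo ... >>`, the redirection would run as the current user and fail
echo 'vm.max_map_count=262144' | sudo tee -a /etc/sysctl.conf
```

Then reload the settings:

```bash
sudo sysctl -p
```

Verify that the setting took effect:

```bash
cat /proc/sys/vm/max_map_count
```

It should return:

```
262144
```

Start Graylog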

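Change to the directory that contains docker-compose.yml and .env, then run:

```bash
docker compose up -d
```

On the first `up`, Docker pulls the images automatically. Without a mirror, pulling from the official registry can take a very long time or fail outright; in my tests the https://registry.cyou mirror downloaded reasonably fast.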
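Because the first start can take a while (image pulls, then the MongoDB healthcheck gating the Graylog server), a small retry loop saves refreshing the browser by hand. This is a minimal sketch; `wait_until` and the `WAIT_INTERVAL` variable are hypothetical names of this example, not part of Docker or Graylog.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait_until <timeout-seconds> <command> [args...]
# Re-runs the command until it succeeds or the timeout expires.
# WAIT_INTERVAL sets the pause between attempts (default: 5 seconds).
wait_until() {
  local timeout="$1"; shift
  local interval="${WAIT_INTERVAL:-5}"
  local deadline=$((SECONDS + timeout))

  until "$@"; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}
```

For example, `wait_until 600 curl -s -o /dev/null http://108.15.42.77:9000/` returns as soon as port 9000 answers any HTTP request. Without `-f`, curl exits successfully even on a 401 from the login page, which is enough to know the server is listening.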

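Follow the logs to find the initial password:

```bash
# The container is named graylog-server in docker-compose.yml
docker logs -f graylog-server
```

Access and configure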

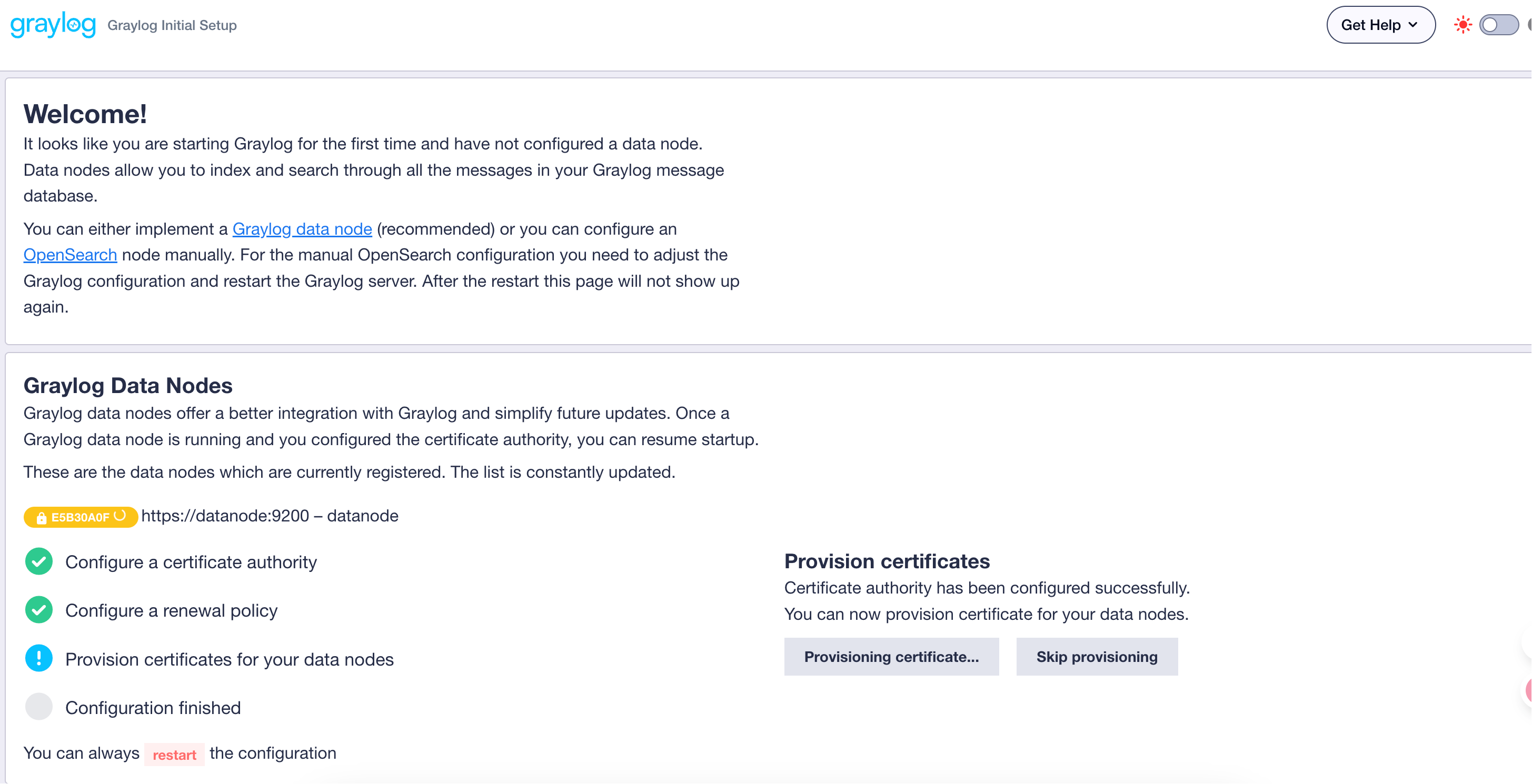

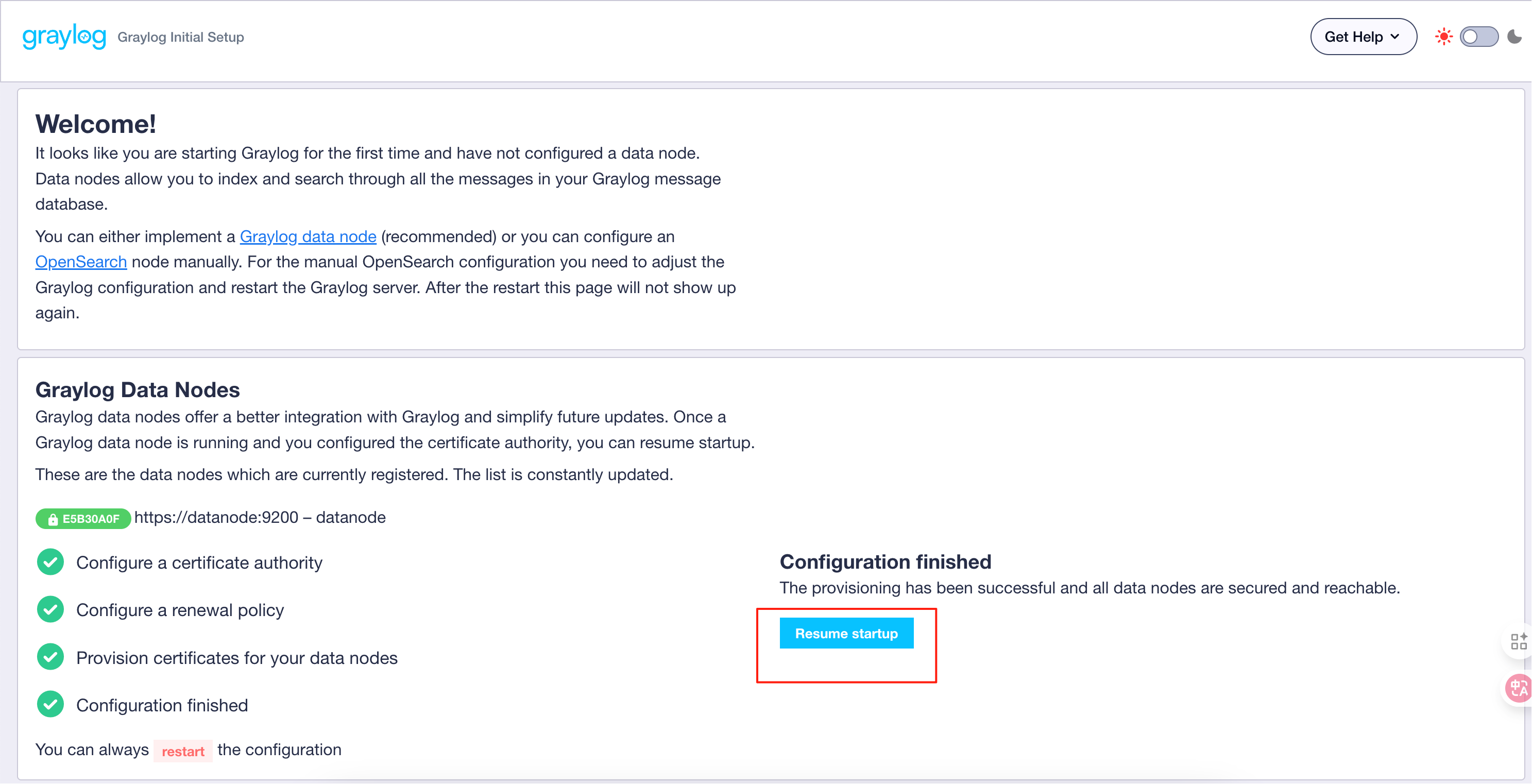

Open http://108.15.42.77:9000/ in a browser, log in with the initial password from the log, and work through the setup pages as prompted.

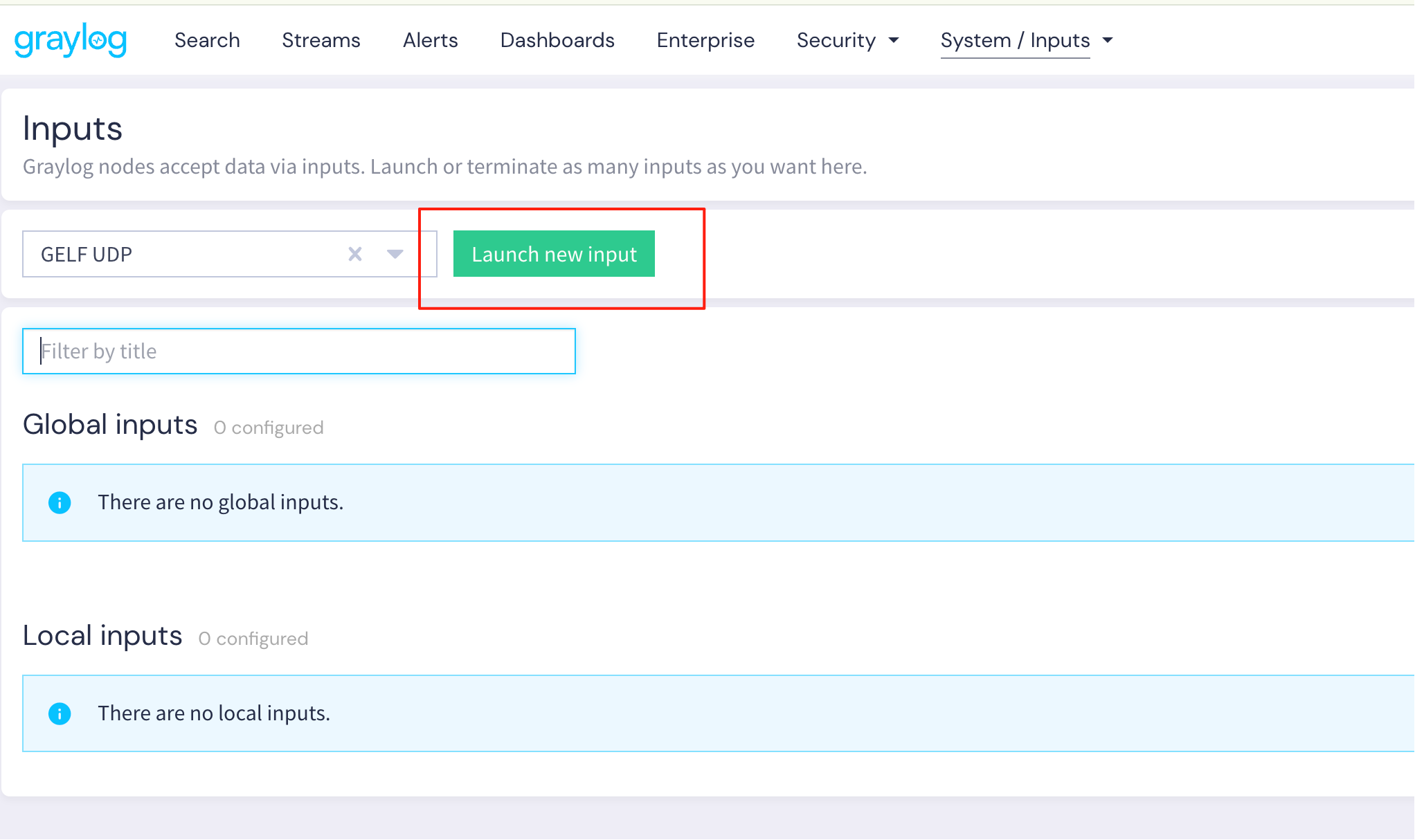

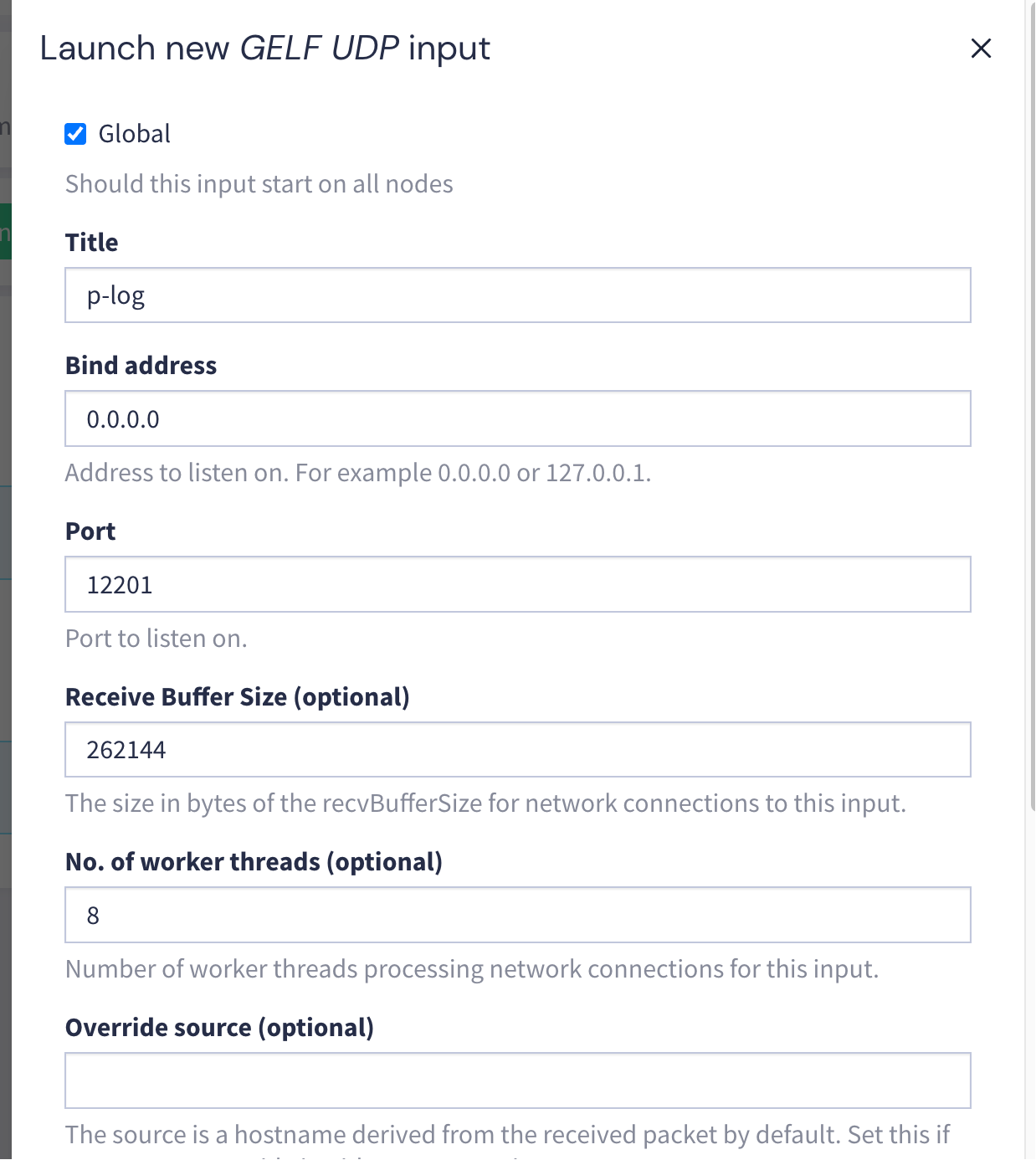

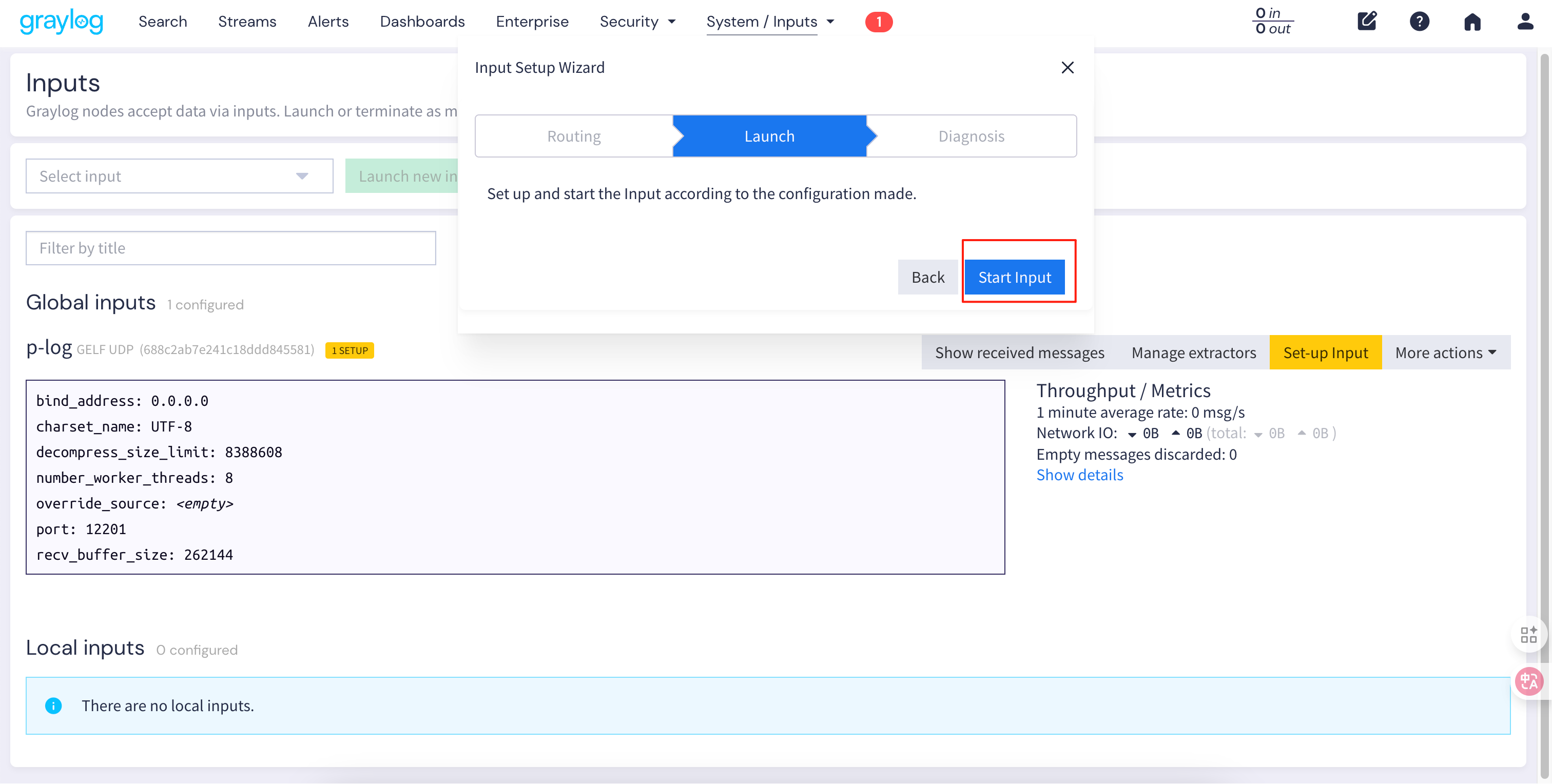

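Configure an input

Create an input that matches the port published in docker-compose.yml (GELF UDP on 12201). After creating it, remember to click Start input; otherwise it will not receive any messages.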
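To confirm the input works before touching any application, you can send a single test message by hand. A minimal sketch, assuming a GELF UDP input on port 12201 and bash (whose `/dev/udp/<host>/<port>` redirection is a bash feature, not a real file); `gelf_payload` and `send_gelf` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Build a minimal GELF 1.1 payload: version, host and short_message are the
# required fields. No JSON escaping is done, so keep the arguments plain text.
gelf_payload() {
  local host="$1" message="$2"
  printf '{"version":"1.1","host":"%s","short_message":"%s"}' "$host" "$message"
}

# Hypothetical helper: send_gelf <graylog-host> <port> <message>
# Sends one uncompressed datagram. UDP gives no delivery confirmation,
# so check the result on the Graylog search page.
send_gelf() {
  local target="$1" port="$2" message="$3"
  gelf_payload "${HOSTNAME:-localhost}" "$message" > "/dev/udp/${target}/${port}"
}
```

For example, run `send_gelf 108.15.42.77 12201 "hello graylog"` and then search for `hello graylog` in the Graylog UI. If nothing arrives, check that the input is started and that the host firewall allows UDP 12201.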

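Add a Graylog appender to your service's logging configuration. I use logback with the GELF appender from the logstash-gelf library (biz.paluch.logging:logstash-gelf), which must be on the application's classpath: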

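```xml
<appender name="GELF" class="biz.paluch.logging.gelf.logback.GelfLogbackAppender">
    <!-- IP of the Graylog host -->
    <host>${graylogHost}</host>
    <port>${graylogPort}</port>
    <version>1.1</version>
    <!-- e.g. the service name -->
    <facility>${appName}</facility>
    <!-- Extra static fields added to every message -->
    <additionalFields>activeProfile=${activeProfile}</additionalFields>
    <extractStackTrace>true</extractStackTrace>
    <filterStackTrace>true</filterStackTrace>
    <mdcProfiling>true</mdcProfiling>
    <timestampPattern>yyyy-MM-dd HH:mm:ss,SSS</timestampPattern>
    <maximumMessageSize>81920000</maximumMessageSize>
    <!-- These are fields using MDC -->
    <mdcFields>mdcField1,mdcField2</mdcFields>
    <dynamicMdcFields>mdc.*,(mdc|MDC)fields</dynamicMdcFields>
    <includeFullMdc>true</includeFullMdc>
</appender>
```

Complete logback-spring.xml. Spring Boot only processes `<springProperty>` in logback-spring.xml (or in a file set through logging.config), not in a plain logback.xml. The appender's host and port come from the Spring properties graylog.host and graylog.port, so define them in your application configuration, e.g. graylog.host=108.15.42.77 and graylog.port=12201.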

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration debug="false" scan="true" scanPeriod="1 seconds">
    <springProperty name="appName" source="spring.application.name"/>
    <springProperty name="graylogHost" source="graylog.host"/>
    <springProperty name="graylogPort" source="graylog.port"/>
    <springProperty name="activeProfile" source="spring.profiles.active"/>

    <contextName>${appName}</contextName>

    <property name="log.path" value="logs/"/>
    <property name="log.pattern" value="%d{HH:mm:ss.SSS} %-5level [%15.15thread{15}] %40.40logger{40} [%3.3line{3}] : %msg%n"/>
    <property name="maxHistory" value="60"/>
    <property name="maxFileSize" value="200MB"/>
    <property name="totalSizeCap" value="30GB"/>

    <!-- Colored console output -->
    <!-- Converter classes required for colored output -->
    <conversionRule conversionWord="clr" converterClass="org.springframework.boot.logging.logback.ColorConverter" />
    <conversionRule conversionWord="wex" converterClass="org.springframework.boot.logging.logback.WhitespaceThrowableProxyConverter" />
    <conversionRule conversionWord="wEx" converterClass="org.springframework.boot.logging.logback.ExtendedWhitespaceThrowableProxyConverter" />
    <!-- Colored log pattern -->
    <property name="CONSOLE_LOG_PATTERN" value="${CONSOLE_LOG_PATTERN:-%clr(%d{HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %clr(${PID:- }){magenta} %clr(---){faint} %clr([%10.10t{10}]){faint} %clr(%-40.40logger{39}){cyan} %clr([%3.3line{3}]){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}}"/>

    <!-- Console output -->
    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <!-- Intended for development: only a minimum level is set, so the console shows events at or above this level -->
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>info</level>
        </filter>
        <encoder>
            <Pattern>${CONSOLE_LOG_PATTERN}</Pattern>
            <!-- Character set -->
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <appender name="GELF" class="biz.paluch.logging.gelf.logback.GelfLogbackAppender">
        <!-- IP of the Graylog host -->
        <host>${graylogHost}</host>
        <port>${graylogPort}</port>
        <version>1.1</version>
        <!-- e.g. the service name -->
        <facility>${appName}</facility>
        <!-- Extra static fields added to every message -->
        <additionalFields>activeProfile=${activeProfile}</additionalFields>
        <extractStackTrace>true</extractStackTrace>
        <filterStackTrace>true</filterStackTrace>
        <mdcProfiling>true</mdcProfiling>
        <timestampPattern>yyyy-MM-dd HH:mm:ss,SSS</timestampPattern>
        <maximumMessageSize>81920000</maximumMessageSize>
        <!-- These are fields using MDC -->
        <mdcFields>mdcField1,mdcField2</mdcFields>
        <dynamicMdcFields>mdc.*,(mdc|MDC)fields</dynamicMdcFields>
        <includeFullMdc>true</includeFullMdc>
    </appender>

    <!-- INFO log -->
    <appender name="INFO" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${log.path}${file.separator}info.log</file>
        <encoder>
            <pattern>${log.pattern}</pattern>
        </encoder>
        <!-- Rolling policy: archive by date and size, gzip-compressed (.gz) -->
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${log.path}${file.separator}%d{yyyy-MM-dd,aux}${file.separator}info-%d{yyyy-MM-dd}.%i.log.gz</fileNamePattern>
            <!-- Days of archived logs to keep -->
            <maxHistory>${maxHistory}</maxHistory>
            <maxFileSize>${maxFileSize}</maxFileSize>
            <totalSizeCap>${totalSizeCap}</totalSizeCap>
        </rollingPolicy>
    </appender>

    <!-- WARN log -->
    <appender name="WARN" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${log.path}${file.separator}warn.log</file>
        <!-- Accept WARN events only -->
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>WARN</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <encoder>
            <pattern>${log.pattern}</pattern>
        </encoder>
        <!-- Rolling policy -->
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${log.path}${file.separator}%d{yyyy-MM-dd,aux}${file.separator}warn-%d{yyyy-MM-dd}.%i.log.gz</fileNamePattern>
            <!-- Days of archived logs to keep -->
            <maxHistory>${maxHistory}</maxHistory>
            <maxFileSize>${maxFileSize}</maxFileSize>
            <totalSizeCap>${totalSizeCap}</totalSizeCap>
        </rollingPolicy>
    </appender>

    <!-- ERROR log -->
    <appender name="ERROR" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${log.path}${file.separator}error.log</file>
        <!-- Accept ERROR events only -->
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>ERROR</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <encoder>
            <pattern>${log.pattern}</pattern>
        </encoder>
        <!-- Rolling policy -->
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${log.path}${file.separator}%d{yyyy-MM-dd,aux}${file.separator}error-%d{yyyy-MM-dd}.%i.log.gz</fileNamePattern>
            <!-- Days of archived logs to keep -->
            <maxHistory>${maxHistory}</maxHistory>
            <maxFileSize>${maxFileSize}</maxFileSize>
            <totalSizeCap>${totalSizeCap}</totalSizeCap>
        </rollingPolicy>
    </appender>

    <!-- Show parameters for Hibernate SQL (Hibernate only) -->
    <!--logger name="org.hibernate.type.descriptor.sql.BasicBinder" level="TRACE"/>
    <logger name="org.hibernate.type.descriptor.sql.BasicExtractor" level="DEBUG" /-->
    <logger name="org.hibernate.SQL" level="DEBUG"/>
    <logger name="org.hibernate.engine.QueryParameters" level="DEBUG"/>
    <logger name="org.hibernate.engine.query.HQLQueryPlan" level="DEBUG"/>

    <!-- Show parameters for JdbcTemplate SQL (JdbcTemplate only) -->
    <logger name="org.springframework.jdbc.core.JdbcTemplate" level="DEBUG"/>
    <!-- <logger name="org.springframework.jdbc.core.StatementCreatorUtils" level="TRACE" />-->

    <root level="INFO">
        <appender-ref ref="GELF"/>
        <appender-ref ref="STDOUT"/>
        <appender-ref ref="INFO"/>
        <appender-ref ref="ERROR"/>
        <appender-ref ref="WARN"/>
    </root>
</configuration>
```