I. Introduction

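Wan2.2-TI2V-5B is a lightweight, unified video generation model open-sourced in July 2025 by Alibaba's Tongyi Wanxiang team. It is a core member of the Wan2.2 series. It is built for efficient deployment and versatile generation, and it significantly lowers the technical barrier to producing cinematic-quality video.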

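1. Core Positioning and Highlights

Unified generation

- Multimodal input: a single model handles both text-to-video (Text-to-Video) and image+text-to-video (Image+Text-to-Video), which covers a wide range of creative needs.

- Cinematic aesthetic control: the Wan2.2 series can steer visual style across more than 60 aesthetic dimensions, such as lighting, color and composition, to produce footage with a cinematic look. On TI2V-5B this control is only partial and relies mainly on the prompt (see the comparison table below).

Lightweight design

The model has only 5B (5 billion) parameters, far fewer than the MoE models in the same series (for example, T2V-A14B has 27B total parameters). Its high-compression VAE still allows it to deliver strong output quality.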

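2. Architectural Innovations

High-compression 3D VAE

- Uses a 3D variational autoencoder with a spatiotemporal (T×H×W) compression ratio of 4×16×16. This raises the overall information compression rate to 64, compared with a 4×8×8 VAE in the previous-generation Wan2.1, and significantly reduces GPU memory usage.

- Benefit: generation efficiency improves substantially while 720P (24 fps) HD output quality is preserved.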
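To make the compression figures concrete, the sketch below computes the latent tensor shape for a clip and the resulting compression rate. It assumes the VAE maps 3 RGB channels to 48 latent channels, compresses time by 4 (with the first frame kept on its own, so frame counts are 4k + 1) and each spatial axis by 16. This appears to match how ComfyUI's `Wan22ImageToVideoLatent` node sizes its latent, but treat these constants as assumptions, not an official specification. Under them, 4×16×16×3 / 48 gives the rate of 64 quoted above. The 16-channel Wan2.1 figure is also an assumption.

```python
# Sketch: latent shape and compression rate of a Wan2.2-style 3D VAE.
# ASSUMED constants (not an official spec): 48 latent channels,
# temporal stride 4 (first frame encoded on its own), spatial stride 16.
LATENT_CHANNELS = 48
RGB_CHANNELS = 3
T_STRIDE, H_STRIDE, W_STRIDE = 4, 16, 16


def latent_shape(width: int, height: int, frames: int) -> tuple:
    """Return (channels, latent_frames, latent_height, latent_width)."""
    if frames < 1 or (frames - 1) % T_STRIDE != 0:
        raise ValueError(f"frames must have the form 4k + 1, got {frames}")
    if height % H_STRIDE or width % W_STRIDE:
        raise ValueError("width and height must be multiples of 16")
    return (
        LATENT_CHANNELS,
        (frames - 1) // T_STRIDE + 1,
        height // H_STRIDE,
        width // W_STRIDE,
    )


def compression_rate(latent_channels: int, t: int, h: int, w: int) -> float:
    """Pixel values per latent value: (t * h * w * 3) / latent_channels."""
    return t * h * w * RGB_CHANNELS / latent_channels


if __name__ == "__main__":
    print(latent_shape(1280, 704, 121))     # (48, 31, 44, 80)
    print(compression_rate(48, 4, 16, 16))  # 64.0, assumed Wan2.2 VAE
    print(compression_rate(16, 4, 8, 8))    # 48.0, assumed 16-channel Wan2.1 VAE
```

Under these assumptions, a 1280×704, 121-frame clip is denoised as a 31×44×80 latent grid, which is why the diffusion model can stay small.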

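Consumer hardware support

- Runs with about 22 GB of VRAM, for example on a single RTX 4090. A shared-memory (offloading) mode also lets it run on as little as 8 GB of VRAM, though more slowly.

- Generation time: a 5-second video takes only a few minutes. This makes it one of the fastest open-source 720P video generation options currently available.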
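A rough estimate of weight size helps explain these VRAM figures. The sketch below counts only model weights (parameters × bytes per parameter). Activations, the text encoder, the VAE and framework overhead all add to this, which is why real usage is higher. The bytes-per-dtype values are standard, and the parameter counts come from the text.

```python
# Rough estimate of raw weight memory only (no activations, no overhead).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weight_gib(num_params: float, dtype: str) -> float:
    """Size of the raw weights in GiB for the given storage dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


if __name__ == "__main__":
    # TI2V-5B diffusion model stored as fp16 (wan2.2_ti2v_5B_fp16.safetensors)
    print(f"TI2V-5B fp16: {weight_gib(5e9, 'fp16'):.1f} GiB")            # 9.3 GiB
    # A14B models: 27B total parameters across experts, in fp16
    print(f"A14B fp16 (27B total): {weight_gib(27e9, 'fp16'):.1f} GiB")  # 50.3 GiB
```

About 9.3 GiB of fp16 weights fits on a 24 GB card with room for activations. This also suggests why the 8 GB mode has to keep part of the model outside VRAM and is therefore slower.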

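3. Performance and Efficiency

| Metric | Specification / Performance | Advantage |
|---|---|---|
| Resolution & frame rate | 720P @ 24 fps | Smooth HD output |
| Clip length per run | 5 s | Suits short videos and storyboards |
| VRAM usage | 22 GB (recommended) / 8 GB (minimum) | Deployable on consumer GPUs |
| Generation speed | ≈2.5 min per 20 steps (RTX 4090) | More than 50% faster than the A14B models in the same series |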
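Duration and frame rate determine the `length` (frame count) value that the workflows below pass to `Wan22ImageToVideoLatent`. The minimal sketch below assumes that the VAE's temporal stride of 4 requires frame counts of the form 4k + 1. This is consistent with the 41 and 121 values used in the workflow files. It rounds a requested duration up to the nearest valid count.

```python
import math

FPS = 24       # frame rate from the table above
T_STRIDE = 4   # ASSUMED temporal compression of the Wan2.2 VAE


def frames_for(seconds: float, fps: int = FPS) -> int:
    """Smallest valid frame count (4k + 1) covering `seconds` of video."""
    raw = math.ceil(seconds * fps)
    k = max(math.ceil((raw - 1) / T_STRIDE), 0)
    return k * T_STRIDE + 1


def seconds_for(frames: int, fps: int = FPS) -> float:
    """Playback duration of a clip with `frames` frames."""
    return frames / fps


if __name__ == "__main__":
    print(frames_for(5))              # 121
    print(round(seconds_for(41), 2))  # 1.71
```

A 5 s clip therefore maps to `length` 121. The 41-frame default in two of the workflows below produces only about 1.7 s, which trades duration for a faster first result.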

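4. Wan2.2 Series: Core Models Compared

| Model | Wan2.2-T2V-A14B | Wan2.2-I2V-A14B | Wan2.2-TI2V-5B |
|---|---|---|---|
| Type | Text-to-Video | Image-to-Video | Unified video generation (Text/Image-to-Video) |
| Parameters | 27B total, 14B active | 27B total, 14B active | 5B (dense) |
| Core architecture | MoE (Mixture of Experts) | MoE | High-compression 3D VAE |
| Output quality | Cinematic (lighting / composition / micro-expressions) | Cinematic (strong detail refinement) | HD 720P (prioritizes smoothness) |
| Aesthetic control | ✅ 60+ fine-grained parameters | ✅ Same aesthetic control | ⚠️ Partial (prompt-dependent) |
| Hardware | High-end GPU (≥80 GB VRAM) | High-end GPU (≥80 GB VRAM) | Consumer GPU (22 GB VRAM) |
| Generation speed | ~15 min per 5 s video (high-end GPU) | Similar to T2V-A14B | ≤9 min per 5 s video (RTX 4090) |
| Open-source platforms | GitHub / Hugging Face / ModelScope | Same | Same |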

II. Local Deployment

This deployment uses ComfyUI as the local inference framework. The environment used:

| Component | Version |
|---|---|
| Python | 3.12 |
| PyTorch | 2.5.1 |
| CUDA | 12.1 |
| Ubuntu | 22.04 |
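Once the installation steps below are complete, a short script can confirm that the environment matches this table. The sketch uses only the standard `platform` module and standard PyTorch attributes (`torch.__version__`, `torch.version.cuda`, `torch.cuda.get_device_properties`). It still runs, reporting `torch: None`, when PyTorch is not yet installed.

```python
import platform
import sys


def environment_report() -> dict:
    """Collect versions to compare against the environment table."""
    report = {"python": platform.python_version(), "os": platform.platform()}
    try:
        import torch  # installed in step 2.2
    except ImportError:
        report["torch"] = None
        return report
    report["torch"] = torch.__version__
    report["cuda_build"] = torch.version.cuda  # CUDA version PyTorch was built with
    report["cuda_available"] = torch.cuda.is_available()
    if report["cuda_available"]:
        props = torch.cuda.get_device_properties(0)
        report["gpu"] = props.name
        report["vram_gib"] = round(props.total_memory / 1024**3, 1)
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key:15} {value}")
    if sys.version_info[:2] != (3, 12):
        print("note: the table above assumes Python 3.12")
```

Compare `vram_gib` with the roughly 22 GB recommended in Part I to decide whether to expect the slower low-VRAM mode.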

1. Install Miniconda

Step 1: Update the system

First, update the system packages:

```bash
sudo apt update
sudo apt upgrade -y
```

Step 2: Download the Miniconda installer

Download the latest installer (Python 3 build) from the official Miniconda website, or fetch it directly with:

```bash
wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh
```

Step 3: Verify the installer's integrity (optional)

Compute the SHA256 checksum of the downloaded installer:

```bash
sha256sum Miniconda3-latest-Linux-x86_64.sh
```

Compare the output with the SHA256 value listed for this file on https://repo.anaconda.com/miniconda/. If they match, the file has not been tampered with.
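The same comparison can be scripted so that it does not depend on reading 64 hex characters by eye. The minimal sketch below uses only `hashlib` and streams the file in chunks. The script name `verify_sha256.py` in the usage comment is hypothetical.

```python
import hashlib
import sys


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so large installers need little RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: str, expected_hex: str) -> bool:
    """Compare against a published hex digest, ignoring case and whitespace."""
    return sha256_of(path) == expected_hex.strip().lower()


if __name__ == "__main__" and len(sys.argv) == 3:
    # usage: python verify_sha256.py Miniconda3-latest-Linux-x86_64.sh <expected-sha256>
    ok = verify(sys.argv[1], sys.argv[2])
    print("OK" if ok else "MISMATCH: do not run this installer")
```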

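Step 4: Run the installer

Make the installer executable:

```bash
chmod +x Miniconda3-latest-Linux-x86_64.sh
```

Run the installer:

```bash
./Miniconda3-latest-Linux-x86_64.sh
```

Step 5: Follow the prompts to complete the installation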

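During installation you need to:

- Read the license agreement: press Enter to page through it, or press Q to skip to the end.

- Accept the license agreement: type yes and press Enter.

- Choose the installation path: the default is /home/<username>/miniconda3. Press Enter to accept it, or type a custom path.

- Initialize Miniconda: type yes to add Miniconda to your PATH environment variable.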

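Step 6: Activate the Miniconda environment

After installation, reload your shell configuration so the environment variables take effect:

```bash
source ~/.bashrc
```

Step 7: Verify the installation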

Check the conda version:

```bash
conda --version
```

Step 8: Update conda (recommended)

Update conda to get the latest features and fixes:

```bash
conda update conda
```

2. Deploy ComfyUI

2.1 Clone the repository

```bash
git clone https://github.com/comfyanonymous/ComfyUI.git
```

2.2 Install dependencies

- Create a conda virtual environment

```bash
conda create -n comfyenv python=3.12
conda activate comfyenv
```

- Install PyTorch

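```bash
# Versions pinned to match the environment table (PyTorch 2.5.1, CUDA 12.1)
conda install pytorch==2.5.1 torchvision==0.20.1 torchaudio==2.5.1 pytorch-cuda=12.1 -c pytorch -c nvidia
```

- Install ComfyUI's dependencies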

```bash
cd ComfyUI
pip install -r requirements.txt
```

- Install ComfyUI Manager (optional)

```bash
# Enter the custom nodes folder (the previous step left you in the ComfyUI root)
cd custom_nodes/
# Download ComfyUI Manager
git clone https://github.com/Comfy-Org/ComfyUI-Manager.git
```

3. Download the Wan2.2-TI2V-5B Model Files

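Download the Wan2.2-TI2V-5B files with ModelScope. Install its CLI first with `pip install modelscope` if needed. The required model files are:

- Diffusion model: wan2.2_ti2v_5B_fp16.safetensors
- Text encoder: umt5_xxl_fp8_e4m3fn_scaled.safetensors
- VAE: wan2.2_vae.safetensors

Put these three files into the matching directories of the ComfyUI checkout cloned earlier:

```text
ComfyUI/
└── 📂 models/
    ├── 📂 diffusion_models/
    │   └── wan2.2_ti2v_5B_fp16.safetensors
    ├── 📂 text_encoders/
    │   └── umt5_xxl_fp8_e4m3fn_scaled.safetensors
    └── 📂 vae/
        └── wan2.2_vae.safetensors
```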
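After downloading with the commands below, a short script can confirm that each file sits where ComfyUI's loader nodes expect it. This sketch is based on the tree above. It also assumes, as a hedge, that a download tool may keep the repository's subfolders (for example `split_files/...`), so it searches each target folder recursively and reports files that are present but nested.

```python
import sys
from pathlib import Path

# Expected layout from the tree above (relative to the ComfyUI root).
EXPECTED = {
    "diffusion_models": "wan2.2_ti2v_5B_fp16.safetensors",
    "text_encoders": "umt5_xxl_fp8_e4m3fn_scaled.safetensors",
    "vae": "wan2.2_vae.safetensors",
}


def check_models(comfy_root: str) -> dict:
    """Map each file name to 'ok', 'nested: <path>' or 'missing'."""
    models = Path(comfy_root) / "models"
    status = {}
    for folder, name in EXPECTED.items():
        folder_path = models / folder
        if (folder_path / name).is_file():
            status[name] = "ok"
            continue
        # The file may have landed in a sub-folder kept from the repo layout.
        candidates = folder_path.rglob(name) if folder_path.is_dir() else []
        found = next((p for p in candidates if p.is_file()), None)
        status[name] = f"nested: {found}" if found else "missing"
    return status


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"
    for name, state in check_models(root).items():
        print(f"{state}  {name}")
```

Any file reported as `nested` should be moved directly into its target folder so that the names in the workflows below resolve.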

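Download commands. The `--local_dir` paths can be changed; the ones below assume you run the commands from the directory that contains `ComfyUI/`.

wan2.2_ti2v_5B_fp16.safetensors:

```bash
modelscope download --model Comfy-Org/Wan_2.2_ComfyUI_Repackaged --include '*/wan2.2_ti2v_5B_fp16.safetensors' --local_dir ComfyUI/models/diffusion_models/
```

umt5_xxl_fp8_e4m3fn_scaled.safetensors:

```bash
modelscope download --model Comfy-Org/Wan_2.2_ComfyUI_Repackaged --include '*/umt5_xxl_fp8_e4m3fn_scaled.safetensors' --local_dir ComfyUI/models/text_encoders/
```

wan2.2_vae.safetensors:

```bash
modelscope download --model Comfy-Org/Wan_2.2_ComfyUI_Repackaged --include '*/wan2.2_vae.safetensors' --local_dir ComfyUI/models/vae/
```

Note: the download may keep the repository's folder structure, which places each file in a `split_files/...` subfolder under `--local_dir`. If so, move the file directly into the target directory shown in the tree above.

4. Launch ComfyUI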

之前下载的 comfyUI 根目录应该有 main.py 文件。

css

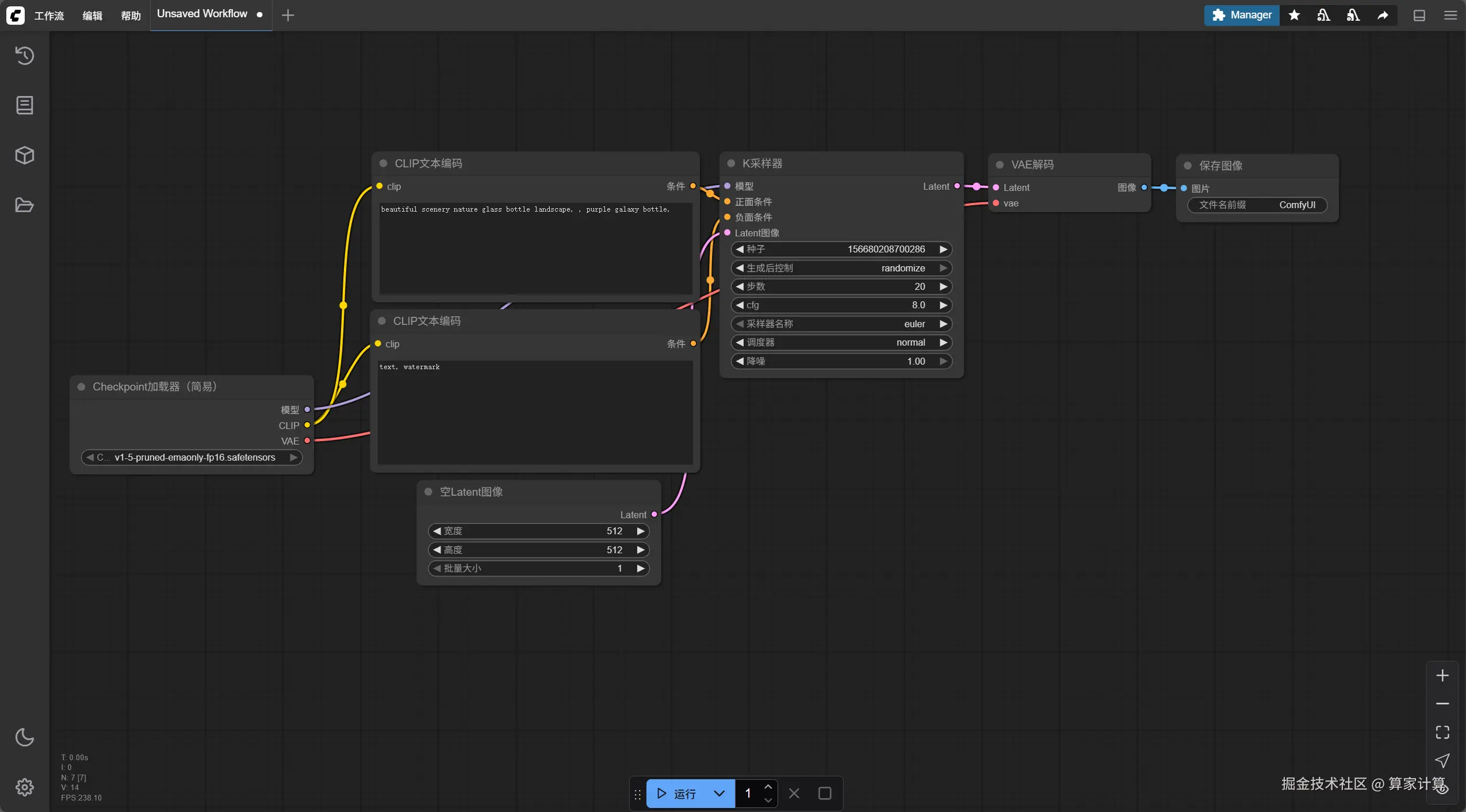

python main.py运行 main.py 文件 之后根据控制台显示的 url 在浏览器输入该url网址进入 ComfyUI 的 GUI 界面:

css

To see the GUI go to: http://127.0.0.1:8188如图:

5.使用 Wan2.2-TI2V-5B 工作流

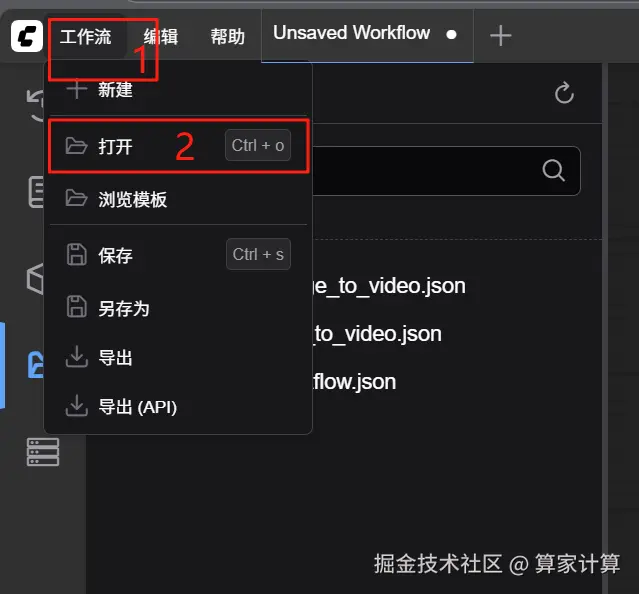

依次点击 工作流 -> 打开,即可在电脑本地导入工作流 josn 文件。

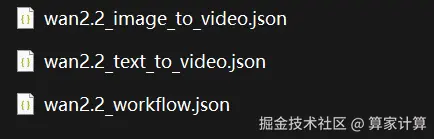

选择导入:

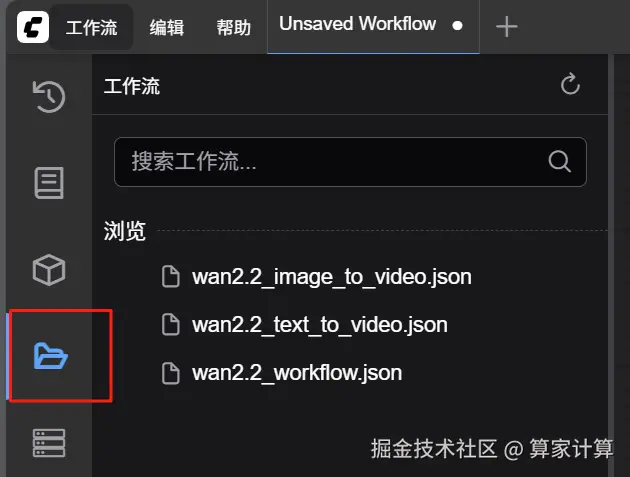

完成,工作流文件在以下红色框住的位置点击后出现。然后选取想使用的 Wan2.2-TI2V-5B 工作流

注: 当然也可以自主定制 工作流,后面放出三个工作流 json 文件内容作为参考。
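Before you import a hand-edited workflow, it can help to check that its links are consistent. The sketch below reads the UI-format JSON shown below, which has top-level `nodes` and `links`. It reports dangling or unregistered links and lists the model files the loader nodes expect. The link layout `[link_id, from_node, from_slot, to_node, to_slot, type]` is inferred from these files rather than taken from a formal ComfyUI schema.

```python
import json
import sys

# Loader node types whose first widget value is a model file name (as in the files below).
LOADER_TYPES = {"UNETLoader", "CLIPLoader", "VAELoader"}


def check_workflow(workflow: dict) -> tuple:
    """Return (problems, model_files) for a UI-format workflow dict."""
    nodes = {node["id"]: node for node in workflow["nodes"]}
    problems = []
    for link_id, src, _src_slot, dst, dst_slot, kind in workflow["links"]:
        if src not in nodes or dst not in nodes:
            problems.append(f"link {link_id} references a missing node")
            continue
        inputs = nodes[dst].get("inputs", [])
        # The target input slot should point back at this link id.
        if dst_slot >= len(inputs) or inputs[dst_slot].get("link") != link_id:
            problems.append(f"link {link_id} ({kind}) not registered on node {dst} slot {dst_slot}")
    models = [
        node["widgets_values"][0]
        for node in workflow["nodes"]
        if node["type"] in LOADER_TYPES and node.get("widgets_values")
    ]
    return problems, models


if __name__ == "__main__" and len(sys.argv) == 2:
    with open(sys.argv[1], encoding="utf-8") as f:
        problems, models = check_workflow(json.load(f))
    print("models:", ", ".join(models))
    print("\n".join(problems) or "links OK")
```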

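Wan2.2-TI2V-5B workflow files

wan2.2_image_to_video.json (image+text-to-video at 1280×704 with 41 frames; the LoadImage node references fennec_girl_hug.png, so load your own start image there)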

```json
{
  "id": "91f6bbe2-ed41-4fd6-bac7-71d5b5864ecb",
  "revision": 0,
  "last_node_id": 57,
  "last_link_id": 106,
  "nodes": [
    {
      "id": 8,
      "type": "VAEDecode",
      "pos": [1210, 190],
      "size": [210, 46],
      "flags": {},
      "order": 10,
      "mode": 0,
      "inputs": [
        { "name": "samples", "type": "LATENT", "link": 35 },
        { "name": "vae", "type": "VAE", "link": 76 }
      ],
      "outputs": [
        { "name": "IMAGE", "type": "IMAGE", "slot_index": 0, "links": [56, 93] }
      ],
      "properties": { "Node name for S&R": "VAEDecode" },
      "widgets_values": []
    },
    {
      "id": 7,
      "type": "CLIPTextEncode",
      "pos": [413, 389],
      "size": [425.27801513671875, 180.6060791015625],
      "flags": {},
      "order": 6,
      "mode": 0,
      "inputs": [
        { "name": "clip", "type": "CLIP", "link": 75 }
      ],
      "outputs": [
        { "name": "CONDITIONING", "type": "CONDITIONING", "slot_index": 0, "links": [52] }
      ],
      "title": "CLIP Text Encode (Negative Prompt)",
      "properties": { "Node name for S&R": "CLIPTextEncode" },
      "widgets_values": [
        "色调艳丽,过曝,静态,细节模糊不清,字幕,风格,作品,画作,画面,静止,整体发灰,最差质量,低质量,JPEG压缩残留,丑陋的,残缺的,多余的手指,画得不好的手部,画得不好的脸部,畸形的,毁容的,形态畸形的肢体,手指融合,静止不动的画面,杂乱的背景,三条腿,背景人很多,倒着走"
      ],
      "color": "#322",
      "bgcolor": "#533"
    },
    {
      "id": 3,
      "type": "KSampler",
      "pos": [863, 187],
      "size": [315, 262],
      "flags": {},
      "order": 9,
      "mode": 0,
      "inputs": [
        { "name": "model", "type": "MODEL", "link": 95 },
        { "name": "positive", "type": "CONDITIONING", "link": 46 },
        { "name": "negative", "type": "CONDITIONING", "link": 52 },
        { "name": "latent_image", "type": "LATENT", "link": 104 }
      ],
      "outputs": [
        { "name": "LATENT", "type": "LATENT", "slot_index": 0, "links": [35] }
      ],
      "properties": { "Node name for S&R": "KSampler" },
      "widgets_values": [869177064731501, "randomize", 30, 5, "uni_pc", "simple", 1]
    },
    {
      "id": 28,
      "type": "SaveAnimatedWEBP",
      "pos": [1460, 190],
      "size": [870.8511352539062, 648.4141235351562],
      "flags": {},
      "order": 11,
      "mode": 0,
      "inputs": [
        { "name": "images", "type": "IMAGE", "link": 56 }
      ],
      "outputs": [],
      "properties": {},
      "widgets_values": ["ComfyUI", 24.000000000000004, false, 90, "default"]
    },
    {
      "id": 39,
      "type": "VAELoader",
      "pos": [20, 340],
      "size": [330, 60],
      "flags": {},
      "order": 0,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "VAE", "type": "VAE", "slot_index": 0, "links": [76, 105] }
      ],
      "properties": { "Node name for S&R": "VAELoader" },
      "widgets_values": ["wan2.2_vae.safetensors"],
      "color": "#223",
      "bgcolor": "#335"
    },
    {
      "id": 38,
      "type": "CLIPLoader",
      "pos": [20, 190],
      "size": [380, 106],
      "flags": {},
      "order": 1,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "CLIP", "type": "CLIP", "slot_index": 0, "links": [74, 75] }
      ],
      "properties": { "Node name for S&R": "CLIPLoader" },
      "widgets_values": ["umt5_xxl_fp8_e4m3fn_scaled.safetensors", "wan", "default"],
      "color": "#223",
      "bgcolor": "#335"
    },
    {
      "id": 48,
      "type": "ModelSamplingSD3",
      "pos": [440, 60],
      "size": [210, 58],
      "flags": {},
      "order": 7,
      "mode": 0,
      "inputs": [
        { "name": "model", "type": "MODEL", "link": 94 }
      ],
      "outputs": [
        { "name": "MODEL", "type": "MODEL", "slot_index": 0, "links": [95] }
      ],
      "properties": { "Node name for S&R": "ModelSamplingSD3" },
      "widgets_values": [8.000000000000002]
    },
    {
      "id": 37,
      "type": "UNETLoader",
      "pos": [20, 60],
      "size": [346.7470703125, 82],
      "flags": {},
      "order": 2,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "MODEL", "type": "MODEL", "slot_index": 0, "links": [94] }
      ],
      "properties": { "Node name for S&R": "UNETLoader" },
      "widgets_values": ["wan2.2_ti2v_5B_fp16.safetensors", "default"],
      "color": "#223",
      "bgcolor": "#335"
    },
    {
      "id": 47,
      "type": "SaveWEBM",
      "pos": [2367.213134765625, 193.6114959716797],
      "size": [670, 650],
      "flags": {},
      "order": 12,
      "mode": 0,
      "inputs": [
        { "name": "images", "type": "IMAGE", "link": 93 }
      ],
      "outputs": [],
      "properties": { "Node name for S&R": "SaveWEBM" },
      "widgets_values": ["ComfyUI", "vp9", 24, 16.111083984375]
    },
    {
      "id": 57,
      "type": "LoadImage",
      "pos": [87.407958984375, 620.4816284179688],
      "size": [274.080078125, 314],
      "flags": {},
      "order": 3,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "IMAGE", "type": "IMAGE", "links": [106] },
        { "name": "MASK", "type": "MASK", "links": null }
      ],
      "properties": { "Node name for S&R": "LoadImage" },
      "widgets_values": ["fennec_girl_hug.png", "image"]
    },
    {
      "id": 56,
      "type": "Note",
      "pos": [710.781005859375, 608.9545288085938],
      "size": [320.9936218261719, 182.6057586669922],
      "flags": {},
      "order": 4,
      "mode": 0,
      "inputs": [],
      "outputs": [],
      "properties": {},
      "widgets_values": [
        "Optimal resolution is: 1280x704 length 121\n\nThe reason it's lower in this workflow is just because I didn't want you to wait too long to get an initial video.\n\nTo get image to video just plug in a start image. For text to video just don't give it a start image."
      ],
      "color": "#432",
      "bgcolor": "#653"
    },
    {
      "id": 55,
      "type": "Wan22ImageToVideoLatent",
      "pos": [420, 610],
      "size": [271.9126892089844, 150],
      "flags": {},
      "order": 8,
      "mode": 0,
      "inputs": [
        { "name": "vae", "type": "VAE", "link": 105 },
        { "name": "start_image", "shape": 7, "type": "IMAGE", "link": 106 }
      ],
      "outputs": [
        { "name": "LATENT", "type": "LATENT", "links": [104] }
      ],
      "properties": { "Node name for S&R": "Wan22ImageToVideoLatent" },
      "widgets_values": [1280, 704, 41, 1]
    },
    {
      "id": 6,
      "type": "CLIPTextEncode",
      "pos": [415, 186],
      "size": [422.84503173828125, 164.31304931640625],
      "flags": {},
      "order": 5,
      "mode": 0,
      "inputs": [
        { "name": "clip", "type": "CLIP", "link": 74 }
      ],
      "outputs": [
        { "name": "CONDITIONING", "type": "CONDITIONING", "slot_index": 0, "links": [46] }
      ],
      "title": "CLIP Text Encode (Positive Prompt)",
      "properties": { "Node name for S&R": "CLIPTextEncode" },
      "widgets_values": [
        "a cute anime girl with fennec ears and a fluffy tail walking in a beautiful field"
      ],
      "color": "#232",
      "bgcolor": "#353"
    }
  ],
  "links": [
    [35, 3, 0, 8, 0, "LATENT"],
    [46, 6, 0, 3, 1, "CONDITIONING"],
    [52, 7, 0, 3, 2, "CONDITIONING"],
    [56, 8, 0, 28, 0, "IMAGE"],
    [74, 38, 0, 6, 0, "CLIP"],
    [75, 38, 0, 7, 0, "CLIP"],
    [76, 39, 0, 8, 1, "VAE"],
    [93, 8, 0, 47, 0, "IMAGE"],
    [94, 37, 0, 48, 0, "MODEL"],
    [95, 48, 0, 3, 0, "MODEL"],
    [104, 55, 0, 3, 3, "LATENT"],
    [105, 39, 0, 55, 0, "VAE"],
    [106, 57, 0, 55, 1, "IMAGE"]
  ],
  "groups": [],
  "config": {},
  "extra": {
    "ds": {
      "scale": 1.1167815779425287,
      "offset": [3.5210927484772534, -9.231468990407302]
    },
    "frontendVersion": "1.23.4"
  },
  "version": 0.4
}
```

wan2.2_text_to_video.json (text-to-video: the same graph without the LoadImage node, so the `start_image` input of Wan22ImageToVideoLatent is left unconnected)

```json
{
  "id": "91f6bbe2-ed41-4fd6-bac7-71d5b5864ecb",
  "revision": 0,
  "last_node_id": 57,
  "last_link_id": 106,
  "nodes": [
    {
      "id": 8,
      "type": "VAEDecode",
      "pos": [1210, 190],
      "size": [210, 46],
      "flags": {},
      "order": 9,
      "mode": 0,
      "inputs": [
        { "name": "samples", "type": "LATENT", "link": 35 },
        { "name": "vae", "type": "VAE", "link": 76 }
      ],
      "outputs": [
        { "name": "IMAGE", "type": "IMAGE", "slot_index": 0, "links": [56, 93] }
      ],
      "properties": { "Node name for S&R": "VAEDecode" },
      "widgets_values": []
    },
    {
      "id": 7,
      "type": "CLIPTextEncode",
      "pos": [413, 389],
      "size": [425.27801513671875, 180.6060791015625],
      "flags": {},
      "order": 6,
      "mode": 0,
      "inputs": [
        { "name": "clip", "type": "CLIP", "link": 75 }
      ],
      "outputs": [
        { "name": "CONDITIONING", "type": "CONDITIONING", "slot_index": 0, "links": [52] }
      ],
      "title": "CLIP Text Encode (Negative Prompt)",
      "properties": { "Node name for S&R": "CLIPTextEncode" },
      "widgets_values": [
        "色调艳丽,过曝,静态,细节模糊不清,字幕,风格,作品,画作,画面,静止,整体发灰,最差质量,低质量,JPEG压缩残留,丑陋的,残缺的,多余的手指,画得不好的手部,画得不好的脸部,畸形的,毁容的,形态畸形的肢体,手指融合,静止不动的画面,杂乱的背景,三条腿,背景人很多,倒着走"
      ],
      "color": "#322",
      "bgcolor": "#533"
    },
    {
      "id": 3,
      "type": "KSampler",
      "pos": [863, 187],
      "size": [315, 262],
      "flags": {},
      "order": 8,
      "mode": 0,
      "inputs": [
        { "name": "model", "type": "MODEL", "link": 95 },
        { "name": "positive", "type": "CONDITIONING", "link": 46 },
        { "name": "negative", "type": "CONDITIONING", "link": 52 },
        { "name": "latent_image", "type": "LATENT", "link": 104 }
      ],
      "outputs": [
        { "name": "LATENT", "type": "LATENT", "slot_index": 0, "links": [35] }
      ],
      "properties": { "Node name for S&R": "KSampler" },
      "widgets_values": [285741127119524, "randomize", 30, 5, "uni_pc", "simple", 1]
    },
    {
      "id": 39,
      "type": "VAELoader",
      "pos": [20, 340],
      "size": [330, 60],
      "flags": {},
      "order": 0,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "VAE", "type": "VAE", "slot_index": 0, "links": [76, 105] }
      ],
      "properties": { "Node name for S&R": "VAELoader" },
      "widgets_values": ["wan2.2_vae.safetensors"],
      "color": "#223",
      "bgcolor": "#335"
    },
    {
      "id": 38,
      "type": "CLIPLoader",
      "pos": [20, 190],
      "size": [380, 106],
      "flags": {},
      "order": 1,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "CLIP", "type": "CLIP", "slot_index": 0, "links": [74, 75] }
      ],
      "properties": { "Node name for S&R": "CLIPLoader" },
      "widgets_values": ["umt5_xxl_fp8_e4m3fn_scaled.safetensors", "wan", "default"],
      "color": "#223",
      "bgcolor": "#335"
    },
    {
      "id": 48,
      "type": "ModelSamplingSD3",
      "pos": [440, 60],
      "size": [210, 58],
      "flags": {},
      "order": 7,
      "mode": 0,
      "inputs": [
        { "name": "model", "type": "MODEL", "link": 94 }
      ],
      "outputs": [
        { "name": "MODEL", "type": "MODEL", "slot_index": 0, "links": [95] }
      ],
      "properties": { "Node name for S&R": "ModelSamplingSD3" },
      "widgets_values": [8.000000000000002]
    },
    {
      "id": 37,
      "type": "UNETLoader",
      "pos": [20, 60],
      "size": [346.7470703125, 82],
      "flags": {},
      "order": 2,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "MODEL", "type": "MODEL", "slot_index": 0, "links": [94] }
      ],
      "properties": { "Node name for S&R": "UNETLoader" },
      "widgets_values": ["wan2.2_ti2v_5B_fp16.safetensors", "default"],
      "color": "#223",
      "bgcolor": "#335"
    },
    {
      "id": 47,
      "type": "SaveWEBM",
      "pos": [2367.213134765625, 193.6114959716797],
      "size": [670, 650],
      "flags": {},
      "order": 11,
      "mode": 0,
      "inputs": [
        { "name": "images", "type": "IMAGE", "link": 93 }
      ],
      "outputs": [],
      "properties": { "Node name for S&R": "SaveWEBM" },
      "widgets_values": ["ComfyUI", "vp9", 24, 16.111083984375]
    },
    {
      "id": 56,
      "type": "Note",
      "pos": [710.781005859375, 608.9545288085938],
      "size": [320.9936218261719, 182.6057586669922],
      "flags": {},
      "order": 3,
      "mode": 0,
      "inputs": [],
      "outputs": [],
      "properties": {},
      "widgets_values": [
        "Optimal resolution is: 1280x704 length 121\n\nThe reason it's lower in this workflow is just because I didn't want you to wait too long to get an initial video.\n\nTo get image to video just plug in a start image. For text to video just don't give it a start image."
      ],
      "color": "#432",
      "bgcolor": "#653"
    },
    {
      "id": 55,
      "type": "Wan22ImageToVideoLatent",
      "pos": [420, 610],
      "size": [271.9126892089844, 150],
      "flags": {},
      "order": 4,
      "mode": 0,
      "inputs": [
        { "name": "vae", "type": "VAE", "link": 105 },
        { "name": "start_image", "shape": 7, "type": "IMAGE", "link": null }
      ],
      "outputs": [
        { "name": "LATENT", "type": "LATENT", "links": [104] }
      ],
      "properties": { "Node name for S&R": "Wan22ImageToVideoLatent" },
      "widgets_values": [1280, 704, 41, 1]
    },
    {
      "id": 6,
      "type": "CLIPTextEncode",
      "pos": [415, 186],
      "size": [422.84503173828125, 164.31304931640625],
      "flags": {},
      "order": 5,
      "mode": 0,
      "inputs": [
        { "name": "clip", "type": "CLIP", "link": 74 }
      ],
      "outputs": [
        { "name": "CONDITIONING", "type": "CONDITIONING", "slot_index": 0, "links": [46] }
      ],
      "title": "CLIP Text Encode (Positive Prompt)",
      "properties": { "Node name for S&R": "CLIPTextEncode" },
      "widgets_values": ["drone shot of a volcano erupting with a fox walking on it"],
      "color": "#232",
      "bgcolor": "#353"
    },
    {
      "id": 28,
      "type": "SaveAnimatedWEBP",
      "pos": [1460, 190],
      "size": [870.8511352539062, 648.4141235351562],
      "flags": {},
      "order": 10,
      "mode": 0,
      "inputs": [
        { "name": "images", "type": "IMAGE", "link": 56 }
      ],
      "outputs": [],
      "properties": {},
      "widgets_values": ["ComfyUI", 24.000000000000004, false, 80, "default"]
    }
  ],
  "links": [
    [35, 3, 0, 8, 0, "LATENT"],
    [46, 6, 0, 3, 1, "CONDITIONING"],
    [52, 7, 0, 3, 2, "CONDITIONING"],
    [56, 8, 0, 28, 0, "IMAGE"],
    [74, 38, 0, 6, 0, "CLIP"],
    [75, 38, 0, 7, 0, "CLIP"],
    [76, 39, 0, 8, 1, "VAE"],
    [93, 8, 0, 47, 0, "IMAGE"],
    [94, 37, 0, 48, 0, "MODEL"],
    [95, 48, 0, 3, 0, "MODEL"],
    [104, 55, 0, 3, 3, "LATENT"],
    [105, 39, 0, 55, 0, "VAE"]
  ],
  "groups": [],
  "config": {},
  "extra": {
    "ds": {
      "scale": 1.11678157794253,
      "offset": [7.041966347099882, -19.733042401058505]
    },
    "frontendVersion": "1.23.4"
  },
  "version": 0.4
}
```

wan2.2_workflow.json (1280×704, 121 frames, 20 sampling steps, output saved as a video file; the LoadImage node is bypassed by default, so the workflow runs text-to-video. As its group title "For i2v, use Ctrl + B to enable" says, select the node and press Ctrl+B to switch to image-to-video.)

```json
{
  "id": "91f6bbe2-ed41-4fd6-bac7-71d5b5864ecb",
  "revision": 0,
  "last_node_id": 59,
  "last_link_id": 108,
  "nodes": [
    {
      "id": 37,
      "type": "UNETLoader",
      "pos": [-30, 50],
      "size": [346.7470703125, 82],
      "flags": {},
      "order": 0,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "MODEL", "type": "MODEL", "slot_index": 0, "links": [94] }
      ],
      "properties": {
        "Node name for S&R": "UNETLoader",
        "cnr_id": "comfy-core",
        "ver": "0.3.45",
        "models": [
          {
            "name": "wan2.2_ti2v_5B_fp16.safetensors",
            "url": "https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/resolve/main/split_files/diffusion_models/wan2.2_ti2v_5B_fp16.safetensors",
            "directory": "diffusion_models"
          }
        ]
      },
      "widgets_values": ["wan2.2_ti2v_5B_fp16.safetensors", "default"]
    },
    {
      "id": 38,
      "type": "CLIPLoader",
      "pos": [-30, 190],
      "size": [350, 110],
      "flags": {},
      "order": 1,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "CLIP", "type": "CLIP", "slot_index": 0, "links": [74, 75] }
      ],
      "properties": {
        "Node name for S&R": "CLIPLoader",
        "cnr_id": "comfy-core",
        "ver": "0.3.45",
        "models": [
          {
            "name": "umt5_xxl_fp8_e4m3fn_scaled.safetensors",
            "url": "https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/text_encoders/umt5_xxl_fp8_e4m3fn_scaled.safetensors",
            "directory": "text_encoders"
          }
        ]
      },
      "widgets_values": ["umt5_xxl_fp8_e4m3fn_scaled.safetensors", "wan", "default"]
    },
    {
      "id": 39,
      "type": "VAELoader",
      "pos": [-30, 350],
      "size": [350, 60],
      "flags": {},
      "order": 2,
      "mode": 0,
      "inputs": [],
      "outputs": [
        { "name": "VAE", "type": "VAE", "slot_index": 0, "links": [76, 105] }
      ],
      "properties": {
        "Node name for S&R": "VAELoader",
        "cnr_id": "comfy-core",
        "ver": "0.3.45",
        "models": [
          {
            "name": "wan2.2_vae.safetensors",
            "url": "https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/resolve/main/split_files/vae/wan2.2_vae.safetensors",
            "directory": "vae"
          }
        ]
      },
      "widgets_values": ["wan2.2_vae.safetensors"]
    },
    {
      "id": 8,
      "type": "VAEDecode",
      "pos": [1190, 150],
      "size": [210, 46],
      "flags": {},
      "order": 10,
      "mode": 0,
      "inputs": [
        { "name": "samples", "type": "LATENT", "link": 35 },
        { "name": "vae", "type": "VAE", "link": 76 }
      ],
      "outputs": [
        { "name": "IMAGE", "type": "IMAGE", "slot_index": 0, "links": [107] }
      ],
      "properties": { "Node name for S&R": "VAEDecode", "cnr_id": "comfy-core", "ver": "0.3.45" },
      "widgets_values": []
    },
    {
      "id": 57,
      "type": "CreateVideo",
      "pos": [1200, 240],
      "size": [270, 78],
      "flags": {},
      "order": 11,
      "mode": 0,
      "inputs": [
        { "name": "images", "type": "IMAGE", "link": 107 },
        { "name": "audio", "shape": 7, "type": "AUDIO", "link": null }
      ],
      "outputs": [
        { "name": "VIDEO", "type": "VIDEO", "links": [108] }
      ],
      "properties": { "Node name for S&R": "CreateVideo", "cnr_id": "comfy-core", "ver": "0.3.45" },
      "widgets_values": [24]
    },
    {
      "id": 58,
      "type": "SaveVideo",
      "pos": [1200, 370],
      "size": [660, 450],
      "flags": {},
      "order": 12,
      "mode": 0,
      "inputs": [
        { "name": "video", "type": "VIDEO", "link": 108 }
      ],
      "outputs": [],
      "properties": { "Node name for S&R": "SaveVideo", "cnr_id": "comfy-core", "ver": "0.3.45" },
      "widgets_values": ["video/ComfyUI", "auto", "auto"]
    },
    {
      "id": 55,
      "type": "Wan22ImageToVideoLatent",
      "pos": [380, 540],
      "size": [271.9126892089844, 150],
      "flags": {},
      "order": 8,
      "mode": 0,
      "inputs": [
        { "name": "vae", "type": "VAE", "link": 105 },
        { "name": "start_image", "shape": 7, "type": "IMAGE", "link": 106 }
      ],
      "outputs": [
        { "name": "LATENT", "type": "LATENT", "links": [104] }
      ],
      "properties": { "Node name for S&R": "Wan22ImageToVideoLatent", "cnr_id": "comfy-core", "ver": "0.3.45" },
      "widgets_values": [1280, 704, 121, 1]
    },
    {
      "id": 56,
      "type": "LoadImage",
      "pos": [0, 540],
      "size": [274.080078125, 314],
      "flags": {},
      "order": 3,
      "mode": 4,
      "inputs": [],
      "outputs": [
        { "name": "IMAGE", "type": "IMAGE", "links": [106] },
        { "name": "MASK", "type": "MASK", "links": null }
      ],
      "properties": { "Node name for S&R": "LoadImage", "cnr_id": "comfy-core", "ver": "0.3.45" },
      "widgets_values": ["example.png", "image"]
    },
    {
      "id": 7,
      "type": "CLIPTextEncode",
      "pos": [380, 260],
      "size": [425.27801513671875, 180.6060791015625],
      "flags": {},
      "order": 7,
      "mode": 0,
      "inputs": [
        { "name": "clip", "type": "CLIP", "link": 75 }
      ],
      "outputs": [
        { "name": "CONDITIONING", "type": "CONDITIONING", "slot_index": 0, "links": [52] }
      ],
      "title": "CLIP Text Encode (Negative Prompt)",
      "properties": { "Node name for S&R": "CLIPTextEncode", "cnr_id": "comfy-core", "ver": "0.3.45" },
      "widgets_values": [
        "色调艳丽,过曝,静态,细节模糊不清,字幕,风格,作品,画作,画面,静止,整体发灰,最差质量,低质量,JPEG压缩残留,丑陋的,残缺的,多余的手指,画得不好的手部,画得不好的脸部,畸形的,毁容的,形态畸形的肢体,手指融合,静止不动的画面,杂乱的背景,三条腿,背景人很多,倒着走"
      ],
      "color": "#322",
      "bgcolor": "#533"
    },
    {
      "id": 59,
      "type": "MarkdownNote",
      "pos": [-550, 10],
      "size": [480, 340],
      "flags": {},
      "order": 4,
      "mode": 0,
      "inputs": [],
      "outputs": [],
      "title": "Model Links",
      "properties": {},
      "widgets_values": [
        "[Tutorial](https://docs.comfy.org/tutorials/video/wan/wan2_2\n) | [教程](https://docs.comfy.org/zh-CN/tutorials/video/wan/wan2_2\n)\n\n**Diffusion Model**\n- [wan2.2_ti2v_5B_fp16.safetensors](https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/resolve/main/split_files/diffusion_models/wan2.2_ti2v_5B_fp16.safetensors)\n\n**VAE**\n- [wan2.2_vae.safetensors](https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/resolve/main/split_files/vae/wan2.2_vae.safetensors)\n\n**Text Encoder** \n- [umt5_xxl_fp8_e4m3fn_scaled.safetensors](https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/text_encoders/umt5_xxl_fp8_e4m3fn_scaled.safetensors)\n\n\nFile save location\n\n```\nComfyUI/\n├───📂 models/\n│ ├───📂 diffusion_models/\n│ │ └───wan2.2_ti2v_5B_fp16.safetensors\n│ ├───📂 text_encoders/\n│ │ └─── umt5_xxl_fp8_e4m3fn_scaled.safetensors \n│ └───📂 vae/\n│ └── wan2.2_vae.safetensors\n```\n"
      ],
      "color": "#432",
      "bgcolor": "#653"
    },
    {
      "id": 6,
      "type": "CLIPTextEncode",
      "pos": [380, 50],
      "size": [422.84503173828125, 164.31304931640625],
      "flags": {},
      "order": 6,
      "mode": 0,
      "inputs": [
        { "name": "clip", "type": "CLIP", "link": 74 }
      ],
      "outputs": [
        { "name": "CONDITIONING", "type": "CONDITIONING", "slot_index": 0, "links": [46] }
      ],
      "title": "CLIP Text Encode (Positive Prompt)",
      "properties": { "Node name for S&R": "CLIPTextEncode", "cnr_id": "comfy-core", "ver": "0.3.45" },
      "widgets_values": [
        "Low contrast. In a retro 1970s-style subway station, a street musician plays in dim colors and rough textures. He wears an old jacket, playing guitar with focus. Commuters hurry by, and a small crowd gathers to listen. The camera slowly moves right, capturing the blend of music and city noise, with old subway signs and mottled walls in the background."
      ],
      "color": "#232",
      "bgcolor": "#353"
    },
    {
      "id": 3,
      "type": "KSampler",
      "pos": [850, 130],
      "size": [315, 262],
      "flags": {},
      "order": 9,
      "mode": 0,
      "inputs": [
        { "name": "model", "type": "MODEL", "link": 95 },
        { "name": "positive", "type": "CONDITIONING", "link": 46 },
        { "name": "negative", "type": "CONDITIONING", "link": 52 },
        { "name": "latent_image", "type": "LATENT", "link": 104 }
      ],
      "outputs": [
        { "name": "LATENT", "type": "LATENT", "slot_index": 0, "links": [35] }
      ],
      "properties": { "Node name for S&R": "KSampler", "cnr_id": "comfy-core", "ver": "0.3.45" },
      "widgets_values": [898471028164125, "randomize", 20, 5, "uni_pc", "simple", 1]
    },
    {
      "id": 48,
      "type": "ModelSamplingSD3",
      "pos": [850, 20],
      "size": [210, 58],
      "flags": { "collapsed": false },
      "order": 5,
      "mode": 0,
      "inputs": [
        { "name": "model", "type": "MODEL", "link": 94 }
      ],
      "outputs": [
        { "name": "MODEL", "type": "MODEL", "slot_index": 0, "links": [95] }
      ],
      "properties": { "Node name for S&R": "ModelSamplingSD3", "cnr_id": "comfy-core", "ver": "0.3.45" },
      "widgets_values": [8]
    }
  ],
  "links": [
    [35, 3, 0, 8, 0, "LATENT"],
    [46, 6, 0, 3, 1, "CONDITIONING"],
    [52, 7, 0, 3, 2, "CONDITIONING"],
    [74, 38, 0, 6, 0, "CLIP"],
    [75, 38, 0, 7, 0, "CLIP"],
    [76, 39, 0, 8, 1, "VAE"],
    [94, 37, 0, 48, 0, "MODEL"],
    [95, 48, 0, 3, 0, "MODEL"],
    [104, 55, 0, 3, 3, "LATENT"],
    [105, 39, 0, 55, 0, "VAE"],
    [106, 56, 0, 55, 1, "IMAGE"],
    [107, 8, 0, 57, 0, "IMAGE"],
    [108, 57, 0, 58, 0, "VIDEO"]
  ],
  "groups": [
    {
      "id": 1,
      "title": "Step1 - Load models",
      "bounding": [-50, -20, 400, 453.6000061035156],
      "color": "#3f789e",
      "font_size": 24,
      "flags": {}
    },
    {
      "id": 2,
      "title": "Step3 - Prompt",
      "bounding": [370, -20, 448.27801513671875, 473.2060852050781],
      "color": "#3f789e",
      "font_size": 24,
      "flags": {}
    },
    {
      "id": 3,
      "title": "For i2v, use Ctrl + B to enable",
      "bounding": [-50, 450, 400, 420],
      "color": "#3f789e",
      "font_size": 24,
      "flags": {}
    },
    {
      "id": 4,
      "title": "Video Size & length",
      "bounding": [370, 470, 291.9127197265625, 233.60000610351562],
      "color": "#3f789e",
      "font_size": 24,
      "flags": {}
    }
  ],
  "config": {},
  "extra": {
    "ds": {
      "scale": 0.8390545288824454,
      "offset": [252.56402822629002, 99.17599442262053]
    },
    "frontendVersion": "1.25.1",
    "VHS_latentpreview": false,
    "VHS_latentpreviewrate": 0,
    "VHS_MetadataImage": true,
    "VHS_KeepIntermediate": true
  },
  "version": 0.4
}
```
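You can also queue jobs on a running ComfyUI server over HTTP instead of clicking Queue in the GUI. The `/prompt` endpoint expects the API format of a workflow: a dict keyed by node id, where each entry has `class_type` and `inputs`. The UI-format files listed above will not work there. The GUI can export the API format from the Workflow menu, though the exact menu wording varies by frontend version. The sketch below assumes such an export saved as `wan2.2_api.json` (a hypothetical file name) and the default address `127.0.0.1:8188` printed at startup. It only builds and sends the request body; it does not poll for results.

```python
import json
import sys
import urllib.request
import uuid
from typing import Optional

SERVER = "http://127.0.0.1:8188"  # default address printed by `python main.py`


def build_prompt_request(api_workflow: dict, client_id: Optional[str] = None) -> bytes:
    """Wrap an API-format workflow in the JSON body that POST /prompt expects."""
    if "nodes" in api_workflow and "links" in api_workflow:
        # UI-format graphs (like the files above) must be exported in API format first.
        raise ValueError("UI-format workflow given; export it in API format first")
    body = {"prompt": api_workflow, "client_id": client_id or uuid.uuid4().hex}
    return json.dumps(body).encode("utf-8")


def queue_prompt(api_workflow: dict, server: str = SERVER) -> dict:
    """POST the workflow and return the server's JSON reply (includes a prompt_id)."""
    req = urllib.request.Request(
        f"{server}/prompt",
        data=build_prompt_request(api_workflow),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


if __name__ == "__main__" and len(sys.argv) == 2:
    with open(sys.argv[1], encoding="utf-8") as f:  # e.g. wan2.2_api.json (hypothetical)
        print(queue_prompt(json.load(f)))
```

API exports are keyed by node id, so in these workflows the positive prompt would typically sit under `"6"`. Setting `api["6"]["inputs"]["text"]` before calling `queue_prompt` lets a script batch several prompts. Verify the id against your own export before relying on it.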