需求:

1)从2个UDP端口接收数据,并在同样的端口回显。echo

2)多个处理线程,多个发送线程;

3)使用条件变量唤醒;

cpp

#include <stack>

#include <mutex>

#include <atomic>

#include <iostream>

#include <thread>

#include <mutex>

#include <queue>

#include <vector>

#include <unistd.h>

#include <string.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <sys/epoll.h>

#include <arpa/inet.h> // for inet_pton

#include <cstring> // for memset

#include <fcntl.h> // for non-block

#include <signal.h>

#include <condition_variable>

// #include "concurrentqueue.h" // moodycamel concurrentqueue 头文件

using namespace std;

/*

编译方式(Linux)

g++ -std=c++11 -pthread cssff.cpp -o udp_server -lstdc++

g++ -std=c++11 -Wall -O2 -pthread cssff.cpp -o udp_server -lstdc++

你可以用 netcat 发送测试数据:

echo "hello port1" | nc -u 127.0.0.1 4000

echo "hello port2" | nc -u 127.0.0.1 5000

*/

/*

你几乎不会收到大于 1472~1500 的数据

推荐设为 1536 字节:正好是大多数操作系统分配 UDP buffer 的默认对齐粒度,还是 64 的倍数(好对齐

*/

#define NUM_PROC_THREADS 1

#define NUM_SEND_THREADS 1

#define BUF_SIZE_DEF 1536

constexpr int PORT1 = 4000;

constexpr int PORT2 = 5000;

constexpr int MAX_EVENTS = 10;

constexpr int BUF_SIZE = BUF_SIZE_DEF;

// 一次批量处理至多32个,防止抖动和阻塞

const size_t MAX_BATCH = 32;

// 退出标志

std::atomic<bool> g_running(true);

// 数据包的简单封装

struct Packet {

char data[BUF_SIZE];

sockaddr_in addr_from;

socklen_t addr_from_len;

int port_from; // 记录是哪个端口接收的

size_t data_len;

sockaddr_in addr_to;

socklen_t addr_to_len;

int port_to;

// 构造函数,默认初始化所有成员

Packet() {

reset();

}

// reset 函数,供复用时调用,清空所有数据和结构

void reset() {

memset(data, 0, sizeof(data));

memset(&addr_from, 0, sizeof(addr_from));

addr_from_len = 0;

port_from = 0;

data_len = 0;

memset(&addr_to, 0, sizeof(addr_to));

addr_to_len = 0;

port_to = 0;

}

};

// 这个无锁内存池,需要使用外部类支持

// 手动下载源码(没有官方包管理器直接安装)

// git clone https://github.com/cameron314/concurrentqueue.git

//

// 编译指定头文件目录

// g++ -std=c++11 -pthread -I/path/to/concurrentqueue cssff.cpp -o udp_server

//

// #define LOCK_IN_POOL 1

// class PacketPool {

// moodycamel::ConcurrentQueue<Packet*> pool;

// const size_t MAX_POOL_SIZE;

// std::atomic<size_t> total_allocated;

// public:

// PacketPool(size_t max_pool = 10000)

// : MAX_POOL_SIZE(max_pool), total_allocated(0) {}

// ~PacketPool() {

// Packet* pkt;

// while (pool.try_dequeue(pkt)) {

// delete pkt;

// }

// }

// Packet* acquire() {

// Packet* pkt = nullptr;

// if (pool.try_dequeue(pkt)) {

// return pkt;

// }

// total_allocated.fetch_add(1, std::memory_order_relaxed);

// return new Packet();

// }

// void release(Packet* pkt) {

// if (!pkt) return;

// size_t cached = pool.size_approx(); // 估算当前缓存大小

// if (cached < MAX_POOL_SIZE) {

// pool.enqueue(pkt);

// } else {

// delete pkt;

// total_allocated.fetch_sub(1, std::memory_order_relaxed);

// }

// }

// size_t allocatedCount() const {

// return total_allocated.load(std::memory_order_relaxed);

// }

// // 估算缓存数(不是严格准确)

// size_t cachedCount() const {

// return pool.size_approx();

// }

// };

class PacketPool {

std::vector<Packet*> pool;

mutable std::mutex mtx;

const size_t MAX_POOL_SIZE = 10000;

public:

~PacketPool() {

for (auto pkt : pool) delete pkt;

}

Packet* acquire() {

std::lock_guard<std::mutex> lock(mtx);

if (!pool.empty()) {

Packet* pkt = pool.back();

pool.pop_back();

pkt->reset();

return pkt;

}

return new Packet();

}

void release(Packet* pkt) {

if (pkt == nullptr) return;

std::lock_guard<std::mutex> lock(mtx);

if (pool.size() < MAX_POOL_SIZE) {

pool.push_back(pkt);

} else {

delete pkt;

}

}

size_t cachedCount() const {

std::lock_guard<std::mutex> lock(mtx);

return pool.size();

}

};

/////////////////////////////////////////////////////////////////////

// TODO: std::condition_variable 优化等待,暂时可能性能问题不大

// 线程安全队列

std::queue<Packet*> recv_queue_1;

std::queue<Packet*> send_queue_1;

// 枷锁使用,或者配合那个条件变量使用

std::mutex recv_mutex_1;

std::mutex send_mutex_1;

// 使用条件变量来同步

std::condition_variable recv_cv_1;

std::condition_variable send_cv_1;

/*

notify_one 基本规则:

每调用一次 notify_one(),只会唤醒 一个正在等待在该 condition_variable 上的线程。

如果没有线程在等待,这次调用 不会保存或累积信号,即后来的线程 wait() 依然会阻塞,直到下一次 notify_one() 或 notify_all()。

⚠️ 注意:条件变量不是信号量,不会记住之前的"通知次数"。

⚠️ 所以:谓词一定要加上队列不为空,否则,如果干活时候错过了唤醒会造成一直等待信号的问题。

其中wait原理伪代码:

function wait(unique_lock& lock, predicate pred):

while not pred(): // 先检查谓词

// 1. 把 mutex 解锁,让其他线程可以修改共享状态

lock.unlock()

// 2. 阻塞等待条件变量通知

wait_for_notify()

// 3. 被唤醒后,重新加锁

lock.lock()

end while

// 当 predicate() 返回 true 时,函数返回

return

*/

// 全局的内存池子

PacketPool pool;

const int MAX_READ_PER_FD = 10;

// 1)这个线程负责从这2个端口接收数据,放到对应的队列中;

void recv_thread(int sock1, int sock2) {

int epfd = epoll_create1(0);

if (epfd == -1) {

perror("epoll_create1");

return;

}

epoll_event ev1 = {0}, ev2 = {0};

ev1.events = EPOLLIN;

ev1.data.fd = sock1;

epoll_ctl(epfd, EPOLL_CTL_ADD, sock1, &ev1);

ev2.events = EPOLLIN;

ev2.data.fd = sock2;

epoll_ctl(epfd, EPOLL_CTL_ADD, sock2, &ev2);

epoll_event events[MAX_EVENTS];

while (true) {

if (false == g_running){

std::cout << "recv thread end here by signal " << std::endl;

return;

}

int nfds = epoll_wait(epfd, events, MAX_EVENTS, 1000);

if (nfds == -1) {

if (errno == EINTR)

continue; // 被信号中断,可忽略

std::cout << "recv thread end, epoll_wait err " << std::endl;

perror("epoll_wait");

break;

}

for (int i = 0; i < nfds; ++i) {

int sockfd = events[i].data.fd;

// 尝试读多次,直到没数据,提高效率; 但是也要防止一个fd上数据太多,造成其他的句柄饿死

int read_count = 0;

while (read_count < MAX_READ_PER_FD) {

read_count ++;

Packet* packet = pool.acquire();

socklen_t addr_len = sizeof(packet->addr_from);

ssize_t len = recvfrom(sockfd, packet->data, BUF_SIZE, 0,

(sockaddr*)&packet->addr_from, &addr_len);

if (len > 0) {

packet->data_len = len;

packet->addr_from_len = addr_len;

packet->port_from = (sockfd == sock1) ? PORT1 : PORT2;

// 转换IP地址为字符串

char ip_str[INET_ADDRSTRLEN]; // 存储IPv4地址的缓冲区

const char* ip_addr;

// 判断地址族(IPv4)

if (packet->addr_from.sin_family == AF_INET) {

// 转换IPv4地址

struct sockaddr_in* ipv4_addr = (struct sockaddr_in*)&packet->addr_from;

ip_addr = inet_ntop(AF_INET, &(ipv4_addr->sin_addr), ip_str, INET_ADDRSTRLEN);

}

// 如需支持IPv6可添加以下代码

// else if (packet->addr_from.sa_family == AF_INET6) {

// struct sockaddr_in6* ipv6_addr = (struct sockaddr_in6*)&packet->addr_from;

// ip_addr = inet_ntop(AF_INET6, &(ipv6_addr->sin6_addr), ip_str, INET6_ADDRSTRLEN);

// }

else {

ip_addr = "未知地址族";

}

// 放到同一个队列中

{

std::lock_guard<std::mutex> lock(recv_mutex_1);

recv_queue_1.push(packet);

}

recv_cv_1.notify_one();

std::cout << "read data from port " << packet->port_from << " IP="<< ip_addr << std::endl;

} // end if len > 0

else {

pool.release(packet);

if (errno == EAGAIN || errno == EWOULDBLOCK) {

// 缓冲区读空了,退出循环

break;

} else {

std::cerr << "read data error: " << strerror(errno) << std::endl;

break;

}

} // end of else

}// end 针对一个socket的循环读取while

} // end foreach(events)

}// end of while(true)

return;

}

// 处理PORT1端口数据,echo 模拟

// 将要发送的数据放到队列中

void process_port1(Packet * pkt){

pkt->addr_to = pkt->addr_from;

pkt->addr_to_len = pkt->addr_from_len;

{

std::lock_guard<std::mutex> send_lock(send_mutex_1);

send_queue_1.push(pkt);

}

send_cv_1.notify_one();

}

// 处理PORT1端口数据echo 模拟

void process_port2(Packet * pkt){

pkt->addr_to = pkt->addr_from;

pkt->addr_to_len = pkt->addr_from_len;

{

std::lock_guard<std::mutex> send_lock(send_mutex_1);

send_queue_1.push(pkt);

}

send_cv_1.notify_one();

}

// 处理收到数据的线程,这里只是简单的拷贝到发送队列中,做一个echo的逻辑

void process_thread() {

while (true) {

if (false == g_running){

std::cout << "process thread end here by signal " << std::endl;

return;

}

// 提高锁的效率,一次性处理多个包,

std::vector<Packet*> pkts1;

// 使用条件变量等待

{

std::unique_lock<std::mutex> lock(recv_mutex_1);

recv_cv_1.wait(lock, [] { return !recv_queue_1.empty() || !g_running; });

// 唤醒后检查一下是否退出

if (!g_running.load() && recv_queue_1.empty())

return;

size_t cnt = 0;

while (!recv_queue_1.empty() && cnt < MAX_BATCH) {

pkts1.push_back(recv_queue_1.front());

recv_queue_1.pop();

cnt++;

}

}

// 处理这最多32个数据包,

for (auto pkt : pkts1) {

if (pkt->port_from == PORT1){

process_port1(pkt);

}else{

process_port2(pkt);

}

}

}// end of while

}

// 处理需要发送的数据

void send_thread(int sock1, int sock2) {

while (true) {

if (!g_running.load()) {

std::cout << "send thread end here by signal " << std::endl;

return;

}

std::vector<Packet*> pkts;

// 批量取出发送队列的数据

{

std::unique_lock<std::mutex> lock(send_mutex_1);

// 这里的谓词很重要,谓词中一定要包含队列不为空

send_cv_1.wait(lock, []{

return !send_queue_1.empty() || !g_running.load();

});

// 唤醒后检查退出条件

if (!g_running.load() && send_queue_1.empty())

return;

size_t cnt = 0;

while (!send_queue_1.empty() && cnt < MAX_BATCH) {

pkts.push_back(send_queue_1.front());

send_queue_1.pop();

cnt++;

}

}

// 循环发送

for (auto pkt : pkts) {

if (pkt->port_from == PORT1) {

sendto(sock1, pkt->data, pkt->data_len, 0, (sockaddr *)&pkt->addr_to, pkt->addr_to_len);

} else {

sendto(sock2, pkt->data, pkt->data_len, 0, (sockaddr *)&pkt->addr_to, pkt->addr_to_len);

}

pool.release(pkt);

}

} // end of while

}

// 设置为非阻塞模式端口

int set_nonblocking(int sockfd) {

int flags = fcntl(sockfd, F_GETFL, 0);

if (flags == -1) return -1;

return fcntl(sockfd, F_SETFL, flags | O_NONBLOCK);

}

// 打开2个端口进行监听

int create_udp_socket(int port) {

int sock = socket(AF_INET, SOCK_DGRAM, 0);

if (sock < 0) {

perror("socket create error");

exit(1);

}

sockaddr_in addr{};

addr.sin_family = AF_INET;

addr.sin_addr.s_addr = INADDR_ANY;

addr.sin_port = htons(port);

if (bind(sock, (sockaddr *)&addr, sizeof(addr)) < 0) {

std::cout << "binding on UDP port " << port << " error " << std::endl;

perror("bind error");

exit(1);

}

return sock;

}

// 让线程检测这个标志退出

void signal_handler(int signum) {

if (signum == SIGINT) {

std::cout << "\nSIGINT received. Exiting here!" << std::endl;

g_running.store(false);

send_cv_1.notify_all();

recv_cv_1.notify_all();

}

}

sockaddr_in buildSockaddr(const std::string& ip, uint16_t port) {

sockaddr_in addr;

memset(&addr, 0, sizeof(addr)); // 清零结构体

addr.sin_family = AF_INET; // IPv4 协议

addr.sin_port = htons(port); // 端口转网络字节序

inet_pton(AF_INET, ip.c_str(), &addr.sin_addr); // 将IP字符串转为二进制

return addr;

}

/// @brief 入口函数

/// @return 0

int main() {

// 注册 Ctrl+C 信号

signal(SIGINT, signal_handler);

int sock1 = create_udp_socket(PORT1);

int sock2 = create_udp_socket(PORT2);

// 在 create_udp_socket 后调用:

set_nonblocking(sock1);

set_nonblocking(sock2);

std::cout << "Listening on UDP ports " << PORT1 << " and " << PORT2 << std::endl;

std::thread t_recv(recv_thread, sock1, sock2);

std::vector<std::thread> proc_threads, send_threads;

for (int i = 0; i < NUM_PROC_THREADS; ++i)

proc_threads.emplace_back(process_thread);

for (int i = 0; i < NUM_SEND_THREADS; ++i)

send_threads.emplace_back(send_thread, sock1, sock2);

// 主线程等信号

while (g_running) {

std::this_thread::sleep_for(std::chrono::milliseconds(100));

}

// 等待线程退出

if (t_recv.joinable())

t_recv.join();

// 等待处理线程退出

for (auto& t : proc_threads) {

if (t.joinable())

t.join();

}

// 等待发送线程退出

for (auto& t : send_threads) {

if (t.joinable())

t.join();

}

// 关闭资源

close(sock1);

close(sock2);

std::cout << "ssff app Clean shutdown complete." << std::endl;

return 0;

}编译以后,

可以使用nc测试一下;

cpp

echo "hello port1" | nc -u 127.0.0.1 4000

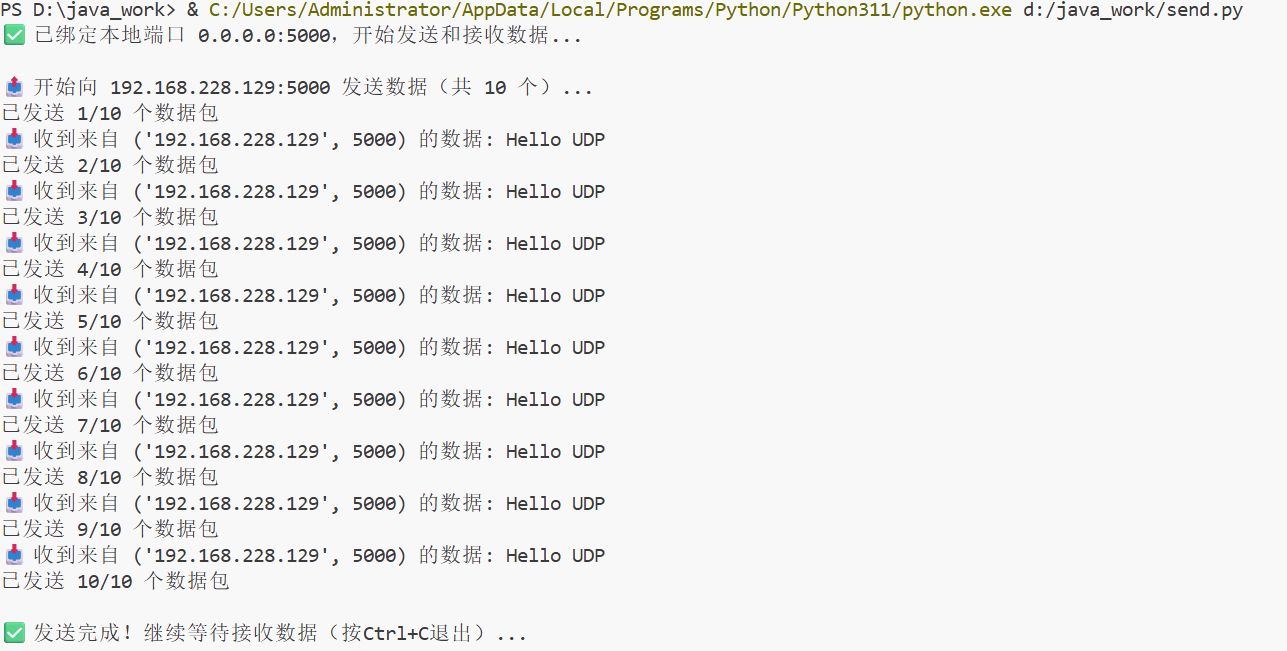

echo "hello port2" | nc -u 127.0.0.1 5000或者写一个python程序发送代码,可以跨主机试试:

python

import socket

import time

import argparse

import sys

import threading

def udp_handler(local_ip, local_port, target_ip, target_port, count, interval, data):

"""

单个套接字处理发送和接收:

- 绑定本地4000端口

- 向目标4000端口发送数据

- 在同一个端口接收回显数据

"""

# 创建UDP套接字并绑定本地4001端口(发送和接收共用)

sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

try:

# 绑定本地端口(关键:发送和接收都用这个端口)

sock.bind((local_ip, local_port))

print(f"✅ 已绑定本地端口 {local_ip}:{local_port},开始发送和接收数据...")

# 启动接收线程(用同一个套接字)

def receive_loop():

while True:

try:

# 接收数据(阻塞等待)

data_recv, addr = sock.recvfrom(1024)

# 显示接收内容

try:

message = data_recv.decode('utf-8')

print(f"\n📥 收到来自 {addr} 的数据: {message}")

except UnicodeDecodeError:

print(f"\n📥 收到来自 {addr} 的数据(无法解码): {data_recv.hex()}")

except Exception as e:

print(f"\n❌ 接收出错: {str(e)}")

break

# 启动接收线程

receive_thread = threading.Thread(target=receive_loop, daemon=True)

receive_thread.start()

# 发送数据

print(f"\n📤 开始向 {target_ip}:{target_port} 发送数据(共 {count} 个)...")

for i in range(count):

# 用已绑定的套接字发送(源端口固定为4001)

sock.sendto(data.encode('utf-8'), (target_ip, target_port))

# 显示发送进度

sys.stdout.write(f"\r已发送 {i+1}/{count} 个数据包")

sys.stdout.flush()

# 最后一个包不等待

if i < count - 1:

time.sleep(interval)

print("\n\n✅ 发送完成!继续等待接收数据(按Ctrl+C退出)...")

# 保持程序运行,等待接收

while True:

time.sleep(1)

except KeyboardInterrupt:

print("\n\n⚠️ 用户中断程序")

except Exception as e:

print(f"\n❌ 程序出错: {str(e)}")

finally:

sock.close()

print("\n🔌 套接字已关闭")

if __name__ == "__main__":

port = 5000

parser = argparse.ArgumentParser(description='UDP双向通信工具(固定本地4000端口)')

parser.add_argument('--local-ip', type=str, default='0.0.0.0',

help='本地绑定IP,默认0.0.0.0(所有网卡)')

parser.add_argument('--local-port', type=int, default=port,

help='本地绑定端口,默认4000')

parser.add_argument('--target-ip', type=str, default='192.168.228.129',

help='目标IP地址,默认虚拟机IP')

parser.add_argument('--target-port', type=int, default=port,

help='目标端口,默认4001')

parser.add_argument('--count', type=int, default=10,

help='发送数据包数量,默认10个')

parser.add_argument('--interval', type=float, default=1.0,

help='发送间隔(秒),默认1秒')

parser.add_argument('--data', type=str, default='Hello UDP',

help='发送的数据内容,默认"Hello UDP"')

args = parser.parse_args()

# 启动UDP处理逻辑

udp_handler(

local_ip=args.local_ip,

local_port=args.local_port,

target_ip=args.target_ip,

target_port=args.target_port,

count=args.count,

interval=args.interval,

data=args.data

)